Content from Short introduction to Bayesian statistics

Last updated on 2025-11-10 | Edit this page

Estimated time: 68 minutes

Overview

Questions

- How are statistical models formulated and fitted within the Bayesian framework?

Objectives

Learn to

- formulate prior, likelihood, posterior distributions.

- fit a Bayesian model with the grid approximation.

- communicate posterior information.

- work with with posterior samples.

Bayes’ formula

The starting point of Bayesian statistics is Bayes’ theorem, expressed as:

\[ p(\theta | X) = \frac{p(X | \theta) p(\theta) }{p(X)} \\ \]

When dealing with a statistical model, this theorem is used to infer the probability distribution of the model parameters \(\theta\), conditional on the available data \(X\). These probabilities are quantified by the posterior distribution \(p(\theta | X)\), which is primary the target of probabilistic modeling.

On the right-hand side of the formula, the likelihood function \(p(X | \theta)\) gives plausibility of the data given \(\theta\), and determines the impact of the data on the posterior.

A defining feature of Bayesian modeling is the second term in the numerator, the prior distribution \(p(\theta)\). The prior is used to incorporate beliefs about \(\theta\) before considering the data.

The denominator on the right-hand side \(p(X)\) is called the marginal probability, and is often practically impossible to compute. For this reason the proportional version of Bayes’ formula is typically employed:

\[ p(\theta | X) \propto p(\theta) p(X | \theta). \]

The proportional Bayes’ formula yields an unnormalized posterior distribution, which can subsequently be normalized to obtain the posterior.

Example 1: handedness

Let’s illustrate the use of the Bayes’ theorem with an example.

Assume we are trying to estimate the prevalence of left-handedness in humans, based on a sample of \(N=50\) students, out of which \(x=7\) are left-handed and 43 right-handed.

The outcome is binary and the students are assumed to be independent (e.g. no twins), so the binomial distribution is the appropriate choice for likelihood:

\[ p(X|\theta) = Bin(7 | 50, \theta). \]

Without further justification, we’ll choose \(p(\theta) = Beta(\theta |1, 10)\) as the prior distribution, so the unnormalized posterior distribution is

\[ p(\theta | X) = \text{Bin}(7 | 50, \theta) \cdot \text{Beta}(\theta | 1, 10). \]

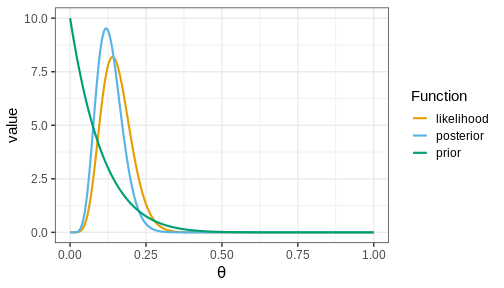

Below, we’ll plot these functions. Likelihood (which is not a distribution!) has been normalized for better illustration.

The figure shows that the majority of the mass in the posterior distribution is concentrated between 0 and 0.25. This implies that, given the available data and prior distribution, the model is fairly confident that the value of \(\theta\) is between these values. The peak of the posterior is at approximately 0.1 representing the most likely value. This aligns well with intuitive expectations about left-handedness in humans.

Actual value from a study from 1975 with 7,688 children in US grades 1-6 was 9.6%

Hardyck, C. et al. (1976), Left-handedness and cognitive deficit https://en.wikipedia.org/wiki/Handedness

Communicating posterior information

The posterior distribution \(p(\theta | X)\) contains all the information about \(\theta\) given the data, chosen model, and the prior distribution. However, understanding a distribution in itself can be challenging, especially if it is multidimensional. To effectively communicate posterior information, methods to quantify the information contained in the posterior are needed. Two commonly used types of estimates are point estimates, such as the posterior mean, mode, and variance, and posterior intervals, which provide probabilities for ranges of values.

Two specific types of posterior intervals are often of interest:

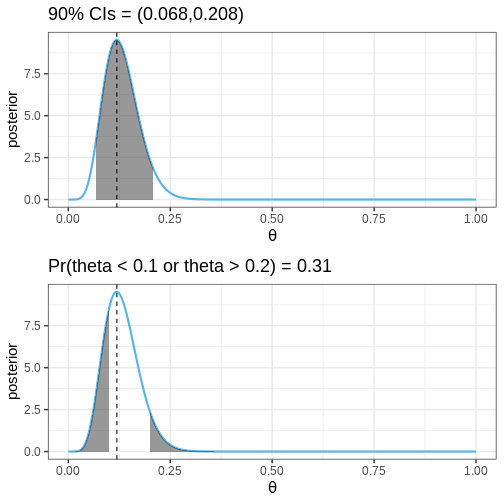

Credible intervals (CIs): These intervals leave equal posterior mass below and above them, computed as posterior quantiles. For instance, a 90% CI would span the range between the 5% and 95% quantiles.

Defined boundary intervals: Computed as the posterior mass for specific parts of the parameter space, these intervals quantify the probability for given parameter conditions. For example, we might be interested in the posterior probability that \(\theta > 0\), \(0<\theta<0.5\), or \(\theta<0\) or \(\theta > 0.5\). These probabilities can be computed by integrating the posterior over the corresponding sets.

The following figures illustrate selected posterior intervals for the previous example along with the posterior mode, or maximum a posteriori (MAP) estimate.

Grid approximation

Specifying a probabilistic model can be simple, but a common bottleneck in Bayesian data analysis is model fitting. Later in the course, we will begin using Stan, a state-of-the-art method for approximating the posterior. However, we’ll begin fitting probabilistic model using the grid approximation. This approach involves computing the unnormalized posterior distribution at a grid of evenly spaced values in the parameter space and can be specified as follows:

- Define a grid of parameter values.

- Compute the prior and likelihood on the grid.

- Multiply to get the unnormalized posterior.

- Normalize.

Now, we’ll implement the grid approximation for the handedness example in R.

Example 2: handedness with grid approximation

First, we’ll load the required packages, define the data variables, and the grid of parameter values

R

# Sample size

N <- 50

# 7/50 are left-handed

x <- 7

# Define a grid of points in the interval [0, 1], with 0.01 interval

delta <- 0.01

theta_grid <- seq(from = 0, to = 1, by = delta)

Computing the values of the likelihood, prior, and unnormalized posterior is straightforward. While you can compute these using for-loops, vectorization as used below, is a more efficient approach:

R

likelihood <- dbinom(x = x, size = N, prob = theta_grid)

prior <- dbeta(theta_grid, 1, 10)

posterior <- likelihood*prior

Next, the posterior needs to be normalized.

In practice, this means dividing the values by the area under the unnormalized posterior. The area is computed with the integral \[\int_0^1 p(\theta | X)_{\text{unnormalized}} d\theta,\] which is for a grid approximated function is the sum \[\sum_{\text{grid}} p(\theta | X)_{\text{unnormalized}} \cdot \delta,\] where \(\delta\) is the grid interval.

R

# Normalize

posterior <- posterior/(sum(posterior)*delta)

# Likelihood also normalized for better visualization

likelihood <- likelihood/(sum(likelihood)*delta)

Finally, we can plot these functions

R

# Make data frame

df1 <- data.frame(theta = theta_grid, likelihood, prior, posterior)

# Wide to long format

df1_l <- df1 %>%

gather(key = "Function", value = "value", -theta)

# Plot

p1 <- ggplot(df1_l,

aes(x = theta, y = value, color = Function)) +

geom_point(size = 2) +

geom_line(linewidth = 1) +

scale_color_grafify() +

labs(x = expression(theta))

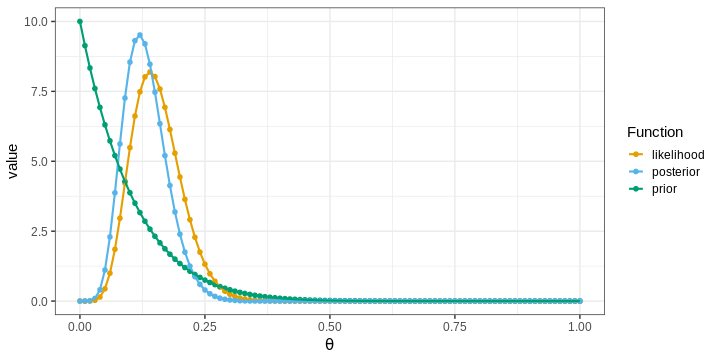

p1

The points in the figure represent the values of the functions computed at the grid locations. The lines depict linear interpolations between these points.

Challenge

Experiment with different priors and examine their effects on the posterior. You could try, for example, different Beta distributions, the normal distribution, or the uniform distribution.

How does the shape of the prior impact the posterior?

What is the relationship between the posterior, data (likelihood) and the prior?

Grid approximation and posterior summaries

Next, we’ll learn how to compute point estimates and posterior intervals based on the approximate posterior obtained with the grid approximation.

Computing the posterior mean and variance is based on the definition of these statistics for continuous variables. The mean is defined as \[\int \theta \cdot p(\theta | X) d\theta,\] and can be computed using discrete integration: \[\sum_{\text{grid}} \theta \cdot p(\theta | X) \cdot \delta.\] Similarly, variance can be computed based on the definition \[\text{var}(\theta) = \int (\theta - \text{mean}(\theta))^2p(\theta | X)d\theta.\] Posterior mode is simply the grid value where the posterior is maximized.

In R, these statistics can be computed as follows:

R

data.frame(Estimate = c("Mode", "Mean", "Variance"),

Value = c(df1[which.max(df1$posterior), "theta"],

sum(df1$theta*df1$posterior*delta),

sum(df1$theta^2*df1$posterior*delta) -

sum(df1$theta*df1$posterior*delta)^2))

OUTPUT

Estimate Value

1 Mode 0.120000000

2 Mean 0.131147540

3 Variance 0.001837869Posterior intervals are also relatively easy to compute.

Finding the quantiles used to determine CIs is based on the cumulative distribution function of the posterior \(F(\theta) = \int_{\infty}^{\theta}p(y | X) dy\). The locations where the \(F(\theta) = 0.05\) and \(F(\theta) = 0.95\) define the 90% CIs.

Probabilities for certain parameter ranges are computed simply by integrating over the appropriate set, for example, \(Pr(\theta < 0.1) = \int_0^{0.1} p(\theta | X) d\theta.\)

Challenge

Compute the 90% CIs and the probability \(Pr(\theta < 0.1)\) for the handedness example.

R

# Quantiles

q5 <- theta_grid[which.max(cumsum(posterior)*delta > 0.05)]

q95 <- theta_grid[which.min(cumsum(posterior)*delta < 0.95)]

# Pr(theta < 0.1)

Pr_theta_under_0.1 <- sum(posterior[theta_grid < 0.1])*delta

print(paste0("90% CI = (", q5,",", q95,")"))

OUTPUT

[1] "90% CI = (0.07,0.21)"R

print(paste0("Pr(theta < 0.1) = ",

round(Pr_theta_under_0.1, 5)))

OUTPUT

[1] "Pr(theta < 0.1) = 0.20659"Example 3: Gamma model with grid approximation

Let’s investigate another model and implement a grid approximation to fit it.

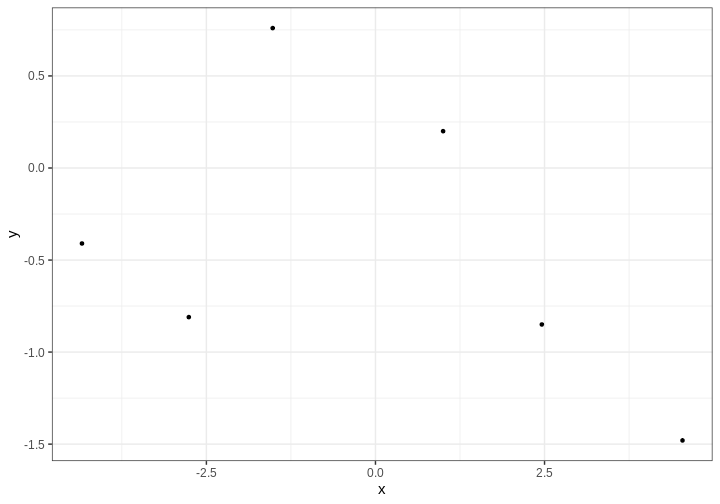

The gamma distribution arises, for example, in applications that model the waiting time between consecutive events. Let’s model the following data points as independent realizations from a \(\Gamma(\alpha, \beta)\) distribution with unknown shape \(\alpha\) and rate \(\beta\) parameters:

R

X <- c(0.34, 0.2, 0.22, 0.77, 0.46, 0.73, 0.24, 0.66, 0.64)

We’ll estimate \(\alpha\) and \(\beta\) using the grid approximation. Similarly as before, we’ll first need to define a grid. Since there are two parameters the parameter space is 2-dimensional and the grid needs to be defined at all pairwise combinations of the points of the individual grids.

R

delta <- 0.1

alpha_grid <- seq(from = 0.01, to = 15, by = delta)

beta_grid <- seq(from = 0.01, to = 25, by = delta)

# Get pairwise combinations

df2 <- expand.grid(alpha = alpha_grid, beta = beta_grid)

Next, we’ll compute the likelihood. As we assumed the data points to be independently generated from the gamma distribution, the likelihood is the product of the likelihoods of individual observations.

R

# Loop over all alpha, beta combinations

for(i in 1:nrow(df2)) {

df2[i, "likelihood"] <- prod(

dgamma(x = X,

shape = df2[i, "alpha"],

rate = df2[i, "beta"])

)

}

Next, we’ll define priors for \(\alpha\) and \(\beta\). Only positive values are allowed, which should be reflected in the prior. We’ll use \(\Gamma\) priors with large variances.

Notice, that normalizing the posterior now requires integrating over both dimensions, hence the \(\delta^2\) below.

R

# Priors: alpha, beta ~ Gamma(2, .1)

df2 <- df2 %>%

mutate(prior = dgamma(x = alpha, 2, 0.1)*dgamma(x = beta, 2, 0.1))

# Posterior

df2 <- df2 %>%

mutate(posterior = prior*likelihood) %>%

mutate(posterior = posterior/(sum(posterior)*delta^2)) # Normalize

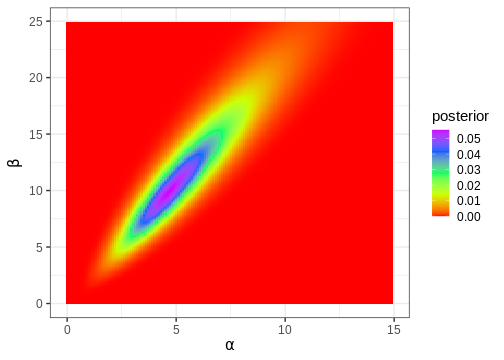

# Plot

p_joint_posterior <- df2 %>%

ggplot() +

geom_tile(aes(x = alpha, y = beta, fill = posterior)) +

scale_fill_gradientn(colours = rainbow(5)) +

labs(x = expression(alpha), y = expression(beta))

p_joint_posterior

Next, we’ll compute the posterior mode, which is a point in the 2-dimensional parameter space.

R

df2[which.max(df2$posterior), c("alpha", "beta")]

OUTPUT

alpha beta

14898 4.71 9.91Often, in addition to the parameters of interest, the model contains parameters we are not interested in. For instance, we might only be interested in \(\alpha\), in which case \(\beta\) would be a ‘nuisance’ parameter. Nuisance parameters are part of the full (‘joint’) posterior, but they can be discarded by integrating the joint posterior over these parameters. A posterior integrated over some parameters is called a marginal posterior.

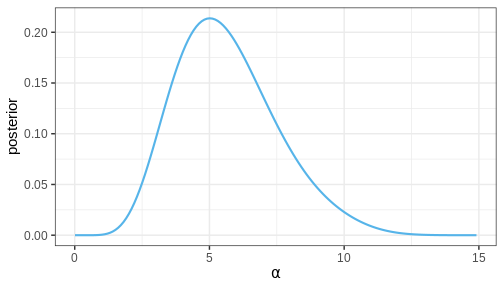

Let’s now compute the marginal posterior for \(\alpha\) by integrating over \(\beta\). Intuitively, it can be helpful to think of marginalization as a process where all of the joint posterior mass is drawn towards the \(\alpha\) axis, as if drawn by a gravitational force.

R

# Get marginal posterior for alpha

alpha_posterior <- df2 %>%

group_by(alpha) %>%

summarize(posterior = sum(posterior)) %>%

mutate(posterior = posterior/(sum(posterior)*delta))

p_alpha_posterior <- alpha_posterior %>%

ggplot() +

geom_line(aes(x = alpha, y = posterior),

color = posterior_color,

linewidth = 1) +

labs(x = expression(alpha))

p_alpha_posterior

Challenge

Does the MAP of the joint posterior of \(\theta = (\alpha, \beta)\) correspond to the MAPs of the marginal posteriors of \(\alpha\) and \(\beta\)?

The conjugate prior for the Gamma likelihood exists, which means there is a prior that causes the posterior to be of the same shape.

Working with samples

The main limitation of the grid approximation is that it becomes impractical for models with even a moderate number of parameters. The reason is that the number of computations grows as \(O \{ \Delta^p \}\) where \(\Delta\) is the number of grid points per model parameter and \(p\) the number of parameters. This quickly becomes prohibitive, and the grid approximation is seldom used in practice. The standard approach to fitting Bayesian models is to draw samples from the posterior with Markov chain Monte Carlo (MCMC) methods. These methods are the topic of a later episode but we’ll anticipate this now by studying how posterior summaries can be computed based on samples.

Example 4: handedness with samples

Let’s take the beta-binomial model (beta prior, binomial likelihood) of the handedness analysis as our example. It is an instance of a model for which the posterior can be computed analytically. Given a prior \(\text{Beta}(\alpha, \beta)\) and likelihood \(\text{Bin}(x | N, \theta)\), the posterior is \[p(\theta | X) = \text{Beta}(\alpha + x, \beta + N - x).\] Let’s generate \(n = 1000\) samples from this posterior using the handedness data:

R

n <- 1000

theta_samples <- rbeta(n, 1 + 7, 10 + 50 - 7)

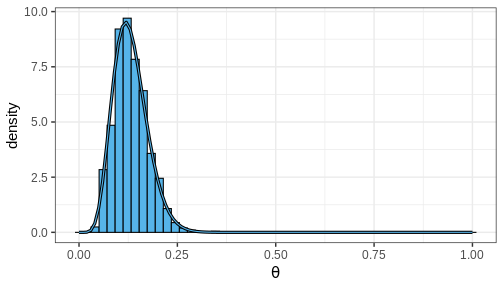

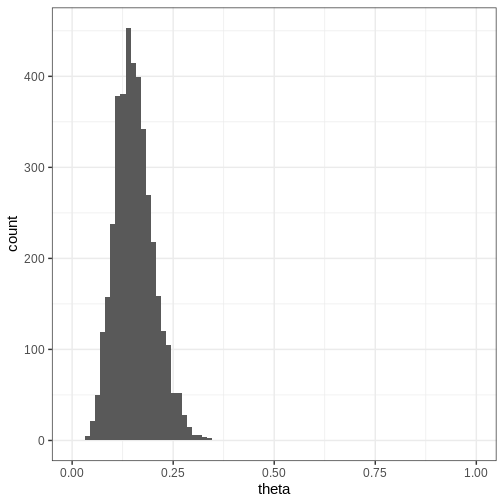

Plotting a histogram of these samples against the grid approximation posterior displays that both are indeed approximating the same distribution

R

ggplot() +

geom_histogram(data = theta_samples %>%

data.frame(theta = .),

aes(x = theta, y = after_stat(density)), bins = 50,

fill = posterior_color, color = "black") +

geom_line(data = df1,

aes(x = theta, y = posterior),

linewidth = 1.5) +

geom_line(data = df1,

aes(x = theta, y = posterior),

color = posterior_color) +

labs(x = expression(theta))

Computing posterior summaries from samples is easy. The posterior mean and variance are computed simply by taking the mean and variance of the samples, respectively. Posterior intervals are equally easy to compute: 90% CI is recovered from the appropriate quantiles and the probability of a certain parameter interval is the proportion of total samples within the interval.

Challenge

Compute the posterior mean, variance, 90% CI and \(Pr(\theta > 0.1)\) using the generated samples.

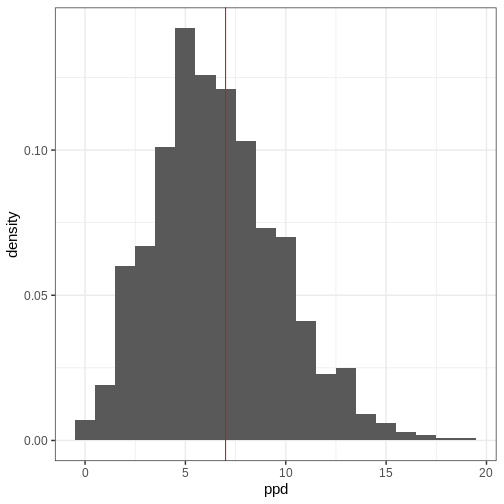

Posterior predictive distribution

Now we have learned how to fit a probabilistic model using the grid approximation and how to compute posterior summaries of the model parameters based on the fit or with posterior samples. A potentially interesting question that the posterior doesn’t directly answer is what do possible unobserved data values \(\tilde{X}\) look like, conditional on the observed values \(X\).

The unknown value can be predicted using the posterior predictive distribution \(p(\tilde{X} | X) = \int p(\tilde{X} | \theta) p(\theta | X) d\theta\). Using samples, this distribution can be sampled from by first drawing a value \(\theta^s\) from the posterior and then generating a random value from the likelihood function \(p(\tilde{X} | \theta^s)\).

A posterior predictive distribution for the beta-binomial model, using the posterior samples of the previous example can be generated as

R

ppd <- rbinom(length(theta_samples), 50, prob = theta_samples)

In other words, this is the distribution of the number of left-handed people in a yet unseen sample of 50 people. Let’s plot the histogram of these samples and compare it to the observed data (red vertical line):

R

ggplot() +

geom_histogram(data = data.frame(ppd),

aes(x = ppd, y = after_stat(density)), binwidth = 1) +

geom_vline(xintercept = 7, color = "red")

- Likelihood determines the probability of data conditional on the model parameters.

- Prior encodes beliefs about the model parameters without considering data.

- Posterior quantifies the probability of parameter values conditional on the data.

- The posterior is a compromise between the data and prior. The less data available, the greater the impact of the prior.

- The grid approximation is a method for inferring the (approximate) posterior distribution.

- Posterior information can be summarized with point estimates and posterior intervals.

- The marginal posterior is accessed by integrating over nuisance parameters.

- Usually, Bayesian models are fitted using methods that generate samples from the posterior.

Reading

- Bayesian Data Analysis (3rd ed.): Ch. 1-3

- Statistical Rethinking (2nd ed.): Ch. 1-3

- Bayes Rules!: Ch. 1-6

Content from Stan

Last updated on 2025-11-10 | Edit this page

Estimated time: 64 minutes

Overview

Questions

- How can posterior samples be generated using Stan?

Objectives

Learn how to

- implement statistical models in Stan.

- generate posterior samples with Stan.

- extract and process samples generated with Stan.

Stan is a probabilistic programming language that can be used to specify probabilistic models and to generate samples from posterior distributions.

The standard steps is using Stan is to first write the statistical model in a separate text file, then to call Stan from R (or other supported interface) which performs the sampling. Instead of having to write formulas the model can be written using built-in functions and sampling statements similar to written text. The sampling process is performed with a Markov Chain Monte Carlo (MCMC) algorithm, which we will study in a later episode. For now, however, our focus is on understanding how to execute it using Stan.

Several R packages have been built that simplify Stan usage. For example, brms allows specifying models via R’s customary formula syntax, while bayesplot provides a library of plotting functions. In this lesson, however, we will first learn using Stan from the bottom-up, by writing Stan programs, extracting the posterior samples and generating the plots ourselves. Later, in episode 7, we’ll introduce the usage of some of these additional packages.

To get started, follow the instructions provided at https://mc-stan.org/users/interfaces/ to install Stan on your local computer.

With Stan, you can fit models that have continuous parameters, but sampling from models with discrete parameters (e.g. clustering models) is not directly supported. In some cases, we can work around this by marginalizing out the discrete parameters, which makes the model fit possible in Stan. However, these workarounds are not always straightforward.

Basic program structure

A Stan program is organized into several blocks that collectively define the model. Typically, a Stan program includes at least the following blocks:

Data: This block is used to declare the input data provided to the model. It specifies the types and dimensions of the data variables incorporated into the model.

Parameters: In this block, the model parameters are declared.

Model: The likelihood and prior distributions are included here through sampling statements.

For best practices, it is recommended to specify Stan programs in separate text files with a .stan extension, which can then be called from R.

Example 1: Beta-binomial model

The following Stan program specifies the Beta-binomial model, and consists of data, parameters, and model blocks.

The data variables are the total sample size \(N\) and \(x\), the outcome of a binary variable (coin

flip, handedness etc.). The declared data type is int for

integer, and the variables have a lower bound 1 and 0 for \(N\) and \(x\), respectively. Notice that each line

ends with a semicolon.

In the parameters block we declare \(\theta\), the probability for a success. Since this parameter is a probability, it is a real number restricted between 0 and 1.

In the model block, the likelihood is specified with the sampling

statement x ~ binomial(N, theta). This line includes the

binomial distribution \(\text{Bin}(x | N,

theta)\) in the target distribution. The prior is set similarly,

and omitting the prior implies a uniform prior. Comments can be included

after two forward slashes.

STAN

data{

int<lower=1> N;

int<lower=0> x;

}

parameters{

real<lower=0, upper=1> theta;

}

model{

// Likelihood

x ~ binomial(N, theta);

// Uniform prior

}When the Stan program has been saved we need to compile it. In R,

this is done by running the following line, where

"binomial_model.stan" is the path of the program file.

R

binomial_model <- stan_model("binomial_model.stan")

Once the program has been compiled, it can be used to generate the

posterior samples by calling the function sampling(). The

data needs to be input as a list.

R

set.seed(135)

binom_data <- list(N = 50, x = 7)

binom_samples <- sampling(object = binomial_model,

data = binom_data)

OUTPUT

SAMPLING FOR MODEL 'anon_model' NOW (CHAIN 1).

Chain 1:

Chain 1: Gradient evaluation took 4e-06 seconds

Chain 1: 1000 transitions using 10 leapfrog steps per transition would take 0.04 seconds.

Chain 1: Adjust your expectations accordingly!

Chain 1:

Chain 1:

Chain 1: Iteration: 1 / 2000 [ 0%] (Warmup)

Chain 1: Iteration: 200 / 2000 [ 10%] (Warmup)

Chain 1: Iteration: 400 / 2000 [ 20%] (Warmup)

Chain 1: Iteration: 600 / 2000 [ 30%] (Warmup)

Chain 1: Iteration: 800 / 2000 [ 40%] (Warmup)

Chain 1: Iteration: 1000 / 2000 [ 50%] (Warmup)

Chain 1: Iteration: 1001 / 2000 [ 50%] (Sampling)

Chain 1: Iteration: 1200 / 2000 [ 60%] (Sampling)

Chain 1: Iteration: 1400 / 2000 [ 70%] (Sampling)

Chain 1: Iteration: 1600 / 2000 [ 80%] (Sampling)

Chain 1: Iteration: 1800 / 2000 [ 90%] (Sampling)

Chain 1: Iteration: 2000 / 2000 [100%] (Sampling)

Chain 1:

Chain 1: Elapsed Time: 0.004 seconds (Warm-up)

Chain 1: 0.003 seconds (Sampling)

Chain 1: 0.007 seconds (Total)

Chain 1:

SAMPLING FOR MODEL 'anon_model' NOW (CHAIN 2).

Chain 2:

Chain 2: Gradient evaluation took 1e-06 seconds

Chain 2: 1000 transitions using 10 leapfrog steps per transition would take 0.01 seconds.

Chain 2: Adjust your expectations accordingly!

Chain 2:

Chain 2:

Chain 2: Iteration: 1 / 2000 [ 0%] (Warmup)

Chain 2: Iteration: 200 / 2000 [ 10%] (Warmup)

Chain 2: Iteration: 400 / 2000 [ 20%] (Warmup)

Chain 2: Iteration: 600 / 2000 [ 30%] (Warmup)

Chain 2: Iteration: 800 / 2000 [ 40%] (Warmup)

Chain 2: Iteration: 1000 / 2000 [ 50%] (Warmup)

Chain 2: Iteration: 1001 / 2000 [ 50%] (Sampling)

Chain 2: Iteration: 1200 / 2000 [ 60%] (Sampling)

Chain 2: Iteration: 1400 / 2000 [ 70%] (Sampling)

Chain 2: Iteration: 1600 / 2000 [ 80%] (Sampling)

Chain 2: Iteration: 1800 / 2000 [ 90%] (Sampling)

Chain 2: Iteration: 2000 / 2000 [100%] (Sampling)

Chain 2:

Chain 2: Elapsed Time: 0.004 seconds (Warm-up)

Chain 2: 0.003 seconds (Sampling)

Chain 2: 0.007 seconds (Total)

Chain 2:

SAMPLING FOR MODEL 'anon_model' NOW (CHAIN 3).

Chain 3:

Chain 3: Gradient evaluation took 1e-06 seconds

Chain 3: 1000 transitions using 10 leapfrog steps per transition would take 0.01 seconds.

Chain 3: Adjust your expectations accordingly!

Chain 3:

Chain 3:

Chain 3: Iteration: 1 / 2000 [ 0%] (Warmup)

Chain 3: Iteration: 200 / 2000 [ 10%] (Warmup)

Chain 3: Iteration: 400 / 2000 [ 20%] (Warmup)

Chain 3: Iteration: 600 / 2000 [ 30%] (Warmup)

Chain 3: Iteration: 800 / 2000 [ 40%] (Warmup)

Chain 3: Iteration: 1000 / 2000 [ 50%] (Warmup)

Chain 3: Iteration: 1001 / 2000 [ 50%] (Sampling)

Chain 3: Iteration: 1200 / 2000 [ 60%] (Sampling)

Chain 3: Iteration: 1400 / 2000 [ 70%] (Sampling)

Chain 3: Iteration: 1600 / 2000 [ 80%] (Sampling)

Chain 3: Iteration: 1800 / 2000 [ 90%] (Sampling)

Chain 3: Iteration: 2000 / 2000 [100%] (Sampling)

Chain 3:

Chain 3: Elapsed Time: 0.004 seconds (Warm-up)

Chain 3: 0.003 seconds (Sampling)

Chain 3: 0.007 seconds (Total)

Chain 3:

SAMPLING FOR MODEL 'anon_model' NOW (CHAIN 4).

Chain 4:

Chain 4: Gradient evaluation took 1e-06 seconds

Chain 4: 1000 transitions using 10 leapfrog steps per transition would take 0.01 seconds.

Chain 4: Adjust your expectations accordingly!

Chain 4:

Chain 4:

Chain 4: Iteration: 1 / 2000 [ 0%] (Warmup)

Chain 4: Iteration: 200 / 2000 [ 10%] (Warmup)

Chain 4: Iteration: 400 / 2000 [ 20%] (Warmup)

Chain 4: Iteration: 600 / 2000 [ 30%] (Warmup)

Chain 4: Iteration: 800 / 2000 [ 40%] (Warmup)

Chain 4: Iteration: 1000 / 2000 [ 50%] (Warmup)

Chain 4: Iteration: 1001 / 2000 [ 50%] (Sampling)

Chain 4: Iteration: 1200 / 2000 [ 60%] (Sampling)

Chain 4: Iteration: 1400 / 2000 [ 70%] (Sampling)

Chain 4: Iteration: 1600 / 2000 [ 80%] (Sampling)

Chain 4: Iteration: 1800 / 2000 [ 90%] (Sampling)

Chain 4: Iteration: 2000 / 2000 [100%] (Sampling)

Chain 4:

Chain 4: Elapsed Time: 0.004 seconds (Warm-up)

Chain 4: 0.003 seconds (Sampling)

Chain 4: 0.007 seconds (Total)

Chain 4: With the default settings, Stan executes 4 MCMC chains, each with 2000 iterations (more about this in the next episode). During the run, Stan provides progress information, aiding in estimating the running time, particularly for complex models or extensive datasets. In this case the sampling took only a fraction of a second.

Running binom_samples, a summary for the model parameter

\(p\) is printed, facilitating a quick

review of the results.

R

binom_samples

OUTPUT

Inference for Stan model: anon_model.

4 chains, each with iter=2000; warmup=1000; thin=1;

post-warmup draws per chain=1000, total post-warmup draws=4000.

mean se_mean sd 2.5% 25% 50% 75% 97.5% n_eff Rhat

theta 0.16 0.00 0.05 0.07 0.12 0.15 0.18 0.26 1545 1

lp__ -22.80 0.02 0.69 -24.75 -22.93 -22.53 -22.37 -22.33 1987 1

Samples were drawn using NUTS(diag_e) at Mon Nov 10 08:23:27 2025.

For each parameter, n_eff is a crude measure of effective sample size,

and Rhat is the potential scale reduction factor on split chains (at

convergence, Rhat=1).This summary can also be accessed as a matrix with

summary(binom_samples)$summary.

Often, however, it is necessary process the individual samples. These can be extracted as follows:

R

theta_samples <- rstan::extract(binom_samples, "theta")[["theta"]]

Now we can use the methods presented in the previous Episode to compute posterior summaries, credible intervals and to generate figures.

Challenge

Compute the 95% credible intervals for the samples drawn with Stan. What is the probability that \(\theta \in (0.05, 0.15)\)? Plot a histogram of the posterior samples.

R

CI95 <- quantile(theta_samples, probs = c(0.025, 0.975))

theta_between_0.05_0.15 <- mean(theta_samples>0.05 & theta_samples<0.15)

p <- ggplot(data = data.frame(theta = theta_samples)) +

geom_histogram(aes(x = theta), bins = 30) +

coord_cartesian(xlim = c(0, 1))

print(p)

Challenge

Try modifying the Stan program so that you add a \(Beta(\alpha, \beta)\) prior for \(\theta\).

Can you modify the Stan program further so that you can set the hyperparameters \(\alpha, \beta\) as part of the data? What is the benefit of using this approach?

Modifying the data block so that it declares the hyperparameters as

data (e.g. real<lower=0> alpha;) enables setting the

hyperparameter values as part of data. This makes it possible to change

the hyperparameters without modifying the Stan file.

Additional Stan blocks

In addition to the data, parameters, and model blocks there are additional blocks that can be included in the program.

Functions: For user-defined functions. This block must be the first in the Stan program. It allows users to define custom functions.

Transformed data: This block is used for transformations of the data variables. It is often employed to preprocess or modify the input data before it is used in the main model. Common tasks include standardization, scaling, or other data adjustments.

Transformed parameters: In this block, transformations of the parameters are defined. If transformed parameters are used on the left-hand side of sampling statements in the model block, the Jacobian adjustment for the posterior density needs to be included in the model block as well.

Generated quantities: This block is used to define quantities based on both data and model parameters. These quantities are not part of the model but are useful for post-processing.

We will make use of these additional structures in subsequent illustrations.

Example 2: Normal model

Next, let’s implement the normal model in Stan. First we’ll generate some data \(X\) from a normal model with unknown mean and standard deviation parameters \(\mu\) and \(\sigma\)

R

# Sample size

N <- 99

# Generate data with unknown parameters

unknown_sigma <- runif(1, 0, 10)

unknown_mu <- runif(1, -5, 5)

X <- rnorm(n = N,

mean = unknown_mu,

sd = unknown_sigma)

normal_data <- list(N = N, X = X)

The Stan program for the normal model is specified in the next code chunk. It introduces a new data type (vector) and leverages vectorization in the likelihood statement. In the end of the program, a generated quantities block is included which generates new data (X_tilde) to estimate what unseen data points might look like. This resulting distribution is referred to as the posterior predictive distribution, which is generated by drawing a random realization from the normal distribution for each posterior sample \((\mu, \sigma)\).

STAN

data {

int<lower=0> N;

vector[N] X;

}

parameters {

real mu;

real<lower=0> sigma;

}

model {

// Vectorized likelihood

X ~ normal(mu, sigma);

// Priors

mu ~ normal(0, 1);

sigma ~ gamma(2, 1);

}

generated quantities {

real X_tilde;

X_tilde = normal_rng(mu, sigma);

}Let’s fit the model to the data

R

normal_samples <- rstan::sampling(normal_model,

normal_data)

OUTPUT

SAMPLING FOR MODEL 'anon_model' NOW (CHAIN 1).

Chain 1:

Chain 1: Gradient evaluation took 5e-06 seconds

Chain 1: 1000 transitions using 10 leapfrog steps per transition would take 0.05 seconds.

Chain 1: Adjust your expectations accordingly!

Chain 1:

Chain 1:

Chain 1: Iteration: 1 / 2000 [ 0%] (Warmup)

Chain 1: Iteration: 200 / 2000 [ 10%] (Warmup)

Chain 1: Iteration: 400 / 2000 [ 20%] (Warmup)

Chain 1: Iteration: 600 / 2000 [ 30%] (Warmup)

Chain 1: Iteration: 800 / 2000 [ 40%] (Warmup)

Chain 1: Iteration: 1000 / 2000 [ 50%] (Warmup)

Chain 1: Iteration: 1001 / 2000 [ 50%] (Sampling)

Chain 1: Iteration: 1200 / 2000 [ 60%] (Sampling)

Chain 1: Iteration: 1400 / 2000 [ 70%] (Sampling)

Chain 1: Iteration: 1600 / 2000 [ 80%] (Sampling)

Chain 1: Iteration: 1800 / 2000 [ 90%] (Sampling)

Chain 1: Iteration: 2000 / 2000 [100%] (Sampling)

Chain 1:

Chain 1: Elapsed Time: 0.008 seconds (Warm-up)

Chain 1: 0.007 seconds (Sampling)

Chain 1: 0.015 seconds (Total)

Chain 1:

SAMPLING FOR MODEL 'anon_model' NOW (CHAIN 2).

Chain 2:

Chain 2: Gradient evaluation took 2e-06 seconds

Chain 2: 1000 transitions using 10 leapfrog steps per transition would take 0.02 seconds.

Chain 2: Adjust your expectations accordingly!

Chain 2:

Chain 2:

Chain 2: Iteration: 1 / 2000 [ 0%] (Warmup)

Chain 2: Iteration: 200 / 2000 [ 10%] (Warmup)

Chain 2: Iteration: 400 / 2000 [ 20%] (Warmup)

Chain 2: Iteration: 600 / 2000 [ 30%] (Warmup)

Chain 2: Iteration: 800 / 2000 [ 40%] (Warmup)

Chain 2: Iteration: 1000 / 2000 [ 50%] (Warmup)

Chain 2: Iteration: 1001 / 2000 [ 50%] (Sampling)

Chain 2: Iteration: 1200 / 2000 [ 60%] (Sampling)

Chain 2: Iteration: 1400 / 2000 [ 70%] (Sampling)

Chain 2: Iteration: 1600 / 2000 [ 80%] (Sampling)

Chain 2: Iteration: 1800 / 2000 [ 90%] (Sampling)

Chain 2: Iteration: 2000 / 2000 [100%] (Sampling)

Chain 2:

Chain 2: Elapsed Time: 0.009 seconds (Warm-up)

Chain 2: 0.008 seconds (Sampling)

Chain 2: 0.017 seconds (Total)

Chain 2:

SAMPLING FOR MODEL 'anon_model' NOW (CHAIN 3).

Chain 3:

Chain 3: Gradient evaluation took 4e-06 seconds

Chain 3: 1000 transitions using 10 leapfrog steps per transition would take 0.04 seconds.

Chain 3: Adjust your expectations accordingly!

Chain 3:

Chain 3:

Chain 3: Iteration: 1 / 2000 [ 0%] (Warmup)

Chain 3: Iteration: 200 / 2000 [ 10%] (Warmup)

Chain 3: Iteration: 400 / 2000 [ 20%] (Warmup)

Chain 3: Iteration: 600 / 2000 [ 30%] (Warmup)

Chain 3: Iteration: 800 / 2000 [ 40%] (Warmup)

Chain 3: Iteration: 1000 / 2000 [ 50%] (Warmup)

Chain 3: Iteration: 1001 / 2000 [ 50%] (Sampling)

Chain 3: Iteration: 1200 / 2000 [ 60%] (Sampling)

Chain 3: Iteration: 1400 / 2000 [ 70%] (Sampling)

Chain 3: Iteration: 1600 / 2000 [ 80%] (Sampling)

Chain 3: Iteration: 1800 / 2000 [ 90%] (Sampling)

Chain 3: Iteration: 2000 / 2000 [100%] (Sampling)

Chain 3:

Chain 3: Elapsed Time: 0.008 seconds (Warm-up)

Chain 3: 0.007 seconds (Sampling)

Chain 3: 0.015 seconds (Total)

Chain 3:

SAMPLING FOR MODEL 'anon_model' NOW (CHAIN 4).

Chain 4:

Chain 4: Gradient evaluation took 2e-06 seconds

Chain 4: 1000 transitions using 10 leapfrog steps per transition would take 0.02 seconds.

Chain 4: Adjust your expectations accordingly!

Chain 4:

Chain 4:

Chain 4: Iteration: 1 / 2000 [ 0%] (Warmup)

Chain 4: Iteration: 200 / 2000 [ 10%] (Warmup)

Chain 4: Iteration: 400 / 2000 [ 20%] (Warmup)

Chain 4: Iteration: 600 / 2000 [ 30%] (Warmup)

Chain 4: Iteration: 800 / 2000 [ 40%] (Warmup)

Chain 4: Iteration: 1000 / 2000 [ 50%] (Warmup)

Chain 4: Iteration: 1001 / 2000 [ 50%] (Sampling)

Chain 4: Iteration: 1200 / 2000 [ 60%] (Sampling)

Chain 4: Iteration: 1400 / 2000 [ 70%] (Sampling)

Chain 4: Iteration: 1600 / 2000 [ 80%] (Sampling)

Chain 4: Iteration: 1800 / 2000 [ 90%] (Sampling)

Chain 4: Iteration: 2000 / 2000 [100%] (Sampling)

Chain 4:

Chain 4: Elapsed Time: 0.008 seconds (Warm-up)

Chain 4: 0.008 seconds (Sampling)

Chain 4: 0.016 seconds (Total)

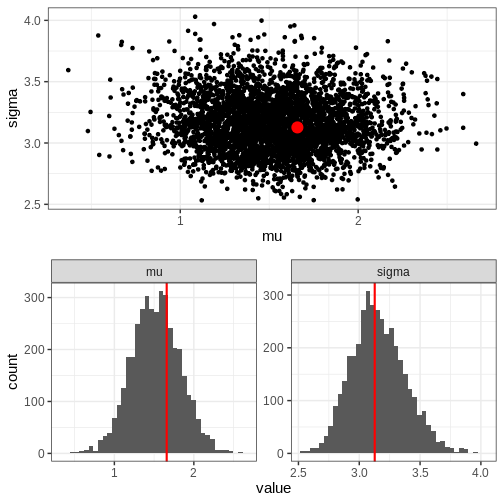

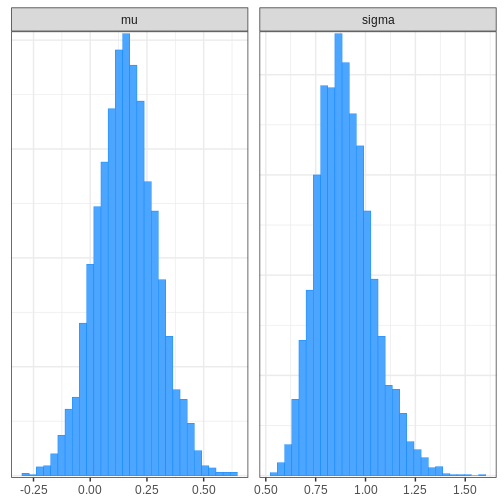

Chain 4: Next, we’ll extract posterior samples and generate a plot for the joint, and marginal posteriors. The true unknown parameter values are included in the plots in red.

R

# Extract parameter samples

par_samples <- rstan::extract(normal_samples, c("mu", "sigma")) %>%

do.call(cbind, .) %>%

data.frame

# Full posterior

p_posterior <- ggplot(data = par_samples) +

geom_point(aes(x = mu, y = sigma)) +

annotate("point", x = unknown_mu, y = unknown_sigma,

color = "red", size = 5)

# Marginal posteriors

p_marginals <- ggplot(data = par_samples %>% gather) +

geom_histogram(aes(x = value), bins = 40) +

geom_vline(data = data.frame(key = c("mu", "sigma"),

value = c(unknown_mu, unknown_sigma)),

aes(xintercept = value), color = "red", linewidth = 1) +

facet_wrap(~key, scales = "free")

p <- cowplot::plot_grid(p_posterior, p_marginals,

ncol = 1)

print(p)

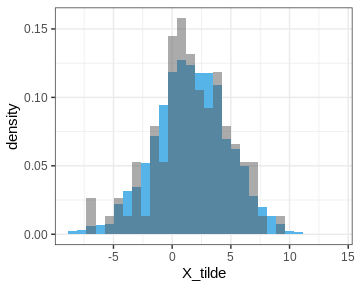

Let’s also plot the posterior predictive distribution samples histogram and compare it to that of the data.

R

PPD <- rstan::extract(normal_samples, c("X_tilde"))[[1]] %>%

data.frame(X_tilde = . )

p_PPD <- ggplot() +

geom_histogram(data = PPD,

aes(x = X_tilde, y = after_stat(density)),

bins = 30, fill = posterior_color) +

geom_histogram(data = data.frame(X), aes(x = X, y = after_stat(density)),

bins = 30, alpha = 0.5)

print(p_PPD)

Example 3: Linear regression

Challenge

Write a Stan program for linear regression with one dependent variable.

Generate data from the linear model and use the Stan program to estimate the intercept \(\alpha\), slope \(\beta\), and noise term \(\sigma\).

STAN

data {

int<lower=0> N; // Sample size

vector[N] x; // x-values

vector[N] y; // y-values

}

parameters {

real alpha; // intercept

real beta; // slope

real<lower=0> sigma; // noise

}

model {

// Likelihood

y ~ normal(alpha + beta * x, sigma);

// Priors

alpha ~ normal(0, 1);

beta ~ normal(0, 1);

sigma ~ inv_gamma(1, 1);

}Challenge

Modify the program for linear regression so it facilitates \(M\) dependent variables.

STAN

data {

int<lower=0> N; // Sample size

int<lower=0> M; // Number of features

matrix[N, M] x; // x-values

vector[N] y; // y-values

}

parameters {

real alpha; // intercept

vector[M] beta; // slopes

real<lower=0> sigma; // noise

}

model {

// Likelihood

y ~ normal(alpha + x * beta, sigma);

// Priors

alpha ~ normal(0, 1);

beta ~ normal(0, 1);

sigma ~ inv_gamma(1, 1);

}- Stan is a tool for efficient posterior distribution sample generation.

- A Stan program is specified in a separate text file that consists of code blocks, with the data, parameters, and model blocks being the most crucial ones.

Resources

- Official release paper https://www.jstatsoft.org/article/view/v076i01

- User’s guide https://mc-stan.org/docs/2_18/stan-users-guide/

- Function’s reference https://mc-stan.org/docs/functions-reference/

- Reference manual https://mc-stan.org/docs/reference-manual/

- Stan forum https://discourse.mc-stan.org

- Case studies https://mc-stan.org/users/documentation/case-studies

Reading

- BDA3: Ch. 12.6, Appendix C

- Bayes Rules!: Ch. 6.2

Content from Markov chain Monte Carlo

Last updated on 2025-11-10 | Edit this page

Estimated time: 63 minutes

Overview

Questions

- How does Stan generate the posterior samples?

Objectives

Learn

- the basic idea of the Metropolis-Hasting algorithm.

- how to assess Markov chain Monte Carlo convergence.

- how to implement a random walk Metropolis-Hasting algorithm.

The standard solution to fitting probabilistic models is to generate random samples from the posterior distribution. In the previous episode, we learned how to generate posterior samples using Stan without knowing how the samples are generated. In this episode, we’ll study Markov chain Monte Carlo (MCMC) methods, which are the class of algorithms Stan uses under the hood.

Metropolis-Hastings algorithm

MCMC methods draw samples from the posterior distribution by constructing sequences (chains) of values in the parameter space that ultimately converge to the posterior. While there are other variants of MCMC, on this course we will mainly focus on the Metropolis-Hasting (MH) algorithm outlined below. As this algorithm is ran long enough, convergence to posterior (or to other specified target density) is guaranteed. This means that that if the chain is run long enough, the samples will eventually start approximating the posterior distribution.

A chain starts is initialized at value \(\theta^{0}\), which can be manually set or random. The only precondition is that the target distribution has positive mass at the location, \(p(\theta^{0} | X) > 0\). Then, a proposal \(\theta^*\) for the next value is generated from a proposal distribution \(T_i\). An often-used solution is the normal distribution centered at the current value, \(\theta^* \sim N(\theta^{i}, \sigma^2)\). This is where the term “Markov chain” comes from, the value of each element depends only on the previous one.

Next, the proposal \(\theta^*\) is either accepted or rejected. If each proposal was accepted, the sequence would simply be a random walk in the parameter space and would not approximate the posterior to any degree. The rule that determines the acceptance should reflect this; proposals towards higher posterior densities should be favored over proposals toward low density areas. The solution is to compute the ratio

\[r = \frac{p(\theta^* | X) / T_i(\theta^* | \theta^{i})}{p(\theta^i | X) / T_i(\theta^{i} | \theta^{*})},\] and use is as the probability to move to the proposed value. In other words, the next element in the chain is \(\theta^{i+1} = \theta^*\) with probability \(\min(r, 1)\). If the proposal is not accepted the chain stays at the current value, \(\theta^{i+1} = \theta^{i}.\) This approach induces directional randomness in the chain; proposals towards higher density areas are generally accepted but transitions away from it are also possible. In situations where the transition density is symmetric, such as with the normal distribution, \(r\) reduces simply to the ratio of the posterior values, and all proposals toward higher posterior density areas are accepted.

Example: Banana distribution

Let’s implement the MH algorithm and use it to generate posterior samples from the following statistical model:

\[X \sim N(\theta_1 + \theta_2^2, 1) \\ \theta_1, \theta_2 \sim N(0, 1),\]

where \(X\) is univariate data and \(\theta_1, \theta_2\) the parameters.

Helper functions

We begin by writing some helper functions that carry out the incremental steps of the MH algorithm.

First, we need to be able to generate the proposals. Let’s use the

two-dimensional normal with identity covariance scaled by a scalar

jump_scale.

R

set.seed(12)

generate_proposal <- function(pars_now, jump_scale = 0.1) {

n_pars <- length(pars_now)

# Random draw from multivariate normal

theta_star <- mvtnorm::rmvnorm(1,

mean = pars_now,

sigma = jump_scale*diag(n_pars))

return(theta_star)

}

Running MH also requires computing the (unnormalized) posterior

density at the proposed parameter values. This function returns the log

posterior value at the location pars. The density is

computed on log scale to avoid issues with numerical precision.

R

get_log_target_value <- function(X, pars) {

# log(likelihood)

sum(

dnorm(X,

mean = pars[1] + pars[2]^2,

sd = 1,

log = TRUE)

) +

# log(prior)

dnorm(pars[1], 0, 1, log = TRUE) +

dnorm(pars[2], 0, 1, log = TRUE)

}

Then, we’ll write a function that computes the acceptance ratio \(r\). Since the proposal is symmetric, the expression reduces to the ratio of the posterior densities of the proposed and current parameter values. Notice that a ratio on a log scale is equal to the difference of logarithms.

R

# Compute ratio

get_ratio <- function(X, pars_now, pars_proposal) {

r <- exp(

get_log_target_value(X, pars_proposal) -

get_log_target_value(X, pars_now)

)

return(r)

}

Finally, we can wrap the helpers in a function that loops over the algorithm steps.

R

# Sampler

MH_sampler <- function(X, # Data

inits, # Initial values

n_samples = 1000, # Number of iterations

jump_scale = 0.1 # Proposal jump variance

) {

# Matrix for samples

pars <- matrix(nrow = n_samples, ncol = length(inits))

# Set initial values

pars[1, ] <- inits

# Generate samples

for(i in 2:n_samples) {

# Current parameters

pars_now <- pars[i-1, ]

# Proposal

pars_proposal <- generate_proposal(pars_now, jump_scale)

# Ratio

r <- get_ratio(X, pars_now, pars_proposal)

r <- min(1, r)

# Does the sampler move?

move <- sample(x = c(TRUE, FALSE),

size = 1,

prob = c(r, 1-r))

# OR:

# move <- runif(n = 1, min = 0, max = 1) <= r

if(move) {

pars[i, ] <- pars_proposal

} else {

pars[i, ] <- pars_now

}

}

pars <- data.frame(pars)

return(pars)

}

Run MH

Now we can try out our MH implementation. Let’s use the simulated

data points stored in vector X:

R

X <- c(3.78, 2.76, 2.84, 2.92, 1.3, 3.93, 3.69, 2.28, 2.81, 0.71)

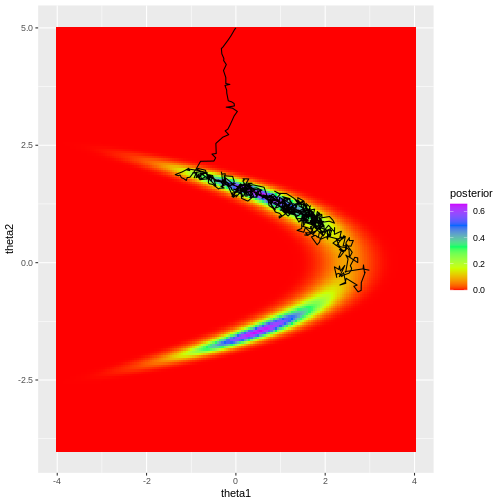

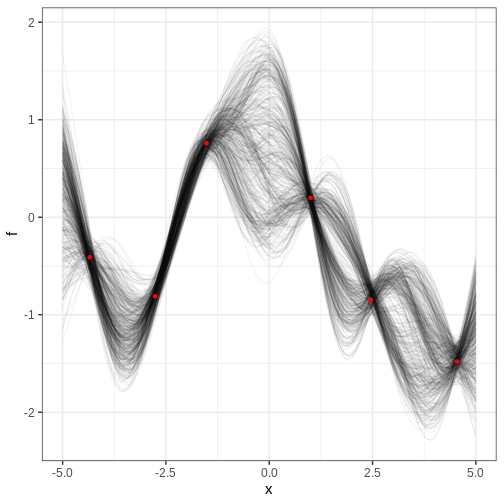

We’ll generate 1000 samples with initial value (0, 5) and jump scale 0.01. The trajectory of samples is plotted over the posterior density computed with the grid approximation.

R

# Draw samples

samples1 <- MH_sampler(X,

inits = c(0, 5),

n_samples = 1000,

jump_scale = 0.01)

colnames(samples1) <- c("theta1", "theta2")

# Add column for sample index

samples1$sample <- 1:nrow(samples1)

# Grid approximation

delta <- 0.05

df_grid <- expand.grid(seq(-4, 4, by = delta),

seq(-4, 5, by = delta)) %>%

set_colnames(c("theta1", "theta2"))

for(i in 1:nrow(df_grid)) {

df_grid[i, "likelihood"] <- prod(

dnorm(X,

df_grid[i, "theta1"] + df_grid[i, "theta2"]^2,

1)

)

}

df_grid <- df_grid %>%

mutate(prior = dnorm(theta1, 0, 1)*dnorm(theta2, 0, 1)) %>%

mutate(posterior = prior*likelihood) %>%

mutate(posterior = posterior / (sum(posterior)*delta^2))

# Plot

p_grid <- ggplot() +

geom_tile(data = df_grid,

aes(x = theta1, y = theta2, fill = posterior)) +

scale_fill_gradientn(colours = rainbow(5))

# Plot joint posterior samples

p_MH1 <- p_grid +

geom_path(data = samples1,

aes(x = theta1, y = theta2))

print(p_MH1)

Looking at the figure, a few observations become evident. Firstly, despite the chosen initial value being moderately far from the high-density areas of the posterior, the algorithm quickly converges to the target region. This rapid convergence is due to the fact that proposals toward higher density areas are favored, in fact they are always accepted when using normal density proposals. However, it’s important to note that such swift convergence is not guaranteed in all scenarios. In cases with a high number of model parameters, there’s an increased likelihood of the sampler taking ‘wrong’ directions. The samples before convergence introduce bias to the posterior approximation.

Secondly, the posterior is not fully explored; no samples are generated from the lower mode in the figure. This highlights a crucial point: even if the sampler has converged, it doesn’t guarantee that the drawn samples provide a representative picture of the target.

Challenge

Consider how you could address the two issues raised above:

- Initial unconverged samples introduce a bias.

- The sampler may not have explored the target distribution properly.

Try different proposal distributions variances in the MCMC example

above by changing jump_scale. How does this affect the

inference and convergence? Why?

Assessing convergence

Although convergence of MCMC is theoretically guaranteed, in practice, this is not always the case. Monitoring convergence is crucial whenever MCMC is utilized to ensure the reliability of recovered results.

Depending on the model used, initial values, amount of data, among other factors, can cause convergence issues. Earlier, we mentioned two common complications, and here we will list a few more, along with actions that can alleviate the issues.

Slow convergence can occur when initial values of the chain are far from most of the target mass, resulting in early iterations biasing the approximation. Another cause for slow convergence is that the proposals are not far enough from the current value, and the sampler moves too slowly.

Incomplete exploration: This means that the sampler doesn’t spend enough time in all significant posterior areas.

A large proportion of the proposals is rejected. When the proposal distribution generates proposals too far from the current value, the proposals are rejected and the sampler stands still for many iterations. This leads to inefficiency.

Sample autocorrelation: Consecutive samples are close to each other. Ideally, we’d like to generate independent samples from the target. High sample autocorrelation can be caused by several factors, including the ones mentioned in the previous points.

These issues can be remedied with:

Running multiple long chains with distinct or random initial values.

Discarding the early proportion of the chain as warm-up.

Setting an appropriate proposal distribution.

It also important to be able to monitor whether the sampler has converged. This can be done with statistics, such as effective sample size and \(\hat{R}\). Effective sample size estimates how many independent samples have been generated. Ideally, this number should be close to the total number of iterations the sampler has been ran for. \(\hat{R}\) on the other hand measures chain mixing, that is, how well the chains agree with each other. It is computed by comparing the variance within each chain to the total variance of all samples. Usually, values of \(\hat{R} > 1.1\) are considered as signaling convergence issues. However, the Stan development team recommends using 1.05 as the limit.

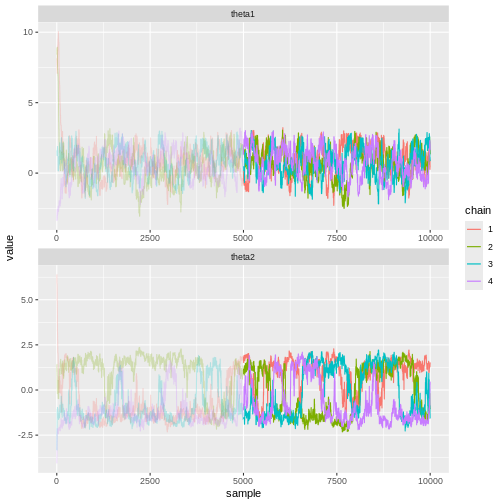

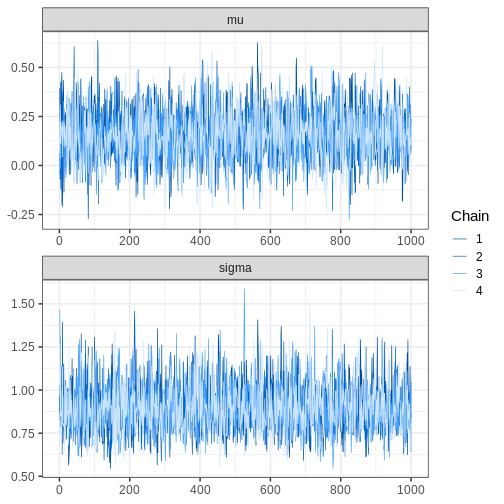

Besides statistics, visually evaluating the samples can be useful. Trace plots refer to graphs where the marginal posterior samples are plotted against sample index. Trace plots can be used to investigate convergence and mixing properties, and can reveal, for example, multimodality.

In Stan, many of the above-mentioned points have been automatized. By default, Stan runs 4 chains with 2000 iterations each, and discards the initial 50% as warm-up. Moreover, it computes \(\hat{R}\), effective sample size, and other statistics and throws warnings in case of issues.

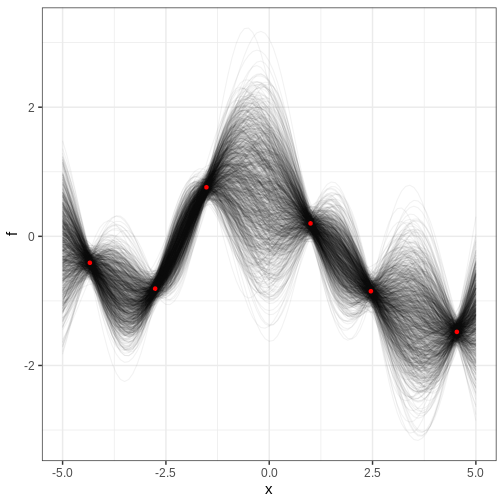

Example continued

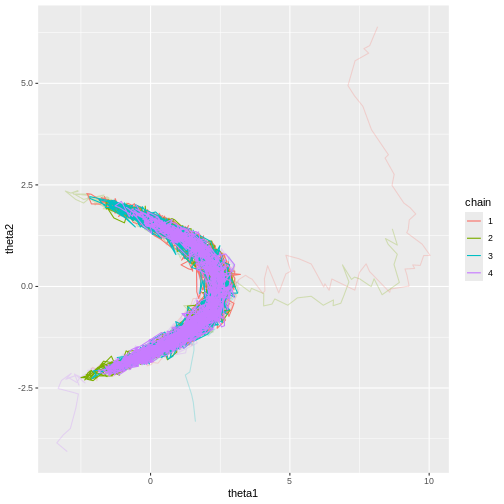

In light of the above information, let’s re-fit the model of the previous example. Now, we’ll run 4 chains with random initial values, 10000 samples each, and discard the first 50% of each chain as warm-up. We’ll use 0.1 as the proposal variance.

R

# Number of chains

n_chains <- 4

# Number of samples

n_samples <- 10000

# Initial warmup proportion

warmup <- 0.5

samples2 <- lapply(1:n_chains, function(i) {

# Use random initial values

inits <- rnorm(2, 0, 5)

chain <- MH_sampler(X, inits = inits,

n_samples = n_samples,

jump_scale = 0.1)

# Wrangle

colnames(chain) <- c("theta1", "theta2")

chain$sample <- 1:nrow(chain)

chain$chain <- as.factor(i)

chain[1:round(warmup*n_samples), "warmup"] <- TRUE

chain[(round(warmup*n_samples)+1):n_samples, "warmup"] <- FALSE

return(chain)

}) %>%

do.call(rbind, .)

Now it’s evident that the sample trajectories explore the entire posterior distribution:

R

# Plot

p_joint_2 <- ggplot() +

# Warm-up samples

geom_path(data = samples2 %>%

filter(warmup == TRUE),

aes(theta1, theta2, color = chain),

alpha = 0.25) +

# Post-warm-up samples

geom_path(data = samples2 %>%

filter(warmup == FALSE),

aes(theta1, theta2, color = chain))

print(p_joint_2)

Let’s see what the trace plots look like. Generally, we’d want to see the samples randomly scattered around a mean value. For \(\theta_1\) this is more or less the case, although there some autocorrelation is apparent. With \(\theta_2\) we can see that the chains have mixed as they explore the same parameter ranges. However, the bimodality of the posterior is quite apparent. The \(\hat{R}\) statistics are 1.0082279 and 1.1065365 for \(\theta_1\) and \(\theta_2\), respectively.

R

# Trace plots

p_trace_2 <- ggplot() +

geom_line(data = samples2 %>%

filter(warmup == TRUE) %>%

gather(key = "parameter",

value = "value",

-c("sample", "chain", "warmup")),

aes(x = sample, y = value, color = chain),

alpha = 0.25) +

geom_line(data = samples2 %>%

filter(warmup == FALSE) %>%

gather(key = "parameter",

value = "value",

-c("sample", "chain", "warmup")),

aes(x = sample, y = value, color = chain)) +

facet_wrap(~parameter,

ncol = 1,

scales = "free")

print(p_trace_2)

Hamiltonian Monte Carlo

Stan uses a variant of the Metropolis-Hastings algorithm called Hamiltonian Monte Carlo (HMC; or actually a variant HMC). The defining feature is the elaborate scheme it uses to generate proposals. Briefly, the idea is to simulate the dynamics of a particle moving in a potential landscape defined by the posterior. At each iteration, the particle is given a random momentum vector and then its dynamics are simulated forward for some time. The end of the trajectory is then taken as the proposal value.

Compared to the random walk Metropolis-Hastings we implemented in this episode, HMC is very efficient. The main advantages of HMC is its ability to explore high-dimensional spaces more effectively, making it especially useful in complex models with many parameters.

A type of convergence criterion exclusive to HMC is the divergent transition. In a region of the parameter space where the posterior has high curvature, the simulated particle dynamics can produce spurious transitions which do not represent the posterior accurately. Such transitions are called divergent and signal that the particular area of parameter space is not explored accurately. Stan provides information about divergent transitions automatically.

- Markov chain Monte Carlo methods can be used to generate samples from a posterior distribution.

- Values of the chain are generated from a proposal distribution.

- Proposals towards higher areas of the target distribution are accepted with higher probability.

- MCMC convergence should always be monitored.

Reading

See interactive visualization of different MCMC algorithms: https://chi-feng.github.io/mcmc-demo/app.html

Bayesian Data Analysis (3rd ed.): Ch. 11-12

Statistical Rethinking (2nd ed.): Ch. 9

Bayes Rules!: Ch. 6-7

Content from Hierarchical models

Last updated on 2025-11-10 | Edit this page

Estimated time: 14 minutes

Overview

Questions

- How does Bayesian modeling accommodate group structure?

Objectives

- Learn to construct and fit hierarchical models.

Hierarchical (or multi-level) models are a class of models suited for situations where the study population comprises distinct but interconnected groups. For example, analyzing student performance across different schools, income disparities among various regions, or studying animal behavior within different populations are scenarios where such models would be appropriate.

Incorporating group-wise parameters allows us to model each group separately, and a model becomes hierarchical when we treat the parameters of the prior distribution as unknown. These parameters, known as hyperparameters, are assigned their own prior distribution, referred to as a hyperprior, and are learned during the model fitting process. Similarly as priors, the hyperpriors should be set so they reflect ranges of possible hyperparameter values. Conceptually, these hyperparameters and hyperpriors operate at a higher level of hierarchy, hence the name.

For example, let’s consider the beta-binomial model discussed in Episode 1. We used it to estimate the prevalence of left-handedness based on a sample of 50 students. If we were to include additional information, such as the students’ majors, we could extend the model as follows:

\[X_g \sim \text{Bin}(N_g, \theta_g) \\ \theta_g \sim \text{Beta}(\alpha, \beta) \\ \alpha, \beta \sim \Gamma(2, 0.1).\]

Here, the subscript \(g\) indexes the groups based on majors. The group-specific prevalences for left-handedness \(\theta_g\) are assigned a beta prior with hyperparameters \(\alpha\) and \(\beta\) which are treated as random variables. The final line indicates the hyperprior \(\Gamma(2, 0.1)\) governing the prior beliefs about the hyperparameters.

In this hierarchical beta-binomial model, students are considered exchangeable within their majors but no longer across the entire population. However, an underlying assumption of similarity exists between the groups since they share a common prior, that is learned. This way the groups are not entirely independent but are not treated as equal either.

One of the key advantages of Bayesian hierarchical models is their capacity to leverage information across groups. By pooling information from various groups, these models can yield more robust estimates, particularly when data availability is limited.

Another distinction from non-hierarchical models is that the prior, or the population distribution, of the parameters is learned in the process. This population distribution can provide information into parameter variability on a broader scale, even for groups where data is scarce or completely missing. For instance, if we had data on the handedness of students majoring in natural sciences, the population distribution can give insights into students in humanities and social sciences as well.

In the following example, we will perform a hierarchical analysis of human heights across different countries.

Example: human height

Let’s examine the heights of adults in various countries. In [1], averages and standard errors of adult heights in centimeters across different countries and age groups were provided. We’ll utilize this dataset to generate a sample of hypothetical individual heights and then assess our ability to reproduce these measured height statistics.

This approach is commonly employed when building and testing models: we generate simulated data with known parameters and then compare the inferred results to these known parameters. In our case, the true parameters are derived from real-world data.

We’ll employ a normal model with unknown mean \(\mu\) and standard deviation \(\sigma\) as our generative model, and treat these parameters hierarchically.

First, let’s load the data and examine its structure.

R

set.seed(4985)

height <- read.csv("data/height_data.csv")

str(height)

OUTPUT

'data.frame': 210000 obs. of 8 variables:

$ Country : chr "Afghanistan" "Afghanistan" "Afghanistan" "Afghanistan" ...

$ Sex : chr "Boys" "Boys" "Boys" "Boys" ...

$ Year : int 1985 1985 1985 1985 1985 1985 1985 1985 1985 1985 ...

$ Age.group : int 5 6 7 8 9 10 11 12 13 14 ...

$ Mean.height : num 103 109 115 120 125 ...

$ Mean.height.lower.95..uncertainty.interval: num 92.9 99.9 106.3 112.2 117.9 ...

$ Mean.height.upper.95..uncertainty.interval: num 114 118 123 128 132 ...

$ Mean.height.standard.error : num 5.3 4.72 4.27 3.92 3.66 ...Let’s subset this data to simplify the analysis and focus on the height of adult women measured in 2019.

R

height_women <- height %>%

filter(

Age.group == 19,

Sex == "Girls",

Year == 2019

) %>%

# Select variables of interest

select(Country, Sex, Mean.height, Mean.height.standard.error)

Let’s select 10 countries randomly

R

set.seed(5431)

# Select countries

N_countries <- 10

Countries <- sample(unique(height_women$Country),

size = N_countries,

replace = FALSE) %>% sort

height_women10 <- height_women %>% filter(Country %in% Countries)

height_women10

OUTPUT

Country Sex Mean.height Mean.height.standard.error

1 Bangladesh Girls 152.3776 0.4966124

2 Belize Girls 158.1201 1.4026324

3 Cameroon Girls 160.4112 0.6146315

4 Chad Girls 162.1242 0.8894219

5 Cote d'Ivoire Girls 158.6524 0.9254438

6 Ghana Girls 158.8551 0.8002225

7 Kenya Girls 159.4338 0.6680202

8 Luxembourg Girls 165.0690 1.3778094

9 Taiwan Girls 160.6953 0.7307839

10 Venezuela Girls 160.0370 1.0813162Simulate data

Now, we can treat the values in the table above as ground truth and simulate some data based on them. Let’s generate \(N=10\) samples for each country from the normal model with \(\mu = \text{Mean.height}\) and \(\sigma = \text{Mean.height.standard.error}\).

R

# Sample size per group

N <- 10

# For each country, generate heights

height_sim <- lapply(1:N_countries, function(i) {

my_df <- height_women10[i, ]

data.frame(Country = my_df$Country,

# Random values from normal

Height = rnorm(N,

mean = my_df$Mean.height,

sd = my_df$Mean.height.standard.error))

}) %>%

do.call(rbind, .)

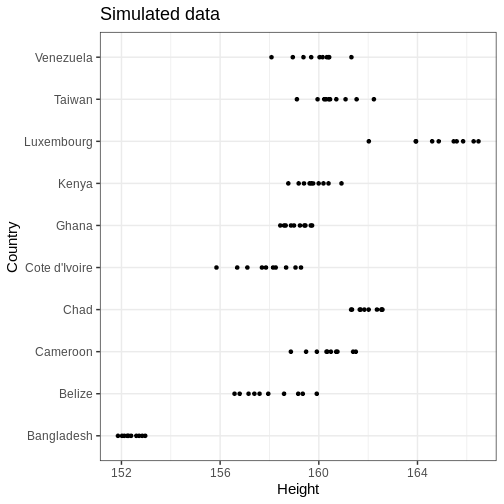

Let’s plot the data

R

# Plot

height_sim %>%

ggplot() +

geom_point(aes(x = Height, y = Country)) +

labs(title = "Simulated data")

Modeling

Let’s build a normal model that uses partial pooling for the country means and standard deviations. The model can be stated as follows:

\[\begin{align} X_{gi} &\sim \text{N}(\mu_g, \sigma_g) \\ \mu_g &\sim \text{N}(\mu_\mu, \sigma_\mu) \\ \sigma_g &\sim \Gamma(\alpha_\sigma, \beta_\sigma) \\ \mu_\mu &\sim \text{N}(0, 100)\\ \sigma_\mu &\sim \Gamma(2, 0.1) \\ \alpha_\sigma, \beta_\sigma &\sim \Gamma(2, 0.01). \end{align}\]

Above, \(X_{gi}\) denotes the height for individual \(i\) in country \(g\). The country specific parameters \(\mu_g\) and \(\sigma_g\) are given normal and gamma priors, respectively, with unknown hyperparameters that, in turn, are given hyperpriors on the last two lines. The hyperpriors are quite uninformative as they allow large hyperparameter ranges.

Below is the Stan program for this model. The data points are input

as a concatenated vector X. The country-specific start and

end indices are computed in the transformed data block. This approach

accommodates uneven sample sizes between groups, although in our data

these are equal.

The parameters block contains the declarations of mean and standard deviation vectors, along with the hyperparameters. The hyperparameter subscripts denote the parameter they are assigned to so, for instance, \(\sigma_{\mu}\) is the standard deviation of the mean parameter \(\mu\). The generated quantities block generates samples from the population distributions of \(\mu\) and \(\sigma\) and a country-agnostic posterior predictive distribution \(\tilde{X}\).

STAN

data {

int<lower=0> G; // number of groups

int<lower=0> N[G]; // sample size within each group

vector[sum(N)] X; // concatenated observations

}

transformed data {

// get first and last index for each group in X

int start_i[G];

int end_i[G];

for(g in 1:G) {

if(g == 1) {

start_i[1] = 1;

} else {

start_i[g] = start_i[g-1] + N[g-1];

}

end_i[g] = start_i[g] + N[g]-1;

}

}

parameters {

// parameters

vector[G] mu;

vector<lower=0>[G] sigma;

// hyperparameters

real mu_mu;

real<lower=0> sigma_mu;

real<lower=0> alpha_sigma;

real<lower=0> beta_sigma;

}

model {

// Likelihood for each group

for(i in 1:G) {

X[start_i[i]:end_i[i]] ~ normal(mu[i], sigma[i]);

}

// Priors

mu ~ normal(mu_mu, sigma_mu);

sigma ~ gamma(alpha_sigma, beta_sigma);

// Hyperpriors

mu_mu ~ normal(0, 100);

sigma_mu ~ gamma(2, 0.1);

alpha_sigma ~ gamma(2, 0.01);

beta_sigma ~ gamma(2, 0.01);

}

generated quantities {

real mu_tilde;

real<lower=0> sigma_tilde;

real X_tilde;

// Population distributions

mu_tilde = normal_rng(mu_mu, sigma_mu);

sigma_tilde = gamma_rng(alpha_sigma, beta_sigma);

// Posterior predictive distribution

X_tilde = normal_rng(mu_tilde, sigma_tilde);

} Now we can call Stan and fit the model. Hierarchical models can

encounter convergence issues and for this reason, we’ll use 10000

iterations and set adapt_delta = 0.99. This controls the

acceptance probability in Stan’s adaptation period and will result in a

smaller step size in the Markov chain. Moreover, we’ll speed up the

inference by running 2 chains in parallel by setting

cores = 2.

R

stan_data <- list(G = length(unique(height_sim$Country)),

N = rep(N, length(Countries)),

X = height_sim$Height)

normal_hier_fit <- rstan::sampling(normal_hier_model,

stan_data,

iter = 10000,

chains = 2,

# Use to avoid divergent transitions:

control = list(adapt_delta = 0.99),

cores = 2)

For comparison, we will also run an unpooled analysis of heights, which makes use of the following Stan file:

R

unpooled_summaries <- list()

for(i in 1:N_countries) {

my_country <- unique(height_sim$Country)[i]

my_df <- height_sim %>%

filter(Country == my_country)

my_stan_data <- list(N = my_df %>% nrow,

X = my_df$Height)

my_fit <- rstan::sampling(normal_pooled_model,

my_stan_data,

iter = 10000,

chains = 2,

# Use to get rid of divergent transitions:

control = list(adapt_delta = 0.99),

cores = 2,

refresh = 0

)

unpooled_summaries[[i]] <- rstan::summary(my_fit, c("mu", "sigma"))$summary %>%

data.frame() %>%

rownames_to_column(var = "par") %>%

mutate(country = my_country)

}

unpooled_summaries <- do.call(rbind, unpooled_summaries)

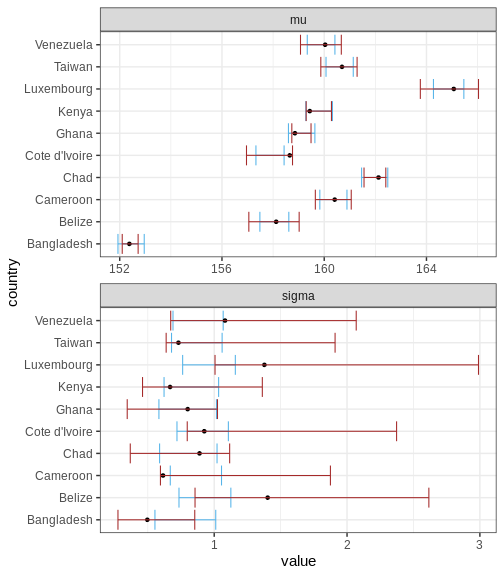

Country-specific estimates

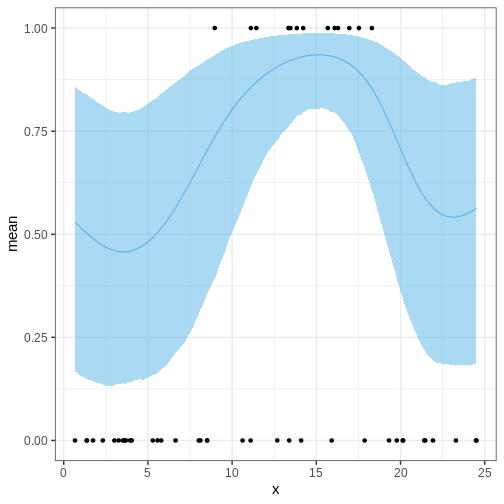

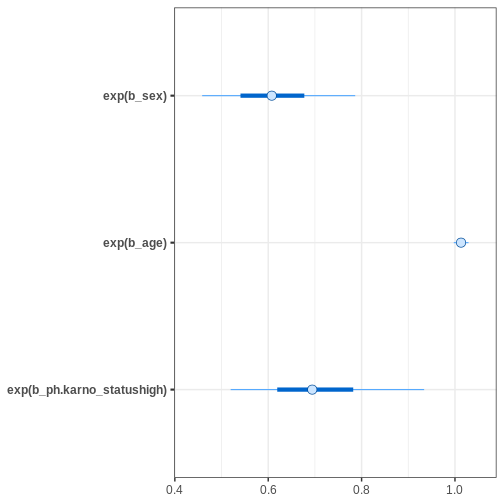

Let’s first compare the marginal posteriors for the country-specific estimates from the hierarchical model (blue) and an unpooled model (brown) that treats the parameters separately.

R

par_summary <- rstan::summary(normal_hier_fit, c("mu", "sigma"))$summary %>%

data.frame() %>%

rownames_to_column(var = "par") %>%

separate(par, into = c("par", "country"), sep = "\\[") %>%

mutate(country = gsub("\\]", "", country)) %>%

mutate(country = Countries[as.integer(country)])

ggplot() +

geom_errorbar(data = par_summary, aes(x = country, ymin = X2.5., ymax = X97.5.),

color = posterior_color) +

geom_point(data = height_women10 %>%

rename_with(~ c('mu', 'sigma'), 3:4) %>%

gather(key = "par",

value = "value",

-c(Country, Sex)),

aes(x = Country, y = value)) +

geom_errorbar(data = unpooled_summaries,

aes(x = country, ymin = X2.5., ymax = X97.5.),

color = "brown") +

facet_wrap(~ par, scales = "free", ncol = 1) +

coord_flip()

Above, the black points represent the true values, and the intervals are the 95% CIs for a hierarchical and non-hierarchical models, respectively. As apparent, the CIs from the hierarchical model are more concentrated and better capture the true values.

Challenge

Experiment with the data and fit. Explore the effect of sample size, unequal sample sizes between countries, and the amount of countries, for example.

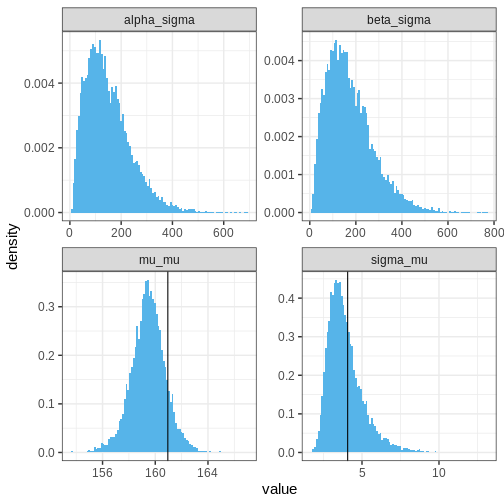

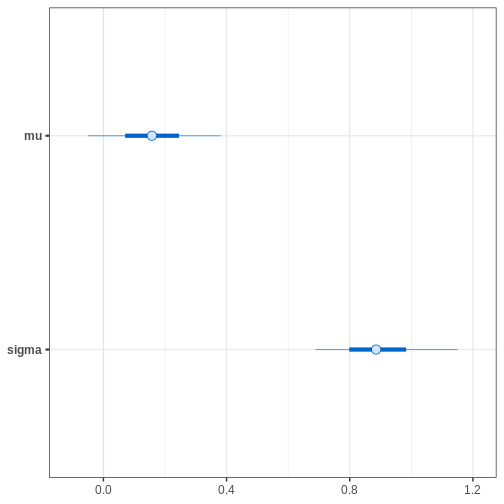

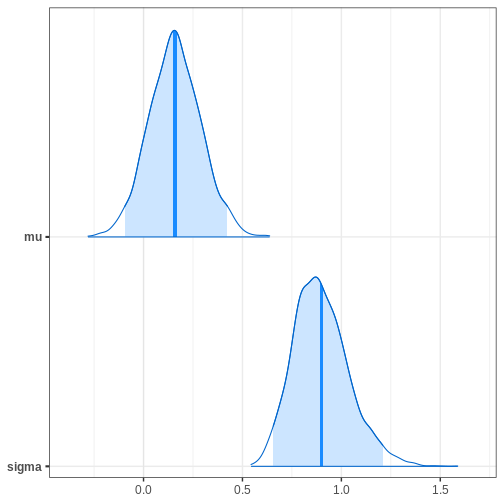

Hyperparameters

Let’s then plot the population distribution’s parameters, that is, the hyperparameters. The sample-based values are included in the plots of \(\mu_\mu\) and \(\sigma_\mu\) (why not for the other two hyperparameters?). It seems that the model has slightly underestimated the overall average and variance of the mean parameter \(\mu\), which is not suprising given the low number of data points.

R

## Population distributions:

population_samples_l <- rstan::extract(normal_hier_fit,

c("mu_mu", "sigma_mu", "alpha_sigma", "beta_sigma")) %>%

do.call(cbind, .) %>%

set_colnames(c("mu_mu", "sigma_mu", "alpha_sigma", "beta_sigma")) %>%

data.frame() %>%

mutate(sample = 1:nrow(.)) %>%

gather(key = "hyperpar", value = "value", -sample)

ggplot() +

geom_histogram(data = population_samples_l,

aes(x = value, y = after_stat(density)),

fill = posterior_color,

bins = 100) +

geom_vline(data = height_women %>%

rename_with(~ c('mu', 'sigma'), 3:4) %>%

filter(Sex == "Girls") %>%

summarise(mu_mu = mean(mu), sigma_mu = sd(mu)) %>%

gather(key = "hyperpar", value = "value"),

aes(xintercept = value)

)+

facet_wrap(~hyperpar, scales = "free")

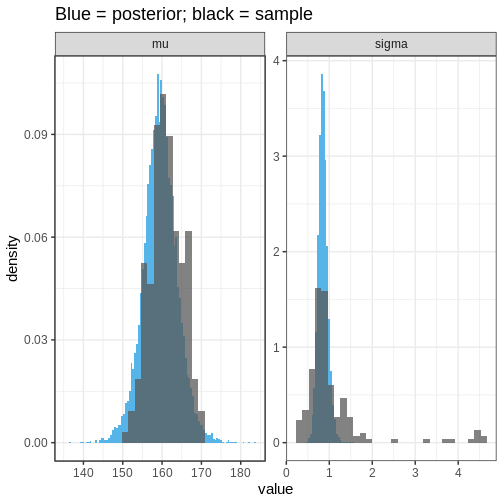

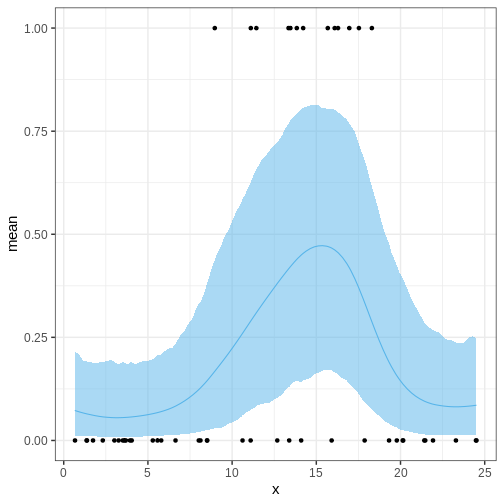

Population distributions

Let’s then plot the population distributions and compare to the true sample means and standard errors.

R

population_l <- rstan::extract(normal_hier_fit, c("mu_tilde", "sigma_tilde")) %>%

do.call(cbind, .) %>%

data.frame() %>%

set_colnames( c("mu", "sigma")) %>%

mutate(sample = 1:nrow(.)) %>%

gather(key = "par", value = "value", -sample)

ggplot() +

geom_histogram(data = population_l,

aes(x = value, y = after_stat(density)),

bins = 100, fill = posterior_color) +

geom_histogram(data = height_women %>%

rename_with(~ c('mu', 'sigma'), 3:4) %>%

gather(key = "par", value = "value", -c(Country, Sex)) %>%

filter(Sex == "Girls"),

aes(x = value, y = after_stat(density)),

alpha = 0.75, bins = 30) +

facet_wrap(~par, scales = "free") +

labs(title = "Blue = posterior; black = sample")

We can see that the population distribution is able to capture the

measured average heights and standard deviations relatively well,

although the within-country variation is estimated to be too

concentrated. However, remember that these estimates are based on a

limited sample: 10 out of 200 countries with 10 individuals in each

group.

We can see that the population distribution is able to capture the

measured average heights and standard deviations relatively well,

although the within-country variation is estimated to be too

concentrated. However, remember that these estimates are based on a

limited sample: 10 out of 200 countries with 10 individuals in each

group.

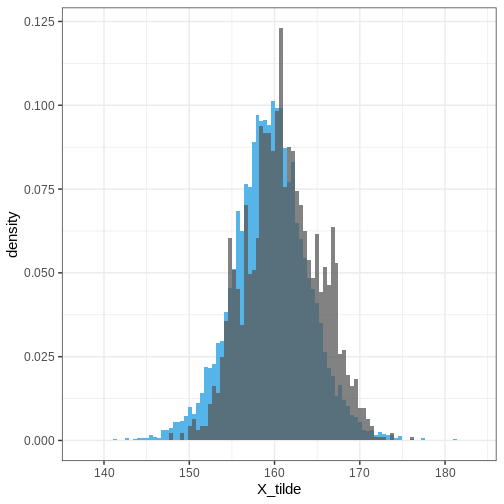

Posterior predictive distribution

Finally, let’s plot the posterior predictive distribution for the whole population and overlay it with the simulated data based on all countries.

R

# For each country, generate some random girl's heights

height_all <- lapply(1:nrow(height_women), function(i) {

my_df <- height_women[i, ] %>%

rename_with(~ c('mu', 'sigma'), 3:4)

data.frame(Country = my_df$Country,

Sex = my_df$Sex,

# Random normal values based on sample mu and sd

Height = rnorm(N, my_df$mu, my_df$sigma))

}) %>%

do.call(rbind, .)

R

# Extract the posterior predictive distribution

PPD <- rstan::extract(normal_hier_fit, c("X_tilde")) %>%

data.frame() %>%

set_colnames( c("X_tilde")) %>%

mutate(sample = 1:nrow(.))

ggplot() +

geom_histogram(data = PPD,

aes(x = X_tilde, y = after_stat(density)),

bins = 100,

fill = posterior_color) +

geom_histogram(data = height_all,

aes(x = Height, y = after_stat(density)),

alpha = 0.75,

bins = 100)

Extensions

Here, we analyzed the height for women in a randomly chosen countries using a hierarchical model. The model could be extended further, for instance, by adding hierarchy between sexes, continents, developed/developing countries etc.

- Hierarchical models are appropriate for scenarios where the study population naturally divides into subgroups.

- Hierarchical models borrow statistical strength across the population groups.

- Population distributions hold information about the variation of the model parameters over the whole population.

Reading

- Bayesian Data Analysis (3rd ed.): Ch. 5

- Statistical Rethinking (2nd ed.): Ch. 13

- Bayes Rules!: Ch. 15-19

References

[1] Height: Height and body-mass index trajectories of school-aged children and adolescents from 1985 to 2019 in 200 countries and territories: a pooled analysis of 2181 population-based studies with 65 million participants. Lancet 2020, 396:1511-1524

Content from Model comparison

Last updated on 2025-11-10 | Edit this page

Estimated time: 62 minutes

Overview

Questions

- How can competing models be compared?

Objectives

Get a basic understanding of comparing models with

posterior predictive check

information criteria

Bayesian cross-validation

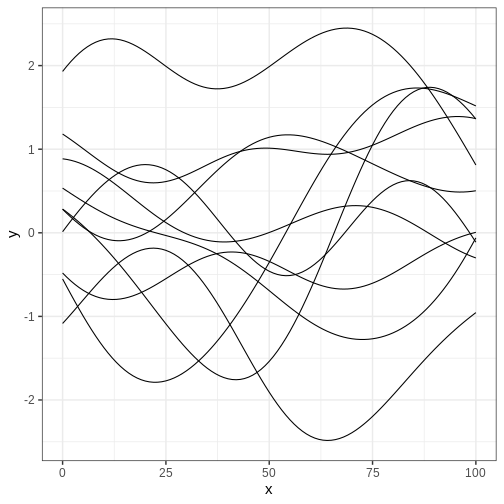

There is often uncertainty about which model would be the most appropriate choice a data being analysed. The aim of this episode is to introduce some tools that can be used to compare models systematically. We will explore three different approaches.

The first one is the posterior predictive check, which involves comparing a fitted model’s predictions with the observed data. The second approach is to use information criteria, which measure the balance between model complexity and goodness-of-fit. The episode concludes with Bayesian cross-validation.

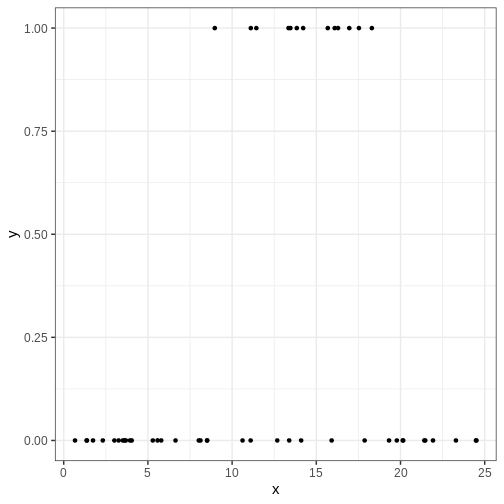

Data

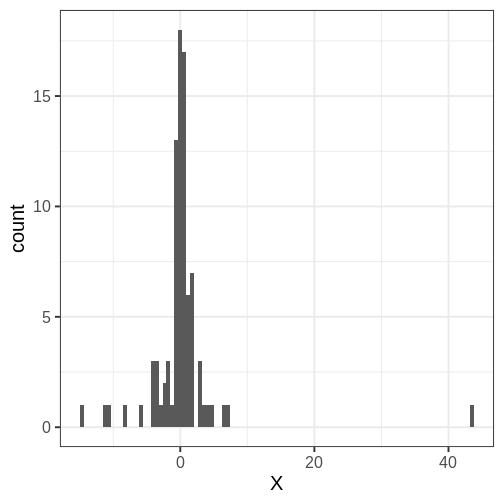

Throughout the chapter, we will use the same simulated data set in the examples, a set of \(N=88\) univariate numerical data points. The data is included in the course’s data folder at

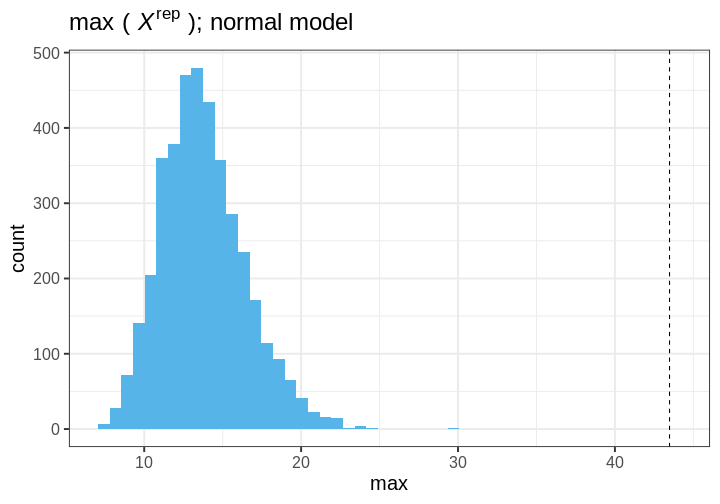

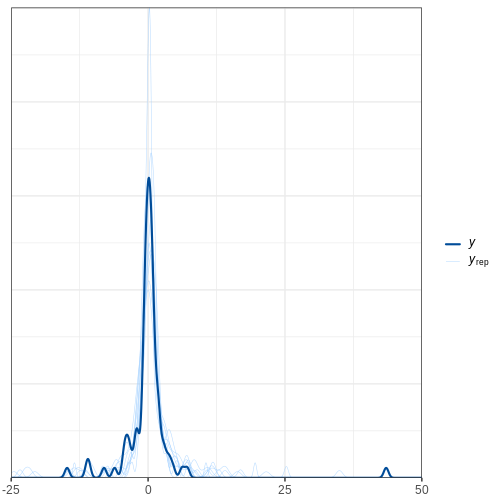

Looking at the data histogram, it’s evident that the data is approximately symmetrically distributed around 0. However, there is some dispersion in the data, and an extreme positive value, suggesting that the tails might be longer than those of a normal distribution. The Cauchy distribution is a potential alternative and below we will compare the suitability of these two distributions on this data.

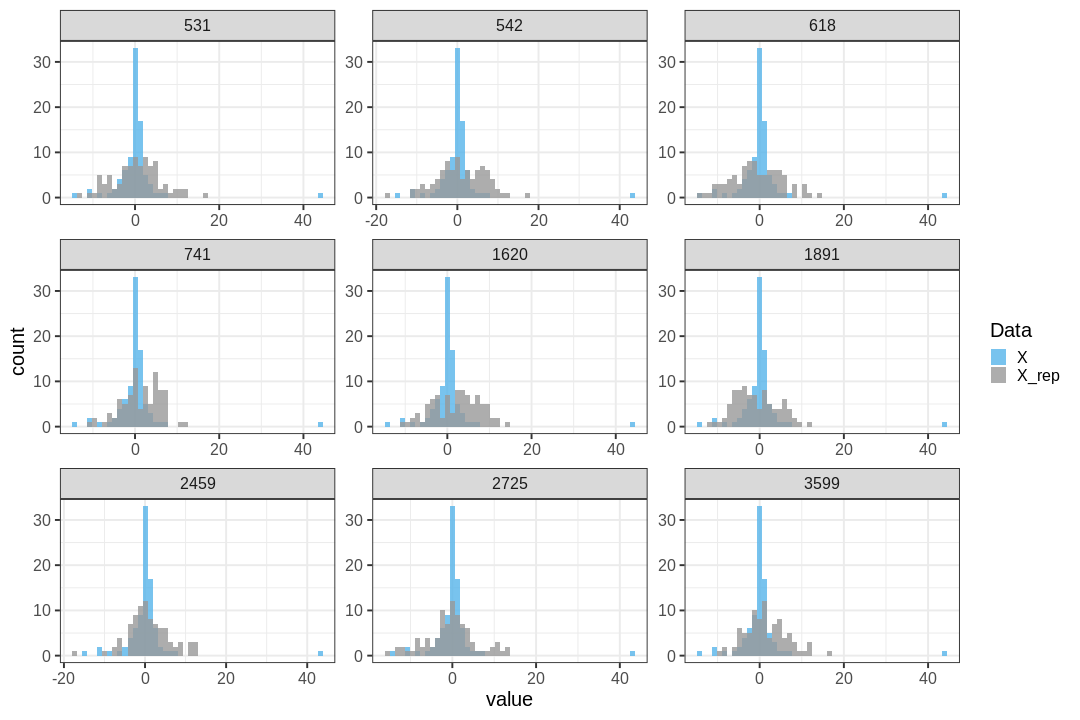

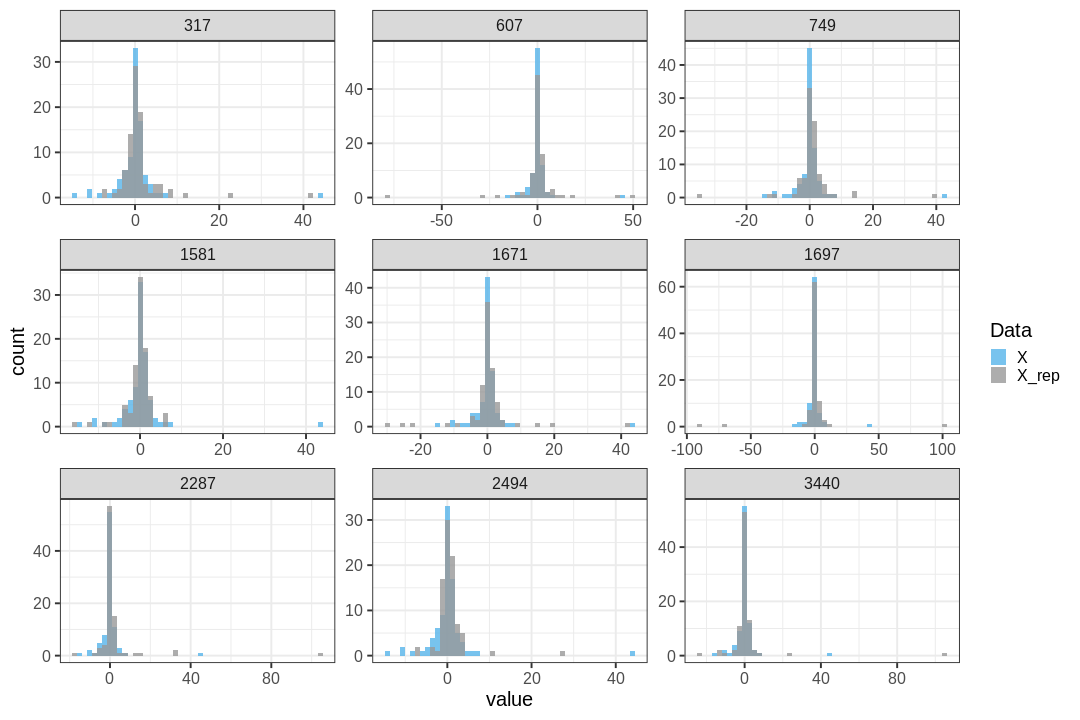

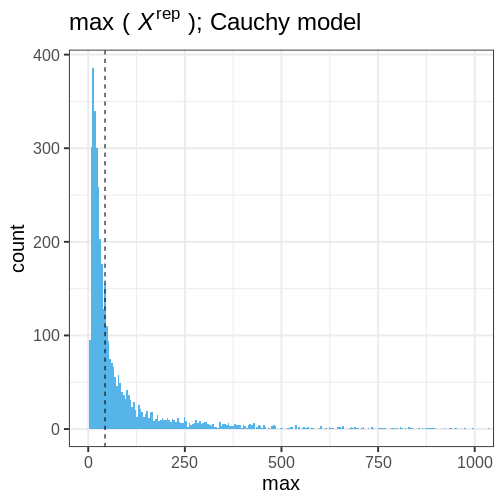

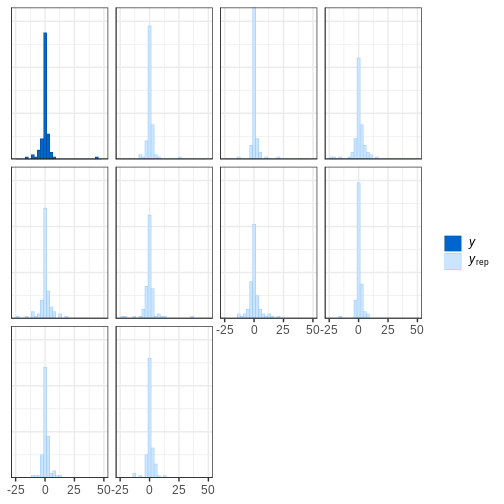

Posterior predictive check

The idea of posterior predictive checking is to use the posterior predictive distribution to simulate a replicate data set and compare it to the observed data. The reasoning behind this approach is that if the model is a good fit, then replicate data should look similar the observed one. Qualitative discrepancies between the simulated and observed data can imply that the model does not match the properties of the data or the domain.

The comparison can be done in different ways. Visual comparison is an option but a more rigorous approach is to compute the posterior predictive p-value (\(p_B\)), which measures how well the model can reproduce the observed data. Computing the \(p_B\) requires specifying a statistic whose value is compared between the posterior predictions and the observations.

The steps of a posterior predictive check can be formulated in the following points:

- Generate replicate data: Use the posterior predictive distribution to simulate replicate datasets \(X^{rep}\) with characteristics matching the observed data. In our example, this amounts to generating data with \(N=88\) for each posterior sample.

- Choose test quantity \(T(X)\): Choose an aspect of the data that you wish to check. We’ll use the maximum value as the test quantity and compute it for the observed data and each replicate. It’s important to note that not every imaginable data quantity will make a good \(T(X)\), see chapter 6.3 in BDA3 for details.

- Compute \(p_B\): The posterior predictive p-value is defined as the probability \(Pr(T(X^{rep}) \geq T(X) | X)\), that is, the probability that the predictions produce test quantity values at least as extreme as those found in the data. Using samples, it is computed as the proportion of replicate data sets with \(T\) not smaller than that of \(T(X)\). The closer the \(p_B\)-value is to 1 (or 0), the larger the evidence that the model cannot properly emulate the data.

Next we will perform these steps for the normal and Cauchy models.

Normal model

Below is a Stan program for the normal model that produces the

replicate data in the generated quantities block. The values of

X_rep are generated in a loop using the random number

generator normal_rng. Notice that a single posterior sample

\((\mu_s, \sigma_s)\) is used for each

evaluation of the generated quantities block, resulting in a

distribution of \(X^{rep}\)

STAN

data {

int<lower=0> N;

vector[N] X;

}

parameters {

real<lower=0> sigma;

real mu;

}

model {

X ~ normal(mu, sigma);

mu ~ normal(0, 1);

sigma ~ gamma(2, 1);

}

generated quantities {

vector[N] X_rep;

for(i in 1:N) {