Content from Running commands with Snakemake

Last updated on 2024-10-07 | Edit this page

Estimated time: 60 minutes

Overview

Questions

- How do I run a simple command with Snakemake?

Objectives

- Create a Snakemake recipe (a Snakefile)

- Use Snakemake to count the lines in a FASTQ file

Looking at the sample data

You should have the sample data files unpacked already (if not, refer

back to the lesson setup). Under yeast/reads you’ll see a

number of FASTQ files (extension .fq). These represent

short paired RNA-seq reads from an experiment on yeast cultured under

three experimental conditions. For now we’ll just look at one single

file, ref1_1.fq.

In the terminal:

Files in FASTQ format have 4 lines for each sequence.

- Header line, beginning with

@ - Sequence

+- Encoded quality per base

Let’s count the number of lines in the file with wc -l.

Again, in the shell:

We can redirect this result to a file like so:

BASH

$ wc -l reads/ref1_1.fq > ref1_1.fq.count

$ head -v *.count

==> ref1_1.fq.count <==

58708 reads/ref1_1.fqThe sample dataset

The sample dataset represents a transcriptomics experiment in brewer’s yeast (Saccharomyces cerevisiae) under three conditions:

- etoh60 - Treated with 60% ethanol

- temp33 - Treated at 33 degrees celsius

- ref - Untreated

For each condition there are 3 repeats, making 9 total samples. For

each, total RNA (or rather, cDNA) was sequenced on an Illumina HiSeq

instrument. For each repeat there is a pair of files as the sequencing

is double-ended, so for example reads/etoh60_3_2.fq

contains the second of the read pairs from the third ethanol-treated

sample.

Don’t worry about the biological meaning of this set-up. In the course, we’re only going to get as far as assessing the quality of the data and a very preliminary analysis. The data has been subsampled to a fraction of the original size to make filtering and alignment operations fast, so any deeper analysis is not going to yield meaningful results.

As well as the reads, we have a transcriptome for the yeast. This comes in a single FASTA file (as opposed to the cDNA reads which are in FASTQ format) which has been GZip compressed.

Making a Snakefile

Within the yeast directory, edit a new text file named

Snakefile.

Contents of Snakefile:

rule countlines:

output: "ref1_1.fq.count"

input: "reads/ref1_1.fq"

shell:

"wc -l reads/ref1_1.fq > ref1_1.fq.count"Key points about this file

- The file is named

Snakefile- with a capitalSand no file extension. - Some lines are indented. Indents must be with space characters, not tabs. See the setup section for how to make your text editor do this.

- The rule definition starts with the keyword

rulefollowed by the rule name, then a colon. - We named the rule

countreads. You may use letters, numbers or underscores, but the rule name must begin with a letter and may not be a keyword. - The keywords

input,output,shellare all followed by a colon. - The file names and the shell command are all in

"quotes". - The output filename is given before the input filename. In fact, Snakemake doesn’t care what order they appear in but we give the output first throughout this course. We’ll see why soon.

Back in the shell we’ll run our new rule. At this point, if there were any missing quotes, bad indents, etc. we may see an error.

For these early examples, we’ll always run Snakemake with the

-j1, -F and -p options. Later

we’ll look more deeply at these and other available command-line options

to Snakemake.

In the first few episodes we always run Snakemake with the

-F flag, and it’s not explained what this does until Ep.

04. The rationale is that the default Snakemake behaviour when pruning

the DAG leads to learners seeing different output (typically the message

“nothing to be done”) when repeating the exact same command. This can

seem strange to learners who are used to scripting and imperative

programming.

The internal rules used by Snakemake to determine which jobs in the

DAG are to be run, and which skipped, are pretty complex, but the

behaviour seen under -F is much more simple and consistent;

Snakemake simply runs every job in the DAG every time. You can think of

-F as disabling the lazy evaluation feature of Snakemake,

until we are ready to properly introduce and understand it.

Running Snakemake

Run snakemake --help | less to see the help for all

available options. What does the -p option in the

snakemake command above do?

- Protects existing output files

- Prints the shell commands that are being run to the terminal

- Tells Snakemake to only run one process at a time

- Prompts the user for the correct input file

Hint: you can search in the text by pressing /, and

quit back to the shell with q

- Prints the shell commands that are being run to the terminal

This is such a useful thing we don’t know why it isn’t the default!

The -j1 option is what tells Snakemake to only run one

process at a time, and we’ll stick with this for now as it makes things

simpler. The -F option tells Snakemake to always recreate

output files, and we’ll learn about protected outputs much later in the

course. Answer 4 is a total red-herring, as Snakemake never prompts

interactively for user input.

Counting sequences in a FASTQ file

FASTQ files contain 4 lines per sequence, as noted above. We can get

BASH to divide the output of wc by 4 to get the number of

sequences:

OUTPUT

$ echo $(( $(wc -l <reads/ref1_1.fq) / 4 ))

14677Note that the input filename is now preceeded by <.

This is a little trick to get wc to print only the number

of lines, and not the filename. The $( ... ) syntax

captures this output value and the $(( ... )) syntax

encloses an arithmetic expression, which needs to be printed with

echo. Don’t worry if this is unfamiliar - you just need to

know that this is a shell command you can copy and use to count the

sequences.

A command is presented to count the sequences in a FASTQ file:

$ echo $(( $(wc -l <file.fq) / 4 ))Understanding this in depth involves some advanced shell concepts

that learners will not necessarily be familiar with. However, other

alternatives involve extra software, or use curly brackets (which would

have to be doubled-up) or are not robust (eg.

grep '^@' file.fq).

Counting sequences in Snakemake

Modify the Snakefile to count the number of

sequences in reads/ref1_1.fq, rather than

the number of lines.

- Rename the rule to “countreads”

- Keep the output file name the same

- Remember that the result needs to go into the output file, not just be printed on the screen.

rule countreads:

output: "ref1_1.fq.count"

input: "reads/ref1_1.fq"

shell:

"echo $(( $(wc -l <reads/ref1_1.fq) / 4 )) > ref1_1.fq.count"Counting sequences in Snakemake (continued)

Add a second rule to count the sequences in

reads/etoh60_1_1.fq. Add this to the same Snakefile you

already made, under the “countreads” rule, and run your rules in the

terminal. When running the snakemake command you’ll need to

tell Snakemake to make both the output files.

You can choose whatever name you like for this second rule, but it can’t be “countreads” as rule names need to be unique within a Snakefile. So in this example answer we use “countreads2”.

rule countreads2:

output: "etoh60_1_1.fq.count"

input: "reads/etoh60_1_1.fq"

shell:

"echo $(( $(wc -l <reads/etoh60_1_1.fq) / 4 )) > etoh60_1_1.fq.count"Then in the shell…

If you think writing a separate rule for each output file is silly, you are correct. We’ll address this next!

For reference, this is the full Snakefile we should have by the end of this episode.

- Before running Snakemake you need to write a Snakefile

- A Snakefile is a text file which defines a list of rules

- Rules have inputs, outputs, and shell commands to be run

- You tell Snakemake what file to make and it will run the shell command defined in the appropriate rule

Content from Placeholders and wildcards

Last updated on 2024-10-07 | Edit this page

Estimated time: 70 minutes

Overview

Questions

- How do I make a generic rule?

- How does Snakemake decide what rule to run?

Objectives

- Use Snakemake to count the sequences in any file

- Understand the basic steps Snakemake goes through when running a workflow

- See how Snakemake deals with some errors

For reference, this is the Snakefile you should have to start the episode.

Trimming and counting reads

In the previous episode we used Snakemake to count the sequences in two FASTQ files. Later in this episode we will apply a filtering operation to remove low-quality sequences from the input files, and the ability to count the reads will show us how many reads have been discarded by the filter.

We have eighteen input files to process and we would like to avoid writing eighteen near-identical rules, so the first job is to make the existing read-counting rule generic - a single rule to count the reads in any file. We will then add a filtering rule which will also be generic.

Wildcards and placeholders

To make a rule that can process more than one possible input file we

need placeholders and wildcards. Here

is a new rule that will count the sequences in any of

the .fq files.

# New generic read counter

rule countreads:

output: "{myfile}.fq.count"

input: "reads/{myfile}.fq"

shell:

"echo $(( $(wc -l <{input}) / 4 )) > {output}"As a reminder, here’s the non-generic version from the last episode:

# Original version

rule countreads:

output: "ref1_1.fq.count"

input: "reads/ref1_1.fq"

shell:

"echo $(( $(wc -l <reads/ref1_1.fq) / 4 )) > ref1_1.fq.count"The new rule has replaced explicit file names with things in

{curly brackets}, specifically {myfile},

{input} and {output}.

{myfile} is a wildcard

Wildcards are used in the input and output

lines of the rule to represent parts of filenames. Much like the

* pattern in the shell, the wildcard can stand in for any

text in order to make up the desired filename. As with naming your

rules, you may choose any name you like for your wildcards, so here we

used myfile. If myfile is set to

ref1_1 then the new generic rule will have the same inputs

and outputs as the original rule. Using the same wildcards in the input

and output is what tells Snakemake how to match input files to output

files.

If two rules use a wildcard with the same name then Snakemake will treat them as different entities

- rules in Snakemake are self-contained in this way.

{input} and {output} are

placeholders

Placeholders are used in the shell section of a rule,

and Snakemake will replace them with appropriate values -

{input} with the full name of the input file, and

{output} with the full name of the output file – before

running the command.

If we had wanted to include the value of the myfile

wildcard directly in the shell command we could have used

the placeholder {wildcards.myfile} but in most cases, as

here, we just need the {input} and {output}

placeholders.

Running the general-purpose rule

Modify your Snakefile to incorporate the changes described above, using the wildcard and input/output placeholders. You should resist the urge to copy-and-paste from this workbook, but rather edit the file by hand, as this will stick better in your memory.

You should delete the now-redundant second rule, so your Snakefile should contain just one rule named countreads.

Using this new rule, determine: how many reads are in the

temp33_1_1.fq file?

Choosing the right wildcards

Our rule puts the sequence counts into output files named like

ref1_1.fq.count. How would you have to change the

“countreads” rule definition if you wanted:

the output file for

reads/ref1_1.fqto becounts/ref1_1.txt?the output file for

reads/ref1_1.fqto beref1_counts/fq.1.count(forreads/ref1_2.fqto beref1_counts/fq.2.count, etc.)?the output file for

reads/ref1_1.fqto becountreads_1.txt?

In all cases, there is no need to change the shell part

of the rule at all.

output: "counts/{myfile}.txt"

input: "reads/{myfile}.fq"This can be done just by changing the output: line. You

may also have considered the need to mkdir counts but in

fact this is not necessary as Snakemake will create the output directory

path for you before it runs the rule.

output: "{sample}_counts/fq.{readnum}.count"

input: "reads/{sample}_{readnum}.fq"In this case, it was necessary to introduce a second wildcard,

because the elements in the output file name are split up. The names

chosen here are {sample} and {readnum} but you

could choose any names as long as they match between the

input and output parts. Once again, the output

directory will be created for us by Snakemake, so the shell

command does not need to change.

This one isn’t possible, because Snakemake cannot determine which input file you want to count by matching wildcards on the file name “countreads_1.txt”. You could try a rule like this:

output: "countreads_{readnum}.count"

input: "reads/ref1_{readnum}.fq"…but it only works because “ref1” is hard-coded into the

input line, and the rule will only work on this specific

sample, not the other eight in our sample dataset. In general, input and

output filenames need to be carefully chosen so that Snakemake can match

everything up and determine the right input from the output

filename.

Snakemake order of operations

We’re only just getting started with some simple rules, but it’s worth thinking about exactly what Snakemake is doing when you run it. There are three distinct phases:

- Prepares to run:

- Reads in all the rule definitions from the Snakefile

- Plans what to do:

- Sees what file(s) you are asking it to make

- Looks for a matching rule by looking at the

outputs of all the rules it knows - Fills in the wildcards to work out the

inputfor this rule - Checks that this input file is actually available

- Runs the steps:

- Creates the directory for the output file, if needed

- Removes the old output file if it is already there

- Only then, runs the shell command with the placeholders replaced

- Checks that the command ran without errors and made the new output file as expected

For example, if we now ask Snakemake to generate a file named

wibble_1.fq.count:

OUTPUT

$ snakemake -j1 -F -p wibble_1.fq.count

Building DAG of jobs...

MissingInputException in line 1 of /home/zenmaster/data/yeast/Snakefile:

Missing input files for rule countreads:

reads/wibble_1.fqSnakemake sees that a file with a name like this could be produced by

the countreads rule. However, when it performs the wildcard

substitution it sees that the input file would need to be named

reads/wibble_1.fq, and there is no such file available.

Therefore Snakemake stops and gives an error before any shell commands

are run.

Dry-run (-n) mode

The amount of checking may seem pedantic right now, but as the workflow gains more steps this will become very useful to us indeed.

Filtering the reads for quality

Adding a rule for filtering the reads

Here is a command that will trim and filter low quality reads from a FASTQ file.

Add a second rule to your Snakefile to run this trimmer. You should make it so that valid outputs are files with the same name as the input, but in a subdirectory named ‘trimmed’, for example:

- trimmed/ref1_1.fq

- trimmed/temp33_1_1.fq

- etc.

# Trim any FASTQ reads for base quality

rule trimreads:

output: "trimmed/{myfile}.fq"

input: "reads/{myfile}.fq"

shell:

"fastq_quality_trimmer -t 20 -l 100 -o {output} <{input}"Bonus points if you added any comments to the code!

And of course you can run your new rule as before, to make one or more files at once. For example:

About fastq_quality_trimmer

fastq_quality_trimmer is part of the FastX toolkit and

performs basic trimming on single FASTQ files. The options

-t 20 -l 100 happen to be reasonable quality cutoffs for

this dataset. This program reads from standard input so we’re using

< to specify the input file, and the -o

flag specifies the output name.

For reference, this is a Snakefile incorporating the changes made in this episode.

- Snakemake rules are made generic with placeholders and wildcards

- Snakemake chooses the appropriate rule by replacing wildcards such the the output matches the target

- Placeholders in the shell part of the rule are replaced with values based on the chosen wildcards

- Snakemake checks for various error conditions and will stop if it sees a problem

Content from Chaining rules

Last updated on 2025-02-25 | Edit this page

Estimated time: 50 minutes

Overview

Questions

- How do I combine rules into a workflow?

- How can I make a rule with multiple input files?

Objectives

- Use Snakemake to filter and then count the sequences in a FASTQ file

- Understand how rules are linked by filename patterns

- Add a rule that calculates the number of reads filtered out

For reference, this is the Snakefile you should have to start the episode.

A pipeline of multiple rules

Our goal at this point is to apply a quality filter to our reads and to see how many reads are discarded by that filter for any given sample. We are not quite there yet, but we do have a countreads rule and a trimreads rule. Following the previous chapter, the contents of the Snakefile should be:

# New generic read counter

rule countreads:

output: "{myfile}.fq.count"

input: "reads/{myfile}.fq"

shell:

"echo $(( $(wc -l <{input}) / 4 )) > {output}"

# Trim any FASTQ reads for base quality

rule trimreads:

output: "trimmed/{myfile}.fq"

input: "reads/{myfile}.fq"

shell:

"fastq_quality_trimmer -t 20 -l 100 -o {output} <{input}"The missing piece is that there is no way to count the reads in the trimmed file. The countreads rule only takes input reads from the reads directory, whereas the trimreads rule puts all results into the trimmed directory.

To fix this, we could move the trimmed reads into the reads directory, or add a second read-counting rule, but the most elegant solution here is to make the countreads rule even more generic, so it can count everything.

# New even-more-generic read counter

rule countreads:

output: "{indir}.{myfile}.fq.count"

input: "{indir}/{myfile}.fq"

shell:

"echo $(( $(wc -l <{input}) / 4 )) > {output}"Now, the rule no longer requires the input files to be in the “reads”

directory. The directory name has been replaced by the

{indir} wildcard. We can request Snakemake to create a file

following this new output pattern:

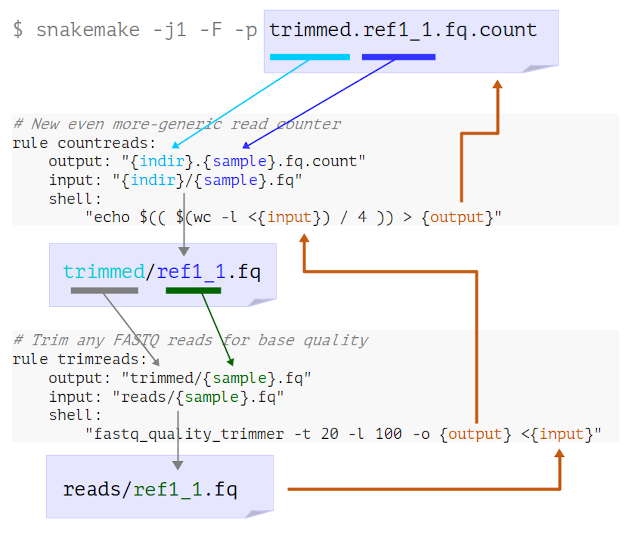

Look at the logging messages that Snakemake prints in the terminal. What has happened here?

- Snakemake looks for a rule to make

trimmed.ref1_1.fq.count - It determines that “countreads” can make this if

indir=trimmedandmyfile=ref1_1 - It sees that the input needed is therefore

trimmed/ref1_1.fq

- Snakemake looks for a rule to make

trimmed/ref1_1.fq - It determines that “trimreads” can make this if

myfile=ref1_1 - It sees that the input needed is therefore

reads/ref1_1.fq

- Now Snakemake has reached an available input file, it runs both steps to get the final output

Here’s a visual representation of this process:

A figure is shown here to illustrate the way Snakemake finds rules by wildcard matching and then tracks back until it runs out of rule matches and finds a file that it already has. You may find that showing an animated version of this is helpful, in which case there are some slides here.

This, in a nutshell, is how we build workflows in Snakemake.

- Define rules for all the processing steps

- Choose

inputandoutputnaming patterns that allow Snakemake to link the rules - Tell Snakemake to generate the final output files

If you are used to writing regular scripts this takes a little getting used to. Rather than listing steps in order of execution, you are always working backwards from the final desired result. The order of operations is determined by applying the pattern matching rules to the filenames, not by the order of the rules in the Snakefile.

Choosing file name patterns

Chaining rules in Snakemake is a matter of choosing filename patterns that connect the rules. There’s something of an art to it, and most times there are several options that will work, but in all cases the file names you choose will need to be consistent and unabiguous.

Seeing how many reads were discarded

How many reads were removed?

How many reads were removed from the ref1 sample by the filtering step?

After generating both the trimmed and untrimmed .count

files we can get this information.

BASH

$ snakemake -j1 -F -p reads.ref1_1.fq.count trimmed.ref1_1.fq.count

$ head *.ref1_1.fq.count

==> reads.ref1_1.fq.count <==

14677

==> trimmed.ref1_1.fq.count <==

14278Subtracting these numbers shows that 399 reads have been removed.

To finish this part of the workflow we will add a third rule to

perform the calculation for us. This rule will need to take both of the

.count files as inputs. We can use the arithmetic features

of the Bash shell to do the subtraction.

Check that this command runs in your terminal. Take care to get the symbols and spaces all correct. As with the original countreads rule, this shell syntax may well be unfamiliar to you, but armed with this working command we can simply substitute the names of any two files we want to compare.

We can put the shell command into a rule.

rule calculate_difference:

output: "ref1_1.reads_removed.txt"

input:

untrimmed = "reads.ref1_1.fq.count",

trimmed = "trimmed.ref1_1.fq.count",

shell:

"echo $(( $(<{input.untrimmed}) - $(<{input.trimmed}) )) > ref1_1.reads_removed.txt"Note that:

- The above rule has two inputs, trimmed and untrimmed

- We can choose what to call the individual inputs, so use descriptive names

- There is a newline after

input:and the next two lines are indented - The

=and,symbols are needed - You can leave off the final comma, but it’s generally easier to just put one on every line

- We refer to the input file names as

{input.untrimmed}and{input.trimmed} - There is only one output, but we can have multiple named outputs too.

Making this rule generic

Alter the above rule to make it generic by adding suitable wildcards. Use the generic rule to calculate the number of reads removed from the etoh60_1_1.fq input file.

rule calculate_difference:

output: "{myfile}.reads_removed.txt"

input:

untrimmed = "reads.{myfile}.fq.count",

trimmed = "trimmed.{myfile}.fq.count",

shell:

"echo $(( $(<{input.untrimmed}) - $(<{input.trimmed}) )) > {output}"Here, I’ve chosen to use the wildcard name {myfile}

again, but you can use any name you like. We do also need to ensure that

the output file is referenced using the {output}

placeholder.

Processing all the inputs

It would be nice to have Snakemake run this automatically for all our samples. We’ll see how to do this later, in episode 6.

Outputs first?

The Snakemake approach of working backwards from the desired output

to determine the workflow is why we’re putting the output

lines first in all our rules - to remind us that these are what

Snakemake looks at first!

Many users of Snakemake, and indeed the official documentation,

prefer to have the input first, so in practise you should

use whatever order makes sense to you.

For reference, this is a Snakefile incorporating the changes made in this episode.

- Snakemake links up rules by iteratively looking for rules that make missing inputs

- Careful choice of filenames allows this to work

- Rules may have multiple named input files (and output files)

Content from Complex outputs, logs and errors

Last updated on 2025-02-25 | Edit this page

Estimated time: 50 minutes

Overview

Questions

- How can we start to analyse the sample data?

- What can cause a job in Snakemake to fail?

Objectives

- Add an RNA quantification step in the data analysis

- Learn about adding log outputs to rules

- Understand why and how Snakemake deals with missing outputs

For reference, this is the Snakefile you should have to start the episode.

Adding a transcript counting step to the pipeline

The main point of the following exercise is to get some insight into what the learners might want to do with Snakemake. You should focus on the answers received rather than dwelling on the sample answers below.

Thinking about your own workflows

Think about any data processing task you have done yourself, and write down three or four steps from that workflow.

What were the inputs to, and outputs from, each step?

How did the steps connect up, in terms of data going from one to the next? You may want to sketch this out and use arrows to indicate the linkages between the steps.

Introducing Kallisto

Let’s add another rule to our Snakefile. The reads we have are from a yeast RNA-seq experiment so we might reasonably want to quantify transcript abundance using the kallisto program. The command to do so looks like this:

This command has three input files:

- The transcriptome index

- The first of the paired FASTQ files

- The second of the paired FASTQ files

And it produces a directory of output files. According to the Kallisto manual this directory will have three output files in it:

- abundance.h5

- abundance.tsv

- run_info.json

We’ll not worry about what the contents of these files mean just now,

or how Kallisto generates them. We just know that we want to run the

kallisto quant command and have it make the output files,

and the output files are going to be useful once we add later steps in

the analysis.

Making a rule with multiple inputs and outputs works much like we previously saw.

rule kallisto_quant:

output:

h5 = "kallisto.{sample}/abundance.h5",

tsv = "kallisto.{sample}/abundance.tsv",

json = "kallisto.{sample}/run_info.json",

input:

index = "Saccharomyces_cerevisiae.R64-1-1.kallisto_index",

fq1 = "trimmed/{sample}_1.fq",

fq2 = "trimmed/{sample}_2.fq",

shell:

"kallisto quant -i {input.index} -o kallisto.{wildcards.sample} {input.fq1} {input.fq2}"In this course, we introduce named inputs and outputs before lists of inputs and outputs. This results in shell commands like:

"kallisto quant -i {input.index} -o kallisto.{wildcards.sample} {input.fq1} {input.fq2}"

Rather than the less readable version with a simple list of inputs:

"kallisto quant -i {input[0]} -o kallisto.{wildcards.sample} {input[1]} {input[2]}"

Later, we introduce lists of inputs in tandem with the

expand() function. Of course it is possible to have a list

of outputs, but this is uncommon and not needed to solve any of the

challenges in this course. In fact, introducing lists of outputs may

confuse learners as they may think it is possible for a rule to yield a

variable number of outputs in the manner of the old

dynamic() behaviour, which is not a thing.

There are many things to note here:

- The individual input and output files are all given names.

- We’ve used the wildcard name

{sample}rather than{myfile}because this will match only the sample name, egref1, notref1_1. Snakemake doesn’t care what name we use, but carefully chosen names make for more readable rules. - Because

kallisto quantonly takes the output directory name, we’ve used the placeholder{wildcards.sample}rather than{output}which would expand to the full file names. - We’ve chosen to only quantify the trimmed version of the reads.

- We don’t actually have the

{input.index}file yet. This will need to be created using thekallisto indexcommand.

Even though the rule is not going to work without the index, we can still run it to check that Snakemake is happy with the rule definition.

Running Kallisto on all replicates

If you are previously familiar with the Kallisto software, you may be thinking about running Kallisto on all replicates of the condition at once. We’ll look at this later in the course, but for now we will be running Kallisto once per sample, ie. once for each pair of FASTQ files.

Running the kallisto_quant rule

Given that the index input is missing, what would you expect Snakemake to do if the new rule was run now?

Try it by telling Snakemake to run the new rule on the files

ref1_1.fq and ref1_2.fq. Since the rule

defines multiple outputs, asking for any one of the output files will be

enough.

Building the index

Instruct Snakemake how to build the genome index as part of the pipeline by adding another rule. The command we need to run is:

The file to be indexed is

transcriptome/Saccharomyces_cerevisiae.R64-1-1.cdna.all.fa.gz.

As there is only one input to the rule you don’t have to give it a name,

but you may do so if you prefer.

Make it so that the terminal messages printed by the program are

captured to a file, and therefore your rule will have two separate

outputs: the index file and the messages file. Note

that the program prints messages on stderr, so you will need to

use >& rather than > to capture that

output.

The rule you write could look something like this, but there are many

variations that will work just as well. Since there is only one

transcriptome in the project, you may feel that use of the

{strain} wildcard is overkill, but who’s to say we might

not want to use another in future?

rule kallisto_index:

output:

idx = "{strain}.kallisto_index",

messages = "{strain}.kallisto_stderr",

input:

fasta = "transcriptome/{strain}.cdna.all.fa.gz"

shell:

"kallisto index -i {output.idx} {input.fasta} >& {output.messages}"Log outputs in Snakemake

All being well, our new rules are now ready to run Kallisto, and we can analyse any sample we like.

BASH

$ snakemake -j1 -F -p kallisto.ref3/abundance.h5

...lots of output...

4 of 4 steps (100%) done

Complete log: /home/zenmaster/data/yeast/.snakemake/log/2021-04-23T142649.632834.snakemake.logThere is one particular improvement we can make, since Snakemake has a dedicated rule field for outputs that are log files. These are mostly treated the same as regular outputs except that log files are always kept even if the job produces an error, so you can look at the log to help diagnose the error.

For an output to be treated as a log file, list it under

log: instead of output: and then within the

shell command use the placeholder {log} instead of

{output}.

Using an explicit log output

Modify the solution to the previous challenge so that it uses the

log keyword to capture the terminal output from

kallisto quant.

rule kallisto_index:

output:

idx = "{strain}.kallisto_index",

input:

fasta = "transcriptome/{strain}.cdna.all.fa.gz"

log:

messages = "{strain}.kallisto_stderr",

shell:

"kallisto index -i {output.idx} {input.fasta} >& {log.messages}"The order of the log:, output: and

input: parts can be however you like. In this case since

there is now a single input file, a single output file, and a single log

file, you may feel that there is no point naming them all.

rule kallisto_index:

output: "{strain}.kallisto_index"

input: "transcriptome/{strain}.cdna.all.fa.gz"

log: "{strain}.kallisto_stderr"

shell:

"kallisto index -i {output} {input} >& {log}"Dealing with a missing files error

We’ll end the chapter by looking at a common problem that can arise

if you mistype a file name in a rule. Remember that we wrote the rule

based on the expected output filenames given in the Kallisto manual. In

an older version of this manual there was a typo where the file names

were incorrectly given as abundances.h5 and

abundances.tsv, with the extra s on each.

It may seem silly to break the workflow when we just got it working, but it will be instructive, so edit the Snakefile and change these names to the incorrect versions.

rule kallisto_quant:

output:

h5 = "kallisto.{sample}/abundances.h5",

tsv = "kallisto.{sample}/abundances.tsv",

json = "kallisto.{sample}/run_info.json",

...To keep things tidy, this time we’ll manually remove the output directory.

And re-run. Note that the output file name you’ll need to use on the

command line must match the edited Snakefile, or you will get a

MissingRuleException.

OUTPUT

$ snakemake -j1 -F -p kallisto.ref1/abundances.h5

...

kallisto quant -i Saccharomyces_cerevisiae.R64-1-1.kallisto_index -o kallisto.ref1 trimmed/ref1_2.fq trimmed/ref1_2.fq

[quant] fragment length distribution will be estimated from the data

...more Kallisto output...

[ em] the Expectation-Maximization algorithm ran for 265 rounds

Waiting at most 5 seconds for missing files.

MissingOutputException in line 24 of /home/zenmaster/data/yeast/Snakefile:

Job Missing files after 5 seconds:

kallisto.ref1/abundances.h5

kallisto.ref1/abundances.tsv

This might be due to filesystem latency. If that is the case, consider to increase the wait time with --latency-wait.

Job id: 0 completed successfully, but some output files are missing. 0

File "/opt/python3.7/site-packages/snakemake/executors/__init__.py", line 583, in handle_job_success

File "/opt/python3.7/site-packages/snakemake/executors/__init__.py", line 259, in handle_job_success

Removing output files of failed job kallisto_quant since they might be corrupted:

kallisto.ref1/run_info.json

Shutting down, this might take some time.

Exiting because a job execution failed. Look above for error message

Complete log: /home/zenmaster/data/yeast/.snakemake/log/2021-04-23T142649.632834.snakemake.logThere’s a lot to take in here. Some of the messages are very informative. Some less so.

- Snakemake did actually run kallisto, as evidenced by the output from kallisto that we see on the screen.

- There is no obvious error message being reported by kallisto.

- Snakemake complains some expected output files are missing:

kallisto.ref1/abundances.h5andkallisto.ref1/abundances.tsv. - The third expected output file

kallisto.ref1/run_info.jsonwas found but has now been removed by Snakemake. - Snakemake suggest this might be due to “filesystem latency”.

This last point is a red herring. “Filesystem latency” is not an

issue here, and never will be since we are not using a network

filesystem. We know what the problem is, as we deliberately caused it,

but to diagnose an unexpected error like this we would investigate

further by looking at the kallisto.ref1 subdirectory.

Remember that Snakemake itself does not create any output files. It

just runs the commands you put in the shell sections, then

checks to see if all the expected output files have appeared.

So if the file names created by kallisto are not exactly the same as

in the Snakefile you will get this error, and you will, in this case,

find that some output files are present but others

(run_info.json, which was named correctly) have been

cleaned up by Snakemake.

Errors are normal

Don’t be disheartened if you see errors like the one above when first testing your new Snakemake pipelines. There is a lot that can go wrong when writing a new workflow, and you’ll normally need several iterations to get things just right. One advantage of the Snakemake approach compared to regular scripts is that Snakemake fails fast when there is a problem, rather than ploughing on and potentially running junk calculations on partial or corrupted data. Another advantage is that when a step fails we can safely resume from where we left off, as we’ll see in the next episode.

Finally, edit the names in the Snakefile back to the correct version and re-run to confirm that all is well. Assure yourself that that the rules are still generic by processing the temp33_1 sample too:

For reference, this is a Snakefile incorporating the changes made in this episode.

- Try out commands on test files before adding them to the workflow

- You can build up the workflow in the order that makes sense to you, but always test as you go

- Use log outputs to capture the messages printed by programs as they run

- If a shell command exits with an error, or does not yield an expected output then Snakemake will regard that as a failure and stop the workflow

Content from How Snakemake plans its jobs

Last updated on 2025-02-25 | Edit this page

Estimated time: 70 minutes

Overview

Questions

- How do I visualise a Snakemake workflow?

- How does Snakemake avoid unecessary work?

- How do I control what steps will be run?

Objectives

- View the DAG for our pipeline

- Understand the logic Snakemake uses when running and re-running jobs

For reference, this is the Snakefile you should have to start the episode.

The DAG

You may have noticed that one of the messages Snakemake always prints is:

OUTPUT

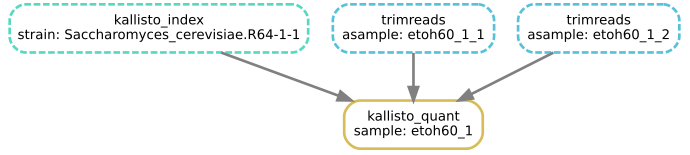

Building DAG of jobs...A DAG is a Directed Acyclic Graph and it can be pictured like so:

The above DAG is based on our existing rules, and shows all the jobs Snakemake would run to trim, count and quantify the ref1 sample.

Note that:

- A rule can appear more than once, with different wildcards (a rule plus wildcard values defines a job)

- A rule (here, calculate_difference) may not be used at all, if it is not required for the target outputs

- The arrows show dependency ordering between jobs

- Snakemake can run the jobs in any order that doesn’t break dependency - for example kallisto_quant cannot run until both kallisto_index and trimreads have completed, but it may run before or after countreads

- This is a work list, not a flowchart, so there are no if/else decisions or loops - Snakemake runs every job in the DAG exactly once

- The DAG depends both on the Snakefile and on the requested target outputs, and the files already present

- When building the DAG, Snakemake does not look at the shell part of the rules at all - only when running the DAG will Snakemake check that the shell commands are working and producing the expected output files

How many jobs?

If we asked Snakemake to run kallisto_quant on all three of the reference samples (ref1, ref2, ref3), how many jobs would that be in total?

10 in total:

- 3 * kallisto_quant

- 6 * trimreads

- 1 * kallisto_index

- 0 * countreads

- 0 * calculate_difference

Snakemake is lazy, and laziness is good

For the last few episodes, we’ve told you to run Snakemake like this:

As a reminder, the -j1 flag tells Snakemake to run one

job at a time, and -p is to print out the shell commands

before running them.

The -F flag turns on forceall mode, and in

normal usage you don’t want this.

At the end of the last chapter, we generated some kallisto results by running:

Now try without the -F option. Assuming that the output

files are already created, you’ll see this:

BASH

$ snakemake -j1 -p kallisto.temp33_1/abundance.h5

Building DAG of jobs...

Nothing to be done.

Complete log: /home/zenmaster/data/yeast/.snakemake/log/2021-04-23T172441.519500.snakemake.logIn normal operation, Snakemake only runs a job if:

- A target file you explicitly requested to make is missing

- An intermediate file is missing and it is needed in the process of making a target file

- Snakemake can see an input file which is newer than an output file

- A rule definition or configuration has changed since the output file was created

The last of these relies on a ledger that Snakemake saves into the

.snakemake directory.

Let’s demonstrate each of these in turn, by altering some files and

re-running Snakemake without the -F option.

This just re-runs kallisto_quant - the final step.

“Nothing to be done” - some intermediate output is missing but Snakemake already has the file you are telling it to make, so it doesn’t worry.

The touch command is a standard Linux command which sets

the timestamp of the file, so now the transcriptome looks to Snakemake

as if it was just modified.

Snakemake sees that one of the input files used in the process of

producing kallisto.temp33_1/abundance.h5 is newer than the

existing output file, so it needs to run the kallisto index and

kallisto quant steps again. Of course, the kallisto

quant step needs the trimmed reads which we deleted earlier, so now

the trimming step is re-run also.

Explicitly telling Snakemake what to re-run

The default timestamp-based logic is really useful when you want to:

- Change or add some inputs to an existing analysis without re-processing everything

- Continue running a workflow that failed part-way

In most cases you can also rely on Snakemake to detect when you have edited a rule, but sometimes you need to be explicit, for example if you have updated an external script or changed a setting which Snakemake doesn’t see.

The -R flag allows you to explicitly tell Snakemake that

a rule has changed and that all outputs from that rule need to be

re-evaluated.

Note on -R

Due to a quirk of the way Snakemake parses command-line options, you

need to make sure there are options after the -R ...,

before the list of target outputs. If you don’t do this, Snakemake will

think that the target files are instead items to add to the

-R list, and then when building the DAG it will just try to

run the default rule.

The easiest way is to put the -p flag before the target

outputs. Then you can list multiple rules to re-run, and also multiple

targets, and Snakemake can tell which is which.

BASH

$ snakemake -j1 -R trimreads kallisto_index -p kallisto.temp33_1/abundance.h5 kallisto.temp33_2/abundance.h5The reason for using the -p flag specifically is that

you pretty much always want this option.

The -f flag specifies that the target outputs named on

the command line should always be regenerated, so you can use this to

explicitly re-make specific files.

This always re-runs kallisto_quant, regardless of whether

the output file is there already. For all intermediate outputs,

Snakemake applies the default timestamp-based logic. Contrast with

-F which runs the entire DAG every time.

Visualising the DAG

Snakemake can draw a picture of the DAG for you, if you run it like this:

Using the --dag option implicitly activates the

-n (dry-run) option so that Snakemake will not actually run

any jobs, it will just print the DAG and stop. Snakemake prints the DAG

in a text format so we use the gm command to make this into

a picture and show it on the screen.

Note on gm display

The gm command is provided by the GraphicsMagick toolkit. On

systems where gm will not display an image directly, you

may instead save it to a PNG file. You will need the dot

program from the GraphViz package

installed.

The boxes drawn with dotted lines indicate steps that are not to be run, as the output files are already present and newer than the input files.

Visualising the effect of the -R

and -f flags

Run kallisto_quant on the first of the

etoh60 samples, then use the --dag option

as shown above to check:

How many jobs will run if you ask again to create this output with no

-f,-For-Roptions?How many if you use the

-foption?How many if you use the

-R trimreadsoption?How many if you edit the Snakefile so that the

qual_thresholdfortrimreadsis “22”, rather than “20”?

This is a way to make the Kallisto result in the first place:

- This command should show four boxes, but all are dotted so no jobs are actually to be run.

The

-fflag re-runs only the job to create the output file, so in this case one box is solid, and only that job will run.With

-R trimreads, the two trimreads jobs will re-run, and Snakemake sees that this also requires re-running kallisto_quant, so the answer is 3.

If you see a message like the one below, it’s because you need to put

an option after trimreads or else Snakemake gets confused

about what are parameters of -R, and what things are

targets.

ERROR

WorkflowError:

Target rules may not contain wildcards.- Editing the Snakefile has the same effect as forcing the trimreads rule to re-run, so again there will be three jobs to be run from the DAG.

With older versions of Snakemake this would not be auto-detected, and

in fact you can see this behaviour if you remove the hidden

.snakemake directory. Now Snakemake has no memory of the

rule change so it will not re-run any jobs unless explicitly told

to.

Removing files to trigger reprocessing

In general, getting Snakemake to re-run things by removing files is a

bad idea, because it’s easy to forget about intermediate files that

actually contain stale results and need to be updated. Using the

-R flag is simpler and more reliable. If in doubt, and if

it will not be too time consuming, keep it simple and just use

-F to run the whole workflow from scratch.

For the opposite case where you want to avoid re-running particular

steps, see the ‑‑touch option of Snakemake mentioned later in the course.

- A job in Snakemake is a rule plus wildcard values (determined by working back from the requested output)

- Snakemake plans its work by arranging all the jobs into a DAG (directed acyclic graph)

- If output files already exist, Snakemake can skip parts of the DAG

- Snakemake compares file timestamps and a log of previous runs to determine what need regenerating

Content from Processing lists of inputs

Last updated on 2025-02-25 | Edit this page

Estimated time: 80 minutes

Overview

Questions

- How do I define a default set of outputs for my Snakefile?

- How do I make rules which combine whole lists of files?

- How do I process all available input files at once?

Objectives

- Use Snakemake to process all our samples at once

- Make a summary of all read counts

For reference, this is the Snakefile you should have to start the episode. We didn’t modify it during the last episode.

Defining a list of samples to process

So far, we’ve told Snakemake what output files to generate by giving the names of the desired files on the command line. Often you want Snakemake to process all the available samples. How can we do this?

The yeast/reads directory contains results from three

conditions: ref, etoh60 and

temp33. There are three replicates for each condition.

There is minor inconsistency in the naming convention, as the

ref files do not have an underscore before the replicate

number. Consistent naming is important for Snakemake, so let’s fix up

the names before we go any further.

A good way to do this is by making symlinks, because that way you don’t lose sight of the original file names.

BASH

$ mv reads original_reads

$ mkdir reads

$ cd reads

$ ln -s ../original_reads/* .

$ rename -v -s ref ref_ *

$ cd ..File renaming

The rename command here is the one provided by Bioconda. Other Linux systems may have a different rename command installed by default.

Having harmonized the file names we’ll tell Snakemake about our conditions and replicates. To do this, we can define some lists as Snakemake global variables.

Global variables should be added before the rules in the Snakefile.

# Input conditions and replicates to process

CONDITIONS = ["ref", "etoh60", "temp33"]

REPLICATES = ["1", "2", "3"]- Unlike with variables in shell scripts, we can put spaces around the

=sign, but they are not mandatory. - The lists of quoted strings are enclosed in square brackets and comma-separated. If you know any Python you’ll recognise this as Python list syntax.

- A good convention is to use capitalized names for these variables, but this is not mandatory.

- Although these are referred to as variables, you can’t actually change the values once the workflow is running, so lists defined this way are more like constants.

Using a Snakemake rule to define a batch of outputs

We’ll add another rule to our Snakefile. This special target rule

will have an input section but no output or

shell sections (yet).

rule all_differences:

input: expand("{cond}_{rep}_1.reads_removed.txt", cond=CONDITIONS, rep=REPLICATES)The expand(...) function in this rule generates a list

of filenames, by taking the first thing in the parentheses as a template

and replacing cond with all the CONDITIONS

and {rep} with all the REPLICATES. Since there

are 3 of each, this will yield 9 combinations - ie. 9 files we want to

make.

This list goes into the input section of the rule. You might think that since these filenames are outputs that we want from our workflow they should go into the output section. However, remember that outputs of a rule are things the rule can make itself, and this rule doesn’t actually make anything. It’s just a placeholder for a bunch of filenames.

We now tell Snakemake to make all these files by using the target rule name on the command line:

Here, Snakemake sees that all_differences is the name of a

rule in the Snakefile, so rather than trying to make a file literally

named all_differences it looks at all the input files for

the rule and tries to make them. In this case, all of the inputs to

all_differences can be made by the

calculate_difference rule, and all of the inputs for those jobs

are made by trimreads and countreads. The resulting

workflow is the same as if we had typed out all 9 of the filenames on

the command line.

If you don’t specify a target rule name or any file names on the command line when running Snakemake, the default is to use the first rule in the Snakefile as the target. So if all_differences is defined at the top, before the other rules, you can simply say:

Rules as targets

Giving the name of a rule to Snakemake on the command line only works when that rule has no wildcards in the outputs, because Snakemake has no way to know what the desired wildcards might be. You will see the error “Target rules may not contain wildcards.” This can also happen when you don’t supply any explicit targets on the command line at all, and Snakemake tries to run the first rule defined in the Snakefile.

Counting all the reads

Check that the all_differences rule is working. Now adapt

the Snakefile so that it makes all the reads_removed.txt for

both of the pairs of reads (_1.fq and _2.fq).

So you should end up with 18 output files in total.

This will work.

# Input conditions and replicates to process

CONDITIONS = ["ref", "etoh60", "temp33"]

REPLICATES = ["1", "2", "3"]

READ_ENDS = ["1", "2"]

# Rule to make all reads_removed.txt files at once

rule all_differences:

input: expand("{cond}_{rep}_{end}.reads_removed.txt", cond=CONDITIONS, rep=REPLICATES, end=READ_ENDS)Alternatively you can put the list directly into the

expand() function rather than declaring more variables. To

aid readability of the code it’s also possible to split the function

over more than one line, but note that this only works if you put a

newline after the input: line too.

# Input conditions and replicates to process

CONDITIONS = ["ref", "etoh60", "temp33"]

REPLICATES = ["1", "2", "3"]

# Rule to make all reads_removed.txt files at once

rule all_differences:

input:

expand( "{cond}_{rep}_{end}.reads_removed.txt", cond = CONDITIONS,

rep = REPLICATES,

end = READ_ENDS )Rules that combine multiple inputs

Our all_differences rule is a rule which takes a list of

input files. The length of that list is not fixed by the rule, but can

change based on CONDITIONS and REPLICATES. If

we want to perform some combining operation on the whole list of files,

we can add output and shell sections to this

rule.

In typical bioinformatics workflows, the final steps will combine all the results together into some big report. For our final workflow we’ll be doing this with MultiQC, but as a simple first example, let’s just concatenate all the text files. In the shell this would be:

In the Snakemake rule we just say:

Within a rule definition you can combine named inputs and list inputs

- any named input can be list of files rather than just a single file.

When you use the {input.name} placeholder in the shell

command it will expand to the full list.

Combining the inputs of the all_differences rule

- Make it so that the all_differences rule concatenates all

the

.reads_removed.txtfiles into a single output file namedall_reads_removed.txt. - Adapt the rule further so that there are two outputs named

all_read1_removed.txtandall_read2_removed.txt, and the respective counts go into each.

Hint: you can put two commands into the shell part,

separated by a semicolon ;.

rule all_differences:

input:

expand( "{cond}_{rep}_{end}.reads_removed.txt", cond = CONDITIONS,

rep = REPLICATES,

end = READ_ENDS )

output:

"all_reads_removed.txt"

shell:

"cat {input} > {output}"And for the version with separate output files.

rule all_differences:

input:

read1 = expand( "{cond}_{rep}_1.reads_removed.txt", cond = CONDITIONS,

rep = REPLICATES ),

read2 = expand( "{cond}_{rep}_2.reads_removed.txt", cond = CONDITIONS,

rep = REPLICATES ),

output:

read1 = "all_read1_removed.txt",

read2 = "all_read2_removed.txt",

shell:

"cat {input.read1} > {output.read1} ; cat {input.read2} > {output.read2}"This works, but may more elegantly be broken down into two rules, so

that once again the target rule has only input and no

outputs. There is a quirk where we need to put extra braces

around {{end}} in the place where this is a wildcard, and

not to be expanded out.

rule all_differences:

input: expand("all_read{end}_removed.txt", end=["1","2"])

rule all_differences_per_end:

input:

expand( "{cond}_{rep}_{{end}}.reads_removed.txt", cond = CONDITIONS,

rep = REPLICATES )

output:

"all_read{end}_removed.txt"

shell:

"cat {input} > {output}"To run any version of the rule:

Dynamically determining the inputs

In the shell we can match multiple filenames with glob

patterns like original_reads/* or

reads/ref?_?.fq. Snakemake allows you to do something

similar with the glob_wildcards() function, so we’ll use

this in our Snakefile.

CONDITIONS = glob_wildcards("reads/{condition}_1_1.fq").condition

print("Conditions are: ", CONDITIONS)Here, the list of conditions is captured from the files seen in the

reads directory. The pattern used in glob_wildcards() looks

much like the input and output parts of rules, with a wildcard in {curly

brackets}, but here the pattern is being used to search for matching

files. We’re only looking for read 1 of replicate 1 so this will return

just three matches

Rather than getting the full file names, the function yields the

values of the wildcard, which we can assign directly to a list. The

print() statement will print out the value of

CONDITIONS when the Snakefile is run (including dry-run

mode, activated with the -n option as mentioned in Episode 2), and reassures us that the

list really is the same as before.

BASH

$ snakemake -j1 -F -n -p all_differences

Conditions are: ['etoh60', 'temp33', 'ref']

Building DAG of jobs...

Job stats:

job count

----------------------- -------

all_differences 1

all_differences_per_end 2

calculate_difference 18

countreads 36

trimreads 18

total 75

...Using glob_wildcards() gets a little more tricky when

you need a more complex match. To refine the match we can quickly test

out results by activating the Python interpreter. This saves editing the

Snakefile and running Snakemake just to see what

glob_wildcards() will match.

PYTHON

$ python3

>>> from snakemake.io import glob_wildcards, expand

>>> glob_wildcards("reads/{condition}_1_1.fq")

Wildcards(condition=['etoh60', 'temp33', 'ref'])This is the result we got before. So far, so good.

The Python interpreter

The Python interpreter is like a special shell for Python commands, and is a familiar to anyone who has learned Python. Because Snakemake functions are actually Python functions they can be run from the interpreter after being imported.

Note that >>> is the Python interpreter prompt,

and not part of the command to be typed. You can exit back to the

regular shell prompt by typing exit(). If you do exit and

then restart the interpreter, you will need to repeat the

import line.

‘Globbing’ the list of samples

Staying in the Python interpreter, use glob_wildcards()

to list the names of all nine samples, that is the three replicates of

each condition.

‘Globbing’ the list of samples (continued)

Still in the Python interpreter, use the expand()

function in combination with the glob_wildcards() to make a

list of all the count files, and then all the kallisto_quant

output files that would be generated for all the nine samples.

Remember you can save the result of glob_wildcards() to

a variable - this works the same way in the Python interpreter as it

does in the Snakefile.

Glob with multiple wildcards

If there are two or more wildcards in the glob pattern, dealing with the result becomes a little more tricky, but it does work.

Here is one way to re-combine two wildcards using

expand() and zip (demonstrated in the Python

interpreter as above).

PYTHON

$ python3

>>> from snakemake.io import glob_wildcards, expand

>>> DOUBLE_MATCH = glob_wildcards( "reads/{condition}_{samplenum}_1.fq" )

>>> DOUBLE_MATCH

Wildcards(condition=['temp33', 'temp33', 'etoh60', 'etoh60', 'ref', 'temp33', 'etoh60', 'ref', 'ref'],

samplenum=['3', '2', '1', '3', '2', '1', '2', '1', '3'])

>>> SAMPLES = expand(

... "{condition}_{samplenum}",

... zip,

... condition = DOUBLE_MATCH.condition,

... samplenum = DOUBLE_MATCH.samplenum )

>>> SAMPLES

['temp33_3', 'temp33_2', 'etoh60_1', 'etoh60_3', 'ref_2', 'temp33_1', 'etoh60_2', 'ref_1', 'ref_3']

>>> sorted(SAMPLES)

['etoh60_1', 'etoh60_2', 'etoh60_3', 'ref_1', 'ref_2', 'ref_3', 'temp33_1', 'temp33_2', 'temp33_3']Rules that make multiple outputs

If we can have rules that combine lists of files, can we do the opposite and have a rule that produces a list of outputs?

The answer is yes, but the situation is not completely symmetrical. Remember that Snakemake works out the full list of input and output files to every job before it runs a single job in the workflow. For a combining step, Snakemake will know how many samples/replicates are being combined from the start. For a splitting step, it may or may not be possible to predict the number of output files in advance. For cases where you really do need to handle a dynamic list of outputs, Snakemake has things called checkpoint rules.

In practise these are very rarely needed, so we’ll not be covering them here in the course.

For reference, this is a Snakefile incorporating the changes made in this episode.

- Rename your input files if necessary to maintain consistent naming

- List the things you want to proces as global variables, or discover

input files with

glob_wildcards() - Use the

expand()function to generate lists of filenames you want to combine - These functions can be tested in the Python interpreter

- Any

{input}to a rule can be a variable-length list - (But variable lists of outputs are trickier and rarely needed)

Content from Handling awkward programs

Last updated on 2024-10-07 | Edit this page

Estimated time: 80 minutes

Overview

Questions

- How do I handle tools which don’t let me specify output file names?

- How do I define a rule where the output is a directory?

Objectives

- Add the FastQC tool to the pipeline

- Understand some different choices available when defining a rule

- Learn about the

directory()function - Add multiple command lines to the

shellsection of a rule

For reference, this is the Snakefile you should have to start the episode.

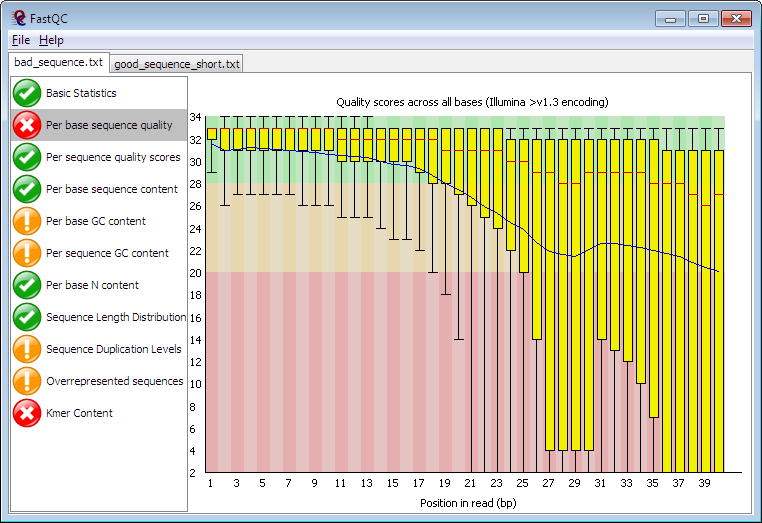

Introducing FastQC

FastQC is a popular tool for scanning FASTQ files and producing a selection of quality plots. It’s “fast” in that it runs quickly, and also in that you can normally use the default options so there is no configuration needed.

The program can be run interactively or in batch mode, where it saves out results as an HTML file plus a ZIP file. We’ll obviously need to use the batch mode to include it as part of our workflow, and we’ll see shortly that this presents a minor problem.

In general, real bioinformatics programs like FastQC can have quirks like:

- Not allowing you to specify input or output file names

- Outputting a whole directory of files

- Creating temporary files in various places

- Not supporting robust error handling

With a little care we can handle all these problems while still keeping our workflow tidy.

Adding a FastQC rule

We’ll first run FastQC on a single input file and see what is produced.

BASH

$ fastqc reads/ref_1_1.fq

$ ls -ltr reads

...

-rw-r--r-- 1 zenmaster users 464852 Jun 9 14:31 ref_1_1_fastqc.zip

-rw-r--r-- 1 zenmaster users 654810 Jun 9 14:31 ref_1_1_fastqc.htmlIt’s possible to supply multiple input files, but the resulting

output is exactly the same as if processing the files one at a time. Two

files are produced for each FASTQ file, and these files appear in the

same directory as the input file. The fastqc command does

not let us choose the output filenames, but we can set an alternative

output directory.

BASH

$ fastqc --help

...

-o --outdir Create all output files in the specified output directory.

Please note that this directory must exist as the program

will not create it. If this option is not set then the

output file for each sequence file is created in the same

directory as the sequence file which was processed.

...For the countreads rule we wrote earlier (see episode 4), we chose our preferred

output file name pattern so as to allow us to effectively link rules.

This gives us a rule that can count both trimmed and

untrimmed reads.

# Our existing countreads rule, for reference...

rule countreads:

output: "{indir}.{myfile}.fq.count"

input: "{indir}/{myfile}.fq"

shell:

"echo $(( $(wc -l <{input}) / 4 )) > {output}"To do the same with FastQC, to report on both the trimmed and untrimmed reads, we have various options:

- Work with the default file names produced by FastQC and leave the reports in the same directory with the FASTQ files.

- Make the outputs in a new directory named, eg. “reads.fastqc.ref_1_1/” (similar to what we did with Kallisto).

- Do this, but tell Snakemake that the directory itself is the output.

- Force our preferred naming convention by renaming the FastQC output files within the rule.

We’ll try all four, and see where this gets us.

Option 1: Working with the default FastQC output files

Adding a FastQC rule using the default output file names

Fill in the ??? to make a working rule for FastQC where

indir may be “reads” or “trimmed”. Do not change the shell

command or input pattern at all. Remember FastQC always makes two output

files, so add two named outputs.

rule fastqc:

output:

???

input: "{indir}/{myfile}.fq"

shell:

"fastqc {input}"Since the shell command is not to be changed, the output

names will be dictated by FastQC as we saw when running the command

directly in the terminal.

rule fastqc:

output:

html = "{indir}/{myfile}_fastqc.html",

zip = "{indir}/{myfile}_fastqc.zip",

input: "{indir}/{myfile}.fq"

shell:

"fastqc {input}"This rule contains wildcards, so in order to run it you specify one or more target output files:

This rule is fine, but maybe we don’t want to put the reports in with the sequences. As a general principle, when writing Snakemake rules, we want to be in charge of output file names. FastQC lets us specify the output directory, so we can use that…

Option 2: Using the default output filenames in a new directory

A FastQC rule where the output files go into a new directory

Modify the rule so that the output files go into a new directory.

This will be very similar to the rule for kallisto quant

added in episode 3.

For example, when running on the file “trimmed/ref_1_1.fq” the outputs should be

OUTPUT

trimmed.fastqc.ref_1_1/ref_1_1_fastqc.html

trimmed.fastqc.ref_1_1/ref_1_1_fastqc.zipThis involves using the {myfile} wildcard twice and then constructing

the output directory name to place in the -o option to

fastqc.

rule fastqc:

output:

html = "{indir}.fastqc.{myfile}/{myfile}_fastqc.html",

zip = "{indir}.fastqc.{myfile}/{myfile}_fastqc.zip",

input: "{indir}/{myfile}.fq"

shell:

"fastqc -o {wildcards.indir}.fastqc.{wildcards.myfile} {input}"Option 3: Using a directory() output

Our next option is to tell Snakemake not to worry about the individual files at all, and just say that the output of the rule is a whole directory. This makes the rule definition simpler.

We’ll amend the fastqc rule like so:

rule fastqc:

output: directory("{indir}.fastqc.{myfile}")

input: "{indir}/{myfile}.fq"

shell:

"fastqc -o {output} {input}"Only use directory() on outputs,

not inputs

You only use the directory() declaration for outputs.

Any input to a rule may be a directory without the need for any special

syntax.

On running this, we get an error.

BASH

$ snakemake -j1 -p reads.fastqc.ref_1_1

...

Specified output directory 'reads.fastqc.ref_1_1' does not exist

...This error is being printed by FastQC. FastQC requires that the

output directory must exist. (Other programs might insist that the

output directory does not already exist.) The error can be

rectified by making the directory explicitly in the shell

code.

rule fastqc:

output: directory("{indir}.fastqc.{myfile}")

input: "{indir}/{myfile}.fq"

shell:

"mkdir {output} ; fastqc -o {output} {input}"The rationale for making output directories

Remember that in most cases it is not necessary to manually create

directories because Snakemake will auto-create the directory for every

output file listed by a rule. When using a directory()

output, Snakemake will not create the directory itself but most

applications will make the directory for you. FastQC is an exception.

The best approach is to only add a mkdir command if you

first test the rule without it and see an error.

The modified rule works because the shell part of the

rule can contain a whole script, with multiple commands to be run. Above

we used a semicolon to split the commands on one line. For putting

multiple lines into a shell section there is a special

quoting syntax.

rule fastqc:

output: directory("{indir}.fastqc.{myfile}")

input: "{indir}/{myfile}.fq"

shell:

"""mkdir {output}

fastqc -o {output} {input}

"""The “triple quoting” syntax comes from Python. Not only does it allow

multiple lines to be added within the quotes but it also allows you to

embed both single and double quotes into the shell commands. For a

further discussion of string quoting and a way to disable the

interpretation of “backslash escapes” like \n and

\t see the extra

episode on quoting

This rule is also fine, but because the individual files are not explicitly named as outputs we may have problems chaining later rules. Also consider that some applications won’t give you any control at all over the output filenames. The most powerful solution is to use shell commands to move and/or rename the files to exactly the names you want.

Option 4: Insisting on our own file names

Fixing FastQC to use our own output file names

Complete the rule below so that the output filenames are correctly

produced. You will need to add extra commands to the shell

part after running fastqc. Do not alter the

output or input parts of the rule.

rule fastqc:

output:

html = "{indir}.{myfile}_fastqc.html",

zip = "{indir}.{myfile}_fastqc.zip"

input: "{indir}/{myfile}.fq"

shell:

"""???

"""This is one solution, using -o . to tell FastQC to

produce the files in the current directory, then explicitly renaming

them to match the declared rule outputs. Remember that, after Snakemake

runs all the commands in the shell block, it checks to see

that all the expected output files are present, but within

the block you can run any commands and make any files you like.

rule fastqc:

output:

html = "{indir}.{myfile}_fastqc.html",

zip = "{indir}.{myfile}_fastqc.zip"

input: "{indir}/{myfile}.fq"

shell:

"""fastqc -o . {input}

mv {wildcards.myfile}_fastqc.html {output.html}

mv {wildcards.myfile}_fastqc.zip {output.zip}

"""One problem with making intermediate outputs

There is actually a problem with the above solution which only starts

to matter when we allow Snakemake to run multiple jobs in parallel.

Right now we are always using -j1, but if we used, eg.

-j2, then potentially Snakemake may try to make

“reads.ref_1_1_fastqc.html” and “trimmed.ref_1_1_fastqc.html” in

parallel, and both instances would be trying to write to the same

temporary files at the same time. Snakemake has an elegant solution to

this, in the form of shadow rules, but we’re getting ahead

of ourselves. For now we’re running one job at a time, and this will

work.

Altering the Kallisto rule to have a directory()

output

We saw above that the output of a rule can be a directory and saw the

directory() function which declares this. If you remember

the rule for kallisto quant you may be thinking that this

could have been written with the whole directory as the output, and you

would be right.

# Existing rule for kallisto_quant

rule kallisto_quant:

output:

h5 = "kallisto.{sample}/abundance.h5",

tsv = "kallisto.{sample}/abundance.tsv",

json = "kallisto.{sample}/run_info.json",

...

# Alternative rule with directory() output

rule kallisto_quant:

output: directory("kallisto.{sample}")

...Make the change in your Snakefile now. In other workflows this might not be the right approach but in this case it works fine and makes the Snakefile neater. It will also make sense in the next chapter where we add the remaining rules and finish the workflow.

For reference, this is a Snakefile incorporating the changes made in this episode.

- Different bioinformatics tools will have different quirks

- If programs limit your options for choosing input and output filenames, you have several ways to deal with this

- Use triple-quote syntax to make longer shell scripts with multiple commands

Content from Finishing the basic workflow

Last updated on 2025-02-25 | Edit this page

Estimated time: 80 minutes

Overview

Questions

- What does the full sample workflow look like?

- How can we report some initial results from this analysis?

Objectives

- Finish the sample workflow to produce a final MultiQC report

- See more ways to handle awkward software by adding extra setup shell commands

For reference, this is the Snakefile you should have to start the episode.

We’ve seen how to link rules in a pipeline and how to merge all the

results at the final step. This is the basic pattern for many analysis

workflows. For simplicity, in episode 6, we just used the

cat command to combine all the .txt files but

now we’ll use MultiQC to combine the results from Kallisto and

FastQC into a single report for all samples. We’ll also add in an

alternative quantification tool called Salmon and this will

complete the pipeline.

The full workflow we are constructing

- fastq_quality_trimmer is part of the FastX toolkit and removes low-quality basecalls from the raw reads. We first used it in episode 2.

- FastQC calculates a variety of metrics on a FASTQ file and produces an HTML report and a ZIP file. We introduced this in episode 7.

- Kallisto performs pseudo-alignment of the reads to a reference transcriptome and produces a table of transcript abundance. We first used it in episode 4.

- Salmon is a alternative to Kallisto, using a different transcript quantification algorithm. We’ve not used it yet.

- MultiQC combines the reports from various tools, including FastQC, Kallisto, and Salmon, into a single HTML report over all samples. This is by no means a full RNA-Seq analysis report but today it completes our Snakemake pipeline.

At this point we have everything we need, in terms of Snakemake knowledge, to add the two remaining tools and complete the Snakefile. As with FastQC, some quirks in the way the new tools work will need to be accounted for.

Adding Salmon as an alternative to Kallisto

At the end of the previous episode we modified the kallisto rule by declaring that the output of the rule was a directory, rather than explicitly listing the three files that Kallisto writes in that directory. You should ensure that you are working with this new version of the kallisto rule as it will be a template for adding a salmon rule.

Adding Salmon as an alternative to Kallisto

An alternative application for transcript quantification is Salmon. The procedure is virtually identical, to Kallisto, having an indexing step and a quantification step. Note that in real usage you are advised to prepare and add decoy sequences to the transcriptome index, but for the purposes of this tutorial we’ll just keep things as simple as possible.

Based upon the following command templates:

BASH

$ salmon index -t <transcriptome as fasta> -i <index name> -k 31

$ salmon quant -i <index name> -l A -1 <fastq1> -2 <fastq2> --validateMappings -o <output path>Add a pair of rules to index and quantify the reads with Salmon. Note that:

- Unlike Kallisto, the index produced by Salmon is a directory of files, not a single file - so both of these new rules will produce a directory as output.

- As noted in the last episode, you only need the

directory()marker on the outputs of rules, not the inputs.

rule salmon_quant:

output: directory("salmon.{sample}")

input:

index = "Saccharomyces_cerevisiae.R64-1-1.salmon_index",

fq1 = "trimmed/{sample}_1.fq",

fq2 = "trimmed/{sample}_2.fq",

shell:

"salmon quant -i {input.index} -l A -1 {input.fq1} -2 {input.fq2} --validateMappings -o {output}"

rule salmon_index:

output:

idx = directory("{strain}.salmon_index")

input:

fasta = "transcriptome/{strain}.cdna.all.fa.gz"

shell:

"salmon index -t {input.fasta} -i {output.idx} -k 31"If you copied the kallisto_index rule and logged the output of salmon_index to a file this is fine. Just make sure when copying and pasting that you change all the parts that need to change!

Combining the outputs with MultiQC