Content from Introduction to Deep Learning

Last updated on 2024-05-30

Overview

Questions

- What is machine learning and what is it used for?

- What is deep learning?

- How do I use a neural network for image classification?

Objectives

- Explain the difference between artificial intelligence, machine learning and deep learning.

- Understand the different types of computer vision tasks.

- Perform an image classification using a convolutional neural network (CNN).

Deep Learning, Machine Learning and Artificial Intelligence

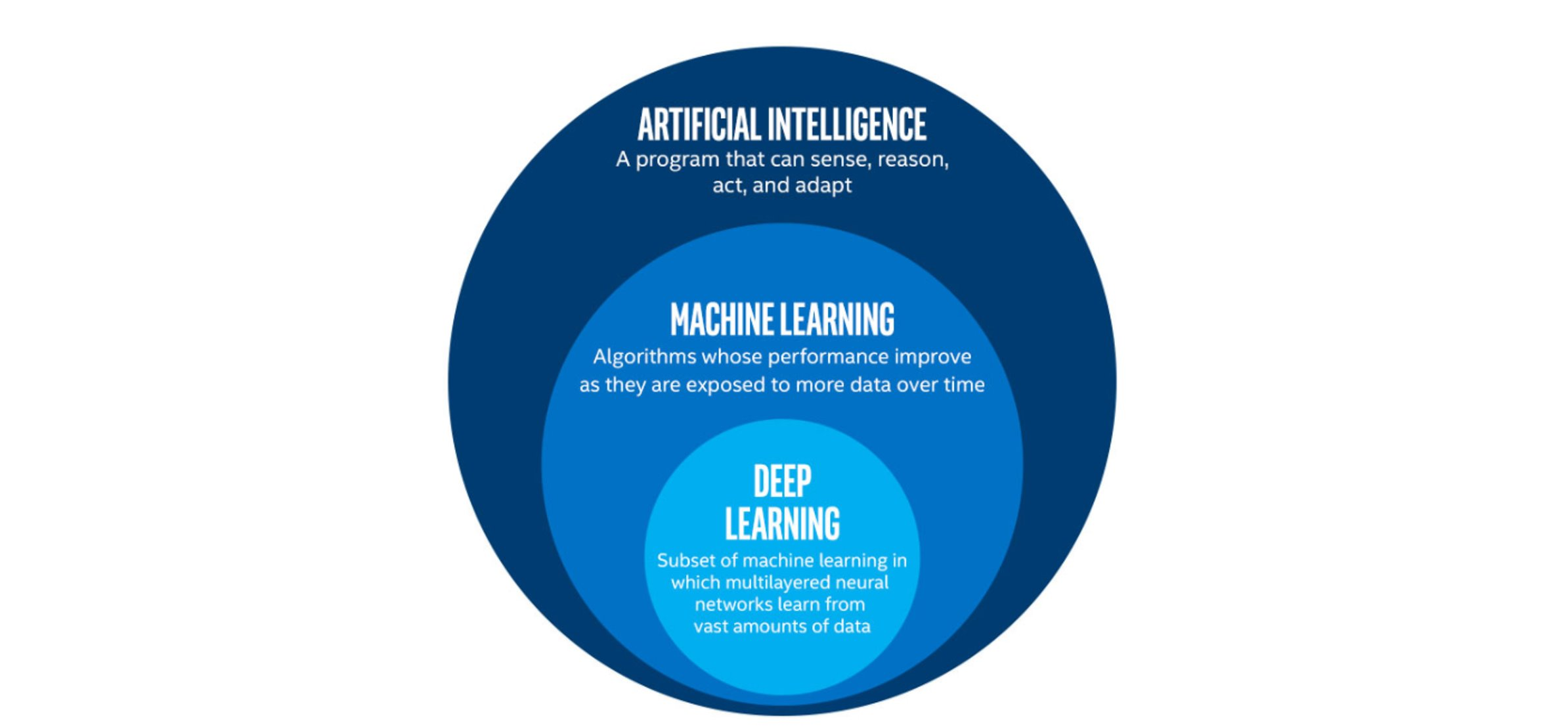

Artificial Intelligence (AI) is the broad field that involves creating machines capable of performing tasks that typically require human intelligence. This includes everything from recognizing speech and images to making decisions and translating languages. Within AI, Machine Learning (ML) is a subset focused on the development of algorithms that allow computers to learn and improve from experience without being explicitly programmed.

Deep Learning (DL), a further subset of ML, utilizes neural networks with many layers (hence “deep”) to model complex patterns in large amounts of data. This technique has led to significant advances in various fields, such as image and speech recognition.

Since the 1950s, the idea of AI has captured the imagination of many, often depicted in science fiction as machines with human-like or even superior intelligence. While recent advancements in AI and ML have been remarkable, we are currently capable of achieving human-like intelligence only in specific areas. The goal of creating a general-purpose AI, one that can perform any intellectual task a human can, remains a long-term challenge.

The image below illustrates some differences between artificial intelligence, machine learning and deep learning.

What is machine learning?

Machine learning is a set of tools and techniques which let us find patterns in data. The techniques break down into two broad categories, predictors and classifiers. Predictors are used to predict a value (or set of values) given a set of inputs whereas classifiers try to classify data into different categories, or assign a label.

Many, but not all, machine learning systems “learn” by taking a series of input data and output data and using it to form a model. The maths behind the machine learning doesn’t care what the data is as long as it can be represented numerically or categorised. Some examples might include:

- Predicting a person’s weight based on their height.

- Predicting house prices given stock market prices.

- Classifying an email as spam or not.

- Classifying an image as, e.g., a person, place, or particular object.

Typically we train our models with hundreds, thousands or even millions of examples before they work well enough to do any useful predictions or classifications with them.

This lesson will introduce you to only one of these techniques, Deep Learning with a Convolutional Neural Network (CNN), but there are many more.

A CNN is a DL algorithm that has become a cornerstone in image classification due to its ability to automatically learn features from images in a hierarchical fashion (i.e. each layer builds upon what was learned by the previous layer). It can achieve remarkable performance on a wide range of tasks.

What is image classification?

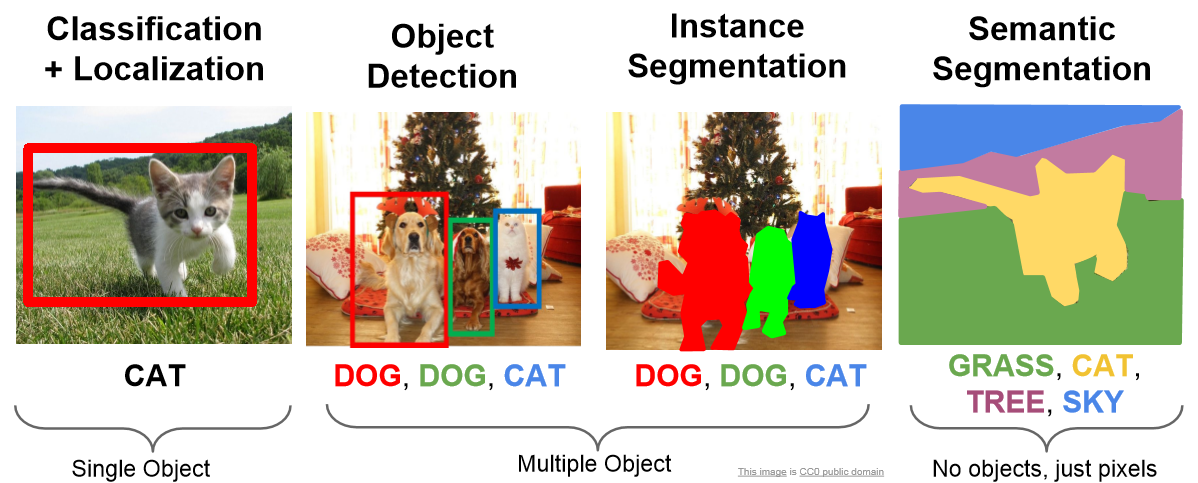

Image classification is a fundamental task in computer vision, which is a field of artificial intelligence focused on teaching computers to interpret and understand visual information from the world. Image classification specifically involves the process of assigning a label or category to an input image. The goal is to enable computers to recognise and categorise objects, scenes, or patterns within images, just as a human would. Image classification can refer to one of several computer vision tasks:

Image classification has numerous practical applications, including:

- Object Recognition: Identifying objects within images, such as cars, animals, or household items.

- Medical Imaging: Diagnosing diseases from medical images like X-rays or MRIs.

- Quality Control: Inspecting products for defects on manufacturing lines.

- Autonomous Vehicles: Identifying pedestrians, traffic signs, and other vehicles in self-driving cars.

- Security and Surveillance: Detecting anomalies or unauthorised objects in security footage.

Deep Learning Workflow

To apply Deep Learning to a problem there are several steps to go through:

Step 1. Formulate / Outline the problem

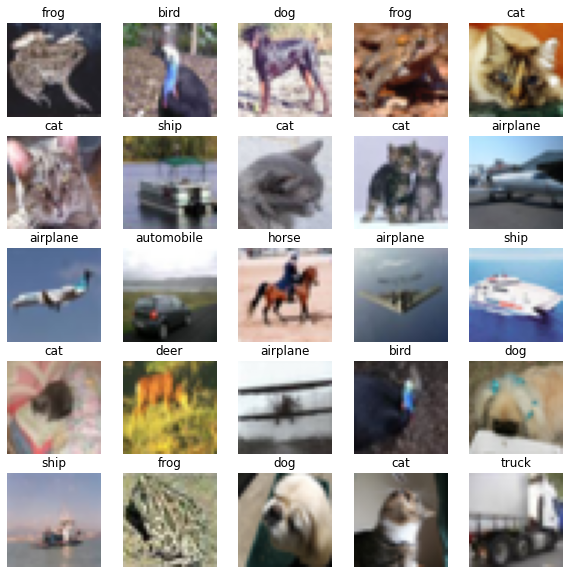

Firstly we must decide what it is we want our Deep Learning system to do. This lesson is all about image classification so our aim is to put an image into one of a few categories. Specifically in our case, we have 10 categories: airplane, automobile, bird, cat, deer, dog, frog, horse, ship, truck

Step 2. Identify inputs and outputs

Next identify what the inputs and outputs of the neural network will be. In our case, the data is images and the inputs could be the individual pixels of the images. We are performing a classification problem and we will have one output for each potential class.
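To make the input and output counts concrete, here is a minimal sketch. It assumes the 32x32 pixel, three-channel image size that CIFAR-10 uses (reported later in this episode) and uses a blank stand-in array rather than a real image.

```python
import numpy as np

# a blank stand-in for one CIFAR-10 image: 32x32 pixels, 3 colour channels
image = np.zeros((32, 32, 3), dtype=np.uint8)

# every pixel value in every channel is one potential input
n_inputs = image.size

# one output per class, using the ten classes listed in Step 1
class_names = ['airplane', 'automobile', 'bird', 'cat', 'deer',
               'dog', 'frog', 'horse', 'ship', 'truck']
n_outputs = len(class_names)

print('Number of input values:', n_inputs)
print('Number of outputs:', n_outputs)
```

Each image therefore contributes 3072 numbers as input, and the network returns ten values, one per class.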

Step 3. Prepare data

Many datasets are not ready for immediate use in a neural network and will require some preparation. Neural networks can only really deal with numerical data, so any non-numerical data (e.g., images) will have to be somehow converted to numerical data. Information on how this is done and the data structure will be explored in Episode 02 Introduction to Image Data.

For this lesson, we will use an existing image dataset known as CIFAR-10 (Canadian Institute for Advanced Research). We will introduce the different data preparation tasks in more detail in the next episode but for this introduction, we will prepare the dataset with these steps:

- normalise the image pixel values to be between 0 and 1

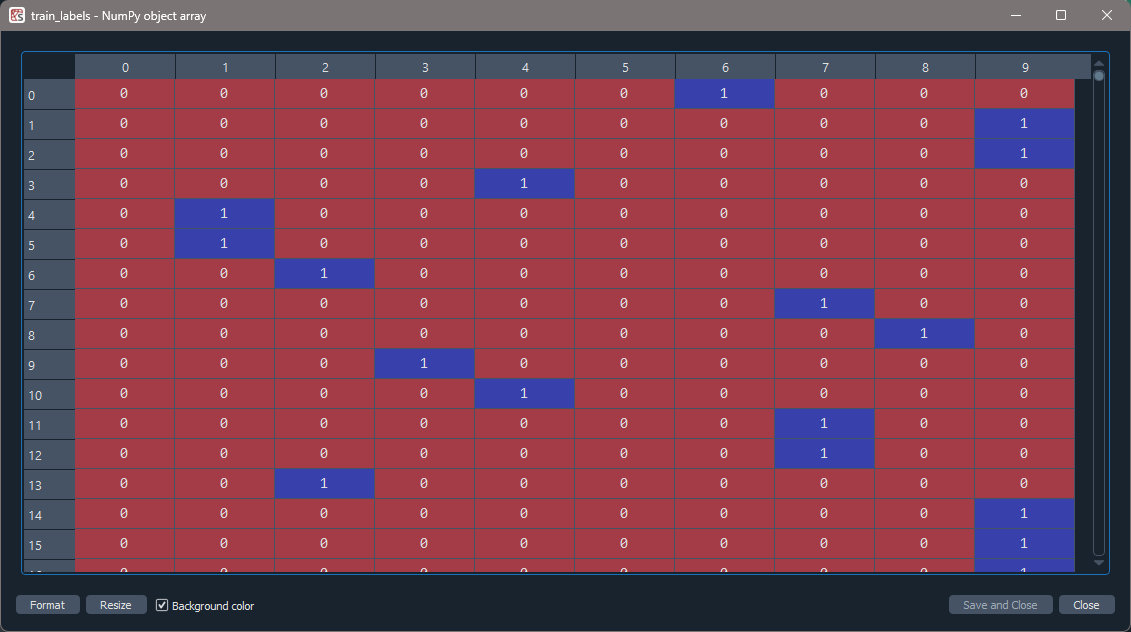

- one-hot encode the training image labels

- divide the data into training, validation, and test subsets

Preparing the code

It is the goal of this training workshop to produce a Deep Learning program, using a Convolutional Neural Network. At the end of this workshop, we hope this code can be used as a “starting point”. We will create an “initial program” for this introduction chapter that will be used as a foundation for the rest of the episodes.

Here’s one we prepared earlier!

To follow along in Spyder, set the working directory to the ‘…/intro-image-classification-cnn/scripts’ folder where ‘…’ is your project folder.

PYTHON

# load the required packages
from tensorflow import keras # for neural networks
from sklearn.model_selection import train_test_split # for splitting data into sets
import matplotlib.pyplot as plt # for plotting

# create a function to prepare the training dataset
def prepare_dataset(train_images, train_labels):

    # normalize the RGB values to be between 0 and 1
    train_images = train_images / 255.0

    # one hot encode the training labels
    # (class_names is defined below, before this function is called)
    train_labels = keras.utils.to_categorical(train_labels, len(class_names))

    # split the training data into training and validation set
    train_images, val_images, train_labels, val_labels = train_test_split(
        train_images, train_labels, test_size = 0.2, random_state=42)

    return train_images, val_images, train_labels, val_labels

# load the data
(train_images, train_labels), (test_images, test_labels) = keras.datasets.cifar10.load_data()

# create a list of class names associated with each CIFAR-10 label
class_names = ['airplane', 'automobile', 'bird', 'cat', 'deer', 'dog', 'frog', 'horse', 'ship', 'truck']

### Step 3. Prepare data

# prepare the dataset for training
train_images, val_images, train_labels, val_labels = prepare_dataset(train_images, train_labels)

CHALLENGE Examine the CIFAR-10 dataset

OUTPUT

Train: Images=(40000, 32, 32, 3), Labels=(40000, 10)

Validate: Images=(10000, 32, 32, 3), Labels=(10000, 10)

Test: Images=(10000, 32, 32, 3), Labels=(10000, 1)

The training set consists of 40000 images of 32x32 pixels and three channels (RGB values) and one-hot encoded labels.

The validation set consists of 10000 images of 32x32 pixels and three channels (RGB values) and one-hot encoded labels.

The test set consists of 10000 images of 32x32 pixels and three channels (RGB values) and labels.

Visualise a subset of the CIFAR-10 dataset

PYTHON

# set up plot region, including width, height in inches
fig, axes = plt.subplots(nrows=5, ncols=5, figsize=(10,10))

# add images to plot
for i,ax in enumerate(axes.flat):
    ax.imshow(train_images[i])
    ax.axis('off')
    ax.set_title(class_names[train_labels[i,].argmax()])

# view plot
plt.show()

Step 4. Choose a pre-trained model or build a new architecture from scratch

Often we can use an existing neural network instead of designing one from scratch. Training a network can take a lot of time and computational resources. There are a number of well publicised networks which have been demonstrated to perform well at certain tasks. If you know of one which already does a similar task well, then it makes sense to use one of these.

If instead we decide to design our own network, then we need to think about how many input neurons it will have, how many hidden layers and how many outputs, and what types of layers to use. This will require some experimentation and tweaking of the network design a few times before achieving acceptable results.
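Layer choices determine how the data shrinks on its way through a network. The sketch below traces the height and width of a 32x32 image through two rounds of a 3x3 convolution followed by 2x2 max pooling. It assumes no padding, a stride of 1 for the convolutions, non-overlapping pooling, and 32 filters in the last convolution; the helper function names are hypothetical and only for illustration.

```python
def conv_output_size(size, kernel_size):
    # a convolution without padding and with stride 1 trims
    # (kernel_size - 1) pixels from each dimension
    return size - kernel_size + 1

def pool_output_size(size, pool_size):
    # non-overlapping pooling divides each dimension,
    # discarding any leftover row or column
    return size // pool_size

size = 32                              # input height and width
size = conv_output_size(size, 3)       # first convolution
size = pool_output_size(size, 2)       # first pooling
size = conv_output_size(size, 3)       # second convolution
size = pool_output_size(size, 2)       # second pooling

n_filters = 32                         # assumed filters in the last convolution
flattened = size * size * n_filters    # values left after flattening

print('Final feature map size:', size, 'x', size)
print('Values after flattening:', flattened)
```

Tracing sizes by hand like this is a quick way to check that a design does not shrink the image to nothing before the final layers.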

Here we present an initial model to be explained in detail later on:

Define the Model

PYTHON

def create_model_intro():

    # CNN Part 1
    # Input layer of 32x32 images with three channels (RGB)
    inputs_intro = keras.Input(shape=train_images.shape[1:])

    # CNN Part 2
    # Convolutional layer with 16 filters, 3x3 kernel size, and ReLU activation
    x_intro = keras.layers.Conv2D(filters=16, kernel_size=(3,3), activation='relu')(inputs_intro)
    # Pooling layer with input window sized 2x2
    x_intro = keras.layers.MaxPooling2D(pool_size=(2,2))(x_intro)
    # Second Convolutional layer with 32 filters, 3x3 kernel size, and ReLU activation
    x_intro = keras.layers.Conv2D(filters=32, kernel_size=(3,3), activation='relu')(x_intro)
    # Second Pooling layer with input window sized 2x2
    x_intro = keras.layers.MaxPooling2D(pool_size=(2,2))(x_intro)
    # Flatten layer to convert 2D feature maps into a 1D vector
    x_intro = keras.layers.Flatten()(x_intro)
    # Dense layer with 64 neurons and ReLU activation
    x_intro = keras.layers.Dense(units=64, activation='relu')(x_intro)

    # CNN Part 3
    # Output layer with 10 units (one for each class) and softmax activation
    outputs_intro = keras.layers.Dense(units=10, activation='softmax')(x_intro)

    # create the model
    model_intro = keras.Model(inputs = inputs_intro,
                              outputs = outputs_intro,
                              name = "cifar_model_intro")

    return model_intro

# create the introduction model
model_intro = create_model_intro()

# view model summary
model_intro.summary()

Step 5. Choose a loss function and optimizer and compile model

To set up a model for training we need to compile it. This is when you set up the rules and strategies for how your network is going to learn.

The loss function tells the training algorithm how far away the predicted value was from the true value. We will learn how to choose a loss function in more detail in Episode 4 Compile and Train (Fit) a Convolutional Neural Network.
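As an illustration of what “how far away” means, the sketch below computes categorical cross-entropy by hand with NumPy. This is an assumption for illustration: it is one common loss for one-hot encoded labels, and the prediction values are made up. The function name is hypothetical.

```python
import numpy as np

def categorical_cross_entropy(y_true, y_pred):
    # clip predictions so that log(0) can never occur
    y_pred = np.clip(y_pred, 1e-7, 1.0)
    # only the probability given to the true class contributes to the sum
    return -np.sum(y_true * np.log(y_pred))

# one-hot encoded true label: the third of three classes
y_true = np.array([0.0, 0.0, 1.0])

# three made-up predictions, from good to bad
confident_right = np.array([0.05, 0.05, 0.90])
unsure = np.array([0.30, 0.30, 0.40])
confident_wrong = np.array([0.90, 0.05, 0.05])

loss_right = categorical_cross_entropy(y_true, confident_right)
loss_unsure = categorical_cross_entropy(y_true, unsure)
loss_wrong = categorical_cross_entropy(y_true, confident_wrong)

print('Confident and right:', loss_right)
print('Unsure:', loss_unsure)
print('Confident and wrong:', loss_wrong)
```

The loss is smallest when the model puts most of its probability on the true class and grows as that probability shrinks.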

The optimizer is responsible for taking the output of the loss function and then applying some changes to the weights within the network. It is through this process that “learning” (adjustment of the weights) is achieved.
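Keras requires a model to be compiled before the training call in Step 6 will run. The following is a minimal sketch of that call, assuming the Adam optimizer, a categorical cross-entropy loss, and accuracy as the reported metric; these choices are assumptions rather than settings confirmed by this episode. The small model here is a hypothetical stand-in so the sketch runs on its own; in the lesson script you would call `compile` on `model_intro` instead.

```python
from tensorflow import keras

# hypothetical stand-in model so that this sketch is self-contained
inputs = keras.Input(shape=(32, 32, 3))
x = keras.layers.Flatten()(inputs)
outputs = keras.layers.Dense(units=10, activation='softmax')(x)
model_sketch = keras.Model(inputs=inputs, outputs=outputs, name="compile_sketch")

# compile the model: the optimizer, loss and metric below are assumed choices
model_sketch.compile(optimizer=keras.optimizers.Adam(),
                     loss=keras.losses.CategoricalCrossentropy(),
                     metrics=['accuracy'])
```

Categorical cross-entropy pairs naturally with one-hot encoded labels and a softmax output layer, which is why it is assumed here.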

Step 6. Train the model

We can now go ahead and start training our neural network. We will probably keep doing this for a given number of iterations through our training dataset (referred to as epochs) or until the loss function gives a value under a certain threshold.

PYTHON

# fit the model
history_intro = model_intro.fit(x = train_images, y = train_labels,
                                batch_size = 32,
                                epochs = 10,
                                validation_data = (val_images, val_labels))

Your output will begin to print similar to the output below:

OUTPUT

Epoch 1/10

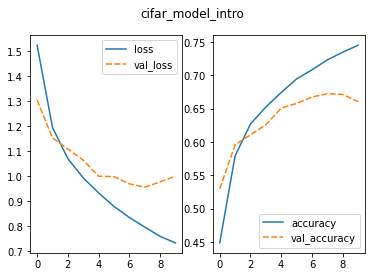

1250/1250 [==============================] - 15s 12ms/step - loss: 1.4651 - accuracy: 0.4738 - val_loss: 1.2736 - val_accuracy: 0.5507

What does this output mean?

This output printed during the fit phase, i.e. training the model against known image labels, can be broken down as follows:

- `Epoch` describes the number of full passes over all training data.

  - In the output above, there are 1250 batches (steps) to complete each epoch.

  - This number is calculated as the total number of images used as input divided by the batch size (40000/32). After 1250 batches, all training images will have been seen once and the model moves on to the next epoch.

- `loss` is a value the model will attempt to minimise and is a measure of the dissimilarity or error between the true label of an image and the model prediction. Minimising this distance is where learning occurs to adjust weights and bias which reduce `loss`.

  - `val_loss` is a value calculated against the validation data and is a measure of the model’s performance against unseen data.

  - Both values are a summation of errors made during each epoch.

- `accuracy` and `val_accuracy` values are a percentage (printed as a fraction between 0 and 1) and are only relevant to classification problems.

  - The `val_accuracy` score can be used to communicate a model’s effectiveness on unseen data.
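The number of steps per epoch can be checked with a few lines of arithmetic. This sketch uses the 40000 training images and batch size of 32 from above, and rounds up because a final, partly filled batch would still count as a step.

```python
import math

n_train_images = 40000   # training images after the validation split
batch_size = 32          # images processed per step
epochs = 10              # full passes over the training data

# round up so that a partly filled last batch still counts as a step
steps_per_epoch = math.ceil(n_train_images / batch_size)
total_steps = steps_per_epoch * epochs

print('Steps per epoch:', steps_per_epoch)
print('Total steps over all epochs:', total_steps)
```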

Step 7. Perform a Prediction/Classification

After training the network we can use it to perform predictions. This is how you would use the network after you have fully trained it to a satisfactory performance. The predictions performed here on a special hold-out set are used in the next step to measure the performance of the network. Make sure the images you use to test are prepared the same way as the training images.

PYTHON

# normalize test dataset RGB values to be between 0 and 1

test_images = test_images / 255.0

# make prediction for the first test image

result_intro = model_intro.predict(test_images[0].reshape(1,32,32,3))

print(result_intro)

# extract class with highest probability

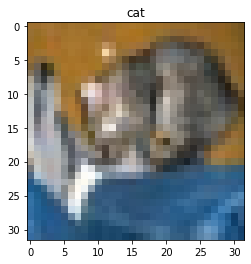

print(class_names[result_intro.argmax()])OUTPUT

1/1 [==============================] - 0s 93ms/step

[[0.00896197 0.00345764 0.20091638 0.3295959 0.3042777 0.03966621 0.06654432 0.00352677 0.03928733 0.00376582]]

catCongratulations, you just created your first image classification model and used it to classify an image!
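The printed array holds one probability per class, in the same order as `class_names`. The sketch below reuses the probabilities from the output above to show how the highest value maps to a class name, and also lists the three most likely classes to show how close the runners-up were. The variable names are chosen for illustration.

```python
import numpy as np

class_names = ['airplane', 'automobile', 'bird', 'cat', 'deer',
               'dog', 'frog', 'horse', 'ship', 'truck']

# probabilities copied from the prediction output above
result = np.array([[0.00896197, 0.00345764, 0.20091638, 0.3295959, 0.3042777,
                    0.03966621, 0.06654432, 0.00352677, 0.03928733, 0.00376582]])

# index of the largest probability, then the matching class name
predicted_index = int(result.argmax())
predicted_class = class_names[predicted_index]

# indices of the three largest probabilities, most likely first
top3_indices = result[0].argsort()[::-1][:3]
top3_names = [class_names[i] for i in top3_indices]

print('Predicted class:', predicted_class)
print('Top three classes:', top3_names)
print('Sum of probabilities:', result.sum())
```

The winning class received only about a third of the total probability, with deer and bird close behind, so this is not a confident prediction.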

Was the classification correct?

PYTHON

# plot the first test image with its true label

# create a plot

plt.figure()

# display image

plt.imshow(test_images[0])

# use the true label stored in test_labels, not the model's prediction
plt.title('True class: ' + class_names[test_labels[0, 0]])

# view plot

plt.show()

My result is different! Why? What can I do about it?

This is actually not surprising. The architecture we are using is shallow and the model only trained for 10 epochs.

Training a model for more epochs (longer time) and using a deeper model (more layers) usually helps it learn better and give more accurate predictions. When a model has learned well and its performance doesn’t change much with more training, we say it has ‘converged.’ Convergence refers to the point where the model has reached an optimal or near-optimal state in terms of learning from the training data. If you are finding significant differences in the model predictions, this could be a sign the model is not fully converged.

You may even find you get a different answer if you run this model again. Although the neural network itself is deterministic (i.e. without randomness), various factors in the training process (such as random initialisation of the weights and shuffling of the training data), system setup, and hardware configurations can lead to small variations in the output. These variations are usually minor and should not significantly impact the overall performance of the model, if it has fully converged.

There are many ways to try to improve the accuracy of our model, such as adding or removing layers to the model definition and fine-tuning the hyperparameters, which takes us to the next steps in our workflow.

Step 8. Measure Performance

Once we have trained the network we want to measure its performance. To do this, we use additional data that was not part of the training, called a test dataset. There are many different methods available for measuring performance and which one is best depends on the type of task we are attempting. These metrics are often published as an indication of how well our network performs.
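Accuracy is one of the simplest of these metrics: the fraction of images whose predicted class matches the true class. The sketch below computes it with NumPy on a handful of made-up predictions. All values are hypothetical, and the true labels are stored as a column of integers to mirror the shape of `test_labels`.

```python
import numpy as np

# made-up predicted probabilities for four images and three classes
predicted_probs = np.array([[0.7, 0.2, 0.1],
                            [0.1, 0.8, 0.1],
                            [0.3, 0.4, 0.3],
                            [0.2, 0.2, 0.6]])

# made-up true labels as a column of integers, shape (4, 1)
true_labels = np.array([[0], [1], [2], [2]])

# the predicted class is the one with the highest probability in each row
predicted_labels = predicted_probs.argmax(axis=1)

# flatten the label column so that both arrays have shape (4,)
accuracy = np.mean(predicted_labels == true_labels.flatten())

print('Predicted labels:', predicted_labels)
print('Accuracy:', accuracy)
```

Three of the four made-up predictions match their labels, so the accuracy is 0.75.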

Step 9. Tune Hyperparameters

When building image recognition models in Python, especially using libraries like TensorFlow or Keras, the process involves not only designing a neural network but also choosing the best values for various hyperparameters that govern the training process.

Hyperparameters are all the parameters set by the person configuring the model as opposed to those learned by the algorithm itself. These hyperparameters can include the learning rate, the number of layers in the network, the number of neurons per layer, and many more. Hyperparameter tuning refers to the process of systematically searching for the best combination of hyperparameters that will optimise the model’s performance. This concept will be continued, with practical examples, in Episode 05 Evaluate a Convolutional Neural Network and Make Predictions (Classifications).

Step 10. Share Model

Once we have a trained network that performs at a level we are happy with we can use it to predict on real-world data. At this point we might want to consider publishing a file with both the architecture of our network and the weights which it has learned (assuming we did not use a pre-trained network). This will allow others to use it as a pre-trained network for their own purposes and for them to (mostly) reproduce our result.

To share the model we must save it first:
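A minimal sketch of saving and reloading a Keras model follows. It assumes a recent TensorFlow/Keras version that supports the `.keras` file format. The small model and the temporary output folder are hypothetical stand-ins so the sketch runs on its own; in the lesson script you would save `model_intro` to a path inside your project folder.

```python
import os
import tempfile
from tensorflow import keras

# hypothetical stand-in model so that this sketch is self-contained
inputs = keras.Input(shape=(32, 32, 3))
x = keras.layers.Flatten()(inputs)
outputs = keras.layers.Dense(units=10, activation='softmax')(x)
model_sketch = keras.Model(inputs=inputs, outputs=outputs, name="save_sketch")

# hypothetical output location: a temporary folder created for this sketch
save_path = os.path.join(tempfile.mkdtemp(), 'model_sketch.keras')

# save the architecture and the weights together in one file
model_sketch.save(save_path)

# reload the saved model, as someone you shared it with would do
reloaded_model = keras.models.load_model(save_path)
reloaded_model.summary()
```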

We will return to each of these workflow steps throughout this lesson and discuss each component in more detail.

- Machine learning is the process where computers learn to recognise patterns of data.

- Deep learning is a subset of machine learning, which is a subset of artificial intelligence.

- Convolutional neural networks are well suited for image classification.

- To use Deep Learning effectively we follow a workflow of: defining the problem, identifying inputs and outputs, preparing data, choosing the type of network, training the model, tuning hyperparameters, and measuring performance before we can classify data.

Content from Introduction to Image Data

Last updated on 2024-05-30

Overview

Questions

- How much data do you need for Deep Learning?

- How do I prepare image data for use in a convolutional neural network (CNN)?

- How do I work with image data in Python?

- Where can I find image data to train my model?

Objectives

- Understand the properties of image data.

- Write code to prepare an image dataset to train a CNN.

- Know the difference between training, testing, and validation datasets.

- Identify sources of image data.

Deep Learning Workflow

Let’s start over from the beginning of our workflow.

Step 1. Formulate/ Outline the problem

Firstly we must decide what it is we want our Deep Learning system to do. This lesson is all about image classification and our aim is to put an image into one of ten categories: airplane, automobile, bird, cat, deer, dog, frog, horse, ship, or truck

Step 2. Identify inputs and outputs

Next we identify the inputs and outputs of the neural network. In our case, the data is images and the inputs could be the individual pixels of the images.

We are performing a classification problem and we want to output one category for each image.

Step 3. Prepare data

Deep Learning requires extensive training data which tells the network what output it should produce for a given input. In this workshop, our network will be trained on a series of images and told what they contain. Once the network is trained, it should be able to take another image and correctly classify its contents.

How much data do you need for Deep Learning?

Unfortunately, this question is not easy to answer. It depends, among other things, on the complexity of the task (which you often do not know beforehand), the quality of the available dataset and the complexity of the network. For complex tasks with large neural networks, adding more data often improves performance. However, this is also not a generic truth: if the data you add is too similar to the data you already have, it will not give much new information to the neural network.

In case you have too little data available to train a complex network from scratch, it is sometimes possible to use a pretrained network that was trained on a similar problem. Another trick is data augmentation, where you expand the dataset with artificial data points that could be real. An example of this is mirroring images when trying to classify cats and dogs. A horizontally mirrored animal retains the label, but exposes a different view.
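Horizontal mirroring is simple to express once an image is stored as an array. The sketch below uses a tiny stand-in array (not a real photo) with dimensions ordered as height, width, channels, and reverses the width axis.

```python
import numpy as np

# a tiny stand-in "image": 2 rows (height), 3 columns (width), 1 channel
image = np.arange(6).reshape(2, 3, 1)

# mirror horizontally by reversing the order of the columns
mirrored = image[:, ::-1, :]

# the original and its mirror image form a small augmented dataset;
# both would keep the same label
augmented = np.stack([image, mirrored])

print('Original rows:', image[:, :, 0].tolist())
print('Mirrored rows:', mirrored[:, :, 0].tolist())
print('Augmented dataset shape:', augmented.shape)
```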

Depending on your situation, you will prepare your own custom data for training or use pre-existing data.

Custom image data

In some cases, you will create your own set of labelled images.

The steps to prepare your own custom image data include:

Custom data i. Data collection and Labeling:

For image classification the label applies to the entire image; object detection requires bounding boxes around objects of interest, and instance or semantic segmentation requires each pixel to be labelled.

A number of open-source tools can be used to label your dataset, including:

- (Visual Geometry Group) VGG Image Annotator (VIA)

- ImageJ can be extended with plugins for annotation

- COCO Annotator is designed specifically for creating annotations compatible with Common Objects in Context (COCO) format

Custom data ii. Data preprocessing:

This step involves various tasks to enhance the quality and consistency of the data:

Resizing: Resize images to a consistent resolution to ensure uniformity and reduce computational load.

Augmentation: Apply random transformations (e.g., rotations, flips, shifts) to create new variations of the same image. This helps improve the model’s robustness and generalisation by exposing it to more diverse data.

Normalisation: Scale pixel values to a common range, often between 0 and 1 or -1 and 1. Normalisation helps the model converge faster during training.

Label encoding: Represent categorical data with numerical labels.

Data Splitting: Split the data set into separate parts to have one for training, one for evaluating the model’s performance during training, and one reserved for the final evaluation of the model’s performance.

Before jumping into these specific preprocessing tasks, it’s important to understand that images on a computer are stored as numerical representations or simplified versions of the real world. Therefore it’s essential to take some time to understand these numerical abstractions.

Pixels

Images on a computer are stored as rectangular arrays of hundreds, thousands, or millions of discrete “picture elements,” otherwise known as pixels. Each pixel can be thought of as a single square point of coloured light.

For example, consider this image of a Jabiru, with a square area designated by a red box:

Now, if we zoomed in close enough to the red box, the individual pixels would stand out:

Note each square in the enlarged image area (i.e. each pixel) is all one colour, but each pixel can be a different colour from its neighbours. Viewed from a distance, these pixels seem to blend together to form the image.

Working with Pixels

In Python, an image can be represented as a 2- or 3-dimensional array. An array is used to store multiple values or elements of the same datatype in a single variable. In the context of images, arrays have dimensions for height, width, and colour channels (if applicable) and each element corresponds to a pixel value in the image. Let us start with the Jabiru image.
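Before loading a real photo, it can help to see the idea on an image small enough to type out. This sketch builds a made-up 2x2 RGB image as a NumPy array and shows how its dimensions and pixel values are accessed.

```python
import numpy as np

# a made-up 2x2 RGB image: each pixel is a [red, green, blue] triple
tiny_image = np.array([[[255, 0, 0], [0, 255, 0]],
                       [[0, 0, 255], [255, 255, 255]]], dtype=np.uint8)

# dimensions are ordered as height, width, colour channels
print('Image shape:', tiny_image.shape)

# the pixel in the first row, second column (a pure green pixel)
top_right = tiny_image[0, 1]
print('Top right pixel:', top_right)

# a single colour channel is a 2-dimensional array
red_channel = tiny_image[:, :, 0]
print('Red channel shape:', red_channel.shape)
```

A greyscale image needs only the two dimensions for height and width, which is why images can be either 2- or 3-dimensional arrays.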

PYTHON

# load the required package
from tensorflow import keras # for image loading and neural networks

# specify the image path
new_img_path = "../data/Jabiru_TGS.JPG"

# read in the image with default arguments
new_img_pil = keras.utils.load_img(path=new_img_path)

# check the image class and size
print('Image class :', new_img_pil.__class__)
print('Image size:', new_img_pil.size)

OUTPUT

Image class : <class 'PIL.JpegImagePlugin.JpegImageFile'>

Image size: (552, 573)

Image Dimensions - Resizing

The new image has shape (573, 552), i.e. 573 pixels high and 552 pixels wide (PIL reports its size as width, height), meaning it is much larger than the 32x32 CIFAR-10 images and is a rectangle instead of a square.
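The order of the two numbers is easy to mix up: PIL reports an image size as (width, height), whereas a NumPy array of the same image has shape (height, width, channels). The sketch below shows this on a blank stand-in image with the same dimensions as the Jabiru photo, so it does not need the image file.

```python
import numpy as np
from PIL import Image

# a blank stand-in image; PIL expects the size as (width, height)
blank_img = Image.new('RGB', (552, 573))

# convert the PIL image to a NumPy array
blank_arr = np.asarray(blank_img)

print('PIL size (width, height):', blank_img.size)
print('Array shape (height, width, channels):', blank_arr.shape)
```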

Recall from the introduction that the CIFAR-10 training data consists of 50000 images of 32x32 pixels (which we split into 40000 training and 10000 validation images).

To reduce the computational load and ensure all of our images have a uniform size, we need to choose an image resolution (or size in pixels) and ensure all of the images we use are resized to be consistent.

There are a couple of ways to do this in Python but one way is to specify the size you want using the target_size argument to the keras.utils.load_img() function.

PYTHON

# read in the image and specify the target size
new_img_pil_small = keras.utils.load_img(path=new_img_path, target_size=(32,32))

# confirm the image class and size
print('Resized image class :', new_img_pil_small.__class__)
print('Resized image size', new_img_pil_small.size)

OUTPUT

Resized image class : <class 'PIL.Image.Image'>

Resized image size (32, 32)

Of course, if there are a large number of images to preprocess you do not want to copy and paste these steps for each image! Fortunately, Keras has a solution: keras.utils.image_dataset_from_directory()

Two of the most commonly used libraries for image representation and manipulation are NumPy and Pillow (PIL). Additionally, when working with deep learning frameworks like TensorFlow and PyTorch, images are often represented as tensors within these frameworks.

- NumPy is a powerful library for numerical computing in Python. It provides support for creating and manipulating arrays, which can be used to represent images as multidimensional arrays.

  - `import numpy as np`

- The Pillow library provides functions to open, manipulate, and save various image file formats. It represents images using its own Image class.

  - `from PIL import Image`

  - PIL Image Module documentation

- TensorFlow images are often represented as tensors that have dimensions for batch size, height, width, and colour channels. This framework provides tools to load, preprocess, and work with image data seamlessly.

  - `from tensorflow import keras`

  - image preprocessing documentation

  - Note Keras image functions also use PIL

Image augmentation

There are several ways to augment your data to increase the diversity of the training data and improve model robustness.

- Geometric Transformations

- rotation, scaling, zooming, cropping

- Flipping or Mirroring

- some classes, like horse, have a different shape when facing left or right and you want your model to recognize both

- Colour properties

- brightness, contrast, or hue

- these changes simulate variations in lighting conditions

We will not perform image augmentation in this lesson, but it is important that you are aware of this type of data preparation because it can make a big difference in your model’s ability to predict outside of your training data.

Information about these operations is included in the Keras documentation for Image augmentation layers.

Normalisation

Image RGB values are between 0 and 255. As input for neural networks, it is better to have small input values. The process of converting the RGB values to be between 0 and 1 is called normalisation.

Before we can normalise our image values we must convert the image to a NumPy array.

We introduced how to do this in Episode 01 Introduction to Deep Learning but what you may not have noticed is that the keras.datasets.cifar10.load_data() function did the conversion for us whereas now we will do it ourselves.

PYTHON

# first convert the image into an array for normalisation
new_img_arr = keras.utils.img_to_array(new_img_pil_small)

# confirm the image class and shape
print('Converted image class :', new_img_arr.__class__)
print('Converted image shape', new_img_arr.shape)

OUTPUT

Converted image class : <class 'numpy.ndarray'>

Converted image shape (32, 32, 3)

Now that the image is an array, we can normalise the values. Let us also investigate the image values before and after we normalise them.

PYTHON

# inspect pixel values before and after normalisation

# extract the min, max, and mean pixel values BEFORE

print('BEFORE normalisation')

print('Min pixel value ', new_img_arr.min())

print('Max pixel value ', new_img_arr.max())

print('Mean pixel value ', new_img_arr.mean().round())

# normalise the RGB values to be between 0 and 1

new_img_arr_norm = new_img_arr / 255.0

# extract the min, max, and mean pixel values AFTER

print('AFTER normalisation')

print('Min pixel value ', new_img_arr_norm.min())

print('Max pixel value ', new_img_arr_norm.max())

print('Mean pixel value ', new_img_arr_norm.mean().round())

OUTPUT

BEFORE normalisation

Min pixel value 0.0

Max pixel value 255.0

Mean pixel value 87.0

AFTER normalisation

Min pixel value 0.0

Max pixel value 1.0

Mean pixel value 0.0

ChatGPT

Normalizing the RGB values to be between 0 and 1 is a common pre-processing step in machine learning tasks, especially when dealing with image data. This normalisation has several benefits:

Numerical Stability: By scaling the RGB values to a range between 0 and 1, you avoid potential numerical instability issues that can arise when working with large values. Neural networks and many other machine learning algorithms are sensitive to the scale of input features, and normalizing helps to keep the values within a manageable range.

Faster Convergence: Normalizing the RGB values often helps in faster convergence during the training process. Neural networks and other optimisation algorithms rely on gradient descent techniques, and having inputs in a consistent range aids in smoother and faster convergence.

Equal Weightage for All Channels: In RGB images, each channel (Red, Green, Blue) represents different colour intensities. By normalizing to the range [0, 1], you ensure that each channel is treated with equal weightage during training. This is important because some machine learning algorithms could assign more importance to larger values.

Generalisation: Normalisation helps the model to generalize better to unseen data. When the input features are in the same range, the learned weights and biases can be more effectively applied to new examples, making the model more robust.

Compatibility: Many image-related libraries, algorithms, and models expect pixel values to be in the range of [0, 1]. By normalizing the RGB values, you ensure compatibility and seamless integration with these tools.

The normalisation process is typically done by dividing each RGB value (ranging from 0 to 255) by 255, which scales the values to the range [0, 1].

For example, if you have an RGB image with pixel values (100, 150, 200), after normalisation, the pixel values would become (100/255, 150/255, 200/255) ≈ (0.39, 0.59, 0.78).

Remember that normalisation is not always mandatory, and there could be cases where other scaling techniques might be more suitable based on the specific problem and data distribution. However, for most image-related tasks in machine learning, normalizing RGB values to [0, 1] is a good starting point.

One-hot encoding

A neural network can only take numerical inputs and outputs, and learns by calculating how “far away” the class predicted by the neural network is from the true class. When the target (label) is categorical data, or strings, it is very difficult to determine this “distance” or error. Therefore we will transform this column into a more suitable format. There are many ways to do this, however we will be using one-hot encoding.

One-hot encoding is a technique to represent categorical data as binary vectors, making it compatible with machine learning algorithms. Each category becomes a separate column, and the presence or absence of a category is indicated by 1s and 0s in the respective columns.

Let’s say you have a dataset with a “colour” column containing three categories: yellow, orange, purple.

Table 1. Original Data.

| colour | |

|---|---|

| yellow | 🟨 |

| orange | 🟧 |

| purple | 🟪 |

| yellow | 🟨 |

Table 2. After One-Hot Encoding.

| colour_yellow | colour_orange | colour_purple |

|---|---|---|

| 1 | 0 | 0 |

| 0 | 1 | 0 |

| 0 | 0 | 1 |

| 1 | 0 | 0 |

The Keras function for one-hot encoding is called to_categorical:

keras.utils.to_categorical(y, num_classes=None, dtype="float32")

- y is an array of class values to be converted into a matrix (integers from 0 to num_classes - 1).

- num_classes is the total number of classes. If None, this would be inferred as max(y) + 1.

- dtype is the data type expected by the input. Default: ‘float32’
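To make the parameters concrete, here is a NumPy-only sketch of the same idea. The helper `one_hot` and the integer codes for the colours are assumptions made for illustration; this is not the Keras implementation, only a function with a similar interface.

```python
import numpy as np

def one_hot(y, num_classes=None, dtype="float32"):
    """Illustrative one-hot encoder for integer class labels."""
    y = np.asarray(y, dtype="int64").ravel()      # flatten e.g. shape (n, 1) to (n,)
    if num_classes is None:
        num_classes = int(y.max()) + 1            # inferred as max(y) + 1
    return np.eye(num_classes, dtype=dtype)[y]    # pick one identity-matrix row per label

# Hypothetical integer codes for Table 1: yellow=0, orange=1, purple=2
colours = [0, 1, 2, 0]
encoded = one_hot(colours)
print(encoded)
```

Each row of `encoded` corresponds to one row of Table 2, with a single 1 in the column of its class.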

Data Splitting

The typical practice in machine learning is to split your data into two subsets: a training set and a test set. This initial split separates the data you will use to train your model (Step 6) from the data you will use to evaluate its performance (Step 8).

After this initial split, you can choose to further split the training set into a training set and a validation set. This is often done when you are fine-tuning hyperparameters, selecting the best model from a set of candidate models, or preventing overfitting.

To split a dataset into training and test sets there is a very convenient function from sklearn called train_test_split:

sklearn.model_selection.train_test_split(*arrays, test_size=None, train_size=None, random_state=None, shuffle=True, stratify=None)

- The first two parameters are the dataset (X) and the corresponding targets (y) (i.e. class labels).

- test_size is the fraction of the dataset used for testing.

- random_state controls the shuffling of the dataset; setting this value will reproduce the same results (assuming you give the same integer) every time it is called.

- shuffle controls whether the order of the rows of the dataset is shuffled before splitting and can be either True or False.

- stratify is a more advanced parameter that controls how the split is done.
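To illustrate what a shuffled split does, here is a NumPy-only sketch. The helper `holdout_split` is hypothetical and is not sklearn's implementation, so it will not reproduce the exact rows that train_test_split selects for the same random_state. It only mirrors the order of the returned values.

```python
import numpy as np

def holdout_split(X, y, test_size=0.2, random_state=None):
    """Shuffle the row indices, then cut them into train and test parts."""
    rng = np.random.default_rng(random_state)
    indices = rng.permutation(len(X))
    n_test = int(round(len(X) * test_size))
    test_idx, train_idx = indices[:n_test], indices[n_test:]
    return X[train_idx], X[test_idx], y[train_idx], y[test_idx]

# Toy dataset: 10 samples with 2 features each and a binary label
X = np.arange(20).reshape(10, 2)
y = np.arange(10) % 2

X_train, X_test, y_train, y_test = holdout_split(X, y, test_size=0.2, random_state=42)
print(X_train.shape, X_test.shape)
```

Because the same shuffled indices are applied to both X and y, every sample keeps its own label after the split.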

Pre-existing image data

In other cases you will be able to download an image dataset that is already labelled and can be used to classify a number of different objects, like the CIFAR-10 dataset. Other examples include:

- MNIST database - 60,000 training images of handwritten digits (0-9)

- ImageNet - 14 million hand-annotated images indicating objects from more than 20,000 categories. ImageNet sponsors an annual software contest where programs compete to achieve the highest accuracy. When choosing a pretrained network, the winners of these sorts of competitions are generally a good place to start.

- MS COCO - >200,000 labelled images used for object detection, instance segmentation, keypoint analysis, and captioning

Where labelled data exists, in most cases the data provider or other users will have created data-specific functions you can use to load the data. We already did this in the introduction:

PYTHON

from tensorflow import keras

# load the CIFAR-10 dataset included with the keras library

(train_images, train_labels), (test_images, test_labels) = keras.datasets.cifar10.load_data()

# create a list of classnames associated with each CIFAR-10 label

class_names = ['airplane', 'automobile', 'bird', 'cat', 'deer', 'dog', 'frog', 'horse', 'ship', 'truck']

In this instance the data is likely already prepared for use in a CNN. However, it is always a good idea to first read any associated documentation to find out what steps the data providers took to prepare the images, and second to take a closer look at the images once loaded and query their attributes.

In our case, we still want to prepare the dataset with these steps:

- normalise the image pixel values to be between 0 and 1

- one-hot encode the training image labels

- divide the training data into training and validation sets

We performed these operations in Step 3. Prepare data of the Introduction but let us create the function to prepare the dataset again knowing what we know now.

CHALLENGE Create a function to prepare the dataset

Hint 1: Your function should accept the training images and labels as arguments

Hint 2: Use a 20% split for validation and a random state of 42

PYTHON

from sklearn.model_selection import train_test_split

def prepare_dataset(train_images, train_labels):

    # normalise the RGB values to be between 0 and 1
    train_images = train_images / 255

    # one hot encode the training labels
    train_labels = keras.utils.to_categorical(train_labels, len(class_names))

    # split the training data into training and validation set
    train_images, val_images, train_labels, val_labels = train_test_split(
        train_images, train_labels, test_size = 0.2, random_state=42)

    return train_images, val_images, train_labels, val_labels

Inspect the labels before and after data preparation to visualise one-hot encoding.

PYTHON

print()

print('train_labels before one hot encoding')

print(train_labels)

# prepare the dataset for training

train_images, val_images, train_labels, val_labels = prepare_dataset(train_images, train_labels)

print()

print('train_labels after one hot encoding')

print(train_labels)

OUTPUT

train_labels before one hot encoding

[[6]

[9]

[9]

...

[9]

[1]

[1]]

train_labels after one hot encoding

[[0. 0. 0. ... 0. 0. 0.]

[0. 0. 0. ... 0. 0. 1.]

[0. 0. 0. ... 0. 0. 1.]

...

[0. 0. 0. ... 0. 0. 1.]

[0. 1. 0. ... 0. 0. 0.]

[0. 1. 0. ... 0. 0. 0.]]

WAIT I thought there were TEN classes!? Where is the rest of the data?

The Spyder IDE uses the ‘…’ notation when it “hides” some of the data for display purposes.

To view the entire array, go to the Variable Explorer in the upper right-hand corner of your Spyder IDE and double click on the ‘train_labels’ object. This will open a new window that shows all of the columns.

CHALLENGE Training and Validation

Inspect the training and validation sets we created.

How many samples does each set have and are the classes well balanced?

Hint: Use np.sum() on the ‘*_labels’ to find out if the classes are well balanced.

A. Training Set

PYTHON

print('Number of training set images:', train_images.shape[0])

print('Number of images in each class:\n', train_labels.sum(axis=0))

OUTPUT

Number of training set images: 40000

Number of images in each class:

[4027. 4021. 3970. 3977. 4067. 3985. 4004. 4006. 3983. 3960.]

B. Validation Set (we can use the same code as the training set)

PYTHON

print('Number of validation set images:', val_images.shape[0])

print('Number of images in each class:\n', val_labels.sum(axis=0))

OUTPUT

Number of validation set images: 10000

Number of images in each class:

[ 973. 979. 1030. 1023. 933. 1015. 996. 994. 1017. 1040.]

ChatGPT

Data is typically split into the training, validation, and test data sets using a process called data splitting or data partitioning. There are various methods to perform this split, and the choice of technique depends on the specific problem, dataset size, and the nature of the data. Here are some common approaches:

Hold-Out Method:

In the hold-out method, the dataset is divided into two parts initially: a training set and a test set.

The training set is used to train the model, and the test set is kept completely separate to evaluate the model’s final performance.

This method is straightforward and widely used when the dataset is sufficiently large.

Train-Validation-Test Split:

The dataset is split into three parts: the training set, the validation set, and the test set.

The training set is used to train the model, the validation set is used to tune hyperparameters and prevent overfitting during training, and the test set is used to assess the final model performance.

This method is commonly used when fine-tuning model hyperparameters is necessary.

K-Fold Cross-Validation:

In k-fold cross-validation, the dataset is divided into k subsets (folds) of roughly equal size.

The model is trained and evaluated k times, each time using a different fold as the test set while the remaining k-1 folds are used as the training set.

The final performance metric is calculated as the average of the k evaluation results, providing a more robust estimate of model performance.

This method is particularly useful when the dataset size is limited, and it helps in better utilizing available data.

Stratified Sampling:

Stratified sampling is used when the dataset is imbalanced, meaning some classes or categories are underrepresented.

The data is split in such a way that each subset (training, validation, or test) maintains the same class distribution as the original dataset.

This ensures all classes are well-represented in each subset, which is important to avoid biased model evaluation.

It’s important to note that the exact split ratios (e.g., 80-10-10 or 70-15-15) may vary depending on the problem, dataset size, and specific requirements. Additionally, data splitting should be performed randomly to avoid introducing any biases into the model training and evaluation process.
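As an illustration of the k-fold idea described above, the following NumPy sketch generates the index sets for each fold. The generator `k_fold_indices` and its `seed` parameter are hypothetical names chosen for this example; libraries such as sklearn provide their own, more complete utilities.

```python
import numpy as np

def k_fold_indices(n_samples, k, seed=0):
    """Yield (train_idx, test_idx) pairs, using each fold once as the test set."""
    rng = np.random.default_rng(seed)
    folds = np.array_split(rng.permutation(n_samples), k)
    for i in range(k):
        test_idx = folds[i]
        train_idx = np.concatenate([folds[j] for j in range(k) if j != i])
        yield train_idx, test_idx

splits = list(k_fold_indices(n_samples=10, k=5))
for train_idx, test_idx in splits:
    print(len(train_idx), len(test_idx))
```

Every sample appears in exactly one test fold, which is why averaging the k results uses all of the available data for evaluation.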

Data preprocessing completed!

We now have a function we can use throughout the lesson to preprocess our data which means we are ready to learn how to build a CNN like we used in the introduction.

- Image datasets can be found online or created uniquely for your research question.

- Images consist of pixels arranged in a particular order.

- Image data is usually preprocessed before use in a CNN for efficiency, consistency, and robustness.

- Input data generally consists of three sets: a training set used to fit model parameters; a validation set used to evaluate the model fit on training data; a test set used to evaluate the final model performance.

Content from Build a Convolutional Neural Network

Last updated on 2024-05-30

Overview

Questions

- What is an (artificial) neural network (ANN)?

- How is a convolutional neural network (CNN) different from an ANN?

- What are the types of layers used to build a CNN?

Objectives

- Understand how a convolutional neural network (CNN) differs from an artificial neural network (ANN).

- Explain the terms: kernel, filter.

- Know the different layers: convolutional, pooling, flatten, dense.

Neural Networks

A neural network is an artificial intelligence technique loosely based on the way neurons in the brain work.

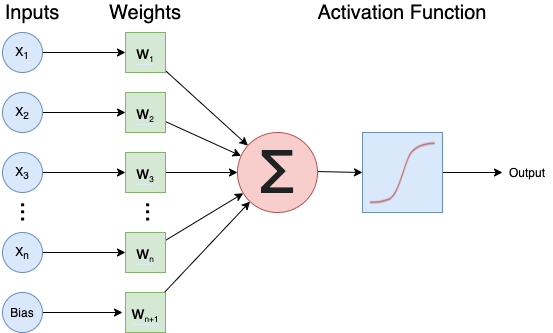

A single neuron

Each neuron will:

- Take one or more inputs (\(x_1, x_2, ...\)), e.g., floating point numbers, each with a corresponding weight.

- Calculate the weighted sum of the inputs where (\(w_1, w_2, ...\)) indicate weights.

- Add an extra constant weight (i.e. a bias term) to this weighted sum.

- Apply a non-linear function to the bias-adjusted weighted sum.

- Return one output value, again a floating point number.

One example equation to calculate the output for a neuron is:

\(\text{output} = \text{ReLU}\left(\sum_{i} (x_i \cdot w_i) + \text{bias}\right)\)
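The equation can be written out in a few lines of plain Python. This is only a sketch of a single neuron with made-up inputs, weights and bias; Keras performs the same calculation for whole layers at once.

```python
def relu(z):
    """Rectified Linear Unit: negative values become zero."""
    return max(0.0, z)

def neuron_output(inputs, weights, bias):
    """Weighted sum of the inputs, plus the bias, passed through ReLU."""
    weighted_sum = sum(x * w for x, w in zip(inputs, weights))
    return relu(weighted_sum + bias)

# Hypothetical values chosen for illustration
print(neuron_output([1.0, 2.0], [0.5, -0.25], bias=0.1))   # positive sum passes through
print(neuron_output([1.0, 2.0], [-1.0, -1.0], bias=0.5))   # negative sum is clipped to 0.0
```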

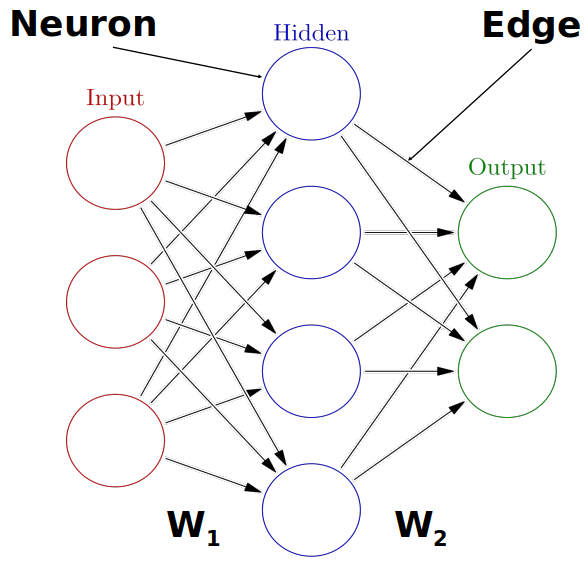

Combining multiple neurons into a network

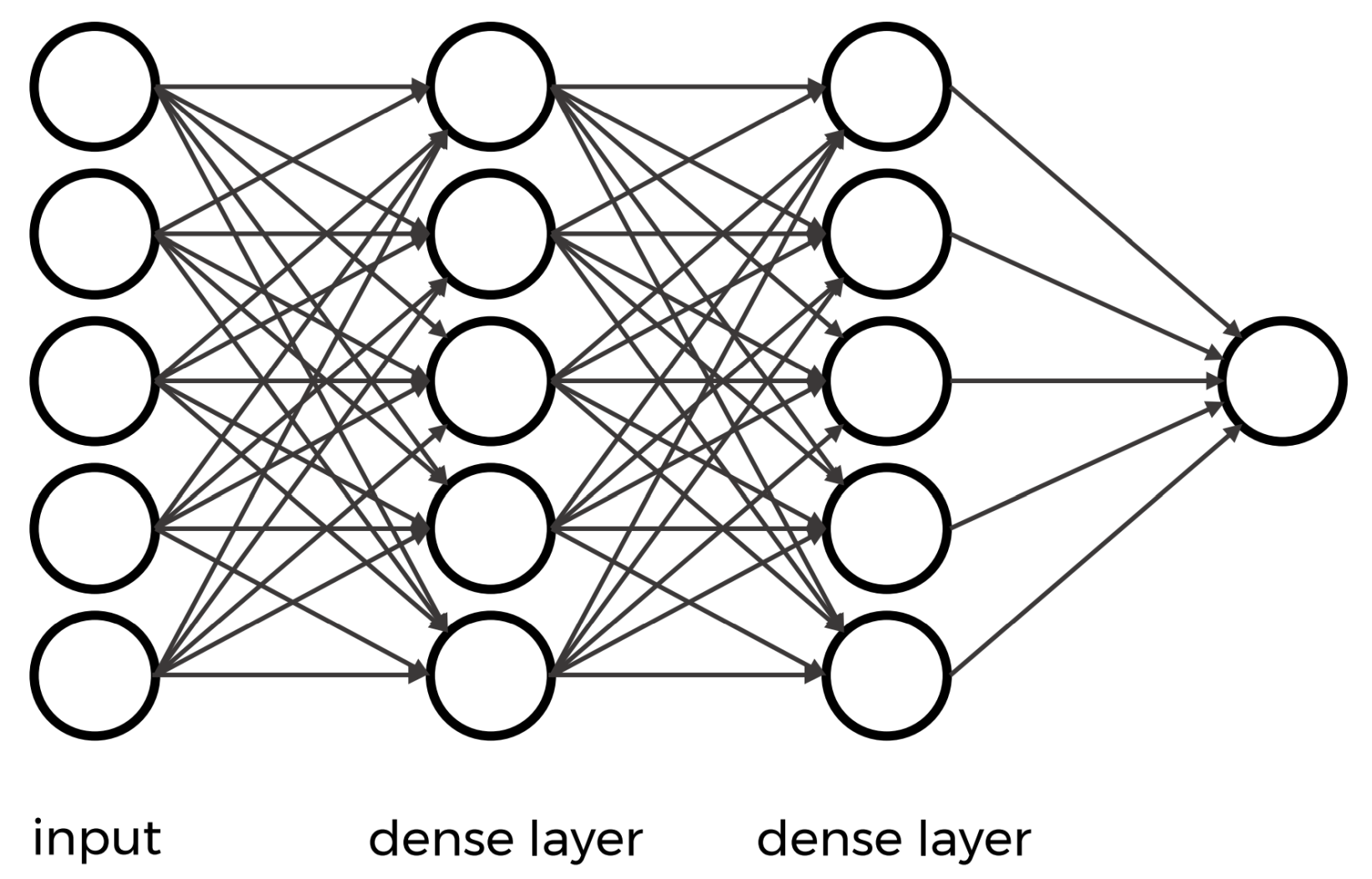

Multiple neurons can be joined together by connecting the output of one to the input of another. These connections are also associated with weights that determine the ‘strength’ of the connection, and these weights are also adjusted during training. In this way, the combination of neurons and connections describes a computational graph; an example can be seen in the image below.

In most neural networks neurons are aggregated into layers. Signals travel from the input layer to the output layer, possibly through one or more intermediate layers called hidden layers. The image below illustrates an example of a neural network with three layers: each circle is a neuron, each line is an edge, and the arrows indicate the direction data moves in.

Neural networks aren’t a new technique; they have been around since the late 1940s. But until around 2010 neural networks tended to be quite small, consisting of only tens or perhaps hundreds of neurons. This limited them to only solving quite basic problems. Around 2010 improvements in computing power and the algorithms for training the networks made much larger and more powerful networks practical. These are known as deep neural networks or Deep Learning.

Convolutional Neural Networks

A convolutional neural network (CNN) is a type of artificial neural network (ANN) most commonly applied to analyze visual imagery. CNNs are specifically designed for processing grid-like data, such as images, by leveraging convolutional layers that preserve spatial relationships when extracting features.

Step 4. Build an architecture from scratch or choose a pretrained model

Let us explore how to build a neural network from scratch. Although this sounds like a daunting task, with Keras it is surprisingly straightforward. With Keras you compose a neural network by creating layers and linking them together.

Parts of a neural network

There are three main components of a neural network:

- CNN Part 1. Input Layer

- CNN Part 2. Hidden Layers

- CNN Part 3. Output Layer

The output from each layer becomes the input to the next layer.

CNN Part 1. Input Layer

The Input in Keras gets special treatment when images are used. Keras automatically calculates the number of inputs and outputs a specific layer needs and therefore how many edges need to be created. This means we just need to let Keras know how big our input is going to be. We do this by instantiating a keras.Input class and passing it a tuple to indicate the dimensionality of the input data. In Python, a tuple is a data type used to store collections of data. It is similar to a list, but tuples are immutable, meaning once they are created, their contents cannot be changed.
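A short plain-Python sketch of how shape tuples behave may help here. The tuple `dataset_shape` is written out by hand to match the shape of the training images used in this lesson; in practice it would come from the array's `.shape` attribute.

```python
# Shape of the training image array: (images, height, width, channels)
dataset_shape = (40000, 32, 32, 3)

# Slicing a tuple returns a new tuple; here we drop the number of images
image_shape = dataset_shape[1:]
print(image_shape)

# Tuples are immutable, so assigning to an element raises a TypeError
try:
    dataset_shape[0] = 1
except TypeError as err:
    print("Cannot modify a tuple:", err)
```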

The input layer is created with the keras.Input function and its first parameter is the expected shape of the input:

keras.Input(shape=None, batch_size=None, dtype=None, sparse=None, batch_shape=None, name=None, tensor=None)

In our case, the shape of an image is defined by its pixel dimensions and number of channels:

OUTPUT

(40000, 32, 32, 3) # number of images, image height in pixels, image width in pixels, number of channels (RGB)

CHALLENGE Create the input layer for our network

Hint 1: Specify shape argument only and use defaults for the rest.

Hint 2: The shape of our input dataset includes the total number of images. We want to take a slice of the shape for a single individual image to use as an input.

OUTPUT

# CNN Part 1

# Input layer of 32x32 images with three channels (RGB)

inputs_intro = keras.Input(shape=train_images.shape[1:])

CNN Part 2. Hidden Layers

The next component consists of the so-called hidden layers of the network.

In a neural network, the input layer receives the raw data, and the output layer produces the final predictions or classifications. These layers’ contents are directly observable because you can see the input data and the network’s output predictions.

However, the hidden layers, which lie between the input and output layers, contain intermediate representations of the input data. These representations are transformed through various mathematical operations performed by the network’s neurons. The specific values and operations within these hidden layers are not directly accessible or interpretable from the input or output data. Therefore, they are considered “hidden” from external observation or inspection.

In a CNN, the hidden layers typically consist of convolutional, pooling, reshaping (e.g., Flatten), and dense layers.

Check out the Layers API section of the Keras documentation for each layer type and its parameters.

Convolutional Layers

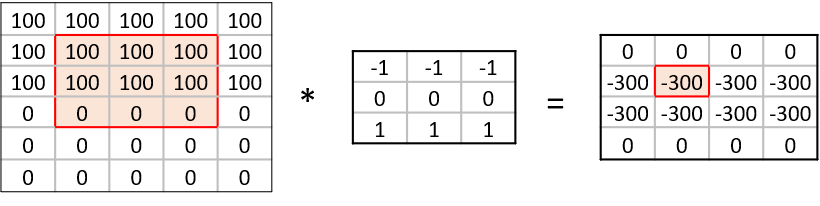

A convolutional layer is a fundamental building block in a CNN designed for processing structured, gridded data, such as images. It applies convolution operations to input data using learnable filters or kernels, extracting local patterns and features (e.g. edges, corners). These filters enable the network to capture hierarchical representations of visual information, allowing for effective feature learning.

To find the particular features of an image, CNNs make use of a concept from image processing that precedes Deep Learning.

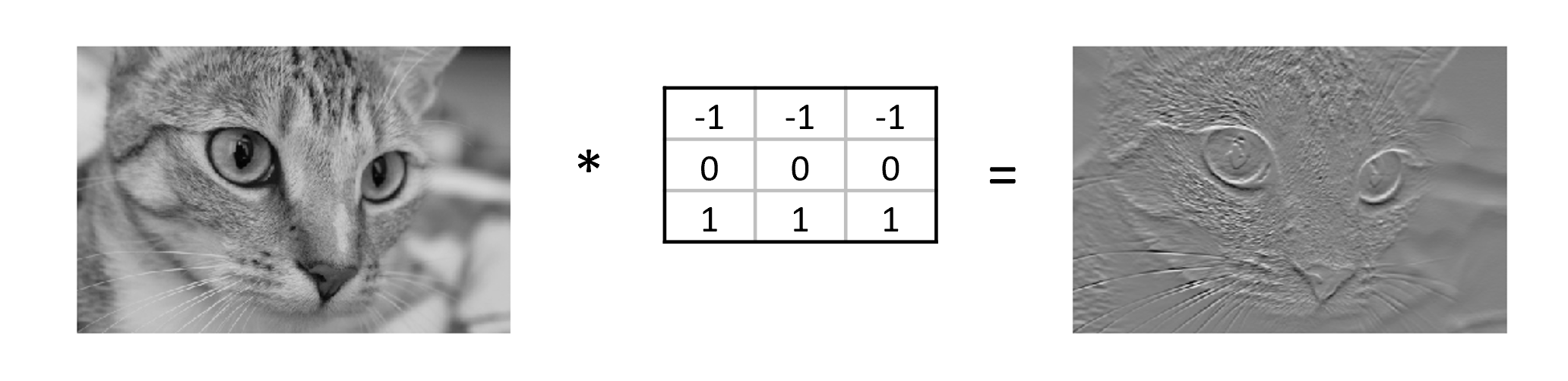

A convolution matrix, or kernel, is a small matrix that we ‘slide’ over the image to calculate features at each position of the image. For each pixel, we multiply the kernel element-wise with the pixel and its surroundings and sum the results. Here is one example of a 3x3 kernel used to detect edges:

[[-1, -1, -1],
 [0, 0, 0],
 [1, 1, 1]]

This kernel will give a high value to a pixel if it is on a horizontal border between dark and light areas.

In the following image, the effect of such a kernel on the values of a single-channel image stands out. The red cell in the output matrix is the result of multiplying and summing the values of the red square in the input, and the kernel. Applying this kernel to a real image demonstrates it does indeed detect horizontal edges.
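The multiply-and-sum operation can be sketched in NumPy as follows. The 5x5 `image` and the helper `apply_kernel` are hypothetical, written for illustration with no padding and a stride of 1; Keras uses its own optimised implementation.

```python
import numpy as np

# The horizontal edge-detection kernel from the text
kernel = np.array([[-1, -1, -1],
                   [ 0,  0,  0],
                   [ 1,  1,  1]])

# Hypothetical single-channel image: dark (0) rows on top, light (1) rows below
image = np.array([[0, 0, 0, 0, 0],
                  [0, 0, 0, 0, 0],
                  [1, 1, 1, 1, 1],
                  [1, 1, 1, 1, 1],
                  [1, 1, 1, 1, 1]])

def apply_kernel(image, kernel):
    """Slide the kernel over the image; multiply element-wise and sum at each position."""
    kh, kw = kernel.shape
    out_h = image.shape[0] - kh + 1
    out_w = image.shape[1] - kw + 1
    output = np.zeros((out_h, out_w))
    for row in range(out_h):
        for col in range(out_w):
            patch = image[row:row + kh, col:col + kw]
            output[row, col] = np.sum(patch * kernel)
    return output

features = apply_kernel(image, kernel)
print(features)
```

The output is large where the window straddles the dark-to-light border and zero where the window covers a uniform area. Note also that the 5x5 input shrinks to 3x3, because the 3x3 kernel cannot be centred on the outermost pixels.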

There are several types of convolutional layers available in Keras depending on your application. We use the two-dimensional layer typically used for images:

keras.layers.Conv2D(filters, kernel_size, strides=(1, 1), padding="valid", activation=None, **kwargs)

- filters is the number of filters in this layer.

  - This is one of the hyperparameters of our system and should be chosen carefully.

  - Good practice is to start with a relatively small number of filters in the first layer to prevent overfitting.

  - Choosing a number of filters as a power of two (e.g., 32, 64, 128) is common.

- kernel_size is the size of the convolution matrix which we already discussed.

  - Smaller kernels are often used to capture fine-grained features and odd-sized filters are preferred because they have a centre pixel which helps maintain spatial symmetry during convolutions.

- activation specifies which activation function to use.

When specifying layers, remember each layer’s output is the input to the next layer. We must create a variable to store a reference to the output so we can pass it to the next layer. The basic format for doing this is:

output_variable = layer_name(layer_arguments)(input_variable)

CHALLENGE Create a 2D convolutional layer for our network

Create a Conv2D layer with 16 filters, a 3x3 kernel size, and the ‘relu’ activation function.

Here we choose relu, which is one of the most commonly used activation functions in deep neural networks and has been shown to work well. We will discuss activation functions later in Step 9. Tune hyperparameters, but to satisfy your curiosity, ReLU stands for Rectified Linear Unit.

Hint 1: The input to each layer is the output of the previous layer.

OUTPUT

# CNN Part 2

# Convolutional layer with 16 filters, 3x3 kernel size, and ReLU activation

x_intro = keras.layers.Conv2D(filters=16, kernel_size=(3,3), activation='relu')(inputs_intro)

Playing with convolutions

Convolutions applied to images can be hard to grasp at first. Fortunately, there are resources out there that enable users to interactively play around with images and convolutions:

Image kernels explained illustrates how different convolutions can achieve certain effects on an image, like sharpening and blurring.

The convolutional neural network cheat sheet provides animated examples of the different components of convolutional neural nets.

Pooling Layers

The convolutional layers are often interleaved with pooling layers. As opposed to the convolutional layer used in feature extraction, the pooling layer alters the dimensions of the image, reducing it by a scaling factor and effectively decreasing the resolution of your picture.

The rationale behind this is that higher layers of the network should focus on higher-level features of the image. By introducing a pooling layer, the subsequent convolutional layer has a broader ‘view’ on the original image.

Similar to convolutional layers, Keras offers several pooling layers, including one commonly used for images (2D spatial data):

keras.layers.MaxPooling2D(pool_size=(2, 2), strides=None, padding="valid", data_format=None, name=None, **kwargs)

- pool_size, i.e., the size of the pooling window

  - In Keras, the default is usually (2, 2)

The function downsamples the input along its spatial dimensions (height and width) by taking the maximum value over an input window (of size defined by pool_size) for each channel of the input. By taking the maximum instead of the average, the most prominent features in the window are emphasized.

For example, a 2x2 pooling size reduces the width and height of the input by a factor of 2. Empirically, a 2x2 pooling size has been found to work well in various image classification tasks and also strikes a balance between down-sampling for computational efficiency and retaining important spatial information.
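To see what taking the maximum over each window means, here is a NumPy sketch for a single feature map. The helper `max_pool_2x2` and the 4x4 `feature_map` are hypothetical; the helper assumes non-overlapping 2x2 windows and drops a trailing row or column when the size is odd.

```python
import numpy as np

def max_pool_2x2(feature_map):
    """Keep the maximum of each non-overlapping 2x2 window."""
    h, w = feature_map.shape
    h, w = h - h % 2, w - w % 2                       # drop a trailing odd row/column
    blocks = feature_map[:h, :w].reshape(h // 2, 2, w // 2, 2)
    return blocks.max(axis=(1, 3))

feature_map = np.array([[1, 3, 2, 0],
                        [4, 2, 1, 1],
                        [0, 0, 5, 6],
                        [1, 2, 7, 8]])

pooled = max_pool_2x2(feature_map)
print(pooled)
```

The 4x4 input becomes a 2x2 output, halving the width and height while keeping the strongest response from each window.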

OUTPUT

# Pooling layer with input window sized 2x2

x_intro = keras.layers.MaxPooling2D(pool_size=(2,2))(x_intro)

Dense layers

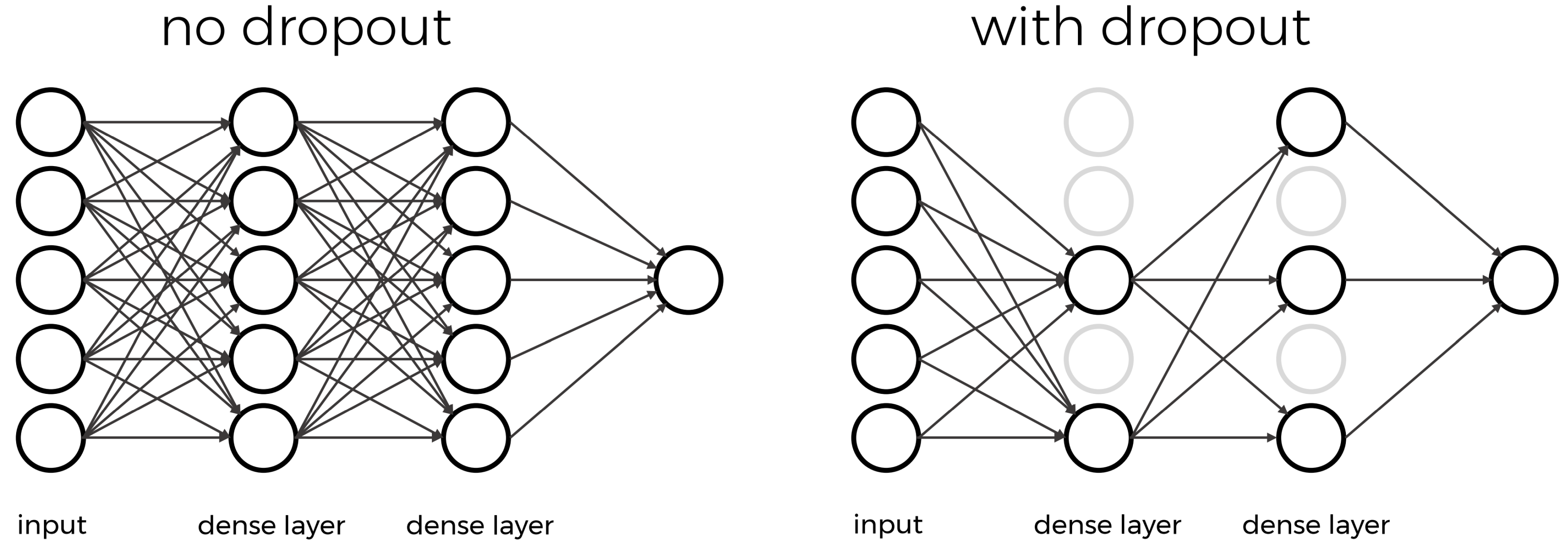

A dense layer is a fully connected layer where each neuron receives input from every neuron in the previous layer. When connecting the layer to its input and output layers every neuron in the dense layer gets an edge (i.e. connection) to all of the input neurons and all of the output neurons.

This layer aggregates global information about the features learned in previous layers to make a decision about the class of the input.

In Keras, a densely-connected layer is defined:

keras.layers.Dense(units, activation=None, **kwargs)

- units in this case refers to the number of neurons.

The choice of how many neurons to specify is often determined through experimentation and can impact the performance of our CNN. Too few neurons may not capture complex patterns in the data but too many neurons may lead to overfitting.

OUTPUT

# Dense layer with 64 neurons and ReLU activation

x_intro = keras.layers.Dense(units=64, activation='relu')(x_intro)

Reshaping Layers: Flatten

The next type of hidden layer used in our introductory model is a type of reshaping layer defined in Keras by the keras.layers.Flatten class. It is necessary when transitioning from convolutional and pooling layers to fully connected layers.

keras.layers.Flatten(data_format=None, **kwargs)

The Flatten layer converts the output of the previous layer into a single one-dimensional vector that can be used as input for a dense layer.

OUTPUT

# Flatten layer to convert 2D feature maps into a 1D vector

x_intro = keras.layers.Flatten()(x_intro)

A flatten layer function is typically used to transform the two-dimensional arrays (matrices) generated by the convolutional and pooling layers into a one-dimensional array. This is necessary when transitioning from the convolutional/pooling layers to the fully connected layers, which require one-dimensional input.

During the convolutional and pooling operations, a neural network extracts features from the input images, resulting in multiple feature maps, each represented by a matrix. These feature maps capture different aspects of the input image, such as edges, textures, or patterns. However, to feed these features into a fully connected layer for classification or regression tasks, they must be a single vector.

The flatten layer takes each element from the feature maps and arranges them into a single long vector, concatenating them along a single dimension. This transformation preserves the spatial relationships between the features in the original image while providing a suitable format for the fully connected layers to process.
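The effect of flattening can be imitated with a NumPy reshape. In this sketch the array `feature_maps` is a hypothetical batch of two samples, shaped (6, 6, 32) per sample to match the second pooling layer in the model summary shown later in this episode.

```python
import numpy as np

# Hypothetical batch of 2 samples, each with 32 feature maps of 6x6 values
feature_maps = np.arange(2 * 6 * 6 * 32).reshape(2, 6, 6, 32)

# Keep the batch dimension and merge all remaining dimensions into one
flattened = feature_maps.reshape(feature_maps.shape[0], -1)

print(feature_maps.shape, '->', flattened.shape)
```

Each sample becomes a vector of 6 x 6 x 32 = 1152 values, and the batch dimension is left untouched.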

Is one layer of each type enough?

Not for complex data!

A typical architecture for image classification is likely to include at least one convolutional layer, one pooling layer, one or more dense layers, and possibly a flatten layer.

Convolutional and Pooling layers are often used together in multiple sets to capture a wider range of features and learn more complex representations of the input data. Using this technique, the network can learn a hierarchical representation of features, where simple features detected in early layers are combined to form more complex features in deeper layers.

There isn’t a strict rule of thumb for the number of sets of convolutional and pooling layers to start with, however, there are some guidelines.

We are starting with a relatively small and simple architecture because we are limited in time and computational resources. A simple CNN with one or two sets of convolutional and pooling layers can still achieve decent results for many tasks but for your network you will experiment with different architectures.

OUTPUT

# CNN Part 2

# Convolutional layer with 16 filters, 3x3 kernel size, and ReLU activation

x_intro = keras.layers.Conv2D(filters=16, kernel_size=(3,3), activation='relu')(inputs_intro)

# Pooling layer with input window sized 2x2

x_intro = keras.layers.MaxPooling2D(pool_size=(2,2))(x_intro)

# Second Convolutional layer with 32 filters, 3x3 kernel size, and ReLU activation

x_intro = keras.layers.Conv2D(filters=32, kernel_size=(3,3), activation='relu')(x_intro)

# Second Pooling layer with input window sized 2x2

x_intro = keras.layers.MaxPooling2D(pool_size=(2,2))(x_intro)

# Flatten layer to convert 2D feature maps into a 1D vector

x_intro = keras.layers.Flatten()(x_intro)

# Dense layer with 64 neurons and ReLU activation

x_intro = keras.layers.Dense(units=64, activation='relu')(x_intro)

CNN Part 3. Output Layer

Recall for the outputs we asked ourselves what we want to identify from the data. If we are performing a classification problem, then typically we have one output for each potential class.

In traditional CNN architectures, a dense layer is typically used as the final layer for classification. This dense layer receives the flattened feature maps from the preceding convolutional and pooling layers and outputs the final class probabilities or regression values.

For multiclass data, the softmax activation is used instead of relu because it helps the computer give each option (class) a likelihood score, and the scores add up to 100 per cent. This way, it’s easier to pick the one the computer thinks is most probable.
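The following plain-Python sketch shows how softmax turns raw scores into likelihoods that sum to 1. The three input scores are made up for illustration, and subtracting the maximum is a common numerical-stability step that does not change the result.

```python
import math

def softmax(scores):
    """Convert raw scores into probabilities that sum to 1."""
    largest = max(scores)
    exps = [math.exp(s - largest) for s in scores]   # shift for numerical stability
    total = sum(exps)
    return [e / total for e in exps]

# Hypothetical raw scores for three classes
probs = softmax([2.0, 1.0, 0.1])
print([round(p, 3) for p in probs])
print(sum(probs))
```

The class with the highest raw score also receives the highest probability, so picking the most probable class is a matter of finding the largest value.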

CHALLENGE Create an Output layer for our network

Use a dense layer to create the output layer for a classification problem with 10 possible classes.

Hint 1: The input to each layer is the output of the previous layer.

Hint 2: The number of units (neurons) should be the same as the number of classes in our dataset.

Hint 3: Use softmax activation.

OUTPUT

# CNN Part 3

# Output layer with 10 units (one for each class) and softmax activation

outputs_intro = keras.layers.Dense(units=10, activation='softmax')(x_intro)

Putting it all together

Once you decide on the initial architecture of your CNN, the last step to create the model is to bring all of the parts together using the keras.Model class.

There are several ways of grouping the layers into an object as described in the Keras Models API.

We will use the Functional API to create our model using the inputs and outputs defined in this episode.

keras.Model(inputs=inputs, outputs=outputs)

Note that there is an additional argument that can be passed to the keras.Model class called ‘name’ that takes a string. Although it is no longer specified in the documentation, the ‘name’ argument is useful when deciding among different architectures.

CHALLENGE Create a function that defines an introductory CNN

Using the keras.Model class and the input, hidden, and output layers from the previous challenges, create a function that returns the CNN from the introduction.

Hint 1: Name the model “cifar_model_intro”

OUTPUT

def create_model_intro():

    # CNN Part 1
    # Input layer of 32x32 images with three channels (RGB)
    inputs_intro = keras.Input(shape=train_images.shape[1:])

    # CNN Part 2
    # Convolutional layer with 16 filters, 3x3 kernel size, and ReLU activation
    x_intro = keras.layers.Conv2D(filters=16, kernel_size=(3,3), activation='relu')(inputs_intro)
    # Pooling layer with input window sized 2x2
    x_intro = keras.layers.MaxPooling2D(pool_size=(2,2))(x_intro)
    # Second Convolutional layer with 32 filters, 3x3 kernel size, and ReLU activation
    x_intro = keras.layers.Conv2D(filters=32, kernel_size=(3,3), activation='relu')(x_intro)
    # Second Pooling layer with input window sized 2x2
    x_intro = keras.layers.MaxPooling2D(pool_size=(2,2))(x_intro)
    # Flatten layer to convert 2D feature maps into a 1D vector
    x_intro = keras.layers.Flatten()(x_intro)
    # Dense layer with 64 neurons and ReLU activation
    x_intro = keras.layers.Dense(units=64, activation='relu')(x_intro)

    # CNN Part 3
    # Output layer with 10 units (one for each class) and softmax activation
    outputs_intro = keras.layers.Dense(units=10, activation='softmax')(x_intro)

    # create the model
    model_intro = keras.Model(inputs = inputs_intro,
                              outputs = outputs_intro,
                              name = "cifar_model_intro")

    return model_intro

We now have a function that defines the introduction model.

We can use this function to create the introduction model and view a summary of its structure using the Model.summary method.

PYTHON

# create the introduction model

model_intro = create_model_intro()

# view model summary

model_intro.summary()

OUTPUT

Model: "cifar_model_intro"
_________________________________________________________________
 Layer (type)                Output Shape              Param #
=================================================================
 input_1 (InputLayer)        [(None, 32, 32, 3)]       0
 conv2d (Conv2D)             (None, 30, 30, 16)        448
 max_pooling2d (MaxPooling2  (None, 15, 15, 16)        0
 D)
 conv2d_1 (Conv2D)           (None, 13, 13, 32)        4640
 max_pooling2d_1 (MaxPoolin  (None, 6, 6, 32)          0
 g2D)
 flatten (Flatten)           (None, 1152)              0
 dense (Dense)               (None, 64)                73792
 dense_1 (Dense)             (None, 10)                650
=================================================================
Total params: 79530 (310.66 KB)
Trainable params: 79530 (310.66 KB)
Non-trainable params: 0 (0.00 Byte)
_________________________________________________________________

The Model.summary() output has three columns:

- Layer (type) lists the name and type of each layer.

- Remember the ‘name’ argument we used to name our model? This argument can also be supplied to each layer. If a name is not provided, Keras will assign a unique identifier starting from 1 and incrementing for each new layer of the same type within the model.

- Output Shape describes the shape of the output produced by each

layer as (batch_size, height, width, channels).

- Batch size is the number of samples processed in each batch during training or inference. This dimension is often denoted as None in the summary because the batch size can vary and is typically specified during model training or inference.

- Height, Width, Channels: The remaining dimensions represent the spatial dimensions and the number of channels in the output tensor. For convolutional layers and pooling layers, the height and width dimensions typically decrease as the network progresses through the layers due to the spatial reduction caused by convolution and pooling operations. The number of channels may change depending on the number of filters in the convolutional layer or the design of the network architecture.

- For example, in a convolutional layer, the output shape (None, 30,

30, 16) means:

- None: The batch size can vary.

- 30: The height and width of the output feature maps are both 30 pixels.

- 16: There are 16 channels in the output feature maps, indicating that the layer has 16 filters.

- Param # displays the number of parameters (weights and biases)

associated with each layer.

- The total number of parameters in a layer is calculated as follows:

- Total parameters = (number of input units) × (number of output units) + (number of output units)

- At the bottom of the Model.summary() you will find the number of

Total parameters and their size; the number of Trainable parameters and

their size; and the number of Non-trainable parameters and their size.

- In most cases, the total number of parameters will match the number of trainable parameters. In other cases, such as models using normalization layers, there will be some parameters that are fixed during training and not trainable.

- Disclaimer: We explicitly decided to focus on building a foundational understanding of convolutional neural networks for this course without delving into the detailed calculation of parameters. However, as you progress on your deep learning journey, it will become increasingly important for you to understand parameter calculation in order to optimize model performance, troubleshoot issues, and design more efficient CNN architectures.

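As a rough illustration of how the Output Shape and Param # columns arise, the sketch below computes both for a single convolutional layer and a single dense layer. The helper names (`conv_output_size`, `conv2d_params`, `dense_params`) are hypothetical, and the layer settings (a 32 × 32 × 3 input such as a CIFAR-10 image, 16 filters, a 3 × 3 kernel, stride 1, no padding) are assumptions chosen to reproduce the (None, 30, 30, 16) example above. The inputs × outputs + outputs formula describes a dense layer; a convolutional layer instead has one weight per kernel element per input channel, plus one bias, for each filter.

```python
def conv_output_size(input_size, kernel_size, stride=1, padding=0):
    """Spatial output size of a convolution along one dimension."""
    return (input_size + 2 * padding - kernel_size) // stride + 1


def conv2d_params(kernel_height, kernel_width, in_channels, filters):
    """Weights plus one bias per filter in a 2D convolutional layer."""
    return (kernel_height * kernel_width * in_channels + 1) * filters


def dense_params(input_units, output_units):
    """Weights plus one bias per output unit in a dense layer."""
    return input_units * output_units + output_units


# Assumed settings: 32 x 32 x 3 input, 16 filters, 3 x 3 kernel, stride 1, no padding
height = conv_output_size(32, 3)
width = conv_output_size(32, 3)
output_shape = (None, height, width, 16)  # None stands for the variable batch size

print(output_shape)                # (None, 30, 30, 16)
print(conv2d_params(3, 3, 3, 16))  # 448
print(dense_params(64, 10))        # 650
```

The dense layer sizes (64 inputs, 10 outputs) are likewise only illustrative; substitute the values from your own Model.summary() to check its Param # column by hand.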

How to choose an architecture?

For this neural network, we had to make many choices, including the number of hidden neurons. Other choices to be made are the number of layers and type of layers. You might wonder how you should make these architectural choices. Unfortunately, there are no clear rules to follow here, and it often boils down to a lot of trial and error. It is recommended to explore what others have done with similar data sets and problems. Another best practice is to start with a relatively simple architecture and then add layers and tweak the network to test if performance increases.

We have a model, now what?

This CNN should be able to run with the CIFAR-10 data set and provide reasonable results for basic classification tasks. However, do keep in mind this model is relatively simple, and its performance may not be as high as more complex architectures. The reason it’s called deep learning is because, in most cases, the more layers we have, i.e. the deeper and more sophisticated CNN architecture we use, the better the performance.

How can we judge a model’s performance? We can inspect a couple of metrics produced during the training process to detect whether our model is underfitting or overfitting. To do that, we continue with the next steps in our Deep Learning workflow: Step 5. Choose a loss function and optimizer, and Step 6. Train model.

- Artificial neural networks (ANN) are a machine learning technique based on a model inspired by groups of neurons in the brain.

- Convolutional neural networks (CNN) are a type of ANN designed for image classification and object detection.

- The number of filters corresponds to the number of distinct features the layer is learning to recognise whereas the kernel size determines the level of features being captured.

- A CNN can consist of many types of layers including convolutional, pooling, flatten, and dense (fully connected) layers.

- Convolutional layers are responsible for learning features from the input data.

- Pooling layers are often used to reduce the spatial dimensions of the data.

- The flatten layer is used to convert the multi-dimensional output of the convolutional and pooling layers into a flat vector.

- Dense layers are responsible for combining features learned by the previous layers to perform the final classification.

Content from Compile and Train (Fit) a Convolutional Neural Network

Last updated on 2024-05-20

Overview

Questions

- How do you compile a convolutional neural network (CNN)?

- What is a loss function?

- What is an optimizer?

- How do you train (fit) a CNN?

- How do you evaluate a model during training?

- What is overfitting?

Objectives

- Explain the difference between compiling and training (fitting) a CNN.

- Know how to select a loss function for your model.

- Understand what an optimizer is.

- Define the terms: learning rate, batch size, epoch.

- Understand what loss and accuracy are and how to monitor them during training.

- Explain what overfitting is and what to do about it.

Step 5. Choose a loss function and optimizer and compile model

We have designed a convolutional neural network (CNN) that in theory we should be able to train to classify images.

We now need to compile the model, or set up the rules and strategies for how the network will learn. To do this, we select an appropriate loss function and optimizer to use during training (fitting).

The Keras method to compile a model is found in the Model training APIs section of the documentation and has the following structure:

Model.compile(

optimizer="rmsprop",

loss=None,

loss_weights=None,

metrics=None,

weighted_metrics=None,

run_eagerly=False,

steps_per_execution=1,

jit_compile="auto",

auto_scale_loss=True,

)

The three arguments we want to set for this method are for the optimizer, the loss, and the metrics parameters.

Optimizer

An optimizer in this case refers to the algorithm with which the model learns to optimize on the provided loss function, or minimise the model error. In other words, the optimizer is responsible for taking the output of the loss function and then applying some changes to the weights within the network. It is through this process that the “learning” (adjustment of the weights) is achieved.

We need to choose an optimizer and, if this optimizer has parameters, decide what values to use for those. Similar to other hyperparameters, the choice of optimizer depends on the problem you are trying to solve, your model architecture, and your data.

Adam

Here we picked one of the most common optimizers demonstrated to work well for most tasks, the Adam optimizer, as our starting point.

In Keras, Adam is defined by the keras.optimizers.Adam class:

keras.optimizers.Adam(

learning_rate=0.001,

beta_1=0.9,

beta_2=0.999,

epsilon=1e-07,

amsgrad=False,

weight_decay=None,

clipnorm=None,

clipvalue=None,

global_clipnorm=None,

use_ema=False,

ema_momentum=0.99,

ema_overwrite_frequency=None,

loss_scale_factor=None,

gradient_accumulation_steps=None,

name="adam",

**kwargs

)

As you can see, the Adam optimizer defines many parameters. For this introductory course, we will accept the default arguments but we highly recommend you investigate all of them if you decide to use this optimizer for your project.

For now, though, we do want to highlight the first parameter.

The learning rate is a hyperparameter that determines the step size at which the model’s weights are updated during training. You can think of this as the pace of learning for your model: it is how big (or small) a step your model takes to learn from its mistakes. Too high, and it might overshoot the optimal values; too low, and it might take forever to learn.

The learning rate may be fixed and remain constant throughout the entire training process or it may be adaptive and dynamically change during training.
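To show where the learning rate enters, here is a minimal sketch of a single Adam update for one weight, written in plain Python using the textbook form of the algorithm. The function `adam_step` and its variable names are hypothetical, and the internal Keras implementation may differ in detail; only the default values (learning_rate=0.001, beta_1=0.9, beta_2=0.999, epsilon=1e-07) are taken from the signature above.

```python
import math


def adam_step(weight, gradient, m, v, step, learning_rate=0.001,
              beta_1=0.9, beta_2=0.999, epsilon=1e-07):
    """Apply one textbook Adam update to a single weight."""
    # Exponential moving averages of the gradient and the squared gradient
    m = beta_1 * m + (1 - beta_1) * gradient
    v = beta_2 * v + (1 - beta_2) * gradient ** 2
    # Bias correction compensates for m and v starting at zero
    m_hat = m / (1 - beta_1 ** step)
    v_hat = v / (1 - beta_2 ** step)
    # The learning rate scales the size of the update
    weight = weight - learning_rate * m_hat / (math.sqrt(v_hat) + epsilon)
    return weight, m, v


# On the first step the update is close to learning_rate in size,
# whatever the magnitude of the gradient
new_weight, m, v = adam_step(weight=0.5, gradient=20.0, m=0.0, v=0.0, step=1)
print(0.5 - new_weight)
```

Because the gradient is divided by a running estimate of its own magnitude, the effective step adapts per weight during training even though the learning_rate argument itself stays constant.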

The Keras optimizer documentation describes the other optimizers available to choose from. A couple of the more popular or well-known ones include:

- Stochastic Gradient Descent (sgd): Stochastic Gradient Descent (SGD) is one of the fundamental optimization algorithms used to train machine learning models, especially neural networks. It is a variant of the gradient descent algorithm, designed to handle large datasets efficiently.

- Root Mean Square Propagation (rmsprop): RMSprop is widely used in various deep learning frameworks and is one of the predecessors of more advanced optimizers like Adam, which further refines the concept of adaptive learning rates. It is an extension of the basic Stochastic Gradient Descent (SGD) algorithm and addresses some of the challenges of SGD.

- For example, one of the main issues with the basic SGD is that it uses a fixed learning rate for all model parameters throughout the training process. This fixed learning rate can lead to slow convergence or divergence (over-shooting) in some cases. RMSprop introduces an adaptive learning rate mechanism to address this problem.
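The difference between a fixed and an adaptive learning rate can be sketched in a few lines of plain Python. The functions `sgd_step` and `rmsprop_step` below are hypothetical helpers that follow the textbook update rules, not the Keras source code, and the learning rates and gradients are illustrative values only.

```python
import math


def sgd_step(weight, gradient, learning_rate=0.01):
    """Plain SGD: the same fixed learning rate scales every gradient."""
    return weight - learning_rate * gradient


def rmsprop_step(weight, gradient, avg_sq_grad, learning_rate=0.001,
                 rho=0.9, epsilon=1e-07):
    """Textbook RMSprop: divide by a running root mean square of gradients."""
    avg_sq_grad = rho * avg_sq_grad + (1 - rho) * gradient ** 2
    weight = weight - learning_rate * gradient / (math.sqrt(avg_sq_grad) + epsilon)
    return weight, avg_sq_grad


# Two weights whose gradients differ by a factor of 10,000
gradients = [100.0, 0.01]

sgd_updates = [0.0 - sgd_step(0.0, g) for g in gradients]
rmsprop_updates = [0.0 - rmsprop_step(0.0, g, avg_sq_grad=0.0)[0] for g in gradients]

print(sgd_updates)      # step sizes differ by the same factor of 10,000
print(rmsprop_updates)  # step sizes are nearly equal
```

With SGD, the weight with the large gradient takes a huge step while the other barely moves. RMSprop rescales each gradient by its own recent magnitude, so both weights move at a similar pace.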

ChatGPT

Learning rate is a hyperparameter that determines the step size at which the model’s parameters are updated during training. A higher learning rate allows for more substantial parameter updates, which can lead to faster convergence, but it may risk overshooting the optimal solution. On the other hand, a lower learning rate leads to smaller updates, providing more cautious convergence, but it may take longer to reach the optimal solution. Finding an appropriate learning rate is crucial for effectively training machine learning models.

The figure below illustrates how a learning rate that is too small will not reach the minimum of the loss in a timely manner, i.e. within a reasonable number of epochs.

On the other hand, specifying a learning rate that is too high will result in a loss value that never approaches the minimum. That is, it keeps ‘bouncing between the sides’ and never settles at a minimum.

Finally, a moderate learning rate ensures that the step, i.e. the gradient multiplied by the learning rate, is neither too small to make progress nor so large that it bounces chaotically between the sides where the slope is steepest.

These images were obtained from Google Developers Machine Learning Crash Course and are licensed under the Creative Commons 4.0 Attribution Licence.
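The three learning rate regimes described above can be reproduced with a toy problem. The sketch below minimises f(w) = w², whose minimum is at w = 0, using plain gradient descent; the function name `gradient_descent`, the starting point, and the three learning rates are assumptions picked purely for illustration.

```python
def gradient_descent(learning_rate, start=1.0, steps=20):
    """Minimise f(w) = w**2 with fixed-step gradient descent."""
    w = start
    for _ in range(steps):
        gradient = 2 * w  # derivative of w**2
        w = w - learning_rate * gradient
    return w


too_small = gradient_descent(learning_rate=0.01)
moderate = gradient_descent(learning_rate=0.4)
too_large = gradient_descent(learning_rate=1.1)

print(f"too small: {too_small:.4f}")  # still far from the minimum at 0
print(f"moderate:  {moderate:.2e}")   # very close to 0
print(f"too large: {too_large:.2f}")  # has moved away from 0
```

After the same 20 steps, the small rate has barely moved, the moderate rate has essentially converged, and the large rate has overshot on every step and ended up further from the minimum than where it started.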

Loss function

The loss function tells the training algorithm how wrong, or how ‘far away’ from the true value, the predicted value is. The purpose of loss functions is to compute the quantity that a model should seek to minimize during training. Which class of loss functions you choose depends on your task.

Loss for classification

For classification purposes, there are a number of probabilistic losses to choose from. Here we chose CategoricalCrossentropy because we want to compute the difference between our one-hot encoded class labels and the model predictions and this loss function is appropriate when the data has two or more label classes.

It is defined by the keras.losses.CategoricalCrossentropy class:

keras.losses.CategoricalCrossentropy(

from_logits=False,

label_smoothing=0.0,

axis=-1,

reduction="sum_over_batch_size",

name="categorical_crossentropy",

)

More information about loss functions can be found in the Keras loss documentation.
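To make the idea of comparing one-hot labels with predictions concrete, here is a minimal plain-Python sketch of categorical cross-entropy averaged over a batch. The function below is a hypothetical stand-in written for illustration, not the Keras implementation; it assumes the predictions are already probabilities (as with from_logits=False), and the clipping value epsilon is an assumption to avoid taking the log of zero.

```python
import math


def categorical_crossentropy(y_true, y_pred, epsilon=1e-07):
    """Mean cross-entropy over a batch of one-hot labels and predicted probabilities."""
    losses = []
    for true_row, pred_row in zip(y_true, y_pred):
        # Only the predicted probability of the true class contributes
        loss = -sum(t * math.log(max(p, epsilon)) for t, p in zip(true_row, pred_row))
        losses.append(loss)
    return sum(losses) / len(losses)


y_true = [[0, 1, 0], [0, 0, 1]]  # one-hot labels
confident = [[0.05, 0.9, 0.05], [0.1, 0.1, 0.8]]
unsure = [[0.3, 0.4, 0.3], [0.4, 0.3, 0.3]]

print(categorical_crossentropy(y_true, confident))  # lower loss
print(categorical_crossentropy(y_true, unsure))     # higher loss
```

The more probability the model assigns to the correct class, the closer the loss gets to zero, which is the quantity the optimizer tries to minimise.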

Metrics

After we select the desired optimizer and loss function we specify the metric(s) to be evaluated by the model during training and testing. A metric is a function used to judge the performance of the model.

Metric functions are similar to loss functions, except the results from evaluating a metric are not used when training the model. Note you can also use any loss function as a metric. The Keras metrics documentation provides a list of potential metrics.

Typically, for classification problems, you will use CategoricalAccuracy, which calculates how often the model predictions match the true labels, and in Keras is defined as:

keras.metrics.CategoricalAccuracy(name="categorical_accuracy", dtype=None)

The accuracy function creates two local variables, total and count, that it uses to compute the frequency with which predictions match labels. This frequency is ultimately returned as accuracy: an operation that divides the total by count.
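The total and count bookkeeping can be sketched in plain Python. The class `SimpleCategoricalAccuracy` below is a hypothetical illustration of the idea, not the Keras class; it assumes one-hot labels and takes the predicted class to be the one with the highest probability.

```python
class SimpleCategoricalAccuracy:
    """Running accuracy built from two counters, total and count."""

    def __init__(self):
        self.total = 0  # number of correct predictions so far
        self.count = 0  # number of samples seen so far

    def update_state(self, y_true, y_pred):
        for true_row, pred_row in zip(y_true, y_pred):
            true_class = true_row.index(max(true_row))
            predicted_class = pred_row.index(max(pred_row))
            self.total += int(true_class == predicted_class)
            self.count += 1

    def result(self):
        return self.total / self.count


metric = SimpleCategoricalAccuracy()
metric.update_state(
    y_true=[[0, 1, 0], [1, 0, 0], [0, 0, 1]],
    y_pred=[[0.1, 0.8, 0.1], [0.2, 0.5, 0.3], [0.1, 0.2, 0.7]],
)
print(metric.result())
```

Here two of the three predictions match their labels, so the metric reports an accuracy of two thirds. Unlike the loss, this number is only reported; it is not used to update the weights.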

Now that we selected which optimizer, loss function, and metric to use, we want to compile the model, or prepare it for training.

CHALLENGE Write the code to compile the introductory model

OUTPUT

# compile the model

model_intro.compile(optimizer = keras.optimizers.Adam(),

loss = keras.losses.CategoricalCrossentropy(),

metrics = keras.metrics.CategoricalAccuracy())

Step 6. Train (Fit) model

We are ready to train the model.

Training a model means teaching the computer to recognize patterns in data by adjusting its internal parameters, or iteratively comparing its predictions with the actual outcomes to minimize errors. The result is a model capable of making accurate predictions on new, unseen data.
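The loop of predicting, comparing with the actual outcomes, and adjusting parameters can be sketched with a toy model that has a single weight. The example below is a hypothetical, plain-Python illustration and not how Model.fit is implemented; the function name `train`, the data, and the hyperparameter values are assumptions. It also shows how epochs and batch size fit together: each epoch is one pass over all the data, processed one batch at a time.

```python
def train(x, y, epochs=50, batch_size=2, learning_rate=0.05):
    """Fit y = w * x by mini-batch gradient descent on the mean squared error."""
    w = 0.0
    history = []
    for _ in range(epochs):
        epoch_losses = []
        for start in range(0, len(x), batch_size):
            x_batch = x[start:start + batch_size]
            y_batch = y[start:start + batch_size]
            # Compare predictions with the actual outcomes
            errors = [w * xi - yi for xi, yi in zip(x_batch, y_batch)]
            epoch_losses.append(sum(e ** 2 for e in errors) / len(errors))
            # Adjust the parameter in the direction that reduces the error
            gradient = sum(2 * e * xi for e, xi in zip(errors, x_batch)) / len(errors)
            w = w - learning_rate * gradient
        history.append(sum(epoch_losses) / len(epoch_losses))
    return w, history


x = [1.0, 2.0, 3.0, 4.0]
y = [2.0, 4.0, 6.0, 8.0]  # generated with w = 2
w, history = train(x, y)
print(w)
print(history[0], history[-1])
```

The learned weight ends up very close to 2, and the loss recorded for the last epoch is far smaller than for the first, which is the same downward trend you would hope to see when training a CNN.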

Training the model is done using the Model.fit method:

Model.fit(

x=None,

y=None,

batch_size=None,

epochs=1,

verbose="auto",

callbacks=None,

validation_split=0.0,

validation_data=None,

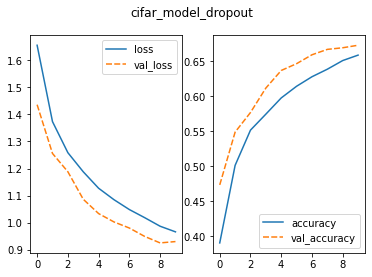

shuffle=True,