Introduction to high-dimensional data

Overview

Teaching: 30 min

Exercises: 20 min

Questions

What are high-dimensional data and what do these data look like in the biosciences?

What are the challenges when analysing high-dimensional data?

What statistical methods are suitable for analysing these data?

How can Bioconductor be used to access high-dimensional data in the biosciences?

Objectives

Explore examples of high-dimensional data in the biosciences.

Appreciate challenges involved in analysing high-dimensional data.

Explore different statistical methods used for analysing high-dimensional data.

Work with example data created from biological studies.

What are high-dimensional data?

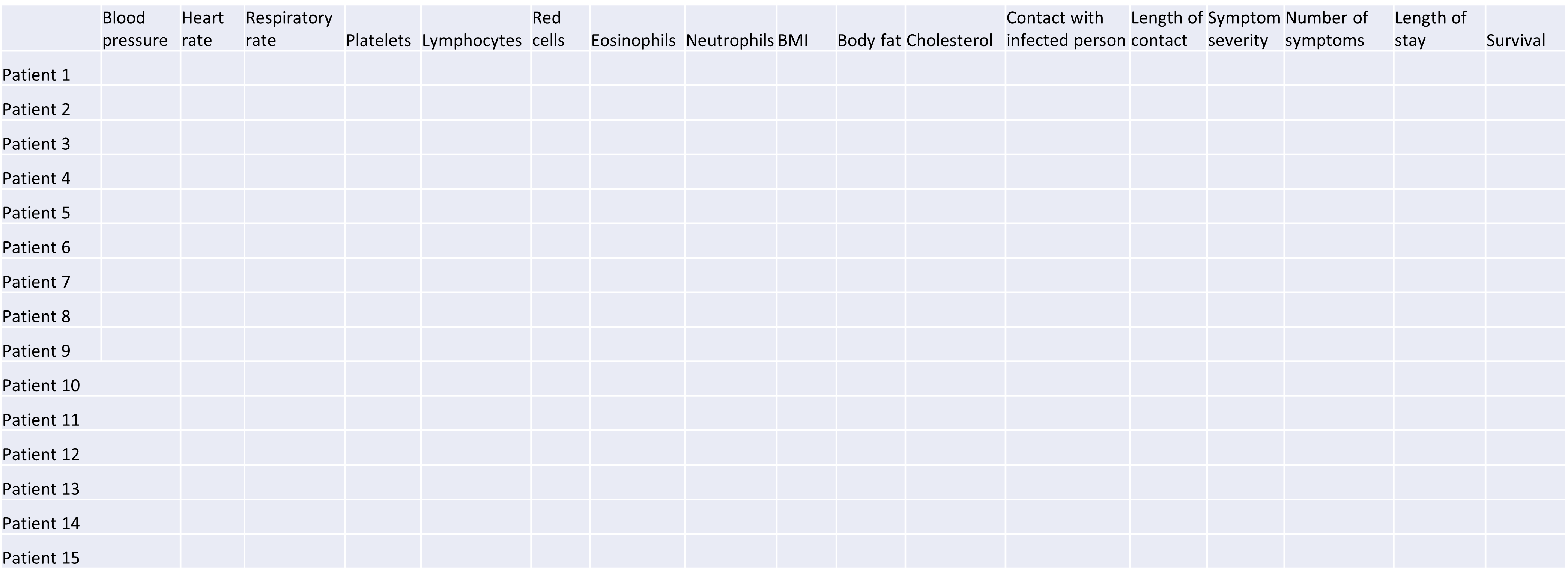

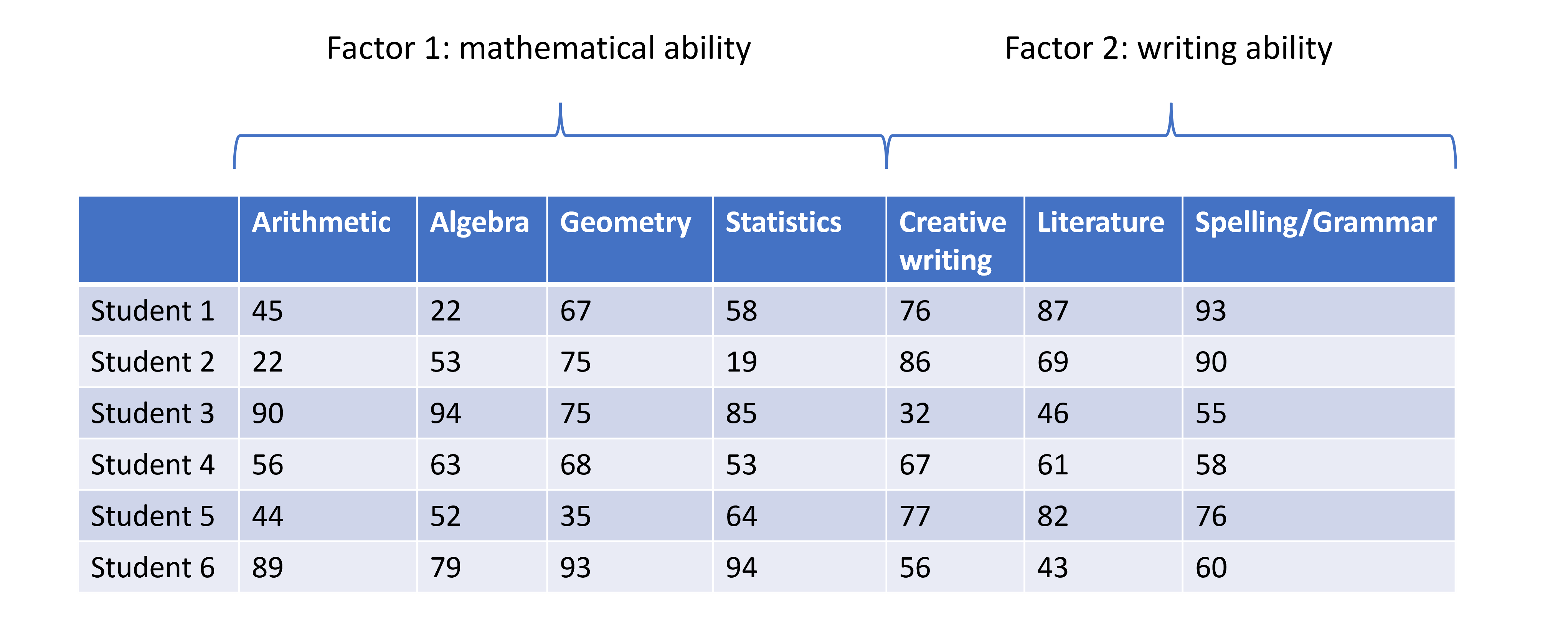

High-dimensional data are defined as data with many features (variables observed). In recent years, advances in information technology have allowed large amounts of data to be collected and stored with relative ease. As such, high-dimensional data have become more common in many scientific fields, including the biological sciences, where datasets in subjects like genomics and medical sciences often have large numbers of features. For example, hospital data may record many variables, including symptoms, blood test results, behaviours, and general health. An example of what high-dimensional data might look like in a biomedical study is shown in the figure below.

Example of a high-dimensional data table with features in the columns and individual observations (patients) in rows.

Researchers often want to relate such features to specific patient outcomes (e.g. survival, length of time spent in hospital). However, analysing high-dimensional data can be extremely challenging since standard methods of analysis, such as individual plots of features and linear regression, are no longer appropriate when we have many features. In this lesson, we will learn alternative methods for dealing with high-dimensional data and discover how these can be applied for practical high-dimensional data analysis in the biological sciences.

Challenge 1

Descriptions of four research questions and their datasets are given below. Which of these scenarios use high-dimensional data?

- Predicting patient blood pressure using: cholesterol level in blood, age, and BMI measurements, collected from 100 patients.

- Predicting patient blood pressure using: cholesterol level in blood, age, and BMI, as well as information on 200,000 single nucleotide polymorphisms from 100 patients.

- Predicting the length of time patients spend in hospital with pneumonia infection using: measurements on age, BMI, length of time with symptoms, number of symptoms, and percentage of neutrophils in blood, using data from 200 patients.

- Predicting probability of a patient’s cancer progressing using gene expression data from 20,000 genes, as well as data associated with general patient health (age, weight, BMI, blood pressure) and cancer growth (tumour size, localised spread, blood test results).

Solution

- No. The number of features is relatively small (4 including the response variable since this is an observed variable).

- Yes, this is an example of high-dimensional data. There are 200,004 features.

- No. The number of features is relatively small (6).

- Yes. There are 20,008 features.

Now that we have an idea of what high-dimensional data look like we can think about the challenges we face in analysing them.

Why is dealing with high-dimensional data challenging?

Most classical statistical methods are set up for use on low-dimensional data (i.e. with a small number of features, $p$). This is because low-dimensional data were much more common in the past when data collection was more difficult and time consuming.

One challenge when analysing high-dimensional data is visualising the many variables. When exploring low-dimensional datasets, it is possible to plot the response variable against each of the features to get an idea of which of these are important predictors of the response. With high-dimensional data, the large number of features makes doing this difficult. In addition, in some high-dimensional datasets it can also be difficult to identify a single response variable, making standard data exploration and analysis techniques less useful.

Let’s have a look at a simple dataset with lots of features to understand some of the challenges we are facing when working with high-dimensional data. For reference, all data used throughout the lesson are described in the data page.

Challenge 2

For illustrative purposes, we start with a simple dataset that is not technically high-dimensional but contains many features. This will illustrate the general problems encountered when working with many features in a high-dimensional data set.

First, make sure you have completed the setup instructions here. Next, let’s load the prostate dataset as follows:

library("here")
prostate <- readRDS(here("data/prostate.rds"))

Examine the dataset (in which each row represents a single patient) to:

- Determine how many observations ($n$) and features ($p$) are available (hint: see the dim() function).

- Examine what variables were measured (hint: see the names() and head() functions).

- Plot the relationship between the variables (hint: see the pairs() function). What problem(s) with high-dimensional data analysis does this illustrate?

Solution

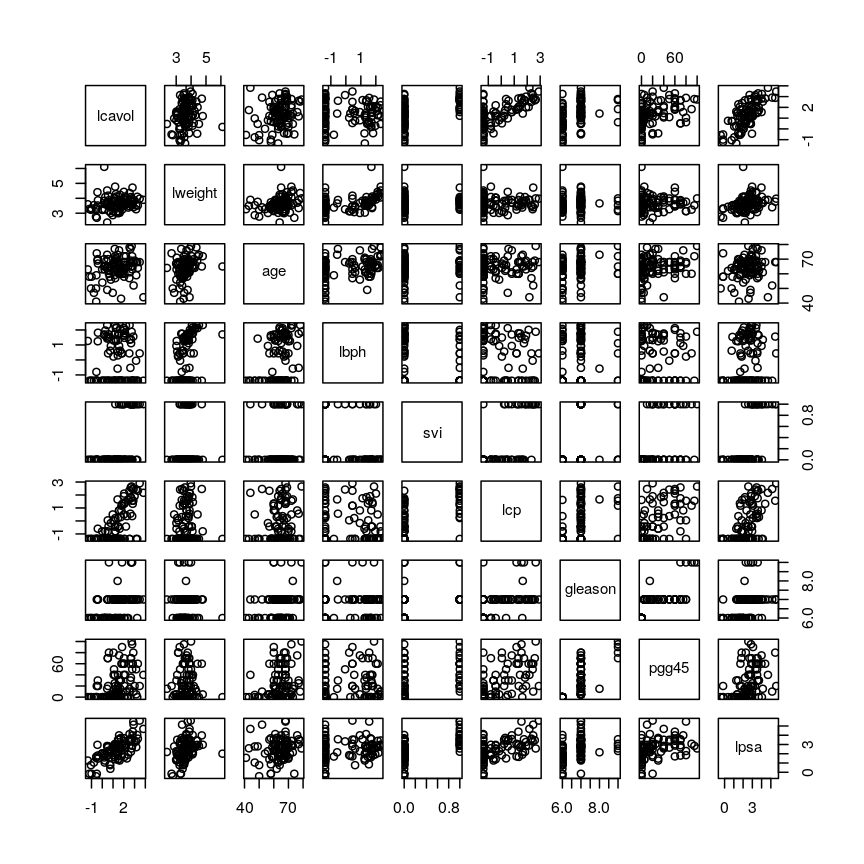

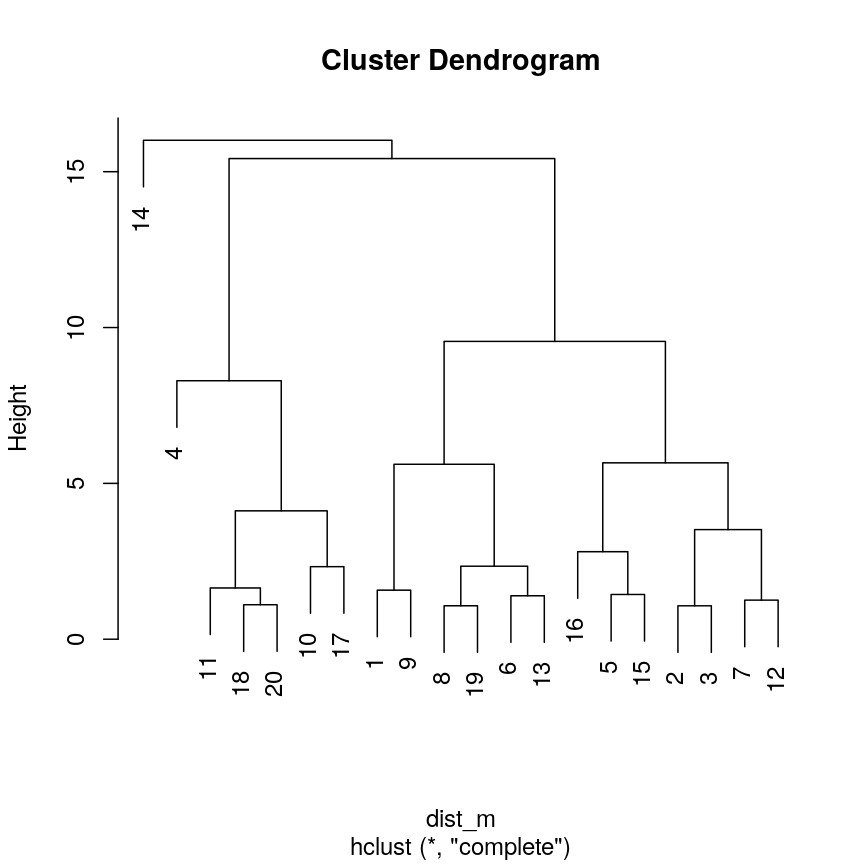

dim(prostate) # print the number of rows and columns

names(prostate) # examine the variable names
[1] "lcavol"  "lweight" "age"     "lbph"    "svi"     "lcp"     "gleason"
[8] "pgg45"   "lpsa"

head(prostate) # print the first 6 rows

pairs(prostate) # plot each pair of variables against each other

Pairwise plots of the 'prostate' dataset.

The pairs() function plots relationships between each of the variables in the prostate dataset. This is possible for datasets with smaller numbers of variables, but for datasets in which $p$ is larger it becomes difficult (and time consuming) to visualise relationships between all variables in the dataset. Even where visualisation is possible, fitting models to datasets with many variables is difficult due to the potential for overfitting and difficulties in identifying a response variable.
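To make the scaling problem concrete, the following minimal sketch counts how many distinct pairs of variables would need to be plotted for a given number of variables. The object names are our own, and 5000 is only an illustrative value of $p$.

```r
# The number of distinct pairs among p variables is p * (p - 1) / 2,
# which base R computes with choose(p, 2).
n_pairs_prostate <- choose(9, 2)    # the 9 variables in the prostate dataset
n_pairs_large <- choose(5000, 2)    # illustrative: a dataset with 5000 features

n_pairs_prostate
# [1] 36
n_pairs_large
# [1] 12497500
```

Thirty-six panels can still be inspected by eye, whereas over twelve million cannot.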

Note that function documentation and information on function arguments will be useful throughout this lesson. We can access these easily in R by running ? followed by the function name. For example, the documentation for the dim function can be accessed by running ?dim.

Locating data with R - the here package

It is often desirable to access external datasets from inside R and to write code that does this reliably on different computers. While R has an inbuilt function, setwd(), that can be used to denote where external datasets are stored, this usually requires the user to adjust the code to their specific system and folder structure. The here package is meant to be used in R projects. It allows users to specify the data location relative to the R project directory. This makes R code more portable and can help to improve the reproducibility of an analysis.

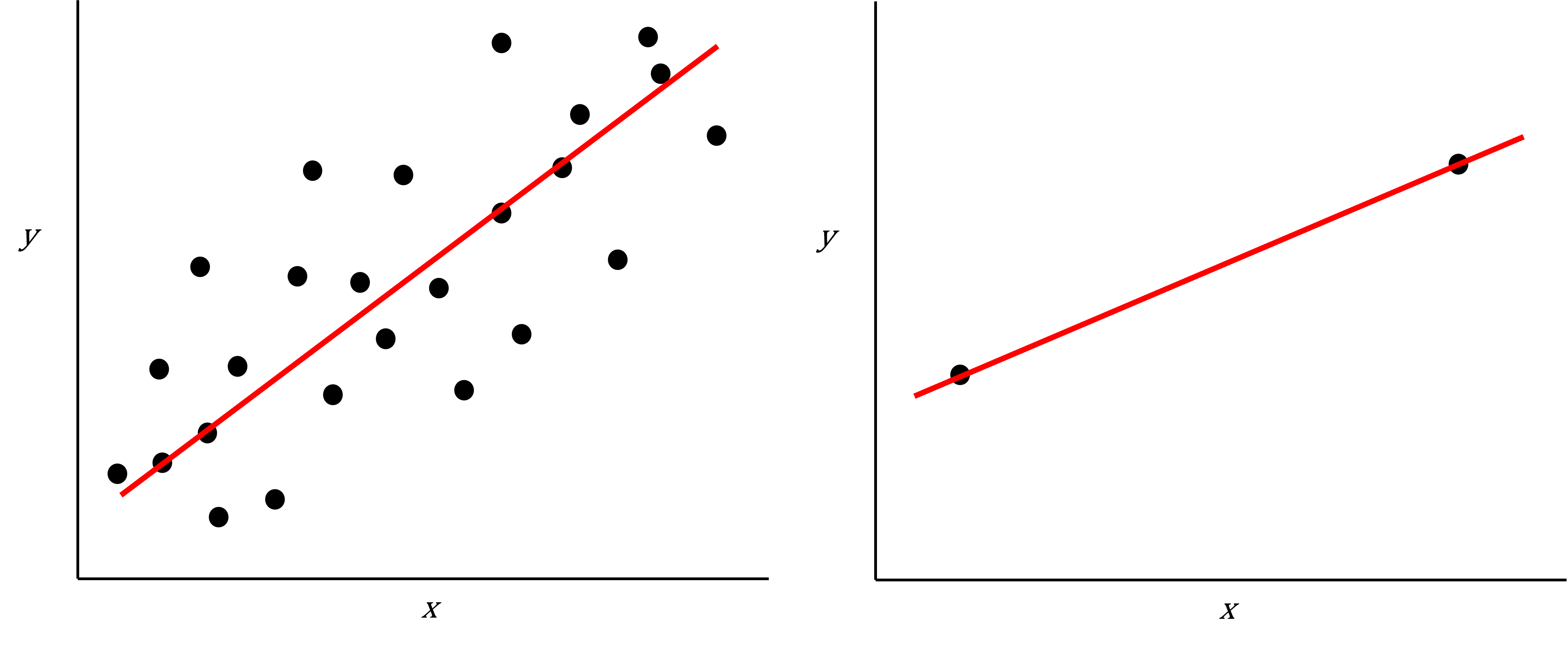

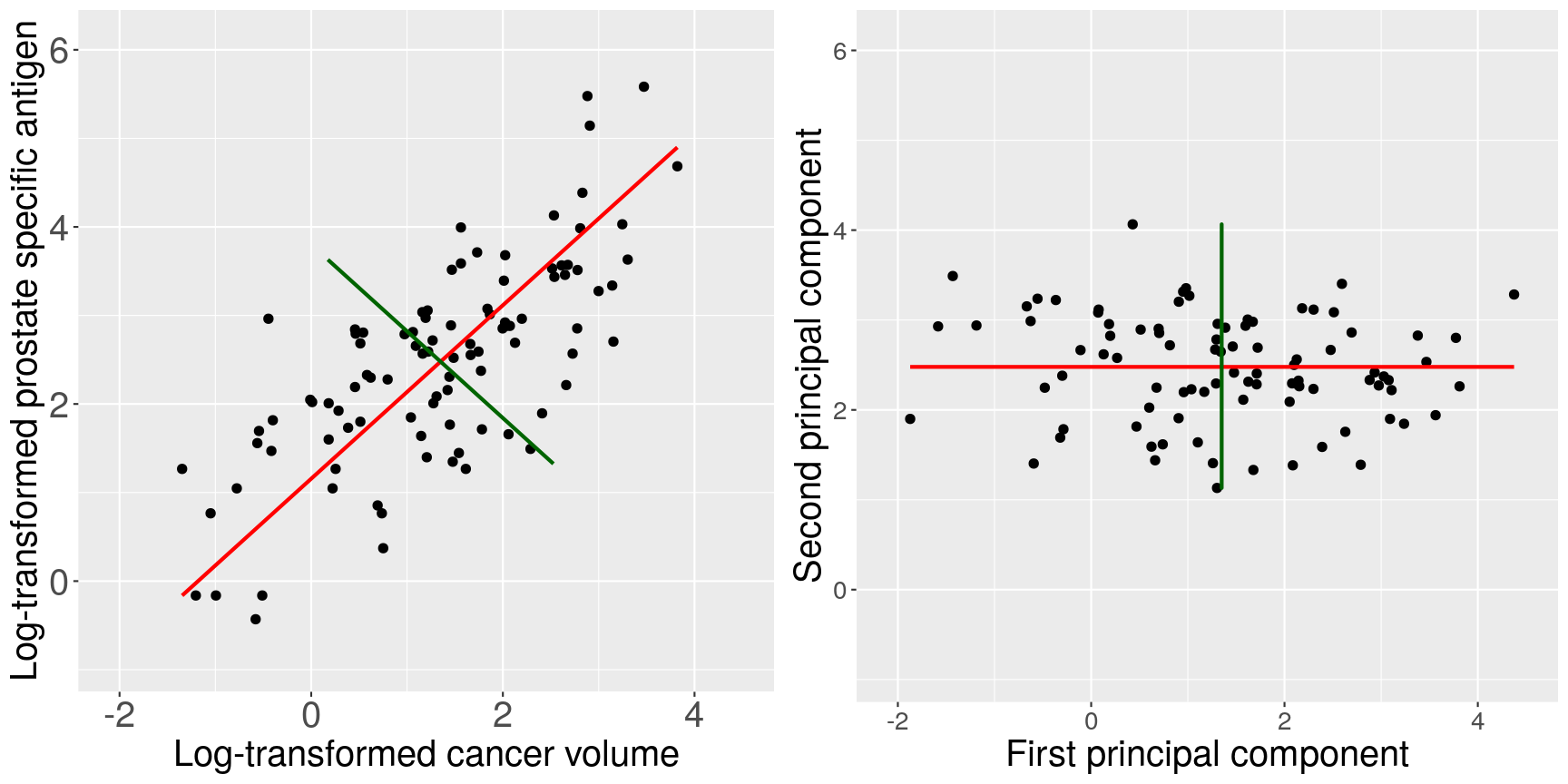

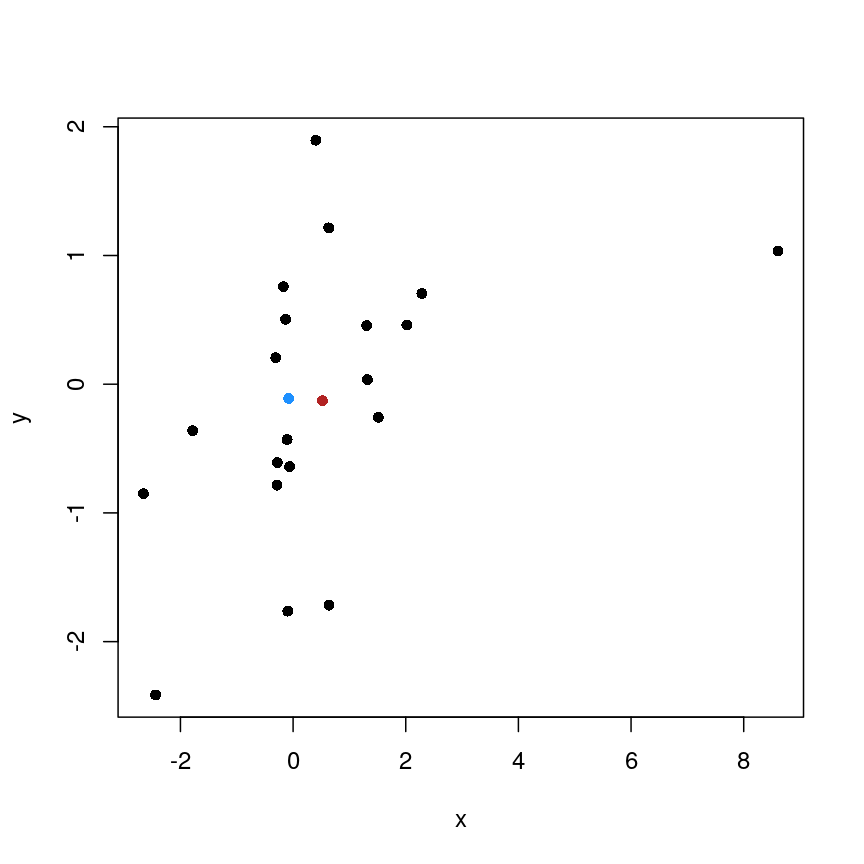

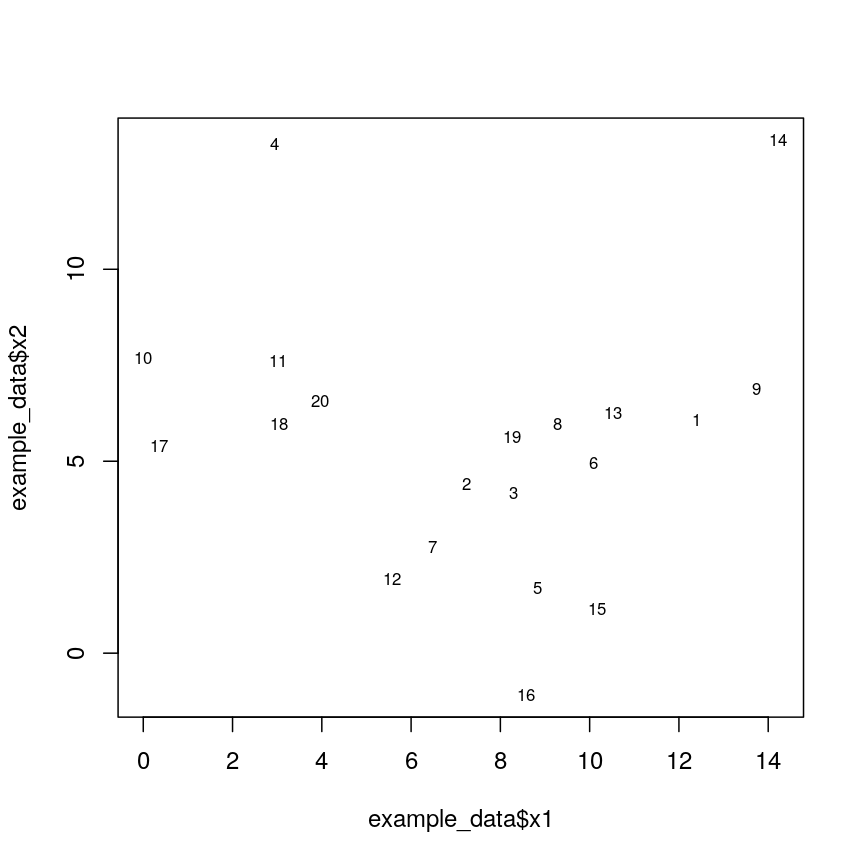

As well as many variables causing problems when working with high-dimensional data, having relatively few observations ($n$) compared to the number of features ($p$) causes additional challenges. To illustrate these challenges, imagine we are carrying out least squares regression on a dataset with 25 observations. Fitting a best fit line through these data produces a plot shown in the left-hand panel of the figure below.

However, imagine a situation in which the number of observations and features in a dataset are almost equal. In that situation the effective number of observations per feature is low. The result of fitting a best fit line through few observations can be seen in the right-hand panel below.

Scatter plot of two variables (x and y) from a data set with 25 observations (left) and 2 observations (right) with a fitted regression line (red).

In the first situation, the least squares regression line does not fit the data perfectly and there is some error around the regression line. But when there are only two observations, the regression line will pass through both points exactly, resulting in overfitting of the data. This suggests that carrying out least squares regression on a dataset with few data points per feature would result in difficulties in applying the resulting model to further datasets. This is a common problem when using regression on high-dimensional datasets.
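The contrast between the two situations can be reproduced with simulated data. This is a minimal sketch rather than the code used to draw the figure: the data, the seed, and the object names (fit_25, fit_2) are all made up for illustration.

```r
set.seed(1)  # arbitrary seed, only for reproducibility

# Simulate 25 observations from a noisy linear relationship (made-up data)
x <- rnorm(25)
y <- 2 * x + rnorm(25)

fit_25 <- lm(y ~ x)            # many observations for a single feature
fit_2 <- lm(y[1:2] ~ x[1:2])   # only two observations

# With 25 points the line cannot pass through every point,
# so the residual sum of squares is clearly above zero
sum(residuals(fit_25)^2)

# With 2 points the residuals are zero (up to rounding error):
# the line reproduces the data exactly, noise included
max(abs(residuals(fit_2)))
```

A model that reproduces its training data exactly has left no information with which to estimate the error, which is why it tends to generalise poorly.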

Another problem in carrying out regression on high-dimensional data is dealing with correlations between explanatory variables. The large numbers of features in these datasets makes high correlations between variables more likely. Let’s explore why high correlations might be an issue in a Challenge.

Challenge 3

- Use the cor() function to examine correlations between all variables in the prostate dataset. Are some pairs of variables highly correlated using a threshold of 0.75 for the correlation coefficients?

- Use the lm() function to fit univariate regression models to predict patient age using two variables that are highly correlated as predictors. Which of these variables are statistically significant predictors of age? Hint: the summary() function can help here.

- Fit a multiple linear regression model predicting patient age using both variables. What happened?

Solution

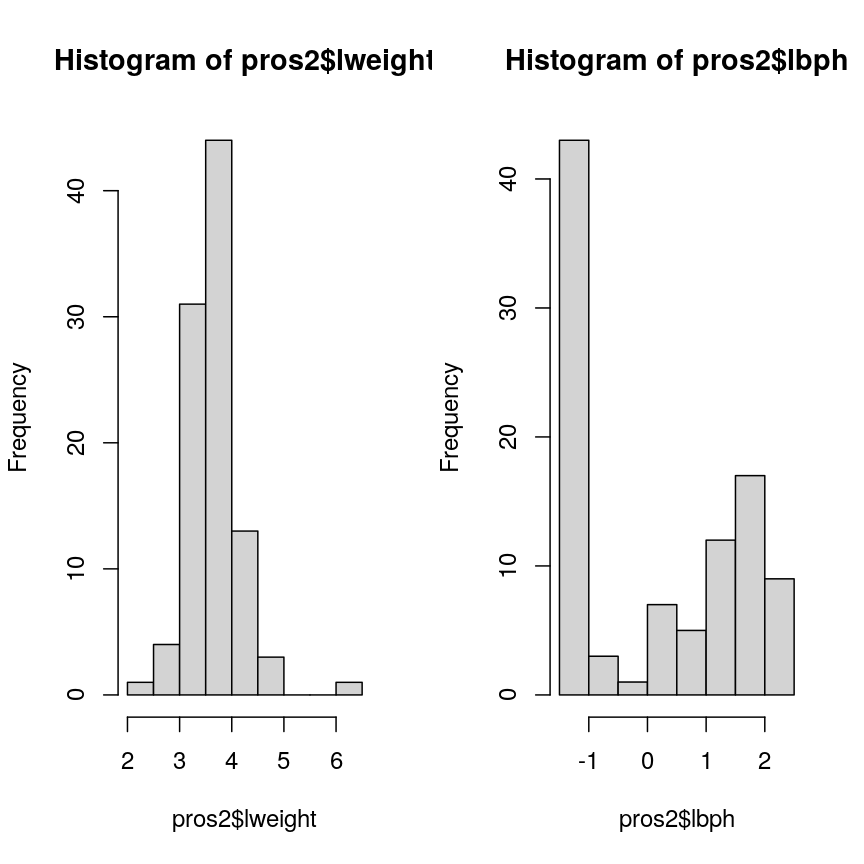

Create a correlation matrix of all variables in the prostate dataset

cor(prostate)

           lcavol      lweight       age         lbph         svi          lcp
lcavol  1.0000000  0.194128286 0.2249999  0.027349703  0.53884500  0.675310484
lweight 0.1941283  1.000000000 0.3075286  0.434934636  0.10877851  0.100237795
age     0.2249999  0.307528614 1.0000000  0.350185896  0.11765804  0.127667752
lbph    0.0273497  0.434934636 0.3501859  1.000000000 -0.08584324 -0.006999431
svi     0.5388450  0.108778505 0.1176580 -0.085843238  1.00000000  0.673111185
lcp     0.6753105  0.100237795 0.1276678 -0.006999431  0.67311118  1.000000000
gleason 0.4324171 -0.001275658 0.2688916  0.077820447  0.32041222  0.514830063
pgg45   0.4336522  0.050846821 0.2761124  0.078460018  0.45764762  0.631528245
lpsa    0.7344603  0.354120390 0.1695928  0.179809410  0.56621822  0.548813169
             gleason      pgg45      lpsa
lcavol   0.432417056 0.43365225 0.7344603
lweight -0.001275658 0.05084682 0.3541204
age      0.268891599 0.27611245 0.1695928
lbph     0.077820447 0.07846002 0.1798094
svi      0.320412221 0.45764762 0.5662182
lcp      0.514830063 0.63152825 0.5488132
gleason  1.000000000 0.75190451 0.3689868
pgg45    0.751904512 1.00000000 0.4223159
lpsa     0.368986803 0.42231586 1.0000000

round(cor(prostate), 2) # rounding helps to visualise the correlations

        lcavol lweight  age  lbph   svi   lcp gleason pgg45 lpsa
lcavol    1.00    0.19 0.22  0.03  0.54  0.68    0.43  0.43 0.73
lweight   0.19    1.00 0.31  0.43  0.11  0.10    0.00  0.05 0.35
age       0.22    0.31 1.00  0.35  0.12  0.13    0.27  0.28 0.17
lbph      0.03    0.43 0.35  1.00 -0.09 -0.01    0.08  0.08 0.18
svi       0.54    0.11 0.12 -0.09  1.00  0.67    0.32  0.46 0.57
lcp       0.68    0.10 0.13 -0.01  0.67  1.00    0.51  0.63 0.55
gleason   0.43    0.00 0.27  0.08  0.32  0.51    1.00  0.75 0.37
pgg45     0.43    0.05 0.28  0.08  0.46  0.63    0.75  1.00 0.42
lpsa      0.73    0.35 0.17  0.18  0.57  0.55    0.37  0.42 1.00

As seen above, some variables are highly correlated. In particular, the correlation between gleason and pgg45 is equal to 0.75.

Fitting univariate regression models to predict age using gleason and pgg45 as predictors.

model_gleason <- lm(age ~ gleason, data = prostate)
model_pgg45 <- lm(age ~ pgg45, data = prostate)

Check which covariates have a significant effect

summary(model_gleason)

Call:
lm(formula = age ~ gleason, data = prostate)
Residuals:
    Min      1Q  Median      3Q     Max
-20.780  -3.552   1.448   4.220  13.448
Coefficients:
            Estimate Std. Error t value Pr(>|t|)
(Intercept)   45.146      6.918   6.525 3.29e-09 ***
gleason        2.772      1.019   2.721  0.00774 **
---
Signif. codes:  0 '***' 0.001 '**' 0.01 '*' 0.05 '.' 0.1 ' ' 1
Residual standard error: 7.209 on 95 degrees of freedom
Multiple R-squared:  0.0723, Adjusted R-squared:  0.06254
F-statistic: 7.404 on 1 and 95 DF,  p-value: 0.007741

summary(model_pgg45)

Call:
lm(formula = age ~ pgg45, data = prostate)
Residuals:
     Min       1Q   Median       3Q      Max
-21.0889  -3.4533   0.9111   4.4534  15.1822
Coefficients:
            Estimate Std. Error t value Pr(>|t|)
(Intercept) 62.08890    0.96758   64.17  < 2e-16 ***
pgg45        0.07289    0.02603    2.80  0.00619 **
---
Signif. codes:  0 '***' 0.001 '**' 0.01 '*' 0.05 '.' 0.1 ' ' 1
Residual standard error: 7.193 on 95 degrees of freedom
Multiple R-squared:  0.07624, Adjusted R-squared:  0.06651
F-statistic:  7.84 on 1 and 95 DF,  p-value: 0.006189

Based on these results we conclude that both gleason and pgg45 have a statistically significant univariate effect (also referred to as a marginal effect) as predictors of age (5% significance level).

Fitting a multiple regression model using both gleason and pgg45 as predictors

model_multivar <- lm(age ~ gleason + pgg45, data = prostate)
summary(model_multivar)

Call:
lm(formula = age ~ gleason + pgg45, data = prostate)
Residuals:
    Min      1Q  Median      3Q     Max
-20.927  -3.677   1.323   4.323  14.420
Coefficients:
            Estimate Std. Error t value Pr(>|t|)
(Intercept) 52.95548    9.74316   5.435  4.3e-07 ***
gleason      1.45363    1.54299   0.942    0.349
pgg45        0.04490    0.03951   1.137    0.259
---
Signif. codes:  0 '***' 0.001 '**' 0.01 '*' 0.05 '.' 0.1 ' ' 1
Residual standard error: 7.198 on 94 degrees of freedom
Multiple R-squared:  0.08488, Adjusted R-squared:  0.06541
F-statistic: 4.359 on 2 and 94 DF,  p-value: 0.01547

Although gleason and pgg45 have statistically significant univariate effects, this is no longer the case when both variables are simultaneously included as covariates in a multiple regression model.

Including highly correlated variables such as gleason and pgg45 simultaneously in the same regression model can lead to problems in fitting the model and interpreting its output. Although each variable appears to be associated with the response individually, the model cannot distinguish the contribution of each variable. This can also increase the risk of over-fitting, since the model may fit redundant variables to noise rather than true relationships.
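One way to see why the model struggles is to look at the standard errors of the coefficients. The sketch below uses simulated data rather than the prostate dataset; the variables x1, x2 and y, the seed, and the noise levels are all assumptions chosen so that the two predictors are almost identical.

```r
set.seed(1)  # arbitrary seed, only for reproducibility
n <- 100

# Two nearly identical predictors and an outcome that depends on both
x1 <- rnorm(n)
x2 <- x1 + rnorm(n, sd = 0.1)
y <- x1 + x2 + rnorm(n)

fit_x1 <- lm(y ~ x1)          # x1 on its own
fit_both <- lm(y ~ x1 + x2)   # x1 and x2 together

# Standard error of the x1 coefficient in each model
se_alone <- coef(summary(fit_x1))["x1", "Std. Error"]
se_both <- coef(summary(fit_both))["x1", "Std. Error"]

cor(x1, x2)          # close to 1
se_both / se_alone   # factor by which the standard error is inflated
```

When both predictors are included, the uncertainty about the x1 coefficient grows considerably, because the data contain little information on what happens when x1 changes while x2 stays fixed.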

To allow the information from variables to be included in the same model despite high levels of correlation, we can use dimensionality reduction methods to collapse multiple variables into a single new variable (we will explore this dataset further in the dimensionality reduction lesson). We can also use modifications to linear regression like regularisation, which we will discuss in the lesson on high-dimensional regression.

What statistical methods are used to analyse high-dimensional data?

We have discussed so far that high-dimensional data analysis can be challenging since variables are difficult to visualise, leading to challenges identifying relationships between variables and suitable response variables; we may have relatively few observations compared to features, leading to over-fitting; and features may be highly correlated, leading to challenges interpreting models. We therefore require alternative approaches to examine whether, for example, groups of observations show similar characteristics and whether these groups may relate to other features in the data (e.g. phenotype in genetics data).

In this course, we will cover four methods that help in dealing with high-dimensional data: (1) regression with numerous outcome variables, (2) regularised regression, (3) dimensionality reduction, and (4) clustering. Here are some examples of when each of these approaches may be used:

- Regression with numerous outcomes refers to situations in which there are many variables of a similar kind (expression values for many genes, methylation levels for many sites in the genome) and when one is interested in assessing whether these variables are associated with a specific covariate of interest, such as experimental condition or age. In this case, multiple univariate regression models (one per outcome, using the covariate of interest as predictor) could be fitted independently. In the context of high-dimensional molecular data, a typical example is differential gene expression analysis. We will explore this type of analysis in the Regression with many outcomes episode.

- Regularisation (also known as regularised regression or penalised regression) is typically used to fit regression models when there is a single outcome variable of interest but the number of potential predictors is large, e.g. there are more predictors than observations. Regularisation can help to prevent overfitting and may be used to identify a small subset of predictors that are associated with the outcome of interest. For example, regularised regression has often been used when building epigenetic clocks, where methylation values across several thousands of genomic sites are used to predict chronological age. We will explore this in more detail in the Regularised regression episode.

-

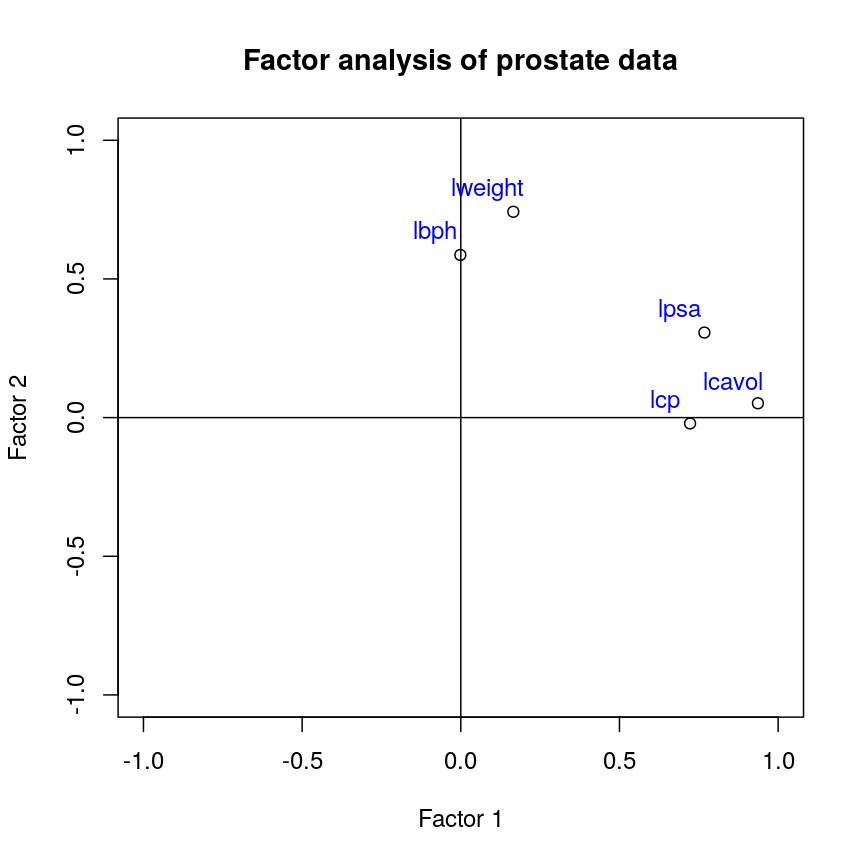

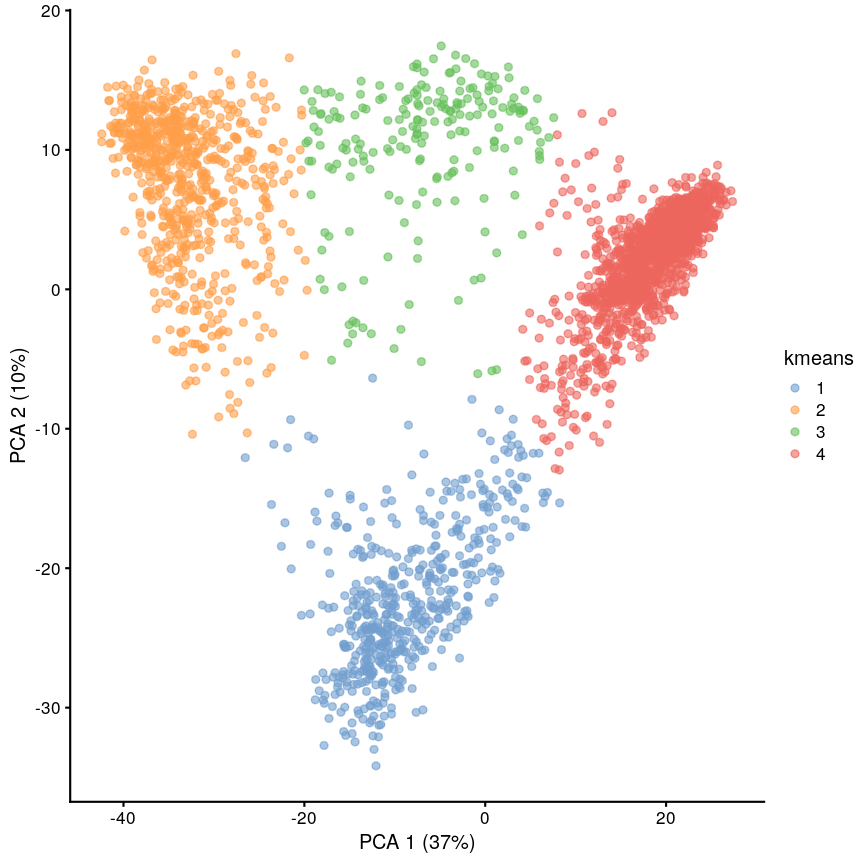

Dimensionality reduction is commonly used on high-dimensional datasets for data exploration or as a preprocessing step prior to other downstream analyses. For instance, a low-dimensional visualisation of a gene expression dataset may be used to inform quality control steps (e.g. are there any anomalous samples?). This course contains two episodes that explore dimensionality reduction techniques: Principal component analysis and Factor analysis.

-

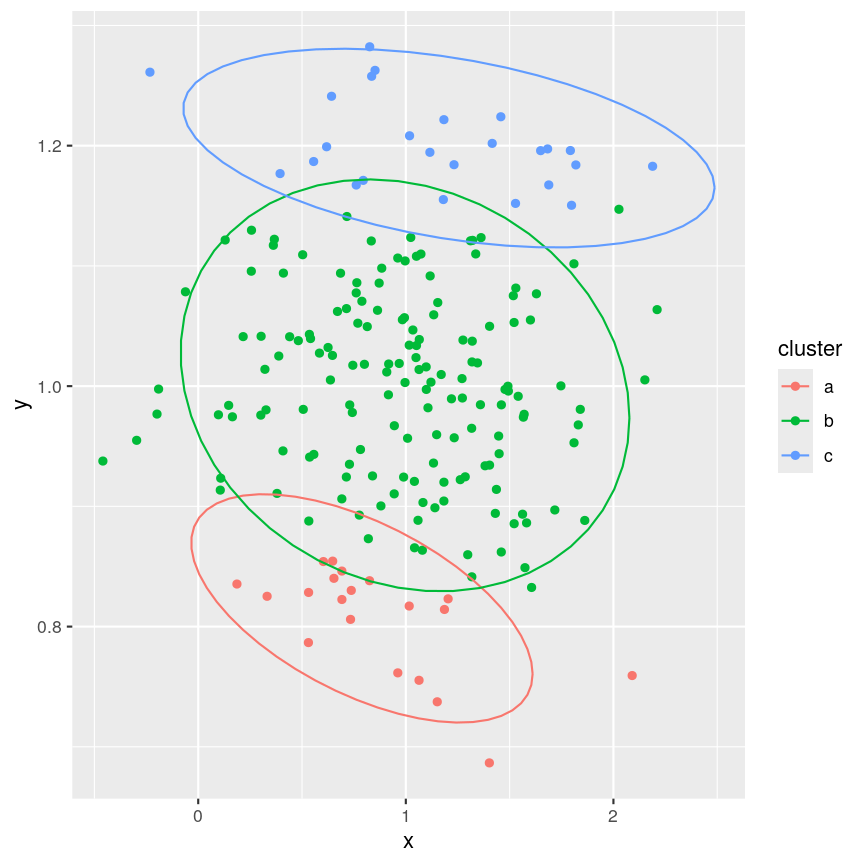

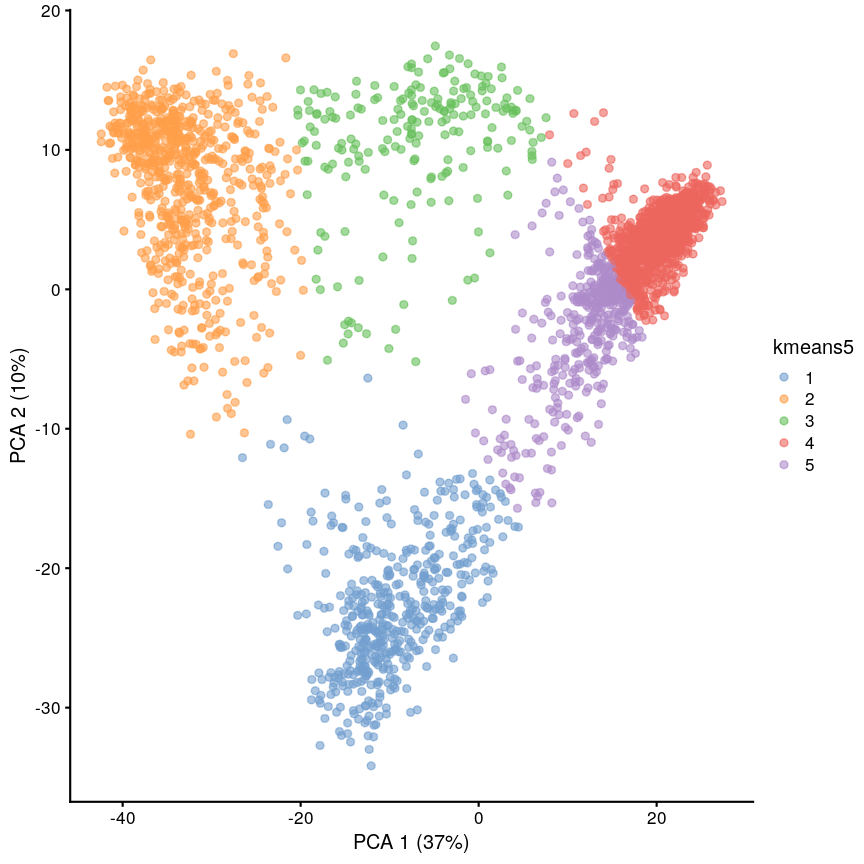

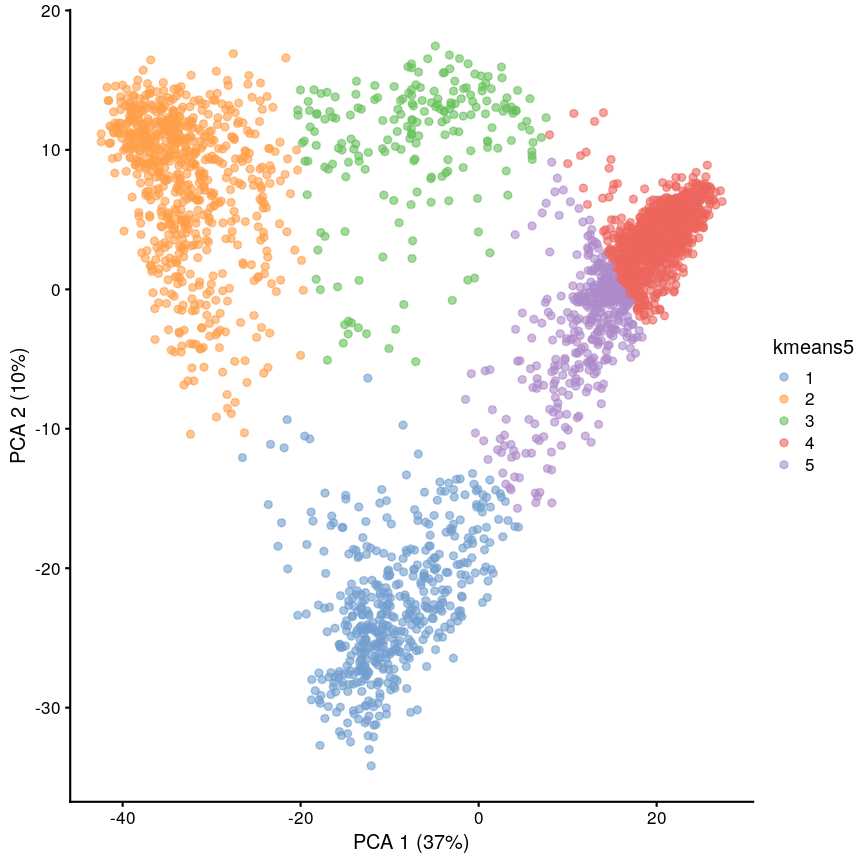

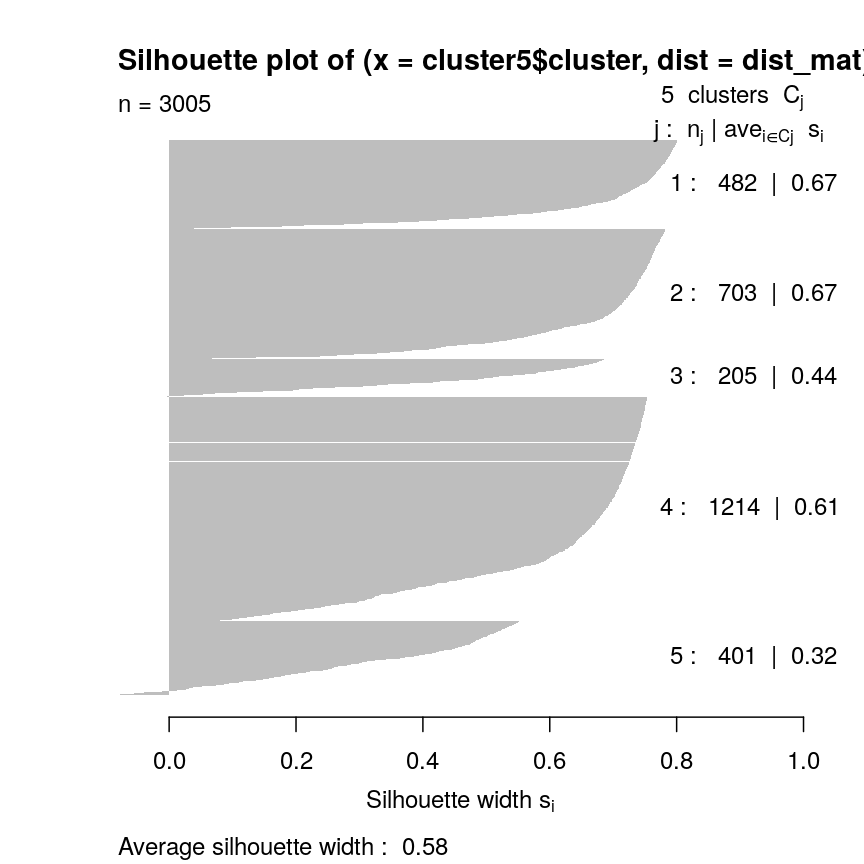

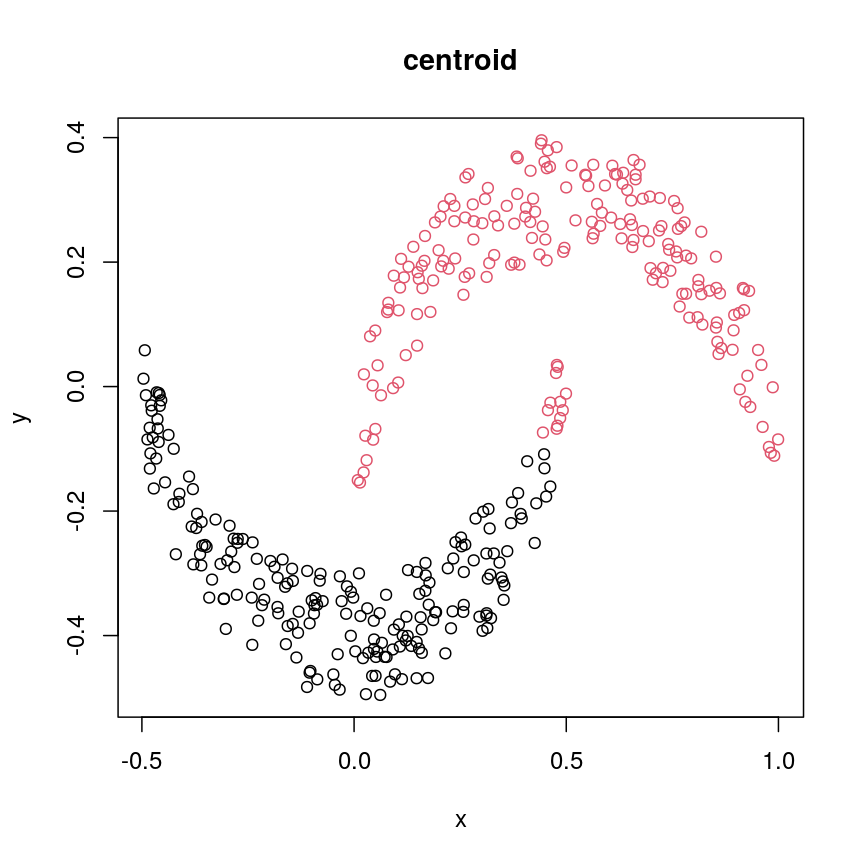

Clustering methods can be used to identify potential grouping patterns within a dataset. A popular example is the identification of distinct cell types through clustering cells with similar gene expression patterns. The K-means episode will explore a specific method to perform clustering analysis.
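As a rough preview of the last two approaches, the sketch below applies principal component analysis and k-means clustering to simulated data using base R only. The data, group structure, and object names are made up, and later episodes may use different functions and settings.

```r
set.seed(1)  # arbitrary seed, only for reproducibility

# Made-up data: 20 samples (rows) by 50 features (columns), in two groups of
# 10 samples whose means differ by 3 in every feature
group <- rep(c(0, 1), each = 10)
toy <- matrix(rnorm(20 * 50), nrow = 20) + 3 * group

# Dimensionality reduction: principal component analysis
pca <- prcomp(toy)
dim(pca$x)   # one row of component scores per sample

# Clustering: k-means with two clusters on the first two components
km <- kmeans(pca$x[, 1:2], centers = 2)
table(km$cluster, group)   # compare clusters with the simulated groups
```

Here the 50 features are summarised by a couple of components, and clustering is then carried out in that low-dimensional space.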

Using Bioconductor to access high-dimensional data in the biosciences

In this workshop, we will look at statistical methods that can be used to visualise and analyse high-dimensional biological data using packages available from Bioconductor, open source software for analysing high throughput genomic data. Bioconductor contains useful packages and example datasets as shown on the website https://www.bioconductor.org/.

Bioconductor packages can be installed and used in R using the BiocManager package. Let’s load the minfi package from Bioconductor (a package for analysing Illumina Infinium DNA methylation arrays).

library("minfi")
browseVignettes("minfi")

We can explore these packages by browsing the vignettes provided in Bioconductor. Bioconductor has various packages that can be used to load and examine datasets in R that have been made available in Bioconductor, usually along with an associated paper or package.

Next, we load the methylation dataset, which represents data collected using Illumina Infinium methylation arrays, which are used to examine methylation across the human genome. These data include information collected from the assay as well as associated metadata from individuals from whom samples were taken.

library("here")
library("ComplexHeatmap")
methylation <- readRDS(here("data/methylation.rds"))
head(colData(methylation))

DataFrame with 6 rows and 14 columns
                    Sample_Well Sample_Name    purity         Sex       Age
                    <character> <character> <integer> <character> <integer>
201868500150_R01C01         A07     PCA0612        94           M        39
201868500150_R03C01         C07   NKpan2510        95           M        49
201868500150_R05C01         E07      WB1148        95           M        20
201868500150_R07C01         G07       B0044        97           M        49
201868500150_R08C01         H07   NKpan1869        95           F        33
201868590193_R02C01         B03   NKpan1850        93           F        21
                    weight_kg  height_m       bmi    bmi_clas Ethnicity_wide
                    <numeric> <numeric> <numeric> <character>    <character>
201868500150_R01C01   88.4505    1.8542   25.7269  Overweight          Mixed
201868500150_R03C01   81.1930    1.6764   28.8911  Overweight  Indo-European
201868500150_R05C01   80.2858    1.7526   26.1381  Overweight  Indo-European
201868500150_R07C01   82.5538    1.7272   27.6727  Overweight  Indo-European
201868500150_R08C01   87.5433    1.7272   29.3452  Overweight  Indo-European
201868590193_R02C01   87.5433    1.6764   31.1507       Obese          Mixed
                       Ethnic_self      smoker       Array       Slide
                       <character> <character> <character>   <numeric>
201868500150_R01C01       Hispanic          No      R01C01 2.01869e+11
201868500150_R03C01      Caucasian          No      R03C01 2.01869e+11
201868500150_R05C01        Persian          No      R05C01 2.01869e+11
201868500150_R07C01      Caucasian          No      R07C01 2.01869e+11
201868500150_R08C01      Caucasian          No      R08C01 2.01869e+11
201868590193_R02C01 Finnish/Creole          No      R02C01 2.01869e+11

methyl_mat <- t(assay(methylation))
## calculate correlations between features (columns) of the matrix
cor_mat <- cor(methyl_mat)

cor_mat[1:10, 1:10] # print the top-left corner of the correlation matrix

The assay() function creates a matrix-like object where rows represent probes for genes and columns represent samples. We transpose this matrix with t() so that features are stored in columns, because cor() computes correlations between columns. We then calculate correlations between features in the methylation dataset and examine the first 100 cells of this matrix. The size of the dataset makes it difficult to examine in full, a common challenge in analysing high-dimensional genomics data.
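To get a feel for how quickly a correlation matrix grows, here is a minimal sketch using a small simulated matrix in place of the methylation data. The objects toy_mat and toy_cor are made up; the count of 5000 features is the number of rows reported for this dataset later in the lesson.

```r
set.seed(1)  # arbitrary seed, only for reproducibility

# Made-up matrix with 37 samples (rows) and 50 features (columns)
toy_mat <- matrix(rnorm(37 * 50), nrow = 37, ncol = 50)

# cor() correlates the columns, giving one row and one column per feature
toy_cor <- cor(toy_mat)
dim(toy_cor)
# [1] 50 50

# Number of entries in the correlation matrix for 5000 features
n_entries <- 5000^2
n_entries   # 25 million entries
```

Printing only a corner of the matrix, as done with cor_mat[1:10, 1:10], is therefore a practical necessity.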

Further reading

- Bühlmann, P. & van de Geer, S. (2011) Statistics for High-Dimensional Data. Springer, London.

- Bühlmann, P., Kalisch, M. & Meier, L. (2014) High-dimensional statistics with a view toward applications in biology. Annual Review of Statistics and Its Application.

- Johnstone, I.M. & Titterington, D.M. (2009) Statistical challenges of high-dimensional data. Philosophical Transactions of the Royal Society A 367:4237-4253.

- Bioconductor methylation array analysis vignette.

- The Introduction to Machine Learning with Python course covers additional methods that could be used to analyse high-dimensional data. See Introduction to machine learning, Tree models and Neural networks. Some related (and important!) content is also available in Responsible machine learning.

Other resources suggested by former students

- Josh Starmer’s YouTube channel.

Key Points

High-dimensional data are data in which the number of features, $p$, is close to or larger than the number of observations, $n$.

These data are becoming more common in the biological sciences due to increases in data storage capabilities and computing power.

Standard statistical methods, such as linear regression, run into difficulties when analysing high-dimensional data.

In this workshop, we will explore statistical methods used for analysing high-dimensional data using datasets available on Bioconductor.

Regression with many outcomes

Overview

Teaching: 70 min

Exercises: 50 min

Questions

How can we apply linear regression in a high-dimensional setting?

How can we benefit from the fact that we have many outcomes?

How can we control for the fact that we do many tests?

Objectives

Perform and critically analyse high-dimensional regression.

Understand methods for shrinkage of noise parameters in high-dimensional regression.

Perform multiple testing adjustment.

DNA methylation data

For the following few episodes, we will be working with human DNA methylation data from flow-sorted blood samples, described in data. DNA methylation assays measure, for each of many sites in the genome, the proportion of DNA that carries a methyl mark (a chemical modification that does not alter the DNA sequence). In this case, the methylation data come in the form of a matrix of normalised methylation levels (M-values), where negative values correspond to unmethylated DNA and positive values correspond to methylated DNA. Along with this, we have a number of sample phenotypes (e.g. age in years, BMI).

Let’s read in the data for this episode:

library("here")

library("minfi")

methylation <- readRDS(here("data/methylation.rds"))

Note: the code that we used to download these data from their source is available here.

methylation is actually a special Bioconductor SummarizedExperiment object that summarises lots of different information about the data. These objects are very useful for storing all of the information about a dataset in a high-throughput context. The structure of SummarizedExperiment objects is described in the vignettes on Bioconductor. Here, we show how to extract the information for analysis.

We can extract

- the dimensions of the dataset using dim(). Importantly, in these objects and data structures for computational biology in R generally, observations are stored as columns and features (in this case, sites in the genome) are stored as rows. This is in contrast to usual tabular data, where features or variables are stored as columns and observations are stored as rows;

- assays (normalised methylation levels here), using assay();

- sample-level information using colData().

dim(methylation)

[1] 5000 37

You can see in this output that this object has a dim() of $5000 \times 37$, meaning it has 5000 features and 37 observations. To extract the matrix of methylation M-values, we can use the assay() function.

methyl_mat <- assay(methylation)
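Because this row and column convention is easy to mix up, the sketch below uses a tiny made-up matrix (not the methylation data) to show the features-by-samples layout and how t() converts it to the usual tabular layout. The names toy_features and toy_tabular are our own.

```r
# Made-up matrix laid out like methyl_mat: features in rows, samples in columns
toy_features <- matrix(
  1:12,
  nrow = 4, ncol = 3,
  dimnames = list(paste0("feature", 1:4), paste0("sample", 1:3))
)
dim(toy_features)
# [1] 4 3

# t() switches to the usual tabular layout: samples in rows, features in columns
toy_tabular <- t(toy_features)
dim(toy_tabular)
# [1] 3 4
```

Many base R modelling functions expect the tabular layout, so it is worth checking dim() before passing a matrix on.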

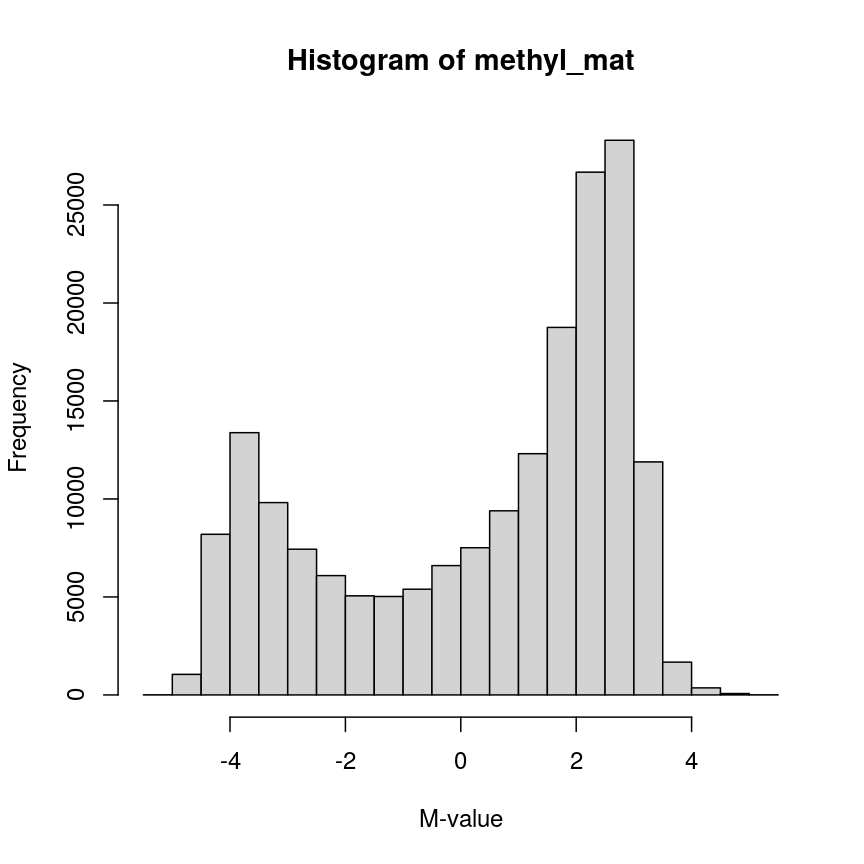

The distribution of these M-values looks like this:

hist(methyl_mat, xlab = "M-value")

Methylation levels are generally bimodally distributed.

You can see that there are two peaks in this distribution, corresponding to features which are largely unmethylated and methylated, respectively.

Similarly, we can examine the colData(), which represents the sample-level metadata we have relating to these data. In this case, the metadata, phenotypes, and groupings in the colData look like this for the first 6 samples:

head(colData(methylation))

| Sample_Well | Sample_Name | purity | Sex | Age | weight_kg | height_m | bmi | bmi_clas | Ethnicity_wide | Ethnic_self | smoker | Array | Slide |
|---|---|---|---|---|---|---|---|---|---|---|---|---|---|
| A07 | PCA0612 | 94 | M | 39 | 88.45051 | 1.8542 | 25.72688 | Overweight | Mixed | Hispanic | No | R01C01 | 201868500150 |
| C07 | NKpan2510 | 95 | M | 49 | 81.19303 | 1.6764 | 28.89106 | Overweight | Indo-European | Caucasian | No | R03C01 | 201868500150 |
| E07 | WB1148 | 95 | M | 20 | 80.28585 | 1.7526 | 26.13806 | Overweight | Indo-European | Persian | No | R05C01 | 201868500150 |
| G07 | B0044 | 97 | M | 49 | 82.55381 | 1.7272 | 27.67272 | Overweight | Indo-European | Caucasian | No | R07C01 | 201868500150 |
| H07 | NKpan1869 | 95 | F | 33 | 87.54333 | 1.7272 | 29.34525 | Overweight | Indo-European | Caucasian | No | R08C01 | 201868500150 |
| B03 | NKpan1850 | 93 | F | 21 | 87.54333 | 1.6764 | 31.15070 | Obese | Mixed | Finnish/Creole | No | R02C01 | 201868590193 |

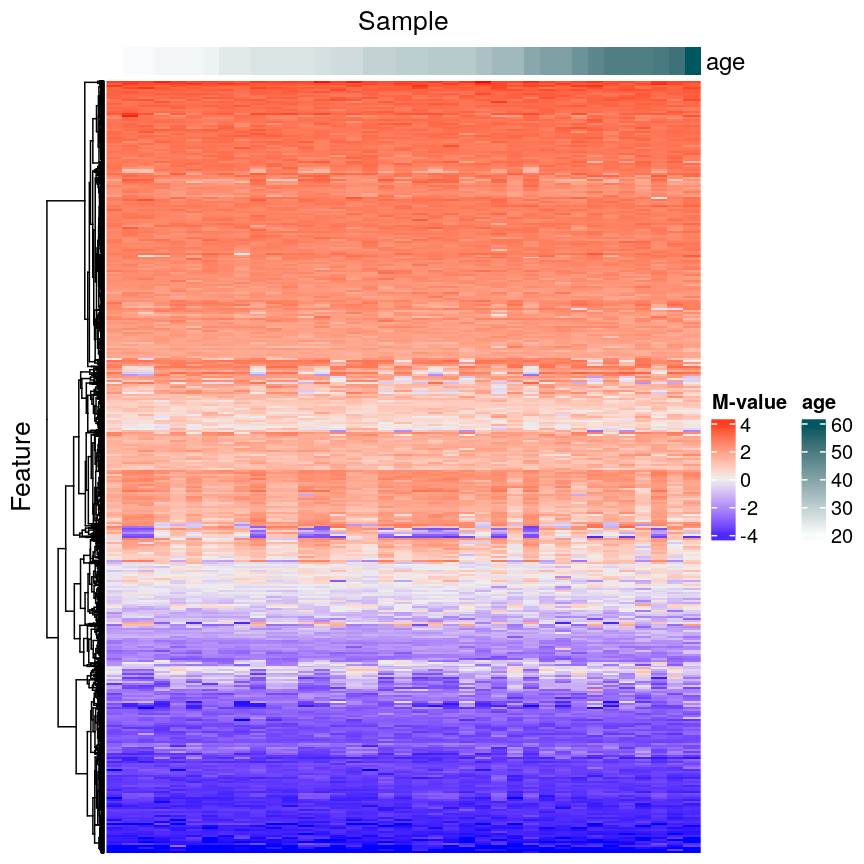

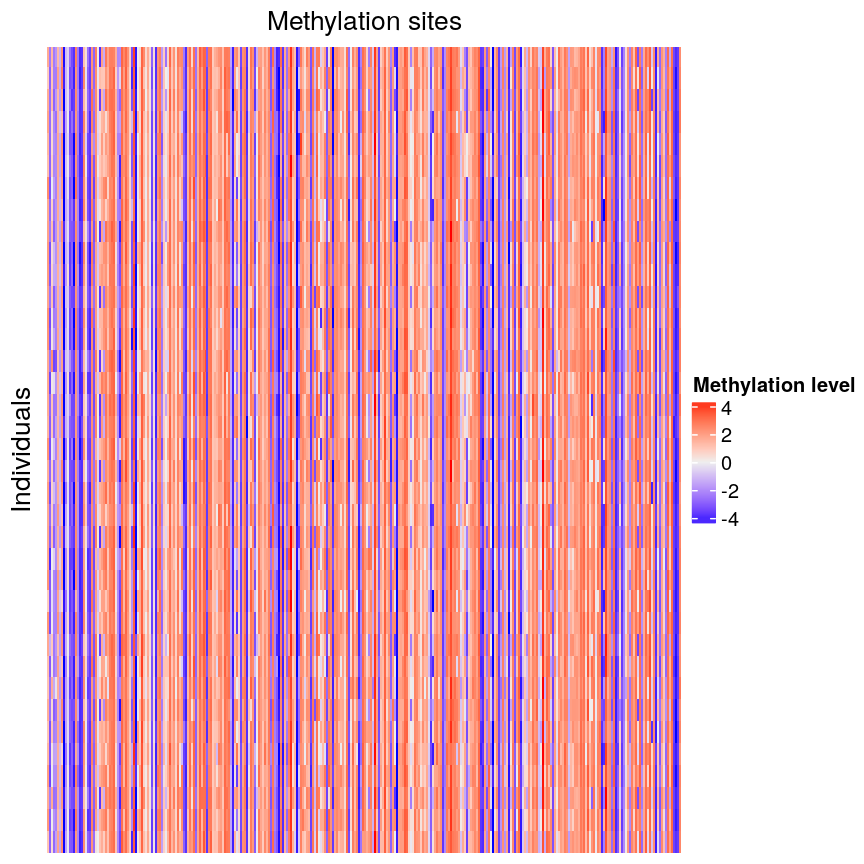

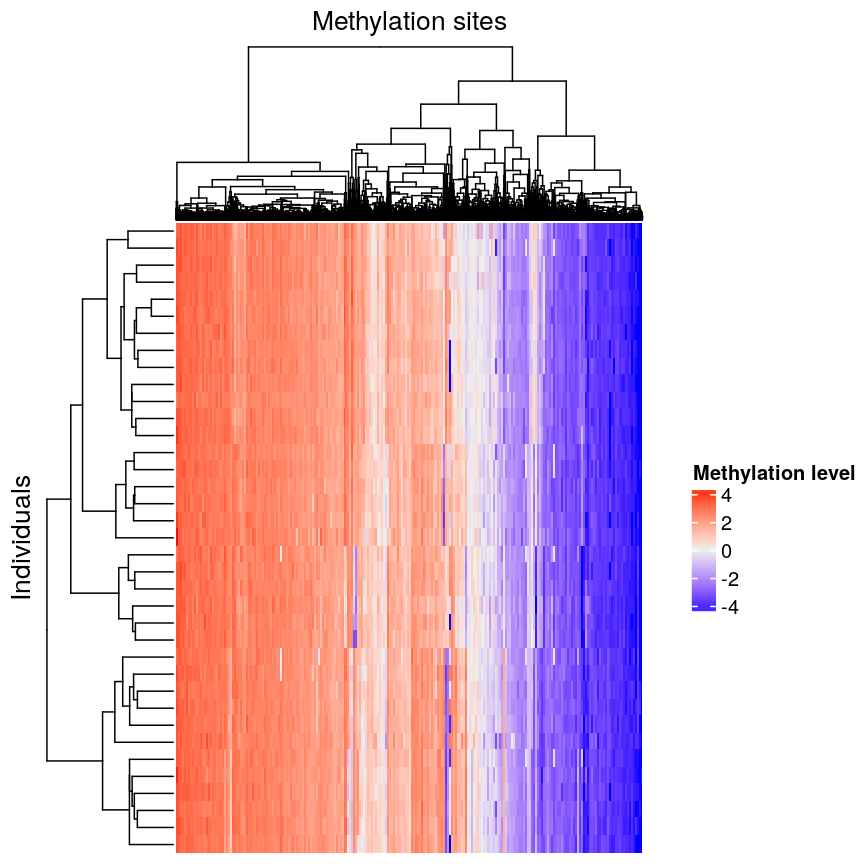

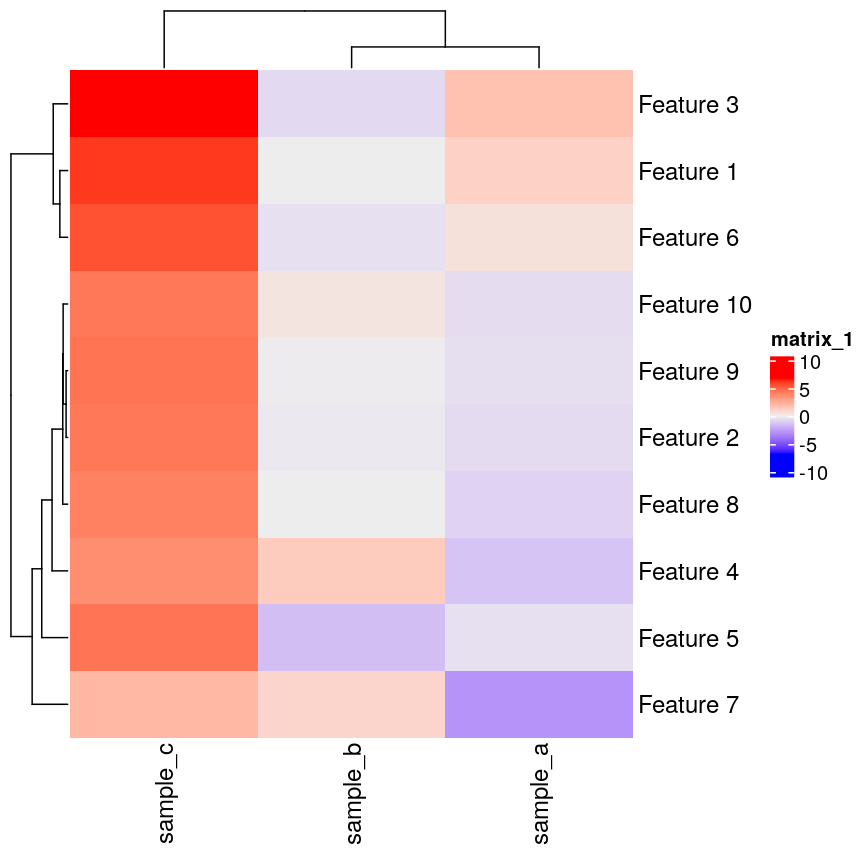

In this episode, we will focus on the association between age and methylation. The following heatmap summarises age and methylation levels available in the methylation dataset:

library("ComplexHeatmap")

age <- methylation$Age

# sort methylation values by age
order <- order(age)
age_ord <- age[order]
methyl_mat_ord <- methyl_mat[, order]

# plot heatmap
Heatmap(methyl_mat_ord,
        cluster_columns = FALSE,
        show_row_names = FALSE,
        show_column_names = FALSE,
        name = "M-value",
        row_title = "Feature",
        column_title = "Sample",
        top_annotation = columnAnnotation(age = age_ord))

Heatmap of methylation values across all features.

Challenge 1

Why can we not just fit many linear regression models relating every combination of feature (colData and assays) and draw conclusions by associating all variables with significant model p-values?

Solution

There are a number of problems that this kind of approach presents. For example:

- If we perform 5000 tests for each of 14 variables, even if there were no true associations in the data, we’d be likely to observe some strong spurious associations that arise just from random noise.

- We may not have a representative sample for each of these covariates. For example, we may have very small sample sizes for some ethnicities, leading to spurious findings.

- Without a research question in mind when creating a model, it’s not clear how we can interpret each model, and rationalising the results after the fact can be dangerous; it’s easy to make up a “story” that isn’t grounded in anything but the fact that we have significant findings.
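The first point can be illustrated with a small simulation. This is a minimal sketch on made-up data: toy_age is a hypothetical covariate for 37 samples, and each simulated "feature" is pure noise, so any significant result is a false positive.

```r
set.seed(1)  # arbitrary seed, only for reproducibility

# Made-up covariate for 37 samples and 1000 pure-noise "features"
toy_age <- sample(20:60, 37, replace = TRUE)
n_tests <- 1000

# p-value for the slope when regressing noise on the covariate
p_values <- replicate(n_tests, {
  noise <- rnorm(37)
  coef(summary(lm(noise ~ toy_age)))["toy_age", "Pr(>|t|)"]
})

# Number of "significant" results at the 5% level, despite no true association
sum(p_values < 0.05)
```

With a 5% threshold we expect roughly 5% of null tests to appear significant by chance, which is why multiple testing adjustment is needed when many models are fitted.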

In general, it is scientifically interesting to explore two modelling problems using the three types of data:

- Predicting methylation levels using age as a predictor. In this case, we would have 5000 outcomes (methylation levels across the genome) and a single covariate (age).

- Predicting age using methylation levels as predictors. In this case, we would have a single outcome (age) which will be predicted using 5000 covariates (methylation levels across the genome).

The examples in this episode will focus on the first type of problem, whilst the next episode will focus on the second.

Measuring DNA Methylation

DNA methylation is an epigenetic modification of DNA. Generally, we are interested in the proportion of methylation at many sites or regions in the genome. DNA methylation microarrays, as we are using here, measure DNA methylation using two-channel microarrays, where one channel captures signal from methylated DNA and the other captures unmethylated signal. These data can be summarised as “Beta values” ($\beta$ values), each of which is the ratio of the methylated signal to the total signal (methylated plus unmethylated). The $\beta$ value for site $i$ is calculated as

\[\beta_i = \frac{m_i}{u_i + m_i}\]

where $m_i$ is the methylated signal for site $i$ and $u_i$ is the unmethylated signal for site $i$. $\beta$ values take on a value in the range $[0, 1]$, with 0 representing a completely unmethylated site and 1 representing a completely methylated site.

The M-values we use here are the $\log_2$ ratio of methylated versus unmethylated signal:

\[M_i = \log_2\left(\frac{m_i}{u_i}\right)\]

M-values are not bounded to an interval as Beta values are, and therefore can be easier to work with in statistical models.
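The two definitions are linked: since $\beta_i = m_i / (u_i + m_i)$, it follows that $m_i / u_i = \beta_i / (1 - \beta_i)$, so $M_i = \log_2(\beta_i / (1 - \beta_i))$. The sketch below checks this numerically. The helper functions beta_value(), m_value() and beta_to_m() are hypothetical names written for this example, not functions from minfi, and the signal intensities are made up.

```r
# Hypothetical helpers: m is the methylated signal, u the unmethylated signal
beta_value <- function(m, u) m / (u + m)
m_value <- function(m, u) log2(m / u)

# Converting a Beta value to an M-value uses m / u = beta / (1 - beta)
beta_to_m <- function(beta) log2(beta / (1 - beta))

beta_value(m = 300, u = 100)
# [1] 0.75
m_value(m = 300, u = 100)       # log2(3), about 1.585
beta_to_m(0.75)                 # the same value, computed from the Beta value

# Beta values of 0.2, 0.5 and 0.8 map to M-values of about -2, 0 and 2
beta_to_m(c(0.2, 0.5, 0.8))
```

A Beta value of 0.5 corresponds to an M-value of 0, which matches the earlier description of negative M-values as mostly unmethylated and positive M-values as mostly methylated.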

Regression with many outcomes

In high-throughput studies, it is common to have one or more phenotypes or groupings that we want to relate to features of interest (e.g. gene expression, DNA methylation levels). In general, we want to identify differences in the features of interest that are related to a phenotype or grouping of our samples. Identifying features of interest that vary along with phenotypes or groupings can allow us to understand how phenotypes arise or manifest. Analysis of this type is sometimes referred to using the term differential analysis.

For example, we might want to identify genes that are expressed at a higher level in mutant mice relative to wild-type mice to understand the effect of a mutation on cellular phenotypes. Alternatively, we might have samples from a set of patients, and wish to identify epigenetic features that are different in young patients relative to old patients, to help us understand how ageing manifests.

Using linear regression, it is possible to identify differences like these. However, high-dimensional data like the ones we’re working with require some special considerations. A first consideration, as we saw above, is that there are far too many features to fit each one-by-one as we might do when analysing low-dimensional datasets (for example using lm() on each feature and checking the linear model assumptions). A second consideration is that statistical approaches may behave slightly differently when applied to very high-dimensional data, compared to low-dimensional data. A third consideration is the speed at which we can actually compute statistics for data this large: methods optimised for low-dimensional data may be very slow when applied to high-dimensional data.
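To illustrate the third consideration, the sketch below fits the same simple regression to every row of a simulated matrix in two ways: by calling lm() once per feature, and by using the closed-form least squares slope for all features at once. The data and object names (toy_age, toy_methyl) are made up, and the closed-form shortcut only covers the case of a single covariate.

```r
set.seed(1)  # arbitrary seed, only for reproducibility

# Made-up data shaped like methyl_mat: 200 features (rows) by 37 samples (columns)
toy_age <- sample(20:60, 37, replace = TRUE)
toy_methyl <- matrix(rnorm(200 * 37), nrow = 200, ncol = 37)

# Approach 1: call lm() once per feature and keep the slope for age
slopes_lm <- apply(toy_methyl, 1, function(feature) {
  coef(lm(feature ~ toy_age))[["toy_age"]]
})

# Approach 2: the least squares slope in closed form, for all features at once:
# slope = sum((age - mean(age)) * feature) / sum((age - mean(age))^2)
age_centred <- toy_age - mean(toy_age)
slopes_fast <- as.vector(toy_methyl %*% age_centred) / sum(age_centred^2)

# Both approaches give the same estimates
all.equal(slopes_lm, slopes_fast)
```

The second approach replaces many separate model fits with a single matrix product, which is the kind of optimisation that matters when there are thousands of features.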

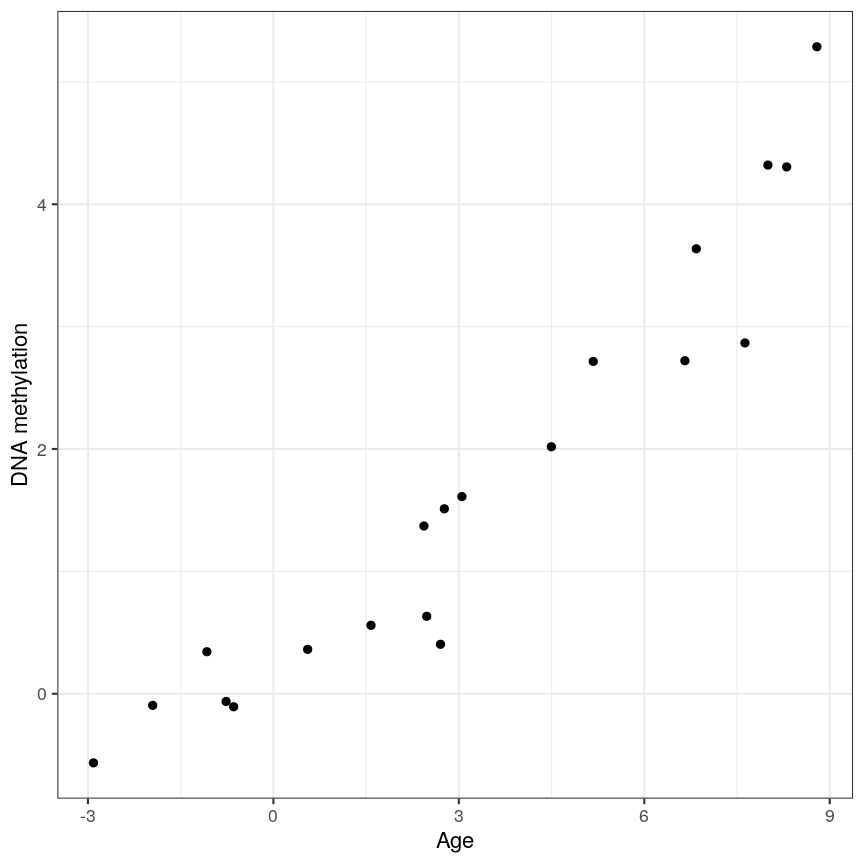

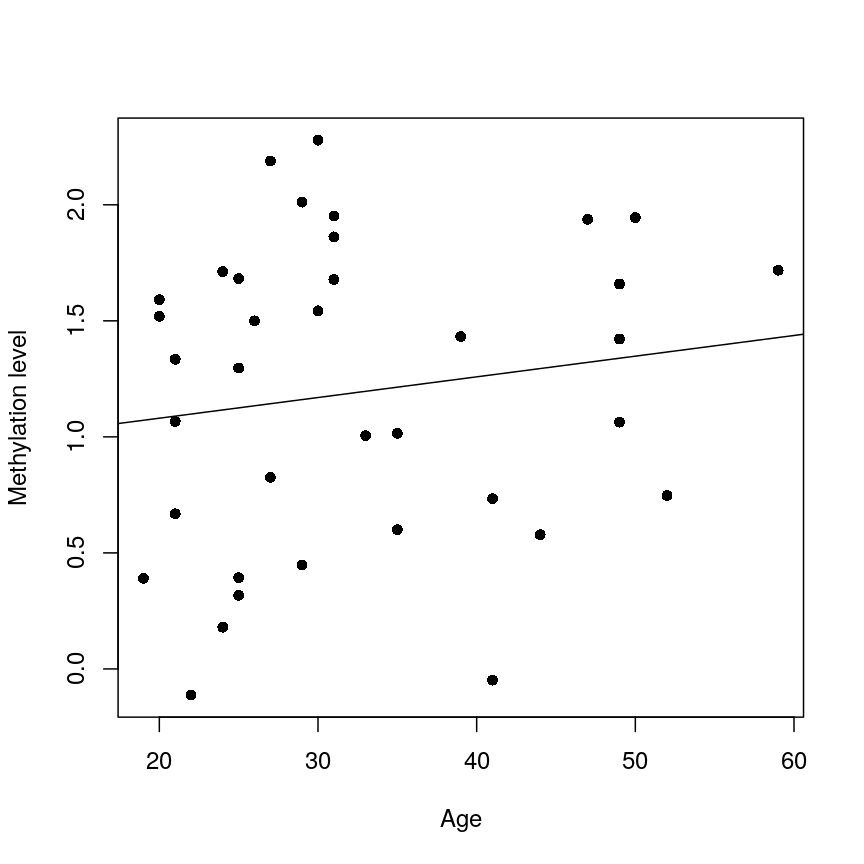

Ideally when performing regression, we want to identify cases like this, where there is a clear association, and we probably “don’t need” statistics:

A scatter plot of age and a feature of interest.

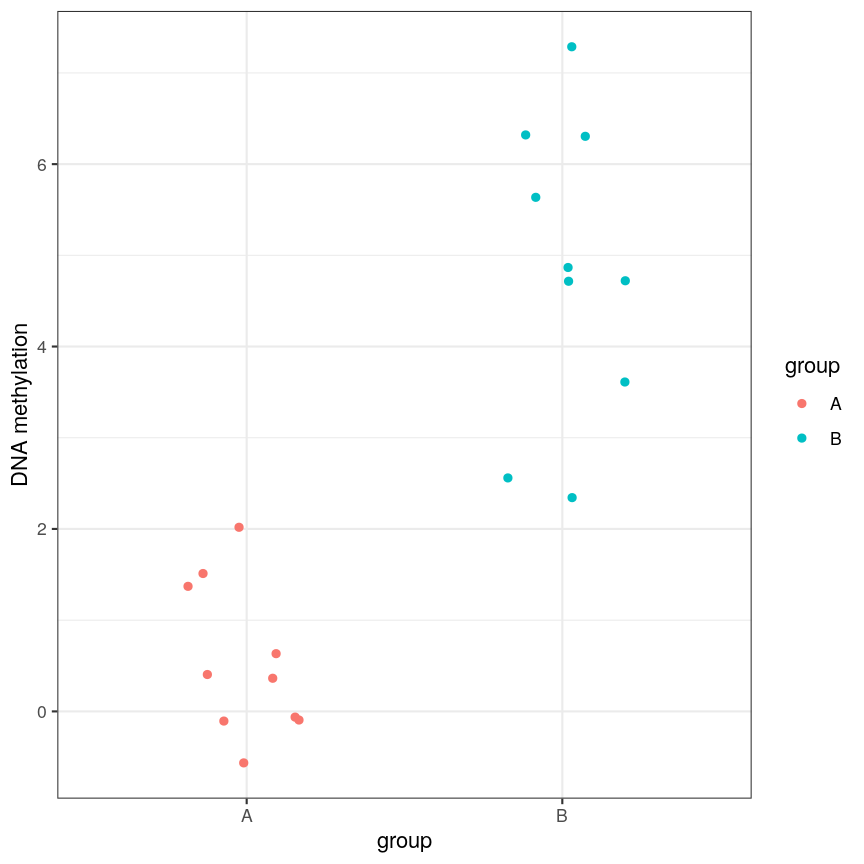

or equivalently for a discrete covariate:

A scatter plot of a grouping and a feature of interest.

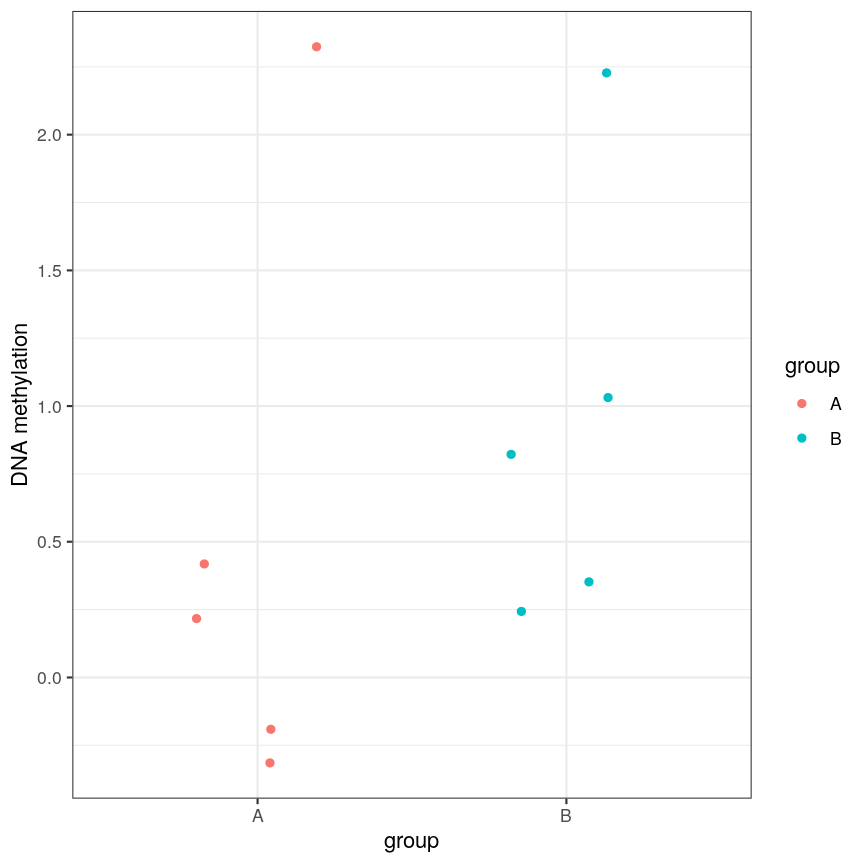

However, often due to small differences and small sample sizes, the problem is more difficult:

A scatter plot of a grouping and a feature of interest.

And, of course, we often have an awful lot of features and need to prioritise a subset of them! We need a rigorous way to prioritise genes for further analysis.

Fitting a linear model

So, in the data we have read in, we have a matrix of methylation values $X$ and a vector of ages, $y$. One way to model this is to see if we can use age to predict the expected (average) methylation value for sample $j$ at a given locus $i$, which we can write as $X_{ij}$. We can write that model as:

\[\mathbf{E}(X_{ij}) = \beta_0 + \beta_1 \text{Age}_j\]

where $\text{Age}_j$ is the age of sample $j$. In this model, $\beta_1$ represents the unit change in mean methylation level for each unit (year) change in age. For a specific CpG, we can fit this model and get more information from the model object. For illustration purposes, here we arbitrarily select the first CpG in the methyl_mat matrix (the one on its first row).

age <- methylation$Age

# methyl_mat[1, ] indicates that the 1st CpG will be used as outcome variable

lm_age_methyl1 <- lm(methyl_mat[1, ] ~ age)

lm_age_methyl1

Call:

lm(formula = methyl_mat[1, ] ~ age)

Coefficients:

(Intercept) age

0.902334 0.008911

We now have estimates for the expected methylation level when age equals 0 (the intercept) and the change in methylation level for a unit change in age (the slope). We could plot this linear model:

plot(age, methyl_mat[1, ], xlab = "Age", ylab = "Methylation level", pch = 16)

abline(lm_age_methyl1)

A scatter plot of age versus the methylation level for an arbitrarily selected CpG site (the one stored in the first row of methyl_mat). Each dot represents an individual. The black line represents the estimated linear model.

For this feature, we can see that there is no strong relationship between methylation and age. We could try to repeat this for every feature in our dataset; however, we have a lot of features! We need an approach that allows us to assess associations between all of these features and our outcome while addressing the three considerations we outlined previously. Before we introduce this approach, let’s go into detail about how we generally check whether the results of a linear model are statistically significant.

Hypothesis testing in linear regression

Using the linear model we defined above, we can ask questions based on the estimated value for the regression coefficients. For example, do individuals with different ages have different methylation values for a given CpG? We usually do this via hypothesis testing. This framework compares the results that we observed (here, estimated linear model coefficients) to the results we would expect under a null hypothesis associated with our question. In the example above, a suitable null hypothesis is that the regression coefficient associated with age ($\beta_1$) is equal to zero. If $\beta_1$ is equal to zero, the linear model indicates that there is no linear relationship between age and the methylation level for the CpG (remember: as its name suggests, linear regression can only be used to model linear relationships between predictors and outcomes!). In other words, the answer to our question would be: no!

The output of a linear model typically returns the results associated with the null hypothesis described above (this may not always be the most realistic or useful null hypothesis, but it is the one we have by default!). To be more specific, the test compares our observed results with a set of hypothetical counter-examples of what we would expect to observe if we repeated the same experiment and analysis over and over again under the null hypothesis.

For this linear model, we can use tidy() from the broom package to extract detailed information about the coefficients and the associated hypothesis tests in this model:

library("broom")

tidy(lm_age_methyl1)

# A tibble: 2 × 5

term estimate std.error statistic p.value

<chr> <dbl> <dbl> <dbl> <dbl>

1 (Intercept) 0.902 0.344 2.62 0.0129

2 age 0.00891 0.0100 0.888 0.381

The standard errors (std.error) represent the statistical uncertainty in our regression coefficient estimates (often referred to as effect sizes). The test statistics and p-values (statistic and p.value) represent measures of how (un)likely it would be to observe results like this under the “null hypothesis”. For coefficient $k$ in a linear model (in our case, it would be the slope), the test statistic reported in the statistic column above is a t-statistic given by:

\[t_{k} = \frac{\hat{\beta}_{k}}{SE\left(\hat{\beta}_{k}\right)}\]

$SE\left(\hat{\beta}_{k}\right)$ measures the uncertainty we have in our effect size estimate. Knowing what distribution these t-statistics follow under the null hypothesis allows us to determine how unlikely it would be for us to observe what we have under those circumstances, if we repeated the experiment and analysis over and over again. To demonstrate how the t-statistics are calculated, we can compute them “by hand”:

table_age_methyl1 <- tidy(lm_age_methyl1)

tvals <- table_age_methyl1$estimate / table_age_methyl1$std.error

all.equal(tvals, table_age_methyl1$statistic)

[1] TRUE

We can see that the t-statistic is just the ratio between the coefficient estimate and the standard error.

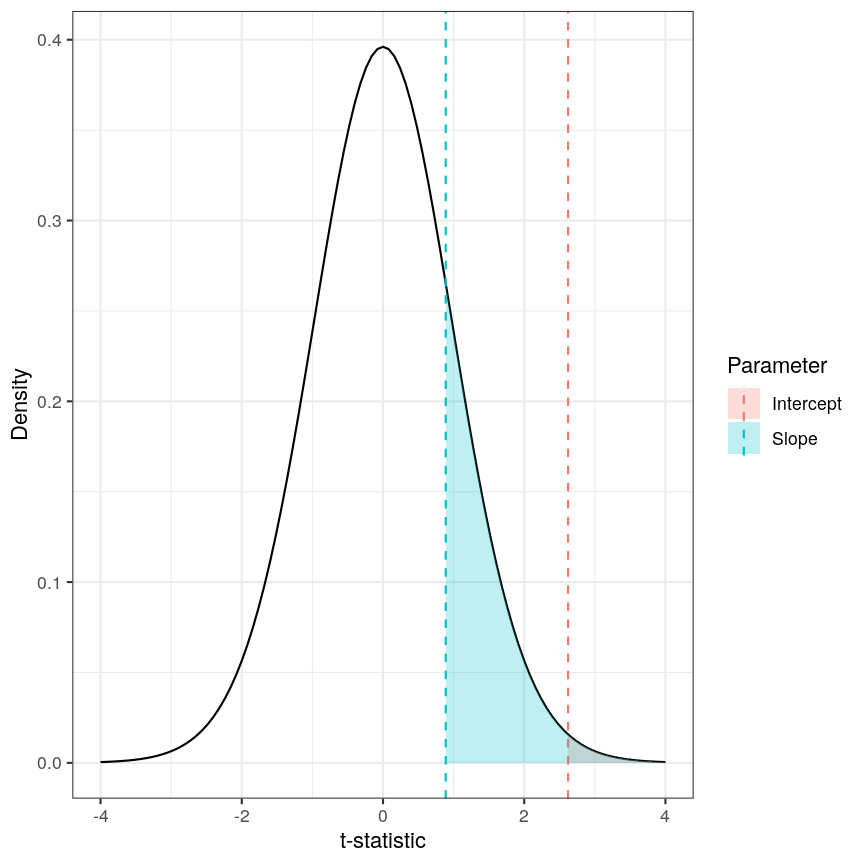

Calculating the p-values is a bit more tricky. Specifically, the p-value is the proportion of the distribution of the test statistic under the null hypothesis that is as extreme or more extreme than the observed value of the test statistic. This is easy to observe visually, by plotting the theoretical distribution of the test statistic under the null hypothesis (see next call-out box for more details about it):

The p-value for a regression coefficient represents how often it'd be observed under the null.

The red-ish shaded region represents the portion of the distribution of the test statistic under the null hypothesis that is equal to or greater than the value we observe for the intercept term. As our null hypothesis relates to a 2-tailed test (as the null hypothesis states that the regression coefficient is equal to zero, we would reject it if the regression coefficient is substantially larger or smaller than zero), the p-value for the test is twice the value of the shaded region. In this case, the shaded region is small relative to the total area of the null distribution; therefore, the p-value is small ($p=0.013$). The blue-ish shaded region represents the same measure for the slope term; this is larger, relative to the total area of the distribution, therefore the p-value is larger than the one for the intercept term ($p=0.381$). The p-value is a function of the test statistic: the ratio between the effect size we’re estimating and the uncertainty we have in that effect. A large effect with large uncertainty may not lead to a small p-value, and a small effect with small uncertainty may lead to a small p-value.

Calculating p-values from a linear model

Manually calculating the p-value for a linear model is a little bit more complex than calculating the t-statistic. The intuition posted above is definitely sufficient for most cases, but for completeness, here is how we do it:

Since the statistic in a linear model is a t-statistic, it follows a Student's t-distribution under the null hypothesis, with degrees of freedom (a parameter of the Student's t-distribution) given by the number of observations minus the number of coefficients fitted, in this case $37 - 2 = 35$. We want to know what portion of the distribution function of the test statistic is as extreme as, or more extreme than, the value we observed. The function pt() (similar to pnorm(), etc.) can give us this information.

Since we’re not sure if the coefficient will be larger or smaller than zero, we want to do a 2-tailed test. Therefore we take the absolute value of the t-statistic, and look at the upper rather than lower tail. In the figure above the shaded areas are only looking at “half” of the t-distribution (which is symmetric around zero), therefore we multiply the shaded area by 2 in order to calculate the p-value.

Combining all of this gives us:

pvals <- 2 * pt(abs(tvals), df = lm_age_methyl1$df.residual, lower.tail = FALSE)

all.equal(table_age_methyl1$p.value, pvals)

[1] TRUE
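The same calculation can illustrate the earlier point that the p-value depends on the ratio between an estimate and its standard error rather than on the estimate alone. The estimates and standard errors below are made-up numbers, not results from the methylation data, and `p_from_t()` is a hypothetical helper; only the degrees of freedom (35) match the model above.

```r
# Two-sided p-value for a t-statistic with a given number of degrees of freedom
p_from_t <- function(estimate, std_error, df) {
  t_stat <- estimate / std_error
  2 * pt(abs(t_stat), df = df, lower.tail = FALSE)
}

# Large effect, large uncertainty (hypothetical values): t is about 1.33
p_large_effect <- p_from_t(estimate = 2, std_error = 1.5, df = 35)

# Small effect, small uncertainty (hypothetical values): t is 4
p_small_effect <- p_from_t(estimate = 0.02, std_error = 0.005, df = 35)

c(large_effect = p_large_effect, small_effect = p_small_effect)
```

Here the coefficient that is 100 times larger ends up with the larger p-value, because its standard error is larger still.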

Challenge 2

In the model we fitted, the estimate for the intercept is 0.902 and its associated p-value is 0.0129. What does this mean?

Solution

The first coefficient in a linear model like this is the intercept, which measures the mean of the outcome (in this case, the methylation value for the first CpG) when age is zero. In this case, the intercept estimate is 0.902. However, this is not a particularly noteworthy finding as we do not have any observations with age zero (nor even any with age < 20!).

The reported p-value is associated to the following null hypothesis: the intercept ($\beta_0$ above) is equal to zero. Using the usual significance threshold of 0.05, we reject the null hypothesis as the p-value is smaller than 0.05. However, it is not really interesting if this intercept is zero or not, since we probably do not care what the methylation level is when age is zero. In fact, this question does not even make much sense! In this example, we are more interested in the regression coefficient associated to age, as that can tell us whether there is a linear relationship between age and methylation for the CpG.

Fitting a lot of linear models

In the linear model above, we are generally interested in the second regression coefficient (often referred to as slope) which measures the linear relationship between age and methylation levels. For the first CpG, here is its estimate:

coef_age_methyl1 <- tidy(lm_age_methyl1)[2, ]

coef_age_methyl1

# A tibble: 1 × 5

term estimate std.error statistic p.value

<chr> <dbl> <dbl> <dbl> <dbl>

1 age 0.00891 0.0100 0.888 0.381

In this case, the p-value is equal to 0.381 and therefore we cannot reject the null hypothesis: there is no statistical evidence to suggest that the regression coefficient associated to age is not equal to zero.

Now, we could do this for every feature (CpG) in the dataset and rank the results based on their test statistic or associated p-value. However, fitting models in this way to 5000 features is not very computationally efficient, and it would also be laborious to do programmatically. There are ways to get around this, but first let us talk about what exactly we are doing when we look at significance tests in this context.
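To make this concrete, a naive version of the per-feature approach might look like the sketch below. It uses simulated data rather than the lesson's methylation matrix: `sim_mat`, `sim_age` and the helper `fit_one_feature()` are hypothetical names, with features in rows and samples in columns as in `methyl_mat`.

```r
set.seed(42)

# Simulated stand-in data: 200 features (rows) measured in 37 samples (columns)
n_features <- 200
n_samples <- 37
sim_age <- round(runif(n_samples, min = 20, max = 60))
sim_mat <- matrix(rnorm(n_features * n_samples), nrow = n_features)

# Fit one linear model and keep the row of the coefficient table for the slope
fit_one_feature <- function(y) {
  summary(lm(y ~ sim_age))$coefficients["sim_age", ]
}

# Loop over features: one call to lm() per row
res <- t(apply(sim_mat, 1, fit_one_feature))
colnames(res) <- c("estimate", "std.error", "statistic", "p.value")

# Rank features by p-value
head(res[order(res[, "p.value"]), ])
```

This works, but it calls lm() once per feature, and each model is fitted in isolation from all the others.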

Sharing information across outcome variables

We are going to introduce an idea that allows us to take advantage of the fact that we carry out many tests at once on structured data. We can leverage this fact to share information between model parameters. The insight that we use to perform information pooling or sharing is derived from our knowledge about the structure of the data. For example, in a high-throughput experiment like a DNA methylation assay, we know that all of the features were measured simultaneously, using the same technique. This means that generally, we expect the base-level variability for each feature to be broadly similar.

This can enable us to get a better estimate of the uncertainty of model parameters than we could get if we consider each feature in isolation. Sharing information between features therefore allows us to get more robust estimators. Remember that the t-statistic for coefficient $\beta_k$ in a linear model is the ratio between the coefficient estimate and its standard error:

\[t_{k} = \frac{\hat{\beta}_{k}}{SE\left(\hat{\beta}_{k}\right)}\]

It is clear that large effect sizes will likely lead to small p-values, as long as the standard error for the coefficient is not large. However, the standard error is affected by the amount of noise, as we saw earlier. If we have a small number of observations, it is common for the noise for some features to be extremely small simply by chance. This, in turn, causes small p-values for these features, which may give us unwarranted confidence in the level of certainty we have in the results (false positives).

There are many statistical methods in genomics that use this type of approach to get better estimates by pooling information between features that were measured simultaneously using the same techniques. Here we will focus on the package limma, which is an established software package used to fit linear models, originally for the gene expression microarrays that were common in the 2000s, but which is still in use in RNAseq experiments, among others. The authors of limma made some assumptions about the distributions that these measurements follow, and pool information across genes to get a better estimate of the uncertainty in effect size estimates. It uses the idea that noise levels should be similar between features to moderate the estimates of the test statistic by shrinking the estimates of standard errors towards a common value. This results in a moderated t-statistic.
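The idea of shrinking feature-wise variance estimates towards a common value can be sketched in a few lines of base R. This is only an illustration under simplifying assumptions, not limma's implementation: the data are simulated, the common value `s2_prior` is simply taken to be the mean of the observed variances, and the weight `d_prior` given to it is fixed by hand, whereas limma estimates both quantities from the data. The t-statistics here test the mean of each feature rather than a regression slope.

```r
set.seed(1)

# Simulated data: 1000 features (rows), 6 samples (columns), same true variance
n_features <- 1000
n_samples <- 6
sim_mat <- matrix(rnorm(n_features * n_samples), nrow = n_features)

# Feature-wise sample variances and their degrees of freedom
s2 <- apply(sim_mat, 1, var)
d <- n_samples - 1

# Assumed prior: a common variance and the weight (in degrees of freedom) it gets
s2_prior <- mean(s2)
d_prior <- 4

# Shrunken variances: a weighted average of each feature's own variance
# and the common value
s2_shrunk <- (d_prior * s2_prior + d * s2) / (d_prior + d)

# Ordinary and moderated t-statistics for the mean of each feature
means <- rowMeans(sim_mat)
t_ordinary <- means / sqrt(s2 / n_samples)
t_moderated <- means / sqrt(s2_shrunk / n_samples)

# The most extreme variances are pulled towards the common value
range(s2)
range(s2_shrunk)
```

Features whose variance is very small purely by chance no longer produce extreme t-statistics on that basis alone, which is the behaviour the moderated t-statistic is designed to give.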

The process of running a model in limma is somewhat different to what you may have seen when running linear models. Here, we define a model matrix or design matrix, which is a way of representing the coefficients that should be fit in each linear model. These are used in similar ways in many different modelling libraries.

What is a model matrix?

R fits a regression model by choosing the vector of regression coefficients that minimises the differences between outcome values and predicted values using the covariates (or predictor variables). To get predicted values, we multiply the matrix of predictors by the coefficients. The matrix of predictors is called the model matrix (or design matrix). It has one row for each observation and one column for each predictor, plus one additional column of ones (the intercept column). Many R libraries construct the model matrix behind the scenes, but limma does not. Usually, the model matrix can be extracted from a model fit using the function model.matrix(). Here is an example of a model matrix for the methylation model:

design_age <- model.matrix(lm_age_methyl1) # model matrix

head(design_age)

(Intercept) age

201868500150_R01C01 1 39

201868500150_R03C01 1 49

201868500150_R05C01 1 20

201868500150_R07C01 1 49

201868500150_R08C01 1 33

201868590193_R02C01 1 21

dim(design_age)

[1] 37 2

As you can see, the model matrix has the same number of rows as our methylation data has samples. It also has two columns: one for the intercept (similar to the linear model we fit above) and one for age. This happens “under the hood” when fitting a linear model with lm(), but here we have to specify it directly. The limma user manual has more detail on how to make model matrices for different types of experimental design, but here we are going to stick with this simple two-column case.
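As a small self-contained illustration of the matrix multiplication described above, the sketch below builds a model matrix from a formula and checks that multiplying it by the fitted coefficients reproduces the predicted values. The data frame `toy` and its `outcome` column are simulated and purely hypothetical; only the six ages are borrowed from the output shown above.

```r
set.seed(10)

# Simulated toy data: an outcome that depends weakly on age
toy <- data.frame(age = c(39, 49, 20, 49, 33, 21))
toy$outcome <- 1 + 0.01 * toy$age + rnorm(nrow(toy), sd = 0.1)

# Model matrix built directly from a formula: a column of ones plus age
X <- model.matrix(~ age, data = toy)
X

# Fit the model and compute predictions as (model matrix) %*% (coefficients)
fit <- lm(outcome ~ age, data = toy)
pred_by_hand <- as.vector(X %*% coef(fit))

all.equal(pred_by_hand, unname(fitted(fit)))
```

Building the matrix from a formula in this way means a design can be specified without fitting a model with lm() first.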

We pass our methylation data to lmFit(), specifying the model matrix. Internally, this function fits a linear model to each row of the data in an efficient way, much as we would by running lm() on each row. The function eBayes(), when applied to the output of lmFit(), performs the pooled estimation of standard errors that results in the moderated t-statistics and resulting p-values.

library("limma")

design_age <- model.matrix(lm_age_methyl1) # model matrix

fit_age <- lmFit(methyl_mat, design = design_age)

fit_age <- eBayes(fit_age)
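To give a rough sense of why fitting all features in one call is convenient, base R's `lm()` also accepts a matrix of outcomes and fits one model per column. The sketch below uses simulated data (`sim_mat` and `sim_age` are hypothetical stand-ins for `methyl_mat` and `age`) and is not a description of how `lmFit()` is implemented; it also performs no moderation of the standard errors.

```r
set.seed(42)

# Simulated stand-in data: 500 features (rows) measured in 37 samples (columns)
n_features <- 500
n_samples <- 37
sim_age <- round(runif(n_samples, min = 20, max = 60))
sim_mat <- matrix(rnorm(n_features * n_samples), nrow = n_features)

# lm() expects samples in rows, so the feature-by-sample matrix is transposed
fit_all <- lm(t(sim_mat) ~ sim_age)

# One column of coefficients per feature: row 1 = intercept, row 2 = slope
coefs_all <- coef(fit_all)
dim(coefs_all)

# The result for the first feature matches a separate lm() fit
fit_first <- lm(sim_mat[1, ] ~ sim_age)
all.equal(unname(coefs_all[, 1]), unname(coef(fit_first)))
```

Because every feature shares the same model matrix, the coefficient estimates for all features can be obtained together rather than one model at a time.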

To obtain the results of the linear models, we can use the topTable() function. By default, this returns results for the first coefficient in the model. As we saw above when using lm(), and when we defined design_age above, the first coefficient relates to the intercept term, which we are not particularly interested in here; therefore we specify coef = 2. Further, topTable() by default only returns the top 10 results. To see all of the results in the data, we specify number = nrow(fit_age) to ensure that it returns a row for every row of the input matrix.

toptab_age <- topTable(fit_age, coef = 2, number = nrow(fit_age))

head(toptab_age)

logFC AveExpr t P.Value adj.P.Val B

cg08446924 -0.02571353 -0.4185868 -6.039068 5.595675e-07 0.002797837 5.131574

cg06493994 0.01550941 -2.1057877 5.593988 2.239813e-06 0.005599533 3.747986

cg17661642 0.02266668 -2.0527722 5.358739 4.658336e-06 0.006048733 3.019698

cg05168977 0.02276336 -2.2918472 5.346500 4.838987e-06 0.006048733 2.981904

cg24549277 0.01975577 -1.7466088 4.939242 1.708355e-05 0.011508818 1.731821

cg04436528 -0.01943612 0.7033503 -4.917179 1.828563e-05 0.011508818 1.664608

The output of topTable includes the coefficient, here termed a log fold change (logFC), the average level (AveExpr), the t-statistic (t), the p-value (P.Value), and the adjusted p-value (adj.P.Val). We’ll cover what an adjusted p-value is very shortly. The table also includes B, which represents the log-odds that a feature is significantly different, which we won’t cover here, but which will generally be a 1-1 transformation of the p-value. The coefficient estimates here are termed logFC for legacy reasons relating to how microarray experiments were traditionally performed. There are more details on this topic in many places, for example this tutorial by Kasper D. Hansen.

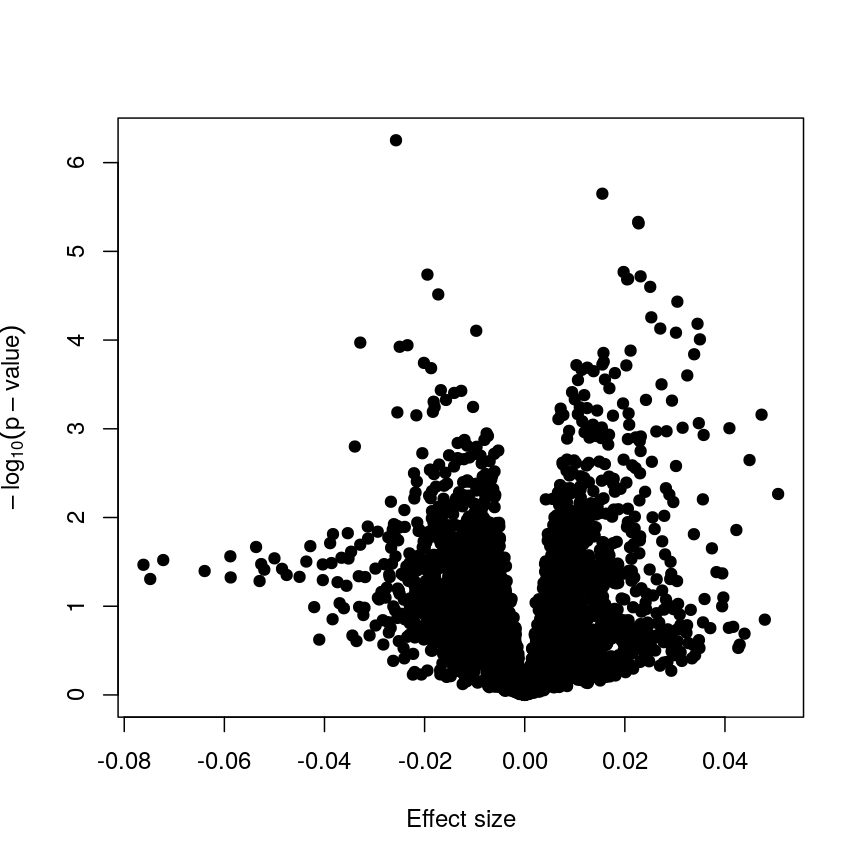

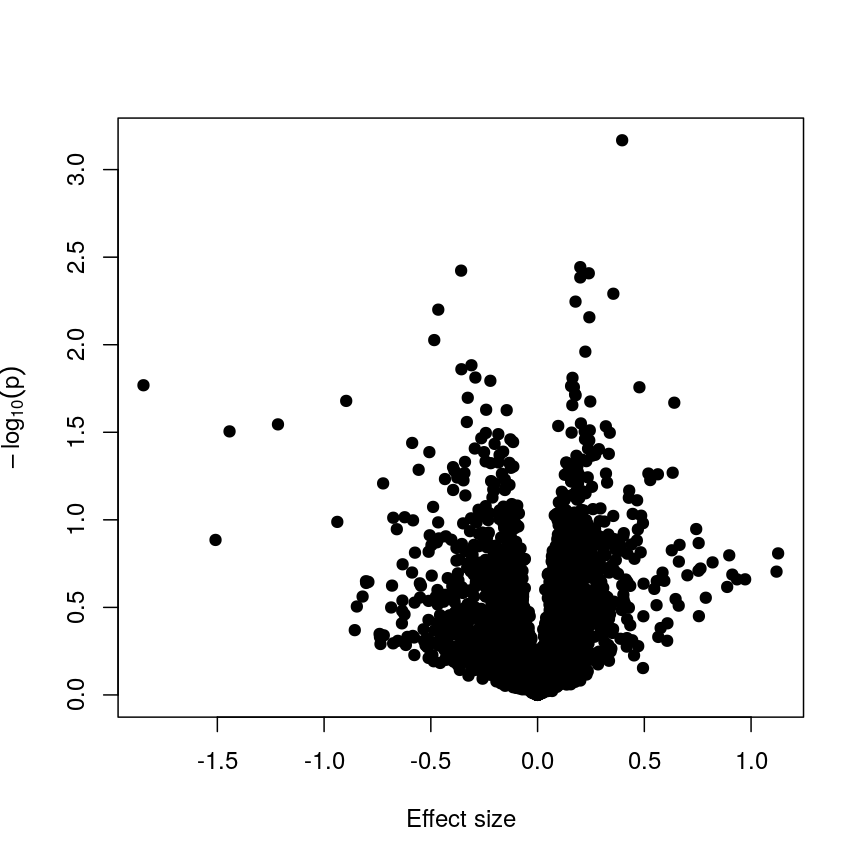

Now we have estimates of effect sizes and p-values for the association between methylation level at each locus and age for our 37 samples. It’s useful to create a plot of effect size estimates (model coefficients) against p-values for each of these linear models, to visualise the magnitude of effects and the statistical significance of each. These plots are often called “volcano plots”, because they resemble an eruption.

plot(toptab_age$logFC, -log10(toptab_age$P.Value),

xlab = "Effect size", ylab = bquote(-log[10](p-value)),

pch = 19

)

Plotting p-values against effect sizes using limma; the results are similar to a standard linear model.

In this figure, every point represents a feature of interest. The x-axis represents the effect size observed for that feature in a linear model, while the y-axis is the $-\log_{10}(\text{p-value})$, where larger values indicate increasing statistical evidence of a non-zero effect size. A positive effect size represents increasing methylation with increasing age, and a negative effect size represents decreasing methylation with increasing age. Points higher on the y-axis represent features for which we think the results we observed would be very unlikely under the null hypothesis.

Since we want to identify features that have different methylation levels in different age groups, in an ideal case there would be clear separation between “null” and “non-null” features. However, usually we observe results as we do here: there is a continuum of effect sizes and p-values, with no clear separation between these two classes of features. While statistical methods exist to derive insights from continuous measures like these, it is often convenient to obtain a list of features which we are confident have non-zero effect sizes. This is made more difficult by the number of tests we perform.

Challenge 3

The effect size estimates are very small, and yet many of the p-values are well below a usual significance level of p < 0.05. Why is this?

Solution

Because age has a much larger range than methylation levels, the unit change in methylation level even for a strong relationship is very small!

As we mentioned, the p-value is a function of both the effect size estimate and the uncertainty (standard error) of that estimate. Because the uncertainty in our estimates is much smaller than the estimates themselves, the p-values are also small.

If we predicted age using methylation level, it is likely we would see much larger coefficients, though broadly similar p-values!
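This point can be checked with a small simulation. For an ordinary linear model with a single predictor, swapping the outcome and the predictor changes the size of the slope but leaves its p-value unchanged. The variables `sim_age` and `sim_methyl` below are simulated and hypothetical; with limma's moderated statistics the p-values would only be expected to be broadly similar, as stated above.

```r
set.seed(7)

# Simulated data: age in years and a weakly age-dependent methylation level
n_samples <- 37
sim_age <- round(runif(n_samples, min = 20, max = 60))
sim_methyl <- 0.01 * sim_age + rnorm(n_samples, sd = 0.5)

# Coefficient tables for both directions of the regression
methyl_on_age <- summary(lm(sim_methyl ~ sim_age))$coefficients
age_on_methyl <- summary(lm(sim_age ~ sim_methyl))$coefficients

# The slope is much larger when age is the outcome ...
slope_methyl_on_age <- methyl_on_age["sim_age", "Estimate"]
slope_age_on_methyl <- age_on_methyl["sim_methyl", "Estimate"]

# ... but the p-value for the slope is the same in both models
p_methyl_on_age <- methyl_on_age["sim_age", "Pr(>|t|)"]
p_age_on_methyl <- age_on_methyl["sim_methyl", "Pr(>|t|)"]

c(slope_methyl_on_age, slope_age_on_methyl)
c(p_methyl_on_age, p_age_on_methyl)
```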

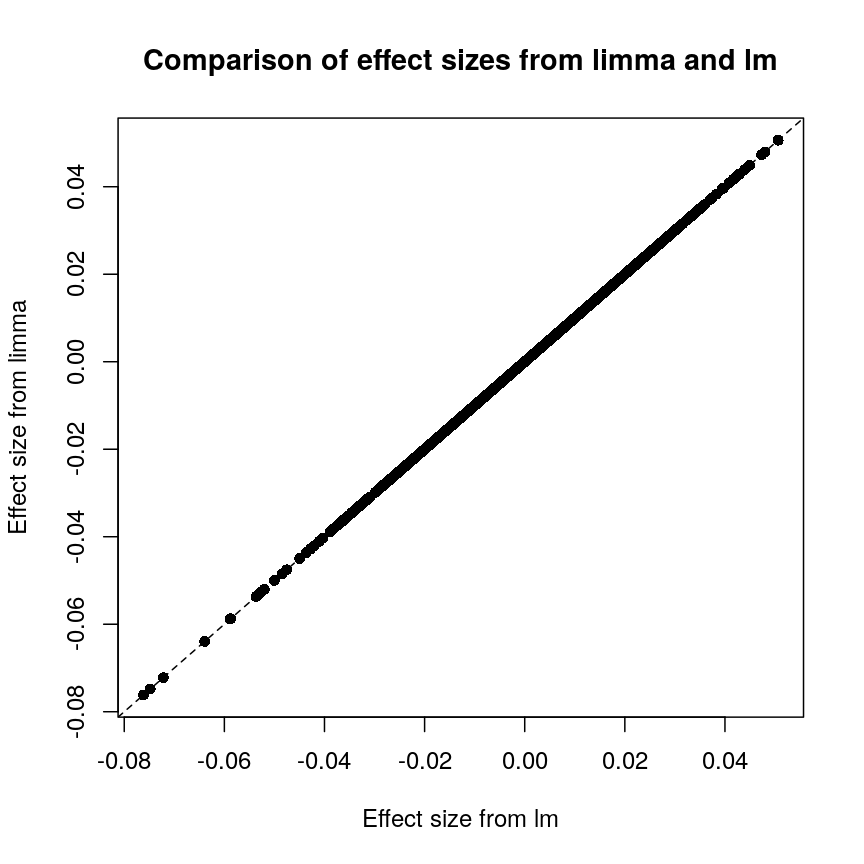

It is worthwhile considering what exactly the effect of the moderation or information sharing that limma performs has on our results. To do this, let us compare the effect size estimates and p-values from the two approaches.

Plot of effect sizes using limma vs. those using lm.

These are identical! This is because limma does not perform any sharing of information when estimating effect sizes. This is in contrast to similar packages that apply shrinkage to the effect size estimates, like DESeq2. These often use information sharing to shrink or moderate the effect size estimates, in the case of DESeq2 by again sharing information between features about sample-to-sample variability.

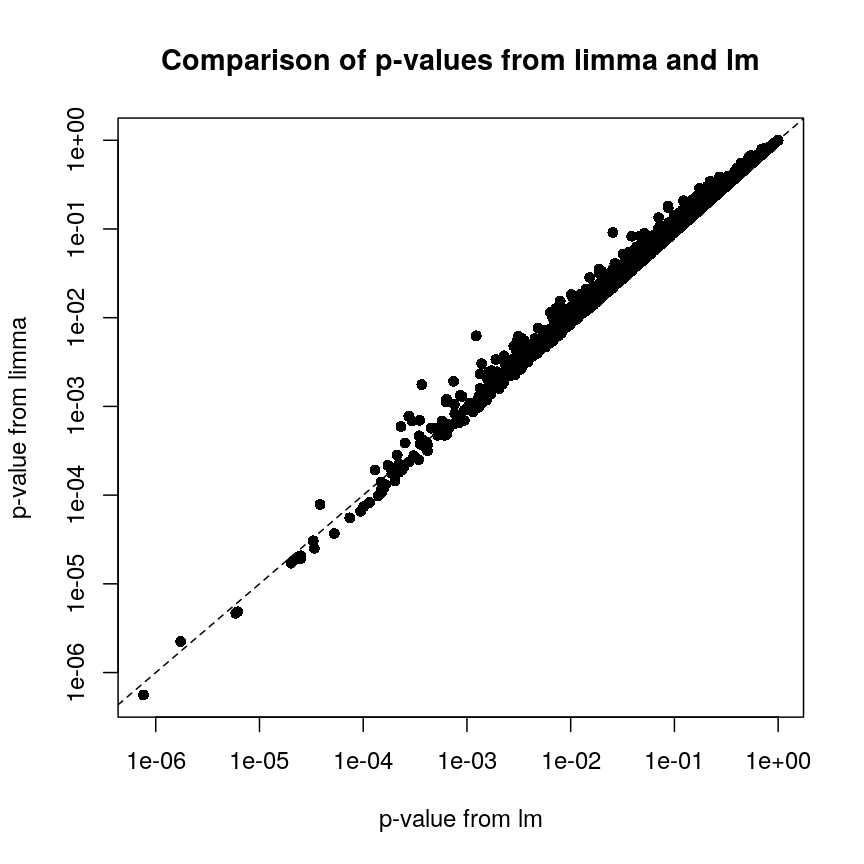

In contrast, let us look at the p-values from limma and R’s built-in lm() function:

Plot of p-values using limma vs. those using lm.

We can see that for the vast majority of features, the results are broadly similar. There seems to be a minor general tendency for limma to produce smaller p-values, but for several features, the p-values from limma are considerably larger than the p-values from lm(). This is because the information sharing tends to shrink large standard error estimates downwards and small estimates upwards. When the degree of statistical significance is due to an abnormally small standard error rather than a large effect, this shrinkage results in a prominent reduction in statistical significance. This behaviour has been shown to perform well in case studies. The degree of shrinkage generally depends on the amount of pooled information and the strength of the evidence independent of pooling. For example, with very few samples and many features, information sharing has a larger effect, because there are a lot of features that can be used to provide pooled estimates, and the evidence from the data that this is weighed against is relatively sparse. In contrast, when there are many samples and few features, there is not much opportunity to generate pooled estimates, and the evidence of the data can easily outweigh the pooling.

Shrinkage methods like these ones can be complex to implement and understand, but it is useful to develop an intuition about why these approaches may be more precise and sensitive than the naive approach of fitting a model to each feature separately.

Challenge 4

- Try to run the same kind of linear model with smoking status as covariate instead of age, and make a volcano plot. Note: smoking status is stored as methylation$smoker.

- We saw in the example in the lesson that this information sharing can lead to larger p-values. Why might this be preferable?

Solution

The following code runs the same type of model with smoking status:

design_smoke <- model.matrix(~methylation$smoker)

fit_smoke <- lmFit(methyl_mat, design = design_smoke)

fit_smoke <- eBayes(fit_smoke)

toptab_smoke <- topTable(fit_smoke, coef = 2, number = nrow(fit_smoke))

plot(toptab_smoke$logFC, -log10(toptab_smoke$P.Value),

xlab = "Effect size", ylab = bquote(-log[10](p)),

pch = 19

)

A plot of significance against effect size for a regression of smoking against methylation.

Being a bit more conservative when identifying features can help to avoid false discoveries. Furthermore, when rejecting the null hypothesis is based more on a small standard error resulting from abnormally low levels of variability for a given feature, we might want to be a bit more conservative in our expectations.

Shrinkage

Shrinkage is an intuitive term for an effect of information sharing, and is something observed in a broad range of statistical models. Often, shrinkage is induced by a multilevel modelling approach or by Bayesian methods.

The general idea is that these models take information about the structure of the data into account when fitting the parameters. We can share information between features because of our knowledge about the data structure; this generally requires careful consideration about how the data were generated and the relationships within.

An example people often use is estimating the effect of attendance on grades in several schools. We can assume that this effect is similar in different schools (but maybe not identical), so we can share information about the effect size between schools and shrink our estimates towards a common value.

For example in DESeq2, the authors used the observation that genes with similar expression counts in RNAseq data have similar dispersion, and a better estimate of these dispersion parameters makes estimates of fold changes much more stable. Similarly, in limma the authors made the assumption that in the absence of biological effects, we can often expect the technical variation in the measurement of the expression of each of the genes to be broadly similar. Again, better estimates of variability allow us to prioritise genes in a more reliable way.

There are many good resources to learn about this type of approach, including:

The problem of multiple tests

With such a large number of features, it would be useful to decide which features are “interesting” or “significant” for further study. However, if we were to apply a normal significance threshold of 0.05, it would be likely we end up with a lot of false positives. This is because a p-value threshold like this represents a $\frac{1}{20}$ chance that we observe results as extreme or more extreme under the null hypothesis (that there is no association between age and methylation level). If we carry out many more than 20 such tests, we can expect to see situations where, despite the null hypothesis being true, we observe significant p-values due to random chance. To demonstrate this, it is useful to see what happens if we permute (scramble) the age values and run the same test again:

set.seed(123)

age_perm <- age[sample(ncol(methyl_mat), ncol(methyl_mat))]

design_age_perm <- model.matrix(~age_perm)

fit_age_perm <- lmFit(methyl_mat, design = design_age_perm)

fit_age_perm <- eBayes(fit_age_perm)

toptab_age_perm <- topTable(fit_age_perm, coef = 2, number = nrow(fit_age_perm))

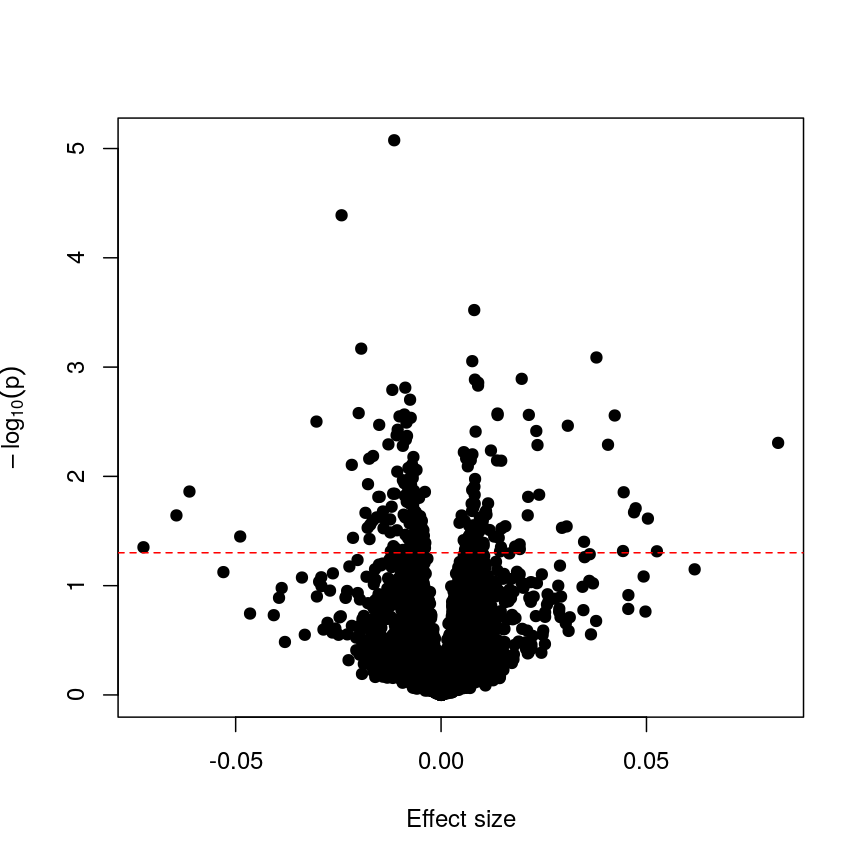

plot(toptab_age_perm$logFC, -log10(toptab_age_perm$P.Value),

xlab = "Effect size", ylab = bquote(-log[10](p)),

pch = 19

)

abline(h = -log10(0.05), lty = "dashed", col = "red")

Plotting p-values against effect sizes for a randomised outcome shows we still observe 'significant' results.

Since we have generated a random sequence of ages, we have no reason to suspect that there is a true association between methylation levels and this sequence of random numbers. However, you can see that the p-value for many features is still lower than a traditional significance level of $p=0.05$. In fact, here 226 features are significant at p < 0.05. If we were to use this fixed threshold in a real experiment, it is likely that we would identify many features as associated with age, when the results we are observing are simply due to chance.

Challenge 5

- If we run 5000 tests, even if there are no true differences, how many of them (on average) will be statistically significant at a threshold of $p < 0.05$?

- Why would we want to be conservative in labelling features as significantly different? By conservative, we mean to err towards labelling true differences as “not significant” rather than vice versa.

- How could we account for a varying number of tests to ensure “significant” changes are truly different?

Solution

- By default we expect $5000 \times 0.05 = 250$ features to be statistically significant under the null hypothesis, because p-values should always be uniformly distributed under the null hypothesis.

- Features that we label as “significantly different” will often be reported in manuscripts. We may also spend time and money investigating them further, computationally or in the lab. Therefore, spurious results have a real cost for ourselves and for others.

- One approach to controlling for the number of tests is to divide our significance threshold by the number of tests performed. This is termed “Bonferroni correction” and we’ll discuss this further now.
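The expected count in the first answer can be checked with a quick simulation on pure noise. The sketch below does not use limma or the methylation data: it simulates 5000 unrelated features (`null_mat`) and a random covariate (`sim_age`), both hypothetical, and computes ordinary (unmoderated) p-values for each slope via the correlation coefficient, which for a single predictor gives the same test as lm().

```r
set.seed(123)

# Simulated null data: 5000 features (rows), 37 samples, no true association
n_features <- 5000
n_samples <- 37
sim_age <- round(runif(n_samples, min = 20, max = 60))
null_mat <- matrix(rnorm(n_features * n_samples), nrow = n_features)

# Correlation of every feature with age, converted to a t-statistic
r <- as.vector(cor(t(null_mat), sim_age))
df <- n_samples - 2
t_stat <- r * sqrt(df / (1 - r^2))

# Two-sided p-values from the t-distribution
p_null <- 2 * pt(abs(t_stat), df = df, lower.tail = FALSE)

# Number of "significant" features at p < 0.05 (expected: about 250)
sum(p_null < 0.05)

# Proportion of p-values falling into each tenth of the interval from 0 to 1
table(cut(p_null, breaks = seq(0, 1, by = 0.1))) / n_features
```

Each tenth of the interval should contain roughly 10% of the p-values, which is what a uniform distribution under the null hypothesis implies.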

Adjusting for multiple tests

When performing many statistical tests to categorise features, we are effectively classifying features as “non-significant” or “significant”, the latter meaning those for which we reject the null hypothesis. We also generally hope that there is a subset of features for which the null hypothesis is truly false, as well as many for which the null truly does hold. We hope that for all features for which the null hypothesis is true, we fail to reject it, and for all features for which the null hypothesis is not true, we reject it. As we showed in the example with permuted age, with a large number of tests it is inevitable that we will get some of these wrong.

We can think of these features as being “truly different” or “not truly different”1. Using this idea, we can see that each categorisation we make falls into one of four categories:

| | Label as different | Label as not different |
|---|---|---|
| Truly different | True positive | False negative |
| Truly not different | False positive | True negative |

If the null hypothesis was true for every feature, then as we perform more and more tests we’d tend to correctly categorise most results as negative. However, since p-values are uniformly distributed under the null, at a significance level of 5%, 5% of all results will be “significant” even though we would expect to see these results, given the null hypothesis is true, simply by chance. These would fall under the label “false positives” in the table above, and are also termed “false discoveries.”

There are two common ways of controlling these false discoveries. The first is to say, when we’re doing $n$ tests, that we want to have the same certainty of making one false discovery with $n$ tests as we have if we’re only doing one test. This is “Bonferroni” correction,2 which divides the significance level by the number of tests performed, $n$. Equivalently, we can use the non-transformed p-value threshold but multiply our p-values by the number of tests. This is often very conservative, especially with a lot of features!
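The equivalence between dividing the threshold and multiplying the p-values can be checked on a handful of made-up p-values (the vector `p_example` is hypothetical). Note that `p.adjust()` caps adjusted p-values at 1.

```r
# Hypothetical raw p-values from four tests
p_example <- c(0.0001, 0.004, 0.03, 0.2)
n_tests <- length(p_example)
alpha <- 0.05

# Option 1: compare raw p-values with a threshold divided by the number of tests
sig_by_threshold <- p_example < alpha / n_tests

# Option 2: multiply the p-values by the number of tests (capped at 1)
p_bonf_by_hand <- pmin(1, p_example * n_tests)
sig_by_adjustment <- p_bonf_by_hand < alpha

# The built-in adjustment gives the same adjusted p-values
p_bonf <- p.adjust(p_example, method = "bonferroni")

all.equal(p_bonf_by_hand, p_bonf)
identical(sig_by_threshold, sig_by_adjustment)
```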

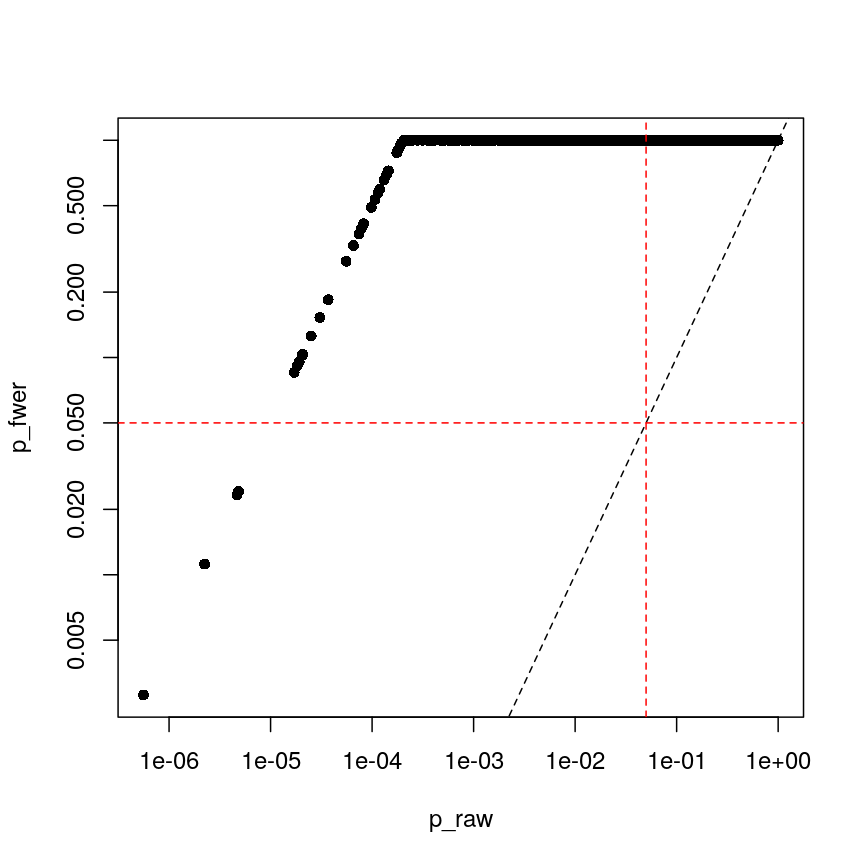

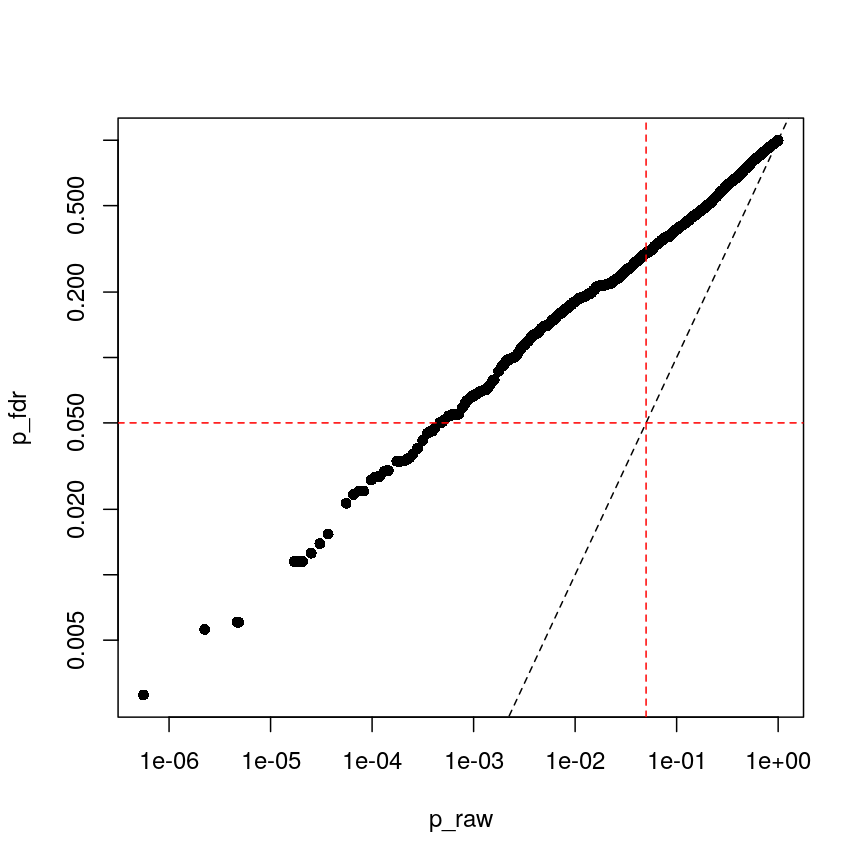

p_raw <- toptab_age$P.Value

p_fwer <- p.adjust(p_raw, method = "bonferroni")

plot(p_raw, p_fwer, pch = 16, log="xy")

abline(0:1, lty = "dashed")

abline(v = 0.05, lty = "dashed", col = "red")

abline(h = 0.05, lty = "dashed", col = "red")

Bonferroni correction often produces very large p-values, especially with low sample sizes.

You can see that the adjusted p-values are exactly one for the vast majority of tests we performed! This is often not ideal, because unfortunately we usually don’t have very large sample sizes in health sciences.

The second main way of controlling for multiple tests is to control the false discovery rate (FDR).3 This is the proportion of false positives, or false discoveries, that we’d expect among the features we label as significant if we repeated the experiment over and over. A common way of doing this, the Benjamini-Hochberg procedure, works as follows:

- Sort the p-values from smallest to largest

- Assign each a rank (1 is smallest)

- Calculate the critical value \(q = \left(\frac{i}{m}\right)Q\), where $i$ is rank, $m$ is the number of tests, and $Q$ is the false discovery rate we want to target.4

- Find the largest p-value that is less than its critical value. This p-value and all smaller p-values are significant.
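The steps above can be sketched in base R and compared with the built-in `p.adjust()` function. The p-values in `p_example` are made up for illustration, and the comparison uses "less than or equal to" the critical value, which is the convention `p.adjust()` follows.

```r
# Hypothetical raw p-values from ten tests, in no particular order
p_example <- c(0.205, 0.001, 0.074, 0.039, 0.008,
               0.041, 0.212, 0.042, 0.06, 0.216)
Q <- 0.05               # target false discovery rate
m <- length(p_example)  # number of tests

# Steps 1 and 2: sort the p-values; position i in the sorted vector is rank i
ord <- order(p_example)
p_sorted <- p_example[ord]

# Step 3: critical value (i / m) * Q for each rank
crit <- (seq_len(m) / m) * Q

# Step 4: largest rank whose p-value does not exceed its critical value
passing <- which(p_sorted <= crit)
n_sig <- if (length(passing) > 0) max(passing) else 0

# Everything up to that rank is declared significant
sig_by_hand <- logical(m)
sig_by_hand[ord[seq_len(n_sig)]] <- TRUE

# Built-in equivalent: compare BH-adjusted p-values with Q
sig_padjust <- p.adjust(p_example, method = "BH") <= Q

identical(sig_by_hand, sig_padjust)
p_example[sig_by_hand]
```

In this example only the two smallest p-values fall below their critical values, even though five of the raw p-values are below 0.05.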

| FWER | FDR |
|---|---|
| + Controls probability of identifying a false positive | + Controls rate of false discoveries |
| + Strict error rate control | + Allows error control with less stringency |
| - Very conservative | - Does not control probability of making errors |
| - Requires larger statistical power | - May result in false discoveries |

Challenge 6

- At a significance level of 0.05, with 100 tests performed, what is the Bonferroni significance threshold?

- In a gene expression experiment, after FDR correction with an FDR-adjusted p-value threshold of 0.05, we observe 500 significant genes. What proportion of these genes are truly different?

- Try running FDR correction on the p_raw vector. Hint: check help("p.adjust") to see what the method is called. Compare these values to the raw p-values and the Bonferroni p-values.

Solution

The Bonferroni threshold at this significance level is \(\frac{0.05}{100} = 0.0005\).

We can’t say what proportion of these genes are truly different. However, if we repeated this experiment and statistical test over and over, on average 5% of the results from each run would be false discoveries.

The following code runs FDR correction and compares it to non-corrected values and to Bonferroni:

p_fdr <- p.adjust(p_raw, method = "BH")

plot(p_raw, p_fdr, pch = 16, log="xy")

abline(0:1, lty = "dashed")

abline(v = 0.05, lty = "dashed", col = "red")

abline(h = 0.05, lty = "dashed", col = "red")

Benjamini-Hochberg correction is less conservative than Bonferroni

plot(p_fwer, p_fdr, pch = 16, log="xy")

abline(0:1, lty = "dashed")

abline(v = 0.05, lty = "dashed", col = "red")

abline(h = 0.05, lty = "dashed", col = "red")

Plot of Bonferroni-adjusted p-values (x-axis) against Benjamini-Hochberg-adjusted p-values (y-axis).

Feature selection

In this episode, we have focussed on regression in a setting where there are more features than observations. This approach is relevant if we are interested in the association of each feature with some outcome or if we want to screen for features that have a strong association with an outcome. If, however, we are interested in predicting an outcome or if we want to know which features explain the variation in the outcome, we may want to restrict ourselves to a subset of relevant features. One way of doing this is called regularisation, and this is the topic of the next episode. An alternative is called feature selection. This is covered in the subsequent (optional) episode.

Further reading

- limma tutorial by Kasper D. Hansen.

- limma user manual.

- The variancePartition package has similar functionality to limma but allows the inclusion of random effects.

Footnotes

1. “True difference” is a hard category to rigidly define. As we’ve seen, with a lot of data, we can detect tiny differences, and with little data, we can’t detect large differences. However, both can be argued to be “true”.

2. Bonferroni correction is a method of controlling the “family-wise” error rate (FWER).

3. This is often called “Benjamini-Hochberg” adjustment.

4. People often perform extra controls on FDR-adjusted p-values, ensuring that ranks don’t change and the critical value is never smaller than the original p-value.

Key Points

Performing linear regression in a high-dimensional setting requires us to perform hypothesis testing in a way that low-dimensional regression may not.

Sharing information between features can increase power and reduce false positives.

When running a lot of null hypothesis tests for high-dimensional data, multiple testing correction allows us to retain power and avoid making costly false discoveries.

Multiple testing methods can be more conservative or more liberal, depending on our goals.

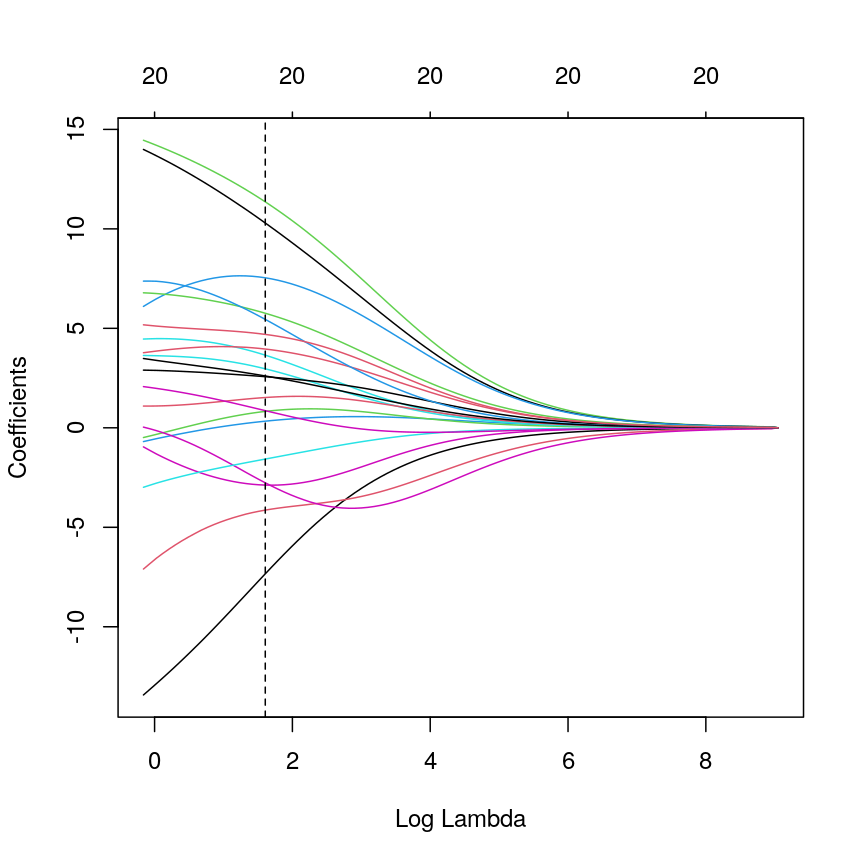

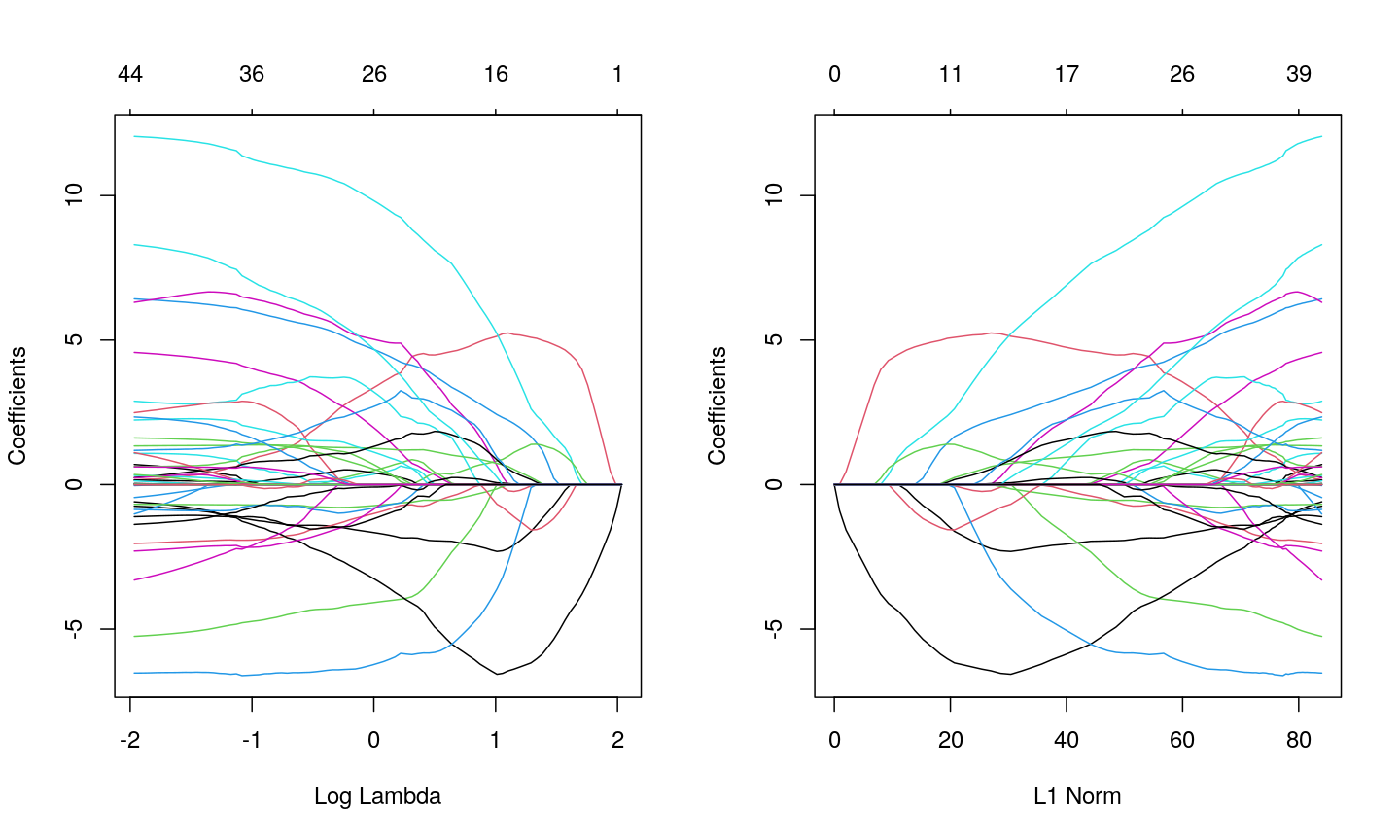

Regularised regression

Overview

Teaching: 110 min

Exercises: 60 min

Questions

What is regularisation?

How does regularisation work?

How can we select the level of regularisation for a model?

Objectives

Understand the benefits of regularised models.

Understand how different types of regularisation work.

Apply and critically analyse regularised regression models.

Introduction

This episode is about regularisation, also called regularised regression or penalised regression. This approach can be used for prediction and for feature selection and it is particularly useful when dealing with high-dimensional data.

One reason that we need special statistical tools for high-dimensional data is that standard linear models cannot handle high-dimensional data sets – one cannot fit a linear model where there are more features (predictor variables) than there are observations (data points). In the previous lesson, we dealt with this problem by fitting individual models for each feature and sharing information among these models. Now we will take a look at an alternative approach that can be used to fit models with more features than observations by stabilising coefficient estimates. This approach is called regularisation. Compared to many other methods, regularisation is also often very fast and can therefore be extremely useful in practice.

First, let us check out what happens if we try to fit a linear model to high-dimensional data! We start by reading in the methylation data from the last lesson:

library("here")

library("minfi")

methylation <- readRDS(here("data/methylation.rds"))

## here, we transpose the matrix to have samples as rows and features as columns

methyl_mat <- t(assay(methylation))

age <- methylation$Age

Then, we try to fit a model with outcome age and all 5,000 features in this dataset as predictors (average methylation levels, M-values, across different sites in the genome).

# by using methyl_mat in the formula below, R will run a multiple regression

# model in which each of the columns in methyl_mat is used as a predictor.

fit <- lm(age ~ methyl_mat)

summary(fit)

Call:

lm(formula = age ~ methyl_mat)

Residuals:

ALL 37 residuals are 0: no residual degrees of freedom!

Coefficients: (4964 not defined because of singularities)

Estimate Std. Error t value Pr(>|t|)

(Intercept) 2640.474 NaN NaN NaN

methyl_matcg00075967 -108.216 NaN NaN NaN

methyl_matcg00374717 -139.637 NaN NaN NaN

methyl_matcg00864867 33.102 NaN NaN NaN

methyl_matcg00945507 72.250 NaN NaN NaN

[ reached getOption("max.print") -- omitted 4996 rows ]

Residual standard error: NaN on 0 degrees of freedom

Multiple R-squared: 1, Adjusted R-squared: NaN

F-statistic: NaN on 36 and 0 DF, p-value: NA

You can see that we’re able to get some effect size estimates, but they seem very high! The summary also says that we were unable to estimate effect sizes for 4,964 features because of “singularities”. We clarify what singularities are in the note below, but in short this means that R couldn’t find a way to perform the calculations necessary to fit the model. Large effect sizes and singularities are common when naively fitting linear regression models with a large number of features (i.e., to high-dimensional data). This happens when we have more features than observations, or when the model cannot distinguish between the effects of many correlated features.

Singularities

The message that lm() produced is not necessarily the most intuitive. What are “singularities” and why are they an issue? A singular matrix is one that cannot be inverted. R uses inverse operations to fit linear models (find the coefficients) using:

\[(X^TX)^{-1}X^Ty,\]

where $X$ is a matrix of predictor features and $y$ is the outcome vector. Thus, if the matrix $X^TX$ cannot be inverted to give $(X^TX)^{-1}$, R cannot fit the full model and reports that there are singularities.

Why might R be unable to calculate $(X^TX)^{-1}$ and report that there are singularities? Well, when the determinant of the matrix is zero, we are unable to find its inverse. The determinant of $X^TX$ is zero when there are more features than observations, or when some features are perfectly collinear. When features are highly (but not perfectly) correlated, the determinant is close to zero, which makes the inversion numerically unstable.

xtx <- t(methyl_mat) %*% methyl_mat

det(xtx)

[1] 0
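To see the same effect on a scale that is easy to inspect, the sketch below builds a small random matrix with more features than observations. The data are simulated and the names (`x_toy`, `xtx_toy`, `rank_x`) are hypothetical. It relies on the standard linear algebra fact that $X^TX$ has the same rank as $X$, so the rank can never exceed the number of observations.

```r
set.seed(42)

# Hypothetical toy data: 5 observations (rows) but 10 features (columns)
n_obs <- 5
n_feat <- 10
x_toy <- matrix(rnorm(n_obs * n_feat), nrow = n_obs, ncol = n_feat)

# crossprod(x) computes t(x) %*% x
xtx_toy <- crossprod(x_toy)
dim(xtx_toy)

# The rank of x_toy (and therefore of xtx_toy) cannot exceed the number
# of observations
rank_x <- qr(x_toy)$rank
rank_x

# A square matrix whose rank is below its dimension is singular
rank_x < ncol(xtx_toy)
```

Because the 10 x 10 matrix has rank of at most 5, it has no inverse, which is the situation lm() ran into above.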

Correlated features – common in high-dimensional data

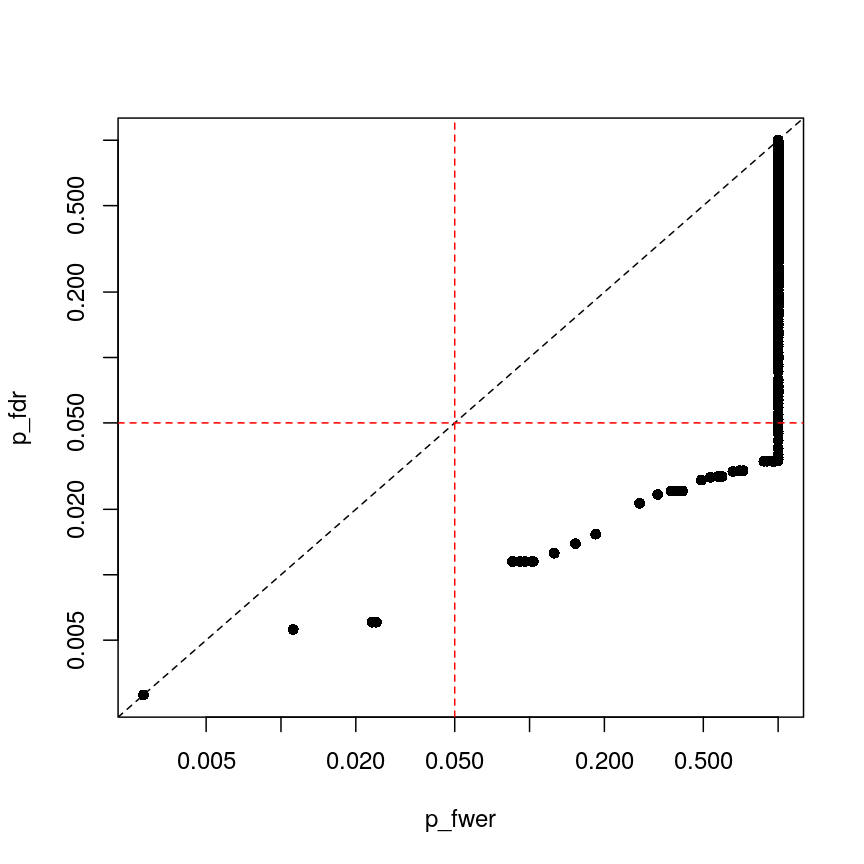

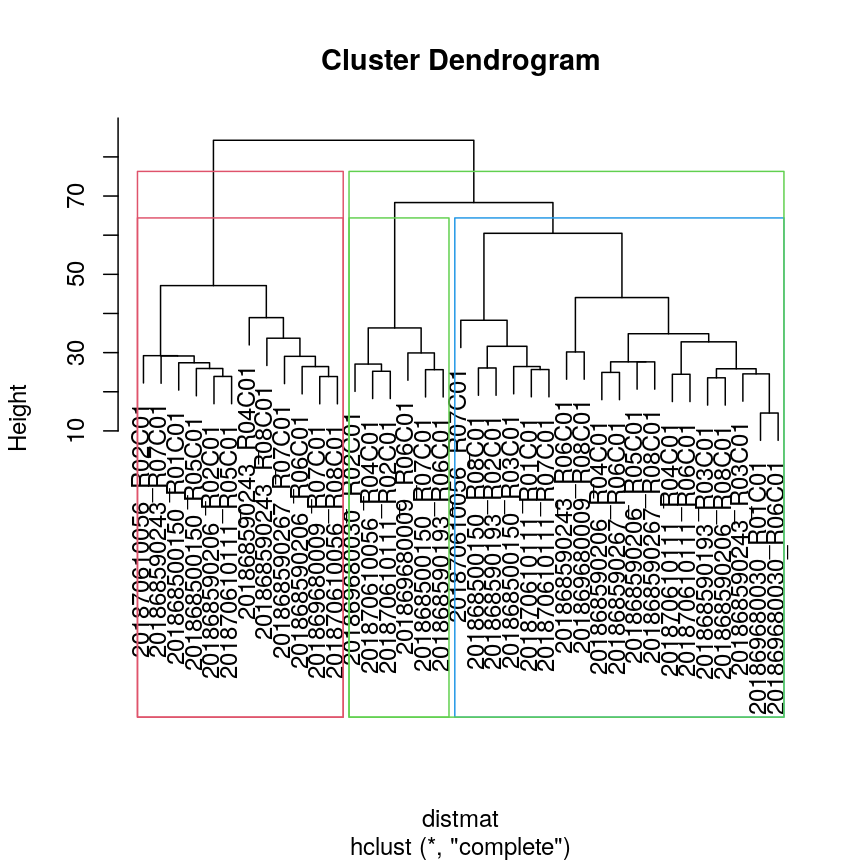

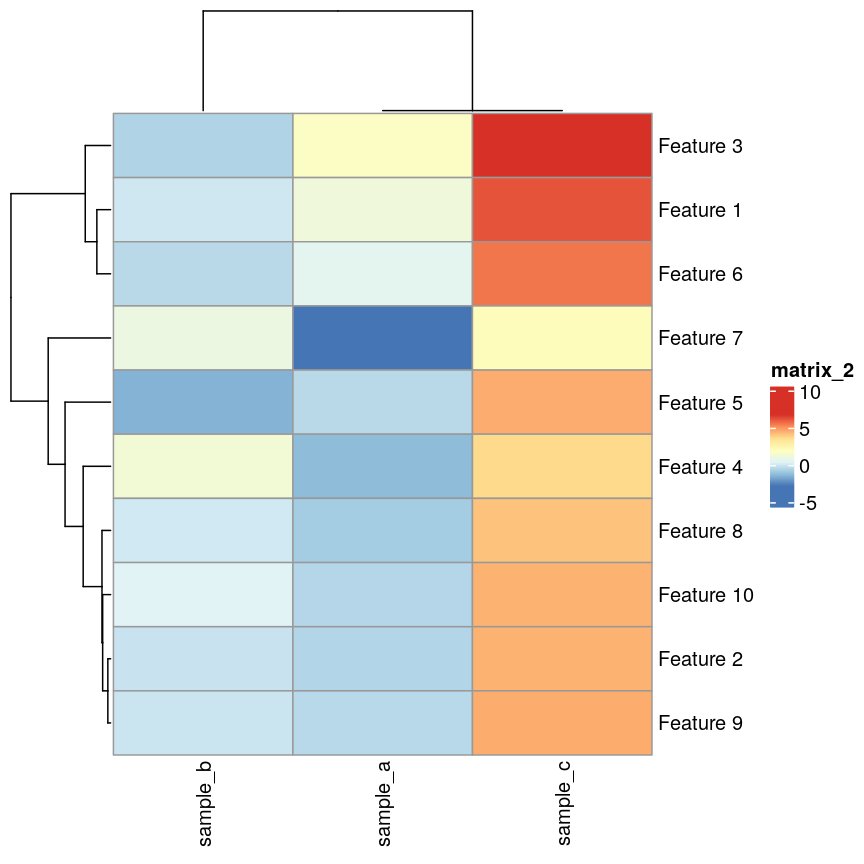

In high-dimensional datasets, there are often multiple features that contain redundant information (correlated features). If we visualise the level of correlation between sites in the

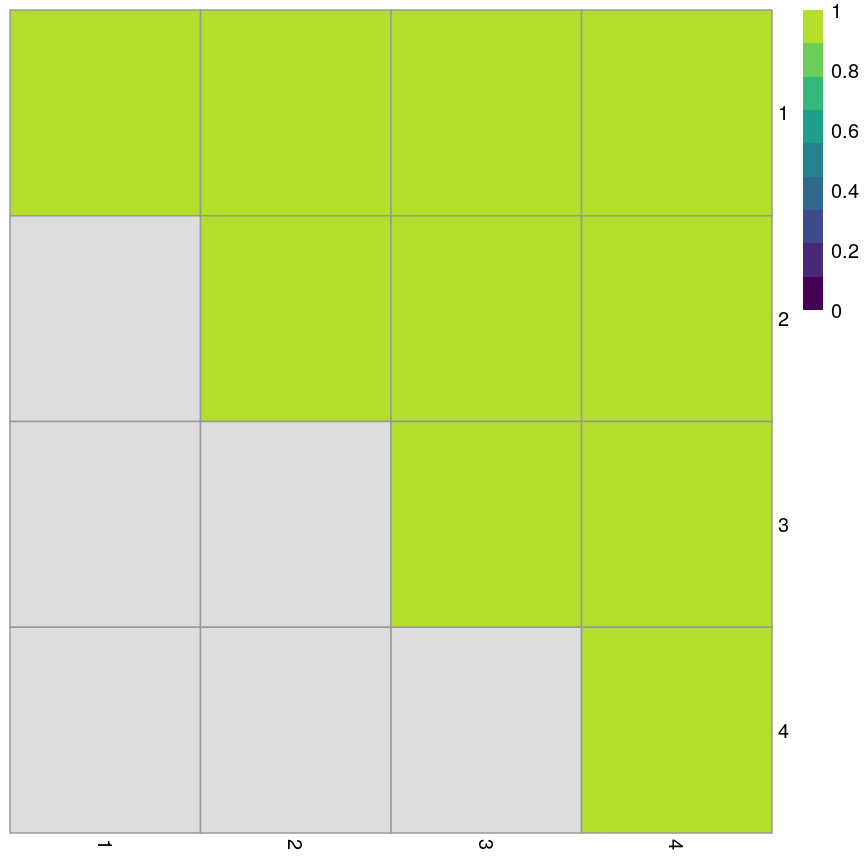

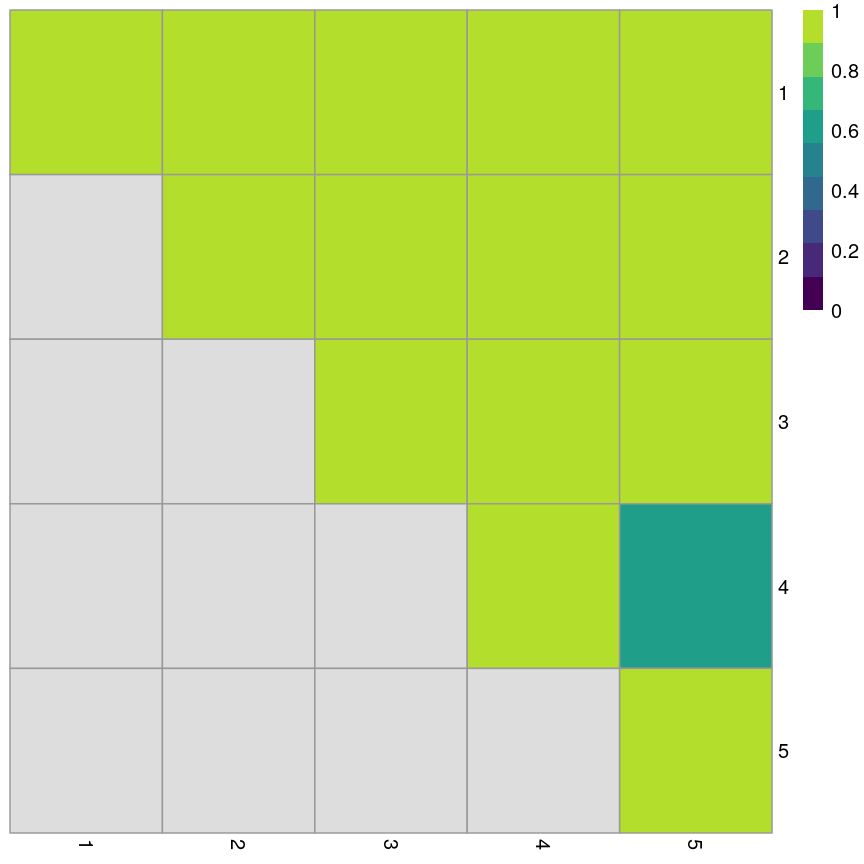

methylationdataset, we can see that many of the features represent the same information - there are many off-diagonal cells, which are deep red or blue. For example, the following heatmap visualises the correlations for the first 500 features in themethylationdataset (we selected 500 features only as it can be hard to visualise patterns when there are too many features!).library("ComplexHeatmap") small <- methyl_mat[, 1:500] cor_mat <- cor(small) Heatmap(cor_mat, column_title = "Feature-feature correlation in methylation data", name = "Pearson correlation", show_row_dend = FALSE, show_column_dend = FALSE, show_row_names = FALSE, show_column_names = FALSE )

Heatmap of pairwise feature-feature correlations between CpG sites in DNA methylation data

Challenge 1

Consider or discuss in groups:

- Why would we observe correlated features in high-dimensional biological data?

- Why might correlated features be a problem when fitting linear models?

- What issue might correlated features present when selecting features to include in a model one at a time?

Solution

- Many of the features in biological data represent very similar information biologically. For example, sets of genes that form complexes are often expressed in very similar quantities. Similarly, methylation levels at nearby sites are often very highly correlated.

- Correlated features can make inference unstable or even impossible mathematically.

- When we are selecting features one at a time we want to pick the most predictive feature each time. When a lot of features are very similar but encode slightly different information, which of the correlated features we select to include can have a huge impact on the later stages of model selection!
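The instability mentioned in the second answer can be illustrated with a small simulation. This is a sketch under assumed settings: the data are simulated, `x2` is constructed to be almost a copy of `x1`, and all object names are hypothetical.

```r
set.seed(1)
n <- 50

# Two almost identical (highly correlated) features
x1 <- rnorm(n)
x2 <- x1 + rnorm(n, sd = 0.01)

# The outcome depends on x1 only
y <- 2 * x1 + rnorm(n)

fit_single <- lm(y ~ x1)
fit_both <- lm(y ~ x1 + x2)

cor(x1, x2)

# Standard error of the x1 coefficient with and without its near-duplicate
se_single <- coef(summary(fit_single))["x1", "Std. Error"]
se_both <- coef(summary(fit_both))["x1", "Std. Error"]
c(single = se_single, both = se_both)
```

Including the near-duplicate feature inflates the standard error of the `x1` coefficient considerably, because the model has very little information with which to separate the two effects.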

Regularisation can help us to deal with correlated features, as well as effectively reduce the number of features in our model. Before we describe regularisation, let’s recap what’s going on when we fit a linear model.

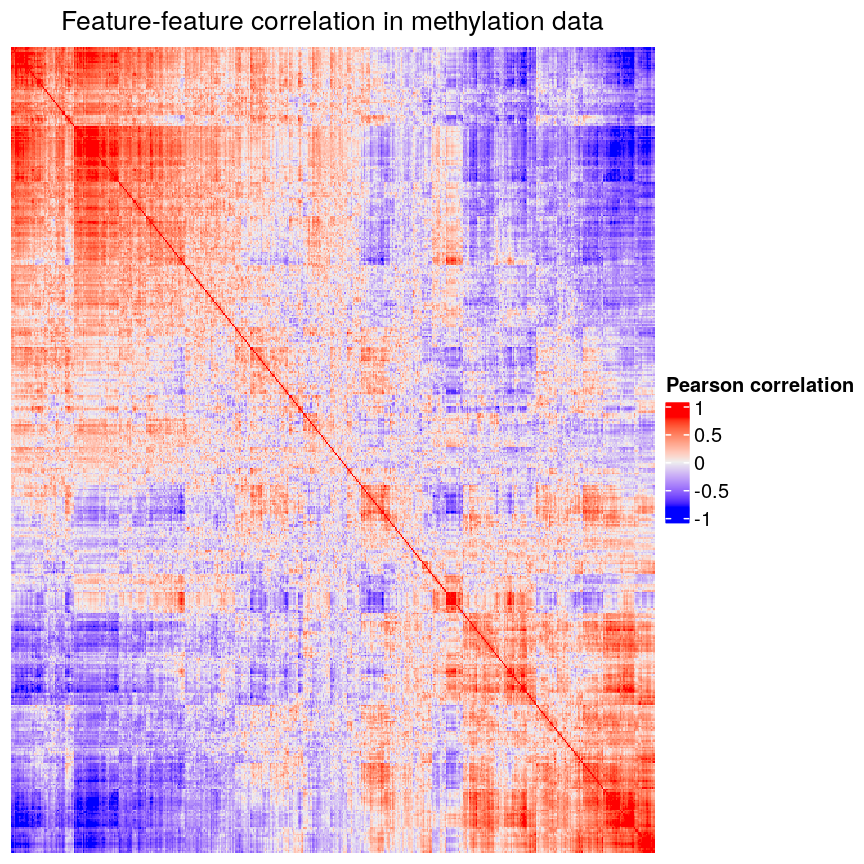

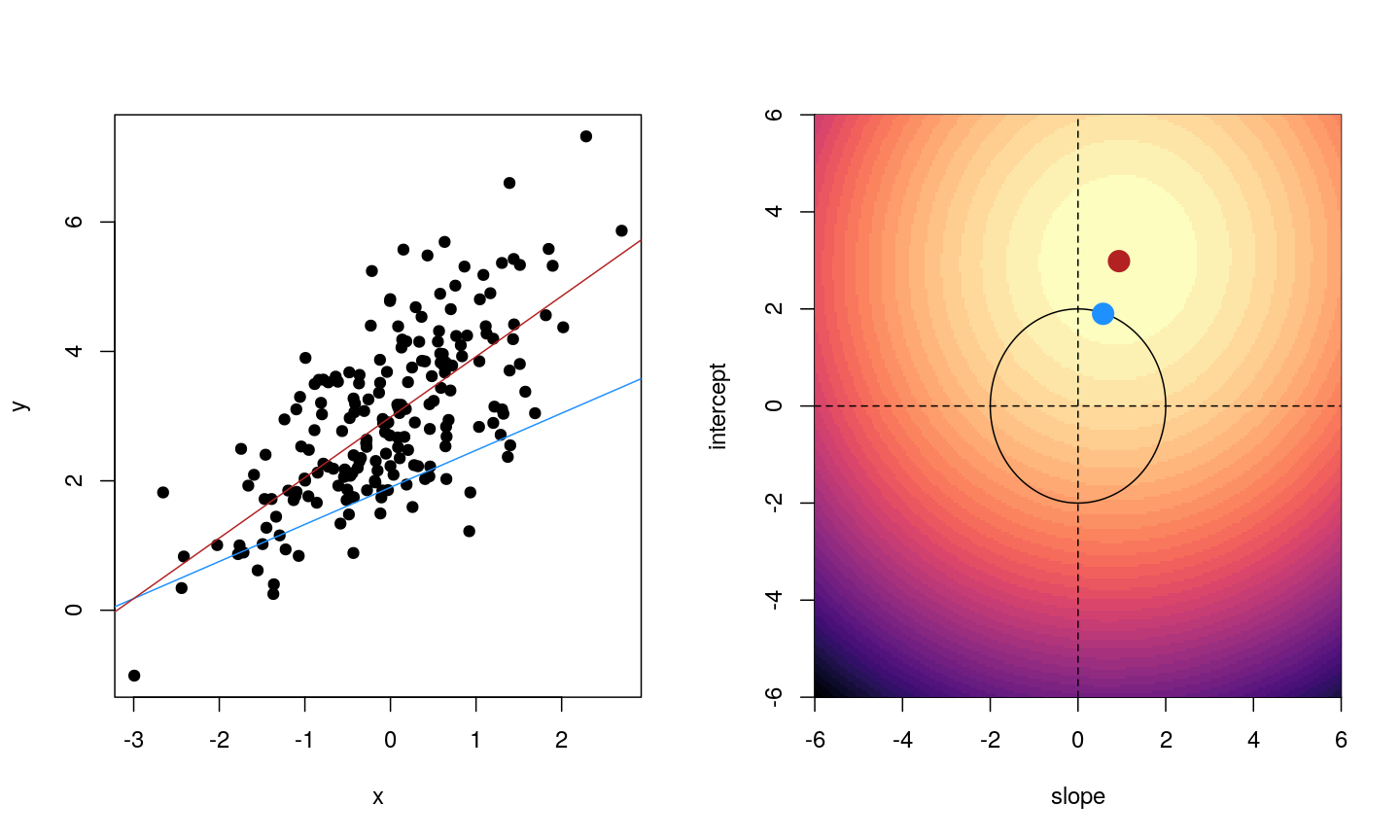

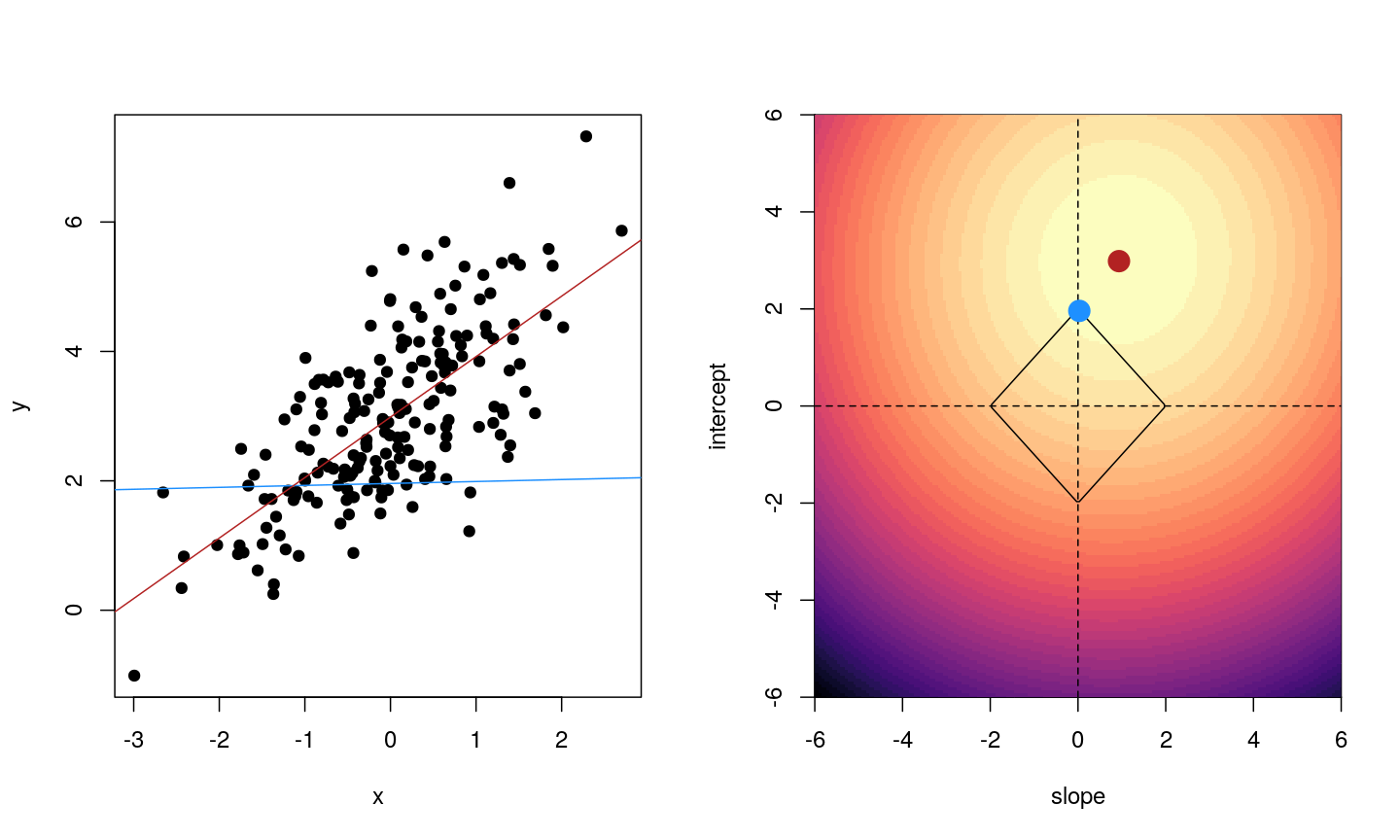

Coefficient estimates of a linear model

When we fit a linear model, we’re finding the line through our data that minimises the sum of the squared residuals. We can think of that as finding the slope and intercept that minimises the square of the length of the dashed lines. In this case, the red line in the left panel is the line that accomplishes this objective, and the red dot in the right panel is the point that represents this line in terms of its slope and intercept among many different possible models, where the background colour represents how well different combinations of slope and intercept accomplish this objective.

Illustrative example demonstrating how regression coefficients are inferred under a linear model framework.

Mathematically, we can write the sum of squared residuals as

\[\sum_{i=1}^N ( y_i-x'_i\beta)^2\]

where $\beta$ is a vector of (unknown) covariate effects which we want to learn by fitting a regression model: the $j$-th element of $\beta$, which we denote as $\beta_j$, quantifies the effect of the $j$-th covariate. For each individual $i$, $x_i$ is a vector of covariate values (one for each covariate $j$) and $y_i$ is the true observed value for the outcome. The notation $x'_i\beta$ indicates matrix multiplication. In this case, the result is equivalent to multiplying each element of $x_i$ by its corresponding element in $\beta$ and then calculating the sum across all of those values. The result of this product (often denoted by $\hat{y}_i$) is the predicted value of the outcome generated by the model. As such, $y_i-x'_i\beta$ can be interpreted as the prediction error, also referred to as model residual. To quantify the total error across all individuals, we sum the squared residuals $( y_i-x'_i\beta)^2$ across all the individuals in our data.
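To make the notation concrete, the sketch below computes the sum of squared residuals directly for a small simulated dataset. The data and the names `X`, `rss`, `fit_toy` and `beta_hat` are hypothetical. It evaluates the sum at the coefficients returned by lm() and at a slightly different slope.

```r
set.seed(10)
n <- 20
x <- rnorm(n)
y <- 1 + 3 * x + rnorm(n)

# Each row of X is x_i: a 1 for the intercept, then the covariate value
X <- cbind(1, x)

# Sum of squared residuals for a given coefficient vector beta
rss <- function(beta) sum((y - X %*% beta)^2)

fit_toy <- lm(y ~ x)
beta_hat <- coef(fit_toy)

# The value at the least squares estimate matches the residuals from lm()
rss(beta_hat)
sum(resid(fit_toy)^2)

# Moving the slope away from the estimate increases the sum
rss(beta_hat + c(0, 0.5))
```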

The value of $\beta$ that minimises the sum above defines the line of best fit through our data, if our goal is to minimise the sum of squared errors. However, it is not the only possible line we could use! For example, we might want to err on the side of caution when estimating effect sizes (coefficients). That is, we might want to avoid estimating very large effect sizes.

Challenge 2

Discuss in groups:

- What are we minimising when we fit a linear model?

- Why are we minimising this objective? What assumptions are we making about our data when we do so?

Solution

- When we fit a linear model we are minimising the squared error. In fact, the standard linear model estimator is often known as “ordinary least squares”. The “ordinary” really means “original” here, to distinguish between this method, which dates back to ~1800, and some more “recent” (think 1940s…) methods.

- Squared error is useful because it ignores the sign of the residuals (whether they are positive or negative). It also penalises large errors much more than small errors. On top of all this, it also makes the solution very easy to compute mathematically. Least squares assumes that, when we account for the change in the mean of the outcome based on changes in the predictors, the data are normally distributed. That is, the residuals of the model, or the error left over after we account for any linear relationships in the data, are normally distributed, and have a fixed variance.

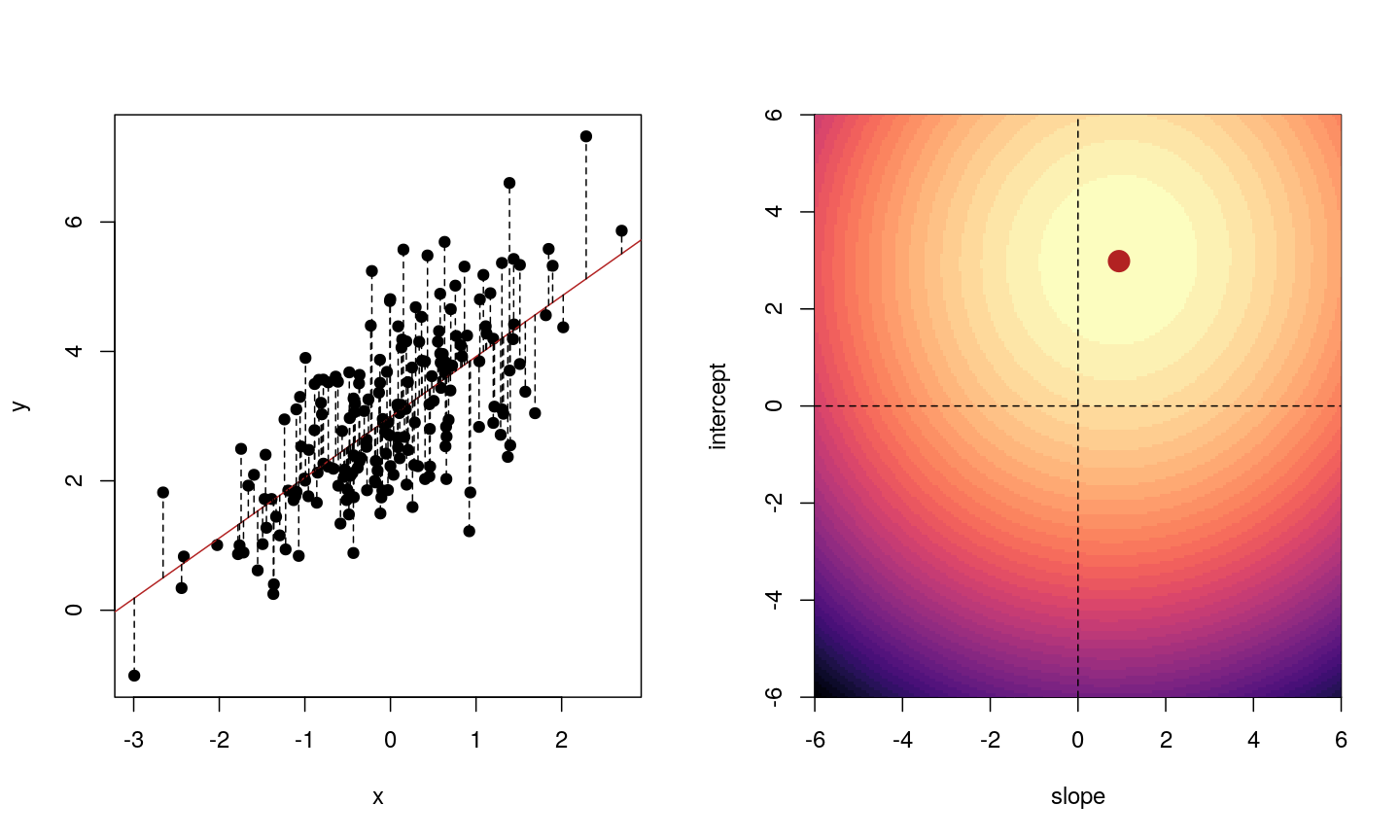

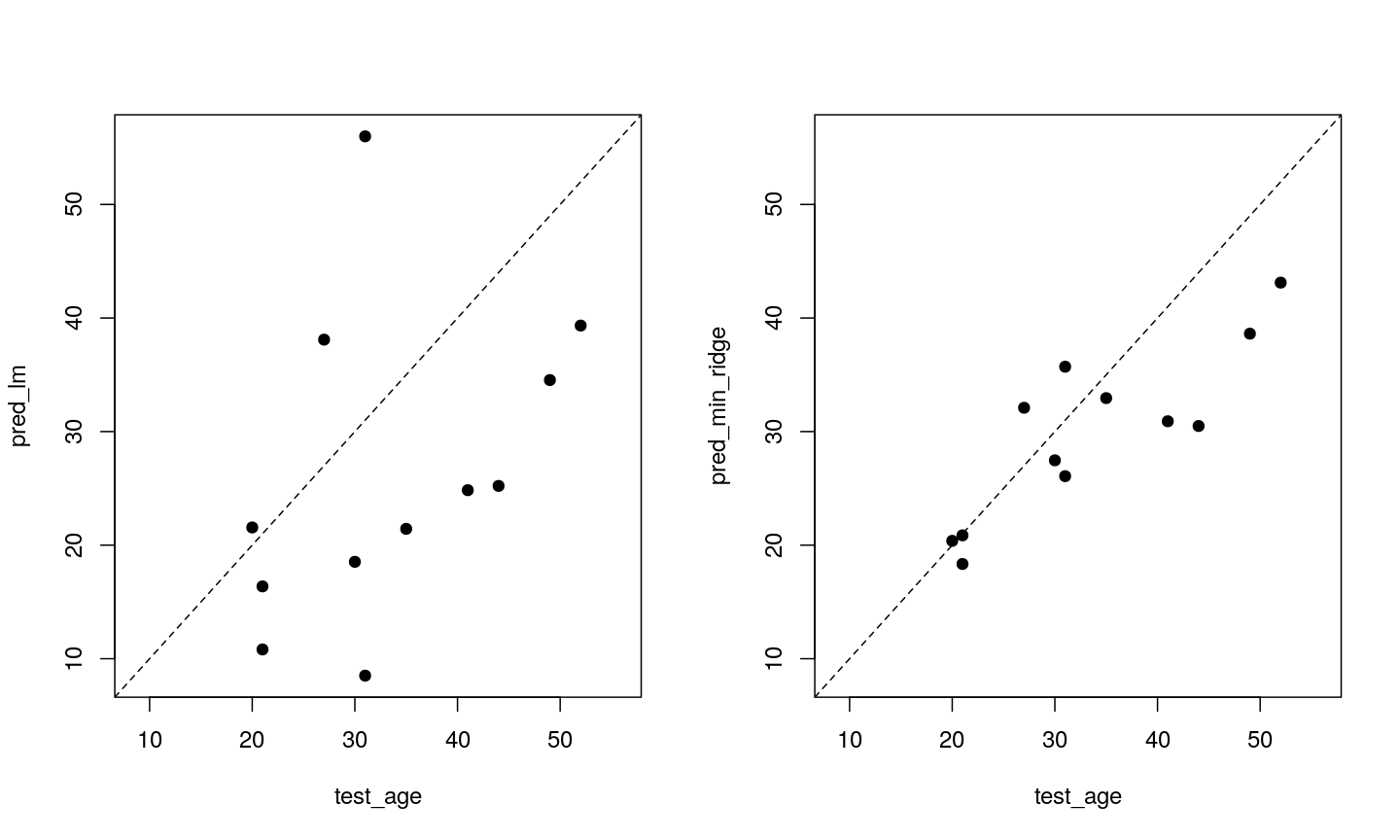

Model selection using training and test sets

Sets of models are often compared using statistics such as adjusted $R^2$, AIC or BIC. These show us how well the model is learning the data used in fitting that same model 1. However, these statistics do not really tell us how well the model will generalise to new data. This is an important thing to consider – if our model doesn’t generalise to new data, then there’s a chance that it’s just picking up on a technical or batch effect in our data, or simply some noise that happens to fit the outcome we’re modelling. This is especially important when our goal is prediction – it’s not much good if we can only predict well for samples where the outcome is already known, after all!

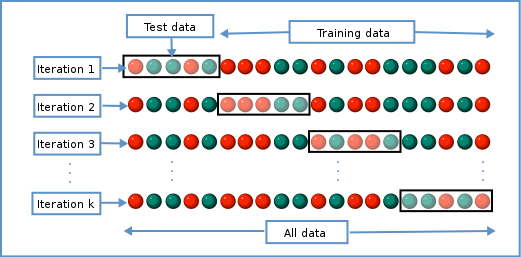

To get an idea of how well our model generalises, we can split the data into two - a “training” and a “test” set. We use the “training” data to fit the model, and then see its performance on the “test” data.

Schematic representation of how a dataset can be divided into a training and a test set.
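A minimal sketch of such a split is shown below, using simulated data rather than the methylation dataset. The 75%/25% split and all object names (`toy`, `train_idx`, `fit_train`, and so on) are assumptions made for illustration.

```r
set.seed(123)
n <- 100

# Simulated toy data with one feature and one outcome
toy <- data.frame(x = rnorm(n))
toy$y <- 1 + 2 * toy$x + rnorm(n)

# Randomly assign 75 observations to the training set, the rest to the test set
train_idx <- sample(seq_len(n), size = 75)
train <- toy[train_idx, ]
test <- toy[-train_idx, ]

# Fit the model on the training data only
fit_train <- lm(y ~ x, data = train)

# Mean squared error on the data used for fitting...
mse_train <- mean(resid(fit_train)^2)

# ...and on data the model has not seen
pred_test <- predict(fit_train, newdata = test)
mse_test <- mean((test$y - pred_test)^2)

c(train = mse_train, test = mse_test)
```

The test error is the more honest estimate of how the model would perform on new samples, because none of the test observations were used to estimate the coefficients.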