Content from Data organisation with spreadsheets

Last updated on 2026-01-27 | Edit this page

Overview

Questions

- How to organise tabular data?

Objectives

- Learn about spreadsheets, their strengths and weaknesses.

- How do we format data in spreadsheets for effective data use?

- Learn about common spreadsheet errors and how to correct them.

- Organise your data according to tidy data principles.

- Learn about text-based spreadsheet formats such as the comma-separated (CSV) or tab-separated (TSV) formats.

This episode is based on the Data Carpentries’s Data Analysis and Visualisation in R for Ecologists lesson.

Spreadsheet programs

Question

- What are basic principles for using spreadsheets for good data organization?

Objective

- Describe best practices for organizing data so computers can make the best use of datasets.

Keypoint

- Good data organization is the foundation of any research project.

Good data organization is the foundation of your research project. Most researchers have data or do data entry in spreadsheets. Spreadsheet programs are very useful graphical interfaces for designing data tables and handling very basic data quality control functions. See also @Broman:2018.

Spreadsheet outline

Spreadsheets are good for data entry. Therefore we have a lot of data in spreadsheets. Much of your time as a researcher will be spent in this ‘data wrangling’ stage. It’s not the most fun, but it’s necessary. We’ll teach you how to think about data organization and some practices for more effective data wrangling.

What this lesson will not teach you

- How to do statistics in a spreadsheet

- How to do plotting in a spreadsheet

- How to write code in spreadsheet programs

If you’re looking to do this, a good reference is Head First Excel, published by O’Reilly.

Why aren’t we teaching data analysis in spreadsheets

Data analysis in spreadsheets usually requires a lot of manual work. If you want to change a parameter or run an analysis with a new dataset, you usually have to redo everything by hand. (We do know that you can create macros, but see the next point.)

It is also difficult to track or reproduce statistical or plotting analyses done in spreadsheet programs when you want to go back to your work or someone asks for details of your analysis.

Many spreadsheet programs are available. Since most participants utilise Excel as their primary spreadsheet program, this lesson will make use of Excel examples. A free spreadsheet program that can also be used is LibreOffice. Commands may differ a bit between programs, but the general idea is the same.

Spreadsheet programs encompass a lot of the things we need to be able to do as researchers. We can use them for:

- Data entry

- Organizing data

- Subsetting and sorting data

- Statistics

- Plotting

Spreadsheet programs use tables to represent and display data. Data formatted as tables is also the main theme of this chapter, and we will see how to organise data into tables in a standardised way to ensure efficient downstream analysis.

Challenge: Discuss the following points with your neighbour

- Have you used spreadsheets, in your research, courses, or at home?

- What kind of operations do you do in spreadsheets?

- Which ones do you think spreadsheets are good for?

- Have you accidentally done something in a spreadsheet program that made you frustrated or sad?

Problems with spreadsheets

Spreadsheets are good for data entry, but in reality we tend to use spreadsheet programs for much more than data entry. We use them to create data tables for publications, to generate summary statistics, and make figures.

Generating tables for publications in a spreadsheet is not optimal - often, when formatting a data table for publication, we’re reporting key summary statistics in a way that is not really meant to be read as data, and often involves special formatting (merging cells, creating borders, making it pretty). We advise you to do this sort of operation within your document editing software.

The latter two applications, generating statistics and figures, should be used with caution: because of the graphical, drag and drop nature of spreadsheet programs, it can be very difficult, if not impossible, to replicate your steps (much less retrace anyone else’s), particularly if your stats or figures require you to do more complex calculations. Furthermore, in doing calculations in a spreadsheet, it’s easy to accidentally apply a slightly different formula to multiple adjacent cells. When using a command-line based statistics program like R or SAS, it’s practically impossible to apply a calculation to one observation in your dataset but not another unless you’re doing it on purpose.

Using spreadsheets for data entry and cleaning

In this lesson, we will assume that you are most likely using Excel as your primary spreadsheet program - there are others (gnumeric, Calc from OpenOffice), and their functionality is similar, but Excel seems to be the program most used by biologists and biomedical researchers.

In this lesson we’re going to talk about:

- Formatting data tables in spreadsheets

- Formatting problems

- Exporting data

Formatting data tables in spreadsheets

Questions

- How do we format data in spreadsheets for effective data use?

Objectives

Describe best practices for data entry and formatting in spreadsheets.

Apply best practices to arrange variables and observations in a spreadsheet.

Keypoints

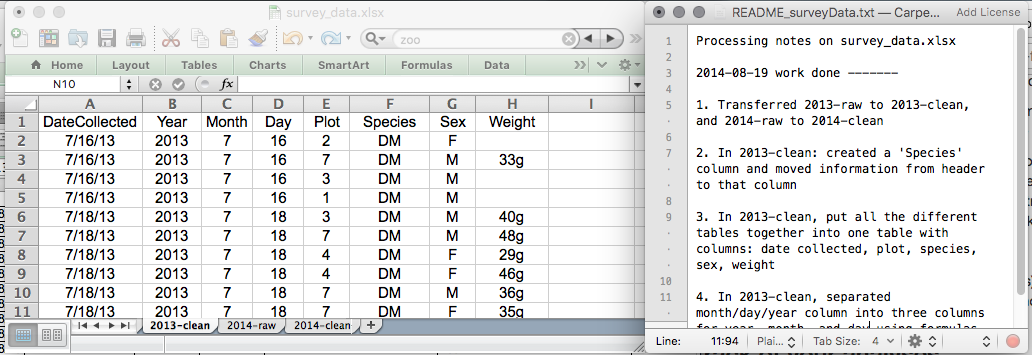

Never modify your raw data. Always make a copy before making any changes.

Keep track of all of the steps you take to clean your data in a plain text file.

Organise your data according to tidy data principles.

The most common mistake made is treating spreadsheet programs like lab notebooks, that is, relying on context, notes in the margin, spatial layout of data and fields to convey information. As humans, we can (usually) interpret these things, but computers don’t view information the same way, and unless we explain to the computer what every single thing means (and that can be hard!), it will not be able to see how our data fits together.

Using the power of computers, we can manage and analyse data in much more effective and faster ways, but to use that power, we have to set up our data for the computer to be able to understand it (and computers are very literal).

This is why it’s extremely important to set up well-formatted tables from the outset - before you even start entering data from your very first preliminary experiment. Data organization is the foundation of your research project. It can make it easier or harder to work with your data throughout your analysis, so it’s worth thinking about when you’re doing your data entry or setting up your experiment. You can set things up in different ways in spreadsheets, but some of these choices can limit your ability to work with the data in other programs or have the you-of-6-months-from-now or your collaborator work with the data.

Note: the best layouts/formats (as well as software and interfaces) for data entry and data analysis might be different. It is important to take this into account, and ideally automate the conversion from one to another.

Keeping track of your analyses

When you’re working with spreadsheets, during data clean up or analyses, it’s very easy to end up with a spreadsheet that looks very different from the one you started with. In order to be able to reproduce your analyses or figure out what you did when a reviewer or instructor asks for a different analysis, you should

create a new file with your cleaned or analysed data. Don’t modify the original dataset, or you will never know where you started!

keep track of the steps you took in your clean up or analysis. You should track these steps as you would any step in an experiment. We recommend that you do this in a plain text file stored in the same folder as the data file.

This might be an example of a spreadsheet setup:

Put these principles into practice today during your exercises.

While versioning is out of scope for this course, you can look at the Carpentries lesson on ‘Git’ to learn how to maintain version control over your data. See also this blog post for a quick tutorial or @Perez-Riverol:2016 for a more research-oriented use-case.

Structuring data in spreadsheets

The cardinal rules of using spreadsheet programs for data:

- Put all your variables in columns - the thing you’re measuring, like ‘weight’ or ‘temperature’.

- Put each observation in its own row.

- Don’t combine multiple pieces of information in one cell. Sometimes it just seems like one thing, but think if that’s the only way you’ll want to be able to use or sort that data.

- Leave the raw data raw - don’t change it!

- Export the cleaned data to a text-based format like CSV (comma-separated values) format. This ensures that anyone can use the data, and is required by most data repositories.

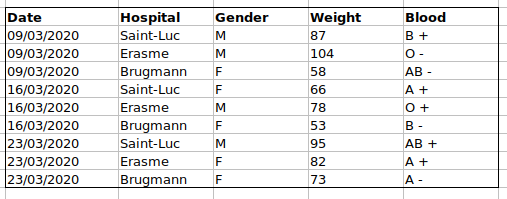

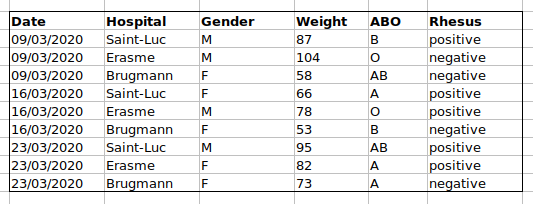

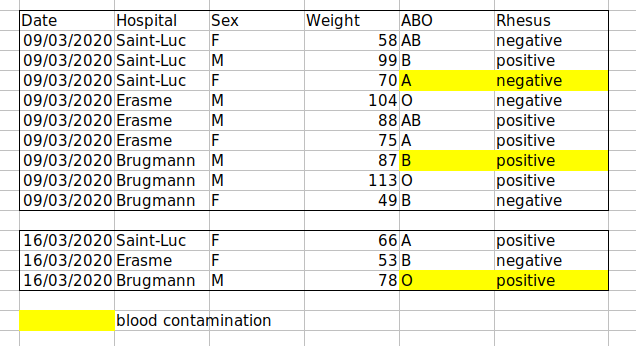

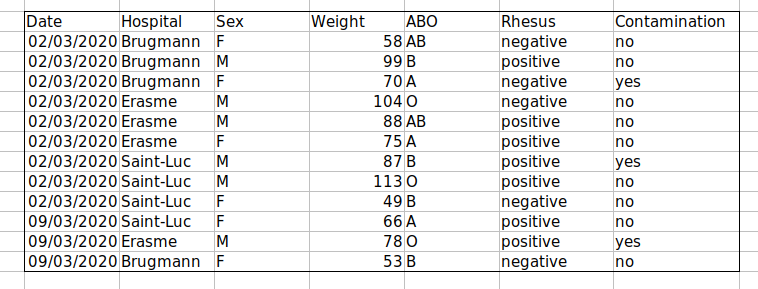

For instance, we have data from patients that visited several hospitals in Brussels, Belgium. They recorded the date of the visit, the hospital, the patients’ gender, weight and blood group.

If we were to keep track of the data like this:

the problem is that the ABO and Rhesus groups are in the same

Blood type column. So, if they wanted to look at all

observations of the A group or look at weight distributions by ABO

group, it would be tricky to do this using this data setup. If instead

we put the ABO and Rhesus groups in different columns, you can see that

it would be much easier.

An important rule when setting up a datasheet, is that columns are used for variables and rows are used for observations:

- columns are variables

- rows are observations

- cells are individual values

Challenge: We’re going to take a messy dataset and describe how we would clean it up.

Download a messy dataset by clicking this link.

Open up the data in a spreadsheet program.

You can see that there are two tabs. The data contains various clinical variables recorded in various hospitals in Brussels during the first and second COVID-19 waves in 2020. As you can see, the data have been recorded differently during the March and November waves. Now you’re the person in charge of this project and you want to be able to start analyzing the data.

With the person next to you, identify what is wrong with this spreadsheet. Also discuss the steps you would need to take to clean up first and second wave tabs, and to put them all together in one spreadsheet.

Important: Do not forget our first piece of advice: to create a new file (or tab) for the cleaned data, never modify your original (raw) data.

After you go through this exercise, we’ll discuss as a group what was wrong with this data and how you would fix it.

Challenge: Once you have tidied up the data, answer the following questions:

- How many men and women took part in the study?

- How many A, AB, and B types have been tested?

- As above, but disregarding the contaminated samples?

- How many Rhesus + and - have been tested?

- How many universal donors (O-) have been tested?

- What is the average weight of AB men?

- How many samples have been tested in the different hospitals?

An excellent reference, in particular with regard to R scripting is the Tidy Data paper @Wickham:2014.

Common spreadsheet errors

Questions

- What are some common challenges with formatting data in spreadsheets and how can we avoid them?

Objectives

- Recognise and resolve common spreadsheet formatting problems.

Keypoints

- Avoid using multiple tables within one spreadsheet.

- Avoid spreading data across multiple tabs.

- Record zeros as zeros.

- Use an appropriate null value to record missing data.

- Don’t use formatting to convey information or to make your spreadsheet look pretty.

- Place comments in a separate column.

- Record units in column headers.

- Include only one piece of information in a cell.

- Avoid spaces, numbers and special characters in column headers.

- Avoid special characters in your data.

- Record metadata in a separate plain text file.

There are a few potential errors to be on the lookout for in your own data as well as data from collaborators or the Internet. If you are aware of the errors and the possible negative effect on downstream data analysis and result interpretation, it might motivate yourself and your project members to try and avoid them. Making small changes to the way you format your data in spreadsheets, can have a great impact on efficiency and reliability when it comes to data cleaning and analysis.

- Using multiple tables

- Using multiple tabs

- Not filling in zeros

- Using problematic null values

- Using formatting to convey information

- Using formatting to make the data sheet look pretty

- Placing comments or units in cells

- Entering more than one piece of information in a cell

- Using problematic field names

- Using special characters in data

- Inclusion of metadata in data table

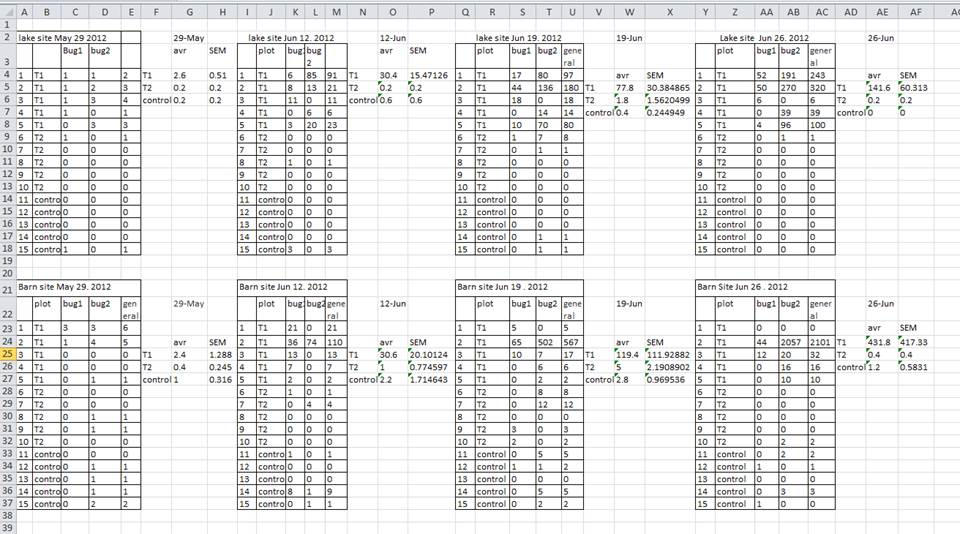

Using multiple tables

A common strategy is creating multiple data tables within one spreadsheet. This confuses the computer, so don’t do this! When you create multiple tables within one spreadsheet, you’re drawing false associations between things for the computer, which sees each row as an observation. You’re also potentially using the same field name in multiple places, which will make it harder to clean your data up into a usable form. The example below depicts the problem:

In the example above, the computer will see (for example) row 4 and assume that all columns A-AF refer to the same sample. This row actually represents four distinct samples (sample 1 for each of four different collection dates - May 29th, June 12th, June 19th, and June 26th), as well as some calculated summary statistics (an average (avr) and standard error of measurement (SEM)) for two of those samples. Other rows are similarly problematic.

Using multiple tabs

But what about workbook tabs? That seems like an easy way to organise data, right? Well, yes and no. When you create extra tabs, you fail to allow the computer to see connections in the data that are there (you have to introduce spreadsheet application-specific functions or scripting to ensure this connection). Say, for instance, you make a separate tab for each day you take a measurement.

This isn’t good practice for two reasons:

you are more likely to accidentally add inconsistencies to your data if each time you take a measurement, you start recording data in a new tab, and

even if you manage to prevent all inconsistencies from creeping in, you will add an extra step for yourself before you analyse the data because you will have to combine these data into a single datatable. You will have to explicitly tell the computer how to combine tabs - and if the tabs are inconsistently formatted, you might even have to do it manually.

The next time you’re entering data, and you go to create another tab or table, ask yourself if you could avoid adding this tab by adding another column to your original spreadsheet. We used multiple tabs in our example of a messy data file, but now you’ve seen how you can reorganise your data to consolidate across tabs.

Your data sheet might get very long over the course of the experiment. This makes it harder to enter data if you can’t see your headers at the top of the spreadsheet. But don’t repeat your header row. These can easily get mixed into the data, leading to problems down the road. Instead you can freeze the column headers so that they remain visible even when you have a spreadsheet with many rows.

Not filling in zeros

It might be that when you’re measuring something, it’s usually a zero, say the number of times a rabbit is observed in the survey. Why bother writing in the number zero in that column, when it’s mostly zeros?

However, there’s a difference between a zero and a blank cell in a spreadsheet. To the computer, a zero is actually data. You measured or counted it. A blank cell means that it wasn’t measured and the computer will interpret it as an unknown value (also known as a null or missing value).

The spreadsheets or statistical programs will likely misinterpret blank cells that you intend to be zeros. By not entering the value of your observation, you are telling your computer to represent that data as unknown or missing (null). This can cause problems with subsequent calculations or analyses. For example, the average of a set of numbers which includes a single null value is always null (because the computer can’t guess the value of the missing observations). Because of this, it’s very important to record zeros as zeros and truly missing data as nulls.

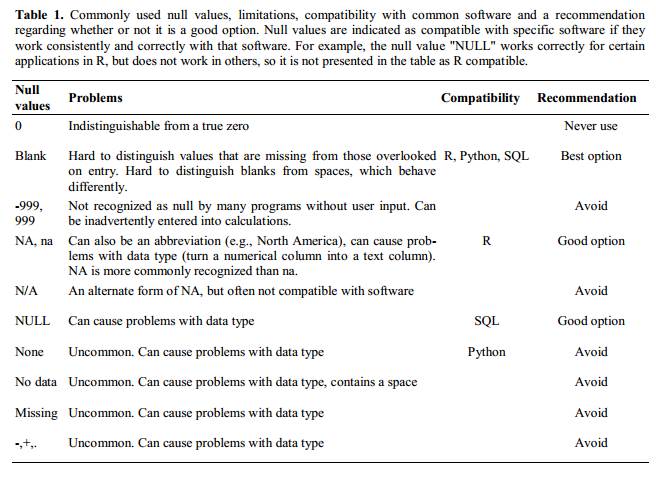

Using problematic null values

Example: using -999 or other numerical values (or zero) to represent missing data.

Solutions:

There are a few reasons why null values get represented differently within a dataset. Sometimes confusing null values are automatically recorded from the measuring device. If that’s the case, there’s not much you can do, but it can be addressed in data cleaning with a tool like OpenRefine before analysis. Other times different null values are used to convey different reasons why the data isn’t there. This is important information to capture, but is in effect using one column to capture two pieces of information. Like for using formatting to convey information it would be good here to create a new column like ‘data_missing’ and use that column to capture the different reasons.

Whatever the reason, it’s a problem if unknown or missing data is recorded as -999, 999, or 0.

Many statistical programs will not recognise that these are intended to represent missing (null) values. How these values are interpreted will depend on the software you use to analyse your data. It is essential to use a clearly defined and consistent null indicator.

Blanks (most applications) and NA (for R) are good choices. @White:2013 explain good choices for indicating null values for different software applications in their article:

Using formatting to convey information

Example: highlighting cells, rows or columns that should be excluded from an analysis, leaving blank rows to indicate separations in data.

Solution: create a new field to encode which data should be excluded.

Using formatting to make the data sheet look pretty

Example: merging cells.

Solution: If you’re not careful, formatting a worksheet to be more aesthetically pleasing can compromise your computer’s ability to see associations in the data. Merged cells will make your data unreadable by statistics software. Consider restructuring your data in such a way that you will not need to merge cells to organise your data.

Placing comments or units in cells

Most analysis software can’t see Excel or LibreOffice comments, and would be confused by comments placed within your data cells. As described above for formatting, create another field if you need to add notes to cells. Similarly, don’t include units in cells: ideally, all the measurements you place in one column should be in the same unit, but if for some reason they aren’t, create another field and specify the units the cell is in.

Entering more than one piece of information in a cell

Example: Recording ABO and Rhesus groups in one cell, such as A+, B+, A-, …

Solution: Don’t include more than one piece of information in a cell. This will limit the ways in which you can analyse your data. If you need both these measurements, design your data sheet to include this information. For example, include one column for the ABO group and one for the Rhesus group.

Using problematic field names

Choose descriptive field names, but be careful not to include spaces, numbers, or special characters of any kind. Spaces can be misinterpreted by parsers that use whitespace as delimiters and some programs don’t like field names that are text strings that start with numbers.

Underscores (_) are a good alternative to spaces.

Consider writing names in camel case (like this: ExampleFileName) to

improve readability. Remember that abbreviations that make sense at the

moment may not be so obvious in 6 months, but don’t overdo it with names

that are excessively long. Including the units in the field names avoids

confusion and enables others to readily interpret your fields.

Examples

| Good Name | Good Alternative | Avoid |

|---|---|---|

| Max_temp_C | MaxTemp | Maximum Temp (°C) |

| Precipitation_mm | Precipitation | precmm |

| Mean_year_growth | MeanYearGrowth | Mean growth/year |

| sex | sex | M/F |

| weight | weight | w. |

| cell_type | CellType | Cell Type |

| Observation_01 | first_observation | 1st Obs |

Using special characters in data

Example: You treat your spreadsheet program as a word processor when writing notes, for example copying data directly from Word or other applications.

Solution: This is a common strategy. For example, when writing longer text in a cell, people often include line breaks, em-dashes, etc. in their spreadsheet. Also, when copying data in from applications such as Word, formatting and fancy non-standard characters (such as left- and right-aligned quotation marks) are included. When exporting this data into a coding/statistical environment or into a relational database, dangerous things may occur, such as lines being cut in half and encoding errors being thrown.

General best practice is to avoid adding characters such as newlines, tabs, and vertical tabs. In other words, treat a text cell as if it were a simple web form that can only contain text and spaces.

Inclusion of metadata in data table

Example: You add a legend at the top or bottom of your data table explaining column meaning, units, exceptions, etc.

Solution: Recording data about your data (“metadata”) is essential. You may be on intimate terms with your dataset while you are collecting and analysing it, but the chances that you will still remember that the variable “sglmemgp” means single member of group, for example, or the exact algorithm you used to transform a variable or create a derived one, after a few months, a year, or more are slim.

As well, there are many reasons other people may want to examine or use your data - to understand your findings, to verify your findings, to review your submitted publication, to replicate your results, to design a similar study, or even to archive your data for access and re-use by others. While digital data by definition are machine-readable, understanding their meaning is a job for human beings. The importance of documenting your data during the collection and analysis phase of your research cannot be overestimated, especially if your research is going to be part of the scholarly record.

However, metadata should not be contained in the data file itself. Unlike a table in a paper or a supplemental file, metadata (in the form of legends) should not be included in a data file since this information is not data, and including it can disrupt how computer programs interpret your data file. Rather, metadata should be stored as a separate file in the same directory as your data file, preferably in plain text format with a name that clearly associates it with your data file. Because metadata files are free text format, they also allow you to encode comments, units, information about how null values are encoded, etc. that are important to document but can disrupt the formatting of your data file.

Additionally, file or database level metadata describes how files that make up the dataset relate to each other; what format they are in; and whether they supercede or are superceded by previous files. A folder-level readme.txt file is the classic way of accounting for all the files and folders in a project.

(Text on metadata adapted from the online course Research Data MANTRA by EDINA and Data Library, University of Edinburgh. MANTRA is licensed under a Creative Commons Attribution 4.0 International License.)

Exporting data

Question

- How can we export data from spreadsheets in a way that is useful for downstream applications?

Objectives

- Store spreadsheet data in universal file formats.

- Export data from a spreadsheet to a CSV file.

Keypoints

Data stored in common spreadsheet formats will often not be read correctly into data analysis software, introducing errors into your data.

Exporting data from spreadsheets to formats like CSV or TSV puts it in a format that can be used consistently by most programs.

Storing the data you’re going to work with for your analyses in Excel

default file format (*.xls or *.xlsx -

depending on the Excel version) isn’t a good idea. Why?

Because it is a proprietary format, and it is possible that in the future, technology won’t exist (or will become sufficiently rare) to make it inconvenient, if not impossible, to open the file.

Other spreadsheet software may not be able to open files saved in a proprietary Excel format.

Different versions of Excel may handle data differently, leading to inconsistencies. Dates is a well-documented example of inconsistencies in data storage.

Finally, more journals and grant agencies are requiring you to deposit your data in a data repository, and most of them don’t accept Excel format. It needs to be in one of the formats discussed below.

The above points also apply to other formats such as open data formats used by LibreOffice / Open Office. These formats are not static and do not get parsed the same way by different software packages.

Storing data in a universal, open, and static format will help deal with this problem. Try tab-delimited (tab separated values or TSV) or comma-delimited (comma separated values or CSV). CSV files are plain text files where the columns are separated by commas, hence ‘comma separated values’ or CSV. The advantage of a CSV file over an Excel/SPSS/etc. file is that we can open and read a CSV file using just about any software, including plain text editors like TextEdit or NotePad. Data in a CSV file can also be easily imported into other formats and environments, such as SQLite and R. We’re not tied to a certain version of a certain expensive program when we work with CSV files, so it’s a good format to work with for maximum portability and endurance. Most spreadsheet programs can save to delimited text formats like CSV easily, although they may give you a warning during the file export.

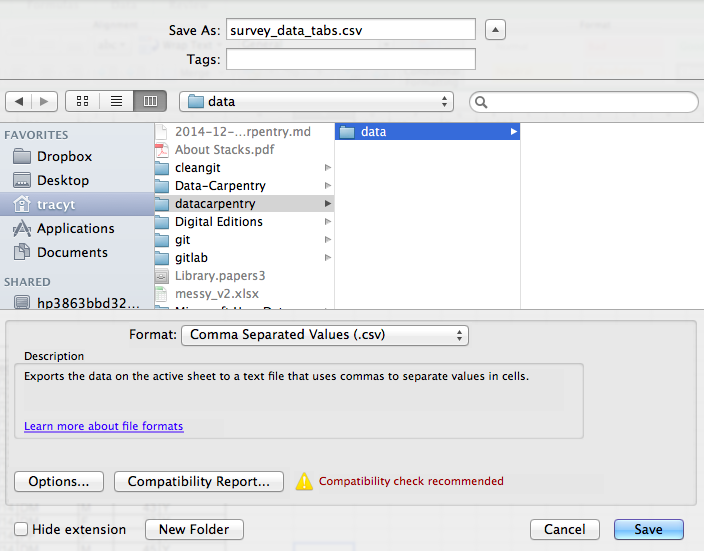

To save a file you have opened in Excel in CSV format:

- From the top menu select ‘File’ and ‘Save as’.

- In the ‘Format’ field, from the list, select ‘Comma Separated

Values’ (

*.csv). - Double check the file name and the location where you want to save it and hit ‘Save’.

An important note for backwards compatibility: you can open CSV files in Excel!

A note on R and xls: There are R

packages that can read xls files (as well as Google

spreadsheets). It is even possible to access different worksheets in the

xls documents.

But

- some of these only work on Windows.

- this equates to replacing a (simple but manual) export to

csvwith additional complexity/dependencies in the data analysis R code. - data formatting best practice still apply.

- Is there really a good reason why

csv(or similar) is not adequate?

Caveats on commas

In some datasets, the data values themselves may include commas (,). In that case, the software which you use (including Excel) will most likely incorrectly display the data in columns. This is because the commas which are a part of the data values will be interpreted as delimiters.

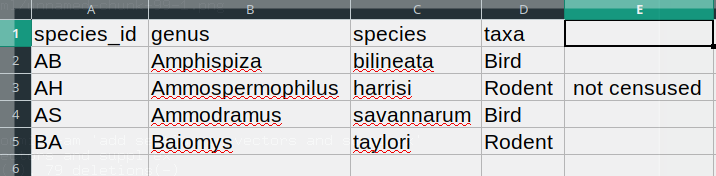

For example, our data might look like this:

species_id,genus,species,taxa

AB,Amphispiza,bilineata,Bird

AH,Ammospermophilus,harrisi,Rodent, not censused

AS,Ammodramus,savannarum,Bird

BA,Baiomys,taylori,RodentIn the record

AH,Ammospermophilus,harrisi,Rodent, not censused the value

for taxa includes a comma

(Rodent, not censused). If we try to read the above into

Excel (or other spreadsheet program), we will get something like

this:

The value for taxa was split into two columns (instead

of being put in one column D). This can propagate to a

number of further errors. For example, the extra column will be

interpreted as a column with many missing values (and without a proper

header). In addition to that, the value in column D for the

record in row 3 (so the one where the value for ‘taxa’ contained the

comma) is now incorrect.

If you want to store your data in csv format and expect

that your data values may contain commas, you can avoid the problem

discussed above by putting the values in quotes (““). Applying this

rule, our data might look like this:

species_id,genus,species,taxa

"AB","Amphispiza","bilineata","Bird"

"AH","Ammospermophilus","harrisi","Rodent, not censused"

"AS","Ammodramus","savannarum","Bird"

"BA","Baiomys","taylori","Rodent"Now opening this file as a csv in Excel will not lead to

an extra column, because Excel will only use commas that fall outside of

quotation marks as delimiting characters.

Alternatively, if you are working with data that contains commas, you likely will need to use another delimiter when working in a spreadsheet1. In this case, consider using tabs as your delimiter and working with TSV files. TSV files can be exported from spreadsheet programs in the same way as CSV files.

If you are working with an already existing dataset in which the data values are not included in “” but which have commas as both delimiters and parts of data values, you are potentially facing a major problem with data cleaning. If the dataset you’re dealing with contains hundreds or thousands of records, cleaning them up manually (by either removing commas from the data values or putting the values into quotes - ““) is not only going to take hours and hours but may potentially end up with you accidentally introducing many errors.

Cleaning up datasets is one of the major problems in many scientific disciplines. The approach almost always depends on the particular context. However, it is a good practice to clean the data in an automated fashion, for example by writing and running a script. The Python and R lessons will give you the basis for developing skills to build relevant scripts.

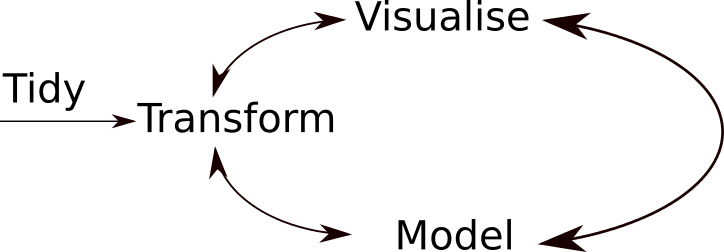

Summary

A typical data analysis workflow is illustrated in the figure above, where data is repeatedly transformed, visualised, and modelled. This iteration is repeated multiple times until the data is understood. In many real-life cases, however, most time is spent cleaning up and preparing the data, rather than actually analysing and understanding it.

An agile data analysis workflow, with several fast iterations of the transform/visualise/model cycle is only feasible if the data is formatted in a predictable way and one can reason about the data without having to look at it and/or fix it.

- Good data organization is the foundation of any research project.

This is particularly relevant in European countries where the comma is used as a decimal separator. In such cases, the default value separator in a csv file will be the semi-colon (;), or values will be systematically quoted.↩︎

Content from R and RStudio

Last updated on 2026-01-27 | Edit this page

Overview

Questions

- What are R and RStudio?

Objectives

- Describe the purpose of the RStudio Script, Console, Environment, and Plots panes.

- Organise files and directories for a set of analyses as an R project, and understand the purpose of the working directory.

- Use the built-in RStudio help interface to search for more information on R functions.

- Demonstrate how to provide sufficient information for troubleshooting with the R user community.

This episode is based on the Data Carpentries’s Data Analysis and Visualisation in R for Ecologists lesson.

What is R? What is RStudio?

The term R is used to refer to the programming language, the environment for statistical computing and the software that interprets the scripts written using it.

RStudio is currently a very popular way to not only write your R scripts but also to interact with the R software1. To function correctly, RStudio needs R and therefore both need to be installed on your computer.

The RStudio IDE Cheat Sheet provides much more information than will be covered here, but can be useful to learn keyboard shortcuts and discover new features.

Why learn R?

R does not involve lots of pointing and clicking, and that’s a good thing

The learning curve might be steeper than with other software, but with R, the results of your analysis do not rely on remembering a succession of pointing and clicking, but instead on a series of written commands, and that’s a good thing! So, if you want to redo your analysis because you collected more data, you don’t have to remember which button you clicked in which order to obtain your results; you just have to run your script again.

Working with scripts makes the steps you used in your analysis clear, and the code you write can be inspected by someone else who can give you feedback and spot mistakes.

Working with scripts forces you to have a deeper understanding of what you are doing, and facilitates your learning and comprehension of the methods you use.

R code is great for reproducibility

Reproducibility means that someone else (including your future self) can obtain the same results from the same dataset when using the same analysis code.

R integrates with other tools to generate manuscripts or reports from your code. If you collect more data, or fix a mistake in your dataset, the figures and the statistical tests in your manuscript or report are updated automatically.

An increasing number of journals and funding agencies expect analyses to be reproducible, so knowing R will give you an edge with these requirements.

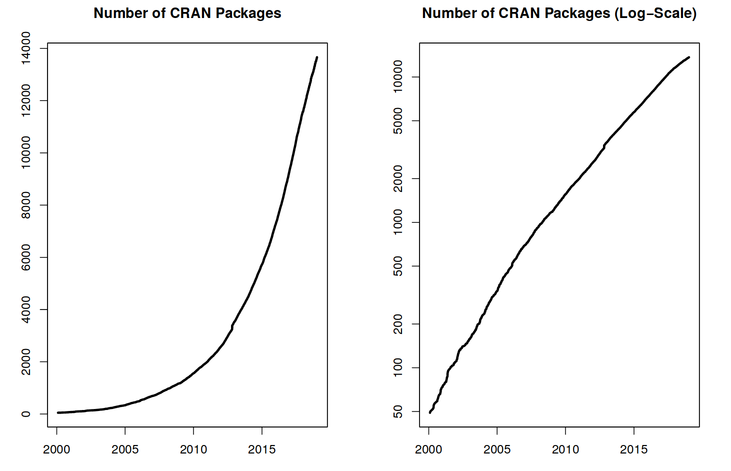

R is interdisciplinary and extensible

With 10000+ packages2 that can be installed to extend its capabilities, R provides a framework that allows you to combine statistical approaches from many scientific disciplines to best suit the analytical framework you need to analyse your data. For instance, R has packages for image analysis, GIS, time series, population genetics, and a lot more.

R works on data of all shapes and sizes

The skills you learn with R scale easily with the size of your dataset. Whether your dataset has hundreds or millions of lines, it won’t make much difference to you.

R is designed for data analysis. It comes with special data structures and data types that make handling of missing data and statistical factors convenient.

R can connect to spreadsheets, databases, and many other data formats, on your computer or on the web.

R produces high-quality graphics

The plotting functionalities in R are extensive, and allow you to adjust any aspect of your graph to convey most effectively the message from your data.

R has a large and welcoming community

Thousands of people use R daily. Many of them are willing to help you through mailing lists and websites such as Stack Overflow, or on the RStudio community. These broad user communities extend to specialised areas such as bioinformatics. One such subset of the R community is Bioconductor, a scientific project for analysis and comprehension “of data from current and emerging biological assays.” This workshop was developed by members of the Bioconductor community; for more information on Bioconductor, please see the companion workshop “The Bioconductor Project”.

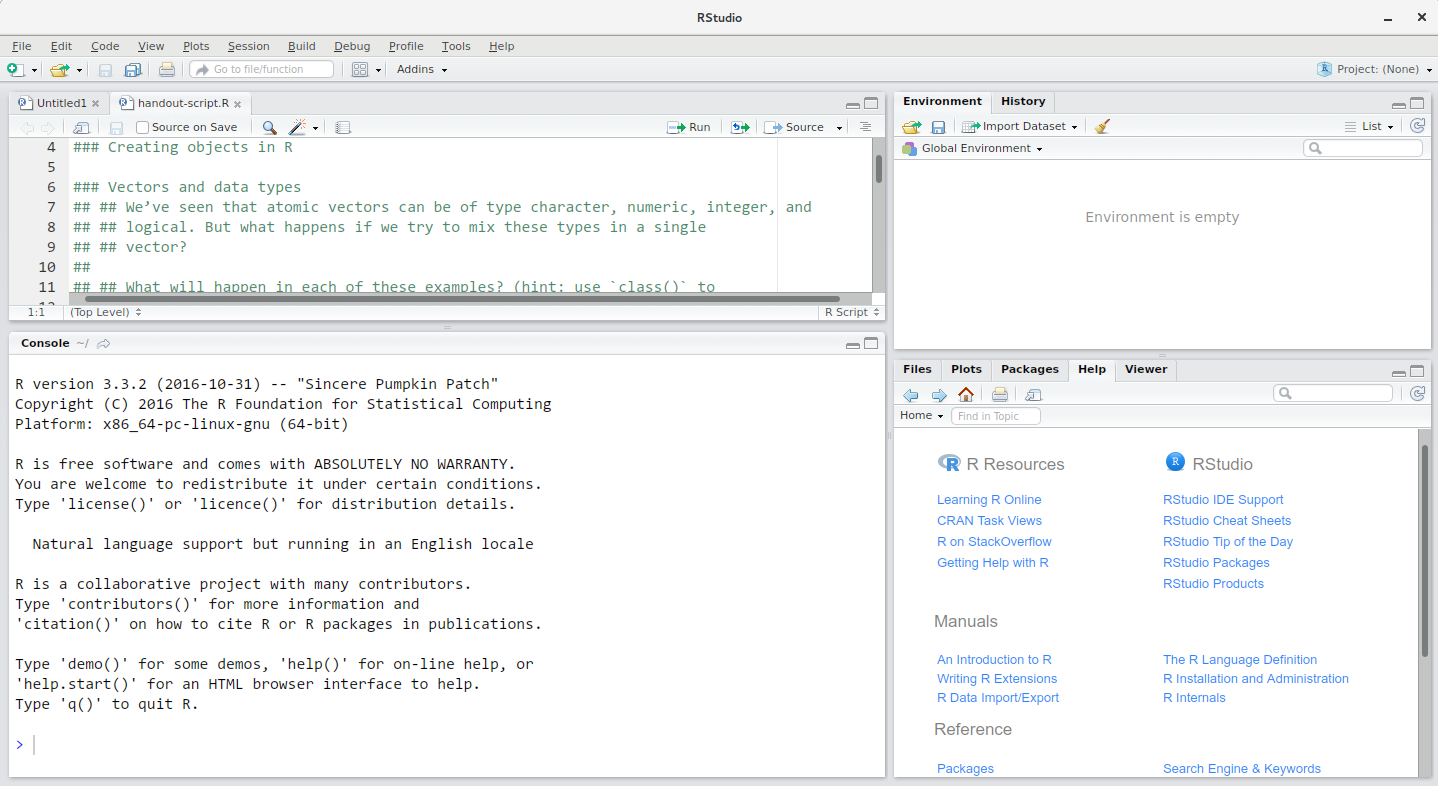

Knowing your way around RStudio

Let’s start by learning about RStudio, which is an Integrated Development Environment (IDE) for working with R.

The RStudio IDE open-source product is free under the Affero General Public License (AGPL) v3. The RStudio IDE is also available with a commercial license and priority email support from Posit, Inc.

We will use the RStudio IDE to write code, navigate the files on our computer, inspect the variables we are going to create, and visualise the plots we will generate. RStudio can also be used for other things (e.g., version control, developing packages, writing Shiny apps) that we will not cover during the workshop.

The RStudio window is divided into 4 “Panes”:

- the Source for your scripts and documents (top-left, in the default layout)

- your Environment/History (top-right),

- your Files/Plots/Packages/Help/Viewer (bottom-right), and

- the R Console (bottom-left).

The placement of these panes and their content can be customised (see

menu, Tools -> Global Options -> Pane Layout).

One of the advantages of using RStudio is that all the information you need to write code is available in a single window. Additionally, with many shortcuts, autocompletion, and highlighting for the major file types you use while developing in R, RStudio will make typing easier and less error-prone.

Getting set up

It is good practice to keep a set of related data, analyses, and text self-contained in a single folder, called the working directory. All of the scripts within this folder can then use relative paths to files that indicate where inside the project a file is located (as opposed to absolute paths, which point to where a file is on a specific computer). Working this way makes it a lot easier to move your project around on your computer and share it with others without worrying about whether or not the underlying scripts will still work.

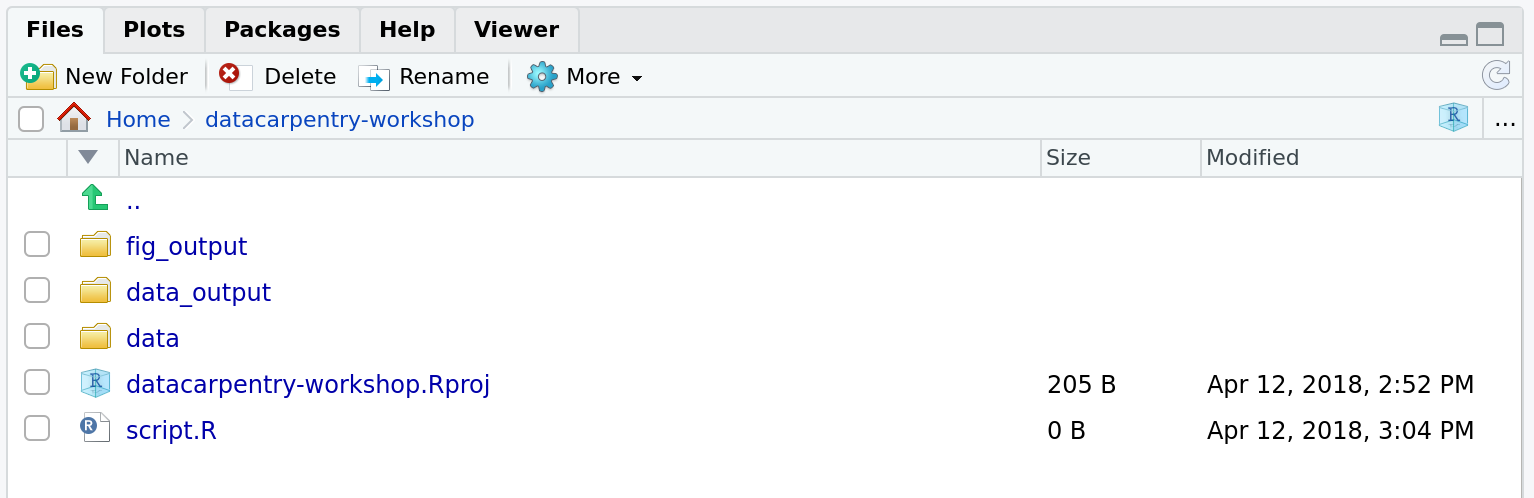

RStudio provides a helpful set of tools to do this through its “Projects” interface, which not only creates a working directory for you, but also remembers its location (allowing you to quickly navigate to it) and optionally preserves custom settings and open files to make it easier to resume work after a break. Go through the steps for creating an “R Project” for this tutorial below.

- Start RStudio.

- Under the

Filemenu, click onNew project. ChooseNew directory, thenNew project. - Enter a name for this new folder (or “directory”), and choose a

convenient location for it. This will be your working

directory for this session (or whole course) (e.g.,

bioc-intro). - Click on

Create project. - (Optional) Set Preferences to ‘Never’ save workspace in RStudio.

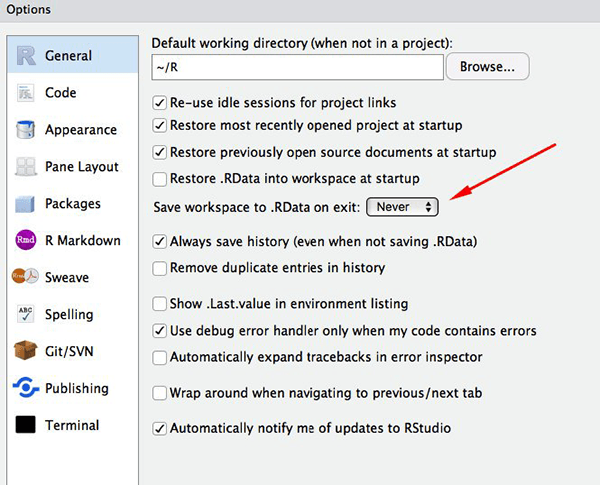

RStudio’s default preferences generally work well, but saving a workspace to .RData can be cumbersome, especially if you are working with larger datasets. To turn that off, go to Tools –> ‘Global Options’ and select the ‘Never’ option for ‘Save workspace to .RData’ on exit.

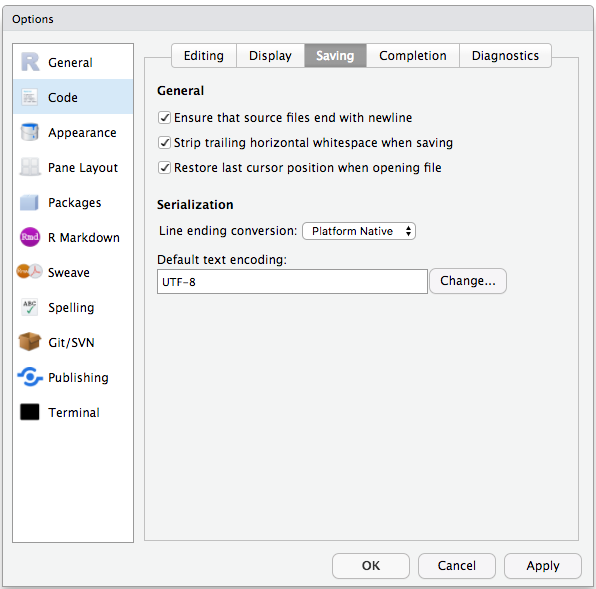

To avoid character encoding issues between Windows and other operating systems, we are going to set UTF-8 by default:

Organizing your working directory

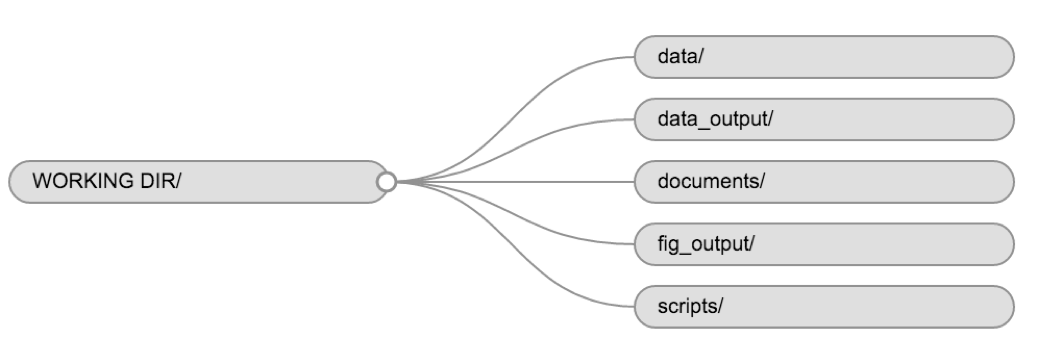

Using a consistent folder structure across your projects will help keep things organised, and will also make it easy to find/file things in the future. This can be especially helpful when you have multiple projects. In general, you may create directories (folders) for scripts, data, and documents.

-

data/Use this folder to store your raw data and intermediate datasets you may create for the need of a particular analysis. For the sake of transparency and provenance, you should always keep a copy of your raw data accessible and do as much of your data cleanup and preprocessing programmatically (i.e., with scripts, rather than manually) as possible. Separating raw data from processed data is also a good idea. For example, you could have filesdata/raw/tree_survey.plot1.txtand...plot2.txtkept separate from adata/processed/tree.survey.csvfile generated by thescripts/01.preprocess.tree_survey.Rscript. -

documents/This would be a place to keep outlines, drafts, and other text. -

scripts/(orsrc) This would be the location to keep your R scripts for different analyses or plotting, and potentially a separate folder for your functions (more on that later).

You may want additional directories or subdirectories depending on your project needs, but these should form the backbone of your working directory.

For this course, we will need a data/ folder to store

our raw data, and we will use data_output/ for when we

learn how to export data as CSV files, and fig_output/

folder for the figures that we will save.

Challenge: create your project directory structure

Under the Files tab on the right of the screen, click on

New Folder and create a folder named data

within your newly created working directory (e.g.,

~/bioc-intro/data). (Alternatively, type

dir.create("data") at your R console.) Repeat these

operations to create a data_output/ and a

fig_output folders.

We are going to keep the script in the root of our working directory because we are only going to use one file and it will make things easier.

Your working directory should now look like this:

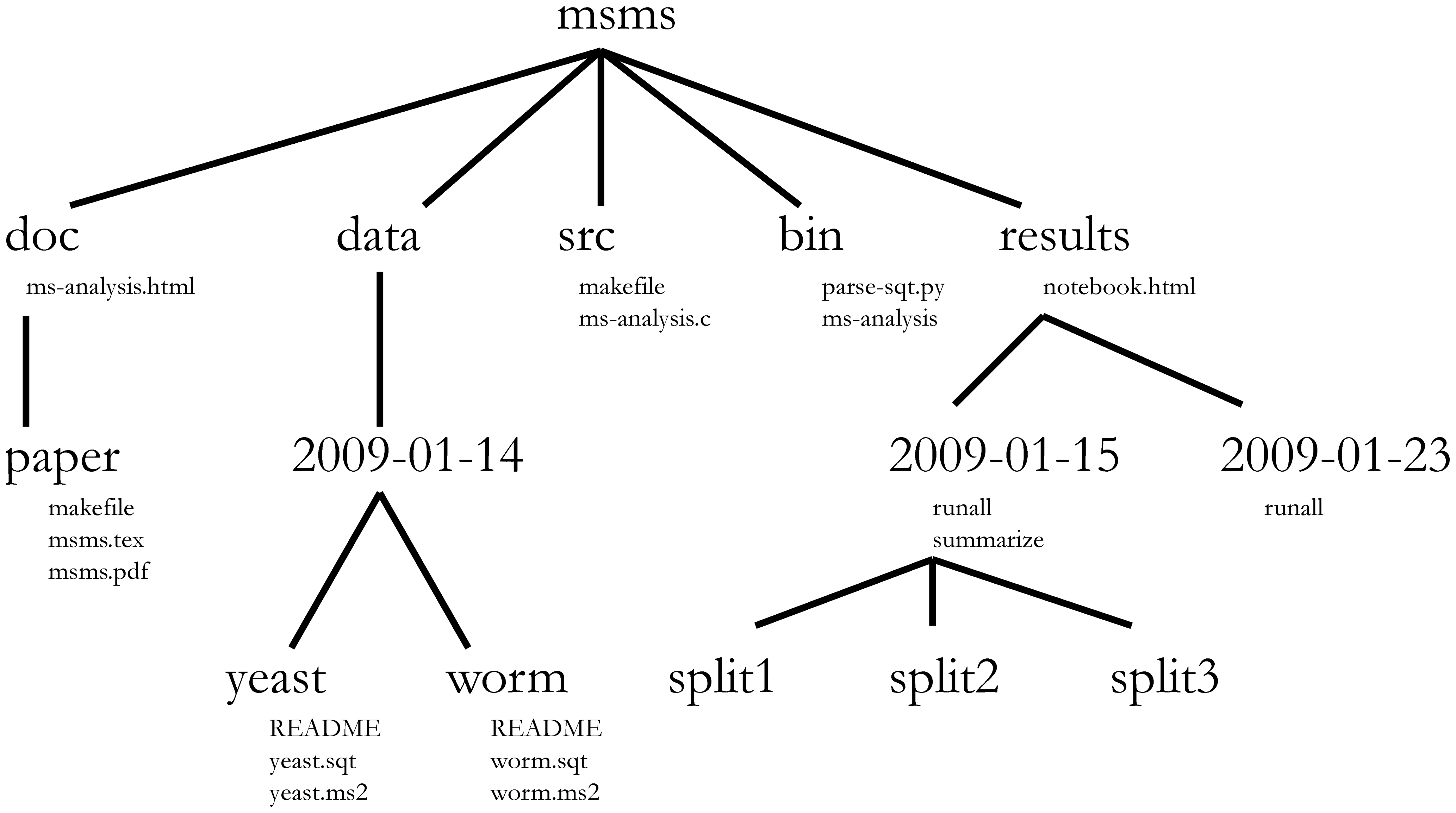

Project management is also applicable to bioinformatics projects, of course3. William Noble (@Noble:2009) proposes the following directory structure:

Directory names are in large typeface, and filenames are in smaller typeface. Only a subset of the files are shown here. Note that the dates are formatted

<year>-<month>-<day>so that they can be sorted in chronological order. The source codesrc/ms-analysis.cis compiled to createbin/ms-analysisand is documented indoc/ms-analysis.html. TheREADMEfiles in the data directories specify who downloaded the data files from what URL on what date. The driver scriptresults/2009-01-15/runallautomatically generates the three subdirectories split1, split2, and split3, corresponding to three cross-validation splits. Thebin/parse-sqt.pyscript is called by both of therunalldriver scripts.

The most important aspect of a well defined and well documented project directory is to enable someone unfamiliar with the project4 to

understand what the project is about, what data are available, what analyses were run, and what results were produced and, most importantly to

repeat the analysis over again - with new data, or changing some analysis parameters.

The working directory

The working directory is an important concept to understand. It is the place from where R will be looking for and saving the files. When you write code for your project, it should refer to files in relation to the root of your working directory and only need files within this structure.

Using RStudio projects makes this easy and ensures that your working

directory is set properly. If you need to check it, you can use

getwd(). If for some reason your working directory is not

what it should be, you can change it in the RStudio interface by

navigating in the file browser where your working directory should be,

and clicking on the blue gear icon More, and select

Set As Working Directory. Alternatively you can use

setwd("/path/to/working/directory") to reset your working

directory. However, your scripts should not include this line because it

will fail on someone else’s computer.

Example

The schema below represents the working directory

bioc-intro with the data and

fig_output sub-directories, and 2 files in the latter:

bioc-intro/data/

/fig_output/fig1.pdf

/fig_output/fig2.pngIf we were in the working directory, we could refer to the

fig1.pdf file using the relative path

bioc-intro/fig_output/fig1.pdf or the absolute path

/home/user/bioc-intro/fig_output/fig1.pdf.

If we were in the data directory, we would use the

relative path ../fig_output/fig1.pdf or the same absolute

path /home/user/bioc-intro/fig_output/fig1.pdf.

Interacting with R

The basis of programming is that we write down instructions for the computer to follow, and then we tell the computer to follow those instructions. We write, or code, instructions in R because it is a common language that both the computer and we can understand. We call the instructions commands and we tell the computer to follow the instructions by executing (also called running) those commands.

There are two main ways of interacting with R: by using the

console or by using scripts (plain

text files that contain your code). The console pane (in RStudio, the

bottom left panel) is the place where commands written in the R language

can be typed and executed immediately by the computer. It is also where

the results will be shown for commands that have been executed. You can

type commands directly into the console and press Enter to

execute those commands, but they will be forgotten when you close the

session.

Because we want our code and workflow to be reproducible, it is better to type the commands we want in the script editor, and save the script. This way, there is a complete record of what we did, and anyone (including our future selves!) can easily replicate the results on their computer. Note, however, that merely typing the commands in the script does not automatically run them - they still need to be sent to the console for execution.

RStudio allows you to execute commands directly from the script

editor by using the Ctrl + Enter shortcut (on

Macs, Cmd + Return will work, too). The

command on the current line in the script (indicated by the cursor) or

all of the commands in the currently selected text will be sent to the

console and executed when you press Ctrl +

Enter. You can find other keyboard shortcuts in this RStudio

cheatsheet about the RStudio IDE.

At some point in your analysis you may want to check the content of a

variable or the structure of an object, without necessarily keeping a

record of it in your script. You can type these commands and execute

them directly in the console. RStudio provides the Ctrl +

1 and Ctrl + 2 shortcuts allow

you to jump between the script and the console panes.

If R is ready to accept commands, the R console shows a

> prompt. If it receives a command (by typing,

copy-pasting or sending from the script editor using Ctrl +

Enter), R will try to execute it, and when ready, will show

the results and come back with a new > prompt to wait

for new commands.

If R is still waiting for you to enter more data because it isn’t

complete yet, the console will show a + prompt. It means

that you haven’t finished entering a complete command. This is because

you have not ‘closed’ a parenthesis or quotation, i.e. you don’t have

the same number of left-parentheses as right-parentheses, or the same

number of opening and closing quotation marks. When this happens, and

you thought you finished typing your command, click inside the console

window and press Esc; this will cancel the incomplete

command and return you to the > prompt.

How to learn more during and after the course?

The material we cover during this course will give you an initial taste of how you can use R to analyse data for your own research. However, you will need to learn more to do advanced operations such as cleaning your dataset, using statistical methods, or creating beautiful graphics5. The best way to become proficient and efficient at R, as with any other tool, is to use it to address your actual research questions. As a beginner, it can feel daunting to have to write a script from scratch, and given that many people make their code available online, modifying existing code to suit your purpose might make it easier for you to get started.

Seeking help

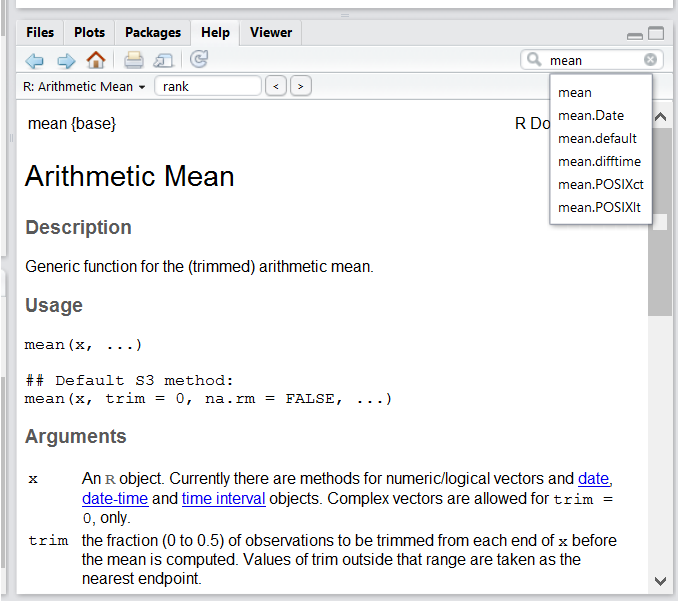

Use the built-in RStudio help interface to search for more information on R functions

One of the fastest ways to get help, is to use the RStudio help interface. This panel by default can be found at the lower right hand panel of RStudio. As seen in the screenshot, by typing the word “Mean”, RStudio tries to also give a number of suggestions that you might be interested in. The description is then shown in the display window.

I know the name of the function I want to use, but I’m not sure how to use it

If you need help with a specific function, let’s say

barplot(), you can type:

R

?barplot

If you just need to remind yourself of the names of the arguments, you can use:

R

args(lm)

I want to use a function that does X, there must be a function for it but I don’t know which one…

If you are looking for a function to do a particular task, you can

use the help.search() function, which is called by the

double question mark ??. However, this only looks through

the installed packages for help pages with a match to your search

request

R

??kruskal

If you can’t find what you are looking for, you can use the rdocumentation.org website that searches through the help files across all packages available.

Finally, a generic Google or internet search “R <task>” will often either send you to the appropriate package documentation or a helpful forum where someone else has already asked your question.

I am stuck… I get an error message that I don’t understand

Start by googling the error message. However, this doesn’t always work very well because often, package developers rely on the error catching provided by R. You end up with general error messages that might not be very helpful to diagnose a problem (e.g. “subscript out of bounds”). If the message is very generic, you might also include the name of the function or package you’re using in your query.

However, you should check Stack Overflow. Search using the

[r] tag. Most questions have already been answered, but the

challenge is to use the right words in the search to find the

answers:

https://stackoverflow.com/questions/tagged/r

The Introduction to R can also be dense for people with little programming experience but it is a good place to understand the underpinnings of the R language.

The R FAQ is dense and technical but it is full of useful information.

Asking for help

The key to receiving help from someone is for them to rapidly grasp your problem. You should make it as easy as possible to pinpoint where the issue might be.

Try to use the correct words to describe your problem. For instance, a package is not the same thing as a library. Most people will understand what you meant, but others have really strong feelings about the difference in meaning. The key point is that it can make things confusing for people trying to help you. Be as precise as possible when describing your problem.

If possible, try to reduce what doesn’t work to a simple reproducible example. If you can reproduce the problem using a very small data frame instead of your 50000 rows and 10000 columns one, provide the small one with the description of your problem. When appropriate, try to generalise what you are doing so even people who are not in your field can understand the question. For instance instead of using a subset of your real dataset, create a small (3 columns, 5 rows) generic one. For more information on how to write a reproducible example see this article by Hadley Wickham.

To share an object with someone else, if it’s relatively small, you

can use the function dput(). It will output R code that can

be used to recreate the exact same object as the one in memory:

R

## iris is an example data frame that comes with R and head() is a

## function that returns the first part of the data frame

dput(head(iris))

OUTPUT

structure(list(Sepal.Length = c(5.1, 4.9, 4.7, 4.6, 5, 5.4),

Sepal.Width = c(3.5, 3, 3.2, 3.1, 3.6, 3.9), Petal.Length = c(1.4,

1.4, 1.3, 1.5, 1.4, 1.7), Petal.Width = c(0.2, 0.2, 0.2,

0.2, 0.2, 0.4), Species = structure(c(1L, 1L, 1L, 1L, 1L,

1L), levels = c("setosa", "versicolor", "virginica"), class = "factor")), row.names = c(NA,

6L), class = "data.frame")If the object is larger, provide either the raw file (i.e., your CSV file) with your script up to the point of the error (and after removing everything that is not relevant to your issue). Alternatively, in particular if your question is not related to a data frame, you can save any R object to a file[^export]:

R

saveRDS(iris, file="/tmp/iris.rds")

The content of this file is however not human readable and cannot be

posted directly on Stack Overflow. Instead, it can be sent to someone by

email who can read it with the readRDS() command (here it

is assumed that the downloaded file is in a Downloads

folder in the user’s home directory):

R

some_data <- readRDS(file="~/Downloads/iris.rds")

Last, but certainly not least, always include the output of

sessionInfo() as it provides critical information

about your platform, the versions of R and the packages that you are

using, and other information that can be very helpful to understand your

problem.

R

sessionInfo()

OUTPUT

R version 4.5.2 (2025-10-31)

Platform: x86_64-pc-linux-gnu

Running under: Ubuntu 24.04.3 LTS

Matrix products: default

BLAS: /usr/lib/x86_64-linux-gnu/openblas-pthread/libblas.so.3

LAPACK: /usr/lib/x86_64-linux-gnu/openblas-pthread/libopenblasp-r0.3.26.so; LAPACK version 3.12.0

locale:

[1] LC_CTYPE=en_US.UTF-8 LC_NUMERIC=C

[3] LC_TIME=en_US.UTF-8 LC_COLLATE=en_US.UTF-8

[5] LC_MONETARY=en_US.UTF-8 LC_MESSAGES=en_US.UTF-8

[7] LC_PAPER=en_US.UTF-8 LC_NAME=C

[9] LC_ADDRESS=C LC_TELEPHONE=C

[11] LC_MEASUREMENT=en_US.UTF-8 LC_IDENTIFICATION=C

time zone: Etc/UTC

tzcode source: system (glibc)

attached base packages:

[1] stats graphics grDevices utils datasets methods base

loaded via a namespace (and not attached):

[1] BiocManager_1.30.27 compiler_4.5.2 cli_3.6.5

[4] tools_4.5.2 otel_0.2.0 yaml_2.3.12

[7] knitr_1.51 xfun_0.55 rlang_1.1.6

[10] renv_1.1.6 evaluate_1.0.5 Where to ask for help?

- The person sitting next to you during the course. Don’t hesitate to talk to your neighbour during the workshop, compare your answers, and ask for help.

- Your friendly colleagues: if you know someone with more experience than you, they might be able and willing to help you.

- Stack Overflow: if your question hasn’t been answered before and is well crafted, chances are you will get an answer in less than 5 min. Remember to follow their guidelines on how to ask a good question.

- The R-help mailing list: it is read by a lot of people (including most of the R core team), a lot of people post to it, but the tone can be pretty dry, and it is not always very welcoming to new users. If your question is valid, you are likely to get an answer very fast but don’t expect that it will come with smiley faces. Also, here more than anywhere else, be sure to use correct vocabulary (otherwise you might get an answer pointing to the misuse of your words rather than answering your question). You will also have more success if your question is about a base function rather than a specific package.

- If your question is about a specific package, see if there is a

mailing list for it. Usually it’s included in the DESCRIPTION file of

the package that can be accessed using

packageDescription("name-of-package"). You may also want to try to email the author of the package directly, or open an issue on the code repository (e.g., GitHub). - There are also some topic-specific mailing lists (GIS, phylogenetics, etc…), the complete list is available on the R-project mailing list page.

More resources

The Posting Guide for the R mailing lists.

How to ask for R help useful guidelines.

This blog post by Jon Skeet has quite comprehensive advice on how to ask programming questions.

The reprex package is very helpful to create reproducible examples when asking for help. The rOpenSci community call “How to ask questions so they get answered” (Github link and video recording) includes a presentation of the reprex package and of its philosophy.

R packages

Loading packages

As we have seen above, R packages play a fundamental role in R. The

make use of a package’s functionality, assuming it is installed, we

first need to load it to be able to use it. This is done with the

library() function. Below, we load

ggplot2.

R

library("ggplot2")

Installing packages

The default package repository is The Comprehensive R Archive

Network (CRAN), and any package that is available on CRAN can be

installed with the install.packages() function. Below, for

example, we install the dplyr package that we will learn

about later.

R

install.packages("dplyr")

This command will install the dplyr package as well as

all its dependencies, i.e. all the packages that it relies on to

function.

Another major R package repository is maintained by Bioconductor. Bioconductor

packages are managed and installed using a dedicated package, namely

BiocManager, that can be installed from CRAN with

R

install.packages("BiocManager")

Individual packages such as SummarizedExperiment (we

will use it later), DESeq2 (for RNA-Seq analysis), and any

others from either Bioconductor or CRAN can then be installed with

BiocManager::install.

R

BiocManager::install("SummarizedExperiment")

BiocManager::install("DESeq2")

By default, BiocManager::install() will also check all

your installed packages and see if there are newer versions available.

If there are, it will show them to you and ask you if you want to

Update all/some/none? [a/s/n]: and then wait for your

answer. While you should strive to have the most up-to-date package

versions, in practice we recommend only updating packages in a fresh R

session before any packages are loaded.

- Start using R and RStudio

As opposed to using R directly from the command line console. There exist other software that interface and integrate with R, but RStudio is particularly well suited for beginners while providing numerous very advanced features.↩︎

i.e. add-ons that confer R with new functionality, such as bioinformatics data analysis.↩︎

In this course, we consider bioinformatics as data science applied to biological or bio-medical data.↩︎

That someone could be, and very likely will be your future self, a couple of months or years after the analyses were run.↩︎

We will introduce most of these (except statistics) here, but will only manage to scratch the surface of the wealth of what is possible to do with R.↩︎

Content from Introduction to R

Last updated on 2026-01-27 | Edit this page

Overview

Questions

- First commands in R

Objectives

- Define the following terms as they relate to R: object, assign, call, function, arguments, options.

- Assign values to objects in R.

- Learn how to name objects

- Use comments to inform script.

- Solve simple arithmetic operations in R.

- Call functions and use arguments to change their default options.

- Inspect the content of vectors and manipulate their content.

- Subset and extract values from vectors.

- Analyze vectors with missing data.

This episode is based on the Data Carpentries’s Data Analysis and Visualisation in R for Ecologists lesson.

Creating objects in R

You can get output from R simply by typing math in the console:

R

3 + 5

OUTPUT

[1] 8R

12 / 7

OUTPUT

[1] 1.714286However, to do useful and interesting things, we need to assign

values to objects. To create an object, we need to

give it a name followed by the assignment operator <-,

and the value we want to give it:

R

weight_kg <- 55

<- is the assignment operator. It assigns values on

the right to objects on the left. So, after executing

x <- 3, the value of x is 3.

The arrow can be read as 3 goes into x.

For historical reasons, you can also use = for assignments,

but not in every context. Because of the slight

differences in syntax, it is good practice to always use

<- for assignments.

In RStudio, typing Alt + - (push Alt

at the same time as the - key) will write <-

in a single keystroke in a PC, while typing Option +

- (push Option at the same time as the

- key) does the same in a Mac.

Naming variables

Objects can be given any name such as x,

current_temperature, or subject_id. You want

your object names to be explicit and not too long. They cannot start

with a number (2x is not valid, but x2 is). R

is case sensitive (e.g., weight_kg is different from

Weight_kg). There are some names that cannot be used

because they are the names of fundamental functions in R (e.g.,

if, else, for, see the Reserved

Words in R manual page, for a complete list). In general, even if

it’s allowed, it’s best to not use other function names (e.g.,

c, T, mean, data,

df, weights). If in doubt, check the help to

see if the name is already in use. It’s also best to avoid dots

(.) within an object name as in my.dataset.

There are many functions in R with dots in their names for historical

reasons, but because dots have a special meaning in R (for methods) and

other programming languages, it’s best to avoid them. It is also

recommended to use nouns for object names, and verbs for function names.

It’s important to be consistent in the styling of your code (where you

put spaces, how you name objects, etc.). Using a consistent coding style

makes your code clearer to read for your future self and your

collaborators. In R, some popular style guides are Google’s, the

tidyverse’s style and the Bioconductor

style guide. The tidyverse’s is very comprehensive and may seem

overwhelming at first. You can install the lintr

package to automatically check for issues in the styling of your

code.

Objects vs. variables: What are known as

objectsinRare known asvariablesin many other programming languages. Depending on the context,objectandvariablecan have drastically different meanings. However, in this lesson, the two words are used synonymously. For more information see here.

When assigning a value to an object, R does not print anything. You can force R to print the value by using parentheses or by typing the object name:

R

weight_kg <- 55 # doesn't print anything

(weight_kg <- 55) # but putting parenthesis around the call prints the value of `weight_kg`

OUTPUT

[1] 55R

weight_kg # and so does typing the name of the object

OUTPUT

[1] 55Now that R has weight_kg in memory, we can do arithmetic

with it. For instance, we may want to convert this weight into pounds

(weight in pounds is 2.2 times the weight in kg):

R

2.2 * weight_kg

OUTPUT

[1] 121We can also change an object’s value by assigning it a new one:

R

weight_kg <- 57.5

2.2 * weight_kg

OUTPUT

[1] 126.5This means that assigning a value to one object does not change the

values of other objects For example, let’s store the animal’s weight in

pounds in a new object, weight_lb:

R

weight_lb <- 2.2 * weight_kg

and then change weight_kg to 100.

R

weight_kg <- 100

Challenge:

What do you think is the current content of the object

weight_lb? 126.5 or 220?

Comments

The comment character in R is #, anything to the right

of a # in a script will be ignored by R. It is useful to

leave notes, and explanations in your scripts.

RStudio makes it easy to comment or uncomment a paragraph: after selecting the lines you want to comment, press at the same time on your keyboard Ctrl + Shift + C. If you only want to comment out one line, you can put the cursor at any location of that line (i.e. no need to select the whole line), then press Ctrl + Shift + C.

Challenge

What are the values after each statement in the following?

R

mass <- 47.5 # mass?

age <- 122 # age?

mass <- mass * 2.0 # mass?

age <- age - 20 # age?

mass_index <- mass/age # mass_index?

Functions and their arguments

Functions are “canned scripts” that automate more complicated sets of

commands including operations assignments, etc. Many functions are

predefined, or can be made available by importing R packages

(more on that later). A function usually gets one or more inputs called

arguments. Functions often (but not always) return a

value. A typical example would be the function

sqrt(). The input (the argument) must be a number, and the

return value (in fact, the output) is the square root of that number.

Executing a function (‘running it’) is called calling the

function. An example of a function call is:

R

b <- sqrt(a)

Here, the value of a is given to the sqrt()

function, the sqrt() function calculates the square root,

and returns the value which is then assigned to the object

b. This function is very simple, because it takes just one

argument.

The return ‘value’ of a function need not be numerical (like that of

sqrt()), and it also does not need to be a single item: it

can be a set of things, or even a dataset. We’ll see that when we read

data files into R.

Arguments can be anything, not only numbers or filenames, but also other objects. Exactly what each argument means differs per function, and must be looked up in the documentation (see below). Some functions take arguments which may either be specified by the user, or, if left out, take on a default value: these are called options. Options are typically used to alter the way the function operates, such as whether it ignores ‘bad values’, or what symbol to use in a plot. However, if you want something specific, you can specify a value of your choice which will be used instead of the default.

Let’s try a function that can take multiple arguments:

round().

R

round(3.14159)

OUTPUT

[1] 3Here, we’ve called round() with just one argument,

3.14159, and it has returned the value 3.

That’s because the default is to round to the nearest whole number. If

we want more digits we can see how to do that by getting information

about the round function. We can use

args(round) or look at the help for this function using

?round.

R

args(round)

OUTPUT

function (x, digits = 0, ...)

NULLR

?round

We see that if we want a different number of digits, we can type

digits=2 or however many we want.

R

round(3.14159, digits = 2)

OUTPUT

[1] 3.14If you provide the arguments in the exact same order as they are defined you don’t have to name them:

R

round(3.14159, 2)

OUTPUT

[1] 3.14And if you do name the arguments, you can switch their order:

R

round(digits = 2, x = 3.14159)

OUTPUT

[1] 3.14It’s good practice to put the non-optional arguments (like the number you’re rounding) first in your function call, and to specify the names of all optional arguments. If you don’t, someone reading your code might have to look up the definition of a function with unfamiliar arguments to understand what you’re doing. By specifying the name of the arguments you are also safeguarding against possible future changes in the function interface, which may potentially add new arguments in between the existing ones.

Vectors and data types

A vector is the most common and basic data type in R, and is pretty

much the workhorse of R. A vector is composed by a series of values,

such as numbers or characters. We can assign a series of values to a

vector using the c() function. For example we can create a

vector of animal weights and assign it to a new object

weight_g:

R

weight_g <- c(50, 60, 65, 82)

weight_g

OUTPUT

[1] 50 60 65 82A vector can also contain characters:

R

molecules <- c("dna", "rna", "protein")

molecules

OUTPUT

[1] "dna" "rna" "protein"The quotes around “dna”, “rna”, etc. are essential here. Without the

quotes R will assume there are objects called dna,

rna and protein. As these objects don’t exist

in R’s memory, there will be an error message.

There are many functions that allow you to inspect the content of a

vector. length() tells you how many elements are in a

particular vector:

R

length(weight_g)

OUTPUT

[1] 4R

length(molecules)

OUTPUT

[1] 3An important feature of a vector, is that all of the elements are the

same type of data. The function class() indicates the class

(the type of element) of an object:

R

class(weight_g)

OUTPUT

[1] "numeric"R

class(molecules)

OUTPUT

[1] "character"The function str() provides an overview of the structure

of an object and its elements. It is a useful function when working with

large and complex objects:

R

str(weight_g)

OUTPUT

num [1:4] 50 60 65 82R

str(molecules)

OUTPUT

chr [1:3] "dna" "rna" "protein"You can use the c() function to add other elements to

your vector:

R

weight_g <- c(weight_g, 90) # add to the end of the vector

weight_g <- c(30, weight_g) # add to the beginning of the vector

weight_g

OUTPUT

[1] 30 50 60 65 82 90In the first line, we take the original vector weight_g,

add the value 90 to the end of it, and save the result back

into weight_g. Then we add the value 30 to the

beginning, again saving the result back into weight_g.

We can do this over and over again to grow a vector, or assemble a dataset. As we program, this may be useful to add results that we are collecting or calculating.

An atomic vector is the simplest R data

type and is a linear vector of a single type. Above, we saw 2

of the 6 main atomic vector types that R uses:

"character" and "numeric" (or

"double"). These are the basic building blocks that all R

objects are built from. The other 4 atomic vector types

are:

-

"logical"forTRUEandFALSE(the boolean data type) -

"integer"for integer numbers (e.g.,2L, theLindicates to R that it’s an integer) -

"complex"to represent complex numbers with real and imaginary parts (e.g.,1 + 4i) and that’s all we’re going to say about them -

"raw"for bitstreams that we won’t discuss further

You can check the type of your vector using the typeof()

function and inputting your vector as the argument.

Vectors are one of the many data structures that R

uses. Other important ones are lists (list), matrices

(matrix), data frames (data.frame), factors

(factor) and arrays (array).

Challenge:

We’ve seen that atomic vectors can be of type character, numeric (or double), integer, and logical. But what happens if we try to mix these types in a single vector?

R implicitly converts them to all be the same type

Challenge:

What will happen in each of these examples? (hint: use

class() to check the data type of your objects and type in

their names to see what happens):

R

num_char <- c(1, 2, 3, "a")

num_logical <- c(1, 2, 3, TRUE, FALSE)

char_logical <- c("a", "b", "c", TRUE)

tricky <- c(1, 2, 3, "4")

R

class(num_char)

OUTPUT

[1] "character"R

num_char

OUTPUT

[1] "1" "2" "3" "a"R

class(num_logical)

OUTPUT

[1] "numeric"R

num_logical

OUTPUT

[1] 1 2 3 1 0R

class(char_logical)

OUTPUT

[1] "character"R

char_logical

OUTPUT

[1] "a" "b" "c" "TRUE"R

class(tricky)

OUTPUT

[1] "character"R

tricky

OUTPUT

[1] "1" "2" "3" "4"Challenge:

Why do you think it happens?

Vectors can be of only one data type. R tries to convert (coerce) the content of this vector to find a common denominator that doesn’t lose any information.

Challenge:

How many values in combined_logical are

"TRUE" (as a character) in the following example:

R

num_logical <- c(1, 2, 3, TRUE)

char_logical <- c("a", "b", "c", TRUE)

combined_logical <- c(num_logical, char_logical)

Only one. There is no memory of past data types, and the coercion

happens the first time the vector is evaluated. Therefore, the

TRUE in num_logical gets converted into a

1 before it gets converted into "1" in

combined_logical.

R

combined_logical

OUTPUT

[1] "1" "2" "3" "1" "a" "b" "c" "TRUE"Challenge:

In R, we call converting objects from one class into another class coercion. These conversions happen according to a hierarchy, whereby some types get preferentially coerced into other types. Can you draw a diagram that represents the hierarchy of how these data types are coerced?

logical → numeric → character ← logical

Subsetting vectors

If we want to extract one or several values from a vector, we must provide one or several indices in square brackets. For instance:

R

molecules <- c("dna", "rna", "peptide", "protein")

molecules[2]

OUTPUT

[1] "rna"R

molecules[c(3, 2)]

OUTPUT

[1] "peptide" "rna" We can also repeat the indices to create an object with more elements than the original one:

R

more_molecules <- molecules[c(1, 2, 3, 2, 1, 4)]

more_molecules

OUTPUT

[1] "dna" "rna" "peptide" "rna" "dna" "protein"R indices start at 1. Programming languages like Fortran, MATLAB, Julia, and R start counting at 1, because that’s what human beings typically do. Languages in the C family (including C++, Java, Perl, and Python) count from 0 because that’s simpler for computers to do.

Finally, it is also possible to get all the elements of a vector except some specified elements using negative indices:

R

molecules ## all molecules

OUTPUT

[1] "dna" "rna" "peptide" "protein"R

molecules[-1] ## all but the first one

OUTPUT

[1] "rna" "peptide" "protein"R

molecules[-c(1, 3)] ## all but 1st/3rd ones

OUTPUT

[1] "rna" "protein"R

molecules[c(-1, -3)] ## all but 1st/3rd ones

OUTPUT

[1] "rna" "protein"Conditional subsetting

Another common way of subsetting is by using a logical vector.

TRUE will select the element with the same index, while

FALSE will not:

R

weight_g <- c(21, 34, 39, 54, 55)

weight_g[c(TRUE, FALSE, TRUE, TRUE, FALSE)]

OUTPUT

[1] 21 39 54Typically, these logical vectors are not typed by hand, but are the output of other functions or logical tests. For instance, if you wanted to select only the values above 50:

R

## will return logicals with TRUE for the indices that meet

## the condition

weight_g > 50

OUTPUT

[1] FALSE FALSE FALSE TRUE TRUER

## so we can use this to select only the values above 50

weight_g[weight_g > 50]

OUTPUT

[1] 54 55You can combine multiple tests using & (both

conditions are true, AND) or | (at least one of the

conditions is true, OR):

R

weight_g[weight_g < 30 | weight_g > 50]

OUTPUT

[1] 21 54 55R

weight_g[weight_g >= 30 & weight_g == 21]

OUTPUT

numeric(0)Here, < stands for “less than”, > for

“greater than”, >= for “greater than or equal to”, and

== for “equal to”. The double equal sign == is

a test for numerical equality between the left and right hand sides, and

should not be confused with the single = sign, which

performs variable assignment (similar to <-).

A common task is to search for certain strings in a vector. One could

use the “or” operator | to test for equality to multiple

values, but this can quickly become tedious. The function

%in% allows you to test if any of the elements of a search

vector are found:

R

molecules <- c("dna", "rna", "protein", "peptide")

molecules[molecules == "rna" | molecules == "dna"] # returns both rna and dna

OUTPUT

[1] "dna" "rna"R

molecules %in% c("rna", "dna", "metabolite", "peptide", "glycerol")

OUTPUT

[1] TRUE TRUE FALSE TRUER

molecules[molecules %in% c("rna", "dna", "metabolite", "peptide", "glycerol")]

OUTPUT

[1] "dna" "rna" "peptide"Challenge:

Can you figure out why "four" > "five" returns

TRUE?

R

"four" > "five"

OUTPUT

[1] TRUEWhen using > or < on strings, R

compares their alphabetical order. Here "four" comes after

"five", and therefore is greater than it.

Names

It is possible to name each element of a vector. The code chunk below shows an initial vector without any names, how names are set, and retrieved.

R

x <- c(1, 5, 3, 5, 10)

names(x) ## no names

OUTPUT

NULLR

names(x) <- c("A", "B", "C", "D", "E")

names(x) ## now we have names

OUTPUT

[1] "A" "B" "C" "D" "E"When a vector has names, it is possible to access elements by their name, in addition to their index.

R

x[c(1, 3)]

OUTPUT

A C

1 3 R

x[c("A", "C")]

OUTPUT

A C

1 3 Missing data

As R was designed to analyze datasets, it includes the concept of

missing data (which is uncommon in other programming languages). Missing

data are represented in vectors as NA.

When doing operations on numbers, most functions will return