Introduction to Natural Language Processing

Overview

Teaching: 15 minutes min

Exercises: 20 minutes minQuestions

What is Natural Language Processing?

What tasks can be done by Natural Language Processing?

What does a workflow for an NLP project look?

Objectives

Learn the tasks that NLP can do

Use a pretrained chatbot in python

Discuss our workflow for performing NLP tasks

Introduction

What is Natural Language Processing?

Text Analysis, also known as Natural Language Processing or NLP, is a subdiscipline of the larger disciplines of machine learning and artificial intelligence.

AI and machine learning both use complex mathematical constructs called models to take data as an input and produce a desired output.

What distinguishes NLP from other types of machine learning is that text and human language is the main input for NLP tasks.

Context for Digital Humanists

Before we get started, we would like to also provide a disclaimer. The humanities involves a wide variety of fields. Each of those fields brings a variety of research interests and methods to focus on a wide variety of questions.

AI is not infallible or without bias. NLP is simply another tool you can use to analyze texts and should be critically considered in the same way any other tool would be. The goal of this workshop is not to replace or discredit existing humanist methods, but to help humanists learn new tools to help them accomplish their research.

The Interpretive Loop

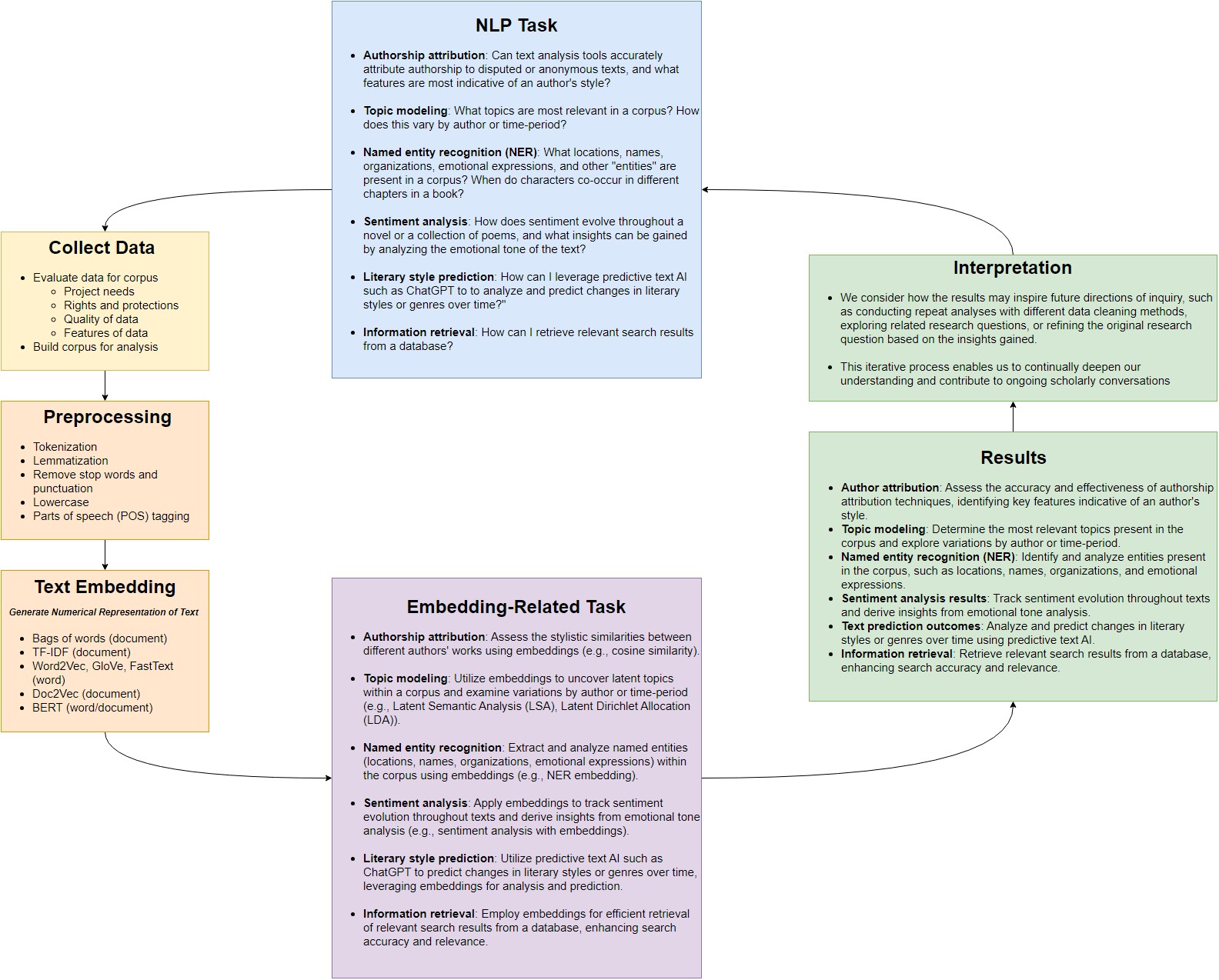

Despite the array of tasks encompassed within text analysis, many share common underlying processes and methodologies. Throughout our exploration, we’ll navigate an ‘interpretive loop’ that connects our research inquiries with the tools and techniques of natural language processing (NLP). This loop comprises several recurring stages:

- Formulating a research question or NLP task: Each journey begins with defining a task or problem within the domain of the digital humanities. This might involve authorship attribution, topic modeling, named entity recognition (NER), sentiment analysis, text prediction, or search, among others.

- Data collection and corpus building: With a clear objective in mind, the next step involves gathering relevant data and constructing a corpus (a set of documents). This corpus serves as the foundation for our analysis and model training. It may include texts, documents, articles, social media posts, or any other textual data pertinent to the research task.

- Data preprocessing: Before our data can be fed into NLP models, it undergoes preprocessing steps to clean, tokenize, and format the text. This ensures compatibility with our chosen model and facilitates efficient computation.

- Generating embeddings: Our processed data is then transformed into mathematical representations known as embeddings. These embeddings capture semantic and contextual information in the corpus, bridging the gap between human intuition and machine algorithms.

- Embedding-related tasks: Leveraging embeddings, we perform various tasks such as measuring similarity between documents, summarizing texts, or extracting key insights.

- Results: Results are generated from specific embedding-related tasks, such as measuring document similarity, document summarization, or topic modeling to uncover latent themes within a corpus.

- Interpreting results: Finally, we interpret the outputs in the context of our research objectives, stakeholder interests, and broader scholarly discourse. This critical analysis allows us to draw conclusions, identify patterns, and refine our approach as needed.

Additionally, we consider how the results may inspire future directions of inquiry, such as conducting repeat analyses with different data cleaning methods, exploring related research questions, or refining the original research question based on the insights gained. This iterative process enables us to continually deepen our understanding and contribute to ongoing scholarly conversations.

NLP Tasks

We’ll start by trying to understand what tasks NLP can do. Some of the many functions of NLP include topic modelling and categorization, named entity recognition, search, summarization and more.

We’re going to explore some of these tasks in this lesson using the popular “HuggingFace” library.

Launch a web browser and navigate to https://huggingface.co/tasks. Here we can see examples of many of the tasks achievable using NLP.

What do these different tasks mean? Let’s take a look at an example. A user engages in conversation with a bot. The bot generates a response based on the user’s prompt. This is called text generation. Let’s click on this task now: https://huggingface.co/tasks/text-generation

HuggingFace usefully provides an online demo as well as a description of the task. On the right, we can see there is a demo of a particular model that does this task. Give conversing with the chatbot a try.

If we scroll down, much more information is available. There is a link to sample models and datasets HuggingFace has made available that can do variations of this task. Documentation on how to use the model is available by scrolling down the page. Model specific information is available by clicking on the model.

Worked Example: Chatbot in Python

We’ve got an overview of what different tasks we can accomplish. Now let’s try getting started with doing these tasks in Python. We won’t worry too much about how this model works for the time being, but will instead just focusing trying it out. We’ll start by running a chatbot, just like the one we used online.

NLP tasks often need to be broken down into simpler subtasks to be executed in a particular order. These are called pipelines since the output from one subtask is used as the input to the next subtask. We will now define a “pipeline” in Python.

Launch either colab or our Anaconda environment, depending on your setup. Try following the example below.

from transformers import pipeline

from transformers.utils import logging

#disable warning about optional authentication

logging.set_verbosity_error()

text2text_generator = pipeline("text2text-generation")

print(text2text_generator("question: What is 42 ? context: 42 is the answer to life, the universe and everything"))

[{'generated_text': 'the answer to life, the universe and everything'}]

Feel free to prompt the chatbot with a few prompts of your own.

Group Activity and Discussion

With some experience with a task, let’s get a broader overview of the types of tasks we can do. Relaunch a web browser and go back to https://huggingface.co/tasks. Break out into groups and look at a couple of tasks for HuggingFace. The groups will be based on general categories for each task. Discuss possible applications of this type of model to your field of research. Try to brainstorm possible applications for now, don’t worry about technical implementation.

- Tasks that seek to convert non-text into text

- Searching and classifying documents as a whole

- Classifying individual words- Sequence based tasks

- Interactive and generative tasks such as conversation and question answering

Briefly present a summary of some of the tasks you explored. What types of applications could you see this type of task used in? How might this be relevant to a research question you have? Summarize these tasks and present your findings to the group.

What tasks can NLP do?

There are many models for representing language. The model we chose for our task will depend on what we want the output of our model to do. In other words, our model will vary based on the task we want it to accomplish.

We can think of the various tasks NLP can do as different types of desired outputs, which may require different models depending on the task.

Let’s discuss tasks you may find interesting in more detail. These are not the only tasks NLP can accomplish, but they are frequently of interest for Humanities scholars.

Search

Search attempts to retrieve documents that are similar to a query. In order to do this, there must be some way to compute the similarity between documents. A search query can be thought of as a small input document, and the outputs could be a score of relevant documents stored in the corpus. While we may not be building a search engine, we will find that similarity metrics such as those used in search are important to understanding NLP.

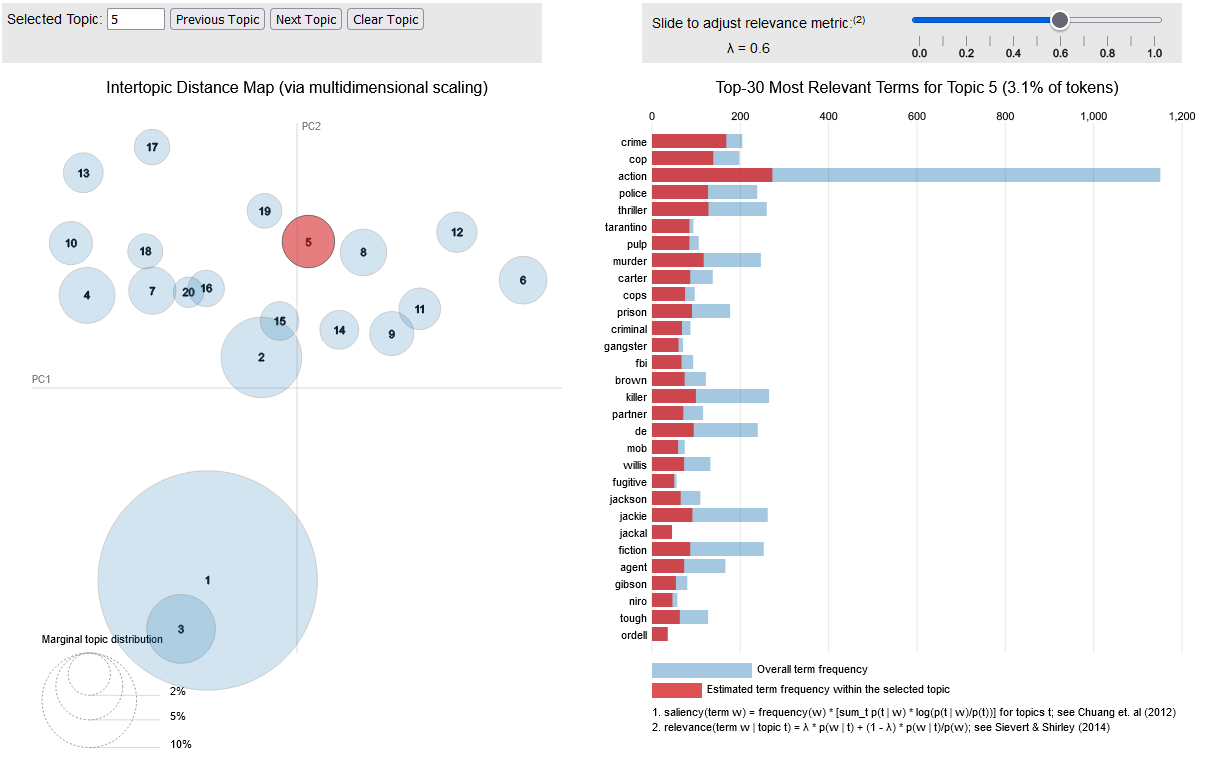

Topic Modeling

Topic modeling is a type of analysis that attempts to categorize documents into categories. These categories could be human generated labels, or we could ask our model to group together similar texts and create its own labels. For example, the Federalist Papers are a set of 85 essays written by three American Founding Fathers- Alexander Hamilton, James Madison and John Jay. These papers were written under pseudonyms, but many of the papers authors were later identified. One use for topic modelling might be to present a set of papers from each author that are known, and ask our model to label the federalist papers whose authorship is in dispute.

Alternatively, the computer might be asked to come up with a set number of topics, and create categories without precoded documents, in a process called unsupervised learning. Supervised learning requires human labelling and intervention, where unsupervised learning does not. Scholars may then look at the categories created by the NLP model and try to interpret them. One example of this is Mining the Dispatch, which tries to categorize articles based on unsupervised learning topics.

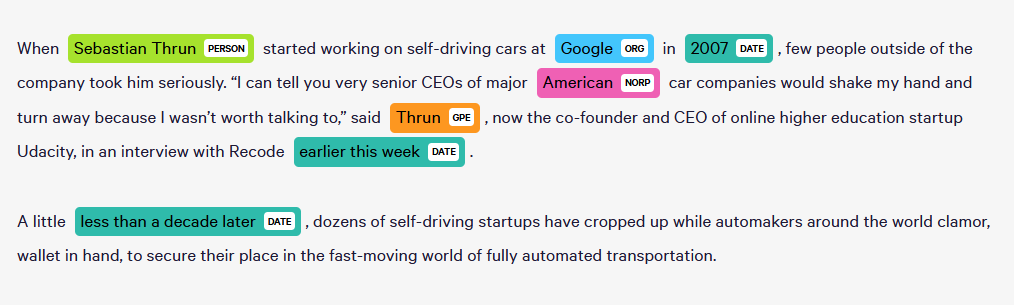

Token Classification

The task of token classification is trying to apply labels on a more granular level- labelling words as belonging to a certain group. The entities we are looking to recognize may be common. Parts of Speech (POS) Tagging looks to give labels to entities such as verbs, nouns, and so on. Named Entity Recognition (NER) seeks to label things such as places, names of individuals, or countries might not be easily enumerated. A possible application of this would be to track co-occurrence of characters in different chapters in a book.

Document Summarization

Document summarization takes documents which are longer, and attempts to output a document with the same meaning by finding relevant snippets or by generating a smaller document that conveys the meaning of the first document. Think of this as taking a large set of input data of words and outputting a smaller output of words that describe our original text.

Text Prediction

Text prediction attempts to predict future text inputs from a user based on previous text inputs. Predictive text is used in search engines and also on smartphones to help correct inputs and speed up the process of text input. It is also used in popular models such as ChatGPT.

Summary and Outro

We’ve looked at a general process or ‘interpretive loop’ for NLP. We’ve also seen a variety of different tasks you can accomplish with NLP. We used Python to generate text based on one of the models available through HuggingFace. Hopefully this gives some ideas about how you might use NLP in your area of research.

In the lessons that follow, we will be working on better understanding what is happening in these models. Before we can use a model though, we need to make sure we have data to build our model on. Our next lesson will be looking at one tool to build a dataset called APIs.

Key Points

NLP is comprised of models that perform different tasks.

Our workflow for an NLP project consists of designing, preprocessing, representation, running, creating output, and interpreting that output.

NLP tasks can be adapted to suit different research interests.

Corpus Development- Text Data Collection

Overview

Teaching: 20 min

Exercises: 20 minQuestions

How do I evaluate what kind of data to use for my project?

What do I need to consider when building my corpus?

Objectives

Become familiar with technical and legal/ethical considerations for data collection.

Practice evaluating text data files created through different processes.

Corpus Development- Text Data Collection

Building Your Corpus

The best sources to build a corpus, or dataset, for text analysis will ultimately depend on the needs of your project. Datasets and sources are not usually prepared to be used in specific kinds of projects, therefore the burden is on the researcher to select materials that will be suitable for their corpus.

Evaluating Data

It can be tempting to find a source and grab its data in bulk, trusting that it will be a fit for your analyses because it meets certain criteria. However, it is important to think critically about the data you are gathering, its context, and the corpus you are assembling. Doing so will allow you to create a corpus that can both meet your project’s needs and possibly serve as its own contribution to your field. As you collect your data and assemble your corpus, you will need to think critically about the content, file types, reduction of bias, rights and permissions, quality, and features needed for your analysis. You may find that no one source fits all of these needs and it may be best to put together a corpus from a variety of sources.

Content type

Materials used in projects can be either born digital, meaning that they originated in a digital format, or digitized, meaning that they were converted to a digital format. Common sources of text data for text analysis projects include digitized archival or primary source materials, newspapers, books, social media, and research articles. Depending on your project, you may even need to digitize some materials yourself. If you are accessing born digital materials, you will want to document the dates you accessed the resources as sources that are born digital may change over time and diverge from those in your corpus. If you are digitizing materials, you will want to document your process for digitization and make sure you are considering the rights and restrictions that apply to your materials.

The nature of your research question will inform the content type and your potential data sources. A question like “How are women represented in 19th century texts?” is very broad. A corpus that explores this question might quickly exceed your computing power as it is large enough to include all content types. Instead, it would be helpful to narrow the scope of the question and this will also narrow down the content type and potential sources. Which women? Where? What kind of texts - newspapers, novels, magazines, legal documents? A question like “How are women represented in classic 19th century American novels?” narrows the scope and content type to 19th century classic American novels.

Once you know the type of materials you need, you can begin exploring data sources for your project. Sources of text data can include government documents, cultural institutions such as archives, libraries, and museums, online projects, and commercial sources. Many sources make their data easily available for download or through an API. Depending on the source, you may also be able to reach out and ask for a copy of data. Other sources, such as commercial vendors, including vendors that work with libraries, can restrict access to their full text data or not allow for download outside of their platform. Although researchers tend to prefer full text data for text analysis, metadata from a source can also be useful for analysis.

File types

Text data can come in different forms, including unstructured text in a plain text file, or in a structured file such as json, html, or xml. As you collect files for potential use in your corpus, creating an inventory of the file types will be helpful as you decide whether to include files, which files to convert, and what kind of analyses you may want to explore.

You may find that the documents you want to analyze may not be in the format you want them to be. They may not even be in text form. A common source of data for text analysis in the digital humanities includes digitized sources. Digitized documents result in jpeg images, which aren’t very useful for text analysis. Some sources also provide a text file for the digitized image which is generated by either Optical Character Recognition (ORC) or, if the document was handwritten, by Handwritten Text Recognition (HTR), which converts images to text. A source may have audio files that are important to your corpus and may or may not contain a transcript generated by speech transcription software. The process of converting files is out of scope for this lesson, but it is worth mentioning that you can also use an OCR tool such as Tesseract, an HTR tool like eScriptorium, or a speech to text tool like DeepSpeech, which are all open source, to convert your files from image or audio to text.

Rights and Restrictions

One of the most important criteria for inclusion in your corpus is whether or not you have the right to use the data in the way your project requires. When evaluating data sources for your project, you may need to navigate a variety of legal and ethical issues. We’ll briefly mention some of them below, but to learn more about these issues, we recommend the open access book Building Legal Literacies for Text and Data Mining. If you are working with foreign-held or licensed content or your project involves international research collaborations, we recommend reviewing resources from the Legal Literacies for Text Data Mining- Cross Border Project (LLTDMX).

- Copyright - Copyright law in the United States protects original works of authorship and grants the right to reproduce the work, to create derivative works, distribute copies, perform the work publicly, and to share the work publicly. Fair use may create exceptions for some TDM activities, but if you are analyzing the full text of copyrighted material, publicly sharing that corpus would most likely not be allowed.

- Licensing - Licenses grant permission to use materials in certain ways while usually restricting others. If you are working with databases or other licensed collections of materials, make sure that you understand the license and how it applies to text and data mining.

- Terms of Use - If you are collecting text data from other sources, such as websites or applications, make sure that you understand any retrictions on how the data can be used.

- Technology Protection Measures - Some publishers and content hosts protect their copyrighted/licensed materials through encryption. While commercial versions of ebooks, for example, would make for easy content to analyze, circumventing these protections would be illegal in the United States under the Digital Millennium Copyright Act.

- Privacy - Before sharing a corpus publicly, consider whether doing so would constitute any legal or ethical violations, especially with regards to privacy. Consulting with digital scholarship librarians at your university or professional organizations in your field would be a good place to learn about privacy issues that might arise with the type of data you are working with.

- Research Protections - Depending on the type of corpus you are creating, you might need to consider human subject research protections such as informed consent. Your institution’s Institutional Review Board may be able to help you navigate emerging issues surrounding text data that is publicly available but could be sensitive, such as social media data.

Assessing Data Sources for Bias

Thinking critically about sources of data and the bias they may introduce to your project is important. It can be tempting to think that datasets are objective and that computational analysis can give you objective answers, however, the strength of the humanities is being able to interpret and understand subjectivity. Who created the data you are considering and for what purpose? What biases might they have held and how might that impact what is included or excluded from your data?

It is also important to think about the bias you may create as you choose your sources and assemble your corpus. If you are creating a corpus to explore how immigrant women are represented in 19th century American novels, you should consider who you are representing in your own corpus. Are any of the authors you are including women? Are any of them immigrants? Including different perspectives can give you a richer corpus that could lead to multiple insights that wouldn’t have been possible with a more limited corpus.

Another source of bias that you should consider is the bias in datasets used to train models you might use in your research and what impact they might have on your analysis. Research the models you are considering. What data were they trained on? Are there known issues with those datasets? If there are known bias issues with the model or you discover some, you will need to consider your options. Is it possible to remediate the model by either removing the biased dataset or adding new training data? Is there an alternative model trained on different data?

Data Quality and Features

Sources of text data each have their own characteristics depending on content type and whether the source was digitized, born digital, or converted from another medium. This may impact the quality of the data or give it certain characteristics. As you assemble your corpus, you should think critically about how the quality of the data and its features might impact your analysis or your decision to include it.

Text data sources that are born digital, meaning that they are created in digital formats rather than being converted or digitized, tend to have better quality data. However, this does not mean that they will necessarily be the best for your project or easier to work with. You should become familiar with your data sources, the way the data source impacts the text data, and options for improving the data quality if necessary.

Let’s look at two different content types, a novel and a newspaper, and how they are formatted. We’ll be working with novels from Project Gutenberg in the next lesson, including the novel “Emma” by Jane Austen. In this lesson we’ll compare the data from that ebook with OCR text data from a digitized newspaper of an article about Jane Austen.

Let’s explore the Project Gutenberg file for “Emma.” Project Gutenberg offers public domain ebooks in HTML or plain text. Uploaded versions must be proofread and often have had page numbers, headers, and footers removed. This makes for good quality plain text data that is easy to work with. However, it includes language about the project and the rights associated with the ebook at the beginning of each file that may need to be removed for cleaner text.

This novel is formatted to include a table of contents at the beginning that outlines its structure. Depending on your analysis, you could use these features to either divide the text data into its volumes and chapters or if you don’t need it, you can decide to remove the capitalized words volume and chapters from the corpus.

Now let’s look at a digitized image of an article about Jane Austen from the Library of Congress’s Chronicling America: Historic American Newspapers collection and its accompanying OCR text.

You can see that the text in the image is in columns. Because of the way the OCR process works, the OCR text data will be in columns as well and will preserve all the instances of words being broken up by this feature. When you look at the OCR text file, you can see that it also includes the text of all the other articles in the same image.

When you look at the quality of the text data, you can see that it is full of misspelled and broken up words. If you wanted to include it in a corpus, you might want to improve the quality of the text data by increasing the contrast or sharpening the image of the text you want and running it through OCR tools. An advanced technique involves running the image through three OCR programs and comparing the outputs against each other.

Assembling Your Corpus

Now that you have an understanding of what you need to consider when collecting data for a corpus, it can be useful to create a list with the requirements of your specific project to help you evaluate your data. Your corpus might be made up from different sources that you are bringing together. It is important for you to document the sources for your data, including the date accessed, search terms you used, and any decisions you made about what to include or exclude. Whether you are able to make your corpus public later on will depend on the rights and restrictions of the sources used, so make sure to document that information as well.

Although it sounds impressive, Big Data doesn’t always make for a better project. The size of your corpus should depend on your project’s needs, your storage capacity, and your computing power. A smaller dataset with more targeted documents might actually be better at helping you arrive at the insights that you need, depending on your use case. Whether your corpus consists of hundreds of documents or millions, the important thing is to create the corpus that works best for your project.

Key Points

You will need to evaluate the suitability of data for inclusion in your corpus and will need to take into consideration issues such as legal/ethical restrictions and data quality among others.

It is important to think critically about data sources and the context of how they were created or assembled.

Becoming familiar with your data and its characteristics can help you prepare your data for analysis.

Preparing and Preprocessing Your Data

Overview

Teaching: 10 min

Exercises: 10 minQuestions

How can I prepare data for NLP?

What are tokenization, casing and lemmatization?

Objectives

Load a test document into Spacy.

Learn preprocessing tasks.

Preparing and Preprocessing Your Data

Collection

The first step to preparing your data is to collect it. Whether you use API’s to gather your material or some other method depends on your research interests. For this workshop, we’ll use pre-gathered data.

During the setup instructions, we asked you to download a number of files. These included about forty texts downloaded from Project Gutenberg, which will make up our corpus of texts for our hands on lessons in this course.

Take a moment to orient and familiarize yourself with them:

- Austen

- Chesteron

- Dickens

- Dumas

- Melville

- Shakespeare

While a full-sized corpus can include thousands of texts, these forty-odd texts will be enough for our illustrative purposes.

Loading Data into Python

We’ll start by mounting our Google Drive so that Colab can read the helper functions. We’ll also go through how many of these functions are written in this lesson.

# Run this cell to mount your Google Drive.

from google.colab import drive

drive.mount('/content/drive')

# Show existing colab notebooks and helpers.py file

from os import listdir

wksp_dir = '/content/drive/My Drive/Colab Notebooks/text-analysis'

listdir(wksp_dir)

# Add folder to colab's path so we can import the helper functions

import sys

sys.path.insert(0, wksp_dir)

Next, we have a corpus of text files we want to analyze. Let’s create a method to list those files. To make this method more flexible, we will also use glob to allow us to put in regular expressions so we can filter the files if so desired. glob is a tool for listing files in a directory whose file names match some pattern, like all files ending in *.txt.

!pip install pathlib parse

import glob

import os

from pathlib import Path

def create_file_list(directory, filter_str='*'):

files = Path(directory).glob(filter_str)

files_to_analyze = list(map(str, files))

return files_to_analyze

Alternatively, we can load this function from the helpers.py file we provided for learners in this course:

from helpers import create_file_list

Either way, now we can use that function to list the books in our corpus:

corpus_dir = '/content/drive/My Drive/Colab Notebooks/text-analysis/data/books'

corpus_file_list = create_file_list(corpus_dir)

print(corpus_file_list)

['/content/drive/My Drive/Colab Notebooks/text-analysis/data/books/dickens-olivertwist.txt', '/content/drive/My Drive/Colab Notebooks/text-analysis/data/books/chesterton-knewtoomuch.txt', '/content/drive/My Drive/Colab Notebooks/text-analysis/data/books/dumas-tenyearslater.txt', '/content/drive/My Drive/Colab Notebooks/text-analysis/data/books/dumas-twentyyearsafter.txt', '/content/drive/My Drive/Colab Notebooks/text-analysis/data/books/austen-pride.txt', '/content/drive/My Drive/Colab Notebooks/text-analysis/data/books/dickens-taleoftwocities.txt', '/content/drive/My Drive/Colab Notebooks/text-analysis/data/books/chesterton-whitehorse.txt', '/content/drive/My Drive/Colab Notebooks/text-analysis/data/books/dickens-hardtimes.txt', '/content/drive/My Drive/Colab Notebooks/text-analysis/data/books/austen-emma.txt', '/content/drive/My Drive/Colab Notebooks/text-analysis/data/books/chesterton-thursday.txt', '/content/drive/My Drive/Colab Notebooks/text-analysis/data/books/dumas-threemusketeers.txt', '/content/drive/My Drive/Colab Notebooks/text-analysis/data/books/chesterton-ball.txt', '/content/drive/My Drive/Colab Notebooks/text-analysis/data/books/austen-ladysusan.txt', '/content/drive/My Drive/Colab Notebooks/text-analysis/data/books/austen-persuasion.txt', '/content/drive/My Drive/Colab Notebooks/text-analysis/data/books/melville-conman.txt', '/content/drive/My Drive/Colab Notebooks/text-analysis/data/books/chesterton-napoleon.txt', '/content/drive/My Drive/Colab Notebooks/text-analysis/data/books/chesterton-brown.txt', '/content/drive/My Drive/Colab Notebooks/text-analysis/data/books/dumas-maninironmask.txt', '/content/drive/My Drive/Colab Notebooks/text-analysis/data/books/dumas-blacktulip.txt', '/content/drive/My Drive/Colab Notebooks/text-analysis/data/books/dickens-greatexpectations.txt', '/content/drive/My Drive/Colab Notebooks/text-analysis/data/books/dickens-ourmutualfriend.txt', '/content/drive/My Drive/Colab Notebooks/text-analysis/data/books/austen-sense.txt', '/content/drive/My Drive/Colab Notebooks/text-analysis/data/books/dickens-christmascarol.txt', '/content/drive/My Drive/Colab Notebooks/text-analysis/data/books/dickens-davidcopperfield.txt', '/content/drive/My Drive/Colab Notebooks/text-analysis/data/books/dickens-pickwickpapers.txt', '/content/drive/My Drive/Colab Notebooks/text-analysis/data/books/melville-bartleby.txt', '/content/drive/My Drive/Colab Notebooks/text-analysis/data/books/dickens-bleakhouse.txt', '/content/drive/My Drive/Colab Notebooks/text-analysis/data/books/dumas-montecristo.txt', '/content/drive/My Drive/Colab Notebooks/text-analysis/data/books/austen-northanger.txt', '/content/drive/My Drive/Colab Notebooks/text-analysis/data/books/melville-moby_dick.txt', '/content/drive/My Drive/Colab Notebooks/text-analysis/data/books/shakespeare-twelfthnight.txt', '/content/drive/My Drive/Colab Notebooks/text-analysis/data/books/melville-typee.txt', '/content/drive/My Drive/Colab Notebooks/text-analysis/data/books/shakespeare-romeo.txt', '/content/drive/My Drive/Colab Notebooks/text-analysis/data/books/melville-omoo.txt', '/content/drive/My Drive/Colab Notebooks/text-analysis/data/books/melville-piazzatales.txt', '/content/drive/My Drive/Colab Notebooks/text-analysis/data/books/shakespeare-muchado.txt', '/content/drive/My Drive/Colab Notebooks/text-analysis/data/books/shakespeare-midsummer.txt', '/content/drive/My Drive/Colab Notebooks/text-analysis/data/books/shakespeare-lear.txt', '/content/drive/My Drive/Colab Notebooks/text-analysis/data/books/melville-pierre.txt', '/content/drive/My Drive/Colab Notebooks/text-analysis/data/books/shakespeare-caesar.txt', '/content/drive/My Drive/Colab Notebooks/text-analysis/data/books/shakespeare-othello.txt']

We will use the full corpus later, but it might be useful to filter to just a few specific files. For example, if I want just documents written by Austen, I can filter on part of the file path name:

austen_list = create_file_list(corpus_dir, 'austen*')

print(austen_list)

['/content/drive/My Drive/Colab Notebooks/text-analysis/data/books/austen-pride.txt', '/content/drive/My Drive/Colab Notebooks/text-analysis/data/books/austen-emma.txt', '/content/drive/My Drive/Colab Notebooks/text-analysis/data/books/austen-ladysusan.txt', '/content/drive/My Drive/Colab Notebooks/text-analysis/data/books/austen-persuasion.txt', '/content/drive/My Drive/Colab Notebooks/text-analysis/data/books/austen-sense.txt', '/content/drive/My Drive/Colab Notebooks/text-analysis/data/books/austen-northanger.txt']

Let’s take a closer look at Emma. We are looking at the first full sentence, which begins with character 50 and ends at character 290.

preview_len = 290

emmapath = create_file_list(corpus_dir, 'austen-emma*')[0]

print(emmapath)

sentence = ""

with open(emmapath, 'r') as f:

sentence = f.read(preview_len)[50:preview_len]

print(sentence)

/content/drive/My Drive/Colab Notebooks/text-analysis/data/books/austen-emma.txt

Emma Woodhouse, handsome, clever, and rich, with a comfortable home

and happy disposition, seemed to unite some of the best blessings

of existence; and had lived nearly twenty-one years in the world

with very little to distress or vex her.

Preprocessing

Currently, our data is still in a format that is best for humans to read. Humans, without having to think too consciously about it, understand how words and sentences group up and divide into discrete units of meaning. We also understand that the words run, ran, and running are just different grammatical forms of the same underlying concept. Finally, not only do we understand how punctuation affects the meaning of a text, we also can make sense of texts that have odd amounts or odd placements of punctuation.

For example, Darcie Wilder’s literally show me a healthy person has very little capitalization or punctuation:

in the unauthorized biography of britney spears she says her advice is to lift 1 lb weights and always sing in elevators every time i left to skateboard in the schoolyard i would sing in the elevator i would sing britney spears really loud and once the door opened and there were so many people they heard everything so i never sang again

Across the texts in our corpus, our authors write with different styles, preferring different dictions, punctuation, and so on.

To prepare our data to be more uniformly understood by our NLP models, we need to (a) break it into smaller units, (b) replace words with their roots, and (c) remove unwanted common or unhelpful words and punctuation. These steps encompass the preprocessing stage of the interpretive loop.

Tokenization

Tokenization is the process of breaking down texts (strings of characters) into words, groups of words, and sentences. A string of characters needs to be understood by a program as smaller units so that it can be embedded. These are called tokens.

While our tokens will be single words for now, this will not always be the case. Different models have different ways of tokenizing strings. The strings may be broken down into multiple word tokens, single word tokens, or even components of words like letters or morphology. Punctuation may or may not be included.

We will be using a tokenizer that breaks documents into single words for this lesson.

Let’s load our tokenizer and test it with the first sentence of Emma:

import spacy

import en_core_web_sm

spacyt = spacy.load("en_core_web_sm")

We will define a tokenizer method with the text editor. Keep this open so we can add to it throughout the lesson.

class Our_Tokenizer:

def __init__(self):

#import spacy tokenizer/language model

self.nlp = en_core_web_sm.load()

self.nlp.max_length = 4500000 # increase max number of characters that spacy can process (default = 1,000,000)

def __call__(self, document):

tokens = self.nlp(document)

return tokens

This will load spacy and its preprocessing pipeline for English. Pipelines are a series of interrelated tasks, where the output of one task is used as an input for another. Different languages may have different rulesets, and therefore require different preprocessing pipelines. Running the document we created through the NLP model we loaded performs a variety of tasks for us. Let’s look at these in greater detail.

tokens = spacyt(sentence)

for t in tokens:

print(t.text)

Emma

Woodhouse

,

handsome

,

clever

,

and

rich

,

with

a

comfortable

home

and

happy

disposition

,

seemed

to

unite

some

of

the

best

blessings

of

existence

;

and

had

lived

nearly

twenty

-

one

years

in

the

world

with

very

little

to

distress

or

vex

her

.

The single sentence has been broken down into a set of tokens. Tokens in spacy aren’t just strings: They’re python objects with a variety of attributes. Full documentation for these attributes can be found at: https://spacy.io/api/token

Stems and Lemmas

Think about similar words, such as running, ran, and runs. All of these words have a similar root, but a computer does not know this. Without preprocessing, each of these words would be a new token.

Stemming and Lemmatization are used to group together words that are similar or forms of the same word.

Stemming is removing the conjugation and pluralized endings for words. For example, words like digitization, and digitizing might chopped down to digitiz.

Lemmatization is the more sophisticated of the two, and looks for the linguistic base of a word. Lemmatization can group words that mean the same thing but may not be grouped through simple stemming, such as irregular verbs like bring and brought.

Similarly, in naive tokenization, capital letters are considered different from non-capital letters, meaning that capitalized versions of words are considered different from non-capitalized versions. Converting all words to lower case ensures that capitalized and non-capitalized versions of words are considered the same.

These steps are taken to reduce the complexities of our NLP models and to allow us to train them from less data.

When we tokenized the first sentence of Emma above, Spacy also created a lemmatized version of itt. Let’s try accessing this by typing the following:

for t in tokens:

print(t.lemma)

14931068470291635495

17859265536816163747

2593208677638477497

7792995567492812500

2593208677638477497

5763234570816168059

2593208677638477497

2283656566040971221

10580761479554314246

2593208677638477497

12510949447758279278

11901859001352538922

2973437733319511985

12006852138382633966

962983613142996970

2283656566040971221

244022080605231780

3083117615156646091

2593208677638477497

15203660437495798636

3791531372978436496

1872149278863210280

7000492816108906599

886050111519832510

7425985699627899538

5711639017775284443

451024245859800093

962983613142996970

886050111519832510

4708766880135230039

631425121691394544

2283656566040971221

14692702688101715474

13874798850131827181

16179521462386381682

8304598090389628520

9153284864653046197

17454115351911680600

14889849580704678361

3002984154512732771

7425985699627899538

1703489418272052182

962983613142996970

12510949447758279278

9548244504980166557

9778055143417507723

3791531372978436496

14526277127440575953

3740602843040177340

14980716871601793913

6740321247510922449

12646065887601541794

962983613142996970

Spacy stores words by an ID number, and not as a full string, to save space in memory. Many spacy functions will return numbers and not words as you might expect. Fortunately, adding an underscore for spacy will return text representations instead. We will also add in the lower case function so that all words are lower case.

for t in tokens:

print(str.lower(t.lemma_))

emma

woodhouse

,

handsome

,

clever

,

and

rich

,

with

a

comfortable

home

and

happy

disposition

,

seem

to

unite

some

of

the

good

blessing

of

existence

;

and

have

live

nearly

twenty

-

one

year

in

the

world

with

very

little

to

distress

or

vex

she

.

Notice how words like best and her have been changed to their root words like good and she. Let’s change our tokenizer to save the lower cased, lemmatized versions of words instead of the original words.

class Our_Tokenizer:

def __init__(self):

# import spacy tokenizer/language model

self.nlp = en_core_web_sm.load()

self.nlp.max_length = 4500000 # increase max number of characters that spacy can process (default = 1,000,000)

def __call__(self, document):

tokens = self.nlp(document)

simplified_tokens = [str.lower(token.lemma_) for token in tokens]

return simplified_tokens

Stop-Words and Punctuation

Stop-words are common words that are often filtered out for more efficient natural language data processing. Words such as the and and don’t necessarily tell us a lot about a document’s content and are often removed in simpler models. Stop lists (groups of stop words) are curated by sorting terms by their collection frequency, or the total number of times that they appear in a document or corpus. Punctuation also is something we are not interested in, at least not until we get to more complex models. Many open-source software packages for language processing, such as Spacy, include stop lists. Let’s look at Spacy’s stopword list.

from spacy.lang.en.stop_words import STOP_WORDS

print(STOP_WORDS)

{''s', 'must', 'again', 'had', 'much', 'a', 'becomes', 'mostly', 'once', 'should', 'anyway', 'call', 'front', 'whence', ''ll', 'whereas', 'therein', 'himself', 'within', 'ourselves', 'than', 'they', 'toward', 'latterly', 'may', 'what', 'her', 'nowhere', 'so', 'whenever', 'herself', 'other', 'get', 'become', 'namely', 'done', 'could', 'although', 'which', 'fifteen', 'seems', 'hereafter', 'whereafter', 'two', "'ve", 'to', 'his', 'one', ''d', 'forty', 'being', 'i', 'four', 'whoever', 'somehow', 'indeed', 'that', 'afterwards', 'us', 'she', "'d", 'herein', ''ll', 'keep', 'latter', 'onto', 'just', 'too', "'m", ''re', 'you', 'no', 'thereby', 'various', 'enough', 'go', 'myself', 'first', 'seemed', 'up', 'until', 'yourselves', 'while', 'ours', 'can', 'am', 'throughout', 'hereupon', 'whereupon', 'somewhere', 'fifty', 'those', 'quite', 'together', 'wherein', 'because', 'itself', 'hundred', 'neither', 'give', 'alone', 'them', 'nor', 'as', 'hers', 'into', 'is', 'several', 'thus', 'whom', 'why', 'over', 'thence', 'doing', 'own', 'amongst', 'thereupon', 'otherwise', 'sometime', 'for', 'full', 'anyhow', 'nine', 'even', 'never', 'your', 'who', 'others', 'whole', 'hereby', 'ever', 'or', 'and', 'side', 'though', 'except', 'him', 'now', 'mine', 'none', 'sixty', "n't", 'nobody', ''m', 'well', "'s", 'then', 'part', 'someone', 'me', 'six', 'less', 'however', 'make', 'upon', ''s', ''re', 'back', 'did', 'during', 'when', ''d', 'perhaps', "'re", 'we', 'hence', 'any', 'our', 'cannot', 'moreover', 'along', 'whither', 'by', 'such', 'via', 'against', 'the', 'most', 'but', 'often', 'where', 'each', 'further', 'whereby', 'ca', 'here', 'he', 'regarding', 'every', 'always', 'are', 'anywhere', 'wherever', 'using', 'there', 'anyone', 'been', 'would', 'with', 'name', 'some', 'might', 'yours', 'becoming', 'seeming', 'former', 'only', 'it', 'became', 'since', 'also', 'beside', 'their', 'else', 'around', 're', 'five', 'an', 'anything', 'please', 'elsewhere', 'themselves', 'everyone', 'next', 'will', 'yourself', 'twelve', 'few', 'behind', 'nothing', 'seem', 'bottom', 'both', 'say', 'out', 'take', 'all', 'used', 'therefore', 'below', 'almost', 'towards', 'many', 'sometimes', 'put', 'were', 'ten', 'of', 'last', 'its', 'under', 'nevertheless', 'whatever', 'something', 'off', 'does', 'top', 'meanwhile', 'how', 'already', 'per', 'beyond', 'everything', 'not', 'thereafter', 'eleven', 'n't', 'above', 'eight', 'before', 'noone', 'besides', 'twenty', 'do', 'everywhere', 'due', 'empty', 'least', 'between', 'down', 'either', 'across', 'see', 'three', 'on', 'formerly', 'be', 'very', 'rather', 'made', 'has', 'this', 'move', 'beforehand', 'if', 'my', 'n't', "'ll", 'third', 'without', ''m', 'yet', 'after', 'still', 'same', 'show', 'in', 'more', 'unless', 'from', 'really', 'whether', ''ve', 'serious', 'these', 'was', 'amount', 'whose', 'have', 'through', 'thru', ''ve', 'about', 'among', 'another', 'at'}

It’s possible to add and remove words as well, for example, zebra:

# remember, we need to tokenize things in order for our model to analyze them.

z = spacyt("zebra")[0]

print(z.is_stop) # False

# add zebra to our stopword list

STOP_WORDS.add("zebra")

spacyt = spacy.load("en_core_web_sm")

z = spacyt("zebra")[0]

print(z.is_stop) # True

# remove zebra from our list.

STOP_WORDS.remove("zebra")

spacyt = spacy.load("en_core_web_sm")

z = spacyt("zebra")[0]

print(z.is_stop) # False

Let’s add “Emma” to our list of stopwords, since knowing that the name “Emma” is often in Jane Austin does not tell us anything interesting.

This will only adjust the stopwords for the current session, but it is possible to save them if desired. More information about how to do this can be found in the Spacy documentation. You might use this stopword list to filter words from documents using spacy, or just by manually iterating through it like a list.

Let’s see what our example looks like without stopwords and punctuation:

# add emma to our stopword list

STOP_WORDS.add("emma")

spacyt = spacy.load("en_core_web_sm")

# retokenize our sentence

tokens = spacyt(sentence)

for token in tokens:

if not token.is_stop and not token.is_punct:

print(str.lower(token.lemma_))

woodhouse

handsome

clever

rich

comfortable

home

happy

disposition

unite

good

blessing

existence

live

nearly

year

world

little

distress

vex

Notice that because we added emma to our stopwords, she is not in our preprocessed sentence any more. Other stopwords are also missing such as numbers.

Let’s filter out stopwords and punctuation from our custom tokenizer now as well:

class Our_Tokenizer:

def __init__(self):

# import spacy tokenizer/language model

self.nlp = en_core_web_sm.load()

self.nlp.max_length = 4500000 # increase max number of characters that spacy can process (default = 1,000,000)

def __call__(self, document):

tokens = self.nlp(document)

simplified_tokens = []

for token in tokens:

if not token.is_stop and not token.is_punct:

simplified_tokens.append(str.lower(token.lemma_))

return simplified_tokens

Parts of Speech

While we can manually add Emma to our stopword list, it may occur to you that novels are filled with characters with unique and unpredictable names. We’ve already missed the word “Woodhouse” from our list. Creating an enumerated list of all of the possible character names seems impossible.

One way we might address this problem is by using Parts of speech (POS) tagging. POS are things such as nouns, verbs, and adjectives. POS tags often prove useful, so some tokenizers also have built in POS tagging done. Spacy is one such library. These tags are not 100% accurate, but they are a great place to start. Spacy’s POS tags can be used by accessing the pos_ method for each token.

for token in tokens:

if token.is_stop == False and token.is_punct == False:

print(str.lower(token.lemma_)+" "+token.pos_)

woodhouse PROPN

handsome ADJ

clever ADJ

rich ADJ

comfortable ADJ

home NOUN

SPACE

happy ADJ

disposition NOUN

unite VERB

good ADJ

blessing NOUN

SPACE

existence NOUN

live VERB

nearly ADV

year NOUN

world NOUN

SPACE

little ADJ

distress VERB

vex VERB

SPACE

Because our dataset is relatively small, we may find that character names and places weigh very heavily in our early models. We also have a number of blank or white space tokens, which we will also want to remove.

We will finish our special tokenizer by removing punctuation and proper nouns from our documents:

class Our_Tokenizer:

def __init__(self):

# import spacy tokenizer/language model

self.nlp = en_core_web_sm.load()

self.nlp.max_length = 4500000 # increase max number of characters that spacy can process (default = 1,000,000)

def __call__(self, document):

tokens = self.nlp(document)

simplified_tokens = [

#our helper function expects spacy tokens. It will take care of making them lowercase lemmas.

token for token in tokens

if not token.is_stop

and not token.is_punct

and token.pos_ != "PROPN"

]

return simplified_tokens

Alternative, instead of “blacklisting” all of the parts of speech we don’t want to include, we can “whitelist” just the few that we want, based on what they information they might contribute to the meaning of a text:

class Our_Tokenizer:

def __init__(self):

# import spacy tokenizer/language model

self.nlp = en_core_web_sm.load()

self.nlp.max_length = 4500000 # increase max number of characters that spacy can process (default = 1,000,000)

def __call__(self, document):

tokens = self.nlp(document)

simplified_tokens = [

#our helper function expects spacy tokens. It will take care of making them lowercase lemmas.

token for token in tokens

if not token.is_stop

and not token.is_punct

and token.pos_ in {"ADJ", "ADV", "INTJ", "NOUN", "VERB"}

]

return simplified_tokens

Either way, let’s test our custom tokenizer on this selection of text to see how it works.

tokenizer = Our_Tokenizer()

tokens = tokenizer(sentence)

print(tokens)

['handsome', 'clever', 'rich', 'comfortable', 'home', 'happy', 'disposition', 'unite', 'good', 'blessing', 'existence', 'live', 'nearly', 'year', 'world', 'little', 'distress', 'vex']

Putting it All Together

Now that we’ve built a tokenizer we’re happy with, lets use it to create lemmatized versions of all the books in our corpus.

That is, we want to turn this:

Emma Woodhouse, handsome, clever, and rich, with a comfortable home

and happy disposition, seemed to unite some of the best blessings

of existence; and had lived nearly twenty-one years in the world

with very little to distress or vex her.

into this:

handsome

clever

rich

comfortable

home

happy

disposition

seem

unite

good

blessing

existence

live

nearly

year

world

very

little

distress

vex

To help make this quick for all the text in all our books, we’ll use a helper function we prepared for learners to use our tokenizer, do the casing and lemmatization we discussed earlier, and write the results to a file:

from helpers import lemmatize_files

lemma_file_list = lemmatize_files(tokenizer, corpus_file_list)

['/content/drive/My Drive/Colab Notebooks/text-analysis/data/books/dickens-olivertwist.txt.lemmas', '/content/drive/My Drive/Colab Notebooks/text-analysis/data/books/chesterton-knewtoomuch.txt.lemmas', '/content/drive/My Drive/Colab Notebooks/text-analysis/data/books/dumas-tenyearslater.txt.lemmas', '/content/drive/My Drive/Colab Notebooks/text-analysis/data/books/dumas-twentyyearsafter.txt.lemmas', '/content/drive/My Drive/Colab Notebooks/text-analysis/data/books/austen-pride.txt.lemmas', '/content/drive/My Drive/Colab Notebooks/text-analysis/data/books/dickens-taleoftwocities.txt.lemmas', '/content/drive/My Drive/Colab Notebooks/text-analysis/data/books/chesterton-whitehorse.txt.lemmas', '/content/drive/My Drive/Colab Notebooks/text-analysis/data/books/dickens-hardtimes.txt.lemmas', '/content/drive/My Drive/Colab Notebooks/text-analysis/data/books/austen-emma.txt.lemmas', '/content/drive/My Drive/Colab Notebooks/text-analysis/data/books/chesterton-thursday.txt.lemmas', '/content/drive/My Drive/Colab Notebooks/text-analysis/data/books/dumas-threemusketeers.txt.lemmas', '/content/drive/My Drive/Colab Notebooks/text-analysis/data/books/chesterton-ball.txt.lemmas', '/content/drive/My Drive/Colab Notebooks/text-analysis/data/books/austen-ladysusan.txt.lemmas', '/content/drive/My Drive/Colab Notebooks/text-analysis/data/books/austen-persuasion.txt.lemmas', '/content/drive/My Drive/Colab Notebooks/text-analysis/data/books/melville-conman.txt.lemmas', '/content/drive/My Drive/Colab Notebooks/text-analysis/data/books/chesterton-napoleon.txt.lemmas', '/content/drive/My Drive/Colab Notebooks/text-analysis/data/books/chesterton-brown.txt.lemmas', '/content/drive/My Drive/Colab Notebooks/text-analysis/data/books/dumas-maninironmask.txt.lemmas', '/content/drive/My Drive/Colab Notebooks/text-analysis/data/books/dumas-blacktulip.txt.lemmas', '/content/drive/My Drive/Colab Notebooks/text-analysis/data/books/dickens-greatexpectations.txt.lemmas', '/content/drive/My Drive/Colab Notebooks/text-analysis/data/books/dickens-ourmutualfriend.txt.lemmas', '/content/drive/My Drive/Colab Notebooks/text-analysis/data/books/austen-sense.txt.lemmas', '/content/drive/My Drive/Colab Notebooks/text-analysis/data/books/dickens-christmascarol.txt.lemmas', '/content/drive/My Drive/Colab Notebooks/text-analysis/data/books/dickens-davidcopperfield.txt.lemmas', '/content/drive/My Drive/Colab Notebooks/text-analysis/data/books/dickens-pickwickpapers.txt.lemmas', '/content/drive/My Drive/Colab Notebooks/text-analysis/data/books/melville-bartleby.txt.lemmas', '/content/drive/My Drive/Colab Notebooks/text-analysis/data/books/dickens-bleakhouse.txt.lemmas', '/content/drive/My Drive/Colab Notebooks/text-analysis/data/books/dumas-montecristo.txt.lemmas', '/content/drive/My Drive/Colab Notebooks/text-analysis/data/books/austen-northanger.txt.lemmas', '/content/drive/My Drive/Colab Notebooks/text-analysis/data/books/melville-moby_dick.txt.lemmas', '/content/drive/My Drive/Colab Notebooks/text-analysis/data/books/shakespeare-twelfthnight.txt.lemmas', '/content/drive/My Drive/Colab Notebooks/text-analysis/data/books/melville-typee.txt.lemmas', '/content/drive/My Drive/Colab Notebooks/text-analysis/data/books/shakespeare-romeo.txt.lemmas', '/content/drive/My Drive/Colab Notebooks/text-analysis/data/books/melville-omoo.txt.lemmas', '/content/drive/My Drive/Colab Notebooks/text-analysis/data/books/melville-piazzatales.txt.lemmas', '/content/drive/My Drive/Colab Notebooks/text-analysis/data/books/shakespeare-muchado.txt.lemmas', '/content/drive/My Drive/Colab Notebooks/text-analysis/data/books/shakespeare-midsummer.txt.lemmas', '/content/drive/My Drive/Colab Notebooks/text-analysis/data/books/shakespeare-lear.txt.lemmas', '/content/drive/My Drive/Colab Notebooks/text-analysis/data/books/melville-pierre.txt.lemmas', '/content/drive/My Drive/Colab Notebooks/text-analysis/data/books/shakespeare-caesar.txt.lemmas', '/content/drive/My Drive/Colab Notebooks/text-analysis/data/books/shakespeare-othello.txt.lemmas']

This process may take several minutes to run. Doing this preprocessing now however will save us much, much time later.

Saving Our Progress

Let’s save our progress by storing a spreadsheet (*.csv or *.xlsx file) that lists all our authors, books, and associated filenames, both the original and lemmatized copies.

We’ll use another helper we prepared to make this easy:

from helpers import parse_into_dataframe

pattern = "/content/drive/My Drive/Colab Notebooks/text-analysis/data/books/{author}-{title}.txt"

data = parse_into_dataframe(pattern, corpus_file_list)

data["Lemma_File"] = lemma_file_list

Finally, we’ll save this table to a file:

data.to_csv("/content/drive/My Drive/Colab Notebooks/text-analysis/data/data.csv", index=False)

Outro and Conclusion

This lesson has covered a number of preprocessing steps. We created a list of our files in our corpus, which we can use in future lessons. We customized a tokenizer from Spacy, to better suit the needs of our corpus, which we can also use moving forward.

Next lesson, we will start talking about the concepts behind our model.

Key Points

Tokenization breaks strings into smaller parts for analysis.

Casing removes capital letters.

Stopwords are common words that do not contain much useful information.

Lemmatization reduces words to their root form.

Vector Space and Distance

Overview

Teaching: 20 min

Exercises: 20 minQuestions

How can we model documents effectively?

How can we measure similarity between documents?

What’s the difference between cosine similarity and distance?

Objectives

Visualize vector space in a 2D model.

Learn about embeddings.

Learn about cosine similarity and distance.

Vector Space

Now that we’ve preprocessed our data, let’s move to the next step of the interpretative loop: generating a text embedding.

Many NLP models make use of a concept called Vector Space. The concept works like this:

- We create embeddings, or mathematical surrogates, of words and documents in vector space. These embeddings can be represented as sets of coordinates in multidimensional space, or as multi-dimensional matrices.

- These embeddings should be based on some sort of feature extraction, meaning that meaningful features from our original documents are somehow represented in our embedding. This will make it so that relationships between embeddings in this vector space will correspond to relationships in the actual documents.

Bags of Words

In the models we’ll look at today, we have a “bag of words” assumption as well. We will not consider the placement of words in sentences, their context, or their conjugation into different forms (run vs ran), not until later in this course.

A “bag of words” model is like putting all words from a sentence in a bag and just being concerned with how many of each word you have, not their order or context.

Worked Example: Bag of Words

Let’s suppose we want to model a small, simple set of toy documents. Our entire corpus of documents will only have two words, to and be. We have four documents, A, B, C and D:

- A: be be be be be be be be be be to

- B: to be to be to be to be to be to be to be to be

- C: to to be be

- D: to be to be

We will start by embedding words using a “one hot” embedding algorithm. Each document is a new row in our table. Every time word ‘to’ shows up in a document, we add one to our value for the ‘to’ dimension for that row, and zero to every other dimension. Every time ‘be’ shows up in our document, we will add one to our value for the ‘be’ dimension for that row, and zero to every other dimension.

How does this corpus look in vector space? We can display our model using a document-term matrix, which looks like the following:

| Document | to | be |

|---|---|---|

| Document A | 1 | 10 |

| Document B | 8 | 8 |

| Document C | 2 | 2 |

| Document D | 2 | 2 |

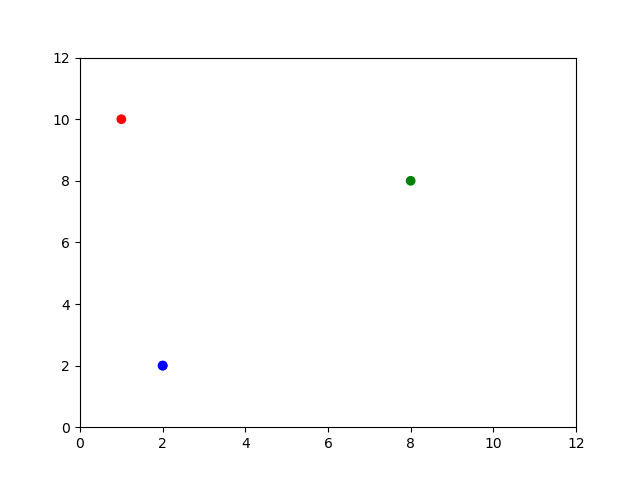

Notice that documents C and D are represented exactly the same. This is unavoidable right now because of our “bag of words” assumption, but much later on we will try to represent positions of words in our models as well. Let’s visualize this using Python.

import numpy as np

import matplotlib.pyplot as plt

corpus = np.array([[1,10],[8,8],[2,2],[2,2]])

print(corpus)

[[ 1 10]

[ 8 8]

[ 2 2]

[ 2 2]]

Graphing our model

We don’t just have to think of our words as columns. We can also think of them as dimensions, and the values as coordinates for each document.

# matplotlib expects a list of values by column, not by row.

# We can simply turn our table on its edge so rows become columns and vice versa.

corpusT = np.transpose(corpus)

print(corpusT)

[[ 1 8 2 2]

[10 8 2 2]]

X = corpusT[0]

Y = corpusT[1]

# define some colors for each point. Since points A and B are the same, we'll have them as the same color.

mycolors = ['r','g','b','b']

# display our visualization

plt.scatter(X,Y, c=mycolors)

plt.xlim(0, 12)

plt.ylim(0, 12)

plt.show()

Distance and Similarity

What can we do with this simple model? At the heart of many research tasks is distance or similarity, in some sense. When we classify or search for documents, we are asking for documents that are “close to” some known examples or search terms. When we explore the topics in our documents, we are asking for a small set of concepts that capture and help explain as much as the ways our documents might differ from one another. And so on.

There are two measures of distance/similarity we’ll consider here: Euclidean distance and cosine similarity.

Euclidean Distance

The Euclidian distance formula makes use of the Pythagorean theorem, where $a^2 + b^2 = c^2$. We can draw a triangle between two points, and calculate the hypotenuse to find the distance. This distance formula works in two dimensions, but can also be generalized over as many dimensions as we want. Let’s use distance to compare A to B, C and D. We’ll say the closer two points are, the smaller their distance, so the more similar they are.

from sklearn.metrics.pairwise import euclidean_distances as dist

#What is closest to document D?

D = [corpus[3]]

print(D)

[array([2, 2])]

dist(corpus, D)

array([[8.06225775],

[8.48528137],

[0. ],

[0. ]])

Distance may seem like a decent metric at first. Certainly, it makes sense that document D has zero distance from itself. C and D are also similar, which makes sense given our bag of words assumption. But take a closer look at documents B and D. Document B is just document D copy and pasted 4 times! How can it be less similar to document D than document B?

Distance is highly sensitive to document length. Because document A is shorter than document B, it is closer to document D. While distance may be an intuitive measure of similarity, it is actually highly dependent on document length.

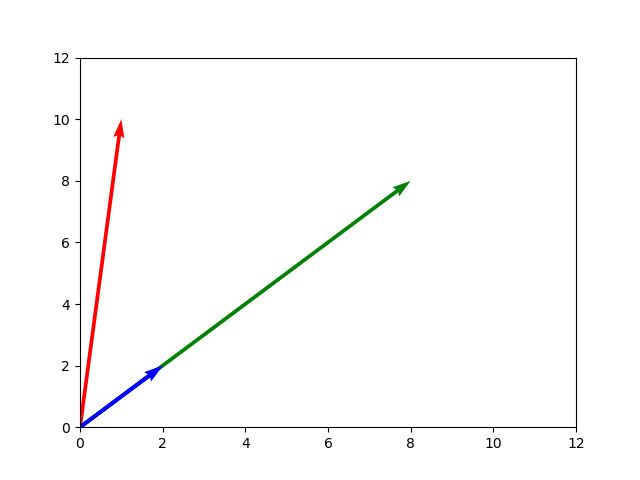

We need a different metric that will better represent similarity. This is where vectors come in. Vectors are geometric objects with both length and direction. They can be thought of as a ray or an arrow pointing from one point to another.

Vectors can be added, subtracted, or multiplied together, just like regular numbers can. Our model will consider documents as vectors instead of points, going from the origin at $(0,0)$ to each document. Let’s visualize this.

# we need the point of origin in order to draw a vector. Numpy has a function to create an array full of zeroes.

origin = np.zeros([1,4])

print(origin)

[[0. 0. 0. 0.]]

# draw our vectors

plt.quiver(origin, origin, X, Y, color=mycolors, angles='xy', scale_units='xy', scale=1)

plt.xlim(0, 12)

plt.ylim(0, 12)

plt.show()

Document A and document D are headed in exactly the same direction, which matches our intution that both documents are in some way similar to each other, even though they differ in length.

Cosine Similarity

Cosine Similarity is a metric which is only concerned with the direction of the vector, not its length. This means the length of a document will no longer factor into our similarity metric. The more similar two vectors are in direction, the closer the cosine similarity score gets to 1. The more orthogonal two vectors get (the more at a right angle they are), the closer it gets to 0. And as the more they point in opposite directions, the closer it gets to -1.

You can think of cosine similarity between vectors as signposts aimed out into multidimensional space. Two similar documents going in the same direction have a high cosine similarity, even if one of them is much further away in that direction.

Now that we know what cosine similarity is, how does this metric compare our documents?

from sklearn.metrics.pairwise import cosine_similarity as cs

cs(corpus, D)

array([[0.7739573],

[1. ],

[1. ],

[1. ]])

Both A and D are considered similar by this metric. Cosine similarity is used by many models as a measure of similarity between documents and words.

Generalizing over more dimensions

If we want to add another word to our model, we can add another dimension, which we can represent as another column in our table. Let’s add more documents with new words in them.

- E: be or not be

- F: to be or not to be

| Document | to | be | or | not |

|---|---|---|---|---|

| Document A | 1 | 10 | 0 | 0 |

| Document B | 8 | 8 | 0 | 0 |

| Document C | 2 | 2 | 0 | 0 |

| Document D | 2 | 2 | 0 | 0 |

| Document E | 0 | 2 | 1 | 1 |

| Document F | 2 | 2 | 1 | 1 |

We can keep adding dimensions for however many words we want to add. It’s easy to imagine vector space with two or three dimensions, but visualizing this mentally will rapidly become downright impossible as we add more and more words. Vocabularies for natural languages can easily reach tens of thousands of words.

Keep in mind, it’s not necessary to visualize how a high dimensional vector space looks. These relationships and formulae work over an arbitrary number of dimensions. Our methods for how to measure similarity will carry over, even if drawing a graph is no longer possible.

# add two new dimensions to our corpus

corpus = np.hstack((corpus, np.zeros((4,2))))

print(corpus)

[[ 1. 10. 0. 0.]

[ 8. 8. 0. 0.]

[ 2. 2. 0. 0.]

[ 2. 2. 0. 0.]]

E = np.array([[0,2,1,1]])

F = np.array([[2,2,1,1]])

#add document E to our corpus

corpus = np.vstack((corpus, E))

print(corpus)

[[ 1. 10. 0. 0.]

[ 8. 8. 0. 0.]

[ 2. 2. 0. 0.]

[ 2. 2. 0. 0.]

[ 0. 2. 1. 1.]]

What do you think the most similar document is to document F?

cs(corpus, F)

array([[0.69224845],

[0.89442719],

[0.89442719],

[0.89442719],

[0.77459667]])

This new document seems most similar to the documents B,C and D.

This principle of using vector space will hold up over an arbitrary number of dimensions, and therefore over a vocabulary of arbitrary size.

This is the essence of vector space modeling: documents are embedded as vectors in very high dimensional space.

How we define these dimensions and the methods for feature extraction may change and become more complex, but the essential idea remains the same.

Next, we will discuss TF-IDF, which balances the above “bag of words” approach against the fact that some words are more or less interesting: whale conveys more useful information than the, for example.

Key Points

We model documents by plotting them in high dimensional space.

Distance is highly dependent on document length.

Documents are modeled as vectors so cosine similarity can be used as a similarity metric.

Document Embeddings and TF-IDF

Overview

Teaching: 20 min

Exercises: 10 minQuestions

What is a document embedding?

What is TF-IDF?

Objectives

Produce TF-IDF matrix on a corpus

Understand how TF-IDF relates to rare/common words

The method of using word counts is just one way we might embed a document in vector space.

Let’s talk about more complex and representational ways of constructing document embeddings.

To start, imagine we want to represent each word in our model individually, instead of considering an entire document.

How individual words are represented in vector space is something called “word embeddings” and they are an important concept in NLP.

One hot encoding: Limitations

How would we make word embeddings for a simple document such as “Feed the duck”?

Let’s imagine we have a vector space with a million different words in our corpus, and we are just looking at part of the vector space below.

| dodge | duck | … | farm | feather | feed | … | tan | the | |

|---|---|---|---|---|---|---|---|---|---|

| feed | 0 | 0 | 0 | 0 | 1 | 0 | 0 | ||

| the | 0 | 0 | 0 | 0 | 0 | 0 | 1 | ||

| duck | 0 | 1 | 0 | 0 | 0 | 0 | 0 | ||

| Document | 0 | 1 | 0 | 0 | 1 | 0 | 1 |

Similar to what we did in the previous lesson, we can see that each word embedding gives a 1 for a dimension corresponding to the word, and a zero for every other dimension. This kind of encoding is known as “one hot” encoding, where a single value is 1 and all others are 0.

Once we have all the word embeddings for each word in the document, we sum them all up to get the document embedding. This is the simplest and most intuitive way to construct a document embedding from a set of word embeddings.

But does it accurately represent the importance of each word?

Our next model, TF-IDF, will embed words with different values rather than just 0 or 1.

TF-IDF Basics

Currently our model assumes all words are created equal and are all equally important. However, in the real world we know that certain words are more important than others.

For example, in a set of novels, knowing one novel contains the word the 100 times does not tell us much about it. However, if the novel contains a rarer word such as whale 100 times, that may tell us quite a bit about its content.

A more accurate model would weigh these rarer words more heavily, and more common words less heavily, so that their relative importance is part of our model.

However, rare is a relative term. In a corpus of documents about blue whales, the term whale may be present in nearly every document. In that case, other words may be rarer and more informative. How do we determine how these weights are done?

One method for constructing more advanced word embeddings is a model called TF-IDF.

TF-IDF stands for term frequency-inverse document frequency and can be calculated for each document, d, and term, t, in a corpus. The calculation consists of two parts: term frequency and inverse document frequency. We multiply the two terms to get the TF-IDF value.

Term frequency(t,d) is a measure for how frequently a term, t, occurs in a document, d. The simplest way to calculate term frequency is by simply adding up the number of times a term occurs in a document, and dividing by the total word count in the document.

Inverse document frequency measures a term’s importance. Document frequency is the number of documents, N, a term occurs in, so inverse document frequency gives higher scores to words that occur in fewer documents. This is represented by the equation:

IDF(t) = ln[(N+1) / (DF(t)+1)]

where…

- N represents the total number of documents in the corpus

- DF(t) represents document frequency for a particular term/word, t. This is the number of documents a term occurs in.

The key thing to understand is that words that occur in many documents produce smaller IDF values since the denominator grows with DF(t).

We can also embed documents in vector space using TF-IDF scores rather than simple word counts. This also weakens the impact of stop-words, since due to their common nature, they have very low scores.

Now that we’ve seen how TF-IDF works, let’s put it into practice.

Worked Example: TD-IDF

Earlier, we preprocessed our data to lemmatize each file in our corpus, then saved our results for later.

Let’s load our data back in to continue where we left off:

from pandas import read_csv

data = read_csv("/content/drive/My Drive/Colab Notebooks/text-analysis/data/data.csv")

TD-IDF Vectorizer

Next, let’s load a vectorizer from sklearn that will help represent our corpus in TF-IDF vector space for us.

from sklearn.feature_extraction.text import TfidfVectorizer

vectorizer = TfidfVectorizer(input='filename', max_df=.6, min_df=.1)

Here, max_df=.6 removes terms that appear in more than 60% of our documents (overly common words like the, a, an) and min_df=.1 removes terms that appear in less than 10% of our documents (overly rare words like specific character names, typos, or punctuation the tokenizer doesn’t understand). We’re looking for that sweet spot where terms are frequent enough for us to build theoretical understanding of what they mean for our corpus, but not so frequent that they can’t help us tell our documents apart.

Now that we have our vectorizer loaded, let’s used it to represent our data.

tfidf = vectorizer.fit_transform(list(data["Lemma_File"]))

print(tfidf.shape)

(41, 9879)

Here, tfidf.shape shows us the number of rows (books) and columns (words) are in our model.

Check Your Understanding:

max_dfandmin_dfTry different values for

max_dfandmin_df. How does increasing/decreasing each value affect the number of columns (words) that get included in the model?Solution

Increasing

max_dfresults in more words being included in the more, since a highermax_dfcorresponds to accepting more common words in the model. A highermax_dfaccepts more words likely to be stopwords.Inversely, increasing

min_dfreduces the number of words in the more, since a highermin_dfcorresponds to removing more rare words from the model. A highermin_dfremoves more words likely to be typos, names of characters, and so on.

Inspecting Results

We have a huge number of dimensions in the columns of our matrix (just shy of 10,000), where each one of which represents a word. We also have a number of documents (about forty), each represented as a row.

Let’s take a look at some of the words in our documents. Each of these represents a dimension in our model.

vectorizer.get_feature_names_out()[0:5]

array(['15th', '1st', 'aback', 'abandonment', 'abase'], dtype=object)

What is the weight of those words?

print(vectorizer.idf_[0:5]) # weights for each token

[2.79175947 2.94591015 2.25276297 2.25276297 2.43508453]

Let’s show the weight for all the words:

from pandas import DataFrame

tfidf_data = DataFrame(vectorizer.idf_, index=vectorizer.get_feature_names_out(), columns=["Weight"])

tfidf_data

Weight

15th 2.791759

1st 2.945910

aback 2.252763

abandonment 2.252763

abase 2.435085

... ...

zealously 2.945910

zenith 2.791759

zest 2.791759

zigzag 2.945910

zone 2.791759

tfidf_data.sort_values(by="Weight")

That was ordered alphabetically. Let’s try from lowest to heighest weight:

Weight

unaccountable 1.518794

nest 1.518794

needless 1.518794

hundred 1.518794

hunger 1.518794

... ...

incurably 2.945910

indecent 2.945910

indeed 2.945910

incantation 2.945910

gentlest 2.945910

Your Mileage May Vary

The results above will differ based on how you configured your tokenizer and vectorizer earlier.

Values are no longer just whole numbers such as 0, 1 or 2. Instead, they are weighted according to how often they occur. More common words have lower weights, and less common words have higher weights.

TF-IDF Summary

In this lesson, we learned about document embeddings and how they could be done in multiple ways. While one hot encoding is a simple way of doing embeddings, it may not be the best representation. TF-IDF is another way of performing these embeddings that improves the representation of words in our model by weighting them. TF-IDF is often used as an intermediate step in some of the more advanced models we will construct later.

Key Points

Some words convey more information about a corpus than others

One-hot encodings treat all words equally

TF-IDF encodings weigh overly common words lower

Latent Semantic Analysis

Overview

Teaching: 20 min

Exercises: 10 minQuestions

What is topic modeling?

What is Latent Semantic Analysis (LSA)?

Objectives

Use LSA to explore topics in a corpus

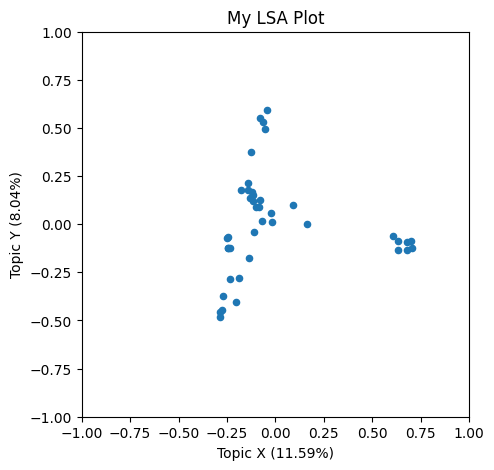

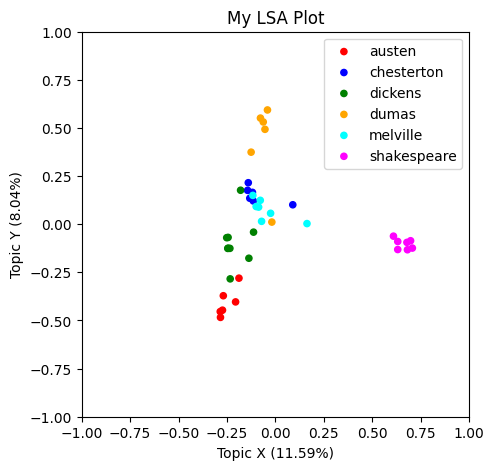

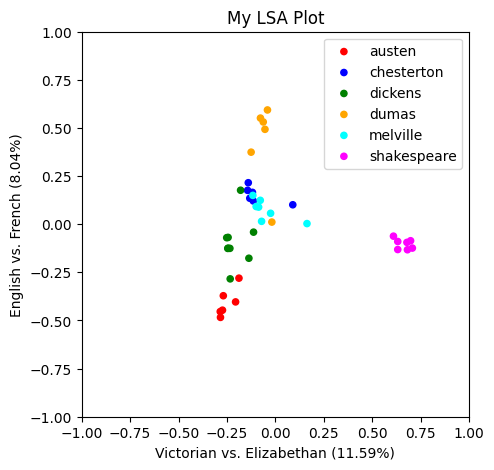

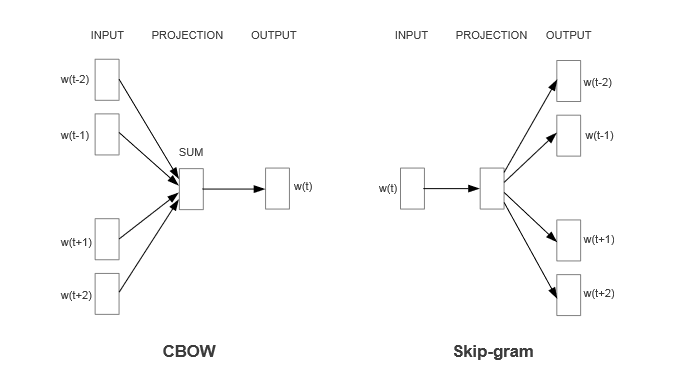

Produce and interpret an LSA plot

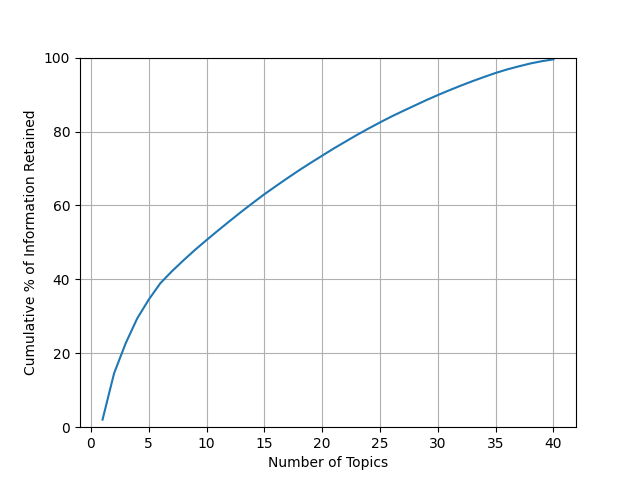

So far, we’ve learned the kinds of task NLP can be used for, preprocessed our data, and represented it as a TF-IDF vector space.

Now, we begin to close the loop with Topic Modeling — one of many embedding-related tasks possible with NLP.

Topic Modeling is a frequent goal of text analysis. Topics are the things that a document is about, by some sense of “about.” We could think of topics as:

- discrete categories that your documents belong to, such as fiction vs. non-fiction

- or spectra of subject matter that your documents contain in differing amounts, such as being about politics, cooking, racing, dragons, …

In the first case, we could use machine learning to predict discrete categories, such as trying to determine the author of the Federalist Papers.

In the second case, we could try to determine the least number of topics that provides the most information about how our documents differ from one another, then use those concepts to gain insight about the “stuff” or “story” of our corpus as a whole.

In this lesson we’ll focus on this second case, where topics are treated as spectra of subject matter. There are a variety of ways of doing this, and not all of them use the vector space model we have learned. For example:

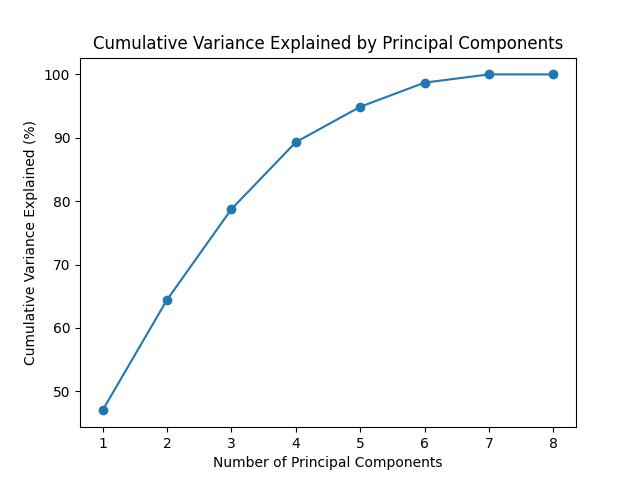

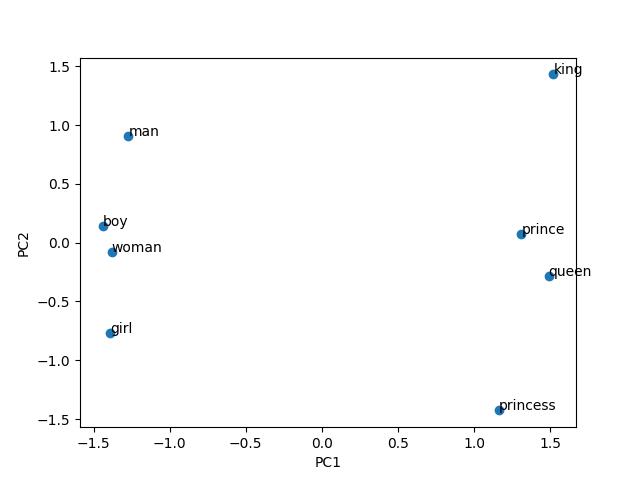

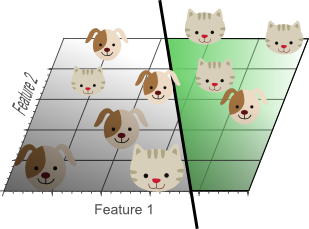

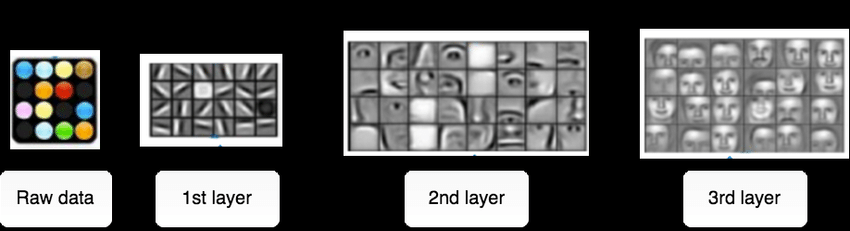

- Vector-space models: