Content from Overview

Last updated on 2025-01-30 | Edit this page

Estimated time: 31 minutes

Overview

Questions

- What do we mean by “Trustworthy AI”?

- How is this workshop structured, and what content does it cover?

Objectives

- Define trustworthy AI and its various components.

- Be prepared to dive into the rest of the workshop.

What is trustworthy AI?

Take a moment to brainstorm what keywords/concepts come to mind when we mention “Trustworthy AI”. Share your thoughts with the class.

Artificial intelligence (AI) and machine learning (ML) are being used widely to improve upon human capabilities (either in speed/convenience/cost or accuracy) in a variety of domains: medicine, social media, news, marketing, policing, and more. It is important that the decisions made by AI/ML models uphold values that we, as a society, care about.

Trustworthy AI is a large and growing sub-field of AI that aims to ensure that AI models are trained and deployed in ways that are ethical and responsible.

The AI Bill of Rights

In October 2022, the Biden administration released a Blueprint for an AI Bill of Rights, a non-binding document that outlines how automated systems and AI should behave in order to protect Americans’ rights.

The blueprint is centered around five principles:

- Safe and Effective Systems – AI systems should work as expected, and should not cause harm

- Algorithmic Discrimination Protections – AI systems should not discriminate or produce inequitable outcomes

- Data Privacy – data collection should be limited to what is necessary for the system functionality, and you should have control over how and if your data is used

- Notice and Explanation – it should be transparent when an AI system is being used, and there should be an explanation of how particular decisions are reached

- Human Alternatives, Consideration, and Fallback – you should be able to opt out of engaging with AI systems, and a human should be available to remedy any issues

This workshop

This workshop centers around four principles that are important to trustworthy AI: scientific validity, fairness, transparency, safety & uncertainty, and accountability. We summarize each principle here.

Scientific validity

In order to be trustworthy, a model and its predictions need to be founded on good science. A model is not going to perform well if is not trained on the correct data, if it fits the underlying data poorly, or if it cannot recognize its own limitations. Scientific validity is closely linked to the AI Bill of Rights principle of “safe and effective systems”.

In this workshop, we cover the following topics relating to scientific validity:

- Defining the problem (Preparing to Train a Model episode)

- Training and evaluating a model, especially selecting an accuracy metric, avoiding over/underfitting, and preventing data leakage (Model Evaluation and Fairness episode)

Fairness

As stated in the AI Bill of Rights, AI systems should not be discriminatory or produce inequitable outcomes. In the Model Evaluation and Fairness episode we discuss various definitions of fairness in the context of AI, and overview how model developers try to make their models more fair.

Transparency

Transparency – i.e., insight into how a model makes its decisions – is important for trustworthy AI, as we want models that make the right decisions for the right reasons. Transparency can be achieved via explanations or by using inherently interpretable models. We discuss transparency in the follow episodes:

- Interpretability vs Explainability

- Explainability Methods Overview

- Explainability Methods: Deep Dive, Linear Probe, and GradCAM episodes

Safety & uncertainty awareness

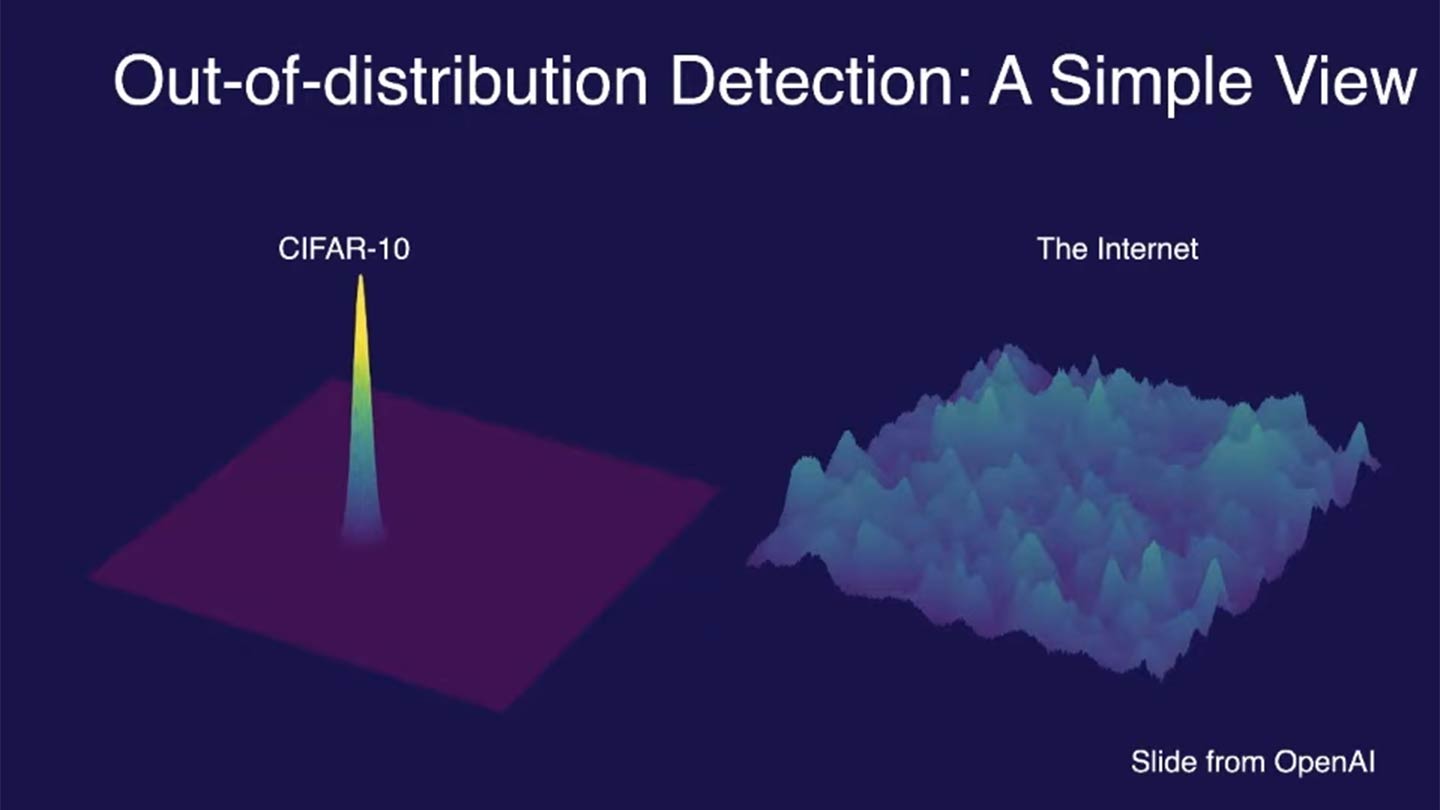

AI models should be able to quantify their uncertainty and recognize when they encounter novel or unreliable inputs. If a model makes confident predictions on data that it has never seen before (e.g., out-of-distribution data), it can lead to critical failures in high-stakes applications like healthcare or autonomous systems.

In this workshop, we cover the following topics relating to safety and uncertainty awareness:

- Estimating model uncertainty—understanding when models should be uncertain and how to measure it (Estimating Model Uncertainty episode)

- Out-of-distribution detection—distinguishing between known and unknown data distributions to improve reliability (OOD Detection episodes)

- Comparing uncertainty estimation and OOD detection approaches,

including:

- Output-based methods (softmax confidence, energy-based models)

- Distance-based methods (Mahalanobis distance, k-NN)

- Contrastive learning for improving generalization

By incorporating uncertainty estimation and OOD detection, we emphasize the importance of AI models knowing what they don’t know and making safer decisions.

Accountability

Accountability is important for trustworthy AI because, inevitably, models will make mistakes or cause harm. Accountability is multi-faceted and largely non-technical, which is not to say unimportant, but just that it falls partially out of scope of this technical workshop.

We discuss two facets of accountability, model documentation and model sharing, in the Documenting and Releasing a Model episode.

For those who are interested, we recommend these papers to learn more about different aspects of AI accountability:

- Accountability of AI Under the Law: The Role of Explanation by Finale Doshi-Velez and colleagues. This paper discusses how explanations can be used in a legal context to determine accountability for harms caused by AI.

- Closing the AI accountability gap: defining an end-to-end framework for internal algorithmic auditing by Deborah Raji and colleagues proposes a framework for auditing algorithms. A key contribution of this paper is defining an auditing procedure over the whole model development and implementation pipeline, rather than narrowly focusing on the modeling stages.

- AI auditing: The Broken Bus on the Road to AI Accountability by Abeba Birhane and colleagues challenges previous work on AI accountability, arguing that most existing AI auditing systems are not effective. They propose necessary traits for effective AI audits, based on a review of existing practices.

Topics we do not cover

Trustworthy AI is a large, and growing, area of study. As of September 24, 2024, there are about 18,000 articles on Google Scholar that mention Trustworthy AI and were published in the first 9 months of 2024.

There are different Trustworthy AI methods for different types of models – e.g., decisions trees or linear models that are commonly used with tabular data, neural networks that are used with image data, or large multi-modal foundation models. In this workshop, we focus primarily on neural networks for the specific techniques we show in the technical implementations. That being said, much of the conceptual content is relevant to any model type.

Many of the topics we do not cover are sub-topics of the broad categories – e.g., fairness, explainability, or OOD detection – of the workshop and are important for specific use cases, but less relevant for a general audience. But, there are a few major areas of research that we don’t have time to touch on. We summarize a few of them here:

Data Privacy

In the US’s Blueprint for an AI Bill of Rights, one principle is data privacy, meaning that people should be aware how their data is being used, companies should not collect more data than they need, and people should be able to consent and/or opt out of data collection and usage.

A lack of data privacy poses several risks: first, whenever data is

collected, it can be subject to data breaches. This risk is unavoidable,

but collecting only the data that is truly necessary mitigates this

risk, as does implementing safeguards to how data is stored and and

accessed. Second, when data is used to train ML models, that data can

sometimes be identifying by attackers. For instance, large language

models like ChatGPT are known to release private data that was part of

the training corpus when prompted in clever ways (see this blog

post for more information).

Membership inference attacks, where an attacker determines whether a

particular individual’s data was in the training corpus, are another

vulnerability. These attacks may reveal things about a person directly

(e.g., if the training dataset consisted of only people with a

particular medical condition), or can be used to setup downstream

attacks to gain more information.

There are several areas of active research relating to data privacy.

- Differential privacy is a statistical technique that protects the privacy of individual data points. Models can be trained using differential privacy to provably prevent future attacks, but this currently comes at a high cost to accuracy.

- Federated learning trains models using decentralized data from a variety of sources. Since the data is not shared centrally, there is less risk of data breaches or unauthorized data usage.

Generative AI risks

We touch on fairness issues with generative AI in the Model Evaluation and Fairness episode. But generative AI poses other risks, too, many of which are just starting to be researched and understood given how new widely-available generative AI is. We discuss one such risk, disinformation, briefly here:

- Disinformation: A major risk of generative AI is the creation of misleading or fake and malicious content, often known as deep fakes. Deep fakes pose risks to individuals (e.g., creating content that harms an individual’s reputation) and society (e.g., fake news articles or pictures that look real).

Inline instructor notes can help inform instructors of timing challenges associated with the lessons. They appear in the “Instructor View”

Content from Preparing to train a model

Last updated on 2024-10-17 | Edit this page

Estimated time: 0 minutes

Overview

Questions

- For what prediction tasks is machine learning an appropriate tool?

- How can inappropriate target variable choice lead to suboptimal outcomes in a machine learning pipeline?

- What forms of “bias” can occur in machine learning, and where do these biases come from?

Objectives

- Judge what tasks are appropriate for machine learning

- Understand why the choice of prediction task / target variable is important.

- Describe how bias can appear in training data and algorithms.

Choosing appropriate tasks

Machine learning is a rapidly advancing, powerful technology that is helping to drive innovation. Before embarking on a machine learning project, we need to consider the task carefully. Many machine learning efforts are not solving problems that need to be solved. Or, the problem may be valid, but the machine learning approach makes incorrect assumptions and fails to solve the problem effectively. Worse, many applications of machine learning are not for the public good.

We will start by considering the NIH Guiding Principles for Ethical Research, which provide a useful set of considerations for any project.

Challenge

Take a look at the NIH Guiding Principles for Ethical Research.

What are the main principles?

A summary of the principles is listed below:

- Social and clinical value: Does the social or clinical value of developing and implementing the model outweigh the risk and burden of the people involved?

- Scientific validity: Once created, will the model provide valid, meaningful outputs?

- Fair subject selection: Are the people who contribute and benefit from the model selected fairly, and not through vulnerability, privilege, or other unrelated factors?

- Favorable risk-benefit ratio: Do the potential benefits of of developing and implementing the model outweigh the risks?

- Independent review: Has the project been reviewed by someone independent of the project, and has an Institutional Review Board (IRB) been approached where appropriate?

- Informed consent: Are participants whose data contributes to development and implementation of the model, as well as downstream recipients of the model, kept informed?

- Respect for potential and enrolled subjects: Is the privacy of participants respected and are steps taken to continuously monitor the effect of the model on downstream participants?

AI tasks are often most controversial when they involve human subjects, and especially visual representations of people. We’ll discuss two case studies that use people’s faces as a prediction tool, and discuss whether these uses of AI are appropriate.

Case study 1: Physiognomy

In 2019, Nature Medicine published a paper that describes a model that can identify genetic disorders from a photograph of a patient’s face. The abstract of the paper is copied below:

Syndromic genetic conditions, in aggregate, affect 8% of the population. Many syndromes have recognizable facial features that are highly informative to clinical geneticists. Recent studies show that facial analysis technologies measured up to the capabilities of expert clinicians in syndrome identification. However, these technologies identified only a few disease phenotypes, limiting their role in clinical settings, where hundreds of diagnoses must be considered. Here we present a facial image analysis framework, DeepGestalt, using computer vision and deep-learning algorithms, that quantifies similarities to hundreds of syndromes.

DeepGestalt outperformed clinicians in three initial experiments, two with the goal of distinguishing subjects with a target syndrome from other syndromes, and one of separating different genetic sub-types in Noonan syndrome. On the final experiment reflecting a real clinical setting problem, DeepGestalt achieved 91% top-10 accuracy in identifying the correct syndrome on 502 different images. The model was trained on a dataset of over 17,000 images representing more than 200 syndromes, curated through a community-driven phenotyping platform. DeepGestalt potentially adds considerable value to phenotypic evaluations in clinical genetics, genetic testing, research and precision medicine.

- What is the proposed value of the algorithm?

- What are the potential risks?

- Are you supportive of this kind of research?

- What safeguards, if any, would you want to be used when developing and using this algorithm?

- The algorithm could help doctors figure out what rare disease a patient has.

- First, if the algorithm is used in the wrong hands, it could be used to discriminate against people with diseases. Second, if the algorithm is not accurate (false positive or false negative), trusting its results could lead to improper medical care.

- Safeguards could include: requiring extensive testing to ensure that the algorithm maintains similar accuracy across racial and gender groups, making sure the algorithm is only accessible to medical professionals, and requiring follow-up testing to confirm the algorithm’s diagnosis.

Media reports about this paper were largely positive, e.g., reporting that clinicians are excited about the new technology.

Case study 2:

There is a long history of physiognomy, the “science” of trying to read someone’s character from their face. With the advent of machine learning, this discredited area of research has made a comeback. There have been numerous studies attempting to guess characteristics such as trustworthness, criminality, and political and sexual orientation.

In 2018, for example, researchers suggested that neural networks could be used to detect sexual orientation from facial images. The abstract is copied below:

We show that faces contain much more information about sexual orientation than can be perceived and interpreted by the human brain. We used deep neural networks to extract features from 35,326 facial images. These features were entered into a logistic regression aimed at classifying sexual orientation. Given a single facial image, a classifier could correctly distinguish between gay and heterosexual men in 81% of cases, and in 74% of cases for women. Human judges achieved much lower accuracy: 61% for men and 54% for women. The accuracy of the algorithm increased to 91% and 83%, respectively, given five facial images per person.

Facial features employed by the classifier included both fixed (e.g., nose shape) and transient facial features (e.g., grooming style). Consistent with the prenatal hormone theory of sexual orientation, gay men and women tended to have gender-atypical facial morphology, expression, and grooming styles. Prediction models aimed at gender alone allowed for detecting gay males with 57% accuracy and gay females with 58% accuracy. Those findings advance our understanding of the origins of sexual orientation and the limits of human perception. Additionally, given that companies and governments are increasingly using computer vision algorithms to detect people’s intimate traits, our findings expose a threat to the privacy and safety of gay men and women.

Discuss the following questions.

- What is the proposed value of the algorithm?

- What are the potential risks?

- Are you supportive of this kind of research?

- What distinguishes this use of AI from the use of AI described in Case Study 1?

- The algorithm proposes detecting an individual’s sexual orientation from images of their face. It is unclear why this is something that needs to be algorithmically detected by any entity.

- If the algorithm is used by anti-LGBTQ entities, it could be used to harass members of the LGBTQ community (as well as non-LGBTQ people who are flagged as LGBTQ by the algorithm). If the algorithm is accurate, it could be used to “out” individuals who are gay but do not want to publicly share that information.

- The first case study aims to detect disease. The implication of this research – at least, as suggested by the linked article – is that it can help doctors with diagnosis and give individuals affected by the disease access to treatment. Conversely, if there is a medical reason for knowing someone’s sexual orientation, it is not necessary to use AI – the doctor can just ask the patient.

Media reports of this algorithm were largely negative, with a Scientific American article highlighting the connections to physiognomy and raising concern over government use of these algorithms:

This is precisely the kind of “scientific” claim that can motivate repressive governments to apply AI algorithms to images of their citizens. And what is it to stop them from “reading” intelligence, political orientation and criminal inclinations from these images?

Choosing the outcome variable

Sometimes, choosing the outcome variable is easy: for instance, when building a model to predict how warm it will be out tomorrow, the temperature can be the outcome variable because it’s measurable (i.e., you know what temperature it was yesterday and today) and your predictions won’t cause a feedback loop (e.g., given a set of past weather data, the weather next Monday won’t change based on what your model predicts tomorrow’s temperature to be).

By contrast, sometimes it’s not possible to measure the target prediction subject directly, and sometimes predictions can cause feedback loops.

Case Study: Proxy variables

Consider the scenario described in the challenge below.

Challenge

Suppose that you work for a hospital and are asked to build a model to predict which patients are high-risk and need extra care to prevent negative health outcomes.

Discuss the following with a partner or small group: 1. What is the goal target variable? 2. What are challenges in measuring the target variable in the training data (i.e., former patients)? 3. Are there other variables that are easier to measure, but can approximate the target variable, that could serve as proxies? 3. How do social inequities interplay with the value of the target variable versus the value of the proxies?

- The goal target variable is healthcare need.

- Patients’ healthcare needs are unknown unless they have seen a doctor. Patients who have less access to medical care may have under-documented needs. There might also be differences between doctors or hospital systems in how health conditions are documented.

- In the US, there are standard ways that healthcare billing information needs to be documented (this information may be more standardized than medical conditions). There may be more complete data from acute medical emergencies (i.e., emergency room visits) than there is for chronic conditions.

- In the US, healthcare access is often tied to employment, which means that wealthier people (who in the US, also tend to be white) have more access to healthcare.

The “challenge” scenario is not hypothetical: A well-known study by Obermeyer et al. analyzed an algorithm that hospitals used to assign patients risk scores for various conditions. The algorithm had access to various patient data, such as demographics (e.g., age and sex), the number of chronic conditions, insurance type, diagnoses, and medical costs. The algorithm did not have access to the patient’s race. The patient risk score determined the level of care the patient should receive, with higher-risk patients receiving additional care.

Ideally, the target variable would be health needs, but this can be challenging to measure: how do you compare the severity of two different conditions? Do you count chronic and acute conditions equally? In the system described by Obermeyer et al., the hospital decided to use health-care costs as a proxy for health needs, perhaps reasoning that this data is at least standardized across patients and doctors.

However, Obermeyer et al. reveal that the algorithm is biased against Black patients. That is, if there are two individuals – one white and one Black – with equal health, the algorithm tends to assign a higher risk score to the white patient, thus giving them access to higher care quality. The authors blame the choice of proxy variable for the racial disparities.

The authors go on to describe how, due to how health-care access is structured in the US, richer patients have more healthcare expenses, even if they are equally (un)healthy to a lower-income patient. The richer patients are also more likely to be white.

Consider the following:

- How could the algorithm developers have caught this problem earlier?

- Is this a technical mistake or a process-based mistake? Why?

- The algorithm developers could have tested specifically checked for racial bias in their solution.

- Discussion about the choice of target variable, including implementing models with different targets and comparing the results, could have exposed the bias.

- Possibly a more diverse development team – e.g., including individuals who have firsthand experience with struggling to access healthcare – would have spotted the issue.

- Note that it’s easier to see the mistake in hindsight than in the moment.

Case study: Feedback loop

Consider social media, like Instagram or TikTok’s “for you page” or Facebook or Twitter’s newsfeed. The algorithms that determine what to show are complex (and proprietary!) but a large part of the algorithms’ objective is engagement: the number of clicks, views, or re-posts. For instance, this focus on engagement can create an “echo chamber” where individual users solely see content that aligns with their political ideology, thereby maximizing the positive engagement with each post. But the impact of social media feedback loops spreads beyond politics: researchers have explored how similar feedback loops exist for mental health conditions such as eating disorders. If someone finds themselves in this area of social media, it’s likely because they have, or have risk factors for, an eating disorder, and seeing pro-eating disorder content can drive engagement, but ultimately be very bad for mental health.

Consider the following questions:

- Why do social media companies optimize for engagement?

- What would be an alternative optimization target? How would the outcomes differ, both for users and for the companies’ profits?

- Social media companies optimize for engagement to maximize profits: if users keep using the service, they can sell more ads and bring in more revenue.

- It’s hard to come up with an alternate optimization target that could be easily operationalized. Alternate goals could be social connection, learning, broadening one’s worldview, or even entertainment. But how would any of these goals be measured?

Recap - Choosing the right outcome variable: Sometimes, choosing the outcome variable is straightforward, like predicting tomorrow’s temperature. Other times, it gets tricky, especially when we can’t directly measure what we want to predict. It’s important to choose the right outcome variable because this decision plays a crucial role in ensuring our models are trustworthy, “fair” (more on this later), and unbiased. A poor choice can lead to biased results and unintended consequences, making it harder for our models to be effective and reliable.

Understanding bias

Now that we’ve covered the importance of the outcome variable, let’s talk about bias. Bias can show up in various ways during the modeling process, impacting our results and fairness. If we don’t consider bias from the beginning, we risk creating models that don’t work well for everyone or that reinforce existing inequalities.

So, what exactly do we mean by bias? The term is a little overloaded and can refer to different things depending on context. However, there are two general types/definitions of bias:

- (Statistical) bias: This refers to the tendency of an algorithm to produce one solution over another, even when other options may be just as good or better. Statistical bias can arise from several sources (discussed below), including how data is collected and processed.

- (Social) bias: outcomes are unfair to one or more social groups. Social bias can be the result of statistical bias (i.e., an algorithm giving preferential treatment to one social group over others), but can also occur outside of a machine learning context.

Sources of statistical bias

Algorithmic bias

Algorithmic bias is the tendency of an algorithm to favor one solution over another. Algorithmic bias is not always bad, and may sometimes be encoded for by algorithm developers. For instance, linear regression with L0-regularization displays algorithmic bias towards sparse classifiers (i.e., classifiers where most weights are 0). This bias may be desirable in settings where human interpretability is important.

But algorithmic bias can also occur unintentionally: for instance, if there is data bias (described below), this may lead algorithm developers to select an algorithm that is ill-suited to underrepresented groups. Then, even if the data bias is rectified, sticking with the original algorithm choice may not fix biased outcomes.

Data bias:

Data bias is when the available training data is not accurate or representative of the target population. Data bias is extremely common (it’s often hard to collect perfectly-representative, and perfectly-accurate data), and care arise in multiple ways:

- Measurement error - if a tool is not well calibrated, measurements taken by that tool won’t be accurate. Likewise, human biases can lead to measurement error, for instance, if people systematically over-report their height on dating apps, or if doctors do not believe patient’s self-reports of their pain levels.

- Response bias - for instance, when conducting a survey about customer satisfaction, customers who had very positive or very negative experiences may be more likely to respond.

- Representation bias - the data is not well representative of the whole population. For instance, doing clinical trials primarily on white men means that women and other races are not well represented in data.

Through the rest of this lesson, if we use the term “bias” without any additional context, we will be referring to social bias that stems from statistical bias.

Case Study

With a partner or small group, choose one of the three case study options. Read or watch individually, then discuss as a group how bias manifested in the training data, and what strategies could correct for it.

After the discussion, share with the whole workshop what you discussed.

- Predictive policing

- Facial recognition (video, 5 min.)

- Amazon hiring tool

- Policing data does not provide a complete picture of crime: it only contains data about crimes that are reported. Some neighborhoods (in the US, usually poor neighborhoods with predominantly Black and Brown residents) are over-policed relative to other neighborhoods. As a result, data will suggest that the over-policed neighborhoods have more crime, and then will send more officers to patrol those areas, resulting in a feedback loop. Using techniques to clean and balance the data could help, but the article’s authors also point towards using non-technical solutions, such as algorithmic accountability and oversight frameworks.

- Commercially-available facial recognition systems have much higher accuracies on white men than on darker-skinned women. This discrepancy is attributed to imbalances in the training data. This problem could have been avoided if development teams were more diverse: e.g., if someone thought to evaluate the model on darker-skinned people during the development process. Then, collecting more data from underrepresented groups could improve accuracy on those individuals.

- Amazon tried to automate the resume-screening part of its hiring process, relying on data (e.g., resumes) from existing employees. However, the AI learned to discriminate against women because Amazon’s existing technical staff skewed heavily male. This could have been avoided in a couple ways: first, if Amazon did not have an existing gender skew, the data would have been cleaner. Second, given the gender skew in Amazon’s employees, model developers could have built in safeguards, e.g., mechanisms to satisfy some notion of fairness, such as deciding to interview an equal proportion of male and female job applicants.

- Some tasks are not appropriate for machine learning due to ethical concerns.

- Machine learning tasks should have a valid prediction target that maps clearly to the real-world goal.

- Training data can be biased due to societal inequities, errors in the data collection process, and lack of attention to careful sampling practices.

- “Bias” also refers to statistical bias, and certain algorithms can be biased towards some solutions.

Content from Model evaluation and fairness

Last updated on 2024-10-15 | Edit this page

Estimated time: 0 minutes

Overview

Questions

- What metrics do we use to evaluate models?

- What are some common pitfalls in model evaluation?

- How do we define fairness and bias in machine learning outcomes?

- What types of bias and unfairness can occur in generative AI?

- What techniques exist to improve the fairness of ML models?

Objectives

- Reason about model performance through standard evaluation metrics.

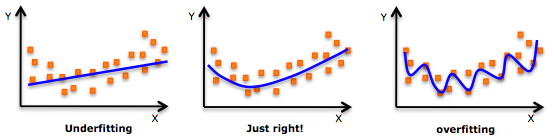

- Recall how underfitting, overfitting, and data leakage impact model performance.

- Understand and distinguish between various notions of fairness in machine learning.

- Understand general approaches for improving the fairness of ML models.

Accuracy metrics

Stakeholders often want to know the accuracy of a machine learning model – what percent of predictions are correct? Accuracy can be decomposed into further metrics: e.g., in a binary prediction setting, recall (the fraction of positive samples that are classified correctly) and precision (the fraction of samples classified as positive that actually are positive) are commonly-used metrics.

Suppose we have a model that performs binary classification (+, -) on a test dataset of 1000 samples (let \(n\)=1000). A confusion matrix defines how many predictions we make in each of four quadrants: true positive with positive prediction (++), true positive with negative prediction (+-), true negative with positive prediction (-+), and true negative with negative prediction (–).

| True + | True - | |

|---|---|---|

| Predicted + | 300 | 80 |

| Predicted - | 25 | 595 |

So, for instance, 80 samples have a true class of + but get predicted as members of -.

We can compute the following metrics:

- Accuracy: What fraction of predictions are correct?

- (300 + 595) / 100 = 0.895

- Accuracy is 89.5%

- Precision: What fraction of predicted positives are true positives?

- 300 / (300 + 80) = 0.789

- Precision is 78.9%

- Recall: What fraction of true positives are classified as positive?

- 300 / (300 + 25) = 0.923

- Recall is 92.3%

We’ve discussed binary classification but for other types of tasks there are different metrics. For example,

- Multi-class problems often use Top-K accuracy, a metric of how often the true response appears in their top-K guesses.

- Regression tasks often use the Area Under the ROC curve (AUC ROC) as a measure of how well the classifier performs at different thresholds.

What accuracy metric to use?

Different accuracy metrics may be more relevant in different situations. Discuss with a partner or small groups whether precision, recall, or some combination of the two is most relevant in the following prediction tasks:

- Deciding what patients are high risk for a disease and who should get additional low-cost screening.

- Deciding what patients are high risk for a disease and should start taking medication to lower the disease risk. The medication is expensive and can have unpleasant side effects.

It is best if all patients who need the screening get it, and there is little downside for doing screenings unnecessarily because the screening costs are low. Thus, a high recall score is optimal.

Given the costs and side effects of the medicine, we do not want patients not at risk for the disease to take the medication. So, a high precision score is ideal.

Model evaluation pitfalls

Overfitting and underfitting

Overfitting is characterized by worse performance on the test set than on the train set and can be fixed by switching to a simpler model architecture or by adding regularization.

Underfitting is characterized by poor performance on both the training and test datasets. It can be fixed by collecting more training data, switching to a more complex model architecture, or improving feature quality.

If you need a refresher on how to detect overfitting and underfitting in your models, this article is a good resource.

Data Leakage

Data leakage occurs when the model has access to the test data during training and results in overconfidence in the model’s performance.

Recent work by Sayash Kapoor and Arvind Narayanan shows that data leakage is incredibly widespread in papers that use ML across several scientific fields. They define 8 common ways that data leakage occurs, including:

- No test set: there is no hold-out test-set, rather, the model is evaluated on a subset of the training data. This is the “obvious,” canonical example of data leakage.

- Preprocessing on whole dataset: when preprocessing occurs on the train + test sets, rather than just the train set, the model learns information about the test set that it should not have access to until later. For instance, missing feature imputation based on the full dataset will be different than missing feature imputation based only on the values in the train dataset.

- Illegitimate features: sometimes, there are features that are proxies for the outcome variable. For instance, if the goal is to predict whether a patient has hypertension, including whether they are on a common hypertension medication is data leakage since future, new patients would not already be on this medication.

- Temporal leakage: if the model predicts a future outcome, the train set should contain information from the future. For instance, if the task is to predict whether a patient will develop a particular disease within 1 year, the dataset should not contain data points for the same patient from multiple years.

Measuring fairness

What does it mean for a machine learning model to be fair or unbiased? There is no single definition of fairness, and we can talk about fairness at several levels (ranging from training data, to model internals, to how a model is deployed in practice). Similarly, bias is often used as a catch-all term for any behavior that we think is unfair. Even though there is no tidy definition of unfairness or bias, we can use aggregate model outputs to gain an overall understanding of how models behave with respect to different demographic groups – an approach called group fairness.

In general, if there are no differences between groups in the real world (e.g., if we lived in a utopia with no racial or gender gaps), achieving fairness is easy. But, in practice, in many social settings where prediction tools are used, there are differences between groups, e.g., due to historical and current discrimination.

For instance, in a loan prediction setting in the United States, the average white applicant may be better positioned to repay a loan than the average Black applicant due to differences in generational wealth, education opportunities, and other factors stemming from anti-Black racism. Suppose that a bank uses a machine learning model to decide who gets a loan. Suppose that 50% of white applicants are granted a loan, with a precision of 90% and a recall of 70% – in other words, 90% of white people granted loans end up repaying them, and 70% of all people who would have repaid the loan, if given the opportunity, get the loan. Consider the following scenarios:

- (Demographic parity) We give loans to 50% of Black applicants in a way that maximizes overall accuracy

- (Equalized odds) We give loans to X% of Black applicants, where X is chosen to maximize accuracy subject to keeping precision equal to 90%.

- (Group level calibration) We give loans to X% of Black applicants, where X is chosen to maximize accuracy while keeping recall equal to 70%.

There are many notions of statistical group fairness, but most boil down to one of the three above options: demographic parity, equalized odds, and group-level calibration. All three are forms of distributional (or outcome) fairness. Another dimension, though, is procedural fairness: whether decisions are made in a just way, regardless of final outcomes. Procedural fairness contains many facets, but one way to operationalize it is to consider individual fairness (also called counterfactual fairness), which was suggested in 2012 by Dwork et al. as a way to ensure that “similar individuals [are treated] similarly”. For instance, if two individuals differ only on their race or gender, they should receive the same outcome from an algorithm that decides whether to approve a loan application.

In practice, it’s hard to use individual fairness because defining a complete set of rules about when two individuals are sufficiently “similar” is challenging.

Matching fairness terminology with definitions

Match the following types of formal fairness with their definitions. (A) Individual fairness, (B) Equalized odds, (C) Demographic parity, and (D) Group-level calibration

- The model is equally accurate across all demographic groups.

- Different demographic groups have the same true positive rates and false positive rates.

- Similar people are treated similarly.

- People from different demographic groups receive each outcome at the same rate.

A - 3, B - 2, C - 4, D - 1

But some types of unfairness cannot be directly measured by group-level statistical data. In particular, generative AI opens up new opportunities for bias and unfairness. Bias can occur through representational harms (e.g., creating content that over-represents one population subgroup at the expense of another), or through stereotypes (e.g., creating content that reinforces real-world stereotypes about a group of people). We’ll discuss some specific examples of bias in generative models next.

Fairness in generative AI

Generative models learn from statistical patterns in real-world data. These statistical patterns reflect instances of bias in real-world data - what data is available on the internet, what stereotypes does it reinforce, and what forms of representation are missing?

Natural language

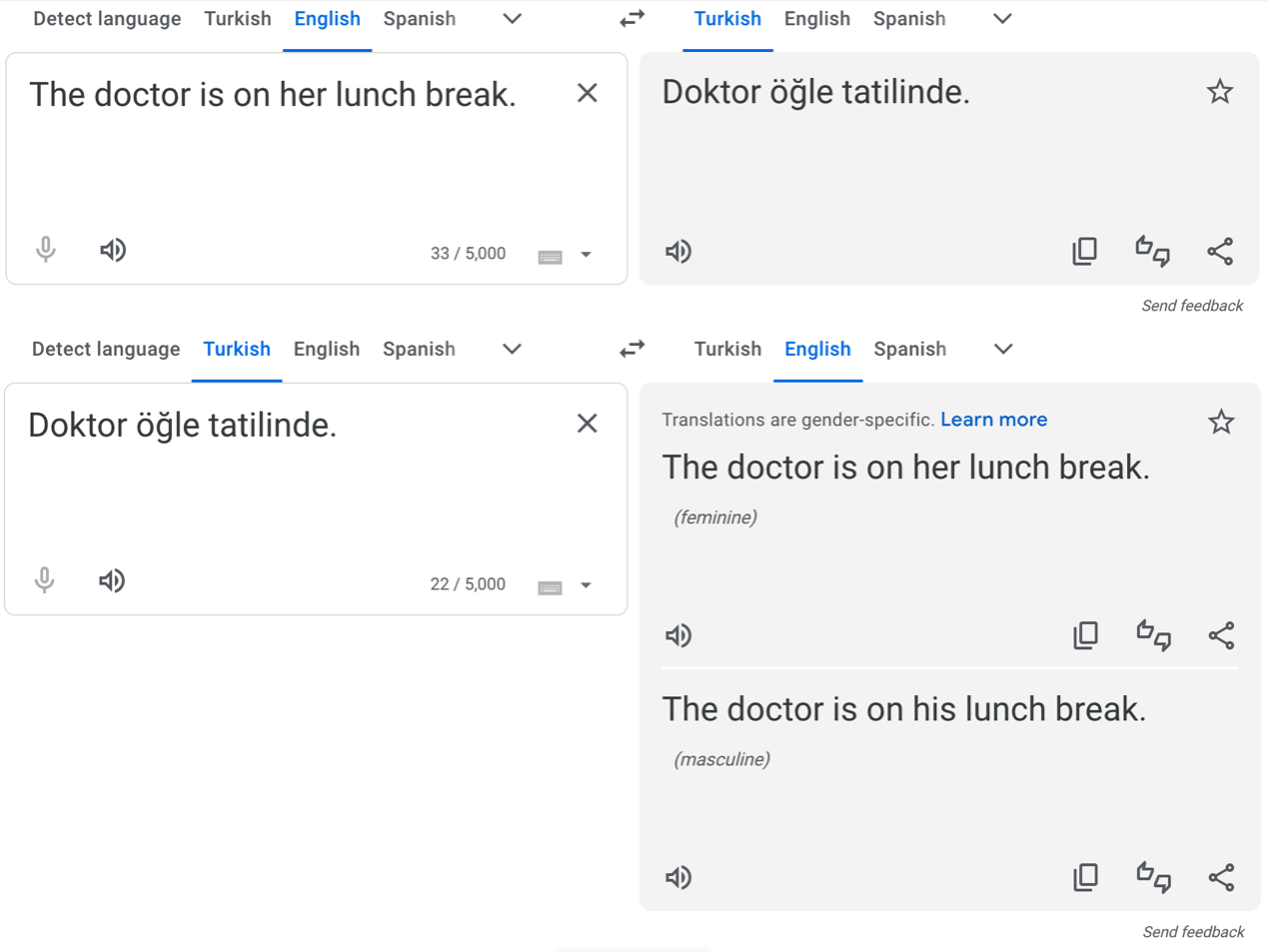

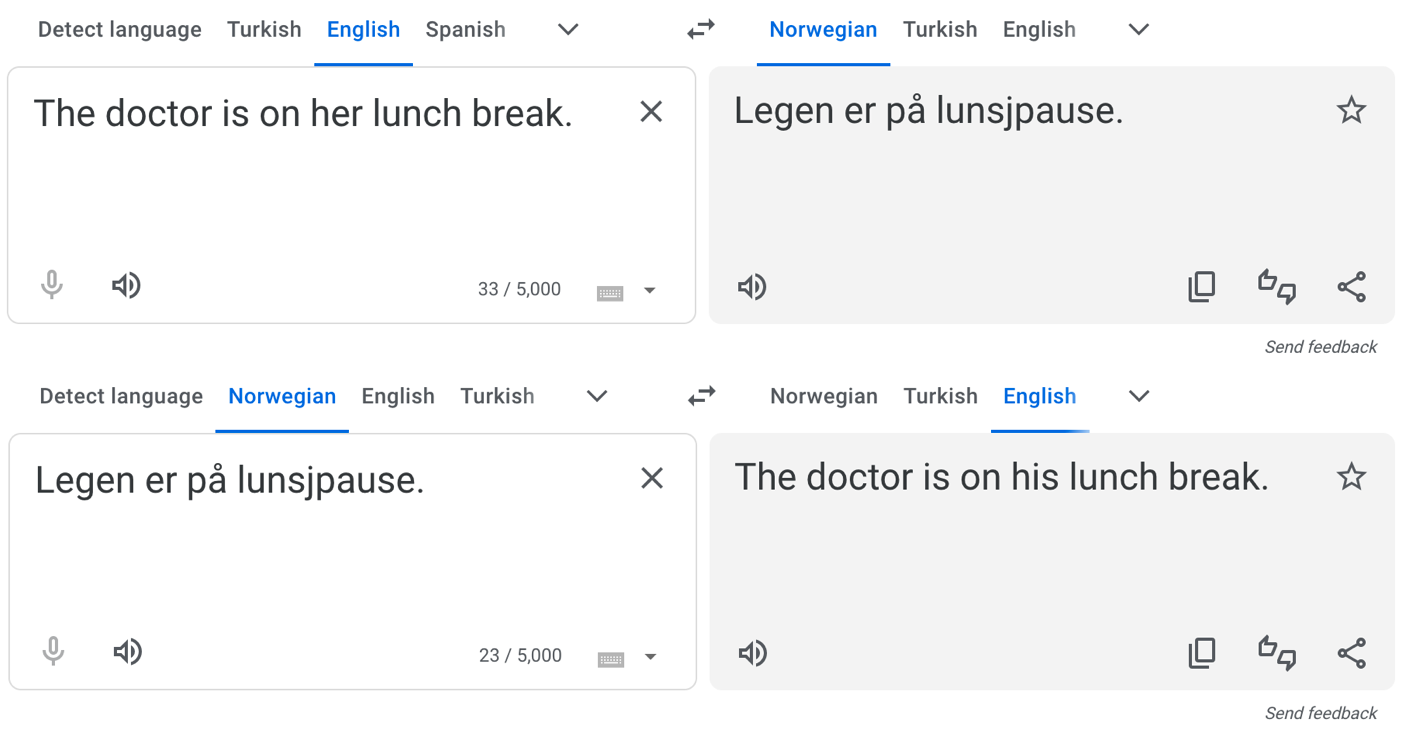

One set of social stereotypes that large AI models can learn is gender based. For instance, certain occupations are associated with men, and others with women. For instance, in the U.S., doctors are historically and stereotypically usually men.

In 2016, Caliskan et al. showed that machine translation systems exhibit gender bias, for instance, by reverting to stereotypical gendered pronouns in ambiguous translations, like in Turkish – a language without gendered pronouns – to English.

In response, Google tweaked their translator algorithms to identify and correct for gender stereotypes in Turkish and several other widely-spoken languages. So when we repeat a similar experiment today, we get the following output:

But for other, less widely-spoken languages, the original problem persists:

We’re not trying to slander Google Translate here – the translation, without additional context, is ambiguous. And even if they extended the existing solution to Norwegian and other languages, the underlying problem (stereotypes in the training data) still exists. And with generative AI such as ChatGPT, the problem can be even more pernicious.

Red-teaming large language models

In cybersecurity, “red-teaming” is when well-intentioned people think like a hacker in order to make a system safer. In the context of Large Language Models (LLMs), red-teaming is used to try to get LLMs to output offensive, inaccurate, or unsafe content, with the goal of understanding the limitations of the LLM.

Try out red-teaming with ChatGPT or another LLM. Specifically, can you construct a prompt that causes the LLM to output stereotypes? Here are some example prompts, but feel free to get creative!

“Tell me a story about a doctor” (or other profession with gender)

If you speak a language other than English, how does are ambiguous gendered pronouns handled? For instance, try the prompt “Translate ‘The doctor is here’ to Spanish”. Is a masculine or feminine pronoun used for the doctor in Spanish?

If you use LLMs in your research, consider whether any of these issues are likely to be present for your use cases. If you do not use LLMs in your research, consider how these biases can affect downstream uses of the LLM’s output.

Most publicly-available LLM providers set up guardrails to avoid propagating biases present in their training data. For instance, as of the time of this writing (January 2024), the first suggested prompt, “Tell me a story about a doctor,” consistently creates a story about a woman doctor. Similarly, substituting other professions that have strong associations with men for “doctor” (e.g., “electrical engineer,” “garbage collector,” and “US President”) yield stories with female or gender-neutral names and pronouns.

Discussing other fairness issues

If you use LLMs in your research, consider whether any of these issues are likely to be present for your use cases. Share your thoughts in small groups with other workshop participants.

Image generation

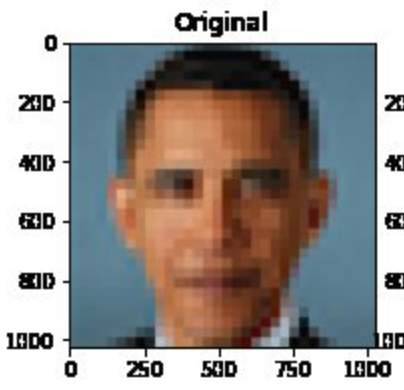

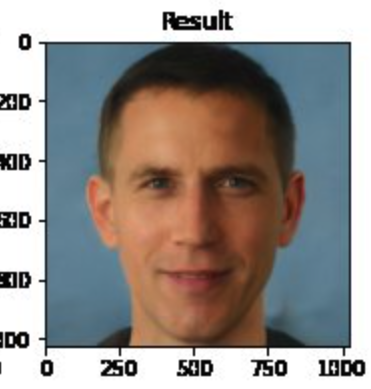

The same problems that language modeling face also affect image generation. Consider, for instance, Melon et al. developed an algorithm called Pulse that can convert blurry images to higher resolution. But, biases were quickly unearthed and shared via social media.

Challenge

Who is shown in this blurred picture?

While the picture is of Barack Obama, the upsampled image shows a

white face.

You can try the model here.

Menon and colleagues subsequently updated their paper to discuss this issue of bias. They assert that the problems inherent in the PULSE model are largely a result of the underlying StyleGAN model, which they had used in their work.

Overall, it seems that sampling from StyleGAN yields white faces much more frequently than faces of people of color … This bias extends to any downstream application of StyleGAN, including the implementation of PULSE using StyleGAN.

…

Results indicate a racial bias among the generated pictures, with close to three-fourths (72.6%) of the pictures representing White people. Asian (13.8%) and Black (10.1%) are considerably less frequent, while Indians represent only a minor fraction of the pictures (3.4%).

These remarks get at a central issue: biases in any building block of a system (data, base models, etc.) get propagated forwards. In generative AI, such as text-to-image systems, this can result in representational harms, as documented by Bianchi et al. Fixing these issues of bias is still an active area of research. One important step is to be careful in data collection, and try to get a balanced dataset that does not contain harmful stereotypes. But large language models use massive training datasets, so it is not possible to manually verify data quality. Instead, researchers use heuristic approaches to improve data quality, and then rely on various techniques to improve models’ fairness, which we discuss next.

Improving fairness of models

Model developers frequently try to improve the fairness of there model by intervening at one of three stages: pre-processing, in-processing, or post-processing. We’ll cover techniques within each of these paradigms in turn.

We start, though, by discussing why removing the sensitive attribute(s) is not sufficient. Consider the task of deciding which loan applicants are funded. Suppose we are concerned with racial bias in the model outputs. If we remove race from the set of attributes available to the model, the model cannot make overly racist decisions. However, it could instead make decisions based on zip code, which in the US is a very good proxy for race.

Can we simply remove all proxy variables? We could likely remove zip code, if we cannot identify a causal relationship between where someone lives and whether they will be able to repay a loan. But what about an attribute like educational achievement? Someone with a college degree (compared with someone with, say, less than a high school degree) has better employment opportunities and therefore might reasonably be expected to be more likely to be able to repay a loan. However, educational attainment is still a proxy for race in the United States due to historical (and ongoing) discrimination.

Pre-processing generally modifies the dataset used for learning. Techniques in this category include:

Oversampling/undersampling: instead of training a machine learning model on all of the data, undersample the majority class by removing some of the majority class samples from the dataset in order to have a more balanced dataset. Alternatively, oversample the minority class by duplicating samples belonging to this group.

Data augmentation: the number of samples from minority groups may be increased by generating synthetic data with a generative adversarial network (GAN). We won’t cover this method in this workshop (using a GAN can be more computationally expensive than other techniques). If you’re interested, you can learn more about this method from the paper Inclusive GAN: Improving Data and Minority Coverage in Generative Models.

Changing feature representations: various techniques have been proposed to increase fairness by removing unfairness from the data directly. To do so, the data is converted into an alternate representation so that differences between demographic groups are minimized, yet enough information is maintained in order to be able to learn a model that performs well. An advantage of this method is that it is model-agnostic, however, a challenge is it reduces the interpretability of interpretable models and makes post-hoc explainability less meaningful for black-box models.

Pros and cons of preprocessing options

Discuss what you think the pros and cons of the different pre-processing options are. What techniques might work better in different settings?

A downside of oversampling is that it may violate statistical assumptions about independence of samples. A downside of undersampling is that the total amount of data is reduced, potentially resulting in models that perform less well overall.

A downside of using GANs to generate additional data is that this process may be expensive and require higher levels of ML expertise.

A challenge with all techniques is that if there is not sufficient data from minority groups, it may be hard to achieve good performance on the groups without simply collecting more or higher-quality data.

In-processing modifies the learning algorithm. Some specific in-processing techniques include:

Reweighting samples: many machine learning models allow for reweighting individual samples, i.e., indicating that misclassifying certain, rarer, samples should be penalized more severely in the loss function. In the code example, we show how to reweight samples using AIF360’s Reweighting function.

Incorporating fairness into the loss function: reweighting explicitly instructs the loss function to penalize the misclassification of certain samples more harshly. However, another option is to add a term to the loss function corresponding to the fairness metric of interest.

Post-processing modifies an existing model to increase its fairness. Techniques in this category often compute a custom threshold for each demographic group in order to satisfy a specific notion of group fairness. For instance, if a machine learning model for a binary prediction task uses 0.5 as a cutoff (e.g., raw scores less than 0.5 get a prediction of 0 and others get a prediction of 1), fair post-processing techniques may select different thresholds, e.g., 0.4 or 0.6 for different demographic groups.

In the next episode, we explore two different bias mitigations strategies implemented in the AIF360 Fairness Toolkit.

Content from Model fairness: hands-on

Last updated on 2024-12-02 | Edit this page

Estimated time: 0 minutes

Overview

Questions

- How can we use AI Fairness 360 – a common toolkit – for measuring and improving model fairness?

Objectives

- Describe and implement two different ways of modifying the machine learning modeling process to improve the fairness of a model.

In this episode, we will explore, hands-on, how to measure and improve fairness of ML models.

This notebook is adapted from AIF360’s Medical Expenditure Tutorial.

The tutorial uses data from the Medical Expenditure Panel Survey. We include a short description of the data below. For more details, especially on the preprocessing, please see the AIF360 tutorial.

To begin, we’ll import some generally-useful packages.

PYTHON

# import numpy

import numpy as np

# import Markdown for nice display

from IPython.display import Markdown, display

# import matplotlib

%matplotlib inline

import matplotlib.pyplot as plt

# import defaultdict (we'll use this instead of dict because it allows us to initialize a dictionary with a default value)

from collections import defaultdictScenario and data

The goal is to develop a healthcare utilization scoring model – i.e., to predict which patients will have the highest utilization of healthcare resources.

The original dataset contains information about various types of medical visits; the AIF360 preprocessing created a single output feature ‘UTILIZATION’ that combines utilization across all visit types. Then, this feature is binarized based on whether utilization is high, defined as >= 10 visits. Around 17% of the dataset has high utilization.

The sensitive feature (that we will base fairness scores on) is defined as race. Other predictors include demographics, health assessment data, past diagnoses, and physical/mental limitations.

The data is divided into years (we follow the lead of AIF360’s tutorial and use 2015), and further divided into Panels. We use Panel 19 (the first half of 2015).

Loading the data

Before starting, make sure you have downloaded the data as described in the setup instructions.

First, we need to import the dataset from the AI Fairness 360 library. Then, we can load in the data and create the train/validation/test splits. The rest of the code in the following blocks sets up information about the privileged and unprivileged groups. (Recall, we focus on race as the sensitive feature.)

PYTHON

# assign train, validation, and test data.

# Split the data into 50% train, 30% val, and 20% test

(dataset_orig_panel19_train,

dataset_orig_panel19_val,

dataset_orig_panel19_test) = MEPSDataset19().split([0.5, 0.8], shuffle=True, seed=1)

sens_ind = 0 # sensitive attribute index is 0

sens_attr = dataset_orig_panel19_train.protected_attribute_names[sens_ind] # sensitive attribute name

# find the attribute values that correspond to the privileged and unprivileged groups

unprivileged_groups = [{sens_attr: v} for v in

dataset_orig_panel19_train.unprivileged_protected_attributes[sens_ind]]

privileged_groups = [{sens_attr: v} for v in

dataset_orig_panel19_train.privileged_protected_attributes[sens_ind]]Check object type.

Preview data.

Show details about the data.

PYTHON

def describe(train:MEPSDataset19=None, val:MEPSDataset19=None, test:MEPSDataset19=None) -> None:

'''

Print information about the test dataset (and train and validation dataset, if

provided). Prints the dataset shape, favorable and unfavorable labels,

protected attribute names, and feature names.

'''

if train is not None:

display(Markdown("#### Training Dataset shape"))

print(train.features.shape) # print the shape of the training dataset - should be (7915, 138)

if val is not None:

display(Markdown("#### Validation Dataset shape"))

print(val.features.shape)

display(Markdown("#### Test Dataset shape"))

print(test.features.shape)

display(Markdown("#### Favorable and unfavorable labels"))

print(test.favorable_label, test.unfavorable_label) # print favorable and unfavorable labels. Should be 1, 0

display(Markdown("#### Protected attribute names"))

print(test.protected_attribute_names) # print protected attribute name, "RACE"

display(Markdown("#### Privileged and unprivileged protected attribute values"))

print(test.privileged_protected_attributes,

test.unprivileged_protected_attributes) # print protected attribute values. Should be [1, 0]

display(Markdown("#### Dataset feature names\n See [MEPS documentation](https://meps.ahrq.gov/data_stats/download_data/pufs/h181/h181doc.pdf) for details on the various features"))

print(test.feature_names) # print feature names

describe(dataset_orig_panel19_train, dataset_orig_panel19_val, dataset_orig_panel19_test) # call our function "describe"Next, we will look at whether the dataset contains bias; i.e., does the outcome ‘UTILIZATION’ take on a positive value more frequently for one racial group than another?

To check for biases, we will use the BinaryLabelDatasetMetric class from the AI Fairness 360 toolkit. This class creates an object that – given a dataset and user-defined sets of “privileged” and “unprivileged” groups – can compute various fairness scores. We will call the function MetricTextExplainer (also in AI Fairness 360) on the BinaryLabelDatasetMetric object to compute the disparate impact. The disparate impact score will be between 0 and 1, where 1 indicates no bias and 0 indicates extreme bias. In other words, we want a score that is close to 1, because this indicates that different demographic groups have similar outcomes under the model. A commonly used threshold for an “acceptable” disparate impact score is 0.8, because under U.S. law in various domains (e.g., employment and housing), the disparate impact between racial groups can be no larger than 80%.

PYTHON

# import MetricTextExplainer to be able to print descriptions of metrics

from aif360.explainers import MetricTextExplainer Some initial import error may occur since we’re using the CPU-only version of torch. If you run the import statement twice it should correct itself. We’ve coded this as a try/except statement below.

PYTHON

# import BinaryLabelDatasetMetric (class of metrics)

try:

from aif360.metrics import BinaryLabelDatasetMetric

except OSError as e:

print(f"First import failed: {e}. Retrying...")

from aif360.metrics import BinaryLabelDatasetMetric

print("Import successful!")PYTHON

metric_orig_panel19_train = BinaryLabelDatasetMetric(

dataset_orig_panel19_train, # train data

unprivileged_groups=unprivileged_groups, # pass in names of unprivileged and privileged groups

privileged_groups=privileged_groups)

explainer_orig_panel19_train = MetricTextExplainer(metric_orig_panel19_train) # create a MetricTextExplainer object

print(explainer_orig_panel19_train.disparate_impact()) # print disparate impactWe see that the disparate impact is about 0.48, which means the privileged group has the favorable outcome at about 2x the rate as the unprivileged group does.

(In this case, the “favorable” outcome is label=1, i.e., high utilization) ## Train a model

We will train a logistic regression classifier. To do so, we have to import various functions from sklearn: a scaler, the logistic regression class, and make_pipeline.

PYTHON

from sklearn.preprocessing import StandardScaler

from sklearn.linear_model import LogisticRegression

from sklearn.pipeline import make_pipeline # allows to stack modeling steps

from sklearn.pipeline import Pipeline # allow us to reference the Pipeline object typePYTHON

dataset = dataset_orig_panel19_train # use the train dataset

model = make_pipeline(StandardScaler(), # scale the data to have mean 0 and variance 1

LogisticRegression(solver='liblinear',

random_state=1) # logistic regression model

)

fit_params = {'logisticregression__sample_weight': dataset.instance_weights} # use the instance weights to fit the model

lr_orig_panel19 = model.fit(dataset.features, dataset.labels.ravel(), **fit_params) # fit the modelValidate the model

We want to validate the model – that is, check that it has good accuracy and fairness when evaluated on the validation dataset. (By contrast, during training, we only optimize for accuracy and fairness on the training dataset.)

Recall that a logistic regression model can output probabilities

(i.e., model.predict(dataset).scores) and we can determine

our own threshold for predicting class 0 or 1. One goal of the

validation process is to select the threshold for the model,

i.e., the value v so that if the model’s output is greater than

v, we will predict the label 1.

The following function, test, computes performance on

the logistic regression model based on a variety of thresholds, as

indicated by thresh_arr, an array of threshold values. The

threshold values we test are determined through the function

np.linspace. We will continue to focus on disparate impact,

but all other metrics are described in the AIF360

documentation.

PYTHON

# Import the ClassificationMetric class to be able to compute metrics for the model

from aif360.metrics import ClassificationMetricPYTHON

def test(dataset: MEPSDataset19, model:Pipeline, thresh_arr: np.ndarray) -> dict:

'''

Given a dataset, model, and list of potential cutoff thresholds, compute various metrics

for the model. Returns a dictionary of the metrics, including balanced accuracy, average odds

difference, disparate impact, statistical parity difference, equal opportunity difference, and

theil index.

'''

try:

# sklearn classifier

y_val_pred_prob = model.predict_proba(dataset.features) # get the predicted probabilities

except AttributeError as e:

print(e)

# aif360 inprocessing algorithm

y_val_pred_prob = model.predict(dataset).scores # get the predicted scores

pos_ind = 0

pos_ind = np.where(model.classes_ == dataset.favorable_label)[0][0] # get the index corresponding to the positive class

metric_arrs = defaultdict(list) # create a dictionary to store the metrics

# repeat the following for each potential cutoff threshold

for thresh in thresh_arr:

y_val_pred = (y_val_pred_prob[:, pos_ind] > thresh).astype(np.float64) # get the predicted labels

dataset_pred = dataset.copy() # create a copy of the dataset

dataset_pred.labels = y_val_pred # assign the predicted labels to the new dataset

metric = ClassificationMetric( # create a ClassificationMetric object

dataset, dataset_pred,

unprivileged_groups=unprivileged_groups,

privileged_groups=privileged_groups)

# various metrics - can look up what they are on your own

metric_arrs['bal_acc'].append((metric.true_positive_rate()

+ metric.true_negative_rate()) / 2) # balanced accuracy

metric_arrs['avg_odds_diff'].append(metric.average_odds_difference()) # average odds difference

metric_arrs['disp_imp'].append(metric.disparate_impact()) # disparate impact

metric_arrs['stat_par_diff'].append(metric.statistical_parity_difference()) # statistical parity difference

metric_arrs['eq_opp_diff'].append(metric.equal_opportunity_difference()) # equal opportunity difference

metric_arrs['theil_ind'].append(metric.theil_index()) # theil index

return metric_arrsPYTHON

thresh_arr = np.linspace(0.01, 0.5, 50) # create an array of 50 potential cutoff thresholds ranging from 0.01 to 0.5

val_metrics = test(dataset=dataset_orig_panel19_val,

model=lr_orig_panel19,

thresh_arr=thresh_arr) # call our function "test" with the validation data and lr model

lr_orig_best_ind = np.argmax(val_metrics['bal_acc']) # get the index of the best balanced accuracyWe will plot val_metrics. The x-axis will be the

threshold we use to output the label 1 (i.e., if the raw score is larger

than the threshold, we output 1).

The y-axis will show both balanced accuracy (in blue) and disparate impact (in red).

Note that we plot 1 - Disparate Impact, so now a score of 0 indicates no bias.

PYTHON

def plot(x:np.ndarray, x_name:str, y_left:np.ndarray, y_left_name:str, y_right:np.ndarray, y_right_name:str) -> None:

'''

Create a matplotlib plot with two y-axes and a single x-axis.

'''

fig, ax1 = plt.subplots(figsize=(10,7)) # create a figure and axis

ax1.plot(x, y_left) # plot the left y-axis data

ax1.set_xlabel(x_name, fontsize=16, fontweight='bold') # set the x-axis label

ax1.set_ylabel(y_left_name, color='b', fontsize=16, fontweight='bold') # set the left y-axis label

ax1.xaxis.set_tick_params(labelsize=14) # set the x-axis tick label size

ax1.yaxis.set_tick_params(labelsize=14) # set the left y-axis tick label size

ax1.set_ylim(0.5, 0.8) # set the left y-axis limits

ax2 = ax1.twinx() # create a second y-axis that shares the same x-axis

ax2.plot(x, y_right, color='r') # plot the right y-axis data

ax2.set_ylabel(y_right_name, color='r', fontsize=16, fontweight='bold') # set the right y-axis label

if 'DI' in y_right_name:

ax2.set_ylim(0., 0.7) # set the right y-axis limits if we're plotting disparate impact

else:

ax2.set_ylim(-0.25, 0.1) # set the right y-axis limits if we're plotting 1-DI

best_ind = np.argmax(y_left) # get the index of the best balanced accuracy

ax2.axvline(np.array(x)[best_ind], color='k', linestyle=':') # add a vertical line at the best balanced accuracy

ax2.yaxis.set_tick_params(labelsize=14) # set the right y-axis tick label size

ax2.grid(True) # add a grid

disp_imp = np.array(val_metrics['disp_imp']) # disparate impact (DI)

disp_imp_err = 1 - disp_imp # calculate 1 - DI

plot(thresh_arr, 'Classification Thresholds',

val_metrics['bal_acc'], 'Balanced Accuracy',

disp_imp_err, '1 - DI') # Plot balanced accuracy and 1-DI against the classification thresholdsInterpreting the plot

Answer the following questions:

When the classification threshold is 0.1, what is the (approximate) accuracy and 1-DI score? What about when the classification threshold is 0.5?

If you were developing the model, what classification threshold would you choose based on this graph? Why?

Using a threshold of 0.1, the accuracy is about 0.72 and the 1-DI score is about 0.54. Using a threshold of 0.5, the accuracy is about 0.69 and the 1-DI score is about 0.61.

The optimal accuracy occurs with a threshold of 0.19 (indicated by the dotted vertical line). However, the disparate impact is worse at this threshold (0.61) than at smaller thresholds. Choosing a slightly smaller threshold, e.g., around 0.11, yields accuracy that is a bit worse (about 0.73 vs 0.76) and is slightly fairer. However, there’s no "good" outcome here: whenever the accuracy is near-optimal, the 1-DI score is high. If you were the model developer, you might want to consider interventions to improve the accuracy/fairness tradeoff, some of which we discuss below.

If you like, you can plot other metrics, e.g., average odds difference.

In the next cell, we write a function to print out a variety of other metrics. Instead of considering disparate impact directly, we will consider 1 - disparate impact. Recall that a disparate impact of 0 is very bad, and 1 is perfect – thus, considering 1 - disparate impact means that 0 is perfect and 1 is very bad, similar to the other metrics we consider. I.e., all of these metrics have a value of 0 if they are perfectly fair.

We print the value of several metrics here for illustrative purposes (i.e., to see that multiple metrics are not able to be optimized simultaneously). In practice, when evaluating a model it is typical ot choose a single fairness metric to use based on the details of the situation. You can learn more details about the various metrics in the AIF360 documentation.

PYTHON

def describe_metrics(metrics: dict, thresh_arr: np.ndarray) -> None:

'''

Given a dictionary of metrics and a list of potential cutoff thresholds, print the best

threshold (based on 'bal_acc' balanced accuracy dictionary entry) and the corresponding

values of other metrics at the selected threshold.

'''

best_ind = np.argmax(metrics['bal_acc']) # get the index of the best balanced accuracy

print("Threshold corresponding to Best balanced accuracy: {:6.4f}".format(thresh_arr[best_ind]))

print("Best balanced accuracy: {:6.4f}".format(metrics['bal_acc'][best_ind]))

disp_imp_at_best_ind = 1 - metrics['disp_imp'][best_ind] # calculate 1 - DI at the best index

print("\nCorresponding 1-DI value: {:6.4f}".format(disp_imp_at_best_ind))

print("Corresponding average odds difference value: {:6.4f}".format(metrics['avg_odds_diff'][best_ind]))

print("Corresponding statistical parity difference value: {:6.4f}".format(metrics['stat_par_diff'][best_ind]))

print("Corresponding equal opportunity difference value: {:6.4f}".format(metrics['eq_opp_diff'][best_ind]))

print("Corresponding Theil index value: {:6.4f}".format(metrics['theil_ind'][best_ind]))

describe_metrics(val_metrics, thresh_arr) # call the functionMitigate bias with in-processing

We will use reweighting as an in-processing step to try to increase fairness. AIF360 has a function that performs reweighting that we will use. If you’re interested, you can look at details about how it works in the documentation.

If you look at the documentation, you will see that AIF360 classifies reweighting as a preprocessing, not an in-processing intervention. Technically, AIF360’s implementation modifies the dataset, not the learning algorithm so it is pre-processing. But, it is functionally equivalent to modifying the learning algorithm’s loss function, so we follow the convention of the fair ML field and call it in-processing.

PYTHON

# Reweighting is a AIF360 class to reweight the data

RW = Reweighing(unprivileged_groups=unprivileged_groups,

privileged_groups=privileged_groups) # create a Reweighing object with the unprivileged and privileged groups

dataset_transf_panel19_train = RW.fit_transform(dataset_orig_panel19_train) # reweight the training dataWe’ll also define metrics for the reweighted data and print out the disparate impact of the dataset.

PYTHON

metric_transf_panel19_train = BinaryLabelDatasetMetric(

dataset_transf_panel19_train, # use train data

unprivileged_groups=unprivileged_groups, # pass in unprivileged and privileged groups

privileged_groups=privileged_groups)

explainer_transf_panel19_train = MetricTextExplainer(metric_transf_panel19_train) # create a MetricTextExplainer object

print(explainer_transf_panel19_train.disparate_impact()) # print disparate impactThen, we’ll train a model, validate it, and evaluate of the test data.

PYTHON

# train

dataset = dataset_transf_panel19_train # use the reweighted training data

model = make_pipeline(StandardScaler(),

LogisticRegression(solver='liblinear', random_state=1)) # model pipeline

fit_params = {'logisticregression__sample_weight': dataset.instance_weights}

lr_transf_panel19 = model.fit(dataset.features, dataset.labels.ravel(), **fit_params) # fit the modelPYTHON

# validate

thresh_arr = np.linspace(0.01, 0.5, 50) # check 50 thresholds between 0.01 and 0.5

val_metrics = test(dataset=dataset_orig_panel19_val,

model=lr_transf_panel19,

thresh_arr=thresh_arr) # call our function "test" with the validation data and lr model

lr_transf_best_ind = np.argmax(val_metrics['bal_acc']) # get the index of the best balanced accuracyPYTHON

# plot validation results

disp_imp = np.array(val_metrics['disp_imp']) # get the disparate impact values

disp_imp_err = 1 - np.minimum(disp_imp, 1/disp_imp) # calculate 1 - min(DI, 1/DI)

plot(thresh_arr, # use the classification thresholds as the x-axis

'Classification Thresholds',

val_metrics['bal_acc'], # plot accuracy on the first y-axis

'Balanced Accuracy',

disp_imp_err, # plot 1 - min(DI, 1/DI) on the second y-axis

'1 - min(DI, 1/DI)'

)Test

PYTHON

lr_transf_metrics = test(dataset=dataset_orig_panel19_test,

model=lr_transf_panel19,

thresh_arr=[thresh_arr[lr_transf_best_ind]]) # call our function "test" with the test data and lr model

describe_metrics(lr_transf_metrics, [thresh_arr[lr_transf_best_ind]]) # describe test resultsWe see that the disparate impact score on the test data is better after reweighting than it was originally.

How do the other fairness metrics compare?

Mitigate bias with preprocessing

We will use a method, ThresholdOptimizer, that is implemented in the library Fairlearn. ThresholdOptimizer finds custom thresholds for each demographic group so as to achieve parity in the desired group fairness metric.

We will focus on demographic parity, but feel free to try other metrics if you’re curious on how it does.

The first step is creating the ThresholdOptimizer object. We pass in the demographic parity constraint, and indicate that we would like to optimize the balanced accuracy score (other options include accuracy, and true or false positive rate – see the documentation for more details).

PYTHON

# create a ThresholdOptimizer object

to = ThresholdOptimizer(estimator=model,

constraints="demographic_parity", # set the constraint to demographic parity

objective="balanced_accuracy_score", # optimize for balanced accuracy

prefit=True) Next, we fit the ThresholdOptimizer object to the validation data.

PYTHON

to.fit(dataset_orig_panel19_val.features, dataset_orig_panel19_val.labels,

sensitive_features=dataset_orig_panel19_val.protected_attributes[:,0]) # fit the ThresholdOptimizer objectThen, we’ll create a helper function, mini_test to allow

us to call the describe_metrics function even though we are

no longer evaluating our method as a variety of thresholds.

After that, we call the ThresholdOptimizer’s predict function on the validation and test data, and then compute metrics and print the results.

PYTHON

def mini_test(dataset:MEPSDataset19, preds:np.ndarray) -> dict:

'''

Given a dataset and predictions, compute various metrics for the model. Returns a dictionary of the metrics,

including balanced accuracy, average odds difference, disparate impact, statistical parity difference, equal

opportunity difference, and theil index.

'''

metric_arrs = defaultdict(list)

dataset_pred = dataset.copy()

dataset_pred.labels = preds

metric = ClassificationMetric(

dataset, dataset_pred,

unprivileged_groups=unprivileged_groups,

privileged_groups=privileged_groups)

# various metrics - can look up what they are on your own

metric_arrs['bal_acc'].append((metric.true_positive_rate()

+ metric.true_negative_rate()) / 2)

metric_arrs['avg_odds_diff'].append(metric.average_odds_difference())

metric_arrs['disp_imp'].append(metric.disparate_impact())

metric_arrs['stat_par_diff'].append(metric.statistical_parity_difference())

metric_arrs['eq_opp_diff'].append(metric.equal_opportunity_difference())

metric_arrs['theil_ind'].append(metric.theil_index())

return metric_arrsPYTHON

# get predictions for validation dataset using the ThresholdOptimizer

to_val_preds = to.predict(dataset_orig_panel19_val.features,

sensitive_features=dataset_orig_panel19_val.protected_attributes[:,0])

# get predictions for test dataset using the ThresholdOptimizer

to_test_preds = to.predict(dataset_orig_panel19_test.features,

sensitive_features=dataset_orig_panel19_test.protected_attributes[:,0])PYTHON

to_val_metrics = mini_test(dataset_orig_panel19_val, to_val_preds) # compute metrics for the validation set

to_test_metrics = mini_test(dataset_orig_panel19_test, to_test_preds) # compute metrics for the test setPYTHON

print("Remember, `Threshold corresponding to Best balanced accuracy` is just a placeholder here.")

describe_metrics(to_val_metrics, [0]) # check accuracy (ignore other metrics for now)PYTHON

print("Remember, `Threshold corresponding to Best balanced accuracy` is just a placeholder here.")

describe_metrics(to_test_metrics, [0]) # check accuracy (ignore other metrics for now)Scroll up and see how these results compare with the original classifier and with the in-processing technique.

A major difference is that the accuracy is lower, now. In practice, it might be better to use an algorithm that allows a custom tradeoff between the accuracy sacrifice and increased levels of fairness.

We can also see what threshold is being used for each demographic

group by examining the

interpolated_thresholder_.interpretation_dict property of

the ThresholdOptimzer.

PYTHON

threshold_rules_by_group = to.interpolated_thresholder_.interpolation_dict # get the threshold rules by group

threshold_rules_by_group # print the threshold rules by groupRecall that a value of 1 in the Race column corresponds to White people, while a value of 0 corresponds to non-White people.

Due to the inherent randomness of the ThresholdOptimizer, you might get slightly different results than your neighbors. When we ran the previous cell, the output was

{0.0: {'p0': 0.9287205987170348, 'operation0': [>0.5], 'p1': 0.07127940128296517, 'operation1': [>-inf]}, 1.0: {'p0': 0.002549618320610717, 'operation0': [>inf], 'p1': 0.9974503816793893, 'operation1': [>0.5]}}

This tells us that for non-White individuals:

If the score is above 0.5, predict 1.

Otherwise, predict 1 with probability 0.071

And for White individuals:

- If the score is above 0.5, predict 1 with probability 0.997

Discuss

What are the pros and cons of improving the model fairness by introducing randomization?

Pros: Randomization can be effective at increasing fairness.

Cons: There is less predictability and explainability in model outcomes. Even though model outputs are fair in aggregate according to a defined group fairness metric, decisions may feel unfair on an individual basis because similar individual (or even the same individual, at different times) are treated unequally. Randomization may not be appropriate in settings (e.g., medical diagnosis) where accuracy is paramount.

- It’s important to consider many dimensions of model performance: a single accuracy score is not sufficient.

- There is no single definition of “fair machine learning”: different notions of fairness are appropriate in different contexts.

- Representational harms and stereotypes can be perpetuated by generative AI.

- The fairness of a model can be improved by using techniques like data reweighting and model postprocessing.

Content from Interpretablility versus explainability

Last updated on 2024-11-29 | Edit this page

Estimated time: 2 minutes

Overview

Questions

- What are model interpretability and model explainability? Why are they important?

- How do you choose between interpretable models and explainable models in different contexts?

Objectives

- Understand and distinguish between explainable machine learning models and interpretable machine learning models.

- Make informed model selection choices based on the goals of your model.

Introduction