Content from Introduction

Last updated on 2025-12-01 | Edit this page

Overview

Questions

- What is Natural Language Processing?

- What are some common applications of NLP?

- What makes text different from other data?

- Why not just learn Large Language Models?

- What linguistic properties should we consider when dealing with texts?

Objectives

- Define Natural Language Processing

- Show the most relevant NLP tasks and applications in practice

- Learn how to handle Linguistic Data and how is Linguistics relevant to NLP

What is NLP?

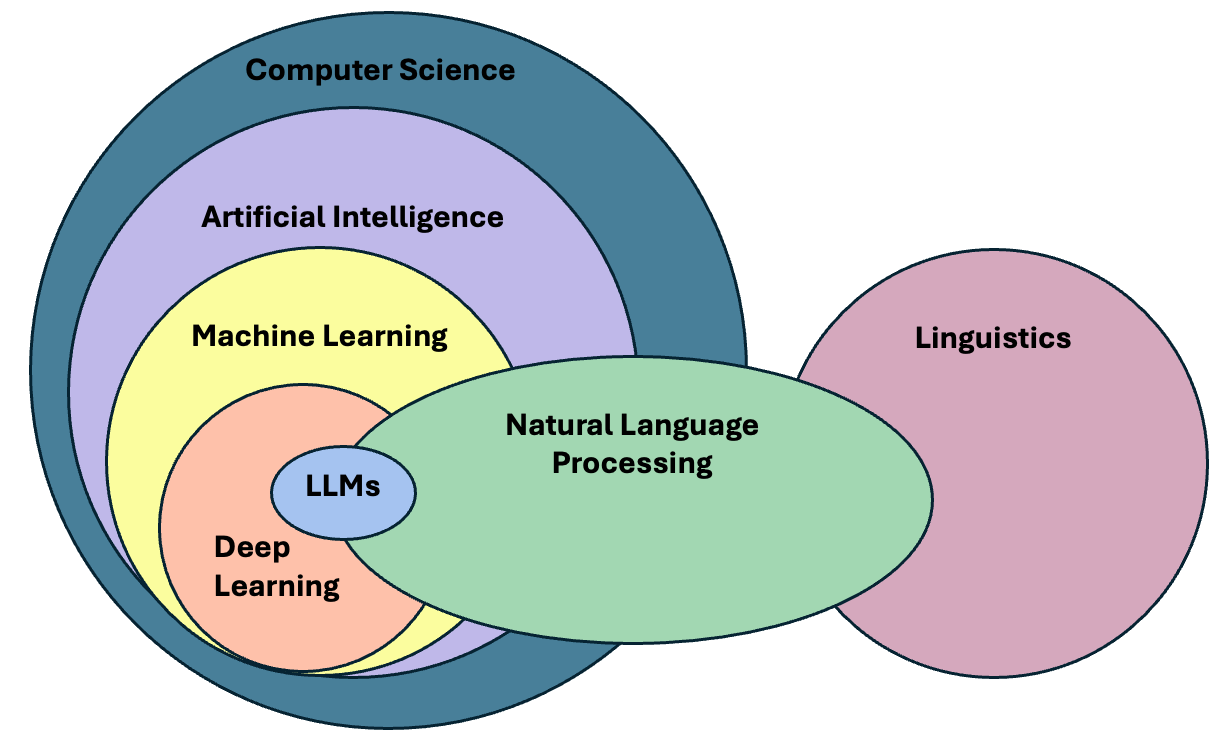

Natural language processing (NLP) is an area of research and application that focuses on making human languages processable for computers, so that they can perform useful tasks. It is therefore not a single method, but a collection of techniques that help us deal with linguistic inputs. The range of techniques spans simple word counts, to Machine Learning (ML) methods, all the way up to complex Deep Learning (DL) architectures.

We use the term “natural language”, as opposed to “artificial language” such as programming languages, which are by design constructed to be easily formalized into machine-readable instructions. In contrast to programming languages, natural languages are complex, ambiguous, and heavily context-dependent, making them challenging for computers to process. To complicate matters, there is not only a single human language. More than 7000 languages are spoken around the world, each with its own grammar, vocabulary, and cultural context.

In this course we will mainly focus on written language, specifically written English, we leave out audio and speech, as they require a different kind of input processing. But consider that we use English only as a convenience so we can address the technical aspects of processing textual data. While ideally most of the concepts from NLP apply to most languages, one should always be aware that certain languages require different approaches to solve seemingly similar problems. We would like to encourage the usage of NLP in other less widely known languages, especially if it is a minority language. You can read more about this topic in this blogpost.

NLP in the real world

Name three to five tools/products that you use on a daily basis and that you think leverage NLP techniques. To do this exercise you may make use of the Web.

These are some of the most popular NLP-based products that we use on a daily basis:

- Agentic Chatbots (ChatGPT, Perplexity)

- Voice-based assistants (e.g., Alexa, Siri, Cortana)

- Machine translation (e.g., Google translate, DeepL, Amazon translate)

- Search engines (e.g., Google, Bing, DuckDuckGo)

- Keyboard autocompletion on smartphones

- Spam filtering

- Spell and grammar checking apps

- Customer care chatbots

- Text summarization tools (e.g., news aggregators)

- Sentiment analysis tools (e.g., social media monitoring)

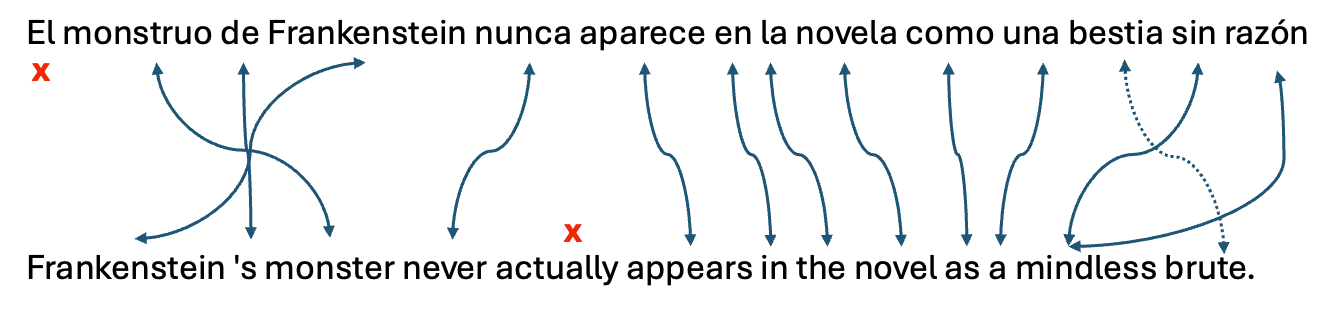

We can already find differences between languages in the most basic step for processing text. Take the problem of segmenting text into meaningful units, most of the times these units are words, in NLP we call this task tokenization. A naive approach is to obtain individual words by splitting text by spaces, as it seems obvious that we always separate words with spaces. Just as human beings break up sentences into words, phrases and other units in order to learn about grammar and other structures of a language, NLP techniques achieve a similar goal through tokenization. Let’s see how can we segment or tokenize a sentence in English:

PYTHON

english_sentence = "Tokenization isn't always trivial."

english_words = english_sentence.split(" ")

print(english_words)

print(len(english_words))OUTPUT

['Tokenization', "isn't", 'always', 'trivial.']

4The words are mostly well separated, however we do not get fully formed words (we have punctuation with the period after “trivial” and also special cases such as the abbreviation of “is not” into “isn’t”). But at least we get a rough count of the number of words present in the sentence. Let’s now look at the same example in Chinese:

PYTHON

# Chinese Translation of "Tokenization is not always trivial"

chinese_sentence = "标记化并不总是那么简单"

chinese_words = chinese_sentence.split(" ")

print(chinese_words)

print(len(chinese_words))OUTPUT

['标记化并不总是那么简单']

1The same example however did not work in Chinese, because Chinese does not use spaces to separate words. This is an example of how the idiosyncrasies of human language affects how we can process them with computers. We therefore need to use a tokenizer specifically designed for Chinese to obtain the list of well-formed words in the text. Here we use a “pre-trained” tokenizer called MicroTokenizer, which uses a dictionary-based approach to correctly identify the distinct words:

PYTHON

import MicroTokenizer # A popular Chinese text segmentation library

chinese_sentence = "标记化并不总是那么简单"

chinese_words = MicroTokenizer.cut(chinese_sentence)

print(chinese_words)

# ['mark', 'transform', 'and', 'no', 'always', 'so', 'simple']

print(len(chinese_words)) # Output: 7OUTPUT

['标记', '化', '并', '不', '总是', '那么', '简单']

7We can trust that the output is valid because we are using a verified

library - MicroTokenizer, even though we don’t speak

Chinese. Another interesting aspect is that the Chinese sentence has

more words than the English one, even though they convey the same

meaning. This shows the complexity of dealing with more than one

language at a time, as is the case in task such as Machine

Translation (using computers to translate speech or text from

one human language to another).

Natural Language Processing deals with the challenges of correctly processing and generating text in any language. This can be as simple as counting word frequencies to detect different writing styles, using statistical methods to classify texts into different categories, or using deep neural networks to generate human-like text by exploiting word co-occurrences in large amounts of texts.

Why should we learn NLP Fundamentals?

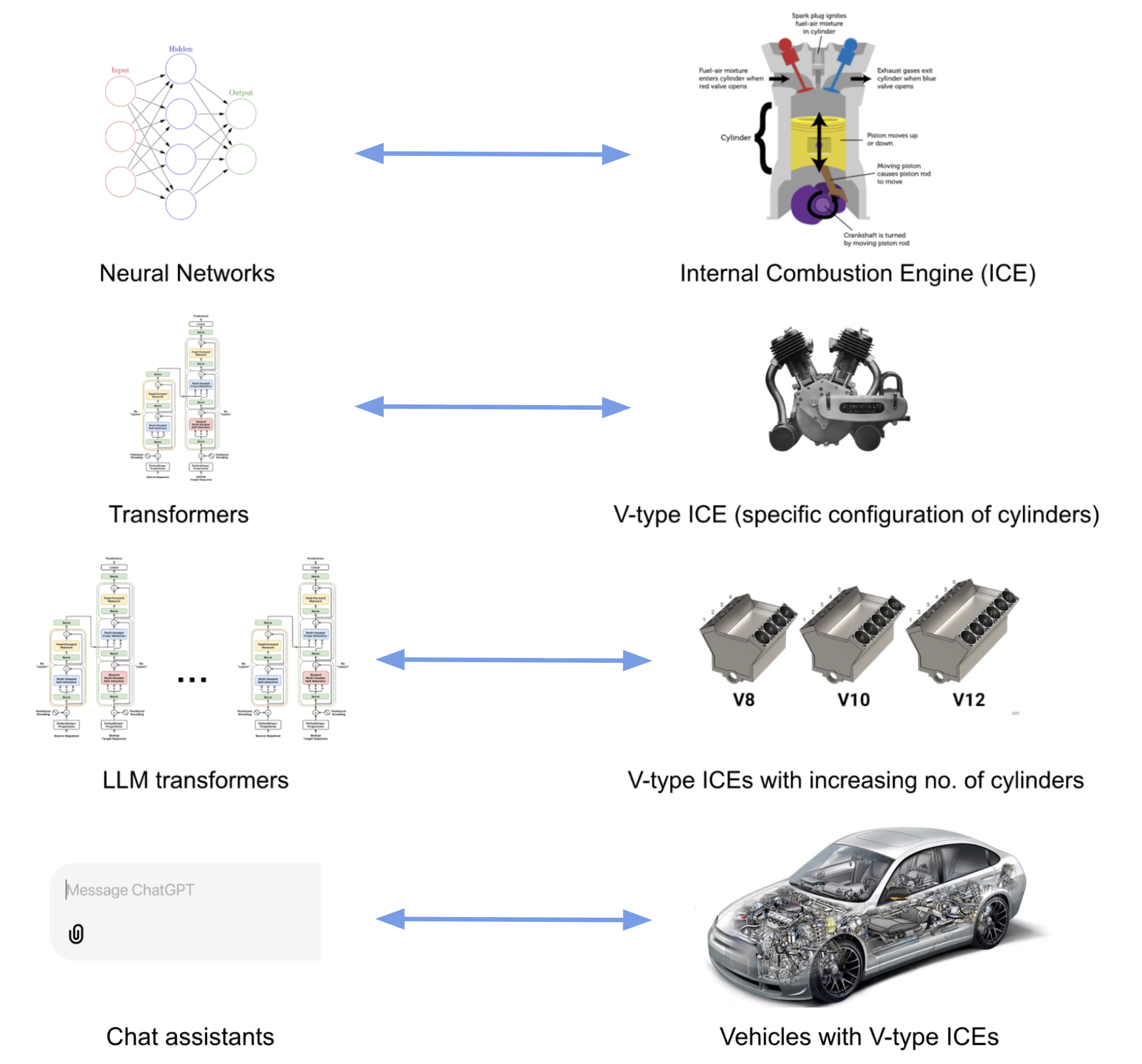

In the past decade, NLP has evolved significantly, especially in the field of deep learning, to the point that it has become embedded in our daily lives, one just needs to look at the term Large Language Models (LLMs), the latest generation of NLP models, which is now ubiquitous in news media and tech products we use on a daily basis.

The term LLM now is often (and wrongly) used as a synonym of Artificial Intelligence. We could therefore think that today we just need to learn how to manipulate LLMs in order to fulfill our research goals involving textual data. The truth is that Language Modeling has always been part of the core tasks of NLP, therefore, by learning NLP you will understand better where are the main ideas behind LLMs coming from.

LLM is a blanket term for an assembly of large neural networks that are trained on vast amounts of text data with the objective of optimizing for language modeling. Once they are trained, they are used to generate human-like text or fine-tunned to perform much more advanced tasks. Indeed, the surprising and fascinating properties that emerge from training models at this scale allows us to solve different complex tasks such as answer elaborate questions, translate languages, solve complex problems, generate narratives that emulate reasoning, and many more, all of this with a single tool.

It is important, however, to pay attention to what is happening behind the scenes in order to be able trace sources of errors and biases that get hidden in the complexity of these models. The purpose of this course is precisely to take a step back, and understand that:

- There are a wide variety of tools available, beyond LLMs, that do not require so much computing power

- Sometimes a much simpler method than an LLM is available that can solve our problem at hand

- If we learn how previous approaches to solve linguistic problems were designed, we can better understand the limitations of LLMs and how to use them effectively

- LLMs excel at confidently delivering information, without any regards for correctness. This calls for a careful design of evaluation metrics that give us a better understanding of the quality of the generated content.

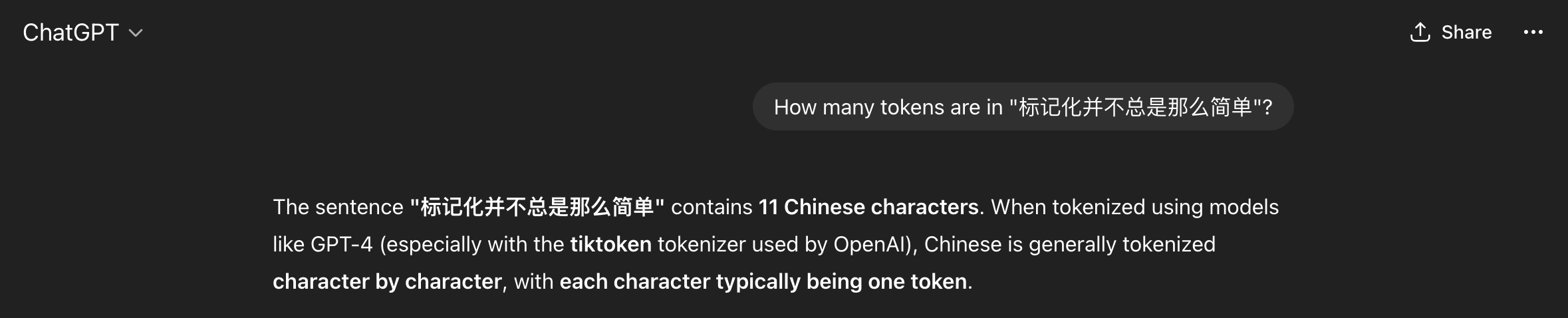

Let’s go back to our problem of segmenting text and see what ChatGPT has to say about tokenizing Chinese text:

We got what sounds like a straightforward confident answer. However, it is not clear how the model arrived at this solution. Second, we do not know whether the solution is correct or not. In this case ChatGPT made some assumptions for us, such as choosing a specific kind of tokenizer to give the answer, and since we do not speak the language, we do not know if this is indeed the best approach to tokenize Chinese text. If we understand the concept of Token (which we will today!), then we can be more informed about the quality of the answer, whether it is useful to us, and therefore make a better use of the model.

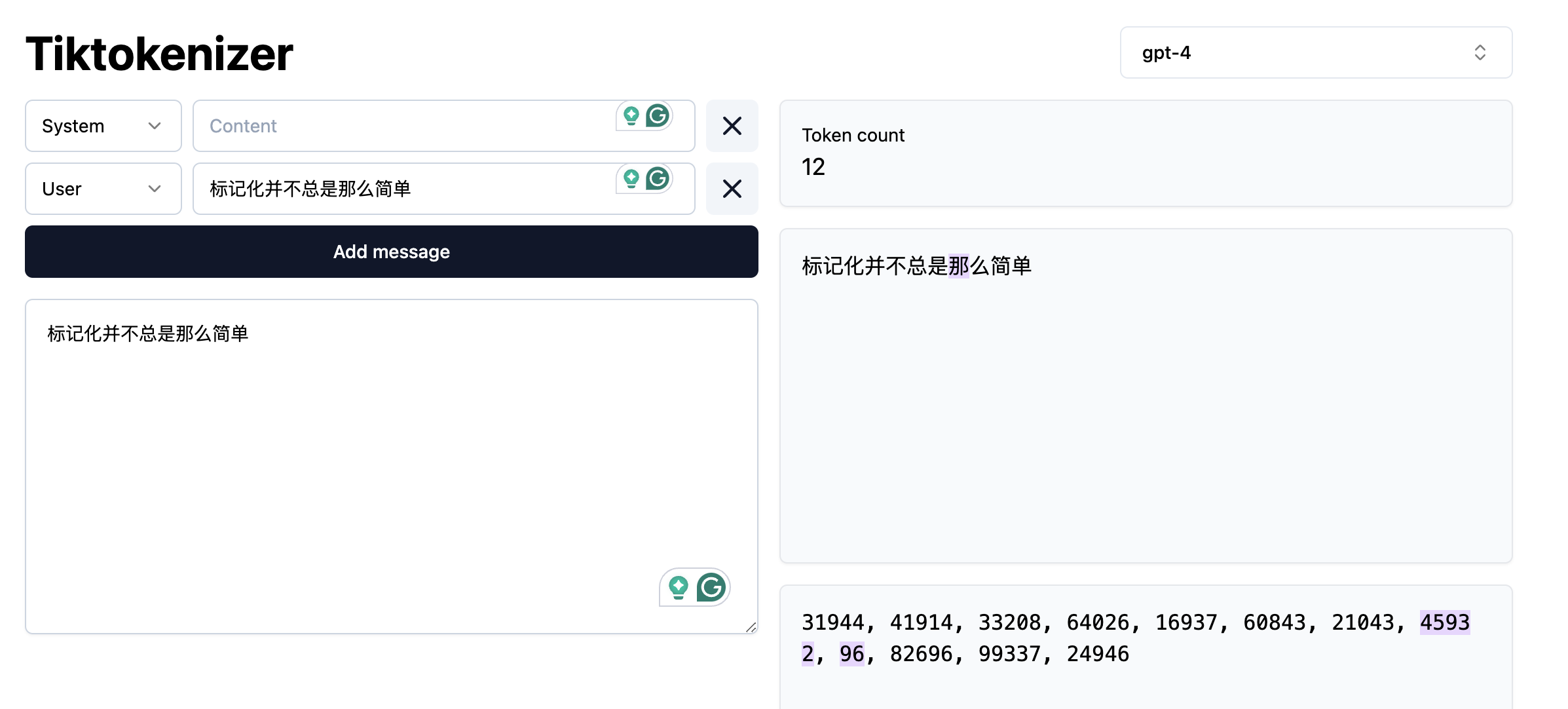

And by the way, ChatGPT was almost correct, in the specific case of the gpt-4 tokenizer, the model will return 12 tokens (not 11!) for the given Chinese sentence.

We can also argue if the statement “Chinese is generally tokenized character by character” is an overstatement or not. In any case, the real question here is: Are we ok with almost correct answers? Please note that this is not a call to avoid using LLM’s but a call for a careful consideration of usage and more importantly, an attempt to explain the mechanisms behind via NLP concepts.

Language as Data

From a more technical perspective, NLP focuses on applying advanced statistical techniques to linguistic data. This is a key factor, since we need a structured dataset with a well defined set of features in order to manipulate it numerically. Your first task as an NLP practitioner is to understand what aspects of textual data are relevant for your application and apply techniques to systematically extract meaningful features from unstructured data (if using statistics or Machine Learning) or choose an appropriate neural architecture (if using Deep Learning) that can help solve our problem at hand.

What is a word?

When dealing with language our basic data unit is usually a word. We deal with sequences of words and with how they relate to each other to generate meaning in text pieces. Thus, our first step will be to load a text file and provide it with structure by splitting it into valid words (tokenization)!

Token vs Word

For simplicity, in the rest of the course we will use the terms “word” and “token” interchangeably, but as we just saw they do not always have the same granularity. Originally the concept of token comprised dictionary words, numeric symbols and punctuation. Nowadays, tokenization has also evolved and became an optimization task on its own (How can we segment text in a way that neural networks learn optimally from text?). Tokenizers allow one to reconstruct or revert back to the original pre-tokenized form of tokens or words, hence we can afford to use token and word as synonyms. If you are curious, you can visualize how different state-of-the-art tokenizers split text in this WebApp

Let’s open a file, read it into a string and split it by spaces. We will print the original text and the list of “words” to see how they look:

PYTHON

with open("data/84_frankenstein_clean.txt") as f:

text = f.read()

print(text[:100])

print("Length:", len(text))

proto_tokens = text.split()

print(proto_tokens[:40])

print(len(proto_tokens))OUTPUT

Letter 1 St. Petersburgh, Dec. 11th, 17-- TO Mrs. Saville, England You will rejoice to hear that no disaster has accompanied the commencement of an en

Length: 417931

Proto-Tokens:

['Letter', '1', 'St.', 'Petersburgh,', 'Dec.', '11th,', '17--', 'TO', 'Mrs.', 'Saville,', 'England', 'You', 'will', 'rejoice', 'to', 'hear', 'that', 'no', 'disaster', 'has', 'accompanied', 'the', 'commencement', 'of', 'an', 'enterprise', 'which', 'you', 'have', 'regarded', 'with', 'such', 'evil', 'forebodings.', 'I', 'arrived', 'here', 'yesterday,', 'and', 'my']

74942Splitting by white space is possible but needs several extra steps to separate out punctuation appropriately. A more sophisticated approach is to use the spaCy library to segment the text into human-readable tokens. First we will download the pre-trained model, in this case we only need the small English version:

This is a model that spaCy already trained for us on a subset of web English data. Hence, the model already “knows” how to tokenize into English words. When the model processes a string, it does not only do the splitting for us but already provides more advanced linguistic properties of the tokens (such as part-of-speech tags, or named entities). You can check more languages and models in the spacy documentation

Pre-trained Models and Fine-tuning

These two terms frequently arise in discussions of NLP. The notion of pre-trained comes from Machine Learning and describes a model that has already been optimized on relevant data for a given task. Such a model can typically be loaded and applied directly to new datasets, often working “out of the box.” without need of further refinement. Ideally, publicly released pre-trained models have undergone rigorous testing for both generalization and output quality on different textual data that it was intended to be used on. Nevertheless, it remains essential to carefully review the evaluation methods used before relying on them in practice. It is also recommended that you perform your own evaluation of the model on text that you intend to use it on.

Sometimes a pre-trained model is of good quality, but it does not fit the nuances of our specific dataset. For example, the model was trained on newspaper articles but you are interested in poetry. In this case, it is common to perform fine-tuning, this means that instead of training your own model from scratch, you start with the knowledge obtained in the pre-trained model and adjust it (fine-tune it) to work optimally with your specific data. If this is done well it leads to increased performance in the specific task you are trying to solve. The advantage of fine-tuning is that you often do not need a large amount of data to improve the results, hence the popularity of the technique.

Let’s now import the model and use it to parse our document:

PYTHON

import spacy

nlp = spacy.load("en_core_web_sm") # we load the small English model for efficiency

doc = nlp(text) # Doc is a python object with several methods to retrieve linguistic properties

# SpaCy-Tokens

tokens = [token.text for token in doc] # Note that spacy tokens are also python objects

print(tokens[:40])

print(len(tokens))OUTPUT

['Letter', '1', 'St.', 'Petersburgh', ',', 'Dec.', '11th', ',', '17', '-', '-', 'TO', 'Mrs.', 'Saville', ',', 'England', 'You', 'will', 'rejoice', 'to', 'hear', 'that', 'no', 'disaster', 'has', 'accompanied', 'the', 'commencement', 'of', 'an', 'enterprise', 'which', 'you', 'have', 'regarded', 'with', 'such', 'evil', 'forebodings', '.']

85713The differences look subtle at the beginning, but if we carefully inspect the way spaCy splits the text, we can see the advantage of using a specialized tokenizer. There are also several useful features that spaCy provides us with. For example, we can choose to extract only symbols, or only alphanumerical tokens, and more advanced linguistic properties, for example we can remove punctuation and only keep alphanumerical tokens:

PYTHON

only_words = [token for token in doc if token.is_alpha] # Only alphanumerical tokens

print(only_words[:50])

print(len(only_words))OUTPUT

[Letter, Petersburgh, TO, Saville, England, You, will, rejoice, to, hear, that, no, disaster, has, accompanied, the, commencement, of, an, enterprise, which, you, have, regarded, with, such, evil, forebodings, I, arrived, here, yesterday, and, my, first, task, is, to, assure, my, dear, sister, of, my, welfare, and, increasing, confidence, in, the]

75062or keep only the verbs from our text:

PYTHON

only_verbs = [token for token in doc if token.pos_ == "VERB"] # Only verbs

print(only_verbs[:10])

print(len(only_verbs))OUTPUT

[rejoice, hear, accompanied, regarded, arrived, assure, increasing, walk, feel, braces]

10148SpaCy also predicts the sentences under the hood for us. It might seem trivial to you as a human reader to recognize where a sentence begins and ends but for a machine, just like finding words, finding sentences is a task on its own, for which sentence-segmentation models exist. In the case of Spacy, we can access the sentences like this:

PYTHON

sentences = [sent.text for sent in doc.sents] # Sentences are also python objects

print(sentences[:5])

print(len(sentences))OUTPUT

['Letter 1 St. Petersburgh, Dec. 11th, 17-- TO Mrs. Saville, England You will rejoice to hear that no disaster has accompanied the commencement of an enterprise which you have regarded with such evil forebodings.', 'I arrived here yesterday, and my first task is to assure my dear sister of my welfare and increasing confidence in the success of my undertaking.', 'I am already far north of London, and as I walk in the streets of Petersburgh, I feel a cold northern breeze play upon my cheeks, which braces my nerves and fills me with delight.', 'Do you understand this feeling?', 'This breeze, which has traveled from the regions towards which I am advancing, gives me a foretaste of those icy climes.']

3317We can also see what named entities the model predicted:

OUTPUT

1713

DATE Dec. 11th

CARDINAL 17

PERSON Saville

GPE England

DATE yesterdayThese are just basic tests to demonstrate how you can immediately process the structure of text using existing NLP libraries. The spaCy models we used are simpler relative to state of the art approaches. So the more complex the input text and task, the more errors are likely to appear when using such models. The biggest advantage of using these existing libraries is that they help you transform unstructured plain text files into structured data that you can manipulate later for your own goals such as training language models.

Computing stats with spaCy

Use the spaCy Doc object to compute an aggregate statistic about the

Frankenstein book. HINT: Use the python set,

dictionary or Counter objects to hold the

accumulative counts. For example:

- Give the list of the 20 most common verbs in the book

- How many different Places are identified in the book? (Label = GPE)

- How many different entity categories are in the book?

- Who are the 10 most mentioned PERSONs in the book?

- Or any other similar aggregate you want…

Let’s describe the solution to obtain all the different entity categories. For that we should iterate the whole text and keep a python set with all the seen labels.

PYTHON

entity_types = set()

for ent in doc.ents:

entity_types.add(ent.label_)

print(entity_types)

print(len(entity_types))OUTPUT

{'CARDINAL', 'GPE', 'WORK_OF_ART', 'ORDINAL', 'DATE', 'LAW', 'PRODUCT', 'QUANTITY', 'ORG', 'TIME', 'PERSON', 'LOC', 'LANGUAGE', 'FAC', 'NORP'}

15NLP tasks

The previous exercise shows that a great deal of NLP techniques are embedded in our daily life. Indeed NLP is an important component in a wide range of software applications that we use in our day to day activities.

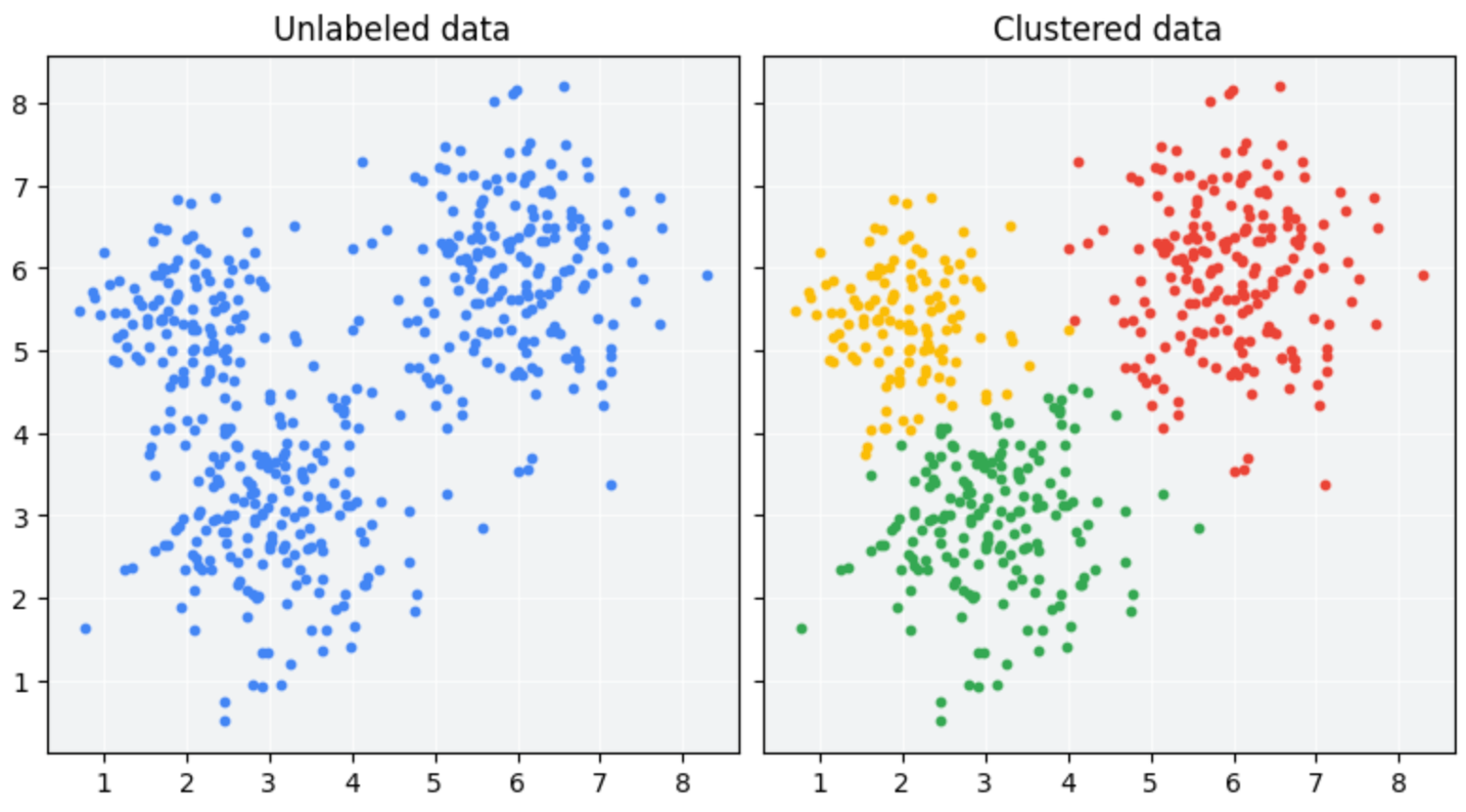

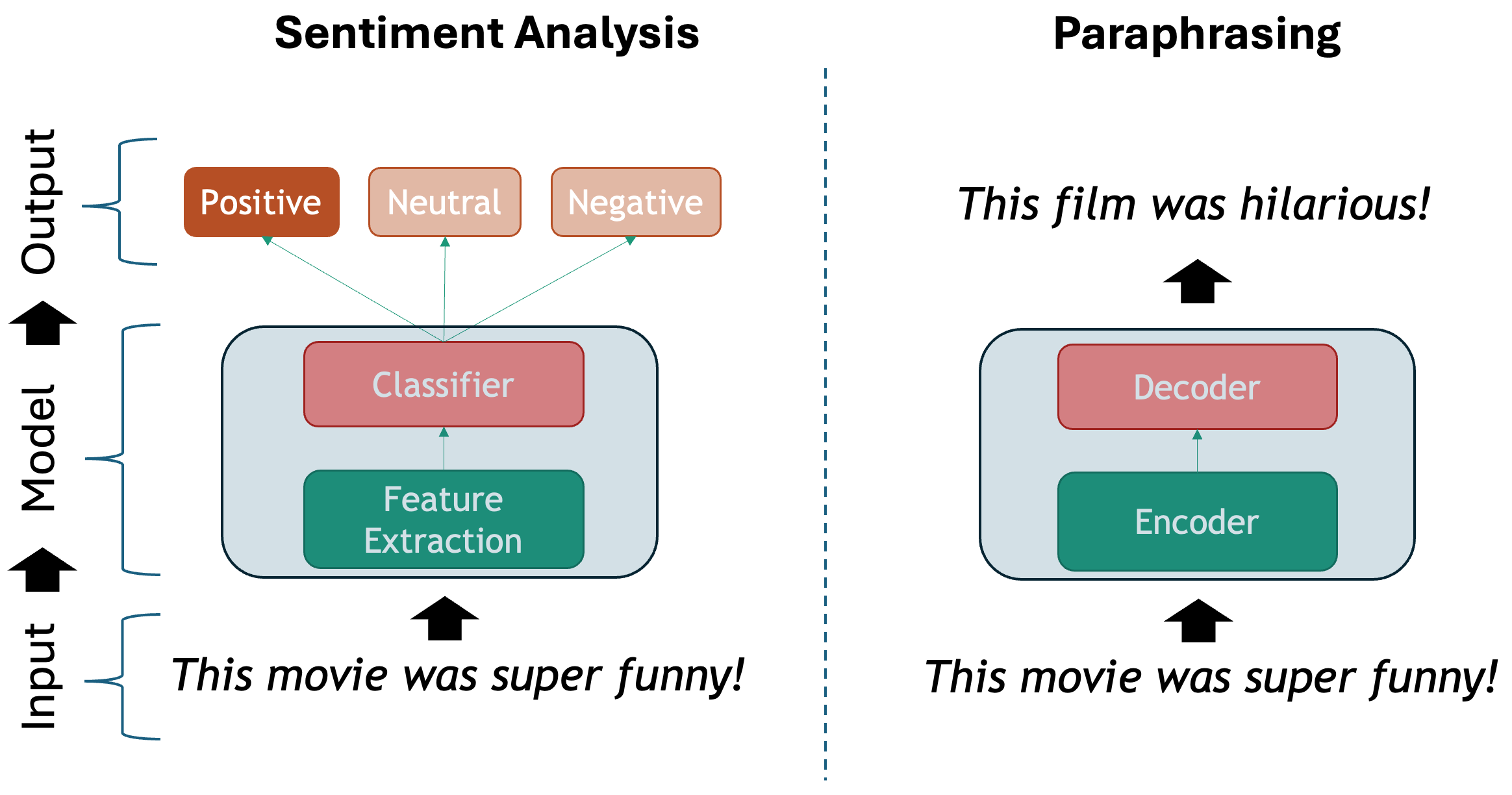

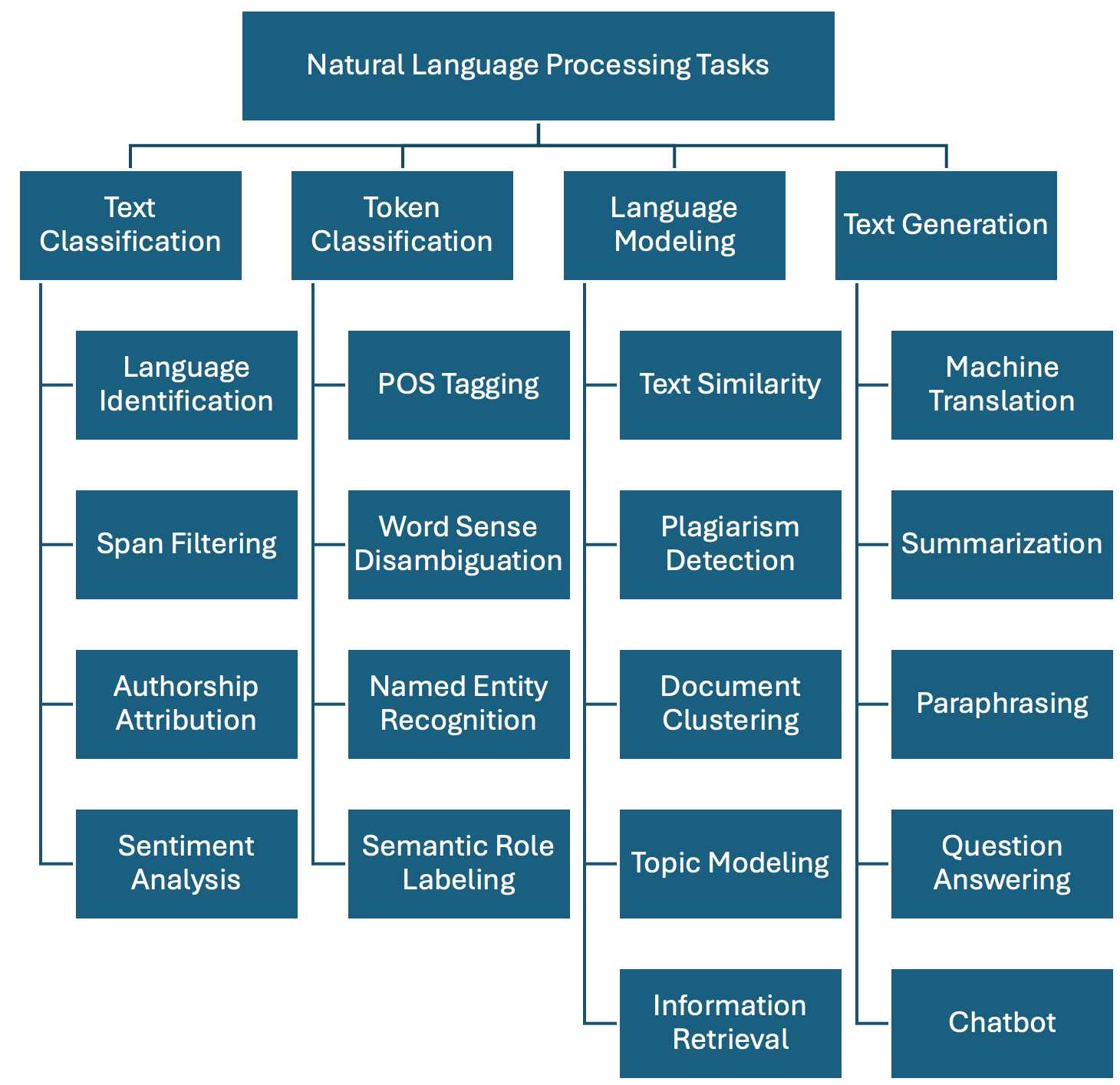

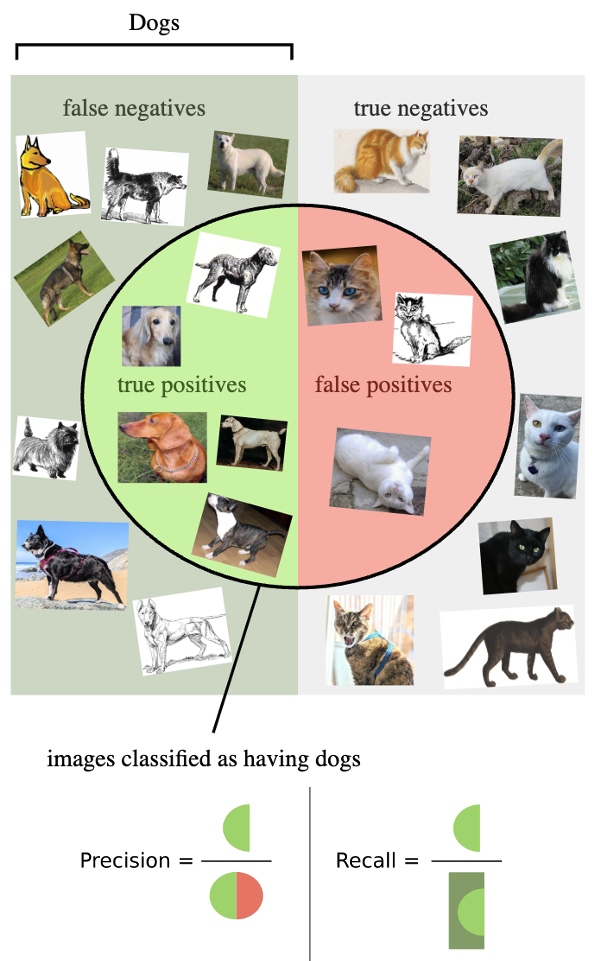

There are several ways to describe the tasks that NLP solves. From the Machine Learning perspective, we have:

- Unsupervised tasks: exploiting existing patterns from large amounts of text.

- Supervised tasks: learning to classify texts given a labeled set of examples

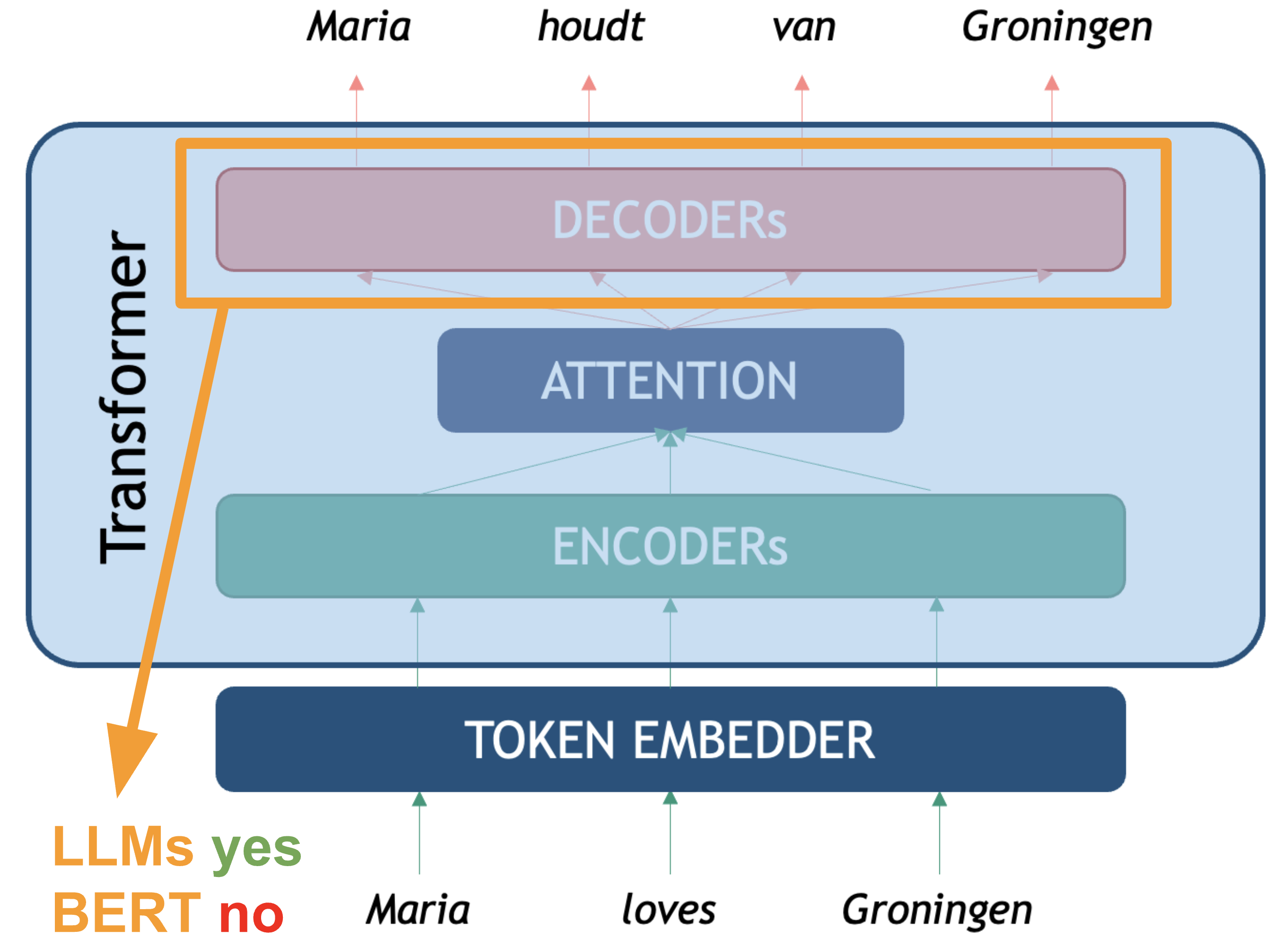

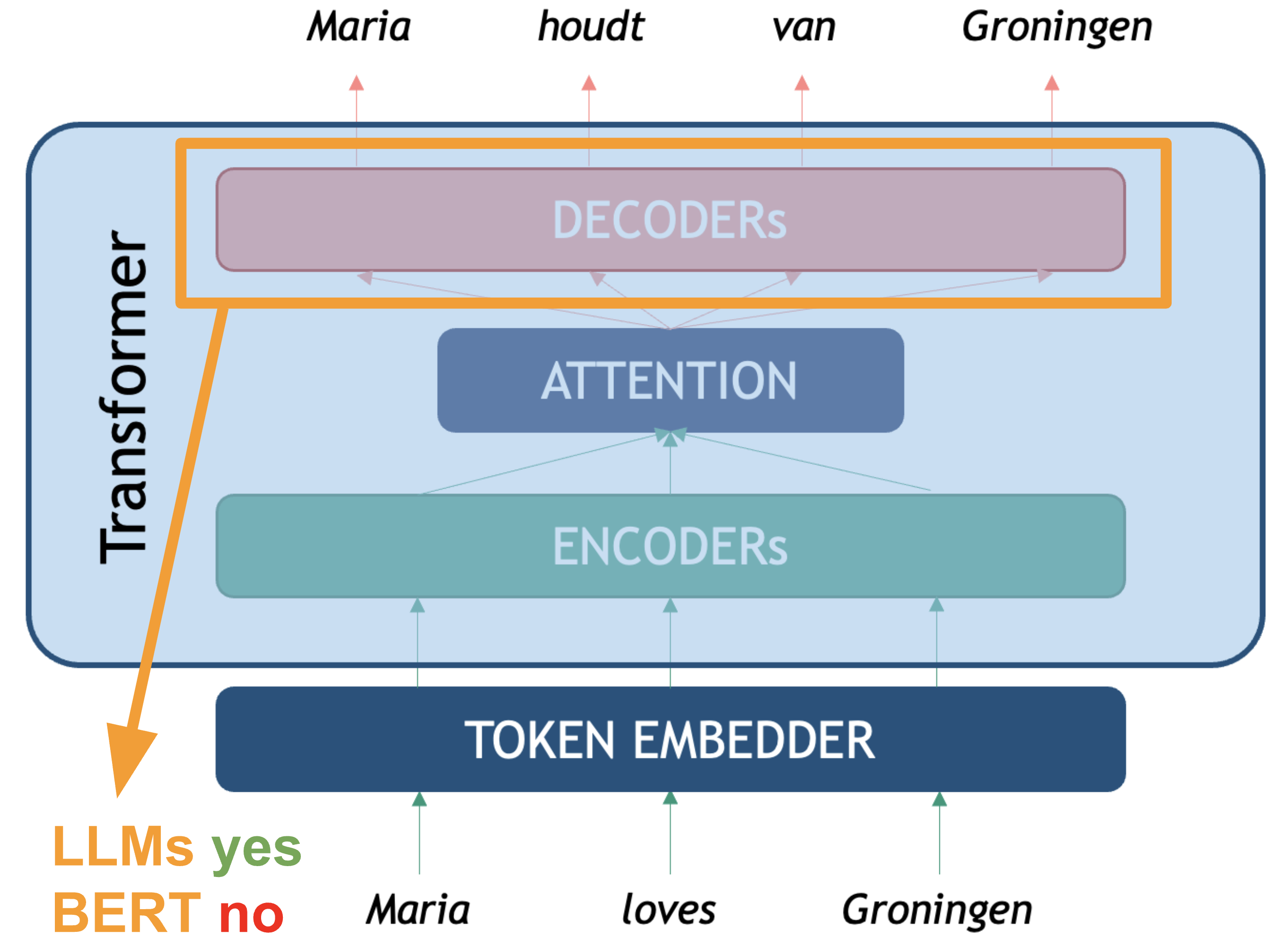

The Deep Learning perspective usually involves the selection of the right model among different neural network architectures to tackle an NLP task, such as:

Multi-layer Perceptron

Recurrent Neural Network

Convolutional Neural Network

Long-Short Term Memory Networks (LSTMs)

Transformer (including LLMs!)

Regardless of the chosen method, below we show one possible taxonomy of NLP tasks. The tasks are grouped together with some of their most prominent applications. This is definitely a non-exhaustive list, as in reality there are hundreds of them, but it is a good start:

-

Text Classification: Assign one or more labels to a given piece of text. This text is usually referred to as a document and in our context this can be a sentence, a paragraph, a book chapter, etc…

- Language Identification: determining the language in which a particular input text is written.

- Spam Filtering: classifying emails into spam or not spam based on their content.

- Authorship Attribution: determining the author of a text based on its style and content (based on the assumption that each author has a unique writing style).

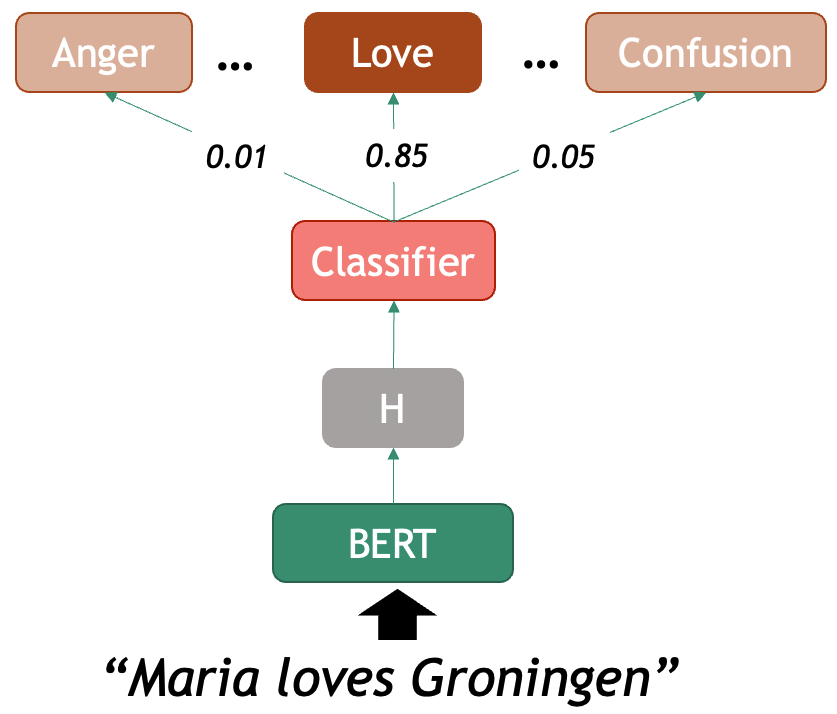

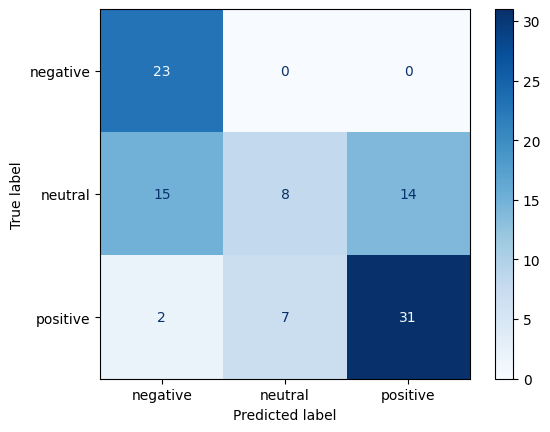

- Sentiment Analysis: classifying text into positive, negative or neutral sentiment. For example, in the sentence “I love this product!”, the model would classify it as positive sentiment.

-

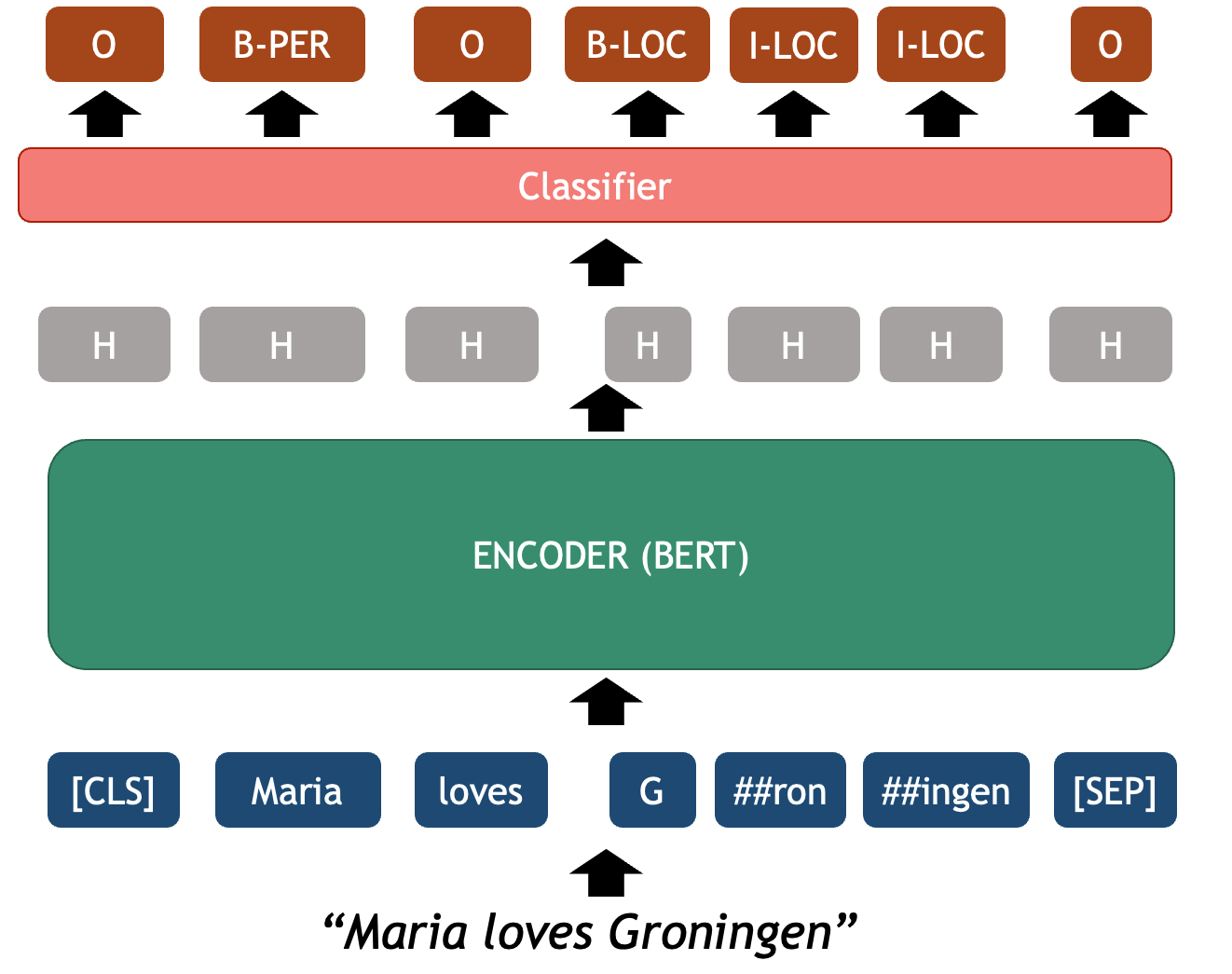

Token Classification: The task of individually assigning one label to each word in a document. This is a one-to-one mapping; however, because words do not occur in isolation and their meaning depend on the sequence of words to the left or the right of them, this is also called Word-In-Context Classification or Sequence Labeling and usually involves syntactic and semantic analysis.

- Part-Of-Speech Tagging: is the task of assigning a part-of-speech label (e.g., noun, verb, adjective) to each word in a sentence.

- Chunking: splitting a running text into “chunks” of words that together represent a meaningful unit: phrases, sentences, paragraphs, etc.

- Word Sense Disambiguation: based on the context what does a word mean (think of “book” in “I read a book.” vs “I want to book a flight.”)

- Named Entity Recognition: recognize world entities in text, e.g. Persons, Locations, Book Titles, or many others. For example “Mary Shelley” is a person, “Frankenstein or the Modern Prometheus” is a book, etc.

- Semantic Role Labeling: the task of finding out “Who did what to whom?” in a sentence: information from events such as agents, participants, circumstances, subject-verb-object triples etc.

- Relation Extraction: the task of identifying named relationships between entities in a text, e.g. “Apple is based in California” has the relation (Apple, based_in, California).

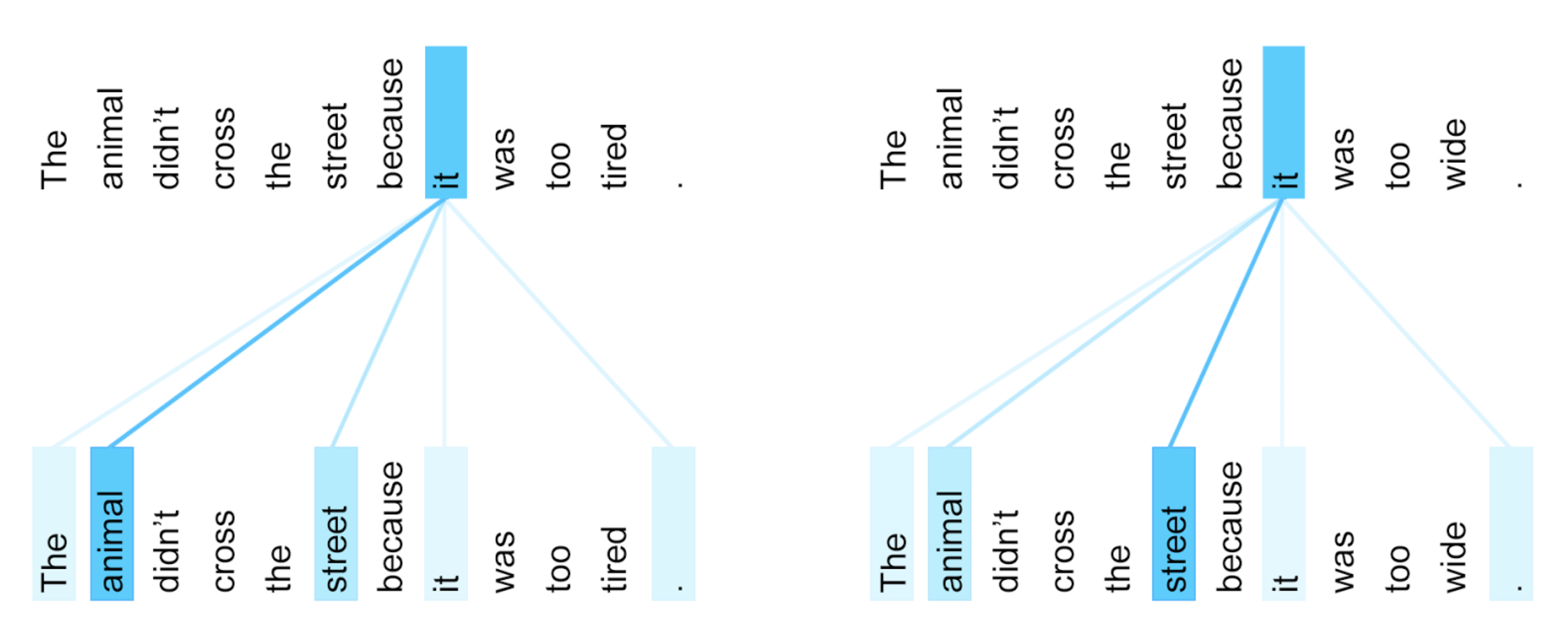

- Co-reference Resolution: the task of determining which words refer to the same entity in a text, e.g. “Mary is a doctor. She works at the hospital.” Here “She” refers to “Mary”.

- Entity Linking: the task of disambiguation of named entities in a text, linking them to their corresponding entries in a knowledge base, e.g. Mary Shelley’s biography in Wikipedia.

-

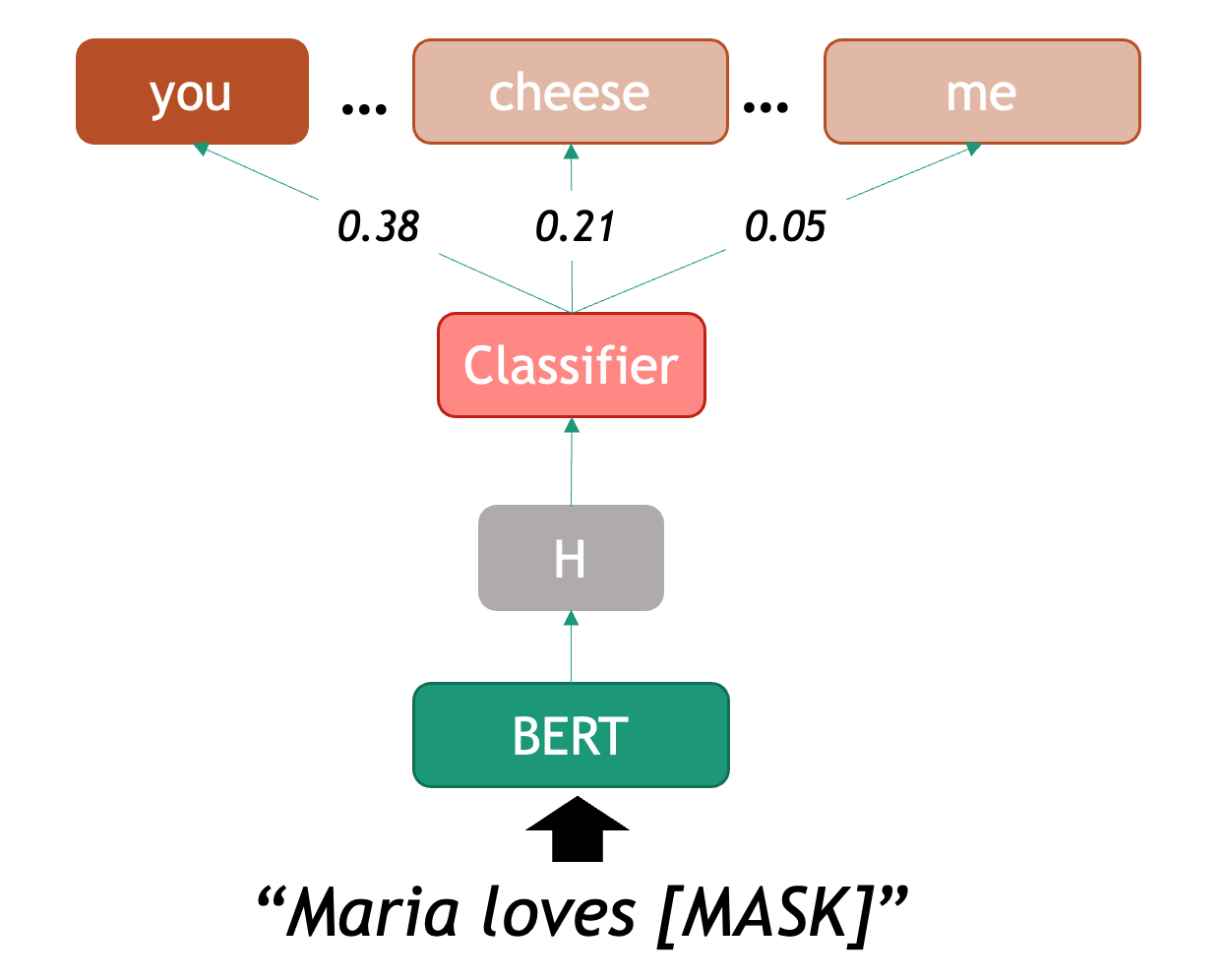

Language Modeling: Given a sequence of words, the model predicts the next word. For example, in the sentence “The capital of France is _____”, the model should predict “Paris” based on the context. This task was initially useful for building solutions that require speech and optical character recognition (even handwriting), language translation and spelling correction. Nowadays this has scaled up to the LLMs that we know. A byproduct of pre-trained Language Modeling is the vectorized representation of texts which allows to perform specific tasks such as:

- Text Similarity: The task of determining how similar two pieces of text are.

- Plagiarism detection: determining whether a piece of text, B, is close enough to another known piece of text, A, which increases the likelihood that it was plagiarized.

- Document clustering: grouping similar texts together based on their content.

- Topic modelling: a specific instance of clustering, here we automatically identify abstract “topics” that occur in a set of documents, where each topic is represented as a cluster of words that frequently appear together.

- Information Retrieval: this is the task of finding relevant information or documents from a large collection of unstructured data based on user’s query, e.g., “What’s the best restaurant near me?”.

-

Text Generation: the task of generating text based on a given input. This is usually done by generating the output word by word, conditioned on both the input and the output so far. The difference with Language Modeling is that for generation there are higher-level generation objectives such as:

- Machine Translation: translating text from one language to another, e.g., “Hello” in English to “Que tal” in Spanish.

- Summarization: generating a concise summary of a longer text. It can be abstractive (generating new sentences that capture the main ideas of the original text) but also extractive (selecting important sentences from the original text).

- Paraphrasing: generating a new sentence that conveys the same meaning as the original sentence, e.g., “The cat is on the mat.” to “The mat has a cat on it.”.

- Question Answering: given a question and a context, the model generates an answer. For example, given the question “What is the capital of France?” and the Wikipedia article about France as the context, the model should answer “Paris”. This task can be approached as a text classification problem (where the answer is one of the predefined options) or as a generative task (where the model generates the answer from scratch).

- Conversational Agent (ChatBot): Building a system that interacts with a user via natural language, e.g., “What’s the weather today, Siri?”. These agents are widely used to improve user experience in customer service, personal assistance and many other domains.

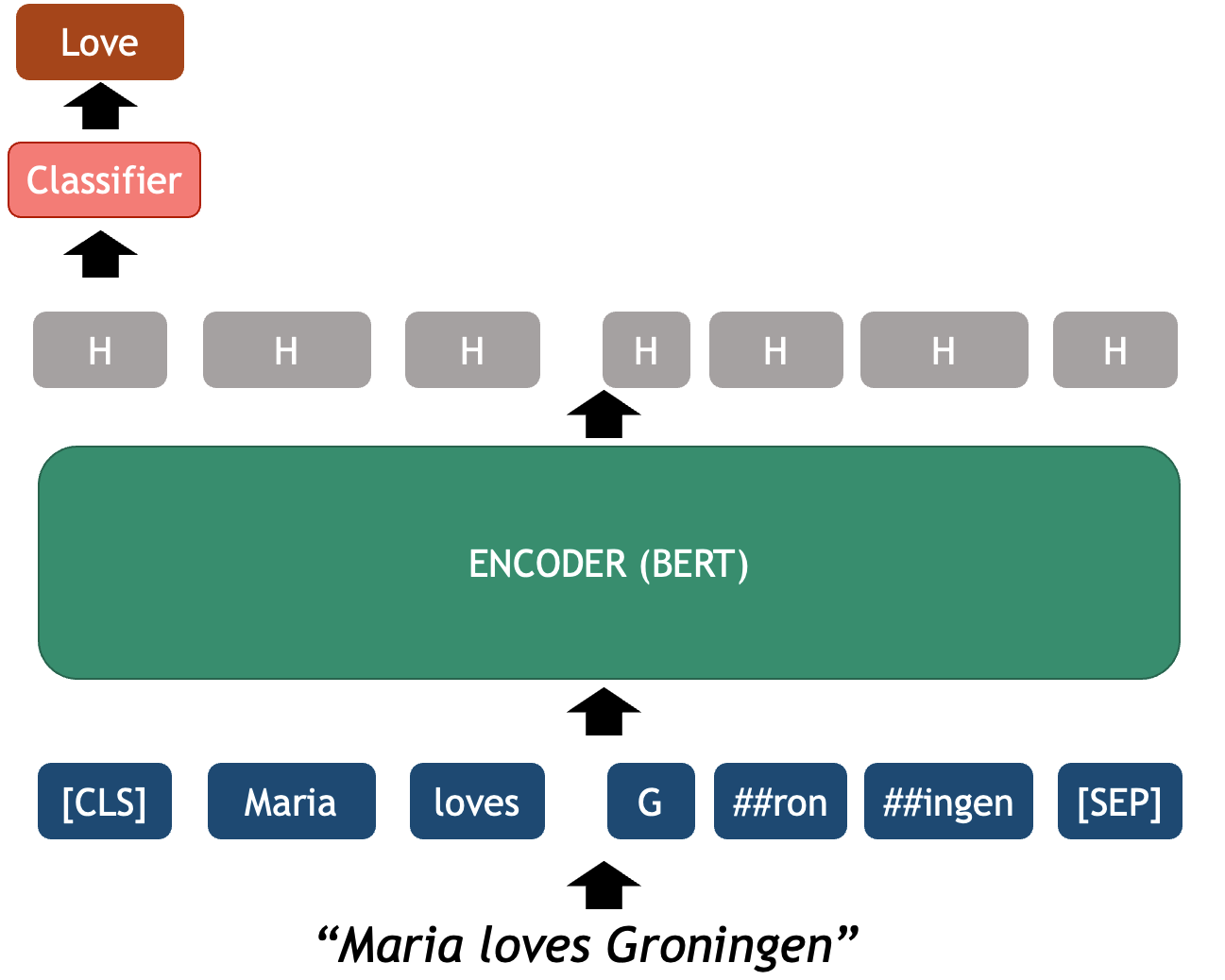

For the purposes of this episode, we will focus on supervised learning tasks and we will emphasize how the Transformer architecture is used to tackle some of them.

Inputs and Outputs

Look at the NLP Task taxonomy described above and write down a couple of examples of (Input, Output) instance pairs that you would need in order to train a supervised model for your chosen task.

For Example: the task of labeling an E-mail as spam or not-spam

Label_Set: [SPAM, NO-SPAM]

Training Instances:

Input: “Dear Sir, you’ve been awarded a grant of 10 million Euros and it is only available today. Please contact me ASAP!” Output: SPAM

Input: “Dear Madam, as agreed by phone here is the sales report for last month.” Output: NO-SPAM

Example B: the task of Conversational agent. Here are 3 instances to provide supervision for a model:

Label_Set: Output vocabulary. This is: learning to generate token by token a coherent response that addresses the input question.

Input: “Hello, how are you?” Output: “I am fine thanks!”

Input: “Do you know at what time is the World Cup final today?” Output: “Yes, the World Cup final will be at 6pm CET”

Input: “What color is my shirt?” Output: “Sorry, I am unable to see what you are wearing.”

A Primer on Linguistics

Natural language exhibits a set of properties that make it more challenging to process than other types of data such as tables, spreadsheets or time series. Language is hard to process because it is compositional, ambiguous, discrete and sparse.

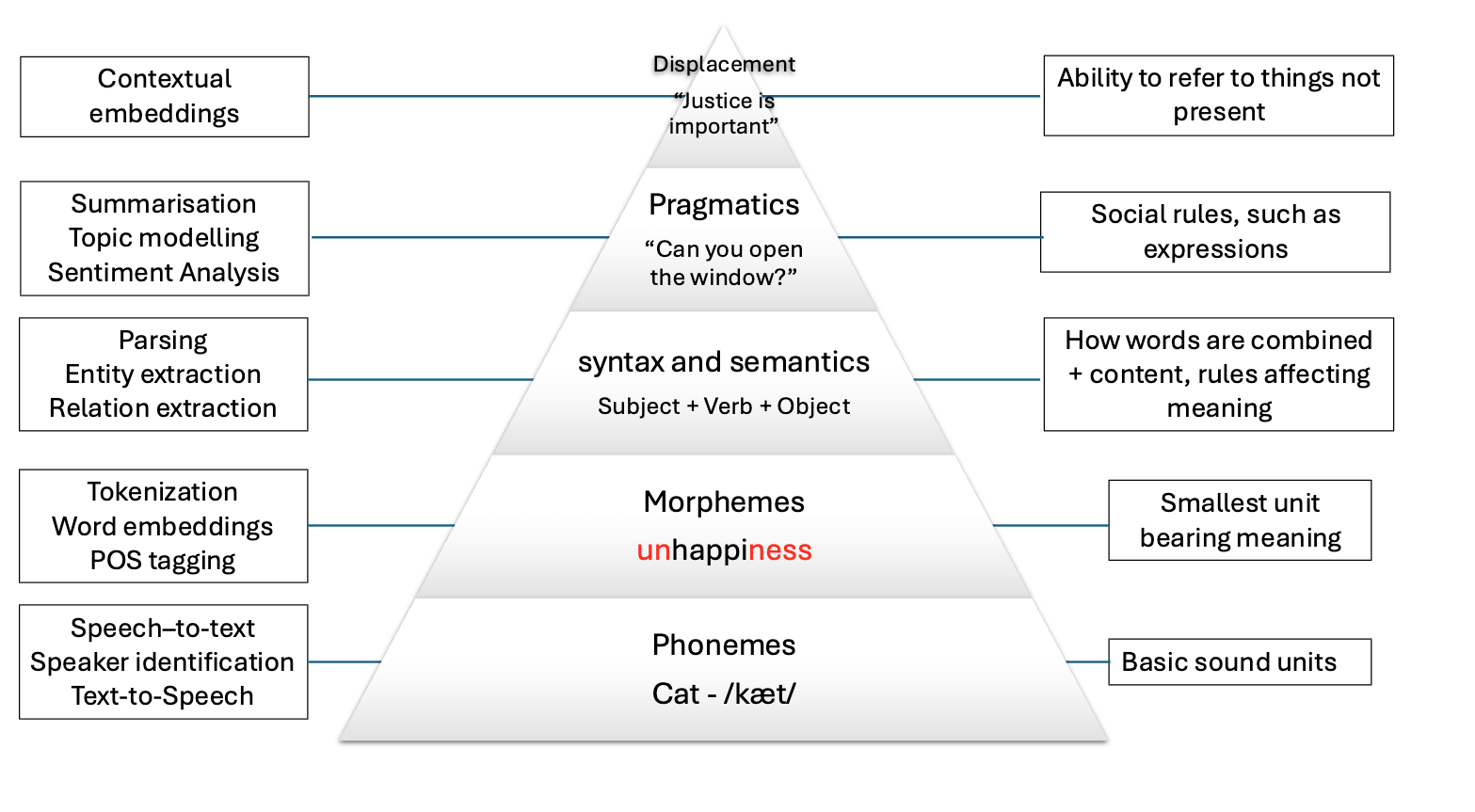

Compositionality

The basic elements of written languages are characters, a sequence of characters form words, and words in turn denote objects, concepts, events, actions and ideas (Goldberg, 2016). Subsequently words form phrases and sentences which are used in communication and depend on the context in which they are used. We as humans derive the meaning of utterances from interpreting contextual information that is present at different levels at the same time:

The first two levels refer to spoken language only, and the other four levels are present in both speech and text. Because in principle machines do not have access to the same levels of information that we do (they can only have independent audio, textual or visual inputs), we need to come up with clever methods to overcome this significant limitation. Knowing the levels of language is important so we consider what kind of problems we are facing when attempting to solve our NLP task at hand.

Ambiguity

The disambiguation of meaning is usually a by-product of the context in which utterances are expressed and also the historic accumulation of interactions which are transmitted across generations (think for instance to idioms – these are usually meaningless phrases that acquire meaning only if situated within their historical and societal context). These characteristics make NLP a particularly challenging field to work in.

We cannot expect a machine to process human language and simply understand it as it is. We need a systematic, scientific approach to deal with it. It’s within this premise that the field of NLP is born, primarily interested in converting the building blocks of human/natural language into something that a machine can understand.

The image below shows how the levels of language relate to a few NLP applications:

Levels of ambiguity

Discuss what the following sentences mean. What level of ambiguity do they represent?:

“The door is unlockable from the inside.” vs “Unfortunately, the cabinet is unlockable, so we can’t secure it”

“I saw the cat with the stripes” vs “I saw the cat with the telescope”

“Please don’t drive the cat to the vet!” vs “Please don’t drive the car tomorrow!”

“I never said she stole my money.” (re-write this sentence multiple times and each time emphasize a different word in uppercases).

This is why the previous statements were difficult:

- “Un-lockable vs Unlock-able” is a Morphological ambiguity: Same word form, two possible meanings

- “I saw the cat with the telescope” has a Syntactic ambiguity: Same sentence structure, different properties

- “drive the cat” vs “drive the car” shows a Semantic ambiguity: Syntactically identical sentences that imply quite different actions.

- “I NEVER said she stole MY money.” is a Pragmatic ambiguity: Meaning relies on word emphasis

Whenever you are solving a specific task, you should ask yourself what kind of ambiguity can affect your results, and to what degrees? At what level are your assumptions operating when defining your research questions? Having the answers to this can save you a lot of time when debugging your models. Sometimes the most innocent assumptions (for example using the wrong tokenizer) can create enormous performance drops even when the higher level assumptions were correct.

Sparsity

Another key property of linguistic data is its sparsity. This means that if we are hunting for a specific phenomenon, we may often realize it barely occurs inside a vast amount of text. Imagine we have the following brief text and we are interested in pizzas and hamburgers:

PYTHON

# A mini-corpus where our target words appear

text = """

I am hungry. Should I eat delicious pizza?

Or maybe I should eat a juicy hamburger instead.

Many people like to eat pizza because is tasty, they think pizza is delicious as hell!

My friend prefers to eat a hamburger and I agree with him.

We will drive our car to the restaurant to get the succulent hamburger.

Right now, our cat sleeps on the mat so we won't take him.

I did not wash my car, but at least the car has gasoline.

Perhaps when we come back we will take out the cat for a walk.

The cat will be happy then.

"""We can first use spaCy to tokenize the text and do some direct word count:

PYTHON

import spacy

nlp = spacy.load("en_core_web_sm")

doc = nlp(text)

words = [token.lower_ for token in doc if token.is_alpha] # Filter out punctuation and new lines

print(words)

print(len(words))We have in total 104 words, but we actually want to know how many times each word appears. For that we use the Python Counter and then we can visualize it inside a chart with matplotlib:

PYTHON

from collections import Counter

import matplotlib.pyplot as plt

word_count = Counter(words).most_common()

tokens = [item[0] for item in word_count]

frequencies = [item[1] for item in word_count]

plt.figure(figsize=(18, 6))

plt.bar(tokens, frequencies)

plt.xticks(rotation=90)

plt.show()This bar chart shows us several things about sparsity, even with such a small text:

The most common words are filler words such as “the”, “of”, “not” etc. These are known as stopwords because such words by themselves generally do not hold a lot of information about the meaning of the piece of text.

The two concepts (hamburger and pizza) we are interested in, appear only 3 times each, out of 104 words (comprising only ~3% of our corpus). This number only goes lower as the corpus size increases

There is a long tail in the distribution, where actually a lot of meaningful words are located.

Stop Words

Stop words are extremely frequent syntactic filler words that do not provide relevant semantic information for our use case. For some use cases it is better to ignore them in order to fight the sparsity phenomenon. However, consider that in many other use cases the syntactic information that stop words provide is crucial to solve the task.

SpaCy has a pre-defined list of stopwords per language. To explicitly load the English stop words we can do:

PYTHON

from spacy.lang.en.stop_words import STOP_WORDS

print(STOP_WORDS) # a set of common stopwords

print(len(STOP_WORDS)) # There are 326 words considered in this listYou can also manually extend the list of stop words if you are interested in ignoring other unlisted terms that you encounter in your data.

Alternatively, you can filter out stop words when iterating your tokens (remember the spaCy token properties!) like this:

Sparsity is closely related to what is frequently called domain-specific data. The discourse context in which language is used varies importantly across disciplines (domains). Take for example law texts and medical texts which are typically filled with domain-specific jargon. We should expect the top part of the distribution to contain mostly the same words as they tend to be stop words. But once we remove the stop words, the top of the distribution will contain very different content words.

Also, the meaning of concepts described in each domain might significantly differ. For example the word “trial” refers to a procedure for examining evidence in court, but in the medical domain this could refer to a clinical “trial” which is a procedure to test the efficacy and safety of treatments on patients. For this reason there are specialized models and corpora that model language use in specific domains. The concept of fine-tuning a general purpose model with domain-specific data is also popular, even when using LLMs.

Discreteness

There is no inherent relationship between the form of a word and its meaning. For this reason, by syntactic or lexical analysis alone, there is no automatic way of knowing if two words are similar in meaning or how they relate semantically to each other. For example, “car” and “cat” appear to be very closely related at the morphological level, only one letter needs to change to convert one word into the other. But the two words represent concepts or entities in the world which are very different. Conversely, “pizza” and “hamburger” look very different (they only share one letter in common) but are more closely related semantically, because they both refer to typical fast foods.

How can we automatically know that “pizza” and “hamburger” share more semantic properties than “car” and “cat”? One way is by looking at the context (neighboring words) of these words. This idea is the principle behind distributional semantics, and aims to look at the statistical properties of language, such as word co-occurrences (what words are typically located nearby a given word in a given corpus of text), to understand how words relate to each other.

Let’s keep using the list of words from our mini corpus:

Now we will create a dictionary where we accumulate the words that appear around our words of interest. In this case we want to find out, according to our corpus, the most frequent words that occur around pizza, hamburger, car and cat:

PYTHON

target_words = ["pizza", "hamburger", "car", "cat"] # words we want to analyze

co_occurrence = {word: [] for word in target_words}

co_occurrenceWe iterate over each word in our corpus, collecting its surrounding

words within a defined window. A window consists of a set number of

words to the left and right of the target word, as determined by the

window_size parameter. For example, with window_size = 3, a

word W has a window of six neighboring words—three

preceding and three following—excluding W itself:

PYTHON

window_size = 3 # How many words to look at on each side

for i, word in enumerate(words):

# If the current word is one of our target words...

if word in target_words:

start = max(0, i - window_size) # get the start index of the window

end = min(len(words), i + 1 + window_size) # get the end index of the window

context = words[start:i] + words[i+1:end] # Exclude the target word itself

co_occurrence[word].extend(context)

print(co_occurrence)We call the words that fall inside this window the

context of a target word. We can already see other

interesting related words in the context of each target word, but a lot

of non interesting stuff is in there. To obtain even nicer results, we

can delete the stop words from the context window before adding it to

the dictionary. You can define your own stop words, here we use the

STOP_WORDS list provided by spaCy:

PYTHON

from spacy.lang.en.stop_words import STOP_WORDS

co_occurrence = {word: [] for word in target_words} # Empty the dictionary

window_size = 3 # How many words to look at on each side

for i, word in enumerate(words):

# If the current word is one of our target words...

if word in target_words:

start = max(0, i - window_size) # get the start index of the window

end = min(len(words), i + 1 + window_size) # get the end index of the window

context = words[start:i] + words[i+1:end] # Exclude the target word itself

context = [w for w in context if w not in STOP_WORDS] # Filter out stop words

co_occurrence[word].extend(context)

print(co_occurrence)Our dictionary keys represent each word of interest, and the values are a list of the words that occur within window_size distance of the word. Now we use a Counter to get the most common items:

PYTHON

# Print the most common context words for each target word

print("Contextual Fingerprints:\n")

for word, context_list in co_occurrence.items():

# We use Counter to get a frequency count of context words

fingerprint = Counter(context_list).most_common(5)

print(f"'{word}': {fingerprint}")OUTPUT

Contextual Fingerprints:

'pizza': [('eat', 2), ('delicious', 2), ('tasty', 2), ('maybe', 1), ('like', 1)]

'hamburger': [('eat', 2), ('juicy', 1), ('instead', 1), ('people', 1), ('agree', 1)]

'car': [('drive', 1), ('restaurant', 1), ('wash', 1), ('gasoline', 1)]

'cat': [('walk', 2), ('right', 1), ('sleeps', 1), ('happy', 1)]As our mini experiment demonstrates, discreteness can be combatted with statistical co-occurrence: words with similar meaning will occur around similar concepts, giving us an idea of similarity that has nothing to do with syntactic or lexical form of words. This is the core idea behind most modern semantic representation models in NLP.

NLP Libraries

Related to the need of shaping our problems into a known task, there

are several existing NLP libraries which provide a wide range of models

that we can use out-of-the-box (without further need of modification).

We already saw simple examples using spaCy for English and

MicroTokenizer for Chinese. Again, as a non-exhaustive

list, we mention some widely used NLP libraries in Python:

Linguistic Resources

There are also several curated resources (textual data) that can help solve your NLP-related tasks, specifically when you need highly specialized definitions. An exhaustive list would be impossible as there are thousands of them, and also them being language and domain dependent. Below we mention some of the most prominent, just to give you an idea of the kind of resources you can find, so you don’t need to reinvent the wheel every time you start a project:

- HuggingFace Datasets: A large collection of datasets for NLP tasks, including text classification, question answering, and language modeling.

- WordNet: A large lexical database of English, where words are grouped into sets of synonyms (synsets) and linked by semantic relations.

- Europarl: A parallel corpus of the proceedings of the European Parliament, available in 21 languages, which can be used for machine translation and cross-lingual NLP tasks.

- Universal Dependencies: A collection of syntactically annotated treebanks across 100+ languages, providing a consistent annotation scheme for syntactic and morphological properties of words, which can be used for cross-lingual NLP tasks.

- PropBank: A corpus of texts annotated with information about basic semantic propositions, which can be used for English semantic tasks.

- FrameNet: A lexical resource that provides information about the semantic frames that underlie the meanings of words (mainly verbs and nouns), including their roles and relations.

- BabelNet: A multilingual lexical resource that combines WordNet and Wikipedia, providing a large number of concepts and their relations in multiple languages.

- Wikidata: A free and open knowledge base initially derived from Wikipedia, that contains structured data about entities, their properties and relations, which can be used to enrich NLP applications.

- Dolma: An open dataset of 3 trillion tokens from a diverse mix of clean web content, academic publications, code, books, and encyclopedic materials, used to train English large language models.

What did we learn in this lesson?

NLP is a subfield of Artificial Intelligence (AI) that, using the help of Linguistics, deals with approaches to process, understand and generate natural language

Linguistic Data has special properties that we should consider when modeling our solutions

Key tasks include language modeling, text classification, token classification and text generation

Deep learning has significantly advanced NLP, but the challenge remains in processing the discrete and ambiguous nature of language

The ultimate goal of NLP is to enable machines to understand and process language as humans do

Content from From words to vectors

Last updated on 2025-12-01 | Edit this page

Overview

Questions

- Why do we need to preprocess text in NLP?

- What are the most common preprocessing operations and in which contexts should each be used?

- What properties do word embeddings have?

- What is a word2vec model?

- What insights can I get from word embeddings?

- How do I train my own word2vec model?

Objectives

After following this lesson, learners will be able to:

- Perform basic NLP preprocessing operations

- Implement a basic NLP Pipeline

- Explain the motivation for vectorization in modern NLP

- Train a custom Word2Vec model using the Gensim library

- Apply a Word2Vec model to interpret and analyze semantics of text (either a pre-trained model or custom model)

- Describe the kinds of semantic relationships captured by Word2Vec, and identify NLP tasks it is suited to support

- Explain, with examples, what the limitations are for the Word2Vec representation

Introduction

In this episode, we will learn about the importance of preprocessing text in NLP, and how to apply common preprocessing operations to text files. We will also learn more about NLP Pipelines, learn about their basic components and how to construct such pipelines.

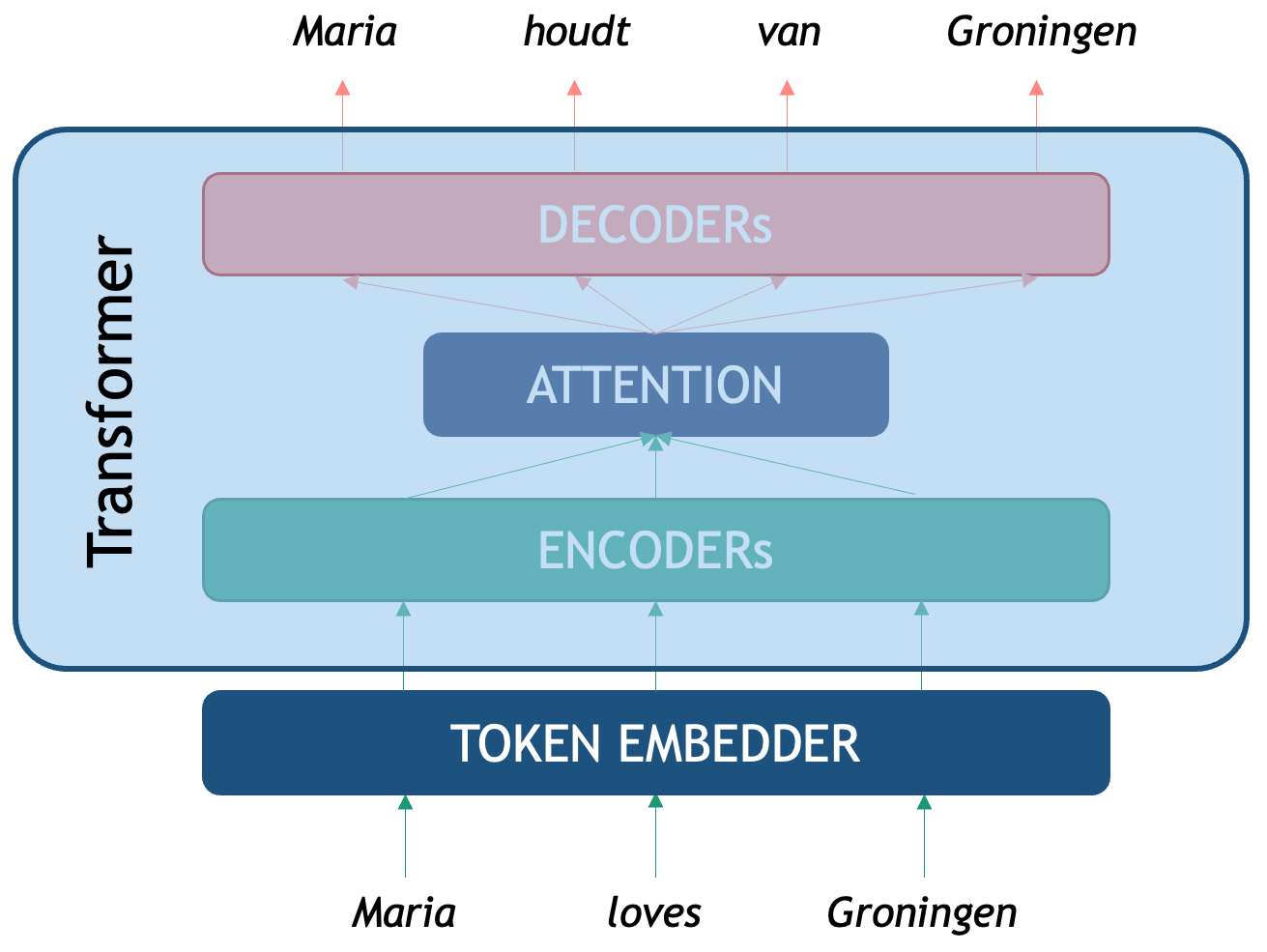

We will then address the transition from rule-based NLP to distributional semantics approaches which encode text into numerical representations based on statistical relationships between tokens. We will introduce one particular algorithm for this kind of encoding called Word2Vec proposed in 2013 by Mikolov et al. We will show what kind of useful semantic relationships these representations encode in text, and how we can use them to solve specific NLP tasks. We will also discuss some of the limitations of Word2Vec which are addressed in the next lesson on transformers before concluding with a summary of what we covered in this lesson.

Preprocessing Operations

As is common in data science and machine learning, raw textual data often comes in a form that is not readily suitable for downstream NLP tasks. Preprocessing operations in NLP are analogous to the data cleaning and sanitation steps in traditional non-NLP Machine Learning tasks. Sometimes you are extracting text from PDF files which contain line breaks, headers, tables etc. that are not relevant to NLP tasks and which need to be removed. You may need to remove punctuation and special characters, or lowercase text for some NLP tasks etc. Whether you need to perform certain preprocessing operations, and the order in which you should perform them, will depend on the NLP task at hand.

Also note that preprocessing can differ significantly if you work with different languages. This is both in terms of which steps to apply, but also which methods to use for a specific step.

Here we will analyze with more detail the most common pre-processing steps when dealing with unstructured English text data:

Data Formatting

Text comes from various sources and are available in different formats (e.g., Microsoft Word documents, PDF documents, ePub files, plain text files, Web pages etc…). The first step is to obtain a clean text representation that can be transferred into python UTF-8 strings that our scripts can manipulate.

Take a look at the

data/84_frankenstein_or_the_modern_prometheus.txt file:

PYTHON

filename = "data/84_frankenstein_or_the_modern_prometheus.txt"

with open(filename, 'r', encoding='utf-8') as file:

text = file.read()

print(text[:300]) # print the first 300 charactersOur file is already in plain text so it might seem we do not need to do anything; however, if we look closer we see new line characters separating not only paragraphs but breaking the lines in the middle of sentences. While this is useful to keep the text in a narrow space to help the human reader, it introduces artificial breaks that can confuse any automatic analysis (for example to identify where sentences start and end).

One straightforward way is to replace the new lines with spaces so all the text is in a single line:

Other data formatting operations might include: - Removal of special or noisy characters. For example:

SH

- Random symbols: "The total cost is $120.00#" → remove #

- Incorrectly recognized letters or numbers: 1 misread as l, 0 as O, etc. Example: "l0ve" → should be "love"

- Control or formatting characters: \n, \t, \r appearing in the middle of sentences. Example: "Please\nsubmit\tyour form." → "Please submit your form."

- Non-standard Unicode characters: �, �, or other placeholder symbols where OCR failed. Example: "Th� quick brown fox" → "The quick brown fox"- Remove HTML tags (e.g., if you are extracting text from Web pages)

- Strip non-meaningful punctuation (e.g., “The quick brown fox jumps over the lazy dog and con- tinues to run across the field.)

- Strip footnotes, headers, tables, images etc.

- Remove URLs or phone numbers

What if I need to extract text from MS Word docs or PDF files or Web pages There are various Python libraries for helping you extract and manipulate text from these kinds of sources.

- For MS Word documents python-docx is popular.

- For (text-based) PDF files PyPDF2 and PyMuPDF are widely used. Note that some PDF files are encoded as images (pixels) and not text. If the text in these files is digital (as opposed to scanned handwriting), you can use OCR (Optical Character Recognition) libraries such as pytesseract to convert the image to machine-readable text.

- For scraping text from websites, BeautifulSoup and Scrapy are some common options.

- LLMs also have something to offer here, and the field is moving pretty fast. There are some interesting open source LLM-based document parsers and OCR-like extractors such as Marker, or PyMuPDF4LLM, just to mention a couple.

Another important choice at the data formatting level is to decide at what granularity do you need to perform the NLP task:

- Are you analyzing phenomena at the word level? For example, detecting abusive language (based on a known vocabulary).

- Do you need to first extract sentences from the text and do analysis at the sentence level? For example, extracting entities in each sentence.

- Do you need full chunks of text? (e.g. paragraphs or chapters?) For example, summarizing each paragraph in a document.

- Or perhaps you want to extract patterns at the document level? For example each full book should have one genre tag (Romance, History, Poetry).

Sometimes your data will be already available at the desired granularity level. If this is not the case, then during the tokenization step you will need to figure out how to obtain the desired granularity level.

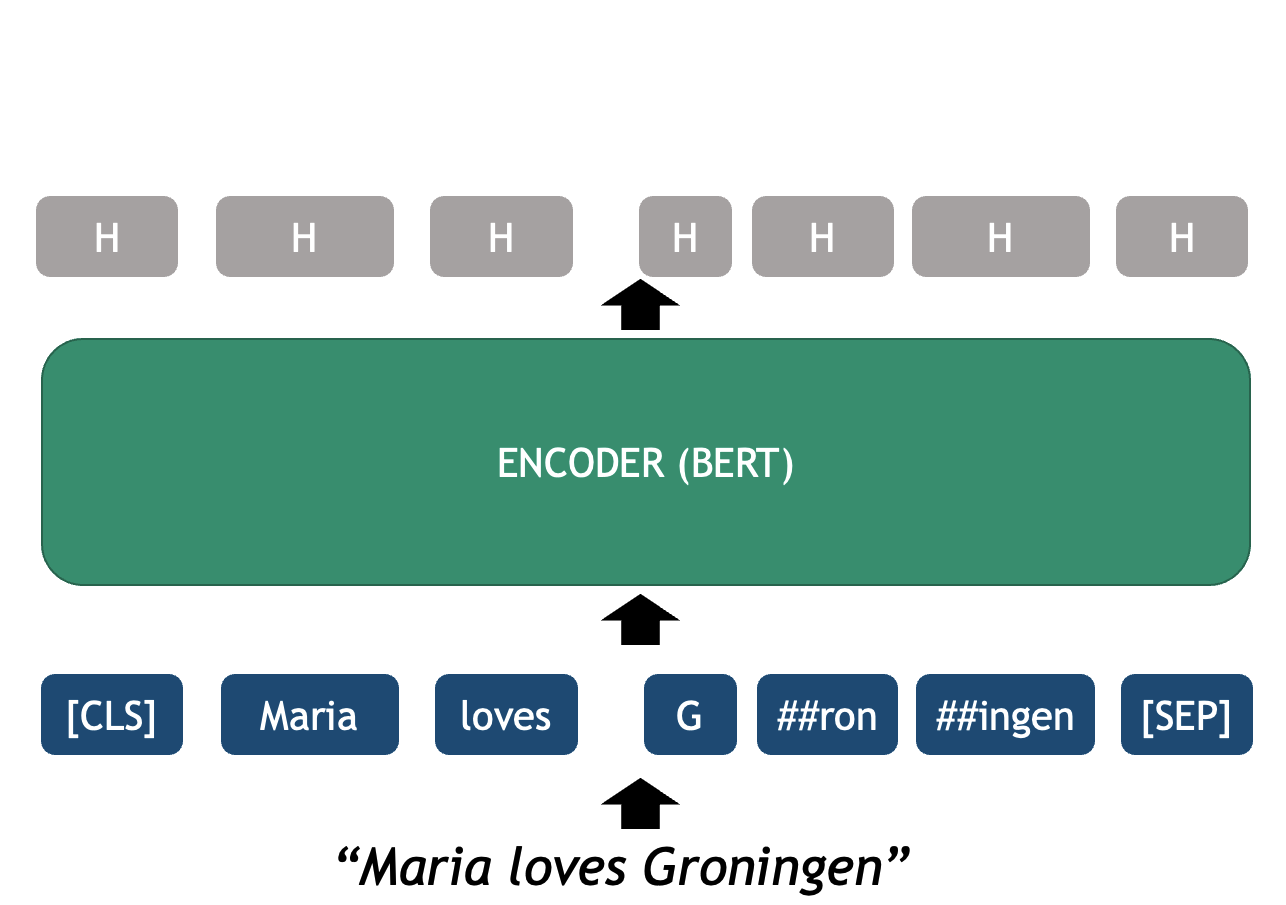

Tokenization

Tokenization is a foundational operation in NLP, as it helps to

create structure from raw text. This structure is a basic requirement

and input for modern NLP algorithms to attribute and interpret meaning

from text. This operation involves the segmentation of the text into

smaller units referred to as tokens. Tokens can be

sentences (e.g. 'the happy cat'), words

('the', 'happy', 'cat'), subwords

('un', 'happiness') or characters

('c','a', 't'). Different NLP algorithms may require

different choices for the token unit. And different languages may

require different approaches to identify or segment these tokens.

Python strings are by definition sequences of characters, thus we can iterate through the string character by character:

However, it is often more advantageous if our atomic units are words. As we saw in Lesson 1, the task of extracting word tokens from texts is not trivial, therefore pre-trained models such as spaCy can help with this step. In this case we will use the small English model that was trained on a web corpus:

PYTHON

import spacy

nlp = spacy.load("en_core_web_sm")

doc = nlp(text)

print(type(doc)) # Should be <class 'spacy.tokens.doc.Doc'>

print(len(doc))

print(doc)A good word tokenizer for example, does not simply break up a text based on spaces and punctuation, but it should be able to distinguish:

- abbreviations that include points (e.g.: e.g.)

- times (11:15) and dates written in various formats (01/01/2024 or 01-01-2024)

- word contractions such as don’t, these should be split into do and n’t

- URLs

Many older tokenizers are rule-based, meaning that they iterate over a number of predefined rules to split the text into tokens, which is useful for splitting text into word tokens for example. Modern large language models use subword tokenization, which learn to break text into pieces that are statistically convenient, this makes them more flexible but less human-readable.

We can access the tokens by iterating the document and getting its

.text property:

OUTPUT

['Letter', '1', '\n\n\n', 'St.', 'Petersburgh', ',', 'Dec.', '11th', ',', '17', '-', '-', '\n\n', 'TO', 'Mrs.']This shows us the individual tokens, including new lines and punctuation (in case we didn’t run the previous cleaning step). spaCy allows us to filter based on token properties. For example, assuming we are not interested in the newlines, punctuation nor in numeric tokens, in one single step we can keep only the token objects that contain alphabetical characters:

OUTPUT

[Letter, Petersburgh, TO, Saville, England, You, will, rejoice, to, hear, that, no, disaster, has, accompanied]We do not have to depend necessarily on the Doc and

Token spaCy objects. Once we tokenized the text with the

spaCy model, we can extract the list of words as a list of strings and

continue our text analysis:

Again, it all depends on what your requirements are. For example, sometimes it is more useful if our atomic units are sentences. Think of the NLP task of classifying each whole sentence inside a text as Positive/Negative/Neutral in terms of sentiment (e.g., within movie reviews). spaCy also helps with this using a sentence segmentation model:

Note that in this case each sentence is a python object, and the

property .text returns an untokenized string (in terms of

words). But we can still access the list of word tokens inside each

sentence object if we want:

PYTHON

sents_sample = list(doc.sents)[:10]

for sent in sents_sample:

print("Sentence:", sent.text)

for token in sent:

print("\tToken:", token.text)This will give us enough flexibility to work at the sentence and word level at the same time. In terms of what we can do with these sentences once spaCy has identified them, we could ask humans to label each sentence as either Positive/Negative/Neutral and train a supervised model for sentiment classification on the set of sentences. Or if we have a pre-trained model for sentiment classification on sentences, we could load this model and classify each of our input sentences as either Positive/Negative/Neutral.

Lowercasing

Removing uppercases to e.g. avoid treating “Dog” and “dog” as two different words is also a common step, for example to train word vector representations, we want to merge both occurrences as they represent exactly the same concept. Lowercasing can be done with Python directly as:

PYTHON

lower_text = text_flat.lower()

lower_text[:100] # Beware that this is a python string operationBeware that lowercasing the whole string as a first step might affect

the tokenizer behavior since tokenization benefits from information

provided by case-sensitive strings. We can therefore tokenize first

using spaCy and then obtain the lowercase strings of each token using

the .lower_ property:

PYTHON

lower_text = [token.lower_ for token in doc]

lower_text[:10] # Beware that this is a list of strings now!In other tasks, such as Named Entity Recognition (NER), lowercasing before training can actually lower the performance of your model. This is because words that start with an uppercase (not preceded by a period) can represent proper nouns that map into Entities, for example:

PYTHON

import spacy

# Preserving uppercase characters increases the likelihood that an NER model

# will correctly identify Apple and Will as a company (ORG) and a person (PER)

# respectively.

str1 = "My next laptop will be from Apple, Will said."

# Lowercasing can reduce the likelihood of accurate labeling

str2 = "my next laptop will be from apple, will said."

nlp = spacy.load("en_core_web_sm")

ents1 = [ent.text for ent in nlp(str1).ents]

ents2 = [ent.text for ent in nlp(str2).ents]

print(ents1)

print(ents2)OUTPUT

['Apple', 'Will']

[]Lemmatization

Although it has become less widely used in modern NLP approaches, normalizing words into their dictionary form can help to focus on relevant aspects of text. Consider how “eating”, “ate”, “eaten” are all variations of the root verb “eat”. Each variation is sometimes known as an inflection of the root word. Conversely, we say that the word “eat” is the lemma for the words “eating”, “eats”, “eaten”, “ate” etc. Lemmatization is therefore the process of rewriting each token or word in a given input text as its lemma.

Lemmatization is not only a possible preprocessing step in NLP but

also an NLP task on its own, with different algorithms for it. Therefore

we also tend to use pre-trained models to perform lemmatization. Using

spaCy we can access the lemmmatized version of each token with the

lemma_ property (notice the underscore!):

Note that the list of lemmas is now a list of strings.

Having a lemmatized text allows us to merge the different surface

occurrences of the same concept into a single token. This can be very

useful for count-based NLP methods such as topic modelling approaches

which count the frequency of certain words to see how prevalent a given

topic is within a document. If you condense “eat”, “eating”, “ate”,

“eaten” to the same token “eat” then you can count four occurrences of

the same “topic” in a text, instead of treating these four tokens as

distinct or unrelated topics just because they are spelled differently.

You can also use lemmatization for generating word embeddings. For

example, you can have a single vector for eat instead of

one vector per verb tense.

As with each preprocessing operation, this step is optional. Consider, for example, the cases where the differences of verb usage according to tense is informative, or the difference between singular and plural usage of nouns, in those cases lemmatizing will get rid of important information for your task. For example, if your chosen NLP task is to detect past tense verbs from a document, then lemmatizing “eaten” into “eat” loses crucial information about tense that your model requires.

Stop Word Removal

The most frequent words in texts are those which contribute little semantic value on their own: articles (‘the’, ‘a’, ‘an’), conjunctions (‘and’, ‘or’, ‘but’), prepositions (‘on’, ‘by’), auxiliary verbs (‘is’, ‘am’), pronouns (‘he’, ‘which’), or any highly frequent word that might not be of interest in several content-only related tasks. Let’s define a small list of stop words for this specific case:

Using Python directly, we need to manually define a list of what we consider to be stop words and directly filter the tokens that match this. Notice that lemmatization was a crucial step to get more coverage with the stop word filtering:

PYTHON

lemmas = [token.lemma_ for token in doc]

content_words = []

for lemma in lemmas:

if lemma not in STOP_WORDS:

content_words.append(lemma)

print(content_words[:20])Using spaCy we can filter the stop words based on the token properties:

There is no canonical definition of stop words because what you consider to be a stop word is directly linked to the objective of your task at hand. For example, pronouns are usually considered stopwords, but if you want to do gender bias analysis then pronouns are actually a key element of your text processing pipeline. Similarly, removing articles and prepositions from text is obviously not advised if you are doing dependency parsing (the task of identifying the parts of speech in a given text).

Another special case is the word ‘not’ which may encode the semantic notion of negation. Removing such tokens can drastically change the meaning of sentences and therefore affect the accuracy of models for which negation is important to preserve (e.g., sentiment classification “this movie was NOT great” vs. “this movie was great”).

NLP Pipeline

The concept of NLP pipeline refers to the sequence of operations that we apply to our data in order to go from the original data (e.g. original raw documents) to the expected outputs of our NLP Task at hand. The components of the pipeline refer to any manipulation we apply to the text, and do not necessarily need to be complex models, they involve preprocessing operations, application of rules or machine learning models, as well as formatting the outputs in a desired way.

A simple rule-based classifier

Imagine we want to build a very lightweight sentiment classifier. A basic approach is to design the following pipeline:

- Clean the original text file (as we saw in the Data Formatting section)

- Apply a sentence segmentation or tokenization model

- Define a set of positive and negative words (a hard coded dictionary)

- For each sentence:

- If it contains one or more of the positive words, classify as

POSITIVE - If it contains one or more of the negative words, classify as

NEGATIVE - Otherwise classify as

NEUTRAL

- If it contains one or more of the positive words, classify as

- Output a table with the original sentence and the assigned label

This is implemented with the following code:

- Read the text and normalize it into a single line

PYTHON

import spacy

nlp = spacy.load("en_core_web_sm")

filename = "data/84_frankenstein_or_the_modern_prometheus.txt"

with open(filename, 'r', encoding='utf-8') as file:

text = file.read()

text = text.replace("\n", " ") # some cleaning by removing new line characters- Apply Sentence segmentation

- Define the positive and negative words you care about:

PYTHON

positive_words = ["happy", "excited", "delighted", "content", "love", "enjoyment"]

negative_words = ["unhappy", "sad", "anxious", "miserable", "fear", "horror"]- Apply the rules to each sentence and collect the labels

PYTHON

classified_sentences = []

for sent in sentences:

if any(word in sent.lower() for word in positive_words):

classified_sentences.append((sent, 'POSITIVE'))

elif any(word in sent.lower() for word in negative_words):

classified_sentences.append((sent, 'NEGATIVE'))

else:

classified_sentences.append((sent, 'NEUTRAL'))- Save the classified data

PYTHON

import pandas as pd

df = pd.DataFrame(classified_sentences, columns=['sentence', 'label'])

df.to_csv('results_naive_rule_classifier.csv', sep='\t')Challenge

Discuss the pros and cons of the proposed NLP pipeline:

- Do you think it will give accurate results?

- What do you think about the coverage of this approach? What cases will it miss?

- Think of possible drawbacks of chaining components in a pipeline.

- This classifier only considers the presence of one word to apply a label. It does not analyze sentence semantics or even syntax.

- Given how the rules are defined, if both positive and negative words

are present in the same sentence it will assign the

POSITIVElabel. It will generate a lot of false positives because of the simplistic rules - The errors from previous steps get carried over to the next steps increasing the likelihood of noisy outputs.

So far we’ve seen how to format and segment the text to have atomic data at the word level or sentence level. We then apply operations to the word and sentence strings. This approach still depends on counting and exact keyword matching. And as we have already seen it has several limitations. The method cannot interpret words outside the dictionary defined for example.

One way to combat this is by transforming each word into numeric representation and study statistical patterns in how these words are distributed in text. For example, what words tend to occur “close” to a given word in my data? For example, if we analyze restaurant menus we find that “cheese”, “mozzarella”, “base” etc. frequently occur near the token “pizza”. We can then exploit these statistical patterns to inform various NLP tasks. This concept is commonly known as distributional semantics. It is based on the assumption “words that appear in similar contexts have similar meanings.”

This concept is powerful for enabling, for example, the measurement of semantic similarity of words, sentences, phrases etc. in text. And this, in turn, can help with other downstream NLP tasks, as we shall see in the next section on word embeddings.

Word Embeddings

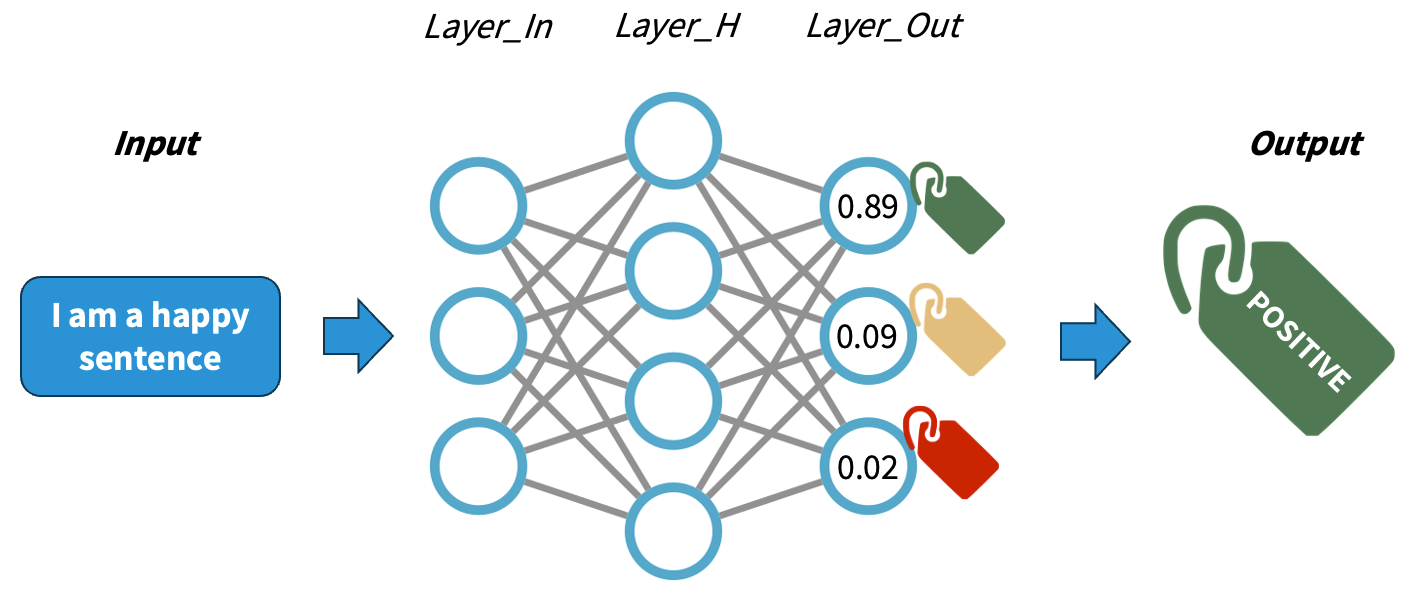

Reminder: Neural Networks

Understanding how neural networks work is out of the scope of this course. For our purposes we will simplify the explanation in order to conceptually understand how Neural Network works. A Neural Network (NN) is a pattern-finding machine with layers (a deep neural network is the same concept but scaled to dozens or even hundreds of layers). In a neural network, each layer has several interconnected neurons, each one corresponding to a random number initially. The deeper the network is, the more complex patterns it can learn. As the neural netork gets trained (that is, as it sees several labeled examples that we provide), each neuron value will be updated in order to maximize the probability of getting the answers right. A well trained neural network will be able to predict the right labels on completely new data with certain accuracy.

The main difference with traditional machine learning models is that we do not need to design explicitly any features, rather the network will adjust itself by looking at the data alone and executing the back-propagation algorithm. The main job when using NNs is to encode our data properly so it can be fed into the network.

Rationale behind Embeddings

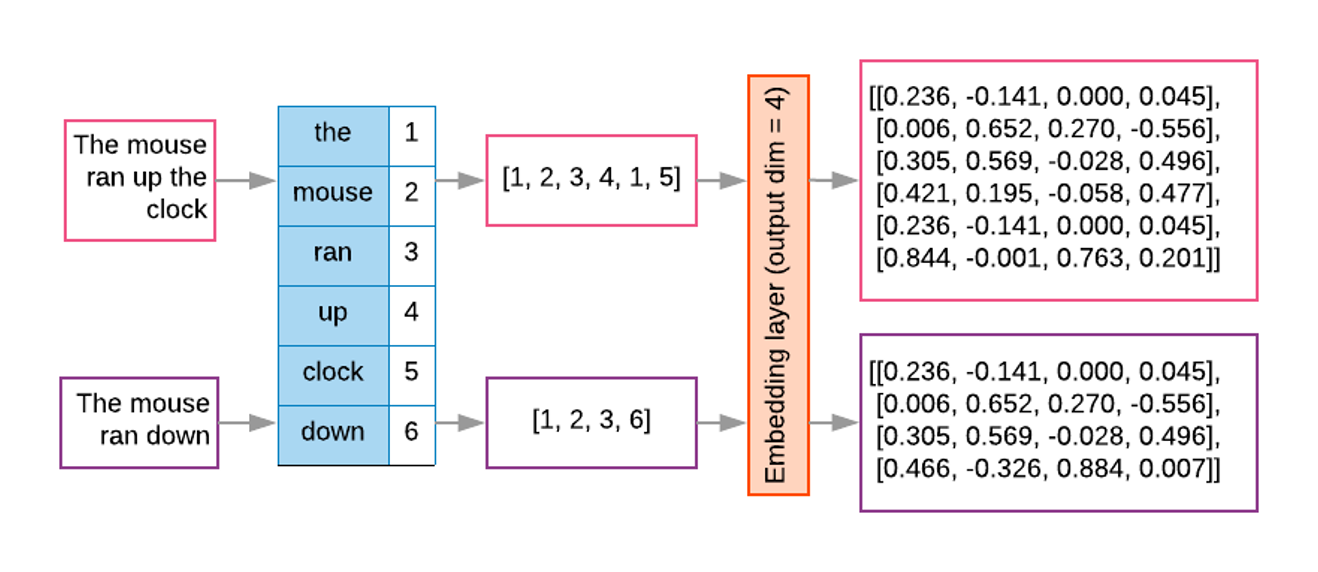

A word embedding is a numeric vector that represents a word. Word2Vec exploits the “feature agnostic” power of neural networks to transform word strings into trained word numeric representations. Hence we still use words as features but instead of using the string directly, we transform that string into its corresponding vector in the pre-trained Word2Vec model. And because both the network input and output are the words themselves in text, we basically have billions of labeled training datapoints for free.

To obtained the word embeddings, a shallow neural network is optimized with the task of language modeling and the final hidden layer inside the trained network holds the fixed size vectors whose values can be mapped into linguistic properties (since the training objective was language modeling). Since similar words occur in similar contexts, or have same characteristics, a properly trained model will learn to assign similar vectors to similar words.

By representing words with vectors, we can mathematically manipulate them through vector arithmetic and express semantic similarity in terms of vector distance. Because the size of the learned vectors is not proportional to the amount of documents we can learn the representations from larger collections of texts, obtaining more robust representations, that are less corpus-dependent.

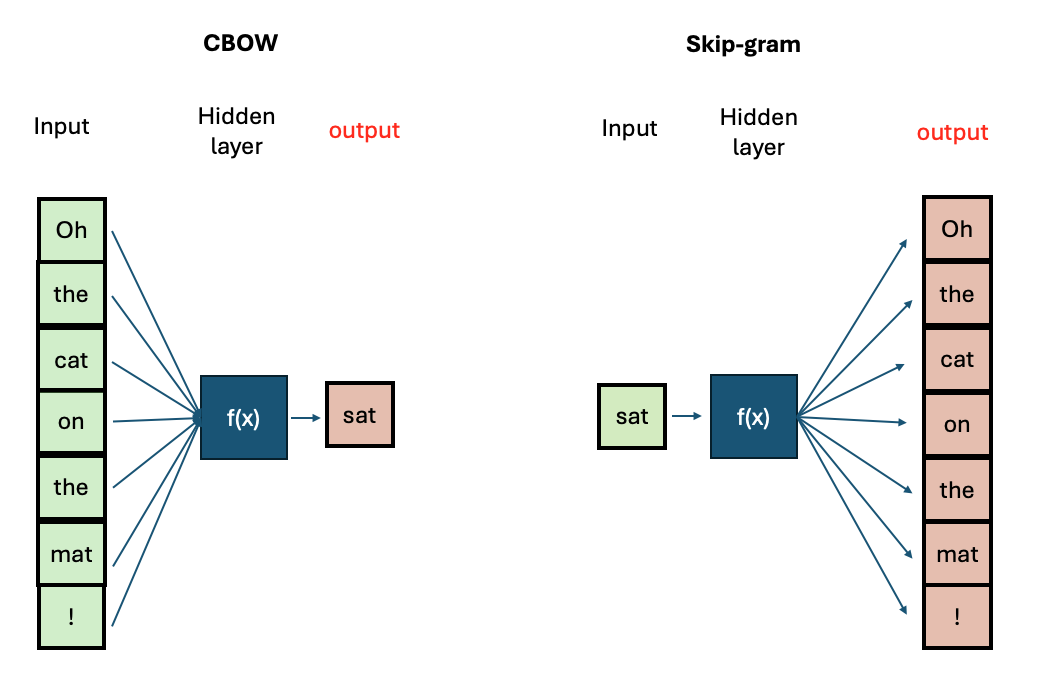

There are two main algorithms for training Word2Vec:

- Continuous Bag-of-Words (CBOW): Predicts a target word based on its surrounding context words.

- Continuous Skip-Gram: Predicts surrounding context words given a target word.

If you want to know more about the technicl aspecs of training Word2Vec you can visit this tutorial

The Word2Vec Vector Space

The python module gensim offers a user-friendly

interface to interact with pre-trained Word2vec models and also to train

our own. First we will explore the model from the original Word2Vec

paper, which was trained on a big corpus from Google News (English news

articles). We will see what functionalities are available to explore a

vector space. Then we will prepare our own text step-by-step to train

our own Word2vec models and save them.

Load the embeddings and inspect them

The library gensim has a repository with English

pre-trained models. We can take a look at the models:

PYTHON

import gensim.downloader

available_models = gensim.downloader.info()['models'].keys()

print(list(available_models))We will download the google News model with:

We can do some basic checkups such as showing how many words are in the vocabulary (i.e., for how many words do we have an available vector), what is the total number of dimensions in each vector, and print the components of a vector for a given word:

PYTHON

print(len(w2v_model.key_to_index.keys())) # 3 million words

print(w2v_model.vector_size) # 300 dimensions. This can be chosen when training your own model

print(w2v_model['car'][:10]) # The first 10 dimensions of the vector representing 'car'.

print(w2v_model['cat'][:10]) # The first 10 dimensions of the vector representing 'cat'.OUTPUT

3000000

300

[ 0.13085938 0.00842285 0.03344727 -0.05883789 0.04003906 -0.14257812

0.04931641 -0.16894531 0.20898438 0.11962891]

[ 0.0123291 0.20410156 -0.28515625 0.21679688 0.11816406 0.08300781

0.04980469 -0.00952148 0.22070312 -0.12597656]As we can see, this is a very large model with 3 million words and the dimensionality chosen at training time was 300, thus each word will have a 300-dimension vector associated with it.

Even with such a big vocabulary we can always find a word that won’t be in there:

This will throw a KeyError as the model does not know

that word. Unfortunately this is a limitation of Word2vec - unseen words

(words that were not included in the training data) cannot be

interpreted by the model.

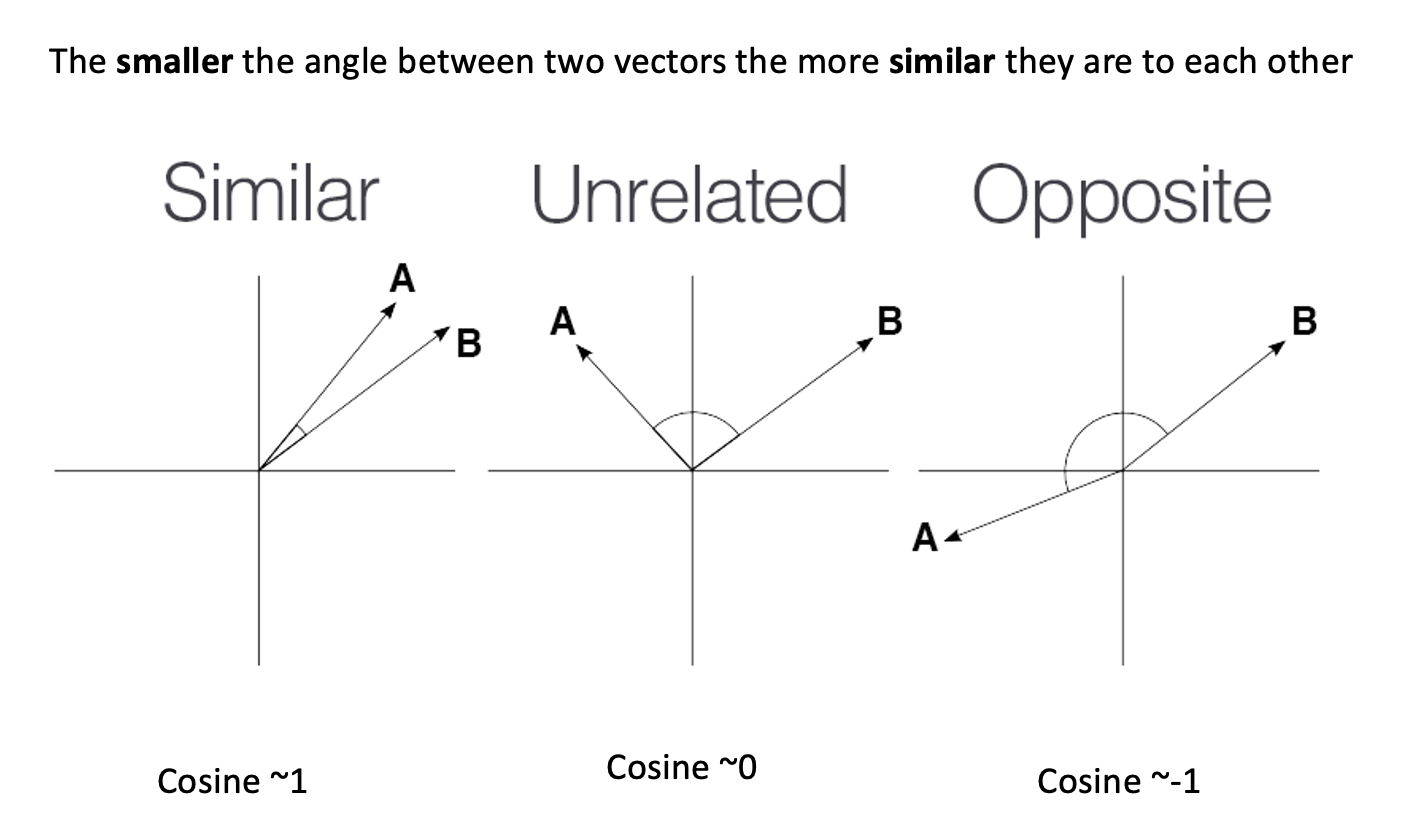

Now let’s talk about the vectors themselves. They are not easy to interpret as they are a bunch of floating point numbers. These are the weights that the network learned when optimizing for language modelling. As the vectors are hard to interpret, we rely on a mathematical method to compute how similar two vectors are. Generally speaking, the recommended metric for measuring similarity between two high-dimensional vectors is cosine similarity .

cosine

similarity ranges between [-1 and 1]. It

is the cosine of the angle between two vectors, divided by the product

of their length. Mathematically speaking, when two vectors point in

exactly the same direction their cosine will be 1, and when they point

in the opposite direction their cosine will be -1. In python we can use

Numpy to compute the cosine similarity of vectors.

We can use sklearn learn to measure any pair of

high-dimensional vectors:

PYTHON

from sklearn.metrics.pairwise import cosine_similarity

car_vector = w2v_model['car']

cat_vector = w2v_model['cat']

similarity = cosine_similarity([car_vector], [cat_vector])

print(f"Cosine similarity between 'car' and 'cat': {similarity[0][0]}")

similarity = cosine_similarity([w2v_model['hamburger']], [w2v_model['pizza']])

print(f"Cosine similarity between 'hamburger' and 'pizza': {similarity[0][0]}")PYTHON

Cosine similarity between 'car' and 'cat': 0.21528185904026031

Cosine similarity between 'hamburger' and 'pizza': 0.6153676509857178Or you can use directly the

w2v_model.similarity('car', 'cat') function which gives the

same result.

The higher similarity score between the hamburger and pizza indicates they are more similar based on the contexts where they appear in the training data. Even though is hard to read all the floating numbers in the vectors, we can trust this metric to always give us a hint of which words are semantically closer than others

Challenge

Think of different word pairs and try to guess how close or distant they will be from each other. Use the similarity measure from the word2vec module to compute the metric and discuss if this fits your expectations. If not, can you come up with a reason why this was not the case?

Some interesting cases include synonyms, antonyms and morphologically related words:

PYTHON

print(w2v_model.similarity('democracy', 'democratic'))

print(w2v_model.similarity('queen', 'princess'))

print(w2v_model.similarity('love', 'hate')) #!! (think of "I love X" and "I hate X")

print(w2v_model.similarity('love', 'lover'))OUTPUT

0.86444813

0.7070532

0.6003957

0.48608577Vector Neighborhoods

Now that we have a metric we can trust, we can retrieve neighborhoods

of vectors that are close to a given word. This is analogous to

retrieving semantically related terms to a target term. Let’s explore

the neighborhood around `pizza` using the most_similar()

method:

This returns a list of ranked tuples with the form (word, similarity_score). The list is already ordered in descent, so the first element is the closest vector in the vector space, the second element is the second closest word and so on…

OUTPUT

[('pizzas', 0.7863470911979675),

('Domino_pizza', 0.7342829704284668),

('Pizza', 0.6988078355789185),

('pepperoni_pizza', 0.6902607083320618),

('sandwich', 0.6840401887893677),

('burger', 0.6569692492485046),

('sandwiches', 0.6495091319084167),

('takeout_pizza', 0.6491535902023315),

('gourmet_pizza', 0.6400628089904785),

('meatball_sandwich', 0.6377009749412537)]Exploring neighborhoods can help us understand why some vectors are closer (or not so much). Take the case of love and lover, originally we might think these should be very close to each other but by looking at their neighborhoods we understand why this is not the case:

PYTHON

print(w2v_model.most_similar('love', topn=10))

print(w2v_model.most_similar('lover', topn=10))This returns a list of ranked tuples with the form (word, similarity_score). The list is already ordered in descent, so the first element is the closest vector in the vector space, the second element is the second closest word and so on…

OUTPUT

[('loved', 0.6907791495323181), ('adore', 0.6816874146461487), ('loves', 0.6618633270263672), ('passion', 0.6100709438323975), ('hate', 0.6003956198692322), ('loving', 0.5886634588241577), ('Ilove', 0.5702950954437256), ('affection', 0.5664337873458862), ('undying_love', 0.5547305345535278), ('absolutely_adore', 0.5536840558052063)]

[('paramour', 0.6798686385154724), ('mistress', 0.6387110352516174), ('boyfriend', 0.6375402212142944), ('lovers', 0.6339589953422546), ('girlfriend', 0.6140860915184021), ('beau', 0.609399676322937), ('fiancé', 0.5994566679000854), ('soulmate', 0.5993717312812805), ('hubby', 0.5904166102409363), ('fiancée', 0.5888950228691101)]The first word is a noun or a verb (depending on the context) that denotes affection to someone/something , so it is associated with other concepts of affection (positive or negative). The case of lover is used to describe a person, hence the associated concepts are descriptors of people with whom the lover can be associated.

Word Analogies with Vectors

Another powerful property that word embeddings show is that vector

algebra can preserve semantic analogy. An analogy is a comparison

between two different things based on their similar features or

relationships, for example king is to queen as man is to woman. We can

mimic this operations directly on the vectors using the

most_similar() method with the positive and

negative parameters:

PYTHON

# king is to man as what is to woman?

# king + woman - man = queen

w2v_model.most_similar(positive=['king', 'woman'], negative=['man'])OUTPUT

[('queen', 0.7118192911148071),

('monarch', 0.6189674735069275),

('princess', 0.5902431011199951),

('crown_prince', 0.5499460697174072),

('prince', 0.5377321243286133),

('kings', 0.5236844420433044),

('Queen_Consort', 0.5235945582389832),

('queens', 0.5181134343147278),

('sultan', 0.5098593235015869),

('monarchy', 0.5087411403656006)]Train your own Word2Vec

The gensim package has implemented everything for us,

this means we have to focus mostly on obtaining clean data and then

calling the Word2Vec object to train our own model with our

own data. This can be done like follows:

PYTHON

import spacy

from gensim.models import Word2Vec

# Load and Tokenize the Text using spacy

spacy_model = spacy.load("en_core_web_sm")

with open("data/84_frankenstein_clean.txt") as f:

book_text = f.read()

book_doc = spacy_model(book_text)

clean_tokens = [tok.text.lower() for tok in book_doc if tok.is_alpha and not tok.is_stop]

# Call and Train the Word2Vec model

model = Word2Vec([clean_tokens], sg=0 , vector_size=300, window=5, min_count=1, workers=4)With this line code we are configuring our whole Word2Vec training

schema. We will be using CBOW (sg=0 means CBOW,

sg=1 means Skip-gram). We are interested in having vectors

with 300 dimensions vector_size=300 and a context size of 5

surrounding words window=5. Because we already filtered our

tokens, we include all words present in the filtered corpora, regardless

of their frequency of occurrence min_count=1. The last

parameters tells python to use 4 CPU cores for training.

See the Gensim documentation for more training options.

Save and Retrieve your model

Once your model is trained it is useful to save the checkpoint in order to retrieve it next time instead of having to train it every time. You can save it with:

Let’s put everything together. We have now the following NLP task: train our own Word2Vec model. We are interested on having vectors for content words only, so even though our preprocessing will unfortunately lose a lot of the original information, in exchange we will be able to manipulate the most relevant conceptual words as individual numeric representations.

To load back the pre-trained vectors you just created you can use the following code:

PYTHON

model = Word2Vec.load("word2vec_mini_books.model")

w2v = model.wv

# Test:

w2v.most_similar('monster')Challenge

Let’s apply this step by step on a longer text. In this case, because we are learning the process, our corpus will be only one book but in reality we would like to train a network with thousands of them. We will use two books: Frankenstein and Dracula to train a model of word vectors.

Write the code to follow the proposed pipeline and train the word2vec model. The proposed pipeline for this task is: