Content from Introduction to R and RStudio

Last updated on 2026-02-10 | Edit this page

Overview

Questions

- What is R and what is RStudio?

- How can I find my way around RStudio?

- How can I manage projects in R?

- How can I install packages?

- How can I interact with R?

Objectives

After completing this episode, participants should be able to…

- Create self-contained projects in RStudio

- Install additional packages using R code

- Manage packages

- Define a variable

- Assign data to a variable

- Call functions

Project management in RStudio

RStudio is an integrated development environment (IDE), which means that it provides a user-friendly interface for the R software. For RStudio to work, you need to have R installed on your computer. However, R is integrated into RStudio, so you never actually have to open R software.

RStudio provides a useful feature: creating projects. A project is a self-contained working space (i.e., working directory), to which R will refer to when looking for and saving files. You can create a project in an existing directory or in a new one.

Creating RStudio Project

We’re going to create a project in RStudio in a new directory. To create a project, go to:

FileNew ProjectNew directory- Browse to a location that you will easily find on your laptop and

name the directory

data-carpentry Create project

Organising the working directory

Creating an RStudio project is a good first step towards good project management. However, most of the time it is a good idea to organize the working space further. This is one suggestion of how your R project can look like. Let’s go ahead and create the sub-folders:

-

data/- should be where your raw data is. READ ONLY -

data_output/- should be where your data output is saved READ AND WRITE -

documents/- all the documentation associated with the project -

fig_output/- your figure outputs go here WRITE ONLY -

scripts/- all your code goes here READ AND WRITE

You can create these folders as you would any other folders on your laptop, but R and RStudio offer handy ways to do it directly in your RStudio session.

You can use the RStudio interface to create a folder in your project by going to the lower-bottom pane, Files tab, and clicking on the Folder icon. A dialog box will appear, allowing you typing a name of a folder you want to create.

An alternative solution is to create the folders using the R command

dir.create(). In the console type:

R

dir.create("data")

dir.create("data_output")

dir.create("documents")

dir.create("fig_output")

dir.create("scripts")

Two main ways to interact with R

There are two main ways to interact with R through RStudio:

- test and play environment within the interactive R console

- write and save an R script (

.Rfile)

The console and script windows

When you open the RStudio or create the Rstudio project, you will see Console window on the left by default. Once you create an R script, it is placed in the upper left pane. The Console is moved to the bottom left pane.

Each of the modes o interactions has its advantages and drawbacks.

| Console | R script | |

|---|---|---|

| Pros | Immediate results | Complete record of your work |

| Cons | Work lost once you close RStudio | Messy if you just want to print things out |

Creating a script

During the workshop, we will mostly use an .R script to

have a full documentation of what has been written. This way we will

also be able to reproduce the results. Let’s create one now and save it

in the scripts directory.

FileNew FileR Script- A new

Untitledscript will appear in the source pane - Save it using the floppy disc icon

- Select the

scripts/folder as the file location - Name the script

intro-to-r.R

Running the code

Note that all code written in the script can be also executed at once

in the

interactive console. We will now learn how to run the code both in the

console and in the script.

- In the Console you run the code by pressing Enter at the end of the line

- In the R script there are two way to execute the code:

- You can use the

Runbutton on the top right of the script window. - Alternatively, you can use a keyboard shortcut: Ctrl + Enter on Windows/Linux or Command + Return on Mac.

- You can use the

In both cases, the active line (the line where your cursor is placed) or a highlighted snippet of code will be executed. A common source of error in scripts, such as a previously created object not found, is code that has not been executed in previous lines: make sure that all code has been executed as described above. To run all lines before the active line, you can use the keyboard shortcut Ctrl + Alt + B on Windows/Linux or Command + option + B on Mac.

Escaping

The console shows it’s ready to get new commands with the

> sign. It will show the + sign if it still

requires input for the command to be executed.

Sometimes you don’t know what is missing, you change your mind and want to run something else, or your code is running too long and you just want it to stop. The way to stop it is to press Esc.

Packages

A great power of R lays in packages: add-on sets of

functions that are build by the community and once they go

through a quality process they are available to download from a

repository called CRAN. They need to be explicitly

activated. Now, we will be using the tidyverse package,

which is actually a collection of useful packages. Another package that

we will use is here.

You were asked to install the tidyverse package in

preparation to the workshop. You need to install a package only once, so

you won’t have to do it again. We still need to install the

here package. To do so, go to the script and run:

R

install.packages("here")

Is tidyverse installed?

If you are not sure if you have the tidyverse package

installed, you can check it in the Packages tab in the

bottom right pane. In the search box start typing

‘tidyverse’ and see if it appears in the list of installed

packages. If not, you will need to install it by writing in the

script:

R

install.packages("tidyverse")

Commenting your code

Now we have a bit of an issue with our script. As mentioned, the

packages need to be installed only once, but now, they will be installed

each time we run the script, which can take a lot of time if we’re

installing a large package like tidyverse.

To keep track of you installing the packages, without executing it,

you can use a comment. In R, anything that is written after

a has sign #, is ignored in execution. Thanks to this

feature, you can annotate your code. Let’s adapt our script by changing

the first lines into comments:

R

# install.packages('here')

# install.packages('tidyverse')

Installing packages is not sufficient to work with them. You will

need to load them each time you want to use them. To do that you use the

library() command:

R

# Load packages

library(tidyverse)

library(here)

Handling paths

You have created a project which is your working directory, and a few

sub-folders, that will help you organise your project better. But now,

each time you will save or retrieve a file from those folders, you will

need to specify the path from the folder you are in (most likely the

scripts/ folder) to those files.

That can become complicated and might cause a reproducibility problem, if the person using your code (including future you) is working in a different sub-folder.

We will use the here() package to tackle this issue.

This package converts relative paths from the root (working directory)

of your project to absolute paths (the exact location on your computer).

For instance, instead of writing out the full path like

C:\Users\YourName\Documents\r-geospatial-urban\data\file.csv

or ~/Documents/r-geospatial-urban/data/file.csv, you can

use the here() function to create a path relative to your

project’s root directory. This makes your code more portable and

reproducible, as it doesn’t depend on a specific location of your

project on your computer.

This might be confusing, so let’s see how it works. We will use the

here() function from the here package. In the

console, we write:

R

here()

here("data")

You all probably have something different printed out. And this is

fine, because here adapts to your computer’s specific

situation.

Download files

We still need to download data for the first part of the workshop.

You can do it with the function download.file(). We will

save it in the data/ folder, where the raw

data should go. In the script, we will write:

R

# Download the data

download.file(

"https://bit.ly/geospatial_data",

here("data", "gapminder-data.csv")

)

The data we just downloaded is data about country statistics, containing information on, for instance, GDP and life-expectancy. We will work with this data later in the lesson

Importing data into R

Three of the most common ways of importing data in R are:

- loading a package with pre-installed data;

- downloading data from a URL;

- reading a file from your computer.

For larger datasets, database connections or API requests are also possible. We will not cover these in the workshop.

Introduction to R

You can use R as calculator. You can for example write:

R

1 + 100

1 * 100

1 / 100

Variables and assignment

However, what’s more useful is that in R we can store values, that is

assign them to objects and use them whenever we need

to. We use the assignment operator <-, like this:

R

x <- 1 / 40

Notice that assignment does not print a value. Instead, we’ve stored

it for later in something called a variable. The x variable

now contains the value 0.025:

R

x

Look for the Environment tab in the upper right pane of

RStudio. You will see that x and its value have appeared in

the list of Values. Our variable x can be used in place of

a number in any calculation that expects a number, e.g., when

calculating a square root:

R

sqrt(x)

Variables can be also reassigned. This means that we can assign a new

value to variable x:

R

x <- 100

x

You can use one variable to create a new one:

R

y <- sqrt(x) # you can use the value stored in object x to create y

y

- Use RStudio to write and run R programs.

- Use

install.packages()to install packages. - Use

library()to load packages.

Content from Data Structures

Last updated on 2026-02-10 | Edit this page

Overview

Questions

- What are the basic data types in R?

- How do I represent categorical information in R?

Objectives

After completing this episode, participants should be able to…

- Understand different types of data.

- Explore data frames and understand how they are related to vectors, factors and lists.

- Ask questions from R about the type, class, and structure of an object.

Vectors

So far we’ve looked at individual values, such as

x <- 100. Now we will move to a data structure called

vectors. Vectors are arrays of values of the same data type. So now we

combine multiple values into one object:

x <- c(100, 200)

Data types

Data type refers to a type of information that is stored by a value. It can be:

-

numerical(a number) -

integer(a number without information about decimal points) -

logical(a boolean - are values TRUE or FALSE?) -

character(a text, also referred to as a string of characters) -

complex(a complex number) -

raw(raw bytes)

We won’t discuss complex or raw data type

in the workshop.

Data structures

Vectors are the most common and basic data structure in R but you will come across other data structures such as data frames, lists and matrices as well. In short:

- data.frames is a two-dimensional data structure in which columns are vectors of the same length that can have different data types. We will use this data structure in this lesson.

- lists can have an arbitrary structure and can mix data types;

- matrices are two-dimensional data structures containing elements of the same data type.

For a more detailed description, see Data Types and Structures.

Note that vector data in the geospatial context is different from vector data types. More about vector data in a later lesson!

You can create a vector with a c() function.

You can inspect vectors with the str() function. You can

also see the structure in the environment tab of RStudio.

R

# vector of numbers - numeric data type.

numeric_vector <- c(2, 6, 3)

numeric_vector

OUTPUT

[1] 2 6 3R

str(numeric_vector)

OUTPUT

num [1:3] 2 6 3R

# vector of words or strings of characters - character data type.

# Note that we need to use quotation marks '' to tell R that we are

# working with strings.

character_vector <- c('Amsterdam', "'s Gravenhage", 'Delft')

character_vector

OUTPUT

[1] "Amsterdam" "'s Gravenhage" "Delft" R

str(character_vector)

OUTPUT

chr [1:3] "Amsterdam" "'s Gravenhage" "Delft"R

# vector of logical values (is something true or false?) - logical data type.

logical_vector <- c(TRUE, FALSE, TRUE)

logical_vector

OUTPUT

[1] TRUE FALSE TRUER

str(logical_vector)

OUTPUT

logi [1:3] TRUE FALSE TRUEWhich quotation marks to use?

In R, you can use both single '' and double

"" quotation marks for strings. However, best

practice is to use double quotes by default.

Use single quotes when the text inside the string contains double quotes — this tells R that the double quotation mark is part of the string, not the code.

Why double quotes?

Single quotes are often part of names or words (e.g., ’s Gravenhage), so using double quotes keeps your code cleaner and more consistent.

Preferred:

R

c("Amsterdam", "'s Gravenhage", "Delft")

OUTPUT

[1] "Amsterdam" "'s Gravenhage" "Delft" Avoid:

R

c('Amsterdam', "'s Gravenhage", 'Delft')

OUTPUT

[1] "Amsterdam" "'s Gravenhage" "Delft" Combining vectors

The combine function, c(), will also append things to an

existing vector:

R

ab_vector <- c("a", "b")

ab_vector

OUTPUT

[1] "a" "b"R

abcd_vector <- c(ab_vector, "c", "d")

abcd_vector

OUTPUT

[1] "a" "b" "c" "d"Missing values

Challenge: combining vectors

Combine the abcd_vector with the

numeric_vector in R. What is the data type of this new

vector and why?

combined_vector <- c(abcd_vector, numeric_vector)

combined_vector

str(combined_vector)The combined vector is a character vector. Because vectors can only

hold one data type and abcd_vector cannot be interpreted as

numbers, the numbers in numeric_vector are coerced

into characters.

A common operation you want to perform is to remove all the missing

values (in R denoted as NA). Let’s have a look how to do

it:

R

with_na <- c(1, 2, 1, 1, NA, 3, NA) # vector including missing values

First, let’s try to calculate mean for the values in this vector

R

mean(with_na) # mean() function cannot interpret the missing values

OUTPUT

[1] NAR

# You can add the argument na.rm = TRUE to calculate the result while

# ignoring the missing values.

mean(with_na, na.rm = TRUE)

OUTPUT

[1] 1.6However, sometimes, you would like to have the NA

permanently removed from your vector. For this you need to identify

which elements of the vector hold missing values with

is.na() function.

R

is.na(with_na) # This will produce a vector of logical values,

OUTPUT

[1] FALSE FALSE FALSE FALSE TRUE FALSE TRUER

# stating if a statement 'This element of the vector is a missing value'

# is true or not

# to see how many values are missing in our with_na vector, we can use the

# sum function

sum(is.na(with_na))

OUTPUT

[1] 2R

# to identify the values that are not missing we write the following

!is.na(with_na) # The ! operator means negation, i.e. not is.na(with_na)

OUTPUT

[1] TRUE TRUE TRUE TRUE FALSE TRUE FALSER

# and to sum all the non-missing values we write

sum(!is.na(with_na))

OUTPUT

[1] 5We know which elements in the vectors are NA. Now we

need to retrieve the subset of the with_na vector that is

not NA. Sub-setting in R is done with square

brackets[ ].

R

without_na <- with_na[!is.na(with_na)] # this notation will return only

# the elements that have TRUE on their respective positions

without_na

OUTPUT

[1] 1 2 1 1 3Factors

Another important data structure is called a factor. Factors look like character data, but are used to represent categorical information.

Factors create a structured relation between the different levels

(values) of a categorical variable, such as days of the week or

responses to a question in a survey. While factors look (and often

behave) like character vectors, they are actually treated as numbers by

R, which is useful for computing summary statistics about

their distribution, running regression analysis, etc. So you need to be

very careful when treating them as strings.

Create factors

Once created, factors can only contain a pre-defined set of values, known as levels.

R

nordic_str <- c("Norway", "Sweden", "Norway", "Denmark", "Sweden")

nordic_str # regular character vectors printed out

OUTPUT

[1] "Norway" "Sweden" "Norway" "Denmark" "Sweden" R

# factor() function converts a vector to factor data type

nordic_cat <- factor(nordic_str)

nordic_cat # With factors, R prints out additional information - 'Levels'

OUTPUT

[1] Norway Sweden Norway Denmark Sweden

Levels: Denmark Norway SwedenR

nordic_cat

OUTPUT

[1] Norway Sweden Norway Denmark Sweden

Levels: Denmark Norway SwedenR

str(nordic_cat)

OUTPUT

Factor w/ 3 levels "Denmark","Norway",..: 2 3 2 1 3Inspect factors

R will treat each unique value from a factor vector as a level and (silently) assign numerical values to it. This can come in handy when performing statistical analysis. You can inspect and adapt levels of the factor.

R

levels(nordic_cat) # returns all levels of a factor vector.

OUTPUT

[1] "Denmark" "Norway" "Sweden" R

nlevels(nordic_cat) # returns number of levels in a vector

OUTPUT

[1] 3Reorder levels

Note that R sorts the levels in the alphabetic order,

not in the order of occurrence in the vector. R assigns

value of:

- 1 to level ‘Denmark’,

- 2 to ‘Norway’

- 3 to ‘Sweden’.

This is important as it can affect e.g. the order in which categories are displayed in a plot or which category is taken as a baseline in a statistical model.

You can reorder the categories using the factor()

function. This can be useful, for instance, to select a reference

category (first level) in a regression model or for ordering legend

items in a plot, rather than using the default category systematically

(i.e., based on alphabetical order).

R

nordic_cat <- factor(

nordic_cat,

levels = c(

"Norway",

"Denmark",

"Sweden"

)

)

# now Norway will be the first category, Denmark second and Sweden third

nordic_cat

OUTPUT

[1] Norway Sweden Norway Denmark Sweden

Levels: Norway Denmark SwedenReordering factors

There is more than one way to reorder factors. Later in the lesson,

we will use fct_relevel() function from

forcats package to do the reordering.

R

library(forcats)

nordic_cat <- fct_relevel(

nordic_cat,

"Norway",

"Denmark",

"Sweden"

) # With this, Norway will be first category,

# Denmark second and Sweden third

nordic_cat

OUTPUT

[1] Norway Sweden Norway Denmark Sweden

Levels: Norway Denmark SwedenNote of caution

Remember that once created, factors can only contain a pre-defined

set of values, known as levels. It means that whenever you try to add

something to the factor outside of this set, it will become an

unknown/missing value detonated by R as

NA.

R

nordic_str

OUTPUT

[1] "Norway" "Sweden" "Norway" "Denmark" "Sweden" R

nordic_cat2 <- factor(

nordic_str,

levels = c("Norway", "Denmark")

)

# because we did not include Sweden in the list of

# factor levels, it has become NA.

nordic_cat2

OUTPUT

[1] Norway <NA> Norway Denmark <NA>

Levels: Norway Denmark- The mostly used basic data types in R are

numeric,integer,logical, andcharacter. - Use factors to represent categories in R.

Content from Exploring Data Frames & Data frame Manipulation with dplyr

Last updated on 2026-02-10 | Edit this page

Overview

Questions

- What is a data frame?

- How can I read data in R?

- How can I get basic summary information about my data set?

- How can I select specific rows and/or columns from a data frame?

- How can I combine multiple commands into a single command?

- How can I create new columns or remove existing columns from a data frame?

Objectives

After completing this episode, participants should be able to…

- Describe what a data frame is.

- Load external data from a

.csvfile into a data frame. - Summarize the contents of a data frame.

- Select certain columns in a data frame with the

dplyrfunctionselect(). - Select certain rows in a data frame according to filtering

conditions with the

dplyrfunctionfilter(). - Link the output of one dplyr function to the input of another

function with the ‘pipe’ operator

|>. - Add new columns to a data frame based on existing columns with mutate.

- Use

summarize(),group_by(), andcount()to split a data frame into groups of observations, apply a summary statistics to each group, and then combine the results.

Exploring Data frames

Now we turn to the bread-and-butter of working with R:

working with tabular data. In R data are stored in a data

structure called data frames.

A data frame is a representation of data in the format of a table where the columns are vectors that all have the same length.

Because columns are vectors, each column must contain a single type of data (e.g., characters, numeric, factors). For example, here is a figure depicting a data frame comprising a numeric, a character, and a logical vector.

Source: Data

Carpentry R for Social Scientists

Reading data

read.csv() is a function used to read comma separated

data files (.csv format)). There are other functions for

files separated with other delimiters. We read in the

gapminder data set with information about countries’ size,

GDP and average life expectancy in different years.

R

gapminder <- read.csv(here("data", "gapminder_data.csv"))

Exploring dataset

Let’s investigate the gapminder data frame a bit; the

first thing we should always do is check out what the data looks

like.

It is important to see if all the variables (columns) have the data type that we require. For instance, a column might have numbers stored as characters, which would not allow us to make calculations with those numbers.

R

str(gapminder)

OUTPUT

'data.frame': 1704 obs. of 6 variables:

$ country : chr "Afghanistan" "Afghanistan" "Afghanistan" "Afghanistan" ...

$ year : int 1952 1957 1962 1967 1972 1977 1982 1987 1992 1997 ...

$ pop : num 8425333 9240934 10267083 11537966 13079460 ...

$ continent: chr "Asia" "Asia" "Asia" "Asia" ...

$ lifeExp : num 28.8 30.3 32 34 36.1 ...

$ gdpPercap: num 779 821 853 836 740 ...We can see that the gapminder object is a data.frame

with 1704 observations (rows) and 6 variables (columns).

In each line after a $ sign, we see the name of each

column, its type and first few values.

First look at the dataset

There are multiple ways to explore a data set. Here are just a few examples:

R

# Show first 6 rows of the data set

head(gapminder)

OUTPUT

country year pop continent lifeExp gdpPercap

1 Afghanistan 1952 8425333 Asia 28.801 779.4453

2 Afghanistan 1957 9240934 Asia 30.332 820.8530

3 Afghanistan 1962 10267083 Asia 31.997 853.1007

4 Afghanistan 1967 11537966 Asia 34.020 836.1971

5 Afghanistan 1972 13079460 Asia 36.088 739.9811

6 Afghanistan 1977 14880372 Asia 38.438 786.1134R

# Basic statistical information about each column

# Information format differs by data type.

summary(gapminder)

OUTPUT

country year pop continent

Length:1704 Min. :1952 Min. :6.001e+04 Length:1704

Class :character 1st Qu.:1966 1st Qu.:2.794e+06 Class :character

Mode :character Median :1980 Median :7.024e+06 Mode :character

Mean :1980 Mean :2.960e+07

3rd Qu.:1993 3rd Qu.:1.959e+07

Max. :2007 Max. :1.319e+09

lifeExp gdpPercap

Min. :23.60 Min. : 241.2

1st Qu.:48.20 1st Qu.: 1202.1

Median :60.71 Median : 3531.8

Mean :59.47 Mean : 7215.3

3rd Qu.:70.85 3rd Qu.: 9325.5

Max. :82.60 Max. :113523.1 R

# Return number of rows in a dataset

nrow(gapminder)

OUTPUT

[1] 1704R

# Return number of columns in a dataset

ncol(gapminder)

OUTPUT

[1] 6Dollar sign ($)

When you’re analyzing a data set, you often need to access its specific columns.

One handy way to access a column is using it’s name and a dollar sign

$:

R

# This notation means: From dataset gapminder, give me column country. You can

# see that the column accessed in this way is just a vector of characters.

country_vec <- gapminder$country

head(country_vec)

OUTPUT

[1] "Afghanistan" "Afghanistan" "Afghanistan" "Afghanistan" "Afghanistan"

[6] "Afghanistan"Now you can explore distinct values from a vector with the unique() function:

R

head(unique(country_vec), 10)

OUTPUT

[1] "Afghanistan" "Albania" "Algeria" "Angola" "Argentina"

[6] "Australia" "Austria" "Bahrain" "Bangladesh" "Belgium" Note that the calling a column with a $ sign will return

a vector - it’s not a data frame anymore.

Data frame Manipulation with dplyr

Select

Let’s start manipulating the data.

First, we will adapt our data set, by keeping only the columns we’re

interested in, using the select() function from the

dplyr package:

R

year_country_gdp <- select(gapminder, year, country, gdpPercap)

head(year_country_gdp)

OUTPUT

year country gdpPercap

1 1952 Afghanistan 779.4453

2 1957 Afghanistan 820.8530

3 1962 Afghanistan 853.1007

4 1967 Afghanistan 836.1971

5 1972 Afghanistan 739.9811

6 1977 Afghanistan 786.1134Pipe

Now, this is not the most common notation when working with

dplyr package. R offers an operator

|> called a pipe, which allows you to build up

complicated commands in a readable way.

The pipe

The |> operator, also called the “native pipe”, was

introduced in R version 4.1.0. Before that, the

%>% operator from the magrittr package was

widely used. The two pipes work in similar ways. The main difference is

that you don’t need to load any packages to have the native pipe

available.

The select() statement with a pipe would look like

that:

R

year_country_gdp <- gapminder |>

select(year, country, gdpPercap)

head(year_country_gdp)

OUTPUT

year country gdpPercap

1 1952 Afghanistan 779.4453

2 1957 Afghanistan 820.8530

3 1962 Afghanistan 853.1007

4 1967 Afghanistan 836.1971

5 1972 Afghanistan 739.9811

6 1977 Afghanistan 786.1134First we define the dataset, then with the use of the pipe we pass it

on to the select() function. This way we can chain multiple

functions together.

Filter

We already know how to select only the needed columns. But now, we

also want to filter the rows of our data set on certain conditions with

the filter() function. Instead of doing it in separate

steps, we can do it all together.

In the gapminder dataset, we want to see the results

from outside of Europe for the 21st century.

R

year_country_gdp_euro <- gapminder |>

filter(continent != "Europe" & year >= 2000) |>

select(year, country, gdpPercap)

# '&' operator (AND) - both conditions must be met

head(year_country_gdp_euro)

OUTPUT

year country gdpPercap

1 2002 Afghanistan 726.7341

2 2007 Afghanistan 974.5803

3 2002 Algeria 5288.0404

4 2007 Algeria 6223.3675

5 2002 Angola 2773.2873

6 2007 Angola 4797.2313Let’s now focus only on North American countries

R

year_gdp_namerica <- year_country_gdp_euro |>

filter(country == "Canada" | country == "Mexico" | country == "United States")

# '|' operator (OR) - at least one of the conditions must be met

head(year_gdp_namerica)

OUTPUT

year country gdpPercap

1 2002 Canada 33328.97

2 2007 Canada 36319.24

3 2002 Mexico 10742.44

4 2007 Mexico 11977.57

5 2002 United States 39097.10

6 2007 United States 42951.65Challenge: filtered data frame

Write a single command (which can span multiple lines and includes pipes) that will produce a data frame that has the values for life expectancy, country and year, only for EurAsia.

How many rows does your data frame have and why?

R BG-INFO

year_country_gdp_eurasia <- gapminder |>

filter(continent == "Europe" | continent == "Asia") |>

select(year, country, gdpPercap)

# '|' operator (OR) - one of the conditions must be met

nrow(year_country_gdp_eurasia)

OUTPUT

[1] 756Group and summarize

So far, we have provided summary statistics on the whole dataset, selected columns, and filtered the observations. But often instead of doing that, we would like to know statistics by group. Let’s calculate the average GDP per capita by continent.

R

gapminder |> # select the dataset

group_by(continent) |> # group by continent

summarize(avg_gdpPercap = mean(gdpPercap)) # create basic stats

OUTPUT

# A tibble: 5 × 2

continent avg_gdpPercap

<chr> <dbl>

1 Africa 2194.

2 Americas 7136.

3 Asia 7902.

4 Europe 14469.

5 Oceania 18622.Challenge: longest and shortest life expectancy

Calculate the average life expectancy per country. Which country has the longest average life expectancy and which has the shortest average life expectancy?

Hint Use max() and min()

functions to find minimum and maximum.

R BG-INFO

gapminder |>

group_by(country) |>

summarize(avg_lifeExp = mean(lifeExp)) |>

filter(avg_lifeExp == min(avg_lifeExp) |

avg_lifeExp == max(avg_lifeExp))

OUTPUT

# A tibble: 2 × 2

country avg_lifeExp

<chr> <dbl>

1 Iceland 76.5

2 Sierra Leone 36.8Multiple groups and summary variables

You can also group by multiple columns:

R

gapminder |>

group_by(continent, year) |>

summarize(avg_gdpPercap = mean(gdpPercap))

OUTPUT

# A tibble: 60 × 3

# Groups: continent [5]

continent year avg_gdpPercap

<chr> <int> <dbl>

1 Africa 1952 1253.

2 Africa 1957 1385.

3 Africa 1962 1598.

4 Africa 1967 2050.

5 Africa 1972 2340.

6 Africa 1977 2586.

7 Africa 1982 2482.

8 Africa 1987 2283.

9 Africa 1992 2282.

10 Africa 1997 2379.

# ℹ 50 more rowsOn top of this, you can also make multiple summaries of those groups:

R

gdp_pop_bycontinents_byyear <- gapminder |>

group_by(continent, year) |>

summarize(

avg_gdpPercap = mean(gdpPercap),

sd_gdpPercap = sd(gdpPercap),

avg_pop = mean(pop),

sd_pop = sd(pop),

n_obs = n()

)

head(gdp_pop_bycontinents_byyear)

OUTPUT

# A tibble: 6 × 7

# Groups: continent [1]

continent year avg_gdpPercap sd_gdpPercap avg_pop sd_pop n_obs

<chr> <int> <dbl> <dbl> <dbl> <dbl> <int>

1 Africa 1952 1253. 983. 4570010. 6317450. 52

2 Africa 1957 1385. 1135. 5093033. 7076042. 52

3 Africa 1962 1598. 1462. 5702247. 7957545. 52

4 Africa 1967 2050. 2848. 6447875. 8985505. 52

5 Africa 1972 2340. 3287. 7305376. 10130833. 52

6 Africa 1977 2586. 4142. 8328097. 11585184. 52Frequencies

If you need only a number of observations per group, you can use the

count() function

R

gapminder |>

count(continent)

OUTPUT

continent n

1 Africa 624

2 Americas 300

3 Asia 396

4 Europe 360

5 Oceania 24Mutate

Frequently you’ll want to create new columns based on the values in

existing columns. For example, instead of only having the GDP per

capita, we might want to create a new GDP variable and convert its units

into Billions. For this, we’ll use mutate().

R

gapminder_gdp <- gapminder |>

mutate(gdpBillion = gdpPercap * pop / 10^9)

head(gapminder_gdp)

OUTPUT

country year pop continent lifeExp gdpPercap gdpBillion

1 Afghanistan 1952 8425333 Asia 28.801 779.4453 6.567086

2 Afghanistan 1957 9240934 Asia 30.332 820.8530 7.585449

3 Afghanistan 1962 10267083 Asia 31.997 853.1007 8.758856

4 Afghanistan 1967 11537966 Asia 34.020 836.1971 9.648014

5 Afghanistan 1972 13079460 Asia 36.088 739.9811 9.678553

6 Afghanistan 1977 14880372 Asia 38.438 786.1134 11.697659- We can use the

select()andfilter()functions to select certain columns in a data frame and to subset it based a specific conditions. - With

mutate(), we can create new columns in a data frame with values based on existing columns. - By combining

group_by()andsummarize()in a pipe (|>) chain, we can generate summary statistics for each group in a data frame.

Content from Introduction to visualisation

Last updated on 2026-02-10 | Edit this page

Overview

Questions

- How can I create a basic plot in R?

- How can I add features to a plot?

- How can I get basic summary information about my data set?

- How can I include addition information via a colours palette.

- How can I find more information about a function and its arguments?

- How can I reorder columns in a data frame?

Objectives

After completing this episode, participants should be able to…

- Generate plots to visualise data with

ggplot2. - Add plot layers to incrementally build a more complex plot.

- Use the

fillargument for colouring surfaces, and modify colours with the viridis or scale_manual packages. - Explore the help documentation.

- Save and format your plot via the

ggsave()function.

Introduction to Visualisation

The package ggplot2 is a powerful plotting system. We

will start with an introduction of key features of ggplot2.

gg stands for grammar of graphics. The idea behind it is

that the following three components are needed to create a graph:

- data,

- aesthetics - a coordinate system on which we map the data (what is represented on x axis, what on y axis), and

- geometries - visual representation of the data (points, bars, etc.)

A fun part about ggplot2 is that you can add layers to

the plot to provide more information and to make it more beautiful.

While here we still focus on the gapminder dataset, in

later parts of this workshop we will use ggplot2 to

visualize geospatial data. First, make sure that you have the

tidyverse loaded, which includes ggplot2.

R

library(tidyverse)

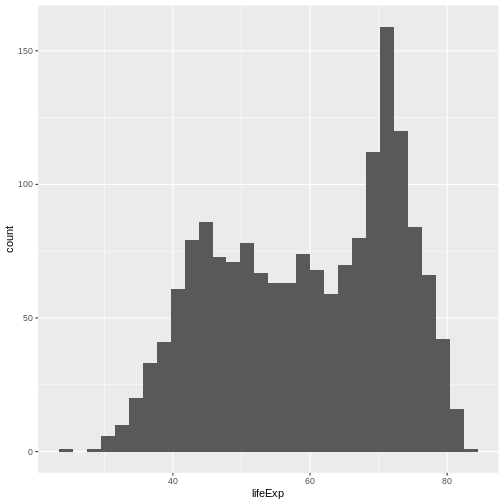

Now, lets plot the distribution of life expectancy in the

gapminder dataset:

R

ggplot(

data = gapminder, # data

aes(x = lifeExp) # aesthetics layer

) +

geom_histogram() # geometry layer

You can see that in ggplot you use + as a

pipe, to add layers. Within the ggplot() call, it is the

only pipe that will work. But, it is possible to chain operations on a

data set with a pipe that we have already learned: |>

and follow them by ggplot syntax.

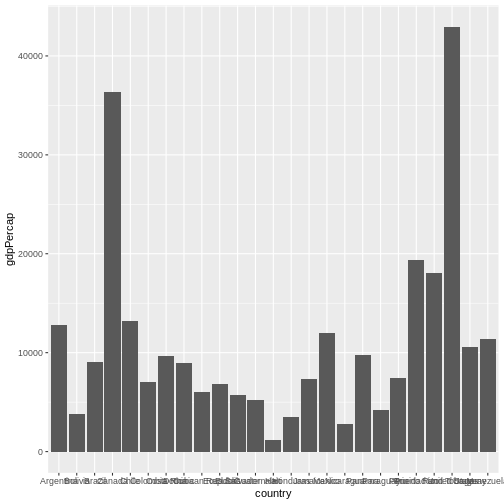

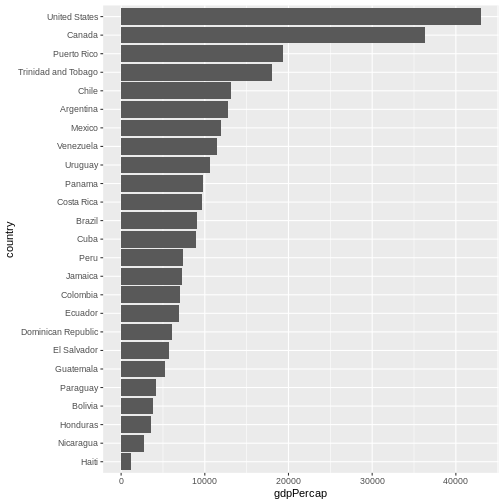

Let’s create another plot, this time only on a subset of observations:

R

gapminder |> # we select a data set

filter(year == 2007 & continent == "Americas") |> # filter year and continent

ggplot(aes(x = country, y = gdpPercap)) + # the x and y axes represent columns

geom_col() # we use a column graph as a geometry

Now, you can iteratively improve how the plot looks like. For example, you might want to flip it, to better display the labels.

R

gapminder |>

filter(year == 2007 & continent == "Americas") |>

ggplot(aes(x = country, y = gdpPercap)) +

geom_col() +

coord_flip() # flip axes

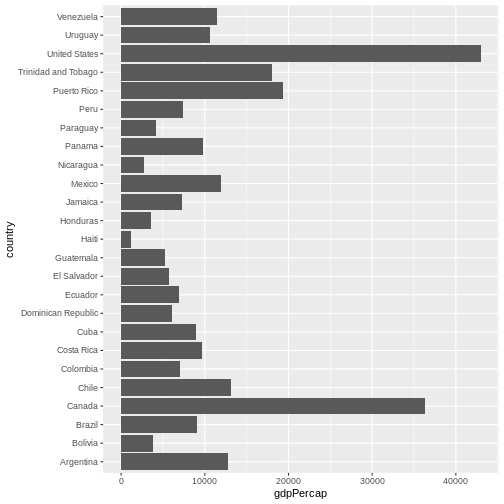

One thing you might want to change here is the order in which countries are displayed. It would be easier to compare GDP per capita, if they were showed in order. To do that, we need to reorder factor levels (you remember, we’ve already done this before).

Now the order of the levels will depend on another variable - GDP per capita.

R

gapminder |>

filter(year == 2007 & continent == "Americas") |>

mutate(country = fct_reorder(country, gdpPercap)) |> # reorder factor levels

ggplot(aes(x = country, y = gdpPercap)) +

geom_col() +

coord_flip()

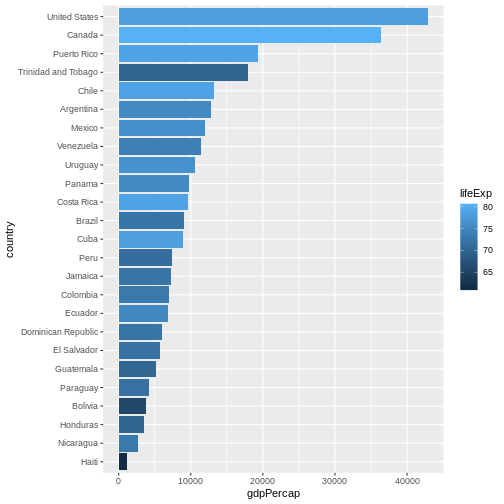

Let’s make things more colourful - let’s represent the average life expectancy of a country by colour

R

gapminder |>

filter(year == 2007 & continent == "Americas") |>

mutate(country = fct_reorder(country, gdpPercap)) |>

ggplot(aes(

x = country,

y = gdpPercap,

fill = lifeExp # use 'fill' for surfaces; 'colour' for points and lines

)) +

geom_col() +

coord_flip()

We can also adapt the colour scale. Common choice that is used for

its readability and colorblind-proofness are the palettes available in

the viridis package.

R

gapminder |>

filter(year == 2007 & continent == "Americas") |>

mutate(country = fct_reorder(country, gdpPercap)) |>

ggplot(aes(

x = country,

y = gdpPercap,

fill = lifeExp

)) +

geom_col() +

coord_flip() +

scale_fill_viridis_c() # _c stands for continuous scale

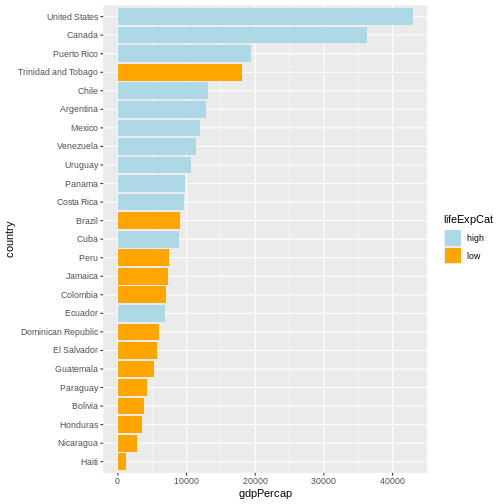

Maybe we don’t need that much information about the life expectancy.

We only want to know if it’s below or above average. We will make use of

the if_else() function inside mutate() to

create a new column lifeExpCat with the value

high if life expectancy is above average and

low otherwise. Note the usage of the if_else()

function:

if_else(<condition>, <value if TRUE>, <value if FALSE>).

R

p <- # this time let's save the plot in an object

gapminder |>

filter(year == 2007 & continent == "Americas") |>

mutate(

country = fct_reorder(country, gdpPercap),

lifeExpCat = if_else(

lifeExp >= mean(lifeExp),

"high",

"low"

)

) |>

ggplot(aes(x = country, y = gdpPercap, fill = lifeExpCat)) +

geom_col() +

coord_flip() +

scale_fill_manual(

values = c(

"light blue",

"orange"

) # customize the colors

)

Since we saved a plot as an object p, nothing has been

printed out. Just like with any other object in R, if you

want to see it, you need to call it.

R

p

Now we can make use of the saved object and add things to it.

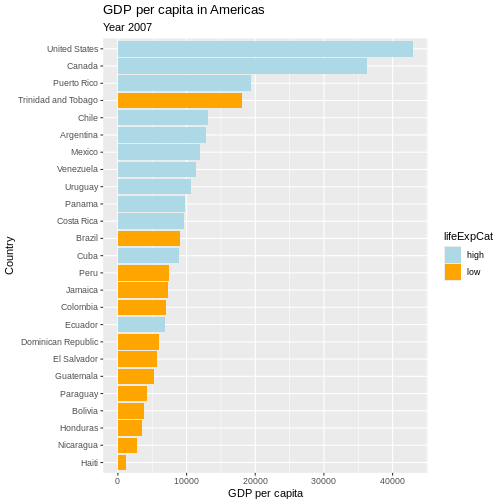

Let’s also give it a title, name the axes and the legend:

R

p <- p +

labs(

title = "GDP per capita in Americas",

subtitle = "Year 2007",

x = "Country",

y = "GDP per capita",

fill = "Life Expectancy categories"

)

# show plot

p

Writing data

Saving the plot

Once we are happy with our plot we can save it in a format of our choice. Remember to save it in the dedicated folder.

R

ggsave(

plot = p,

filename = here("fig_output", "plot_americas_2007.pdf")

)

# By default, ggsave() saves the last displayed plot, but

# you can also explicitly name the plot you want to save

Using help documentation

My saved plot is not very readable. We can see why it happened by exploring the help documentation. We can do that by writing directly in the console:

R

?ggsave

We can read that it uses the “size of the current graphics device”.

That would explain why our saved plots look slightly different. Feel

free to explore the documentation to see how to adapt the size e.g. by

adapting width, height and units

parameter.

Saving the data

Another output of your work you want to save is a cleaned data set. In your analysis, you can then load directly that data set. Let’s say we want to save the data only for Americas:

R

gapminder_amr_2007 <- gapminder |>

filter(year == 2007 & continent == "Americas") |>

mutate(

country_reordered = fct_reorder(country, gdpPercap),

lifeExpCat = if_else(lifeExp >= mean(lifeExp), "high", "low")

)

write.csv(gapminder_amr_2007,

here("data_output", "gapminder_americas_2007.csv"),

row.names = FALSE

)

- With

ggplot2, we use the+operator to combine plot layers and incrementally build a more complex plot. - In the aesthetics (

aes()), we can assign variables to the x and y axes and use thefillargument for colouring surfaces. - With

scale_fill_viridis_c()andscale_fill_manual()we can assign new colours to our plot. - To open the help documentation for a function, we run the name of

the function preceded by the

?sign.

Content from Introduction to Geospatial Concepts

Last updated on 2026-02-10 | Edit this page

Overview

Questions

- How do I describe the location of a geographic feature on the surface of the earth?

- What is a coordinate reference system (CRS) and how do I describe different types of CRS?

- How do I decide on what CRS to use?

Objectives

After completing this episode, participants should be able to…

- Identify the CRS that is best fit for a specific research question.

The shape of the Earth

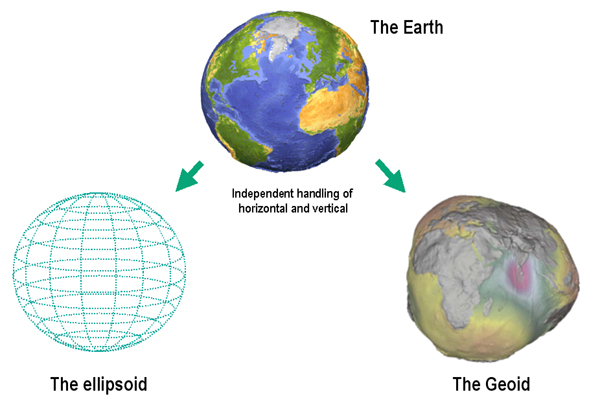

The shape of the Earth is approximately a sphere which is slightly wider than it is tall, and which is called ellipsoid. The true shape of the Earth is an irregular ellipsoid, the so-called geoid, as illustrated in the image below.

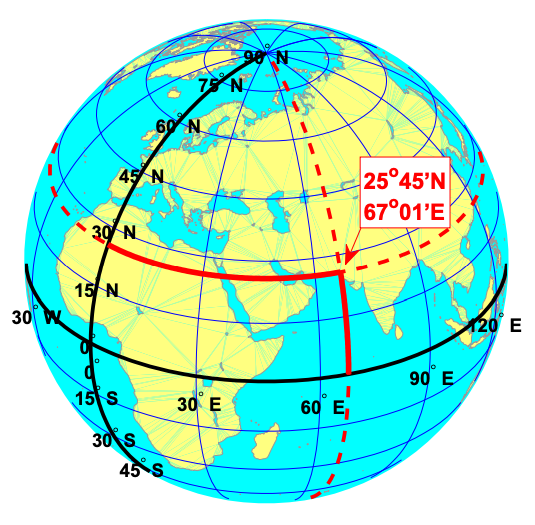

The most common and basic representation of the position of points on the Earth is the combination of the geographical latitude and longitude, as shown below.

Meridians are vertical circles with constant longitude, called great circles, which run from the North Pole to the South Pole. Parallels are horizontal circles with constant latitude, which are called small circles. Only the equator (the largest parallel) is also a great circle.

The black lines in the figure above show the equator and the prime meridian running through Greenwich, with latitude and longitude labels. The red dotted lines show the meridian and parallel running through Karachi, Pakistan (25°45’N, 67°01’E).

Map projection: From the 3D Earth to a 2D map

Map projection is a systematic transformation of the latitudes and longitudes of locations on the surface of an ellipsoid into locations on a plane. It is a transformation of the three-dimensional Earth’s surface into its two-dimensional representation on a sheet of paper or computer screen (see the image below for a comparison with flattening an orange peel).

Many different map projections are in use for different purposes. Generally, they can be categorised into the following groups: cylindrical, conic, and azimuthal.

Each map projection introduces a distortion in geometrical elements – distance, angle, and area. Depending on which of these geometrical elements are more relevant for a specific map, we can choose an appropriate map projection. Conformal projections are the best for preserving angles between any two curves, which means preserving the correct shapes of small areas; equal area (equivalent) projections preserve the area or scale; equal distance (conventional) projections are the best for preserving distances.

Coordinate reference systems (CRS)

A coordinate reference system (CRS) is a coordinate-based local, regional or global system for locating geographical entities, which uses a specific map projection. It defines how the two-dimensional, projected map relates to real places on the Earth.

All coordinate reference systems are included in a public registry called the EPSG Geodetic Parameter Dataset (EPSG registry), initiated in 1985 by a member of the European Petroleum Survey Group (EPSG). Each CRS has a unique EPSG code, which makes it possible to easily identify them among the large number of CRS. This is particularly important for transforming spatial data from one CRS to another.

Some of the most commonly used CRS in the Netherlands are the following:

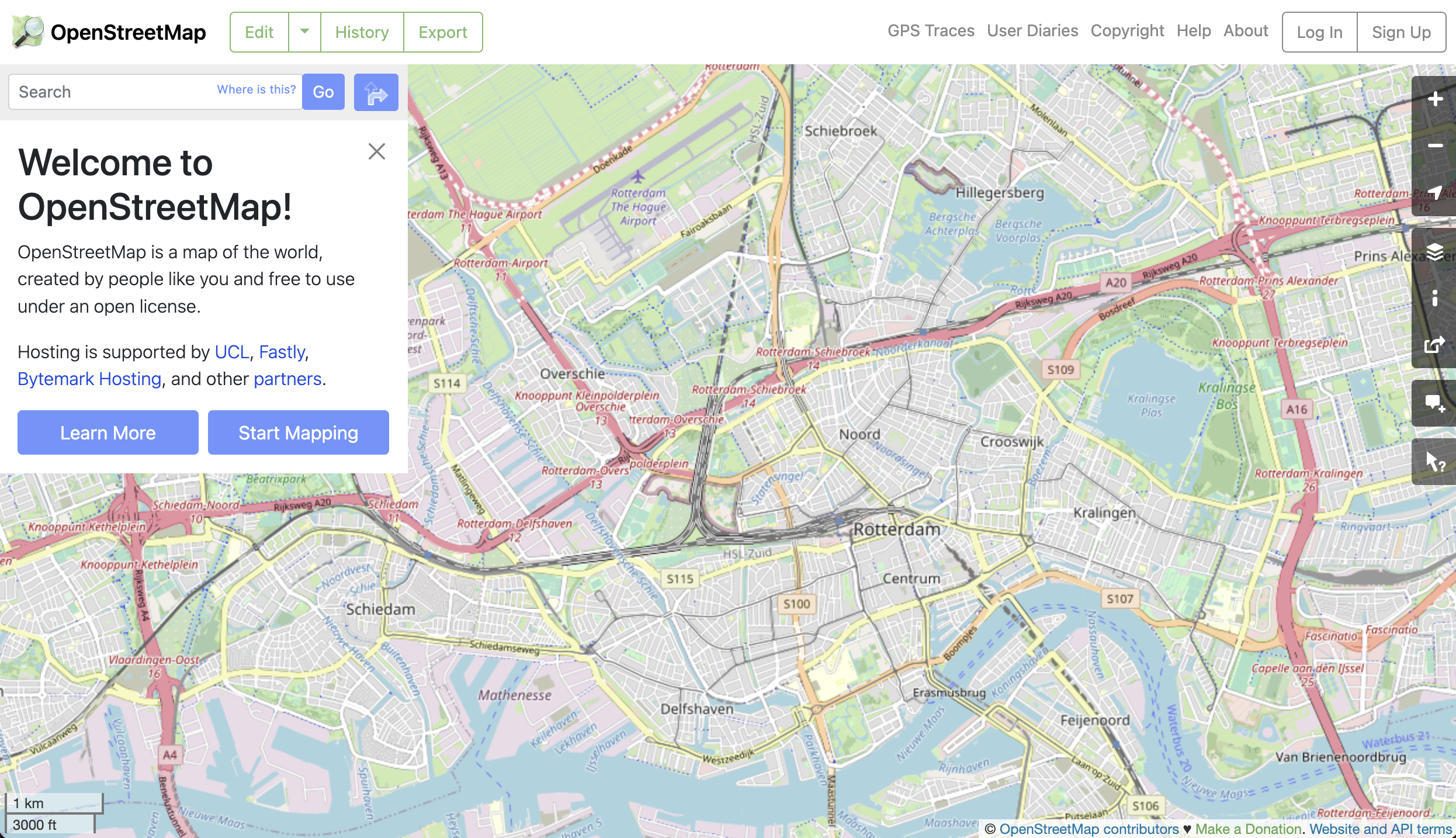

- World Geodetic System 1984 (WGS84) is the best known global reference system (EPSG:4326).

- European Terrestrial Reference System 1989 (ETRS89) is the standard coordinate system for Europe (EPSG:4258).

- The most popular projected CRS in the Netherlands is ‘Stelsel van de Rijksdriehoeksmeting (RD)’ registered in EPSG as ‘Amersfoort / RD New’ (EPSG:28992).

The main parameters of each CRS are the following:

- Datum is a model of the shape of the Earth, which specifies how a coordinate system is linked to the Earth, e.g. how to define the origin of the coordinate axis – where (0,0) is. It has angular units (degrees).

- Projection is mathematical transformation of the angular measurements on the Earth to linear units (e.g. meters) on a flat surface (paper or a computer screen).

- Additional parameters, such as a definition of the centre of the map, are often necessary to create the full CRS.

If you work with data for the Netherlands, you will most likely encounter the two CRS shown in the table below, namely the WGS 84 and Amersfoort / RD New. While WGS 84 is used for data for countries worldwide (for example, for OpenStreetMap data), Amersfoort / RD New is a Dutch local CRS. For other countries, other local CRS are available.

| WGS 84 (EPSG:4326) | Amersfoort / RD New (EPSG:28992) | |

|---|---|---|

| Definition | Dynamic (relies on a datum which is not plate-fixed) | Static (relies on a datum which is plate-fixed) |

| Celestial body | Earth | Earth |

| Ellipsoid | WGS-84 | Bessel 1841 |

| Prime meridian | International Reference Meridian | Greenwich |

| Datum | World Geodetic System 1984 ensemble | Amersfoort |

| Projection | Geographic (uses latitude and longitude for coordinates) | Projected (uses meters for coordinates) |

| Method | Lat/long (Geodetic alias) | Oblique Stereographic Alternative |

| Units | Degrees | Meters |

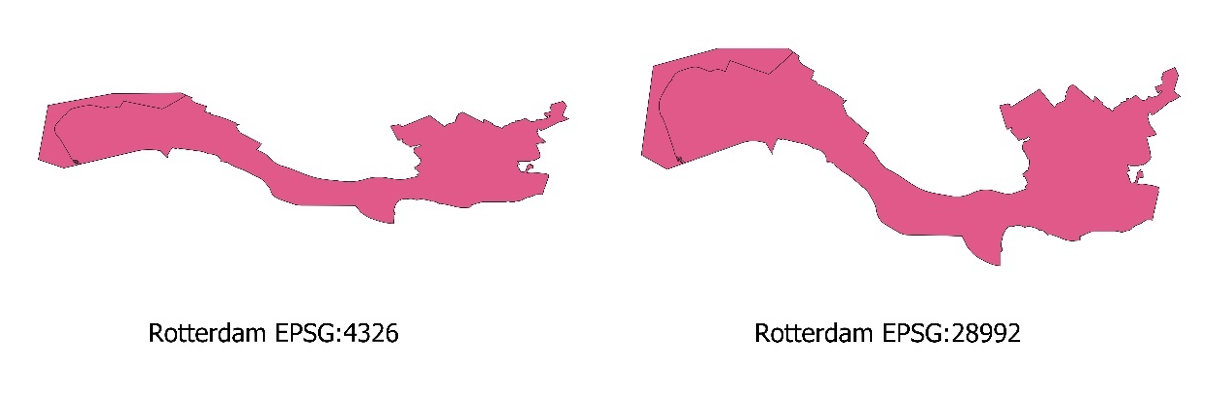

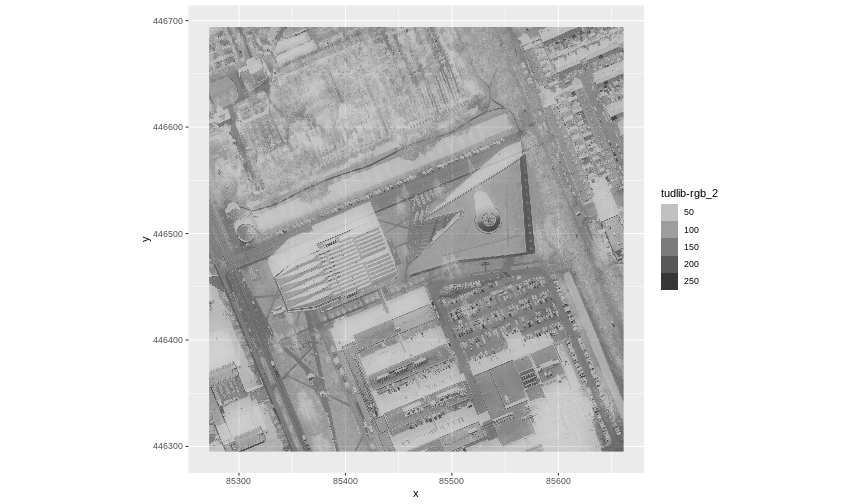

The figure below shows the same city (Rotterdam) in these two CRS.

In addition to using different CRS, these two maps of Rotterdam also have different scales.

Map scale

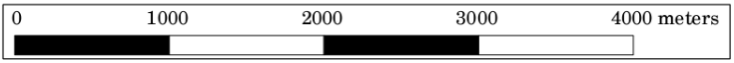

Map scale measures the ratio between distance on a map and the corresponding distance on the ground. For example, on a 1:100 000 scale map, 1cm on the map equals 1km (100 000 cm) on the ground. Map scale can be expressed in the following three ways:

| Verbal: | 1 centimetre represents 250 meters |

| Fraction: | 1:25000 |

| Graphic: |  |

Where is the scale bar?

Note that the maps presented in this lesson do not use a scale bar. Instead, plot axes will serve that purpose.

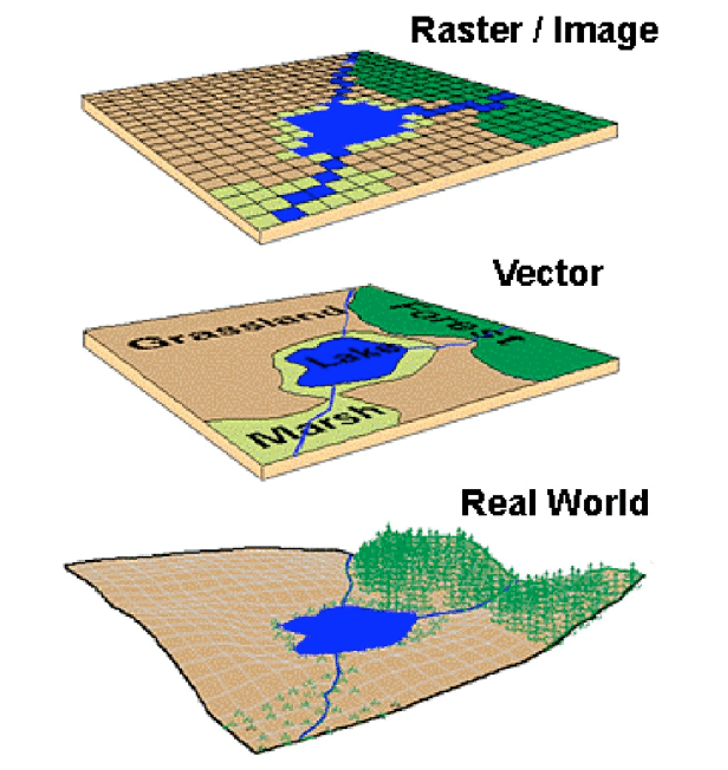

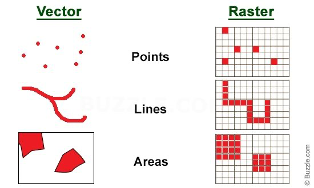

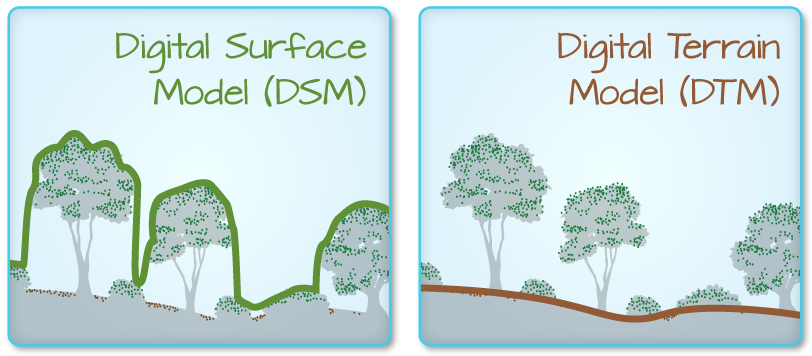

Types of geospatial data

The map of Rotterdam in the figure above shows the area of the city as a discrete feature with precise boundaries. This type of data is called vector. Vector data can have the form of points, lines and polygons (areas).

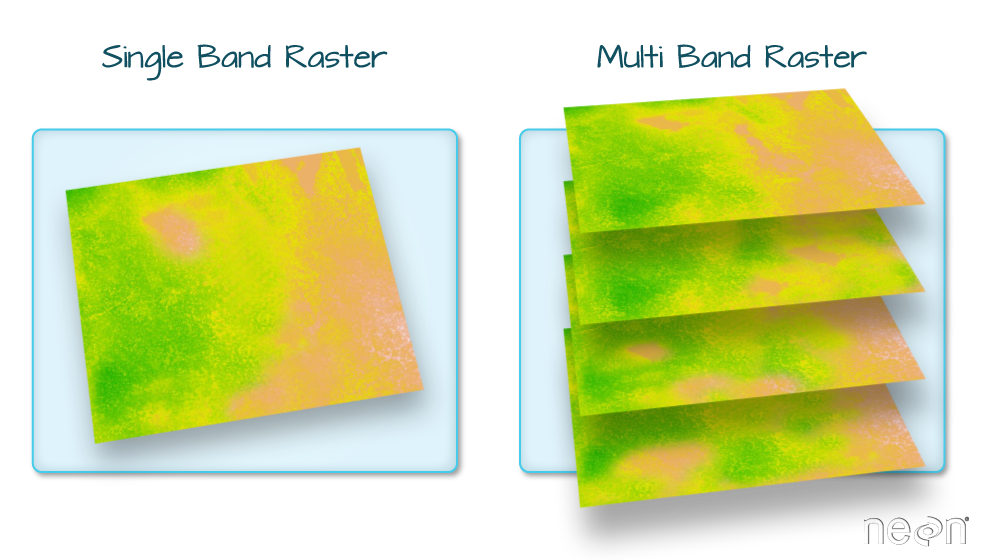

We can also represent geographical features on the Earth as continuous phenomena and images of the Earth. This type of data is called raster. The figure below shows the two types of geospatial data which can be used to represent the real world, namely vector and raster.

Disambiguating vectors

Vector data in geospatial analysis is not the same as vectors in R. In R, vectors are one-dimensional arrays of elements of the same type, while geospatial vector data is a type of data that represents discrete features with precise boundaries.

Challenge: CRS for calculating areas

You want to investigate which European country has the largest urban area. Which CRS will you use?

- EPSG:4326

- EPSG:28992

- EPSG:3035

Hint: Go to https://epsg.io/ or https://epsg.org/search/by-name and check properties of the given CRS, such as datum, type of map projection, units of measure etc.

Correct answer: c. EPSG:3035

Challenge: CRS for calculating shortest paths

You want to calculate the shortest path between two buildings in Delft. Which CRS will you use?

- EPSG:4326

- EPSG:28992

- EPSG:3035

Hint: Go to https://epsg.io/ or https://epsg.org/search/by-name and check properties of the given CRS, such as datum, type of map projection, units of measure etc.

Correct answer: b. EPSG:28992

References

Knippers, R. (2009): Geometric aspects of mapping. International Institute for Geo-Information Science and Earth Observation (ITC), Enschede. https://kartoweb.itc.nl/geometrics/ (Accessed 22-01-2024)

Saab, D. J. (2003). Conceptualizing space: Mapping schemas as meaningful representations. Unpublished Master’s Thesis, Lesley University, Cambridge, MA, http://www.djsaab.info/thesis/djsaab_thesis.pdf.

United Nations Statistics Division and International Cartographic Association (2012): 3. Plane rectangular coordinate systems – A) The ellipsoid / geoid. https://unstats.un.org/unsd/geoinfo/ungegn/docs/_data_icacourses/_HtmlModules/_Selfstudy/S06/S06_03a.html (Accessed 22-01-2024)

Van der Marel, H. (2014). Reference systems for surveying and mapping. Lecture notes. Faculty of Civil Engineering and Geosciences, Delft University of Technology, Delft, The Netherlands. https://gnss1.tudelft.nl/pub/vdmarel/reader/CTB3310_RefSystems_1-2a_print.pdf (Accessed 22-01-2024)

Useful resources

Campbell, J., Shin, M. E. (2011). Essentials of Geographic Information Systems. Textbooks. 2. https://digitalcommons.liberty.edu/textbooks/2 (Accessed 22-01-2024)

Data Carpentry (2023): Introduction to Geospatial Concepts. Coordinate Reference Systems. https://datacarpentry.org/organization-geospatial/03-crs.html (Accessed 22-01-2024)

GeoRepository (2024): EPSG Geodetic Parameter Dataset https://epsg.org/home.html (Accessed 22-01-2024)

Klokan Technologies GmbH (2022) https://epsg.io/ (Accessed 22-01-2024)

United Nations Statistics Division and International Cartographic Association (2012b): UNGEGN-ICA webcourse on Toponymy. https://unstats.un.org/unsd/geoinfo/ungegn/docs/_data_icacourses/2012_Home.html (Accessed 22-01-2024)

Each location on the Earth has its geographical latitude and longitude, which can be transformed on a plane using a map projection.

Depending on the research question, we need a global, regional, or local CRS with suitable properties such as the least possible distortion and an appropriate measurement unit.

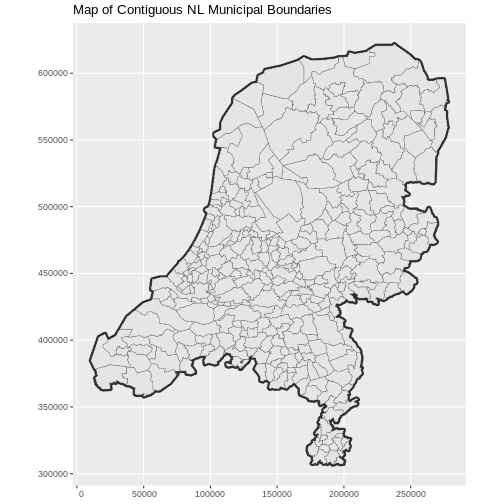

Content from Open and Plot Vector Layers

Last updated on 2026-02-10 | Edit this page

Overview

Questions

- How can I read, examine and visualize point, line and polygon vector data in R?

Objectives

After completing this episode, participants should be able to…

- Differentiate between point, line, and polygon vector data.

- Load vector data into R.

- Access the attributes of a vector object in R.

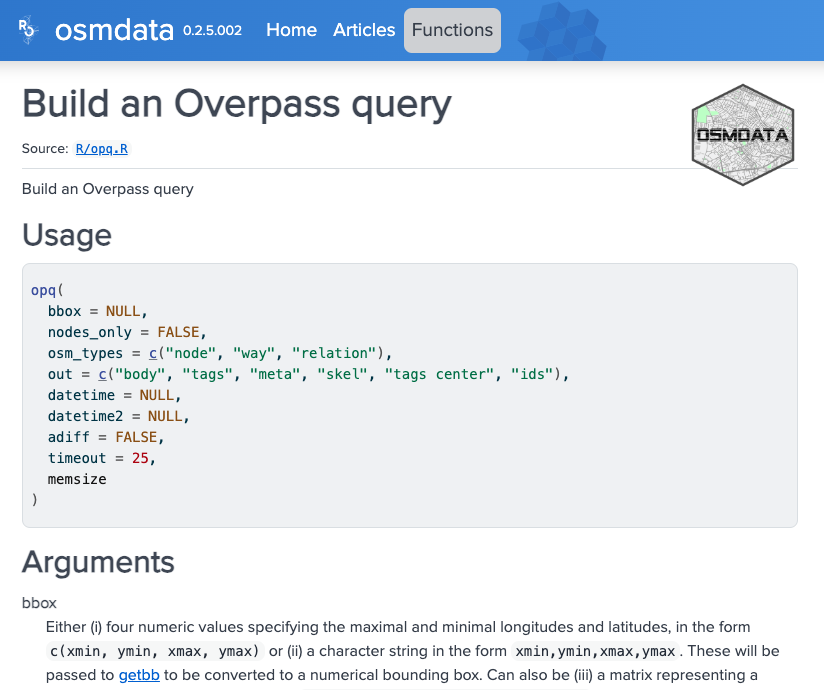

In this lesson you will work with the sf package. Note

that the sf package has some external dependencies, namely

GEOS, PROJ.4, GDAL and UDUNITS, which need to be installed beforehand.

Before starting the lesson, follow the workshop setup instructions for the installation of

sf and its dependencies.

First we need to load the packages we will use in this lesson. We

will use the tidyverse package with which you are already

familiar from the previous lesson. In addition, we need to load the sf package for

working with spatial vector data.

R

library(tidyverse) # wrangle, reshape and visualize data

library(sf) # work with spatial vector data

The ‘sf’ package

sf stands for Simple Features which is a standard

defined by the Open Geospatial Consortium for storing and accessing

geospatial vector data. Read more about simple features and its

implementation in R here.

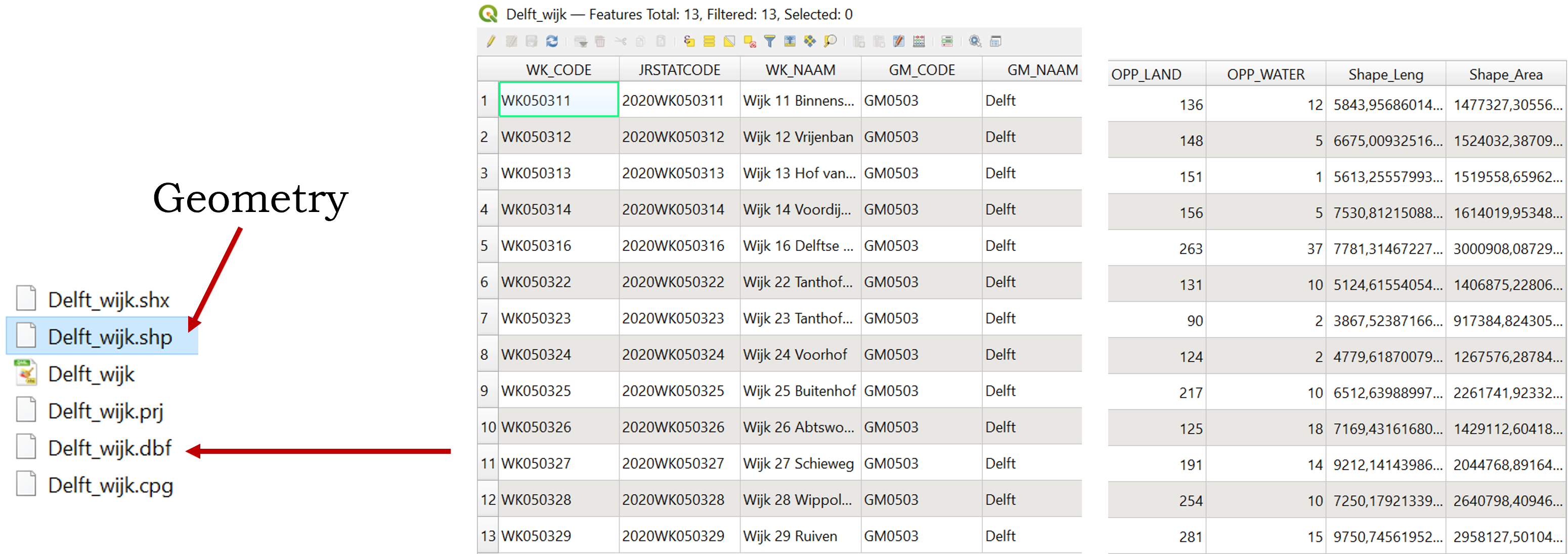

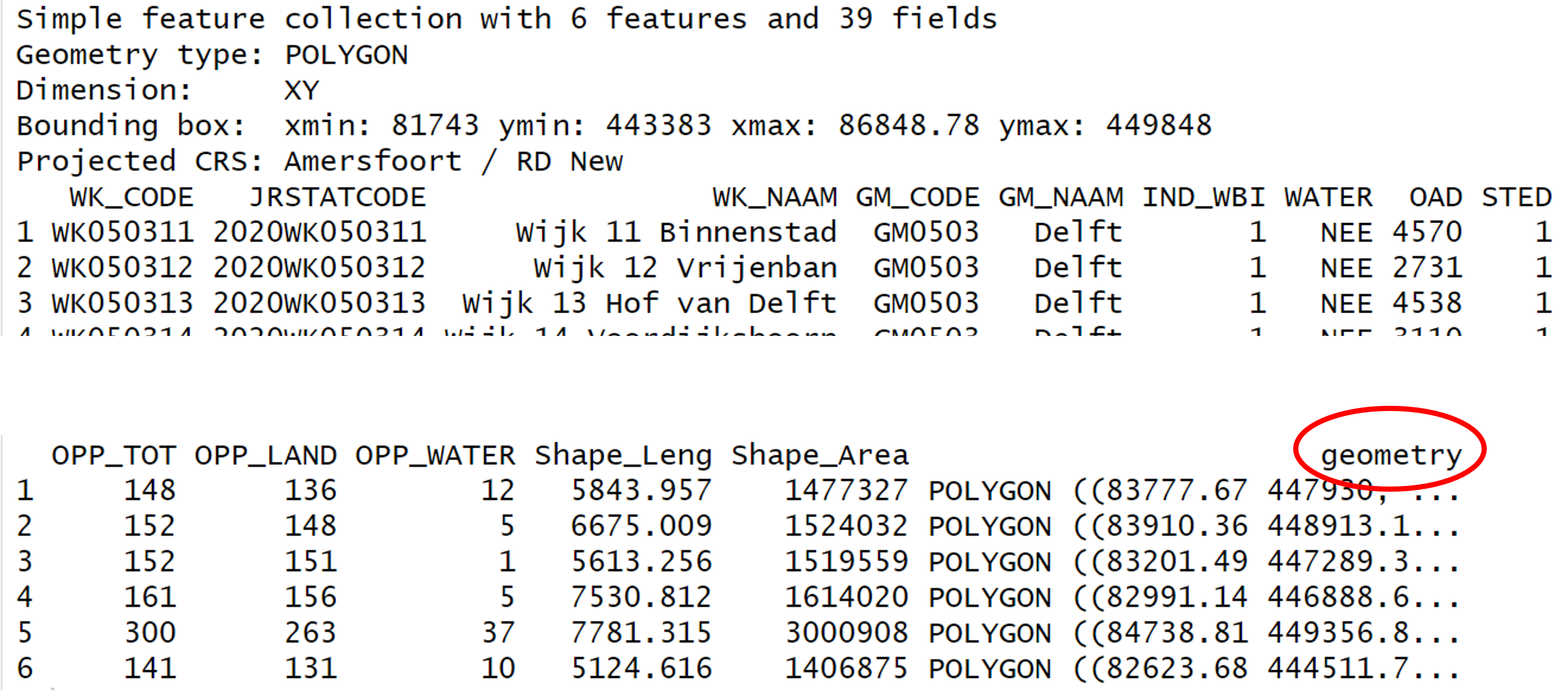

Geometry in QGIS and in R

You may be familiar with GIS software using graphical interfaces like

QGIS. In QGIS, you do not see the geometry in the Attribute Table but it

is directly displayed in the map view. In R, however, the geometry is

stored in a column called geometry.

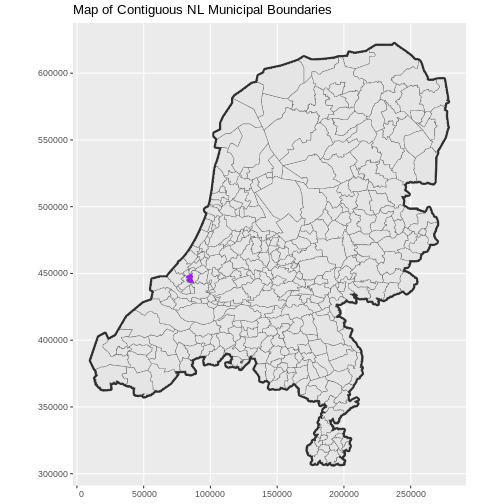

Import shapefiles

Let’s start by opening a shapefile. Shapefiles are a common file

format to store spatial vector data used in GIS software. Note that a

shapefile consists of multiple files and it is important to keep them

all together in the same location. We will read a shapefile with the

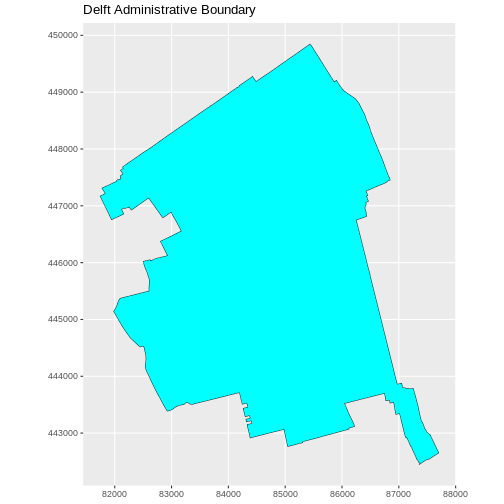

administrative boundary of Delft with the function

st_read() from the sf package.

R

boundary_Delft <- st_read("data/delft-boundary.shp", quiet = TRUE)

All ‘sf’ functions start with ‘st_’

Note that all functions from the sf package start with

the standard prefix st_ which stands for Spatial Type. This

is helpful in at least two ways:

- it allows for easy autocompletion of function names in RStudio, and

- it makes the interaction with or translation to/from software using the simple features standard like PostGIS easy.

Shapefiles vs. GeoPackage

Shapefiles are increasingly being replaced by more modern formats like GeoPackage. An advantage of GeoPackage is that it is a single file that can store multiple layers and attributes, whereas shapefiles consist of multiple files. However, shapefiles are still widely used and are a good starting point for learning about spatial data.

Spatial Metadata

By default (with quiet = FALSE), the

st_read() function provides a message with a summary of

metadata about the file that was read in.

R

st_read("data/delft-boundary.shp")

OUTPUT

Reading layer `delft-boundary' from data source

`/home/runner/work/r-geospatial-urban/r-geospatial-urban/site/built/data/delft-boundary.shp'

using driver `ESRI Shapefile'

Simple feature collection with 1 feature and 1 field

Geometry type: POLYGON

Dimension: XY

Bounding box: xmin: 4.320218 ymin: 51.96632 xmax: 4.407911 ymax: 52.0326

Geodetic CRS: WGS 84To examine the metadata in more detail, we can use other, more

specialised, functions from the sf package. The

st_geometry_type() function, for instance, gives us

information about the geometry type, which in this case is

POLYGON.

R

st_geometry_type(boundary_Delft)

OUTPUT

[1] POLYGON

18 Levels: GEOMETRY POINT LINESTRING POLYGON MULTIPOINT ... TRIANGLEGeometry types

The sf package supports the following common geometry

types: POINT, LINESTRING,

POLYGON, MULTIPOINT,

MULTILINESTRING, MULTIPOLYGON,

GEOMETRYCOLLECTION. More information about support for

these and other geometry types can be found in the sf package

documentation.

The st_crs() function returns the coordinate reference

system (CRS) used by the shapefile, which in this case is

WGS 84 and has the unique reference code

EPSG: 4326.

R

st_crs(boundary_Delft)

OUTPUT

Coordinate Reference System:

User input: WGS 84

wkt:

GEOGCRS["WGS 84",

DATUM["World Geodetic System 1984",

ELLIPSOID["WGS 84",6378137,298.257223563,

LENGTHUNIT["metre",1]]],

PRIMEM["Greenwich",0,

ANGLEUNIT["degree",0.0174532925199433]],

CS[ellipsoidal,2],

AXIS["latitude",north,

ORDER[1],

ANGLEUNIT["degree",0.0174532925199433]],

AXIS["longitude",east,

ORDER[2],

ANGLEUNIT["degree",0.0174532925199433]],

ID["EPSG",4326]]Examining the output of ‘st_crs()’

As the output of st_crs() can be long, you can use

$Name and $epsg after the crs()

call to extract the projection name and EPSG code respectively.

R

st_crs(boundary_Delft)$Name

OUTPUT

[1] "WGS 84"R

st_crs(boundary_Delft)$epsg

OUTPUT

[1] 4326The $ operator is used to extract a specific part of an

object. We used it in a previous

episode to subset a data frame by column name. In this case, it is

used to extract named elements stored in a crs object. For

more information, see the

documentation of the st_crs function.

The st_bbox() function shows the extent of the

layer.

R

st_bbox(boundary_Delft)

OUTPUT

xmin ymin xmax ymax

4.320218 51.966316 4.407911 52.032599 As WGS 84 is a geographic CRS, the

extent of the shapefile is displayed in degrees. We need a

projected CRS, which in the case of the Netherlands is

the Amersfoort / RD New projection. To reproject our

shapefile, we will use the st_transform() function. For the

crs argument we can use the EPSG code of the CRS we want to

use, which is 28992 for the Amersfort / RD New

projection. To check the EPSG code of any CRS, we can check this

website: https://epsg.io/

R

boundary_Delft <- st_transform(boundary_Delft, crs = 28992)

st_crs(boundary_Delft)$Name

OUTPUT

[1] "Amersfoort / RD New"R

st_crs(boundary_Delft)$epsg

OUTPUT

[1] 28992Notice that the bounding box is measured in meters after the

transformation. The $units_gdal named element confirms that

the new CRS uses metric units.

R

st_bbox(boundary_Delft)

OUTPUT

xmin ymin xmax ymax

81743.00 442446.21 87703.78 449847.95 R

st_crs(boundary_Delft)$units_gdal

OUTPUT

[1] "metre"We confirm the transformation by examining the reprojected shapefile.

R

boundary_Delft

OUTPUT

Simple feature collection with 1 feature and 1 field

Geometry type: POLYGON

Dimension: XY

Bounding box: xmin: 81743 ymin: 442446.2 xmax: 87703.78 ymax: 449848

Projected CRS: Amersfoort / RD New

osm_id geometry

1 324269 POLYGON ((87703.78 442651, ...More about CRS

Read more about Coordinate Reference Systems in the previous episode. We will also practice transformation between CRS in Handling Spatial Projection & CRS.

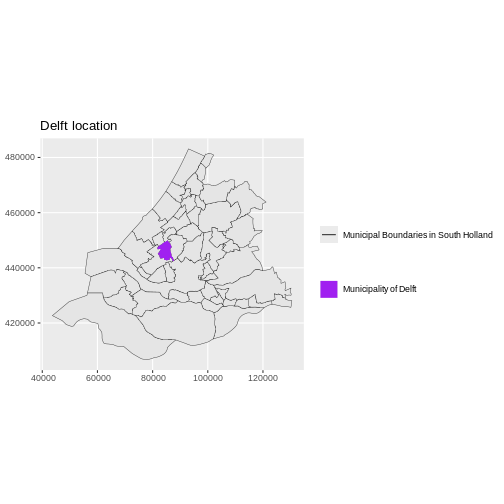

Plot a vector layer

Now, let’s plot this shapefile. You are already familiar with the

ggplot2 package from Introduction to Visualisation.

ggplot2 has special geom_ functions for

spatial data. We will use the geom_sf() function for

sf data. We use coord_sf() to ensure that the

coordinates shown on the two axes are displayed in meters.

R

ggplot(data = boundary_Delft) +

geom_sf(size = 3, color = "black", fill = "cyan1") +

labs(title = "Delft Administrative Boundary") +

coord_sf(datum = st_crs(28992)) # displays the axes in meters

Challenge: Import line and point vector layers

Read in delft-streets.shp and

delft-leisure.shp and assign them to

lines_Delft and points_Delft respectively.

Answer the following questions:

- What is the CRS and extent for each object?

- Do the files contain points, lines, or polygons?

- How many features are in each file?

R

lines_Delft <- st_read("data/delft-streets.shp")

points_Delft <- st_read("data/delft-leisure.shp")

We can check the type of type of geometry with the

st_geometry_type() function. lines_Delft

contains "LINESTRING" geometry and

points_Delft is made of "POINT"

geometries.

R

st_geometry_type(lines_Delft)[1]

OUTPUT

[1] LINESTRING

18 Levels: GEOMETRY POINT LINESTRING POLYGON MULTIPOINT ... TRIANGLER

st_geometry_type(points_Delft)[2]

OUTPUT

[1] POINT

18 Levels: GEOMETRY POINT LINESTRING POLYGON MULTIPOINT ... TRIANGLEBoth lines_Delft and points_Delft are in

EPSG:28992.

R

st_crs(lines_Delft)$epsg

OUTPUT

[1] 28992R

st_crs(points_Delft)$epsg

OUTPUT

[1] 28992When looking at the bounding boxes with the st_bbox()

function, we see the spatial extent of the two objects in a projected

CRS using meters as units. lines_Delft() and

points_Delft have similar extents.

R

st_bbox(lines_Delft)

OUTPUT

xmin ymin xmax ymax

81759.58 441223.13 89081.41 449845.81 R

st_bbox(points_Delft)

OUTPUT

xmin ymin xmax ymax

81863.21 442621.15 87370.15 449345.08 - Metadata for vector layers include geometry type, CRS, and extent

and can be examined with the

sffunctionsst_geometry_type(),st_crs(), andst_bbox(), respectively. - Load spatial objects into R with the

sffunctionst_read(). - Spatial objects can be plotted directly with

ggplot2using thegeom_sf()function. No need to convert to a data frame.

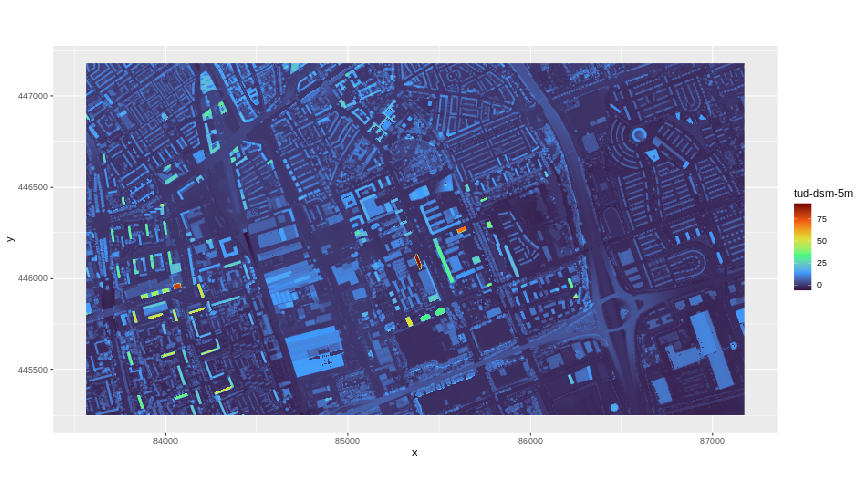

Content from Explore and plot by vector layer attributes

Last updated on 2026-02-10 | Edit this page

Overview

Questions

- How can I examine the attributes of a vector layer?

Objectives

After completing this episode, participants should be able to…

Query attributes of a vector object.

Subset vector objects using specific attribute values.

Plot a vector feature, coloured by unique attribute values.

Query Vector Feature Metadata

Let’s have a look at the content of the loaded data, starting with

lines_Delft. In essence, an "sf" object is a

data.frame with a “sticky” geometry column and some extra metadata, like

the CRS, extent and geometry type we examined earlier.

R

lines_Delft

OUTPUT

Simple feature collection with 11244 features and 2 fields

Geometry type: LINESTRING

Dimension: XY

Bounding box: xmin: 81759.58 ymin: 441223.1 xmax: 89081.41 ymax: 449845.8

Projected CRS: Amersfoort / RD New

First 10 features:

osm_id highway geometry

1 4239535 cycleway LINESTRING (86399.68 448599...

2 4239536 cycleway LINESTRING (85493.66 448740...

3 4239537 cycleway LINESTRING (85493.66 448740...

4 4239620 footway LINESTRING (86299.01 448536...

5 4239621 footway LINESTRING (86307.35 448738...

6 4239674 footway LINESTRING (86299.01 448536...

7 4310407 service LINESTRING (84049.47 447778...

8 4310808 steps LINESTRING (84588.83 447828...

9 4348553 footway LINESTRING (84527.26 447861...

10 4348575 footway LINESTRING (84500.15 447255...This means that we can examine and manipulate them as data frames.

For instance, we can look at the number of variables (columns in a data

frame) with ncol().

R

ncol(lines_Delft)

OUTPUT

[1] 3In the case of point_Delft those columns are

"osm_id", "highway" and

"geometry". We can check the names of the columns with the

function names().

R

names(lines_Delft)

OUTPUT

[1] "osm_id" "highway" "geometry"The geometry as a column

Note that in R the geometry is just another column and counts towards

the number returned by ncol(). This is different from GIS

software with graphical user interfaces, where the geometry is displayed

in a viewport not as a column in the attribute table.

We can also preview the content of the object by looking at the first

6 rows with the head() function, which in the case of an

sf object is similar to examining the object directly.

R

head(lines_Delft)

OUTPUT

Simple feature collection with 6 features and 2 fields

Geometry type: LINESTRING

Dimension: XY

Bounding box: xmin: 85107.1 ymin: 448400.3 xmax: 86399.68 ymax: 449076.2

Projected CRS: Amersfoort / RD New

osm_id highway geometry

1 4239535 cycleway LINESTRING (86399.68 448599...

2 4239536 cycleway LINESTRING (85493.66 448740...

3 4239537 cycleway LINESTRING (85493.66 448740...

4 4239620 footway LINESTRING (86299.01 448536...

5 4239621 footway LINESTRING (86307.35 448738...

6 4239674 footway LINESTRING (86299.01 448536...Explore values within one attribute

Using the $ operator, we can examine the content of a

single field of our lines object. Let’s have a look at the

highway field, a categorical variable stored in the

lines_Delft object as character. To avoid

displaying all 11244 values of highway, we will preview it

with the head() function:

R

head(lines_Delft$highway, 10)

OUTPUT

[1] "cycleway" "cycleway" "cycleway" "footway" "footway" "footway"

[7] "service" "steps" "footway" "footway" The first rows returned by the head() function do not

necessarily contain all unique values within the highway

field. To see all unique values, we can use the unique()

function. This function extracts all possible values of a character

variable. For the highway field, this returns all types of

roads stored in lines_Delft.

R

unique(lines_Delft$highway)

OUTPUT

[1] "cycleway" "footway" "service" "steps"

[5] "residential" "unclassified" "construction" "secondary"

[9] "busway" "living_street" "motorway_link" "tertiary"

[13] "track" "motorway" "path" "pedestrian"

[17] "primary" "bridleway" "trunk" "tertiary_link"

[21] "services" "secondary_link" "trunk_link" "primary_link"

[25] "platform" "proposed" NA Using factors in sf objects

R is also able to handle categorical variables called factors,

introduced in an earlier episode.

With factors, we can use the levels() function to show

unique values. To examine unique values of the highway

variable this way, we have to first transform it into a factor with the

factor() function:

R

factor(lines_Delft$highway) |> levels()

OUTPUT

[1] "bridleway" "busway" "construction" "cycleway"

[5] "footway" "living_street" "motorway" "motorway_link"

[9] "path" "pedestrian" "platform" "primary"

[13] "primary_link" "proposed" "residential" "secondary"

[17] "secondary_link" "service" "services" "steps"

[21] "tertiary" "tertiary_link" "track" "trunk"

[25] "trunk_link" "unclassified" Note that this way the values are shown by default in alphabetical

order and NAs are not displayed, whereas using

unique() returns unique values in the order of their

occurrence in the data frame and it also shows NA

values.

Challenge: Attributes for different spatial classes

Explore the attributes associated with the point_Delft

spatial object.

- How many fields does it have?

- What types of leisure points do the points represent? Give three examples.

- Which of the following is NOT a field of the

point_Delftobject?

-

locationB)leisureC)osm_id

- To find the number of fields, we use the

ncol()function:

R

ncol(point_Delft)

OUTPUT

[1] 3- The types of leisure point are in the column named

leisure.

Using the head() function which displays 6 rows by

default, we only see two values and NAs.

R

head(point_Delft)

OUTPUT

Simple feature collection with 6 features and 2 fields

Geometry type: POINT

Dimension: XY

Bounding box: xmin: 83839.59 ymin: 443827.4 xmax: 84967.67 ymax: 447475.5

Projected CRS: Amersfoort / RD New

osm_id leisure geometry

1 472312297 picnic_table POINT (84144.72 443827.4)

2 480470725 marina POINT (84967.67 446120.1)

3 484697679 <NA> POINT (83912.28 447431.8)

4 484697682 <NA> POINT (83895.43 447420.4)

5 484697691 <NA> POINT (83839.59 447455)

6 484697814 <NA> POINT (83892.53 447475.5)We can increase the number of rows with the n argument

(e.g., head(n = 10) to show 10 rows) until we see at least

three distinct values in the leisure column. Note that printing an

sf object will also display the first 10 rows.

R

# you might be lucky to see three distinct values

head(point_Delft, 10)

OUTPUT

Simple feature collection with 10 features and 2 fields

Geometry type: POINT

Dimension: XY

Bounding box: xmin: 82485.72 ymin: 443827.4 xmax: 85385.25 ymax: 448341.3

Projected CRS: Amersfoort / RD New

osm_id leisure geometry

1 472312297 picnic_table POINT (84144.72 443827.4)

2 480470725 marina POINT (84967.67 446120.1)

3 484697679 <NA> POINT (83912.28 447431.8)

4 484697682 <NA> POINT (83895.43 447420.4)

5 484697691 <NA> POINT (83839.59 447455)

6 484697814 <NA> POINT (83892.53 447475.5)

7 549139430 marina POINT (84479.99 446823.5)

8 603300994 sports_centre POINT (82485.72 445237.5)

9 883518959 sports_centre POINT (85385.25 448341.3)

10 1148515039 playground POINT (84661.3 446818)We have our answer (sports_centre is the third value),

but in general this is not a good approach as the first rows might still

have many NAs and three distinct values might still not be

present in the first n rows of the data frame. To remove

NAs, we can use the function na.omit() on the

leisure column to remove NAs completely. Note that we use

the $ operator to examine the content of a single

variable.

R

# this is better

na.omit(point_Delft$leisure) |> head()

OUTPUT

[1] "picnic_table" "marina" "marina" "sports_centre"

[5] "sports_centre" "playground" To show only unique values, we can use the levels()

function on a factor to only see the first occurrence of each distinct

value. Note NAs are dropped in this case and that we get

the first three of the unique alphabetically ordered values.

R

# this is even better

factor(point_Delft$leisure) |>

levels() |>

head(n = 3)

OUTPUT

[1] "dance" "dog_park" "escape_game"- To see a list of all fields names and answer the last question, we

can use the

names()function.

R

names(point_Delft)

OUTPUT

[1] "osm_id" "leisure" "geometry"-

locationis not a field of thepoint_Delftobject.

Subset features

We can use the filter() function to select a subset of

features from a spatial object, just like with data frames. Let’s select

only cycleways from our street data.

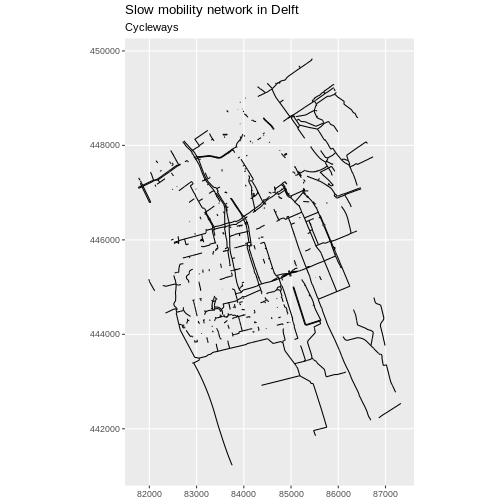

R

cycleway_Delft <- lines_Delft |>

filter(highway == "cycleway")

Our subsetting operation reduces the number of features from 11244 to 1397.

R

nrow(lines_Delft)

OUTPUT

[1] 11244R

nrow(cycleway_Delft)

OUTPUT

[1] 1397This can be useful, for instance, to calculate the total length of

cycleways. For that, we first need to calculate the length of each

segment with st_length()

R

cycleway_Delft <- cycleway_Delft |>

mutate(length = st_length(geometry))

cycleway_Delft |>

summarise(total_length = sum(length))

OUTPUT

Simple feature collection with 1 feature and 1 field

Geometry type: MULTILINESTRING

Dimension: XY

Bounding box: xmin: 81759.58 ymin: 441227.3 xmax: 87326.76 ymax: 449834.5

Projected CRS: Amersfoort / RD New

total_length geometry

1 115550.1 [m] MULTILINESTRING ((86399.68 ...Now we can plot only the cycleways.

R

ggplot(data = cycleway_Delft) +

geom_sf() +

labs(

title = "Slow mobility network in Delft",

subtitle = "Cycleways"

) +

coord_sf(datum = st_crs(28992))

Challenge

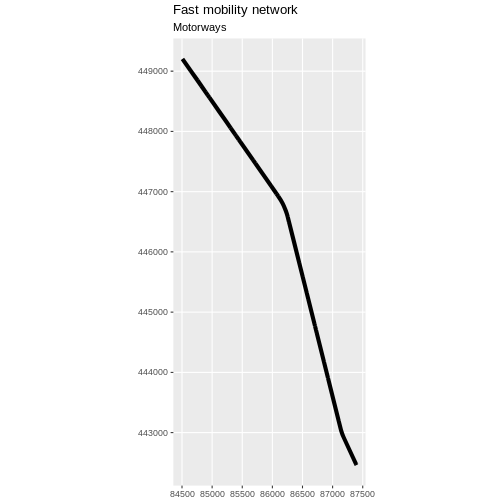

Challenge: Now with motorways

- Create a new object that only contains the motorways in Delft.

- How many features does the new object have?

- What is the total length of motorways?

- Plot the motorways.

- To create the new object, we first need to see which value of the

highwaycolumn holds motorways. There is a value calledmotorway.

R

unique(lines_Delft$highway)

OUTPUT

[1] "cycleway" "footway" "service" "steps"

[5] "residential" "unclassified" "construction" "secondary"

[9] "busway" "living_street" "motorway_link" "tertiary"

[13] "track" "motorway" "path" "pedestrian"

[17] "primary" "bridleway" "trunk" "tertiary_link"

[21] "services" "secondary_link" "trunk_link" "primary_link"

[25] "platform" "proposed" NA We extract only the features with the value

motorway.

R

motorway_Delft <- lines_Delft |>

filter(highway == "motorway")

motorway_Delft

OUTPUT

Simple feature collection with 48 features and 2 fields

Geometry type: LINESTRING

Dimension: XY

Bounding box: xmin: 84501.66 ymin: 442458.2 xmax: 87401.87 ymax: 449205.9

Projected CRS: Amersfoort / RD New

First 10 features:

osm_id highway geometry

1 7531946 motorway LINESTRING (87395.68 442480...

2 7531976 motorway LINESTRING (87401.87 442467...

3 46212227 motorway LINESTRING (86103.56 446928...

4 120945066 motorway LINESTRING (85724.87 447473...

5 120945068 motorway LINESTRING (85710.31 447466...

6 126548650 motorway LINESTRING (86984.12 443630...

7 126548651 motorway LINESTRING (86714.75 444772...

8 126548653 motorway LINESTRING (86700.23 444769...

9 126548654 motorway LINESTRING (86716.35 444766...

10 126548655 motorway LINESTRING (84961.78 448566...- There are 48 features with the value

motorway.

R

nrow(motorway_Delft)

OUTPUT

[1] 48- The total length of motorways is 14877.4361477941.

R

motorway_Delft_length <- motorway_Delft |>

mutate(length = st_length(geometry)) |>

select(everything(), geometry) |>

summarise(total_length = sum(length))

- Plot the motorways.

R

ggplot(data = motorway_Delft) +

geom_sf(linewidth = 1.5) +

labs(

title = "Fast mobility network",

subtitle = "Motorways"

) +

coord_sf(datum = st_crs(28992))

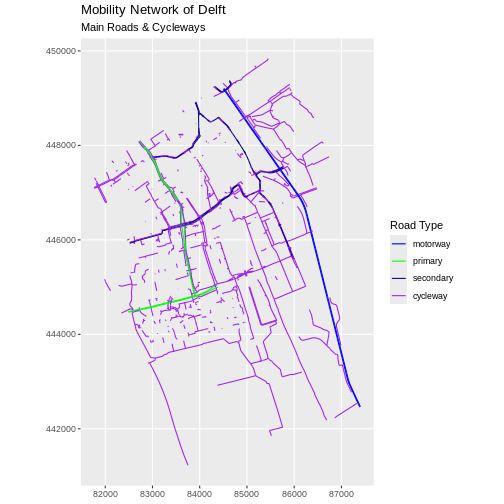

Customize plots

Let’s say that we want to color different road types with different colors and that we want to determine those colors.

R

unique(lines_Delft$highway)

OUTPUT

[1] "cycleway" "footway" "service" "steps"

[5] "residential" "unclassified" "construction" "secondary"

[9] "busway" "living_street" "motorway_link" "tertiary"

[13] "track" "motorway" "path" "pedestrian"

[17] "primary" "bridleway" "trunk" "tertiary_link"

[21] "services" "secondary_link" "trunk_link" "primary_link"

[25] "platform" "proposed" NA If we look at all the unique values of the highway field of our

street network we see more than 20 values. Let’s focus on a subset of

four values to illustrate the use of distinct colours. We filter the

roads that have one of the four given values "motorway",

"primary", "secondary", and

"cycleway". Note that we do this with the %in%

operator which is a more compact equivalent of a series of

== equality conditions joined by the | (or)

operator. We also make sure that the highway column is a factor

column.

R

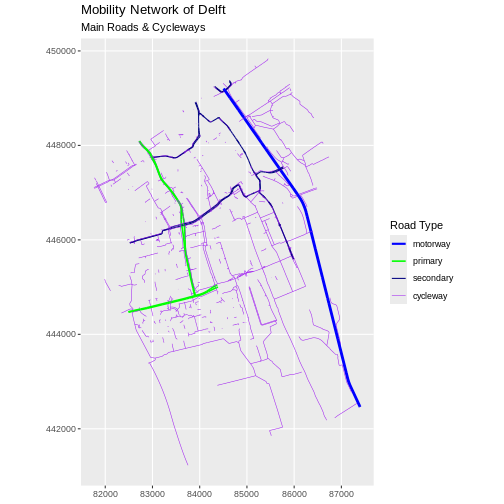

road_types <- c("motorway", "primary", "secondary", "cycleway")

lines_Delft_selection <- lines_Delft |>

filter(highway %in% road_types) |>

mutate(highway = factor(highway, levels = road_types))

Next we define the four colours we want to use, one for each type of

road in our vector object. Note that in R you can use named colours like

"blue", "green", "navy", and

"purple". If you are using RStudio, you will see the named

colours previewed in line. A full list of named colours can be listed

with the colors() function.

R

road_colors <- c("blue", "green", "navy", "purple")

We can use the defined colour palette in a ggplot.

R

ggplot(data = lines_Delft_selection) +

geom_sf(aes(color = highway)) +

scale_color_manual(values = road_colors) +

labs(

color = "Road Type",

title = "Mobility Network of Delft",

subtitle = "Main Roads & Cycleways"

) +

coord_sf(datum = st_crs(28992))

Challenge: Adjust line width

Follow the same steps to add custom line widths for every road type.

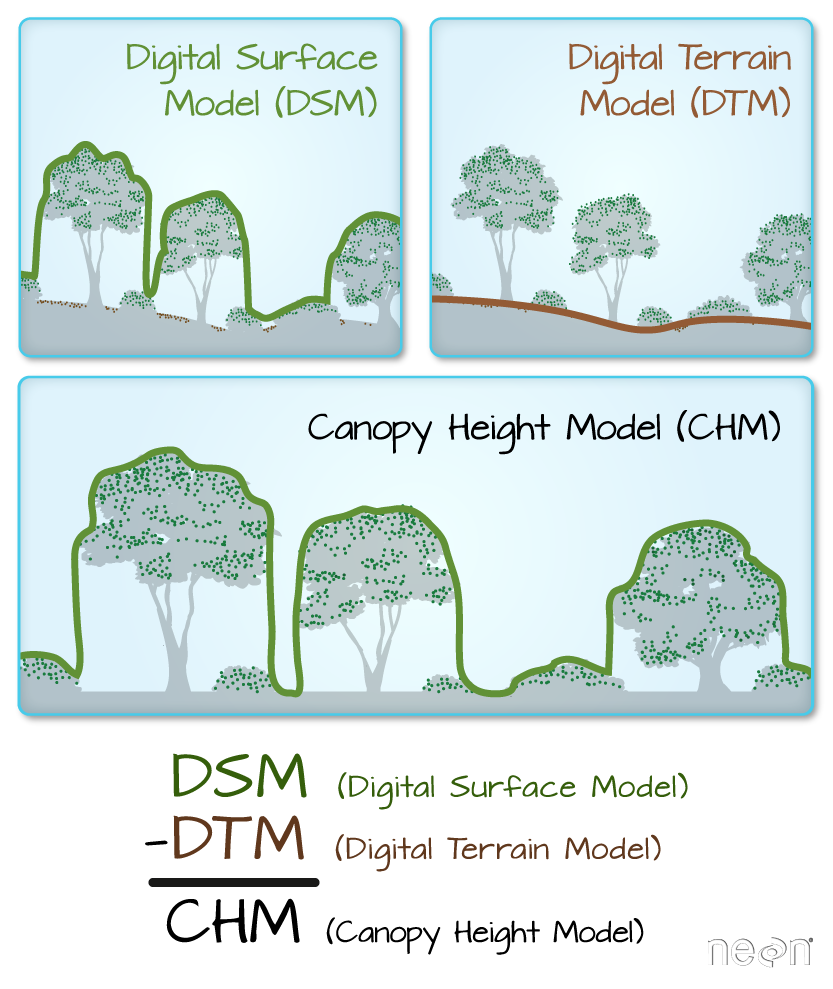

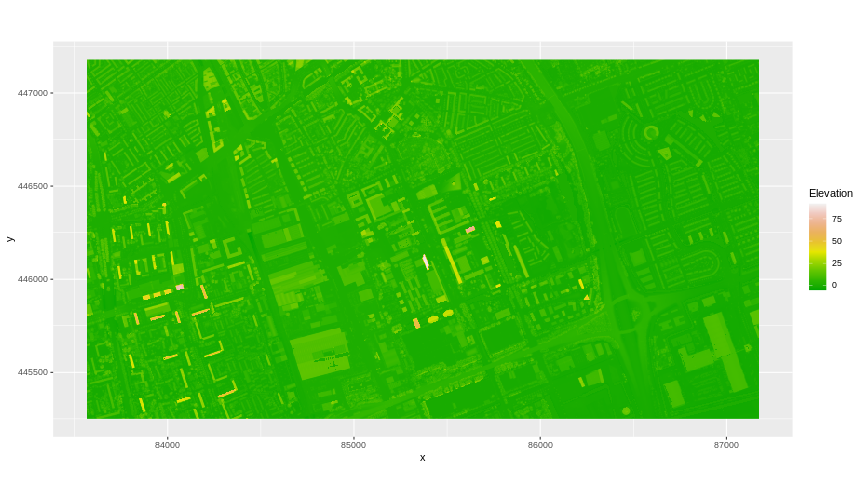

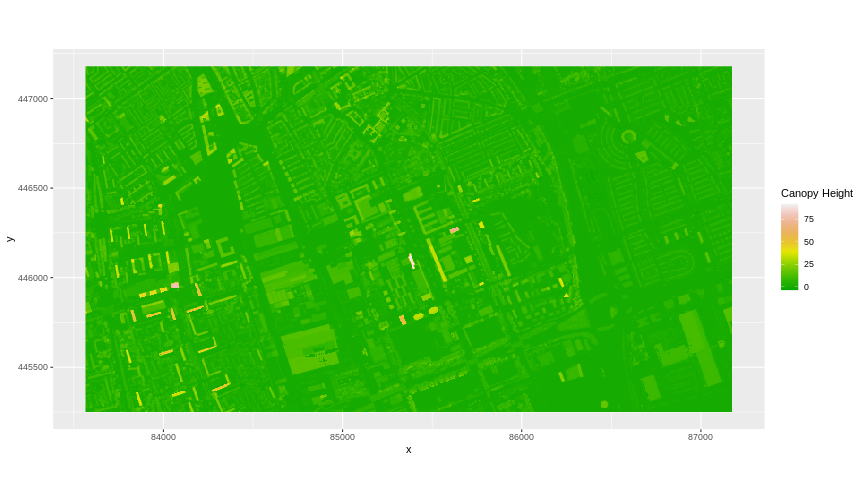

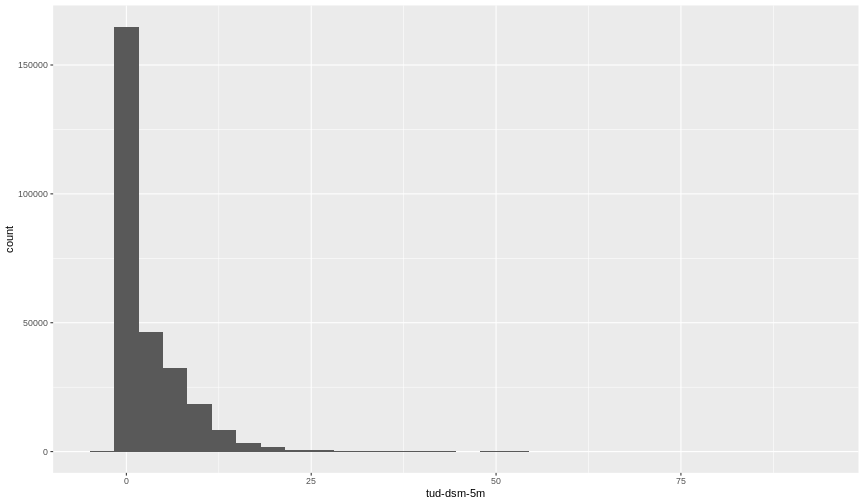

Assign the custom values