Content from Course introduction

Last updated on 2025-07-01 | Edit this page

Overview

Questions

- What is open and reproducible research?

- Why are these practices important, in particular in the context of software used to support such research?

Objectives

- Describe the principles of open and reproducible research and why they are of value in the research community

- Explain how the concept of reproducibility translates into practices for building better research software

- Setup machine with software and data used to teach this course

Software is fundamental to modern research - some of it would even be impossible without software. From short, thrown-together temporary scripts written to help with day-to-day research tasks, through an abundance of complex data analysis spreadsheets, to the hundreds of software engineers and millions of lines of code behind international efforts such as the Large Hadron Collider, there are few areas of research where software does not have a fundamental role.

This course teaches good practices and reproducible working methods that are agnostic of a programming language (although we will use Python code in our examples). It aims to provide researchers with the tools and knowledge to feel confident when writing good quality and sustainable software to support their research. Although the discussion will often focus on software developed in the context of research, most of the good practices introduced here are beneficial to software development more generally.

The lesson is particularly focused on one aspect of good (scientific) software development practice: improving software to enhance reproducibility. That is, enabling others to run our code and obtain the same results we did.

Why should I care about reproducibility?

Scientific transparency and rigor are key factors in research. Scientific methodology and results need to be published openly and replicated and confirmed by several independent parties. However, research papers often lack the full details required for independent reproduction or replication. Many attempts at reproducing or replicating the results of scientific studies have failed in a variety of disciplines ranging from psychology (The Open Science Collaboration (2015)) to cancer sciences (Errington et al (2021)). These are called the reproducibility and replicability crises - ongoing methodological crises in which the results of many scientific studies are difficult or impossible to repeat.

Reproducible research is a practice that ensures that researchers can repeat the same analysis multiple times with the same results. It offers many benefits to those who practice it:

- Reproducible research helps researchers remember how and why they performed specific tasks and analyses; this enables easier explanation of work to collaborators and reviewers.

- Reproducible research enables researchers to quickly modify analyses and figures - this is often required at all stages of research and automating this process saves loads of time.

- Reproducible research enables reusability of previously conducted tasks so that new projects that require the same or similar tasks become much easier and efficient by reusing or reconfiguring previous work.

- Reproducible research supports researchers’ career development by facilitating the reuse and citation of all research outputs - including both code and data.

- Reproducible research is a strong indicator of rigor, trustworthiness, and transparency in scientific research. This can increase the quality and speed of peer review, because reviewers can directly access the analytical process described in a manuscript. It increases the probability that errors are caught early on - by collaborators or during the peer-review process, helping alleviate the reproducibility crisis.

However, reproducible research often requires that researchers implement new practices and learn new tools. This course aims to teach some of these practices and tools pertaining to the use of software to conduct reproducible research.

Review the Reproducible Research Discussion for a more in-depth discussion of this topic.

Practices for building better research software

The practices we will cover for building better research software fall into three areas.

1. Things you can do with your own computing environment to enhance the software

- Using virtual development environments ensures your software can be developed and run consistently across different systems, making it easier for you and others to run, reuse, and extend your code.

2. Things you can do to improve the source code of the software itself

- Organising and structuring your code and project directory keeps your software clean, modular, and reusable, enhancing its readability, extensibility, and reusability.

- Following coding conventions and guides for your programming language ensures that others find it easy to read your code, reuse or extend it in their own examples and applications.

- Testing can save time spent on debugging and ensures that your code is correct and does what it is set out to do, giving you and others confidence in your code and the results it produces.

3. Things you can do to make the software easier for other people to use

- Using version control and collaboration platforms like GitHub, GitLab, and CodeBerg makes it easier to share code and work on it together.

- Fostering a community around your software and promoting collaboration helps to grow a user base for your software and contributes to its long-term sustainability.

- Providing clear and comprehensive documentation, including code comments, API specifications, setup guides, and usage instructions, ensures your software is easy to understand, use, and extend (by you and others).

- Accompanying your software with clear information about its licensing terms and how it should be cited ensures that others can reuse and adapt your code with confidence and that you receive credit when they do so.

Challenge

Individually,

- reflect on what practices (and tools) you are already using in your software development workflow,

- list at least 3 new practices or tools that you would like to start employing or using.

Write your reflections in the shared collaborative document.

Our research software project

You are going to follow a fairly typical experience of a new

researcher (e.g. a PhD student or a postdoc) joining a research group.

You were emailed some spacewalks data and analysis code bundled in the

spacewalks.zip archive, written by another group member who

worked on similar things but has since left. You need to be able to

install and run this code on your machine, check you can understand it

and then adapt it to your own project.

As part of the setup for this

course, you may have downloaded or been emailed the

spacewalks.zip archive. If not, you can download it now.

Save the spacewalks.zip archive to your home directory and

extract it - you should get a directory called

spacewalks.

The first thing you may want to do is inspect the content of the code and data you received. We will use VS Code for browsing, inspecting, modifying files and running our code.

VS Code is a very handy tool for software development and is used by

many researchers worldwide. It “understands” the syntax of many

different file types - for example Python, JSON, CSV, etc. - either

natively or via extensions that can be installed. To open our directory

spacewalks in VS Code – go to File -> Open

Folder and find spacewalks.

You may notice that the software project contains:

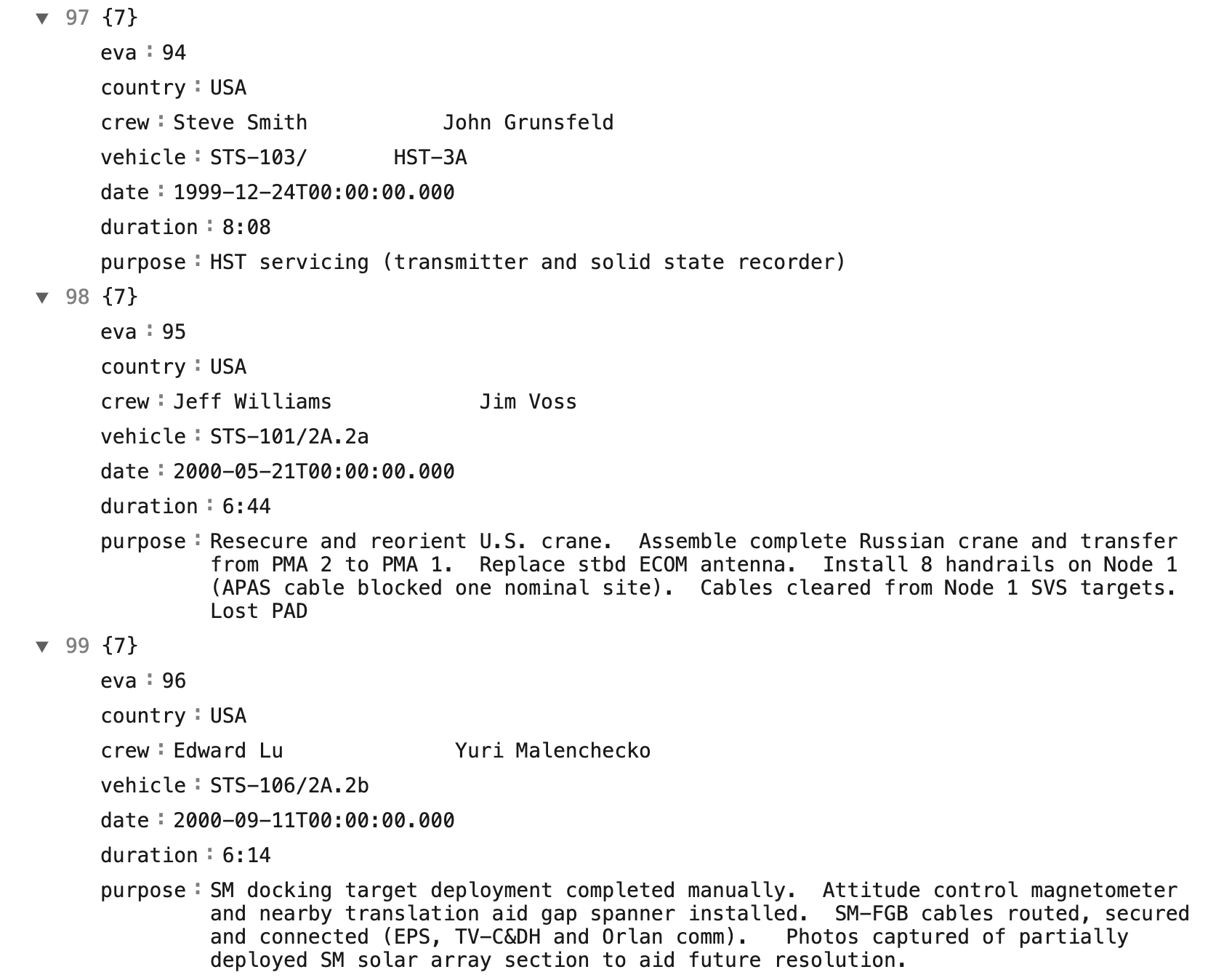

-

A JSON file called

data.json- a snippet of which is shown below - with data on extra-vehicular activities (EVAs, i.e. spacewalks) undertaken by astronauts and cosmonauts from 1965 to 2013 (data provided by NASA via its Open Data Portal). JSON data file snippet showing EVA/spacewalk data including EVA id, country, crew members, vehicle type, date of the spacewalk, duration, and purpose)

JSON data file snippet showing EVA/spacewalk data including EVA id, country, crew members, vehicle type, date of the spacewalk, duration, and purpose) -

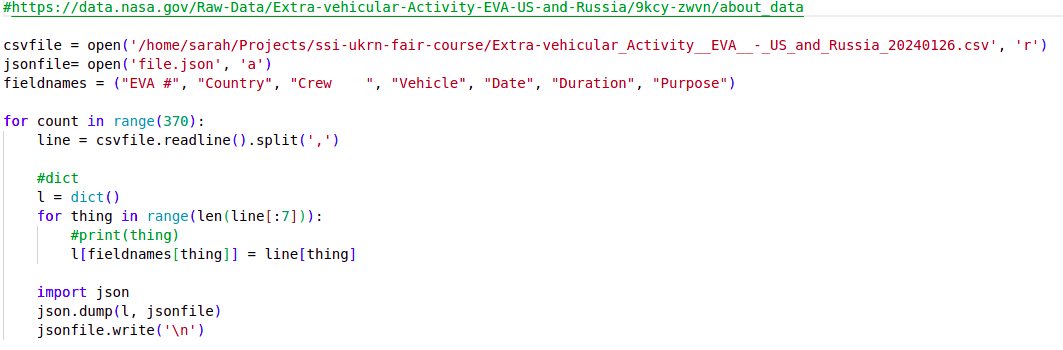

A Python script called

my code v2.pycontaining some analysis code. A first few lines of a Python script

A first few lines of a Python scriptThe code in the Python script does some common research tasks:

- Reads in the data from the JSON file

- Changes the data from one data format to another and saves to a file in the new format (CSV)

- Performs some calculations to generate summary statistics about the data

- Makes a plot to visualise the data

Read and understand data and code

Individually inspect the code and try and see if you can understand what the code is doing and how it is organised.

In the shared document, write down anything that you think is not “quite right”, not clear, is missing, or could be done better.

Below are some suggested questions to help you assess the code. These are not the only criteria on which you could evaluate the code and you may find other aspects to comment on.

- If these files were emailed to you, or sent on a chat platform, or handed to you on a memory stick, how easy would it be to find them again in 6 months, or 3 years?

- Can you understand the code? Does it make sense to you?

- Could you run the code on your platform/operating system (is there documentation that covers installation instructions)? What programs or libraries do you need to install to make it work (and which versions)? Are these commonly used tools in your field?

- Are you allowed to use the code in your own research? If you did, would your collaborators expect credit in some form (paper authorship, citation or acknowledgement)? Are you allowed to edit the files? Publish them? Share them with others?

- Is the code written in a way that allows you to easily modify or extend it? How easy would it be to change its parameters to calculate a different statistic, or run on a different input file?

This is a (non-exhaustive) list of things that could be fixed/improved with our code and data:

File and variable naming

- data (

data.json) and Python script (my code v2.py) files could have more descriptive names - Python script (

my code v2.py) should not contain blank spaces as it may cause problems when running the code from command line - variables (e.g.

w) should have more descriptive and meaningful names - version control is embedded in file name - there are better ways of keeping track of changes to code and its different versions

Code organisation and style

- import statements should be grouped at the top

- commenting and uncommenting code should not be used to direct the flow of execution / type of analysis being done

- the code lacks comments, documentation and explanations

- code structure could be improved to be more modular and not one monolithic piece of code - e.g. use functions for reusable units of functionality

- unused variables (e.g.

fieldnamesmeant to be used when saving data to CSV file) are polluting the code and confusing the person reading the code - spaces should not be used in column names as it can lead to error when reading the data in

Code content and correctness

- fixing the loop to 374 data entries is not reusable on other data files and would likely break if the data file changed

- running the code twice causes the program to fail as the result file from the previous run will exist (which the code does not check for) and the script will refuse to overwrite it

- the code does not specify the encoding when reading the data in, and we are also not sure what encoding the data was saved in originally

- how can we be confident the data analysis and plot that is produced as a result are correct?

Documentation

- there is no README documentation to orient the user

- there is no licence information to say how the code can be reused (which then means it cannot be reused at all)

- it is not clear what software dependencies the code has

- there are no installation instructions or instructions on how to run the code

As you have seen from the previous exercise - there are quite a few things that can be improved with this code. We will try to make this research software project a “bit better” for future use.

Let’s check your setup now to make sure you are ready for the rest of this course.

Check your setup

From a command line terminal on your operating system or within VS Code run the following commands to check you have installed all the tools listed in the Setup page and that are functioning correctly.

Checking the command line terminal:

$ date$ echo $SHELL$ pwd$ whoami

Checking Python:

$ python --version$ python3 --version$ which python$ which python3

Checking Git and GitHub:

$ git --help$ git config --list$ ssh -T git@github.com

Checking VS Code:

$ code$ code --list-extensions

The expected out put of each command is:

- Today’s date

-

bashorzsh- this tells you what shell language you are using. In this course we show examples in Bash. - Your “present working directory” or the folder where your shell is running

- Your username

- In this course we are using Python 3. If

python --versiongives you Python 2.x you may have two versions of Python installed on your computer and need to be careful which one you are using. - Use this command to be certain you are using Python version 3, not 2, if you have both installed.

- The file path to where the Python version you are calling is installed.

- If you have more than one version these should be different paths, if both 5. and 6. gave the same result then 7. and 8. should match as well.

- The help message explaining how to use the

gitcommand. - You should have

user.name,user.emailandcore.editorset in your Git configuration. Check that the editor listed is one you know how to use. - This checks if you have set up your connection to GitHub correctly.

If is says

permission deniedyou may need to look at the instructions for setting up SSH keys again on the Setup page. - This should open VSCode in your current working directory. macOS users may need to first open VS Code and add it to the PATH.

- You should have the extensions GitLens, Git Graph, Python, JSON and Excel Viewer installed to use in this course.

Further reading

We recommend the following resources for some additional reading on reproducible research:

- Reproducible research through reusable code is a one day course by the Netherlands eScience Center which discusses similar themes to this course, but in a shorter format.

- The Turing Way’s “Guide for Reproducible Research”

- A Beginner’s Guide to Conducting Reproducible Research, Jesse M. Alston, Jessica A. Rick, Bulletin of The Ecological Society of America 102 (2) (2021), https://doi.org/10.1002/bes2.1801

- “Ten reproducible research things” tutorial

- FORCE11’s FAIR 4 Research Software (FAIR4RS) Working Group

- “Good Enough Practices in Scientific Computing” course

- Reproducibility for Everyone’s (R4E) resources, community-led education initiative to increase adoption of open research practices at scale

- Training materials on different aspects of research software engineering (including open source, reproducibility, research software testing, engineering, design, continuous integration, collaboration, version control, packaging, etc.), compiled by the INTERSECT project

- Curated resources by the Framework for Open and Reproducible Research Training (FORRT)

Acknowledgements and references

The content of this course borrows from or references various work.

Content from Better start with a software project

Last updated on 2025-07-01 | Edit this page

Overview

Questions

- What is a version control system?

- Why is version control essential to building good software

- What does a standard version control workflow look like?

Objectives

- Set up version control for our software project to track changes to it

- Create self-contained commits using Git to incrementally save work

- Push new work from a local machine to a remote server on GitHub

In this episode, we will set up our new research software project using some good practices from the start. This will lay the foundation for long-term sustainability of our code, collaboration, and reproducibility.

This starts with following naming conventions for files, employing version control, and (in the next episode) setting up a virtual development environment with software dependencies to ensure the project can be more easily and reliably run, shared and maintained. Next (over the rest of the course) - adding tests, setting up automation (e.g. continuous integration), documenting software and including metadata such as licensing, authorship and citation will ensure the results our software produces can be trusted and others can build upon it with confidence.

Let’s begin by creating a new software project from our existing code, and start tracking changes to it with version control.

From script to software project

In the previous episode you have unzipped spacewalks.zip

into a directory spacewalks in your home directory. If you

have not opened the software directory in VS Code already – go to

File -> Open Folder and find

spacewalks.

We also need access to a command line terminal to type various commands. In VS Code start a new terminal via Terminal -> New Terminal (Windows users need to make sure the new terminal is “GitBash”; not “PowerShell” or “cmd”). Alternatively, you can work with a shell terminal directly (and not within VS Code), if you so prefer.

If you are not already inside this directory, from your command line terminal you can navigate to it and list its contents with:

BASH

cd ~/spacewalks

ls -la

total 272

drwx------@ 5 mbassan2 staff 160 26 Jun 11:35 .

drwx------@ 489 mbassan2 staff 15648 26 Jun 11:41 ..

drwxrwxr-x@ 4 mbassan2 staff 128 4 Apr 10:48 astronaut-data-analysis-old

-rw-rw-r--@ 1 mbassan2 staff 132981 4 Apr 10:48 data.json

-rw-rw-r--@ 1 mbassan2 staff 1518 4 Apr 10:48 my code v2.pyOver the rest of the course, we will transform a collection of these files into a well-structured software project that follows established good practices in research software engineering.

The first thing you may notice that our software project contains

folder astronout-data-analysis-old which presumably tries

to keep track of older versions of the code. There is a better way to do

that using version control tool, such as Git, and we can delete this

folder so it does not cause confusion:

Version control

Before we do any further changes to our software, we want to make sure we can keep a history of what changes we have done since we inherited the code from our colleague.

We can track changes with version control. Later on, we will store those changes on a remote server too – both for safe-keeping and to make them easier to share with others. In later episodes, we will also see how version control makes it easier for multiple collaborators to work together on the same project at the same time and combine their contributions.

Version control refresher

What is a version control system?

Version control systems are tools that let you track changes in files over time in a special database that allows users to “travel through time”, and compare earlier versions of the files with the current state. Think of a version control system like turning on ‘Track Changes’ on Microsoft Word/Google Docs, but for any files you want, and a lot more powerful and flexible.

Why use a version control system?

As scientists, our main motivation for using version control is reproducibility. By tracking and storing every change we make, we can restore our project to the state it was at any point in time. This is incredibly useful for reproducing results from a specific version of the code, or tracking down which changes we (or others) made introduced bugs or changed our results.

The other benefit of version control is it provides us with a history of our development. As we make each change, we record what it was, and why we made it. This helps make our development process transparent and auditable – which is a good scientific practice.

It also makes our project more sustainable - as our data, software and methods (knowledge) remain usable and accessible over time (especially if made available in shared version controlled code repositories), even after the original funding ends or team members move on.

Git version control system

Git is the most popular version control system used

by researchers worldwide, and the one we’ll be using. Git is used mostly

for managing code when developing software, but it can track

any files – and is particularly effective with text-based files

(e.g. source code like .py, .c,

.r, but also .csv, .tex and

more).

Git helps multiple people work on the same project (even the same file) at the same time. Initially, we will use Git to start tracking changes to files on our local machines; later on we will start sharing our work on GitHub allowing other people to see and contribute to our work.

Git refresher

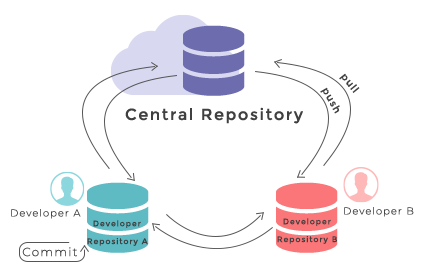

Git stores files in repositories - directories where changes to the files can be tracked. The diagram below shows the different parts of a Git repository, and the most common commands used to work with one.

-

Working directory - a local directory (including

any subdirectories) where your project files live, and where you are

currently working. It is also known as the “untracked” area of Git. Any

changes to files will be marked by Git in the working directory. Git

will only save changes that you explicitly tell it to. Using

git add FILENAMEcommand, can you tell Git to start tracking changes to fileFILENAMEin your working directory. -

Staging area (index) - once you tell Git to start

tracking changes to files (with

git add FILENAMEcommand), Git saves those changes in the staging area on your local machine. Each subsequent change to the same file needs to be followed by anothergit add FILENAMEcommand to tell Git to update it in the staging area. To see what is in your working directory and staging area at any moment (i.e. what changes is Git tracking), you can run the commandgit status. The staging area lets you bundle together groups of changes to save to your repository. -

Local repository - stored within the

.gitdirectory of your project locally, this is where Git wraps together all your changes from the staging area and puts them using thegit commitcommand. Each commit is a new, permanent snapshot (checkpoint, record) of your project in time, which you can share or revert to. -

Remote repository - this is a version of your

project that is hosted somewhere on the Internet (e.g., on GitHub,

GitLab or somewhere else). While your project is nicely

version-controlled in your local repository, and you have snapshots of

its versions from the past, if your machine crashes you still may lose

all your work. Plus, sharing or collaborating on local work with others

requires lots of emailing back and forth. Working with a remote

repository involves ‘pushing’ your local changes to it (using

git push), and pulling other people’s changes back to your local copy (usinggit fetchorgit pull). This keeps the two in sync in order to collaborate, with a bonus that your work also gets backed up to another machine. Best practice when collaborating with others on a shared repository is to always do agit pullbefore agit push, to ensure you have any latest changes before you push your own.

Start tracking changes with Git

Before we start, if you forgot to do it during setup, tell Git to use

main as the default branch. More modern versions of Git use

main, but older ones still use master as their

main branch. They work the same, but we want to keep things consistent

for clarity.

At this point, we should be located in our spacewalks

directory. We want to tell Git to make spacewalks a

repository – a directory where Git can track changes to our files. We do

that with:

We can check everything is set up correctly by asking Git to tell us the status of our project:

OUTPUT

On branch main

No commits yet

Untracked files:

(use "git add <file>..." to include in what will be committed)

data.json

my code v2.py

nothing added to commit but untracked files present (use "git add" to track)This tells us that Git has noticed two files in our directory, but unlike Dropbox or OneDrive, it does not automatically track them. We need to tell Git explicitly which files we want it to track. This is not a handicap, but rather helpful, since scientific code can have vast inputs or outputs we might not want Git to track and store (GBs ot TBs of space telescope data) or require sensitive information we cannot share (for example, medical records).

Add files into repository

We can tell Git to track a file using git add:

and then check the right thing happened:

OUTPUT

On branch main

No commits yet

Changes to be committed:

(use "git rm --cached <file>..." to unstage)

new file: data.json

new file: my code v2.pyGit now knows that should track the changes to

my code v2.py and data.json, but it has not

‘committed’ those changes to the record yet. A commit is a snapshot of

how your tracked files have changed at a stage in time. To create a

commit that records we added two new files, we need to run one more

command:

OUTPUT

[main (root-commit) bf55eb7] Add the initial spacewalks data and code

2 files changed, 437 insertions(+)

create mode 100644 data.json

create mode 100644 my code v2.pyAt this point, Git has taken everything we have told it to save with

the git add command and stored a copy (snapshot) of the

files in a special, hidden .git directory. This is called a

commit (or revision).

The -m option means message, and records a short,

descriptive, and specific comment that will help us remember later on

what we did and why. If we run git commit without

-m , Git will still expect a message – and will launch a

text editor so that we can write a longer one.

Remember, good commit messages start with a brief (<50 characters) statement about the changes made in the commit. Generally, the message should complete the sentence “If applied, this commit will…”. If you want to go into more detail, add a blank line between the summary line and your additional notes. Use this additional space to explain why you made changes and/or what their impact will be.

If we run git status now, we see:

OUTPUT

On branch main

nothing to commit, working tree cleanThis tells us that everything is up to date.

Where are my changes?

If we run ls at this point, we’ll still only see two

files – our script, and our dataset. Git saves information about our

files’ history in the special .git directory mentioned

earlier. This both stops our folders being cluttered with old versions,

and also stops us accidentally deleting them!

You can see the hidden Git directory using the -a flag

to show all files and folders:

OUTPUT

.

..

.gitIf you delete it, your directory will stop being a repository, and it

will lose your history of changes. You never need to look into

.git yourself – Git adds useful commands to do that, which

are covered later on.

Make a change

You may have noticed that the script we received contain blank spaces

in filename. This meant that, when we were typing the script’s name into

the terminal, we had to add a slash before the space like this:

my\ code\ v2.py. Using a backslash in this way is called

“escaping”. It lets the terminal know to treat the space as part of the

filename, and not a separate argument. It is a bit inconvenient and can

cause problems if you forget, so best practise is to avoid spaces in

filenames. The simplest fix is to replace the spaces with underscores

_ instead.

To rename the files using git you can use the git mv

command:

If you run git status again, you’ll see Git has noticed

the change in the filename. Note, git mv handles the name

change directly, instead of seeing a deleted file and a new file as

would be the case if we’d used mv and then

git add. It also stages the changes to be committed.

OUTPUT

On branch main

Changes to be committed:

(use "git restore --staged <file>..." to unstage)

renamed: my code v2.py -> my_code_v2.pyRename our data and output files

Now that we know how to rename files in Git, we can use it to make our files and code a bit easier to understand.

We may want to:

- Give our script and input data file more meaningful names, e.g

eva_data_analysis.pyandeva-data.json. This change also uses removes version tracking from the script name as we are using Git for version control any more as Git will keep track of that for us. - Choose informative file names for our output data file

(e.g.

eva-data.csv) and plot (cumulative_eva_graph.png). - Use relative paths (e.g.

./eva-data.json) instead of absolute paths (e.g.home/sarah/Projects/ssi-ukrn-fair-course/data.csv) to the files (which were hardcoded to the path on our colleagues machine and would not work on ours). - Update the Python script with these changes.

Update the filenames in the repo

Try to make these changes yourself.

- Give our Python script and input data file informative names -

eva_data_analysis.pyandeva-data.json, respectively. - Update other file names and paths used in the script - output CSV

data (

eva-data.csvto match the new input data name) and plot(cumulative_eva_graph.png). - Stage and commit these changes in the Git repository.

Firstly, let’s update the file names in our Python script from VS Code:

PYTHON

data_f = open('./eva-data.json', 'r')

data_t = open('./eva-data.csv','w')

g_file = './cumulative_eva_graph.png'Next, we need to rename the files on the file system using Git:

BASH

git mv data.json eva-data.json

git mv my_code_v2.py eva_data_analysis.py

git add eva_data_analysis.py

git statusOUTPUT

On branch main

Changes to be committed:

(use "git restore --staged <file>..." to unstage)

renamed: data.json -> eva-data.json

renamed: my_code_v2.py -> eva_data_analysis.pyFinally, we can commit our changes:

Interacting with a remote Git server

Git is distributed version control system and lets us synchronise work between multiple copies of the same repository - which may not be on your machine (called remote repositories). So far, we have used a local repository on our machine and, even though we have been incrementally saving our work in a way that is recoverable, if we lost our machine then we would lose all our code along with it,

Fortunately, we can easily upload our local repository, with all our code and the history of our development, to a remote server so that it can be backed-up and recovered in future.

GitHub is an online software development platform that can act as a central remote server. It uses Git, and provides facilities for storing, tracking, and collaborating on software projects. Other Git hosting services are available, such as GitLab and Bitbucket.

Putting our projects on GitHub helps protect them against deletion, and also makes it easy to collaborate and share them. Our collaborators can access the project, download their own copy, and even contribute work back to us.

Let’s push our local repository to GitHub and share it publicly.

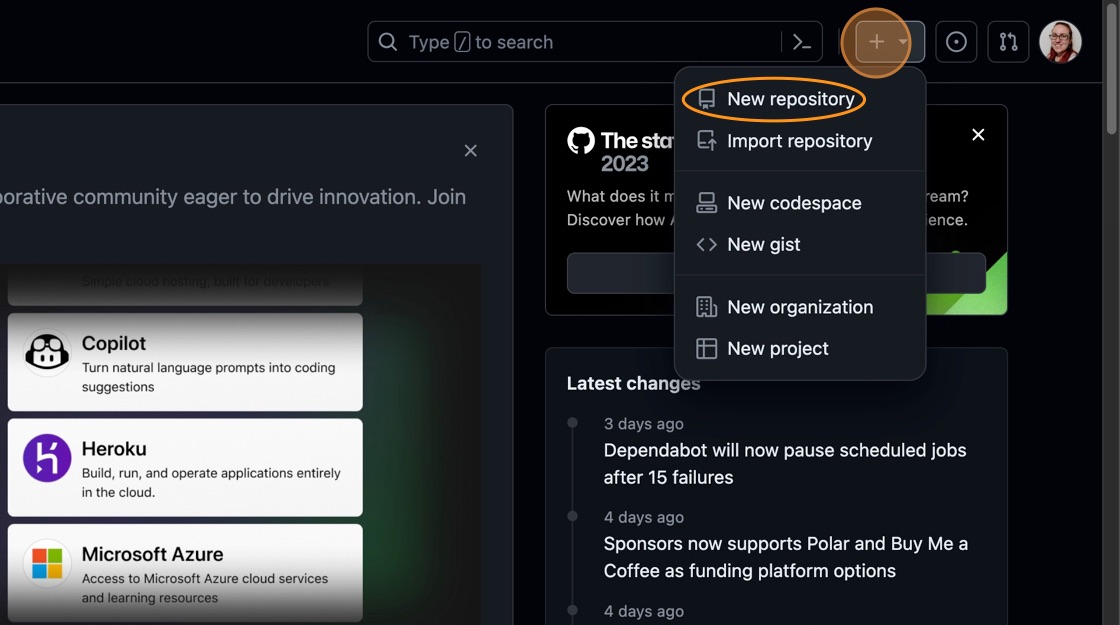

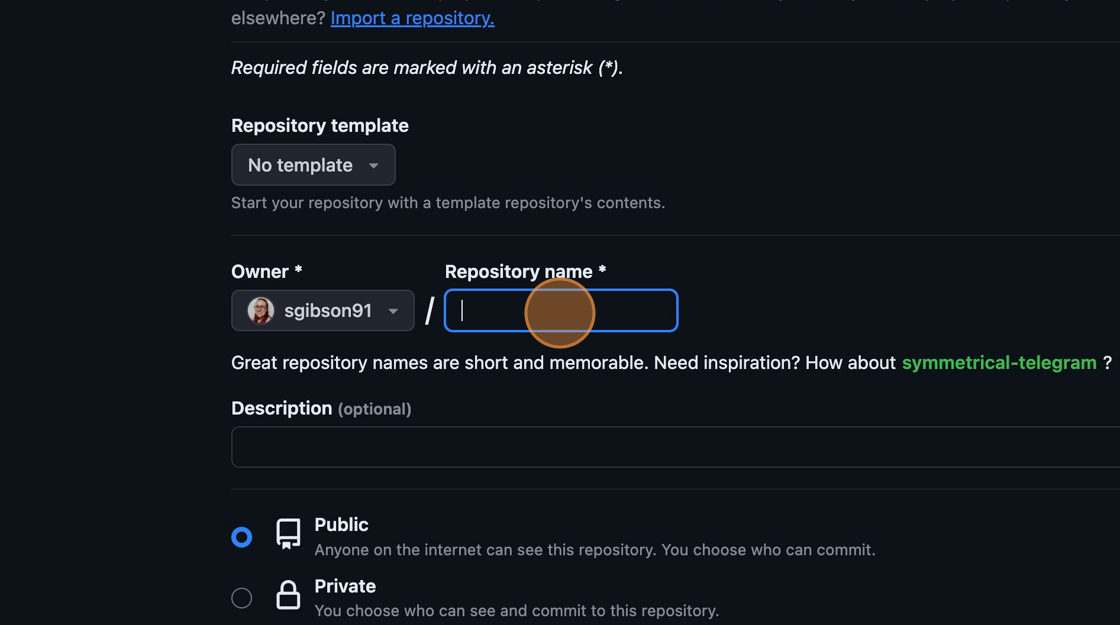

In your browser, navigate to https://github.com and sign into your account.

-

In the top right hand corner of the screen, there is a menu labelled “+” with a dropdown. Click the dropdown and select “New repository” from the options:

Creating a new GitHub repository

Creating a new GitHub repository -

You will be presented with some options to fill in or select while creating your repository. In the “Repository Name” field, type “spacewalks”. This is the name of your project and matches the name of your local folder.

Naming the GitHub repository

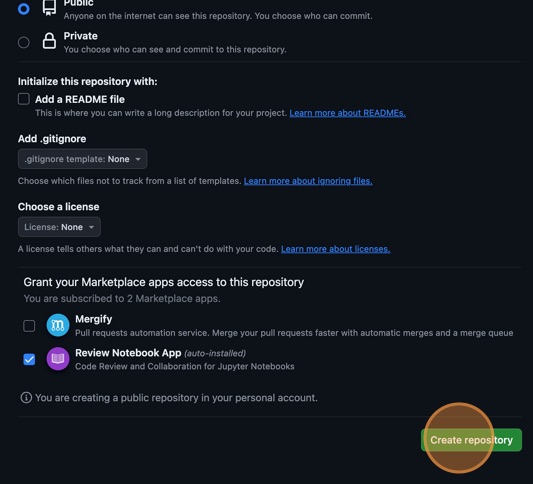

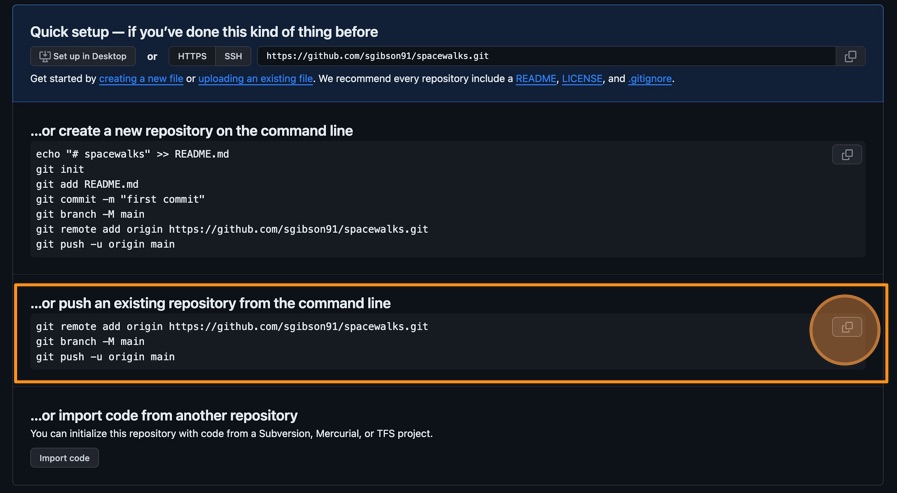

Naming the GitHub repositoryEnsure the visibility of the repository is “Public” and leave all other options blank. Since this repository will be connected to a local repository, it needs to be empty which is why we chose not to initialise with a README or add a license or

.gitignorefile. Click “Create repository” at the bottom of the page: Complete GitHub repository creation

Complete GitHub repository creation -

Now we have a remote repository on GitHub’s servers, you need to send it the files and history from your local repository. GitHub provides some instructions on how to do that for different scenarios. Change the toggle on the right side from “HTTPS” to “SSH”, then look at the heading “…or push an existing repository from the command line”. You should see instructions that look like this:

BASH

git remote add origin git@github.com/<YOUR_GITHUB_HANDLE>/spacewalks.git git branch -M main git push -u origin mainIt is very important you make sure you switch from “HTTPS” to “SSH”. In the setup, we configured our GitHub account and our local machine for SSH. If you select HTTPS, you will not be able to upload your files.

You can copy these commands using the button that looks like two overlapping squares to the right-hand side of the commands. Paste them into your terminal and run them.

- If you refresh your browser window, you should now see the two files

eva_data_analysis.pyandeva-data.jsonvisible in the GitHub repository, matching what you have locally on your machine.

If you were wondering about what those commands did, here is the explanation.

This command tells Git to create a remote called

“origin” and link it to the URL of your GitHub repository. A

remote is a version control concept where two (or more)

repositories are connected to each other, in such a way that they can be

kept in sync by exchanging commits. “origin” is a name used to refer to

the remote repository. It could be called anything, but “origin” is a

common convention for Git since it shows which is considered the “source

of truth”. This is particularly useful when many people are

collaborating on the same repository.

git branch is a command used to manage branches. We’ll

discuss branches later on in the course. We saw this command during

setup and earlier in this episode - it ensures the branch we are working

on is called “main”. This will be the default branch of the project for

everyone working on it.

The git push command is used to update a remote

repository with changes from your local repository. This command tells

Git to update the “main” branch on the “origin” remote. The

-u flag (short for --set-upstream) sets the

‘tracking reference’ for the current branch, so that in future

git push will default to sending to

origin main.

Summary

We have created a new software project and used version control system Git to track changes to it. We can now look back at our work, compare different code versions, and even recover past states. We have also published our software to a remote repository located on GitHub, where it is both secure and shareable.

These skills are critical to reproducible and sustainable science. Software is science, and being able to share the specific version of code used in a paper is required for reproducibility. But we, as researchers, also benefit from having a clear, self-documented record of what we did, when and why. It makes it much easier to track down and fix our bugs, return to work on projects we took a break from, and even for other people to pick up our work.

Before we start making changes to the code, we have to set up a development environment with software dependencies for our project to ensure this metadata about our project is recorded and shared with anyone wishing to download, run or extend our software (and this includes ourselves on a different machine or operating system).

Further reading

We recommend the following resources for some additional reading on the topic of this episode:

- Software Carpentry’s Git Novice lesson

- The Turing Way’s “Guide to Version Control”

- “How Git Works” course on Pluralsight

- How to Write a Good Commit Message

- Git Commit Good Practice

Also check the full reference set for the course.

Key Points

- Version control systems are software that tracks and manages changes to a project over time

- Using version control aids reproducibility since the exact state of the software that produced an output can be recovered

- A commit represents the smallest unit of change to a project

- Commit messages describe what each commit contains and should be descriptive

- GitHub is a hosting service for sharing and collaborating on software

- Using version control is one of the first steps to creating a software project from a bunch of scripts - by investing in these practices early, researchers can create software that supports their work more effectively and enables others to build upon it with confidence.

Content from Reproducible software environments

Last updated on 2025-07-01 | Edit this page

Overview

Questions

- What are virtual environments in software development and why use them?

- How can we manage Python virtual coding environments and external (third-party) libraries on our machines?

Objectives

- Set up a Python virtual coding environment for a software project

using

venvandpip.

So far we have created a local Git repository to track changes in our software project and pushed it to GitHub to enable others to see and contribute to it. We now want to start developing the code further.

Software dependencies

If we have a look at our script, we may notice a few

import lines such as: import json,

import csv, import datetime as dt and

import matplotlib.pyplot as plt throughout the code. This

means that our code depends on or requires several external

libraries (also called third-party packages or

dependencies) to function - namely json,

csv, datetime and matplotlib.

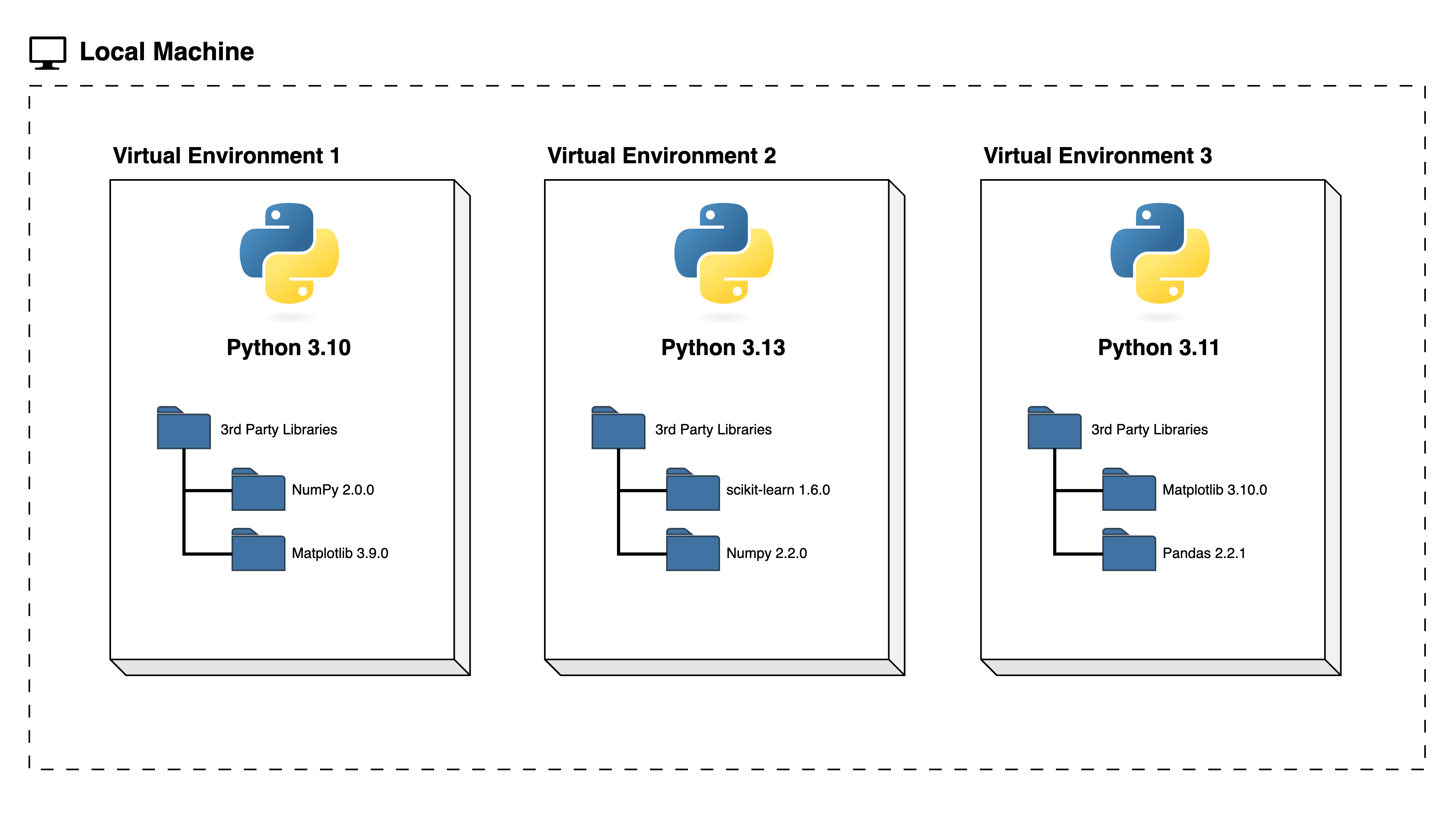

Python applications often use external libraries that do not come as part of the standard Python distribution. This means that you will have to use a package manager tool to install them on your system. Applications will also sometimes need a specific version of an external library (e.g. because they were written to work with feature, class, or function that may have been updated in more recent versions), or a specific version of Python interpreter. This means that each Python application you work with may require a different setup and a set of dependencies so it is useful to be able to keep these configurations separate to avoid confusion between projects. The solution for this problem is to create a self-contained virtual environment per project, which contains a particular version of Python installation plus a number of additional external libraries.

What are virtual software environments?

So what exactly are virtual software environments, and why use them?

A Python virtual environment helps us create an isolated working copy of a software project that uses a specific version of Python interpreter together with specific versions of a number of external libraries installed into that virtual environment. Python virtual environments are implemented as directories with a particular structure within software projects, containing links to specified dependencies allowing isolation from other software projects on your machine that may require different versions of Python or external libraries.

It is recommended to create a separate virtual environment for each project. Then you do not have to worry about changes to the environment of the current project you are working on affecting other projects - you can use different Python versions and different versions of the same third party dependency by different projects on your machine independently from one another.

We can visualise the use of virtual environments for different Python

projects on the same machine as follows:

Another big motivator for using virtual environments is that they make sharing your code with others much easier - as we will see shortly you can record your virtual environment in a special file and share it with your collaborators who can then recreate the same development environment on their machines.

You do not have to worry too much about specific versions of external libraries that your project depends on most of the time. Virtual environments also enable you to always use the latest available version without specifying it explicitly. They also enable you to use a specific older version of a package for your project, should you need to.

Truly reproducible environments are difficult to attain

Creating and managing isolated environments for each of your software projects and sharing descriptions of those environments alongside the relevant code is a great way to make your software and analyses much more reproducible. However, “true” computational reproducibility is very difficult to achieve. For example, the tools we will use in this lesson only track the dependencies of our software, remaining unanware of other aspects of the software’s environment such as the operating system and hardware of the system it is running on. These properties of the environment can influence the running of the software and the results it produces and should be accounted for if a workflow is to be truly reproducible.

Although there is more that we can do to maximise the reproducibility of our software/workflows, the steps described in this episode are an excellent place to start. We should not let the difficulty of attaining “perfect” reproducibility prevent us from implementing “good enough” practices that make our lives easier and are much better than doing nothing.

Managing virtual environments

There are several command line tools used for managing Python virtual

environments - we will use venv, available by default from

the standard Python distribution since

Python 3.3.

Part of managing your (virtual) working environment involves

installing, updating and removing external packages on your system. The

Python package manager tool pip is most commonly used for

this - it interacts and obtains the packages from the central repository

called Python Package Index (PyPI).

So, we will use venv and pip in combination

to help us create and share our virtual development environments.

Creating virtual environments

Creating a virtual environment with venv is done by

executing the following command:

where /path/to/new/virtual/environment is a path to a

directory where you want to place it - conventionally within your

software project so they are co-located. This will create the target

directory for the virtual environment.

For our project let’s create a virtual environment called “venv_spacewalks” from our project’s root directory.

Firstly, ensure you are located within the project’s root directory:

If you list the contents of the newly created directory “venv_spacewalks”, on a Mac or Linux system (slightly different on Windows as explained below) you should see something like:

OUTPUT

total 8

drwxr-xr-x 12 alex staff 384 5 Oct 11:47 bin

drwxr-xr-x 2 alex staff 64 5 Oct 11:47 include

drwxr-xr-x 3 alex staff 96 5 Oct 11:47 lib

-rw-r--r-- 1 alex staff 90 5 Oct 11:47 pyvenv.cfgSo, running the python3 -m venv venv_spacewalks command

created the target directory called “venv_spacewalks” containing:

-

pyvenv.cfgconfiguration file with a home key pointing to the Python installation from which the command was run, -

binsubdirectory (calledScriptson Windows) containing a symlink of the Python interpreter binary used to create the environment and the standard Python library, -

lib/pythonX.Y/site-packagessubdirectory (calledLib\site-packageson Windows) to contain its own independent set of installed Python packages isolated from other projects, and - various other configuration and supporting files and subdirectories.

Once you’ve created a virtual environment, you will need to activate it.

On Mac or Linux, it is done as:

On Windows, recall that we have Scripts directory

instead of bin and activating a virtual environment is done

as:

Activating the virtual environment will change your command line’s prompt to show what virtual environment you are currently using (indicated by its name in round brackets at the start of the prompt), and modify the environment so that running Python will get you the particular version of Python configured in your virtual environment.

You can verify you are using your virtual environment’s version of

Python by checking the path using the command which:

When you’re done working on your project, you can exit the environment with:

If you’ve just done the deactivate, ensure you

reactivate the environment ready for the next part:

Note that, since our software project is being tracked by Git, the newly created virtual environment will show up in version control - we will see how to handle it using Git in one of the subsequent episodes.

Installing external packages

We noticed earlier that our code depends on four external

packages/libraries - json, csv,

datetime and matplotlib. As of Python 3.5,

Python comes with in-built JSON and CSV libraries - this means there is

no need to install these additional packages (if you are using a fairly

recent version of Python), but you still need to import them in any

script that uses them. However, we still need to install packages

datetime and matplotlib as they do not come as

standard with Python distribution.

To install the latest version of packages datetime and

matplotlib with pip you use pip’s

install command and specify the package’s name, e.g.:

BASH

(venv_spacewalks) $ python3 -m pip install datetime

(venv_spacewalks) $ python3 -m pip install matplotlibor like this to install multiple packages at once for short:

The above commands have installed packages datetime and

matplotlib in our currently active

venv_spacewalks environment and will not affect any other

Python projects we may have on our machines.

If you run the python3 -m pip install command on a

package that is already installed, pip will notice this and

do nothing.

To install a specific version of a Python package give the package

name followed by == and the version number,

e.g. python3 -m pip install matplotlib==3.5.3.

To specify a minimum version of a Python package, you can do

python3 -m pip install matplotlib>=3.5.1.

To upgrade a package to the latest version,

e.g. python3 -m pip install --upgrade matplotlib.

To display information about a particular installed package do:

OUTPUT

Name: matplotlib

Version: 3.9.0

Summary: Python plotting package

Home-page:

Author: John D. Hunter, Michael Droettboom

Author-email: Unknown <matplotlib-users@python.org>

License: License agreement for matplotlib versions 1.3.0 and later

=========================================================

...

Location: /opt/homebrew/lib/python3.11/site-packages

Requires: contourpy, cycler, fonttools, kiwisolver, numpy, packaging, pillow, pyparsing, python-dateutil

Required-by:To list all packages installed with pip (in your current

virtual environment):

OUTPUT

Package Version

--------------- -----------

contourpy 1.2.1

cycler 0.12.1

DateTime 5.5

fonttools 4.53.1

kiwisolver 1.4.5

matplotlib 3.9.2

numpy 2.0.1

packaging 24.1

pillow 10.4.0

pip 23.3.1

pyparsing 3.1.2

python-dateutil 2.9.0.post0

pytz 2024.1

setuptools 69.0.2

six 1.16.0

zope.interface 7.0.1To uninstall a package installed in the virtual environment do:

python3 -m pip uninstall <package-name>. You can also

supply a list of packages to uninstall at the same time.

Sharing virtual environments

You are collaborating on a project with a team so, naturally, you

will want to share your environment with your collaborators so they can

easily ‘clone’ your software project with all of its dependencies and

everyone can replicate equivalent virtual environments on their

machines. pip has a handy way of exporting, saving and

sharing virtual environments.

To export your active environment - use

python3 -m pip freeze command to produce a list of packages

installed in the virtual environment. A common convention is to put this

list in a requirements.txt file in your project’s root

directory:

BASH

(venv_spacewalks) $ python3 -m pip freeze > requirements.txt

(venv_spacewalks) $ cat requirements.txtOUTPUT

contourpy==1.2.1

cycler==0.12.1

DateTime==5.5

fonttools==4.53.1

kiwisolver==1.4.5

matplotlib==3.9.2

numpy==2.0.1

packaging==24.1

pillow==10.4.0

pyparsing==3.1.2

python-dateutil==2.9.0.post0

pytz==2024.1

six==1.16.0

zope.interface==7.0.1The first of the above commands will create a

requirements.txt file in your current directory. Yours may

look a little different, depending on the version of the packages you

have installed, as well as any differences in the packages that they

themselves use.

The requirements.txt file can then be committed to a

version control system (we will see how to do this using Git in a

moment) and get shipped as part of your software and shared with

collaborators and/or users.

Note that you only need to share the small

requirements.txt file with your collaborators - and not the

entire venv_spacewalks directory with packages contained in

your virtual environment. We need to tell Git to ignore that directory,

so it is not tracked and shared - we do this by creating a file

.gitignore in the root directory of our project and adding

a line venv_spacewalks to it.

BASH

(venv_spacewalks) $ echo "venv_spacewalks/" >> .gitignore

(venv_spacewalks) $ git add .gitignore

(venv_spacewalks) $ git commit -m "ignoring venv folder"Let’s now put requirements.txt under version control and

share it along with our code.

BASH

(venv_spacewalks) $ git add requirements.txt

(venv_spacewalks) $ git commit -m "Initial commit of requirements.txt."

(venv_spacewalks) $ git push origin mainYour collaborators or users of your software can now download your

software’s source code and replicate the same virtual software

environment for running your code on their machines using

requirements.txt to install all the necessary depending

packages.

To recreate a virtual environment from requirements.txt,

from the project root one can do the following:

Another challenge in (long-term) reproducibility

For people (including your future self) to be able to reproduce software environments described in this way, the listed dependencies need to remain available to download and possible to install on the user’s system. These are reasonably safe assumptions for widely-used, actively maintained tools on commonly-used operating systems in the short- to medium-term. However, it becomes less likely that we will be able to recreate such environments as system architectures evolve over time and maintainers stop supporting older versions of software. To achieve this kind of long-term reproducibility, you will need to explore options to provide the actual environment – with dependencies already included – alongside your software e.g. via a containerised environment.

As your project grows - you may need to update your environment for a variety of reasons, e.g.:

- one of your project’s dependencies has just released a new version (dependency version number update),

- you need an additional package for data analysis (adding a new dependency), or

- you have found a better package and no longer need the older package (adding a new and removing an old dependency).

What you need to do in this case (apart from installing the new and

removing the packages that are no longer needed from your virtual

environment) is update the contents of the requirements.txt

file accordingly by re-issuing pip freeze command and

propagate the updated requirements.txt file to your

collaborators via your code sharing platform.

Environment management can be troublesome

Software environment management is a difficult thing to get right, which is one reason why the Python community has come up with so many different ways of doing it over the years. (That webcomic is several years old at the time of writing and the Python environment management ecosystem has only become more complicated since.) Unfortunately, even if you try to follow good practices and keep your environments isolated it is possible – perhaps even likely – that you will face difficulties with installing and updating dependencies on your projects in the coming years. Such issues are particularly likely to appear when you upgrade your computer hardware, operating system, and/or interpreter/compiler. As before, this is not a reason to avoid managing your software environments altogether – or to avoid upgrading your hardware, operating system, etc! If you have descriptions of your environments it will always be easier to reproduce them and keep working with your software than if you need to start from scratch. Furthermore, your expertise will develop as you get more practice with managing your software environments, which will equip you well to troubleshoot problems if and when they arise.

Running the code and reproducing results

We are now setup to run our code from the newly created virtual environment:

You should get a pop up window with a graph. However, some (but not all) Windows users will not. You might instead see an error like:

Traceback (most recent call last):

File "C:\Users\Toaster\Desktop\spacewalks\eva_data_analysis.py", line 30, in <module>

w.writerow(data[j].values())

File "C:\Program Files\WindowsApps\PythonSoftwareFoundation.Python.3.12_3.12.2544.0_x64__qbz5n2kfra8p0\Lib\encodings\cp1252.py", line 19, in encode

return codecs.charmap_encode(input,self.errors,encoding_table)[0]

^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^

UnicodeEncodeError: 'charmap' codec can't encode character '\x92' in position 101: character maps to <undefined>

(spacewalks) (spacewalks)This is not what we were expecting! The problem is character

encoding. ‘Standard’ Latin characters are encoded using ASCII, but

the expanded Unicode character set covers many more. In this case, the

data contains Unicode characters that are represented in the ASCII input

file with shortcuts (Â as \u00c2 and

’ as \u0092).

When we read the file, Python converts those into the Unicode

characters. Then by default Windows tries to write out

eva-data.csv using UTF-7. This saves space compared to the

standard UTF-8, but it doesn’t include all of the characters. It

automatically converts \u0092 into the shorter

\x92, then discovers that doesn’t exist in UTF-7.

The fact that different systems have different defaults, which can change or even break your code’s behaviour, shows why it is so important to make our code’s requirements explicit!

We can easily fix this by explicitly telling Python what encoding to use when reading and writing our files:

data_f = open('./eva-data.json', 'r', encoding='ascii')

data_t = open('./eva-data.csv','w', encoding='utf-8')Do not foget to commit any files that have been changed.

Now we have the code running in a virtual environment, in the next episode we will inspect it in more detail, to see if we can understand and improve it.

Further reading

We recommend the following resources for some additional reading on the topic of this episode:

Also check the full reference set for the course.

Key Points

- Virtual environments keep Python versions and dependencies required by different projects separate.

- A Python virtual environment is itself a directory structure.

- You can use

venvto create and manage Python virtual environments, andpipto install and manage external dependencies your code relies on. - By convention, you can save and export your Python virtual

environment in

requirements.txtfile in your project’s root directory, which can then be shared with collaborators/users and used to replicate your virtual environment elsewhere.

Content from Code readability

Last updated on 2025-07-01 | Edit this page

Overview

Questions

- Why does code readability matter?

- How can I organise my code to be more readable?

- What types of documentation can I include to improve the readability of my code?

Objectives

After completing this episode, participants should be able to:

- Organise code into reusable functions that achieve a singular purpose

- Choose function and variable names that help explain the purpose of the function or variable

- Write informative comments and docstrings to provide more detail about what the code is doing

In this episode, we will introduce the concept of readable code and consider how it can help create reusable scientific software and empower collaboration between researchers.

When someone writes code, they do so based on requirements that are likely to change in the future. Requirements change because software interacts with the real world, which is dynamic. When these requirements change, the developer (who is not necessarily the same person who wrote the original code) must implement the new requirements. They do this by reading the original code to understand the different abstractions, and identify what needs to change. Readable code facilitates the reading and understanding of the abstraction phases and, as a result, facilitates the evolution of the codebase. Readable code saves future developers’ time and effort.

In order to develop readable code, we should ask ourselves: “If I re-read this piece of code in fifteen days or one year, will I be able to understand what I have done and why?” Or even better: “If a new person who just joined the project reads my software, will they be able to understand what I have written here?”

We will now learn about a few software best practices we can follow to help create more readable code.

Place import statements at the top

Let’s have a look our code again - the first thing we may notice is

that our script currently places import statements throughout the code.

Conventionally, all import statements are placed at the top of the

script so that dependent libraries are clearly visible and not buried

inside the code (even though there are standard ways of describing

dependencies - e.g. using requirements.txt file). This will

help readability (accessibility) and reusability of our code.

Our code after the modification should look like the following.

PYTHON

import json

import csv

import datetime as dt

import matplotlib.pyplot as plt

# https://data.nasa.gov/resource/eva.json (with modifications)

data_f = open('./eva-data.json', 'r', encoding='ascii')

data_t = open('./eva-data.csv','w', encoding='utf-8')

g_file = './cumulative_eva_graph.png'

fieldnames = ("EVA #", "Country", "Crew ", "Vehicle", "Date", "Duration", "Purpose")

data=[]

for i in range(374):

line=data_f.readline()

print(line)

data.append(json.loads(line[1:-1]))

#data.pop(0)

## Comment out this bit if you don't want the spreadsheet

w=csv.writer(data_t)

time = []

date =[]

j=0

for i in data:

print(data[j])

# and this bit

w.writerow(data[j].values())

if 'duration' in data[j].keys():

tt=data[j]['duration']

if tt == '':

pass

else:

t=dt.datetime.strptime(tt,'%H:%M')

ttt = dt.timedelta(hours=t.hour, minutes=t.minute, seconds=t.second).total_seconds()/(60*60)

print(t,ttt)

time.append(ttt)

if 'date' in data[j].keys():

date.append(dt.datetime.strptime(data[j]['date'][0:10], '%Y-%m-%d'))

#date.append(data[j]['date'][0:10])

else:

time.pop(0)

j+=1

t=[0]

for i in time:

t.append(t[-1]+i)

date,time = zip(*sorted(zip(date, time)))

plt.plot(date,t[1:], 'ko-')

plt.xlabel('Year')

plt.ylabel('Total time spent in space to date (hours)')

plt.tight_layout()

plt.savefig(g_file)

plt.show()Let’s make sure we commit our changes.

Use meaningful variable names

Variables are the most common thing you will assign when coding, and it’s really important that it is clear what each variable means in order to understand what the code is doing. If you return to your code after a long time doing something else, or share your code with a colleague, it should be easy enough to understand what variables are involved in your code from their names. Therefore we need to give them clear names, but we also want to keep them concise so the code stays readable. There are no “hard and fast rules” here, and it’s often a case of using your best judgment.

Some useful tips for naming variables are:

- Short words are better than single character names. For example, if

we were creating a variable to store the speed to read a file,

s(for ‘speed’) is not descriptive enough butMBReadPerSecondAverageAfterLastFlushToLogis too long to read and prone to misspellings.ReadSpeed(orread_speed) would suffice. - If you are finding it difficult to come up with a variable name that is both short and descriptive, go with the short version and use an inline comment to describe it further (more on those in the next section). This guidance does not necessarily apply if your variable is a well-known constant in your domain - for example, c represents the speed of light in physics.

- Try to be descriptive where possible and avoid meaningless or funny

names like

foo,bar,var,thing, etc.

There are also some restrictions to consider when naming variables in Python:

- Only alphanumeric characters and underscores are permitted in variable names.

- You cannot begin your variable names with a numerical character as

this will raise a syntax error. Numerical characters can be included in

a variable name, just not as the first character. For example,

read_speed1is a valid variable name, but1read_speedisn’t. (This behaviour may be different for other programming languages.) - Variable names are case sensitive. So

speed_of_lightandSpeed_Of_Lightare not the same. - Programming languages often have global pre-built functions, such as

input, which you may accidentally overwrite if you assign a variable with the same name and no longer be able to access the originalinputfunction. In this case, opting for something likeinput_datawould be preferable. Note that this behaviour may be explicitly disallowed in other programming languages but is not in Python.

Give a descriptive name to a variable

Below we have a variable called var being set the value

of 9.81. var is not a very descriptive name here as it

doesn’t tell us what 9.81 means, yet it is a very common constant in

physics! Go online and find out which constant 9.81 relates to and

suggest a new name for this variable.

Hint: the units are metres per second squared!

\[ 9.81 m/s^2 \] is the gravitational force exerted by the Earth. It is often referred to as “little g” to distinguish it from “big G” which is the Gravitational Constant. A more descriptive name for this variable therefore might be:

Rename our variables to be more descriptive

Let’s apply this to eva_data_analysis.py.

-

Edit the code as follows to use descriptive variable names:

- Change data_f to input_file

- Change data_t to output_file

- Change g_file to graph_file

What other variable names in our code would benefit from renaming?

Commit your changes to your repository. Remember to use an informative commit message.

Updated code:

PYTHON

import json

import csv

import datetime as dt

import matplotlib.pyplot as plt

# https://data.nasa.gov/resource/eva.json (with modifications)

input_file = open('./eva-data.json', 'r', encoding='ascii')

output_file = open('./eva-data.csv', 'w', encoding='utf-8')

graph_file = './cumulative_eva_graph.png'

fieldnames = ("EVA #", "Country", "Crew ", "Vehicle", "Date", "Duration", "Purpose")

data=[]

for i in range(374):

line=input_file.readline()

print(line)

data.append(json.loads(line[1:-1]))

#data.pop(0)

## Comment out this bit if you don't want the spreadsheet

w=csv.writer(output_file)

time = []

date =[]

j=0

for i in data:

print(data[j])

# and this bit

w.writerow(data[j].values())

if 'duration' in data[j].keys():

tt=data[j]['duration']

if tt == '':

pass

else:

t=dt.datetime.strptime(tt,'%H:%M')

ttt = dt.timedelta(hours=t.hour, minutes=t.minute, seconds=t.second).total_seconds()/(60*60)

print(t,ttt)

time.append(ttt)

if 'date' in data[j].keys():

date.append(dt.datetime.strptime(data[j]['date'][0:10], '%Y-%m-%d'))

#date.append(data[j]['date'][0:10])

else:

time.pop(0)

j+=1

t=[0]

for i in time:

t.append(t[-1]+i)

date,time = zip(*sorted(zip(date, time)))

plt.plot(date,t[1:], 'ko-')

plt.xlabel('Year')

plt.ylabel('Total time spent in space to date (hours)')

plt.tight_layout()

plt.savefig(graph_file)

plt.show()We should also rename variables w, t,

tt and ttt to be more descriptive.

Commit changes:

Use standard libraries

Our script currently reads the data line-by-line from the JSON data file and uses custom code to manipulate the data. Variables of interest are stored in lists but there are more suitable data structures (e.g. data frames) to store data in our case. By choosing custom code over standard and well-tested libraries, we are making our code less readable and understandable and more error-prone.

The main functionality of our code can be rewritten as follows using

the Pandas library to load and manipulate the data in data

frames.

First, we need to install this dependency into our virtual environment (which should be active at this point).

The code should now look like:

PYTHON

import matplotlib.pyplot as plt

import pandas as pd

# Data source: https://data.nasa.gov/resource/eva.json (with modifications)

input_file = open('./eva-data.json', 'r', encoding='ascii')

output_file = open('./eva-data.csv', 'w', encoding='utf-8')

graph_file = './cumulative_eva_graph.png'

eva_df = pd.read_json(input_file, convert_dates=['date'])

eva_df['eva'] = eva_df['eva'].astype(float)

eva_df.dropna(axis=0, inplace=True)

eva_df.sort_values('date', inplace=True)

eva_df.to_csv(output_file, index=False)

eva_df['duration_hours'] = eva_df['duration'].str.split(":").apply(lambda x: int(x[0]) + int(x[1])/60)

eva_df['cumulative_time'] = eva_df['duration_hours'].cumsum()

plt.plot(eva_df['date'], eva_df['cumulative_time'], 'ko-')

plt.xlabel('Year')

plt.ylabel('Total time spent in space to date (hours)')

plt.tight_layout()

plt.savefig(graph_file)

plt.show()We should replace the existing code in our Python script

eva_data_analysis.py with the above code and commit the

changes. Remember to use an informative commit message.

BASH

(venv_spacewalks) $ git add eva_data_analysis.py

(venv_spacewalks) $ git commit -m "Refactor code to use standard libraries"Make sure to capture the changes to your virtual development environment too.

Use comments to explain functionality

Commenting is a very useful practice to help convey the context of the code. It can be helpful as a reminder for your future self or your collaborators as to why code is written in a certain way, how it is achieving a specific task, or the real-world implications of your code.

There are several ways to add comments to code:

- An inline comment is a comment on the same line as a code statement. Typically, it comes after the code statement and finishes when the line ends and is useful when you want to explain the code line in short. Inline comments in Python should be separated by at least two spaces from the statement; they start with a # followed by a single space, and have no end delimiter.

- A multi-line or block comment can

span multiple lines and has a start and end sequence. To comment out a

block of code in Python, you can either add a # at the beginning of each

line of the block or surround the entire block with three single

(

''') or double quotes (""").

PYTHON

x = 5 # In Python, inline comments begin with the `#` symbol and a single space.

'''

This is a multiline

comment

in Python.

'''Here are a few things to keep in mind when commenting your code:

- Focus on the why and the how of your code - avoid using comments to explain what your code does. If your code is too complex for other programmers to understand, consider rewriting it for clarity rather than adding comments to explain it.

- Make sure you are not reiterating something that your code already conveys on its own. Comments should not echo your code.

- Keep comments short and concise. Large blocks of text quickly become unreadable and difficult to maintain.

- Comments that contradict the code are worse than no comments. Always make a priority of keeping comments up-to-date when code changes.

Examples of unhelpful comments

PYTHON

statetax = 1.0625 # Assigns the float 1.0625 to the variable 'statetax'

citytax = 1.01 # Assigns the float 1.01 to the variable 'citytax'

specialtax = 1.01 # Assigns the float 1.01 to the variable 'specialtax'The comments in this code simply tell us what the code does, which is easy enough to figure out without the inline comments.

Examples of helpful comments

PYTHON

statetax = 1.0625 # State sales tax rate is 6.25% through Jan. 1

citytax = 1.01 # City sales tax rate is 1% through Jan. 1

specialtax = 1.01 # Special sales tax rate is 1% through Jan. 1In this case, it might not be immediately obvious what each variable represents, so the comments offer helpful, real-world context. The date in the comment also indicates when the code might need to be updated.

Add comments to our code

- Examine

eva_data_analysis.py. Add as many comments as you think is required to help yourself and others understand what that code is doing. - Commit your changes to your repository. Remember to use an informative commit message.

Some good comments may look like the example below.

PYTHON

import matplotlib.pyplot as plt

import pandas as pd

# https://data.nasa.gov/resource/eva.json (with modifications)

input_file = open('./eva-data.json', 'r', encoding='ascii')

output_file = open('./eva-data.csv', 'w', encoding='utf-8')

graph_file = './cumulative_eva_graph.png'

print("--START--")

print(f'Reading JSON file {input_file}')

# Read the data from a JSON file into a Pandas dataframe

eva_df = pd.read_json(input_file, convert_dates=['date'])

eva_df['eva'] = eva_df['eva'].astype(float)

# Clean the data by removing any incomplete rows and sort by date

eva_df.dropna(axis=0, inplace=True)

eva_df.sort_values('date', inplace=True)

print(f'Saving to CSV file {output_file}')

# Save dataframe to CSV file for later analysis

eva_df.to_csv(output_file, index=False)

print(f'Plotting cumulative spacewalk duration and saving to {graph_file}')

# Plot cumulative time spent in space over years

eva_df['duration_hours'] = eva_df['duration'].str.split(":").apply(lambda x: int(x[0]) + int(x[1])/60)

eva_df['cumulative_time'] = eva_df['duration_hours'].cumsum()

plt.plot(eva_df['date'], eva_df['cumulative_time'], 'ko-')

plt.xlabel('Year')

plt.ylabel('Total time spent in space to date (hours)')

plt.tight_layout()

plt.savefig(graph_file)

plt.show()

print("--END--")Commit changes:

Separate units of functionality

Functions are a fundamental concept in writing software and are one of the core ways you can organise your code to improve its readability. A function is an isolated section of code that performs a single, specific task that can be simple or complex. It can then be called multiple times with different inputs throughout a codebase, but its definition only needs to appear once.

Breaking up code into functions in this manner benefits readability since the smaller sections are easier to read and understand. Since functions can be reused, codebases naturally begin to follow the Don’t Repeat Yourself principle which prevents software from becoming overly long and confusing. The software also becomes easier to maintain because, if the code encapsulated in a function needs to change, it only needs updating in one place instead of many. As we will learn in a future episode, testing code also becomes simpler when code is written in functions. Each function can be individually checked to ensure it is doing what is intended, which improves confidence in the software as a whole.

Callout

Decomposing code into functions helps with reusability of blocks of code and eliminating repetition, but, equally importantly, it helps with code readability and testing.

Looking at our code, you may notice it contains different pieces of functionality:

- reading the data from a JSON file

- converting and saving the data in the CSV format

- processing/cleaning the data and preparing it for analysis

- data analysis and visualising the results

Let’s refactor our code so that reading the data in JSON format into

a dataframe (step 1.) and converting it and saving to the CSV format

(step 2.) are extracted into separate functions. Let’s name those

functions read_json_to_dataframe and

write_dataframe_to_csv respectively. The main part of the

script should then be simplified to invoke these new functions, while

the functions themselves contain the complexity of each of these two

steps. We will continue to work on steps 3. and 4. above later on.

After the initial refactoring, our code may look something like the following.

PYTHON

import matplotlib.pyplot as plt

import pandas as pd

def read_json_to_dataframe(input_file):

print(f'Reading JSON file {input_file}')

# Read the data from a JSON file into a Pandas dataframe

eva_df = pd.read_json(input_file, convert_dates=['date'])

eva_df['eva'] = eva_df['eva'].astype(float)

# Clean the data by removing any incomplete rows and sort by date

eva_df.dropna(axis=0, inplace=True)

eva_df.sort_values('date', inplace=True)

return eva_df

def write_dataframe_to_csv(df, output_file):

print(f'Saving to CSV file {output_file}')

# Save dataframe to CSV file for later analysis

df.to_csv(output_file, index=False)

# Main code

print("--START--")

input_file = open('./eva-data.json', 'r', encoding='ascii')

output_file = open('./eva-data.csv', 'w', encoding='utf-8')

graph_file = './cumulative_eva_graph.png'

# Read the data from JSON file

eva_data = read_json_to_dataframe(input_file)

# Convert and export data to CSV file

write_dataframe_to_csv(eva_data, output_file)

print(f'Plotting cumulative spacewalk duration and saving to {graph_file}')

# Plot cumulative time spent in space over years

eva_data['duration_hours'] = eva_data['duration'].str.split(":").apply(lambda x: int(x[0]) + int(x[1])/60)

eva_data['cumulative_time'] = eva_data['duration_hours'].cumsum()

plt.plot(eva_data['date'], eva_data['cumulative_time'], 'ko-')

plt.xlabel('Year')

plt.ylabel('Total time spent in space to date (hours)')

plt.tight_layout()

plt.savefig(graph_file)

plt.show()

print("--END--")We have chosen to create functions for reading in and writing out

data files since this is a very common task within research software.

While these functions do not contain that many lines of code due to

using the pandas in-built methods that do all the complex

data reading, converting and writing operations, it can be useful to

package these steps together into reusable functions if you need to read

in or write out a lot of similarly structured files and process them in

the same way.

Use docstrings to document functions