Welcome

Overview

Teaching: 15 min

Exercises: 0 minQuestions

Who are we and what are we going to learn?

Objectives

Introduce ourselves and the course

Setup our learning platform

Better research by better sharing

Introductions

Introductions set the stage for learning.

— Tracy Teal, Former Executive Director, The Carpentries

Hello everyone, and welcome to the FAIR in biological practice workshop. We are very pleased to have you with us.

Today’s Trainers

To begin the class, each Trainer should give a brief introduction of themselves.

Now we would like to get to know all of you.

Who are you and what are your expectations from the workshop

Please introduce yourself shortly and tell us:

- Why are you taking this course?

- What goals do you have for the follwing days?

Better research by better sharing

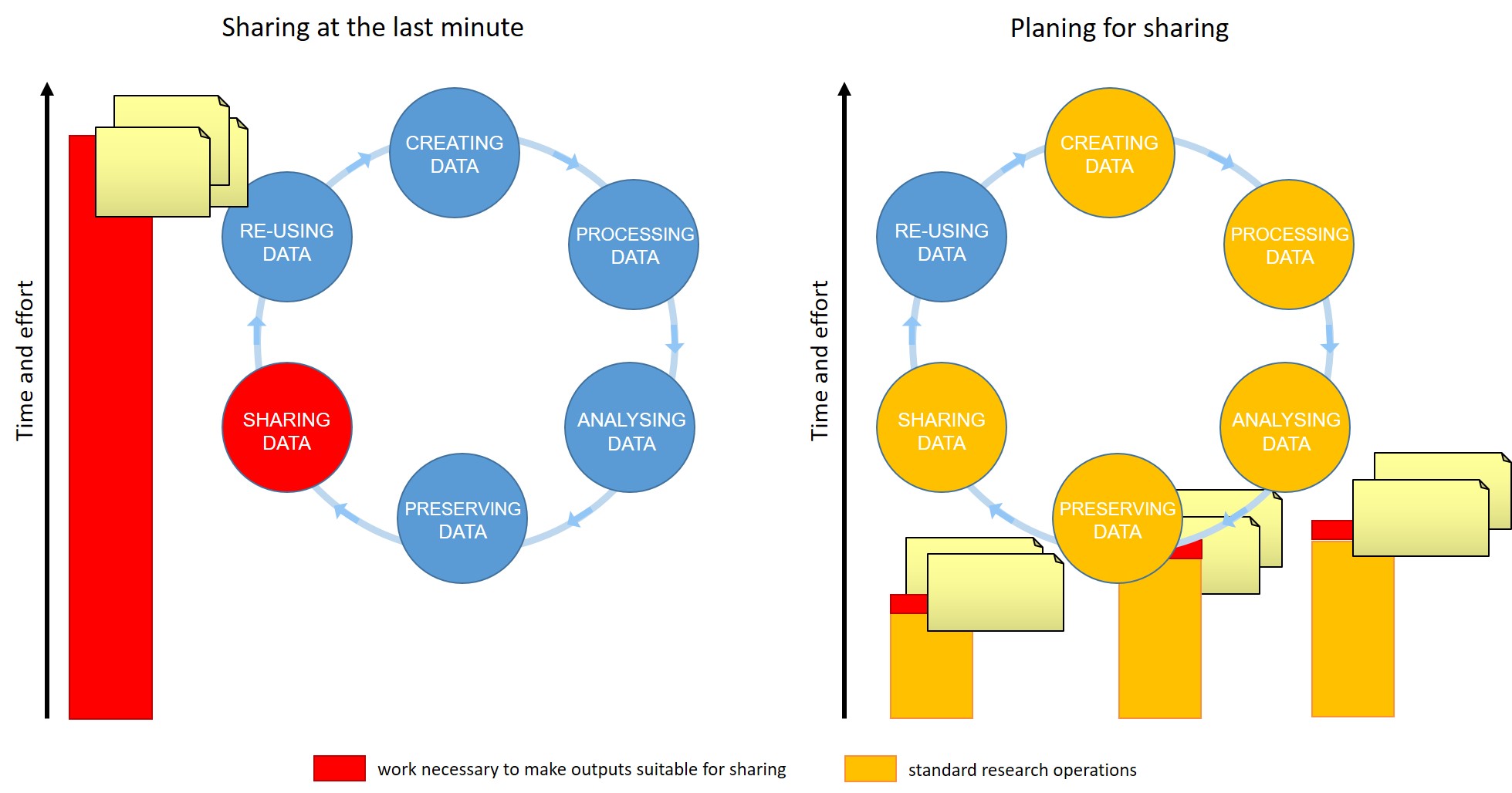

For many of us, data management or output sharing in general are considered a burden rather than a useful activity. Part of the problem is our bad timing and lack of planning.

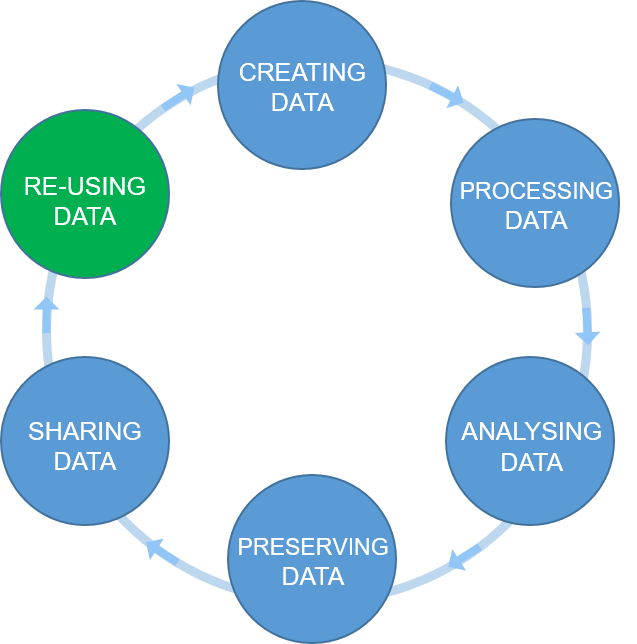

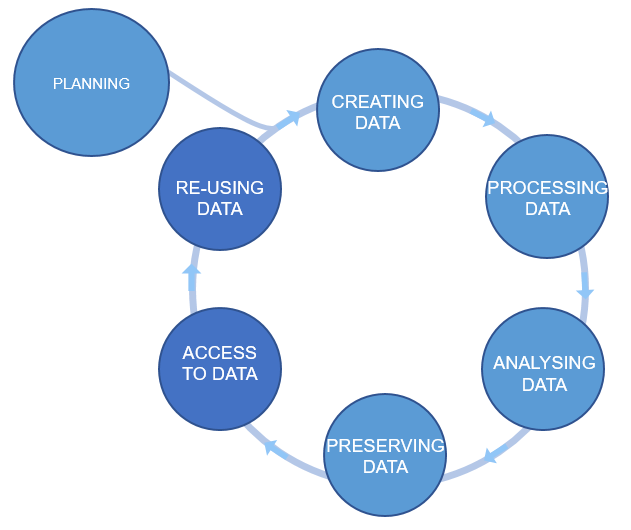

Data management is a continuous process

Figure credits: Tomasz Zielinski and Andrés Romanowski

Figure credits: Tomasz Zielinski and Andrés Romanowski

When should you engage in data sharing and open practices?

- Data management should be done throughout the duration of your project.

- If you wait till the end, it will take a massive effort on your side and will be more of a burden than a benefit.

- The overheads of making data suitable for sharing at each stage of data life-cycle are small comparing to the other actions involved

- Taking the time to do effective data management will help you understand your data better and make it easier to find when you need it (for example when you need to write a manuscript or a thesis!).

- All the practices that enable others to access and use your outcomes directly benefit you and your group

In this workshop we will show you how you can plan and do your research in a way that makes your outputs readily available for re-use by others.

Our agenda:

- Day 1 We will start with explaining Open Science principles and what the benefits are of being open for you and society. Then we will talk about FAIR principles which define steps you/we should take so that our “shared” outputs are of value. We will finish with a brief introduction to metadata and ontologies, and how to be precise to deliver FAIR outputs.

- Day 2 We will show you how to use metadata in Excel, and the benefits of using online records for documenting experiments. We will talk about working and organizing files and appropriate file naming conventions using Excel or CSV tables to store and document data.

-

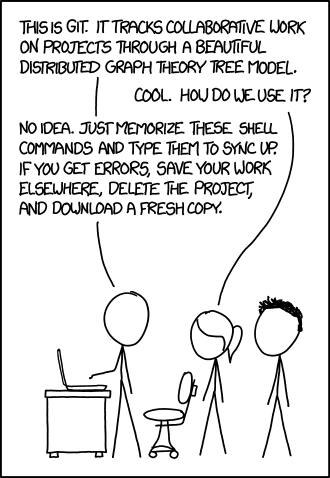

Day 3 We will introduce Jupyter notebooks as a tool for reusable data analysis. We will talk about Version Control. We will teach how to describe your projects using simple text files or customized templates.

- Day 4 We will show you how public repositories make your outputs accessible and reusable. We will consolidate our knowledge of FAIR ready data management and what other tools can help you during your research.

Online workshop specifics

Our learning tools

Before we begin let’s explain how to use the tools:

- Raising hands

- Yes/No sticker

- Chatroom for links not for jokes

- Breakout rooms, leaving and rejoining

- using pad, answering questions in pad

- where to find things If needed, check the pre workshop setup, ask to report problems and help at a break or after the session.

Key Points

Do not be shy

Be nice

Remember, you can do better research if you plan to share your outputs!

Introduction to Open Science

Overview

Teaching: 35 min

Exercises: 20 minQuestions

What is Open Science?

How can I benefit from Open Science?

Why has Open Science become a hot topic?

Objectives

Identify parts of the Open Science movement, their goals and motivations

Explain the main benefits of Open Science

Recognize the barriers and risks in the adoption of Open Science practices

(16 min teaching)

Science works best by exchanging ideas and building on them. Most efficient science involves both questions and experiments being made as fully informed as possible, which requires the free exchange of data and information.

All practices that make knowledge and data freely available fall under the umbrella-term of Open Science/Open Research. It makes science more reproducible, transparent, and accessible. As science becomes more open, the way we conduct and communicate science changes continuously.

What is Open Science

Open science is the movement to make scientific research (including publications, data, physical samples, and software) and its dissemination accessible to all levels of an inquiring society, amateur or professional.

Open Science represents a new approach to the scientific process based on cooperative work and new ways of diffusing knowledge by using digital technologies and new collaborative tools

Open science is transparent and accessible knowledge that is shared and developed through collaborative networks.

Characteristics:

- Using web-based tools to facilitate information exchange and scientific collaboration

- Transparency in experimental methodology, observation, and collection of data

- Public availability and reusability of scientific data, methods and communications

What is the Open Science movement?

Sharing of information is fundamental for science. This began at a significant scale with the invention of scientific journals in 1665. At that time this was the best available alternative to critique & disseminate research, and foster communities of like-minded researchers.

Whilst this was a great step forward, the journal-driven system of science has led to a culture of ‘closed’ science, where knowledge or data is unavailable or unaffordable to many.

The distribution of knowledge has always been subject to improvement. Whilst the internet was initially developed for military purposes, it was hijacked for communication between scientists, which provided a viable route to change the dissemination of science.

The momentum has built up with a change in the way science is communicated to reflect what research communities are calling for – solutions to the majority of problems (e.g. impact factors, data reusability, reproducibility crisis, trust in the public science sector etc…) that we face today.

Open Science is the movement to increase transparency and reproducibility of research, through using the open best practices.

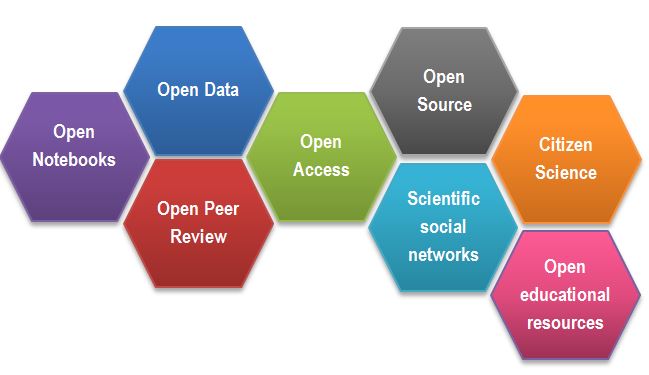

After Gema Bueno de la Fuente

Open Science Building Blocks

-

Open Access: Research outputs hosted in a way that make them accessible for everyone. Traditionally Open Access referred to journal articles, but now includes books, chapters or images.

-

Open Data: Data freely and readily available to access, reuse, and share. Smaller data sets were often accessible as supplemental materials by journals alongside articles themselves. However, they should be hosted in dedicated platforms for more convenient and better access.

-

Open Software: Software where the source code is made readily available; others are free to use, change, and share. Some examples of these including the coding language and supporting software R and RStudio, as well as image analysis software such as Fiji/ImageJ.

-

Open Notebooks: Lab & notebooks hosted online, readily accessible to all. These are popular among some of the large funding bodies and allow anyone to comment on any stage of the experimental record.

-

Open Peer Review: A system where peer review reports are published alongside the body of work. This can include reviewers’ reports, correspondence between parties involved, rebuttals, editorial decisions etc…

-

Citizens Science: Lay people become involved in scientific research, most commonly in data collection or image analysis. Platforms such as zooniverse.org help connect projects with lay people interested in playing an active role in research, which can help generate and/or process data which would otherwise be unachievable by one single person.

-

Scientific social networks: Networks of researchers, which often meet locally in teams, but are also connected online, foster open discussions on scientific issues. Online, many people commonly use traditional social media platforms for this, such as Twitter, Instagram, various sub-reddits, discussion channels on Slack/Discord etc…, although there are also more dedicated spaces such as researchgate.net.

-

Open Education resources: Educational materials that are free for anyone to access and use to learn from. These can be anything from talks, instructional videos, and explanations posted on video hosting websites (e.g. YouTube), to entire digital textbooks written and then published freely online.

-

Citizen science: Citizen participation of various stages of research process from project funding to collecting and analysing data.

Exercise 1: Benefits of Open Science (5 min)

Being open has other outcomes/consequences beyond giving the free access to information. For example, Open educational resources:

- enables collaborative development of courses

- improves teachers/instructors skills by sharing ideas

Select one or two of the following OS parts:

- Open Access

- Open Data

- Open Software

- Open Notebooks

- Open Peer Review

and discuss what are the benefits or what problems are solved by adaption of those Open initiatives.

Solution

Possible benefits and consequences for each part:

Open Access

- speed of knowledge distribution

- leveling field for underfunded sites which otherwise wouldn’t be able to navigate the paywall

- prevent articles being paid for ‘thrice’ (first to produce, second to publish, third to access) by institutions.

- greater access to work by others, increasing chance for exposure & citations

- access to work by lay audiences, thus increases social exposure of research

Open Data

- ensures data isn’t lost overtime - reusability

- acceleration of scientific discovery rate

- value for money/reduced redundancy

- permits statistical re-analysis of the data to validate findings

- gives access to datasets which were not published as papers (e.g. negative results, large screening data sets)

- provides an avenue to generate new hypotheses

- permits combination of multiple data sources to address questions, provides greater power than a single data source

Open Software

- great source to learn programming skills

- the ability to modify creates a supportive community of users and rapid innovation

- saves time

- faster bug fixes

- better error scrutiny

- use of the same software/code allows better reproducibility between experiments

- need funds to maintain and update software

Open Notebooks

- 100% transparent science, allowing input from others at early stages of experiments

- source of learning about the process of how science is actually conducted

- allows access to experiments and data which otherwise never get published

- provides access to ‘negative’ results and failed experiments

- anyone, anywhere around the world, at any time, can check in on projects, including many users simultaneously

- possibility of immediate feedback

- thorough evidence of originality of ideas and experiments, negating effect of ‘scooping’

Open Peer Review

- visibility leads to more constructive reviews

- mitigates against editorial conflicts of interest and/or biases

- mitigates against reviewers conflicts of interest and/or biases

- allows readers to learn/benefit from comments of the reviewers

Open Educational Materials

- Foster collaboration between educators/others

- Show clearly how method was taught (e.g. Carpentries materials) which can be reproduces anywhere, anytime

- protects materials from becoming technologically obsolete

- authors preparing the material or contribute all earn credit (e.g. GitHub)

- recycle animations and material that is excellent (why reinvent the wheel?)

Motivation: Money (8 min teaching)

One has to consider the moral objectives that accompany the research/publication process: charities/taxpayers pay to fund research, these then pay again to access the research they already funded.

From an economic point of view, scientific outputs generated by public research are a public good that everyone should be able to use at no cost.

According to EU report “Cost-benefit analysis for FAIR research data”, €10.2bn is lost every year because of not accessible data (plus additional 16bn if accounting for re-use and research quality).

The goals of Open Science is to make research and research data available to e.g. charities/taxpayers who funded this research.

COAlition S, a group of national research funding organisations backed by the European Commission and the European Research Council, is a big driver trying to get rid of the paywalls that our research is sat behind. They announced Plan S, an initiative to make research publications fully free at the point of access, meaning that all research funded by public funding bodies must be published Open Access from 2021 onwards.

Open Access (a successful example)

The majority of larger UK and other countries’ funding bodies are now making Open Access publication conditional upon funding.

The initiative is known as Plan S, which requires “resulting publications available immediately (without embargoes) and under open licences, either in quality Open Access platforms or journals or through immediate deposit in open repositories that fulfil the necessary conditions.”

Exact requirements differ between funding bodies, with the minimum requirement being that a copy be deposited with your home institution.

Details of funding bodies and their involvement and requirements can be found at Plan S/cOAlition S. There is also a cOAlition S journal checker tool to assess compliance being developed. The Directory of Open Access Journals (DOAJ) is a tool to find which journals are Open Access.

Motivation: Reproducibility

The inherited transparency of Open Science and the easy access to data, methods and analysis details naturally help to address the Reproducibility crisis. The openness of scientific communications and of the actual process of evaluation of the research (Open Peer Review) increase confidence in the research findings.

Personal motivators

Open Science is advantageous to many parties involved in science (including researcher community, funding bodies, the public even journals), which is leading to a push for the widespread adoption of Open Science practices.

Large UK funding bodies such as The Wellcome Trust are big supporters of Open Science. We can see with the example of Open Access, that once enforced by funders (the stick) there is a wide adoption. But what about the personal motivators, the carrots.

Exercise 2: Personal benefits of being “open” (4 min)

Below are some personal benefits to adopting Open Science practices. Read through them which of them are the strongest motivators for you. Select two the most important/attractive for you and mark them with +1, select the two least important for you and mark them with 0

- receive higher citations

- complying with funders’ policies

- get extra value from your work (e.g. collaborators, reuse by modellers, ML specialists)

- demonstrate research impact

- save own time (reproducibility but also communication overhead)

- become pioneers

- distinguish yourself from the crowd

- plan successful research proposals

- gain valuable experience

- form community

- increased speed and/or ease of writing papers

- speed up and help with peer review

- build reputation and presence in the science community

- evidence of your scientific rigour and work ethic

- avoid embarrassment/disaster when you cannot reproduce your results

Can you think of other benefits? How personal benefits of Open Science compare to the benefits for the (scientific) society?

(3 min teaching)

The main difference between the public benefits of Open Science practices and the personal motivators of outputs creators, that the public can benefit almost instantly from the open resources. However, the advantages for data creator comes with a delay, typically counted in years. For example, building reputation will not happen with one dataset, the re-use also will lead to citations/collaboration after the next research cycle.

Barriers and risks of OS movement:

Exercise 3: Why we are not doing Open Science already (4 min)

Discuss Open Science barriers, mention the reasons for not already being open:

Solution

- sensitive data (anonymising data from administrative health records can be difficult)

- IP

- misuse (fake news)

- lack of confidence (the fear of critics)

- lack of expertise

- the costs in $ and in time

- novelty of data

- it is not mandatory

- lack of credit (publishing negative results is of little benefit to you)

(9 min teaching)

It may seem obvious that we should adopt open science practices, but there are associated challenges with doing so.

Sensitivity of data is sometimes considered a barrier. Shared data needs to be compliant with data privacy laws, leading many to shy away from hosting it publicly. Anonymising data to desensitise it can help overcome this barrier.

The potential for intellectual property on research can dissuade some from adopting open practices. Again, much can be shared if the data is filtered carefully to protect anything relating to intellectual property.

Another risk could be seen with work on Covid19: pre-prints. A manuscript hosted publicly prior to peer review, may accelerate access to knowledge, but can also be misused and/or misunderstood. This can result in political and health decision making based on faulty data, which is counter to societies’ best interest.

One concern is that opening up ones data to the scientific community can lead to the identification of errors, which may lead to feelings of embarrassment. However, this could be considered an upside - we should seek for our work to be scrutinized and errors to be pointed out, and is the sign of a competent scientist. One should rather have errors pointed out rather than risking that irreproducible data might cause even more embarrassment and disaster.

One of the biggest barriers are the costs involved in “being Open”. Firstly, making outputs readily available and usable to others takes time and significant effort. Secondly, there are costs of hosting and storage. For example, microscopy datasets reach sizes in terabytes, making such data accessible for 10 years involves serious financial commitment.

Get involved

Thankfully, incentive structures are beginning to support Open Science practices:

- Universities signing up to the Declaration on Research Assessment (DORA).

- Wellcome Trust funding proposals that increase Open Science

- Wellcome Trust asked for description of Open Science activities in the grant application

You do not want to be left behind!

Where to next

Further reading/links:

Exercise 4: Open Science Quiz (5 min + runs over break)

Which of the following statements about the OS movement are true/false?

- Open Science relies strongly on the Internet

- Open Access eliminates publishing costs

- Open Data facilitates re-use

- Open Data can increases confidence in research findings

- In Open Peer Review, readers vote on publication acceptance

- Open Notebooks improve reproducibility

- Open Notebooks can create patenting issues

- Open Access permits the whole society to benefit from scientific findings

- Citizen Science engages the public in the research process

- Citizen Science can help get ecological data quickly

Solution

- Open Science relies strongly on the Internet T

- Open Access eliminates publishing costs F

- Open Data facilitates re-use T

- Open Data increases confidence in research findings T

- In Open Peer Review, readers vote on publication acceptance F

- Open Notebooks improve reproducibility T

- Open Notebooks can create patenting issues T*

- Open Access permits the whole society to benefit from scientific findings T

- Citizen Science engages the public in the research process T

- Citizen Science can help get ecological data quickly T

Attribution

Content of this episode was adapted from:

Key Points

Open Science increases transparency in research

Publicly funded science should be publicly available

While both You and the research community benefit from open practices, they are costs involved in making outputs open

Being FAIR

Overview

Teaching: 45 min

Exercises: 15 minQuestions

How to get more value from your own data?

What are the FAIR guidelines?

Why being FAIR matters?

Objectives

Recognize typical issues that prevent data re-use

Understand FAIR principles

Know steps for achieving FAIR data

(7 min teaching)

We have seen how Open practices can benefit both scientific community as a whole and individual practitioner. The wide adoption of Open Access principles has resulted in an easy access to recent biomedical publications. Unfortunately, the same cannot be said about data and software that accompanies those publications.

What is data

Although scientific data is a very broad term, we still encounter groups who (wrongly) believe they do not have data! Data does not mean Excel files with recorded measurements from a machine. Data also includes:

- images, not only from microscopes

- information about biological materials, like strain or patient details

- biological models

- recipes, laboratory and measurement protocols

- scripts, analysis procedures, and custom software can also be considered data However, there are specific recommendations on how to deal with code.

Let’s have a look how challenging it can be to access and use data from published biological papers.

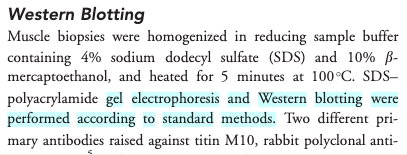

Exercise 1: Impossible protocol (4 min)

You need to do a western blot to identify Titin proteins, the largest proteins in the body, with a molecular weight of 3,800 kDa. You found an antibody sold by Sigma Aldrich that has been validated in western blots and immunofluorescence. Sigma Aldrich lists the Yu et al., 2019 paper as reference.

Find details of how to separate and transfer this large protein in the reference paper.

- Hint 1: Methods section has a Western blot analysis subsection.

- Hint 2: Follow the references.

Would you say that the methods was Findable? Accessible? Reusable?

Solution

- Ref 17 will lead you to this paper, which first of all is not Open Access

- Access the paper through your institutions (if you can) and find the ‘Western Blotting’ protocol on page 232 which will show the following (Screenshot from the methods section from Evilä et al 2014):

- “Western blotting were performed according to standard methods.” - with no further reference to these standard methods, describing these methods, or supplementary material detailing these methods

- This methodology is unfortunately a true dead end and we thus can’t easily continue our experiments!

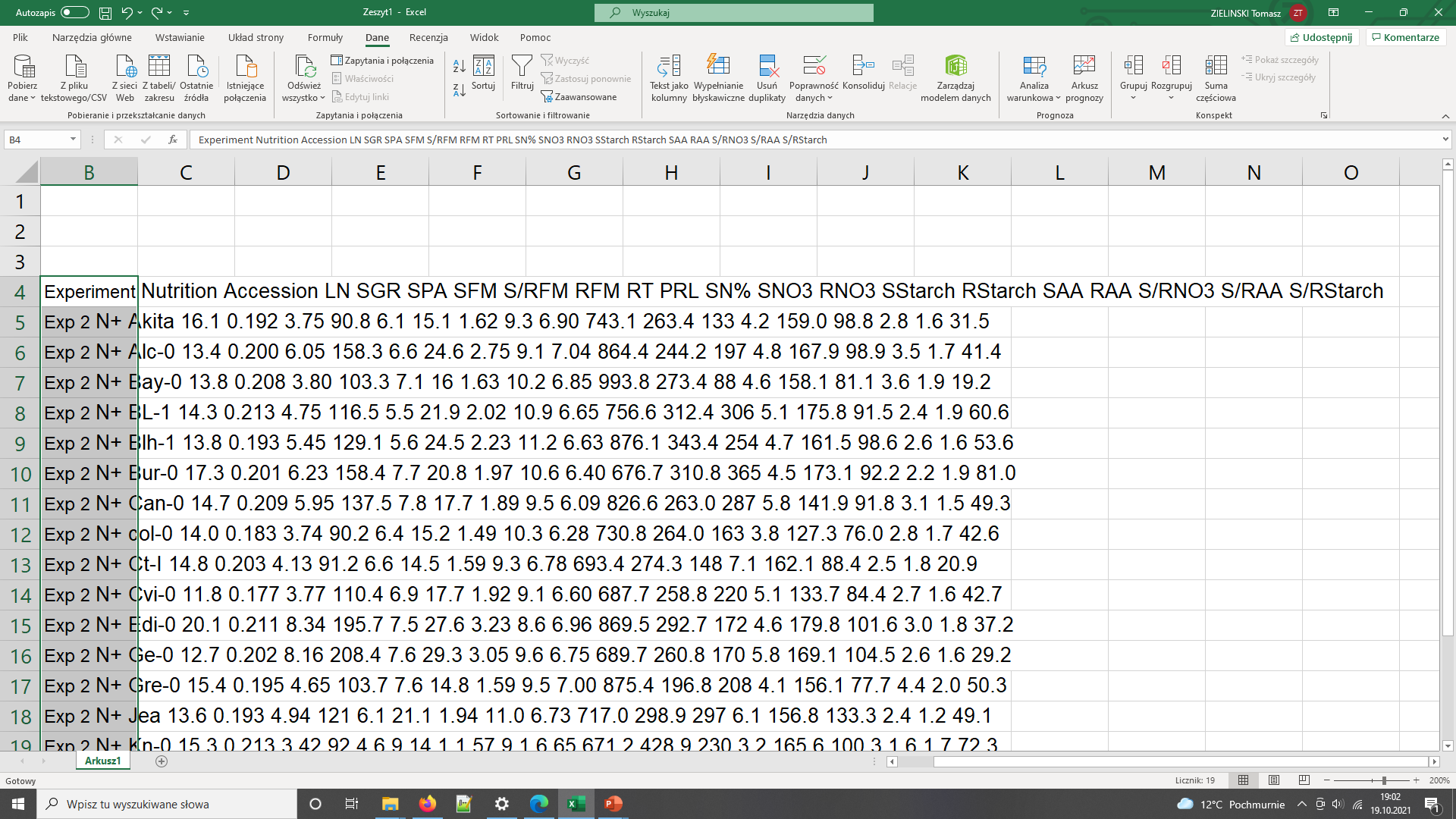

Impossible numbers

Ikram 2014 paper contains data about various metabolites in different accessions (genotypes) of Arabidopsis plant. You would like to calculate average nitrogen content in plants grown under normal and nitrogen limited conditions. Please calculate the average (over genotypes) nitrogen content for the two experimental conditions.

- Hint 1. Data are in Supplementary data

- Hint 2. Search for nitrogen in paper text to identify the correct data column.

Solution

- Finding the right table and column containing the relevant data is already problematic as the headers are obscured so they need to decoded using manuscript

- Data in pdf table so they cannot be readily used in calculations

- Depending on the software used to open (and the way the pdf was created), the local machine international settings, copying the data into Excel can bring unexpected results

Data needs parsing after coping to Excel

The same data copied to Excel with polish locale has been converted to dates- In general pdf tables cannot be read programaticaly from R or Python.

Impossible numbers

Systems biologists usually require raw numerical data to build their models. Take a look at the following example: Try to find the numerical data behind the graph shown in Figure 6 which demonstrates changes in levels of phytochrome proteins from Sharrock RA and Clack T, 2002.

- Hint 1: Materials and methods describe quantification procedure

- Hint 2: Supporting Information or Supplementary Materials sections often contain data files.

How easy it was?

Impossible resource/link

RNA-seq (transcriptomics) data is usually deposited in online repositories such as SRA or ArrayExpress. Your task is to find the link to the repository of the raw RNA-seq data in Li et al., Genes Dev. 2012. Can you find it anywhere?

(29 min teaching)

The above examples illustrate the typical challenges in accessing research data and software. Firstly, data/protocols/software often do not have an identity of their own, but only accompany a publication. Second, they are not easily accessible or reusable, for example, all the details are inside one supporting information PDF file. Such file includes “printed” numerical table or even source code, both of which need to be “re-typed” if someone would like to use them. Data are shared in proprietary file format specific to a particular vendor and not accessible if one does not have a particular software that accompanies the equipment. Finally, data files are provided without detailed description other than the whole article text.

In our examples, the protocol was difficult to find (the loops), difficult to access (pay wall), and not reusable as it lacked the necessary details (dead-end). In the second example the data were not interoperable and reusable as their were only available as a figure graph.

To avoid such problems FAIR principles were designed.

After SangyaPundir

After SangyaPundir

FAIR Principles

In 2016, the FAIR Guiding Principles for scientific data management and stewardship were published in Scientific Data. The original guideline focused on “machine-actionability” - the ability of computer systems to operate on data with minimal human intervention. However, now the focus has shifted to making data accessible from a human perspective, and not an automated one (mostly due to the lack of user friendly tools that could help deal with standards and structured metadata).

Findable: Easy to find data and metadata for both humans and computers. Automatic and reliable discovery of datasets and services depends on machine-readable persistent identifiers (PIDs) and metadata.

Accessible: (Meta)data should be retrievable by their identifier using a standardized and open communications protocol (including authentication and authorisation). Metadata should be available even when the data are no longer available.

Interoperable: Data should be able to be combined with and used with other data or tools. The format of the data should be open and interpretable for various tools. It applies both to data and metadata, (meta)data should use vocabularies that follow FAIR principles.

Re-usable: FAIR aims at optimizing the reuse of data. Metadata and data should be well-described so that they can be replicated and/or combined in different settings. The reuse of (meta)data should be stated with clear and accessible license(s).

FAIR in biological practice

Findable & Accessible

Deposit data to an external, reputable public repository.

Repositories provide persistent identifiers (PIDs), catalogue options, advanced metadata searching, and download statistics. Some repositories can also host private data or provide embargo periods, meaning access to all data can be delayed.

There are general “data agnostic” repositories, for example:

Or domain specific, for example:

- UniProt protein data,

- GenBank sequence data,

- MetaboLights metabolomics data

- GitHub for code.

We will cover repositories in more details in a later episode.

What are persistent identifiers (PIDs)

A persistent identifier is a long-lasting reference to a digital resource. Typically it has two components:

- a service that locates the resource over time even when its location changes

- and a unique identifier (that distinguishes the resource or concept from others).

Persistent identifiers aim to solve the problem of the persistence of accessing cited resource, particularly in the field of academic literature. All too often, web addresses (links) changes over time and fail to take you to the referenced resource you expected.

There are several services and technologies (schemes) that provide PIDs for objects (whether digital, physical or abstract). One of the most popular is Digital Object Identifier (DOI), recognizable by the prefix doi.org in the web links. For example: https://doi.org/10.1038/sdata.2016.18 resolves to the location of the paper that describes FAIR principles.

Public repositories often maintain web addresses of their content in a stable form which follow the convention http://repository.adress/identifier; these are often called permalinks. For well establish services, permalinks can be treated as PIDs.

For example: http://identifiers.org/SO:0000167 resolves to a page defining promoter role, and can be used to annotate part of a DNA sequence as performing such a role during transcription.

Interoperable

- Use common file formats (can be domain specific)

- Always use .csv or .xls files for numerical data. Never share data tables as word or pdf,

- Provide underlying numerical data for all plots and graphs

- Convert proprietary binary formats to the open ones. For example convert Snapgene to Genbank, microscopy multistack images to OME-TIFF

Reusable

- Describe your data well / provide good metadata

- write README file describing the data

- user descriptive column headers for the data tables

- tidy data tables, make them analysis friendly

- provide as many details as possible (prepare good metadata)

- use (meta)data formats (e.g. SBML, SBOL)

- follow Minimum Information Standards

Describing data well is the most challenging part of the data sharing process. We will cover this in more detail later on.

- Attach license files.

Licenses explicitly declare conditions and terms by which data and software can be re-used.

Here, we recommend:

- for data Creative Commons Attribution (CC BY) license,

- for code a permissive open source license such as the MIT, BSD, or Apache license.

Copyright and data

Software code (the text) automatically gets the default copyright protection which prevents others from copying or modifying it. Only by adding the explicit licence you can permit re-use by others.

Data, being factual, cannot be copyrighted. So why, do we need a license?

While the data itself cannot be copyrighted, the way how it is presented can be. The extend to which it is protected needs ultimately to be settled by the court.

The “good actors” will restrain from using your data to avoid “court” risks. The “bad actors” will either ignore the risk or can afford the lawyers fees.

Exercise 2: Example of FAIR data (4 min)

Zenodo is general data repository. Have a look at the dataset record with COVID-19 data: https://doi.org/10.5281/zenodo.6339631

Identify how each of F.A.I.R principles has been met.

Hint: navigate to linked github record to easily access the README fileSolution

- (F) The dataset is identified by a PID (doi). It can be found by its ID. It has human accessible description and keywords, both suitable for discovery.

- (A) Data can be downloaded using standard browser.

- (I) Dataset entries are in common formats: csv, R, jpg

- (I) Dataset is linked to publication, github record and project website

- (R) The record contains rich metadata in README file, including files structure and the detailed tables formats.

- (R) Data are released under open Creative Commons Attribution Licence

Exercise 2 (too)Advanced: Example of FAIR data (4 min)

Depending on the audience this version of the excercise may present information overload.

Uniprot is a high-quality and freely accessible resource of protein sequence and functional information. Have a look at the record of the GFP protein: https://www.uniprot.org/uniprot/P42212

Identify how each of F.A.I.R principles has been met.

Alternative records to check: https://www.ncbi.nlm.nih.gov/nuccore/AH002877.2 https://synbiohub.org/public/bsu/SubtilinReceiver_spaRK_separated/1

Solution

All entries are uniquely identified by a stable URL (‘F’), that provides access to the record in a variety of formats including a web page, plain-text, FASTA, and GENBANK (‘A’, ‘I’). The record contains rich metadata (‘R’) that is both human-readable (HTML) and machine-readable (text) (‘I’). There search options that uses both record IDs and the rich metadata (‘F’). The graphical pane shows how the data are re-usable. Metadata uses ontological terms (e.g. taxonomy) and shared vocabularies (e.g. genbank features) (‘I’). Interlinking with other databases: GenBank, Pubmed (‘I’, ‘R’) enabling automated retrieval of records and cross-referencing information.

Exercise 3: FAIR and You (3 min)

The FAIR acronym is sometimes accompanied with the following labels:

- Findable - Citable

- Accessible - Trackable and countable

- Interoperable - Intelligible

- Reusable - Reproducible

Using those labels as hints discuss how FAIR principles directly benefit you as the data creators.

Solution

- Findable data have their own identity, so they can be easily cited and secure the credits to the authors

- Data accessibility over the Internet using standard protocols can be easily monitored (for example using Google analytics). This results in metrics on data popularity or even geo-locations of data users.

- Interoperable data can benefit the future you, for example you will be able to still read your data even when you no longer have access to the specialized, vendor specific software with which you worked with them before. Also the future you may not remember abreviations and ad-hoc conventions you used before (Intelligible).

- Well documented data should contain all the details necessary to reproduce the experiments, helping the future you or someone taking over from you in the laboratory.

- Saves time and money.

FAIR vs Open Science (3 min teaching)

FAIR does not mean Open. Actually, FAIR guideline only requires that the metadata record is always accessible. For example, the existence of the data can be known (their metadata), the data can have easy to use PID to reference them, but the actual data files can only be downloaded after the login and authorization.

However, if data are already in the FAIR form, i.e. accessible over the internet, in interoperable format and well documented, then it is almost effortless to “open” the dataset and make it available to the whole public. The data owner can do it any time when he no longer perceives oppening as a risk.

At the same time, Open data which does not follow FAIR guidelines have little value. If they are not well described, not in open formats then they are not going to be re-used even if they were made “open” by posting them on some website.

Exercise 4: FAIR Quiz (5 min - run through break)

Which of the following statements is true/false (T or F).

- F in FAIR stands for free.

- Only figures presenting results of statistical analysis need underlying numerical data.

- Sharing numerical data as a .pdf in Zenodo is FAIR.

- Sharing numerical data as an Excel file via Github is not FAIR.

- Group website is a good place to share your data.

- Data from failed experiments are not re-usable.

- Data should always be converted to Excel or .csv files in order to be FAIR.

- A DOI of a dataset helps in getting credit.

- FAIR data are peer reviewed.

- FAIR data accompany a publication.

Solution

- F in FAIR stands for free. F

- Only figures presenting results of statistical analysis need underlying numerical data. F

- Sharing numerical data as a .pdf in Zenodo is FAIR. F

- Sharing numerical data as an Excel file via Github is not FAIR. F

- Group website is a good place to share your data. F

- Data from failed experiments are not re-usable. F

- Data should always be converted to Excel or .csv files in order to be FAIR. F

- A DOI of a dataset helps in getting credit. T

- FAIR data are peer reviewed. F

- FAIR data accompany a publication. F

Key Points

FAIR stands for Findable Accessible Interoperable Reusable

FAIR assures easy reuse of data underlying scientific findings

Intellectual Property, Licensing and Openness

Overview

Teaching: 13 min

Exercises: 7 minQuestions

What is intellectual property?

Why should I consider IP in Open Science?

Objectives

Timeline matters for legal protection

Understand what can and cannot be patented

Understand what licenses to use for re-use of data and software

(13 min teaching)

Open Science and Intellectual property

This section is tailored to the EU and UK.

Intellectual property (IP) is something that you create using your mind - for example, a story, an invention, an artistic work or a symbol.

The timeline of “opening” matters when one seeks legal protection for his IP.

For example, patents are granted only for inventions that are new and were not known to the public in any form. Publishing in a journal or presenting in a conference information related to the invention completely prevents the inventor from getting a patent!

In our opinion, you are more likely to benefit from new collaborations, industrial partnerships, consultations which are acquired by openness, than from patent related royalties.

(Optional) Intellectual property protection

This section is tailored to the EU and UK.

You can use a patent to protect your (technical) invention. It gives you the right to take legal action against anyone who makes, uses, sells or imports it without your permission.

Discoveries, mathematical methods, computer programs and business methods as such are not regarded as inventions. Surgical and therapeutic procedures, diagnostic methods and new plant or animal varieties are completely excluded from patentability.

Patents are granted only for inventions that are new and were not known to the public in any form. Publishing in a journal or presenting in a conference information related to the invention completely prevents the inventor from getting a patent!

In principle, software cannot be patented. However, it is a “no but yes” situation, and a software patent are being granted. It is usually, settled by the court for each case.

Software code is copyrighted. Copyright prevents people from:

- copying your code

- distributing copies of it, whether free of charge or for sale.

Data cannot be patented, and in principle, it cannot be copyrighted. It is not possible to copyright facts!

However, how data are collated and presented (especially if it is a database), can have a layer of copyright protection. Deciding what data needs to be included in a database, how to organize the data, and how to relate different data elements are all creative decisions that may receive copyright protection. Again, it is often a case by case situation and may come down to who has better lawyers.

After: UK Government, Intellectual Property European Patent Office, Inventor’s Handbook

Exercise 1: Checking common licenses (5 min + discussion)

Open CC BY license summary https://creativecommons.org/licenses/by/4.0/ is it clear how you can use the data under this licence and why it is popular in academia?

Check the MIT license wording: https://opensource.org/licenses/MIT is it clear what you can do with software code under this licence?

Compare the full wording of CC BY https://creativecommons.org/licenses/by/4.0/legalcode can you guess why the MIT licence is currently one of the most popular for open source code?

Solution

- CC BY license states material can be reproduced, shared, in whole or in part, unless where exceptions and limitations are stated. Attributions must be made to the Licensor.

- MIT license states that Software can by used without restriction (to copy, modify, publish, distribute etc…)

- The MIT license is short, to the point and optimised for software developers as it offers flexibility.

Key Points

A license is a promise not to sue - therefore attach license files

For data use Creative Commons Attribution (CC BY) license (CC0 is even more permissive)

For code use open source licenses such as MIT, BSD, or Apache license

Introduction to metadata

Overview

Teaching: 11 min

Exercises: 14 minQuestions

What is metadata?

What do we use metadata for?

Objectives

Recognise what metadata is

Distinguish different types of metadata

Understand what makes metadata interoperable

Know how to decide what to include in metadata

(5 min teaching)

What is (or are) metadata?

Simply put, metadata is data about the data. Sound confusing? Lets clarify: metadata is the description of data. It allows deeper understanding of data and provides insight for its interpretation. Hence, your metadata should be considered as important as your data. Further, metadata plays a very important role in making your data FAIR. It should be continuously added to your research data (not just at the beginning or end of a project!). Metadata can be produced in an automated way (e.g. when you capture a microscopy image usually the accompanying software saves metadata as part of it) or manually.

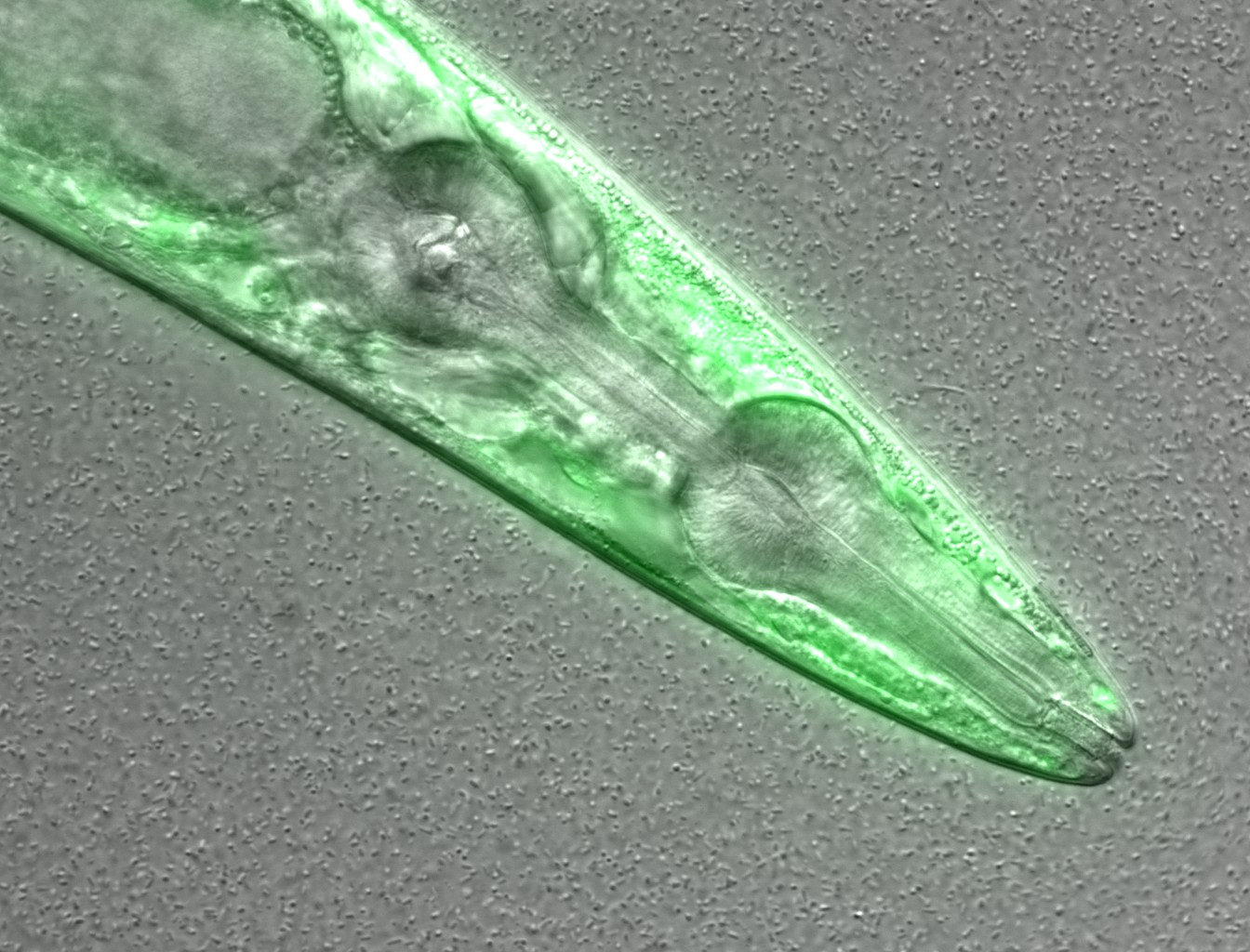

Let’s take a look at an example:

This is a confocal microscopy image of a C. elegans nematode strain used

as a proteostasis model (Pretty! Isn’t it?). The image is part of the raw data

associated to Goya et al., 2020,

which was deposited in a Public Omero Server

Project

Figure1 set

Figure credits: María Eugenia Goya

Figure credits: María Eugenia Goya

. What information can you get from the image, without the associated description (metadata)?

Let’s see the associated metadata of the image and the dataset to which it belongs:

Image metadata

Name: OP50 D10Ad_06.czi Image ID: 3485 Owner: Maria Eugenia Goya ORCID: 0000-0002-5031-2470

Acquisition Date: 2018-12-12 17:53:55 Import Date: 2020-04-30 22:38:59 Dimensions (XY): 1344 x 1024 Pixels Type: uint16 Pixels Size (XYZ) (µm): 0.16 x 0.16 x 1.00 Z-sections/Timepoints: 56 x 1 Channels: TL DIC, TagYFP ROI Count: 0

Tags: time course; day 10; adults; food switching; E. coli OP50; NL5901; C. elegans

Dataset metadata

Name: Figure2_Figure2B Dataset ID: 263 Owner: Maria Eugenia Goya ORCID: 0000-0002-5031-2470

Description: The datasets contains a time course of α-syn aggregation in NL5901 C. elegans worms after a food switch at the L4 stage:

E. coli OP50 to OP50 Day 01 adults Day 03 adults Day 05 adults Day 07 adults Day 10 adults Day 13 adults

E. coli OP50 to B. subtilis PXN21 Day 01 adults Day 03 adults Day 05 adults Day 07 adults Day 10 adults Day 13 adults

Images were taken at 6 developmental timepoints (D1Ad, D3Ad, D5Ad, D7Ad, D10Ad, D13Ad)

* Some images contain more than one nematode.

Each image contains ~30 (or more) Z-sections, 1 µmeters apart. The TagYFP channel is used to follow the alpha-synuclein particles. The TL DIC channel is used to image the whole nematode head.

These images were used to construct Figure 2B of the Cell Reports paper (https://doi.org/10.1016/j.celrep.2019.12.078).

Creation date: 2020-04-30 22:16:39

Tags: protein aggregation; time course; E. coli OP50 to B. subtilis PXN21; food switching; E. coli OP50; 10.1016/j.celrep.2019.12.078; NL5901; C. elegans

This is a lot of information!

Types of metadata

According to How to FAIR we can distinguish between three main types of metadata:

- Administrative metadata: data about a project or resource that are relevant for managing it; E.g. project/resource owner, principal investigator, project collaborators, funder, project period, etc. They are usually assigned to the data, before you collect or create them.

- Descriptive or citation metadata: data about a dataset or resource that allow people to discover and identify it; E.g. authors, title, abstract, keywords, persistent identifier, related publications, etc.

- Structural metadata: data about how a dataset or resource came about, but also how it is internally structured. E.g. the unit of analysis, collection method, sampling procedure, sample size, categories, variables, etc. Structural metadata have to be gathered by the researchers according to best practice in their research community and will be published together with the data.

Descriptive and structural metadata should be added continuously throughout the project.

Exercise 1: Identifying metadata types (4 min)

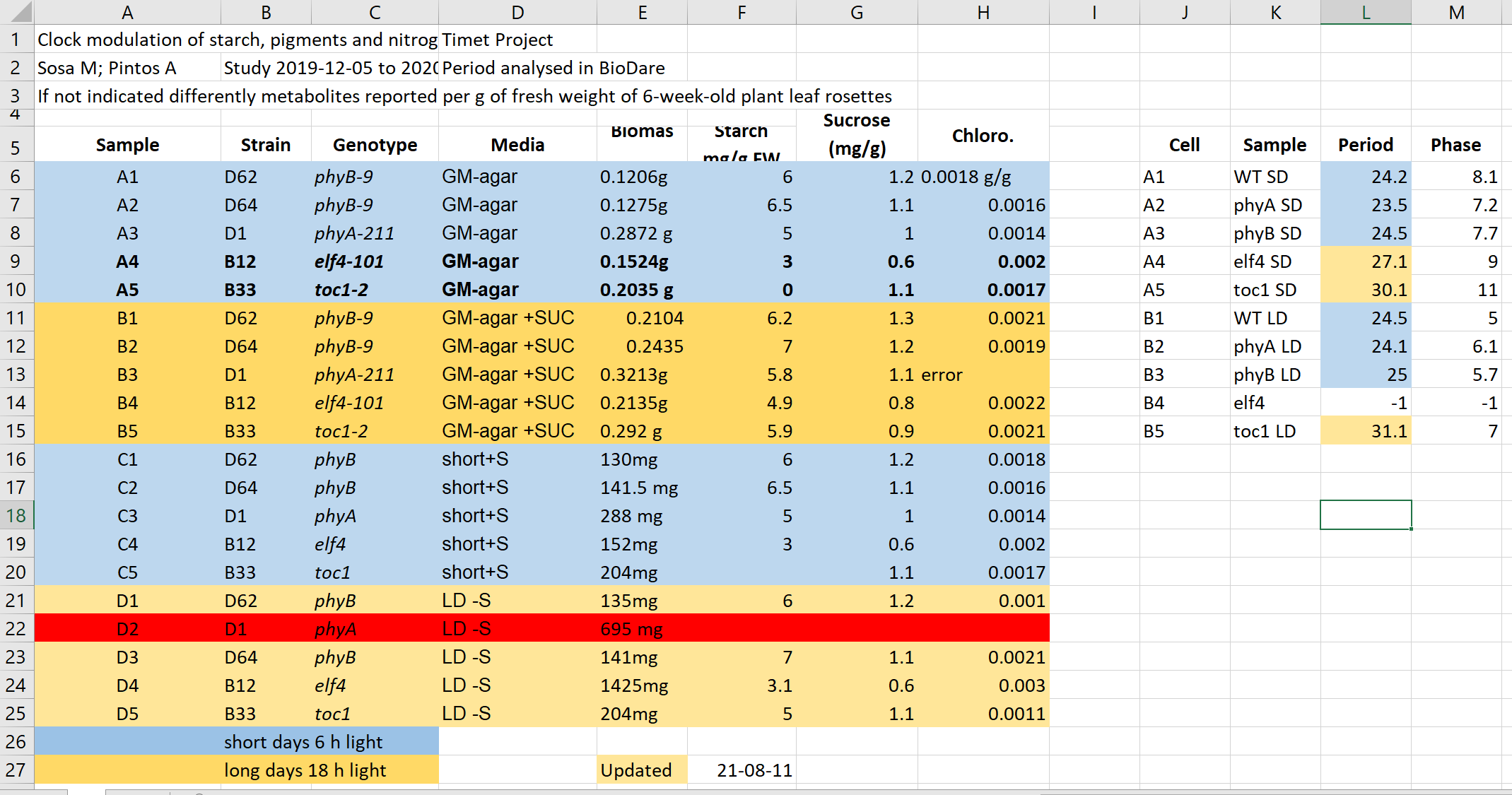

Here we have an excel spreadsheet that contains project metadata for a made-up experiment of plant metabolites

Figure credits: Tomasz Zielinski and Andrés Romanowski

In groups, identify different types of metadata (administrative, descriptive, structural) present in this example.

Solution

- Administrative metadata marked in blue

- Descriptive metadata marked in orange

- Structural metadata marked in green

Figure credits: Tomasz Zielinski and Andrés Romanowski

(6 min teaching)

Where does data end and metadata start?

What is “data” and what is “metadata” can be a matter of perspective: Some researchers’ metadata can be other researchers’ data.

For example, a funding body is categorised as typical administrative metadata, however, it can be used to calculate numbers of public datasets per funder and then used to compare effects of different funders’ policies on open practices.

Adding metadata to your experiments

Good metadata are crucial for assuring re-usability of your outcomes. Adding metadata is also a very time-consuming process if done manually, so collecting metadata should be done incrementally during your experiment.

As we saw metadata can take many forms from as simple as including a ReadMe.txt file, by embedding them inside the Excel files, to using domain specific metadata standards and formats.

But,

- What should be included in metadata?

- What terms should be used in descriptions?

For many assay methods and experiment types, there are defined recommendations and guidelines called Minimal Information Standards.

Minimal Information Standard

The minimum information standard is a set of guidelines for reporting data derived by relevant methods in biosciences. If followed, it ensures that the data can be easily verified, analysed and clearly interpreted by the wider scientific community. Keeping with these recommendations also facilitates the foundation of structuralized databases, public repositories and development of data analysis tools. Individual minimum information standards are brought by the communities of cross-disciplinary specialists focused on issues of the specific method used in experimental biology.

Minimum Information for Biological and Biomedical Investigations (MIBBI) is the collection of the most known standards.

FAIRSharing offers excellent search service for finding standards

Exercise 2: Minimal information standard example (5 min)

Look at Minimum Information about a Neuroscience Investigation (MINI) Electrophysiology Gibson, F. et al. Nat Prec (2008). which contains recommendations for reporting the use of electrophysiology in a neuroscience study.

(Neuroscience (or neurobiology) is the scientific study of the nervous system).Scroll to Reporting requirement and decide which of the points 1-8 are:

- a) important for understanding and reuse of data

- b) important for technical replication

- c) could be applied to other experiments in neuroscience

Solution

Possible answers:

- a) 2, 3, 4, 5, 6, 8a-b

- b) 3, 7

- c) 2, 3, 4, 5, 6

What if there are no metadata standards defined for your data / field of research?

Think about the minimum information that someone else (from your lab or from any other lab in the world) would need to know to be able to work with your dataset without any further input from you.

Think as a consumer of your data not the producer!

Exercise 3: What to include - discussion (4 minutes)

Think of the data you generate in your projects, and imagine you are going to share them.

What information would another researcher need to understand or reproduce your data (the structural metadata)?

For example, we believe that any dataset should have:

- a name/title

- its purpose or experimental hypothesis

Write down and compare your proposals, can we find some common elements?

Solution

Some typical elements are:

- biological material, e.g. Species, Genotypes, Tissue type, Age, Health conditions

- biological context, e.g. speciment growth, entrainment, samples preparation

- experimental factors and conditions, e.g. drug treatments, stress factors

- primers, plasmid sequences, cell line information, plasmid construction

- specifics of data acquisition

- specifics of data processing and analysis

- definition of variables

- accompanying code, software used (version nr), parameters applied, statistical tests used, seed for randomisation

- LOT numbers

Metadata and FAIR guidelines

Metadata provides extremely valuable information for us and others to be able to interpret, process, reuse and reproduce the research data it accompanies.

Because metadata are data about data, all of the FAIR principles i.e. Findable, Accessible, Interoperable and Reusable apply to metadata.

Ideally, metadata should not only be machine-readable, but also interoperable so that they can interlink or be reasoned about by computer systems.

Attribution

Content of this episode was adapted from:

Key Points

Metadata provides contextual information so that other people can understand the data.

Metadata is key for data reuse and complying with FAIR guidelines.

Metadata should be added incrementally through out the project

Being precise

Overview

Teaching: 25 min

Exercises: 10 minQuestions

How to make my metadata interoperable?

How to avoid disambiguation?

Objectives

Using public identifiers

Understand difference between close vocabulary and ontology

Finding ontology terms

(16 min teaching)

Being precise

If the metadata purpose is to help understand the data, it has to be done in a precise and “understandable” way i.e. it has to be interoperable. To be interoperable metadata should use a formal, accessible, shared, and broadly applicable terms/language for knowledge representation.

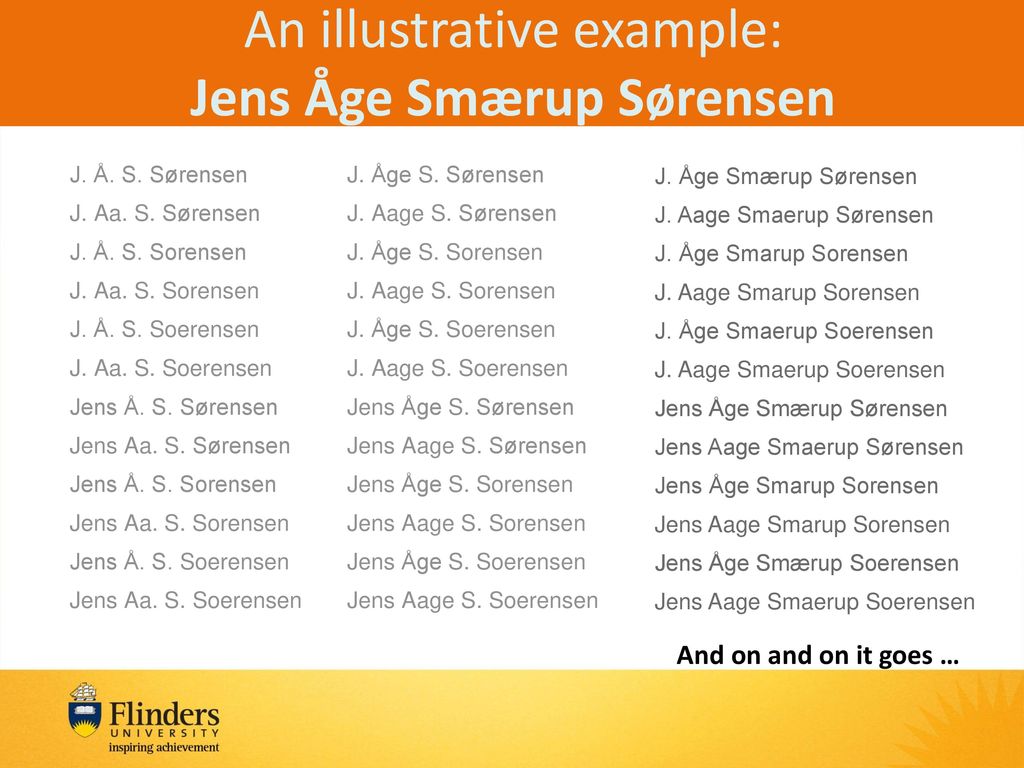

One of the easiest examples is the problem of author disambiguation.

After Libarary Carpentry FAIR Data

Open Researcher and Contributor ID (ORCID)

Have you ever searched yourself in pubmed and found that you have a doppelganger? So how can you uniquely associate something you created to just yourself and no other researcher with the same name?

ORCID is a free, unique, persistent identifier that you own and control—forever. It distinguishes you from every other researcher across disciplines, borders, and time.

ORCIDs of authors of this episode are:

You can connect your iD with your professional information—affiliations, grants, publications, peer review, and more. You can use your iD to share your information with other systems, ensuring you get recognition for all your contributions, saving you time and hassle, and reducing the risk of errors.

If you do not have an ORCID, you should register to get one!

Exercise 1: Public ID in action (3 min)

The Wellcome Open Research journal uses ORCID to identify authors.

Open one of our papers doi.org/10.12688/wellcomeopenres.15341.2 and have a look how public IDs such as ORCID can be used to interlink information.

If you have not done so yet, register yourself at ORCID*

Solution

ORCID is used to link to authors profiles which list their other publications.

ORCID provides the registry of researchers, so they can be precisely identified. Similarly, there are other registries that can be used to identify many of biological concepts and entities:

- species e.g. NCBI taxonomy

- chemicals e.g. ChEBI

- proteins e.g. UniProt

- genes e.g. GenBank

- metabolic reactions, enzymes e.g KEGG

BioPortal or

NCBI

are good places to start searching for a registry or a term.

Exercise 2: Public ID in action 2 (3 min)

The second metadata example (the Excel table): contains two other types of public IDs.

Figure credits: Tomasz Zielinski and Andrés Romanowski

- Can you find the public IDs?

- Can you find the meaning behind those Ids?

Solution

The metadata example contains genes IDs from The Arabidopsis Information Resource TAIR and metabolites IDs from KEGG

Disambiguation (7 min teaching)

In academic disciplines we quickly run into problems of naming standards e.g.:

- Escherichia coli

- EColi

- E. coli

- E. Coli

- Kanamycin A

- Kanamycin

- Kanam.

- Kan.

In order to prevent such ambiguities applications provide interface that constraints users to pre-defined options, it controls the available vocabulary.

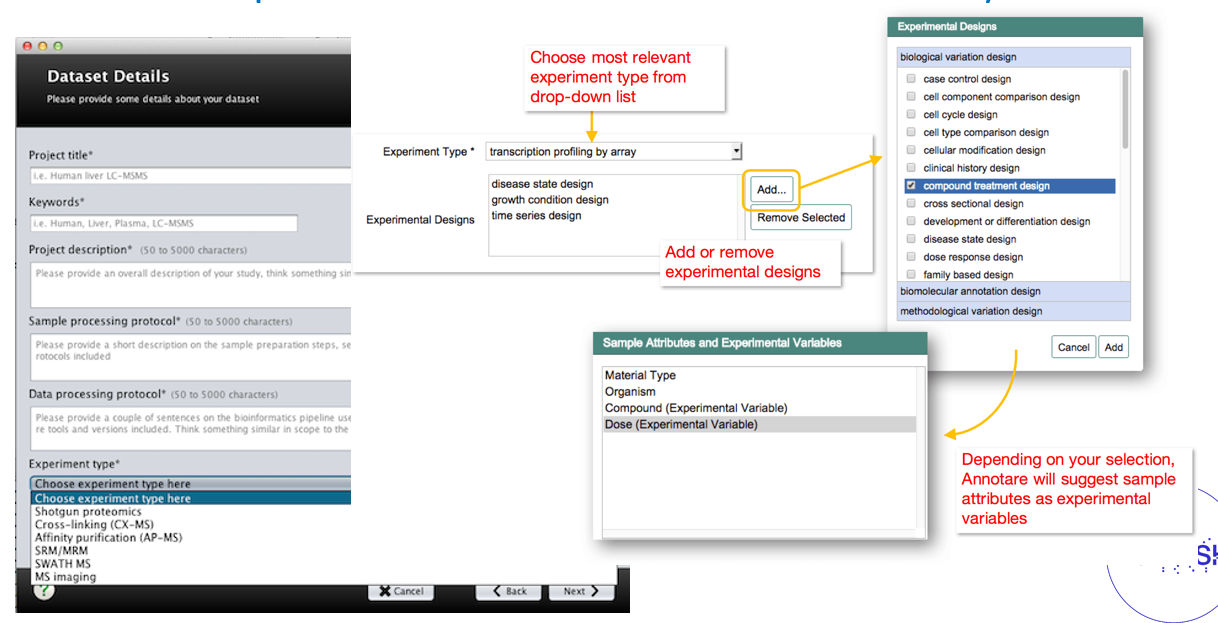

Example of graphical user interfaces with controlled vocabularies

Example of graphical user interfaces with controlled vocabularies

Controlled Vocabulary

Definition: Any closed prescribed list of terms

Key Features:

- Terms are not usually defined

- Relationships between the terms are not usually defined

- the simplest form is a list

Example:

- E. coli

- Drosophila melanogaster

- Homo sapiens

- Mus musculus

- Salmonella

Use of controlled vocabulary (a list) can be organised hierarchically into a taxonomy, a system we know mostly from our species taxonomy.

Taxonomy

Definition: Any controlled vocabulary that is arranged in a hierarchy

Key Features:

- Terms are not usually defined

- Relationships between the terms are not usually defined

- Terms are arranged in a hierarchy

Example:

- Bacteria

- E. coli

- Salmonella

- Eucariota

- Mammalia

- Homo sapiens

- Mus musculus

- Insecta

- Drosophila melanogaster

Ontologies add a further dimension to controlled vocabularies and taxonomy. They allow us to conceptualise relationships between the established hierarchy which helps with more sophisticated data queries and metadata searches.

Ontology

Definition: A formal conceptualisation of a specified domain

Key Features:

- Terms are DEFINED

- Relationships between the terms are DEFINED, allowing logical inference and sophisticated data queries

- Terms are arranged in a hierarchy

- expressed in a knowledge representation language such as RDFS, OBO, or OWL

Example:

- Bacteria

- E. coli

- Salmonella

- Eucariota — has_part —> nucleas

- Mammalia — has_part —> placenta

- Homo sapiens

- Mus musculus

- Insecta

- Drosophila melanogaster

Ontologies represent a standardised, formal naming system and define categories, properties and relationships between data. Ontologies allow to describe properties of a subject area and how they are related (e.g. taxonomy).

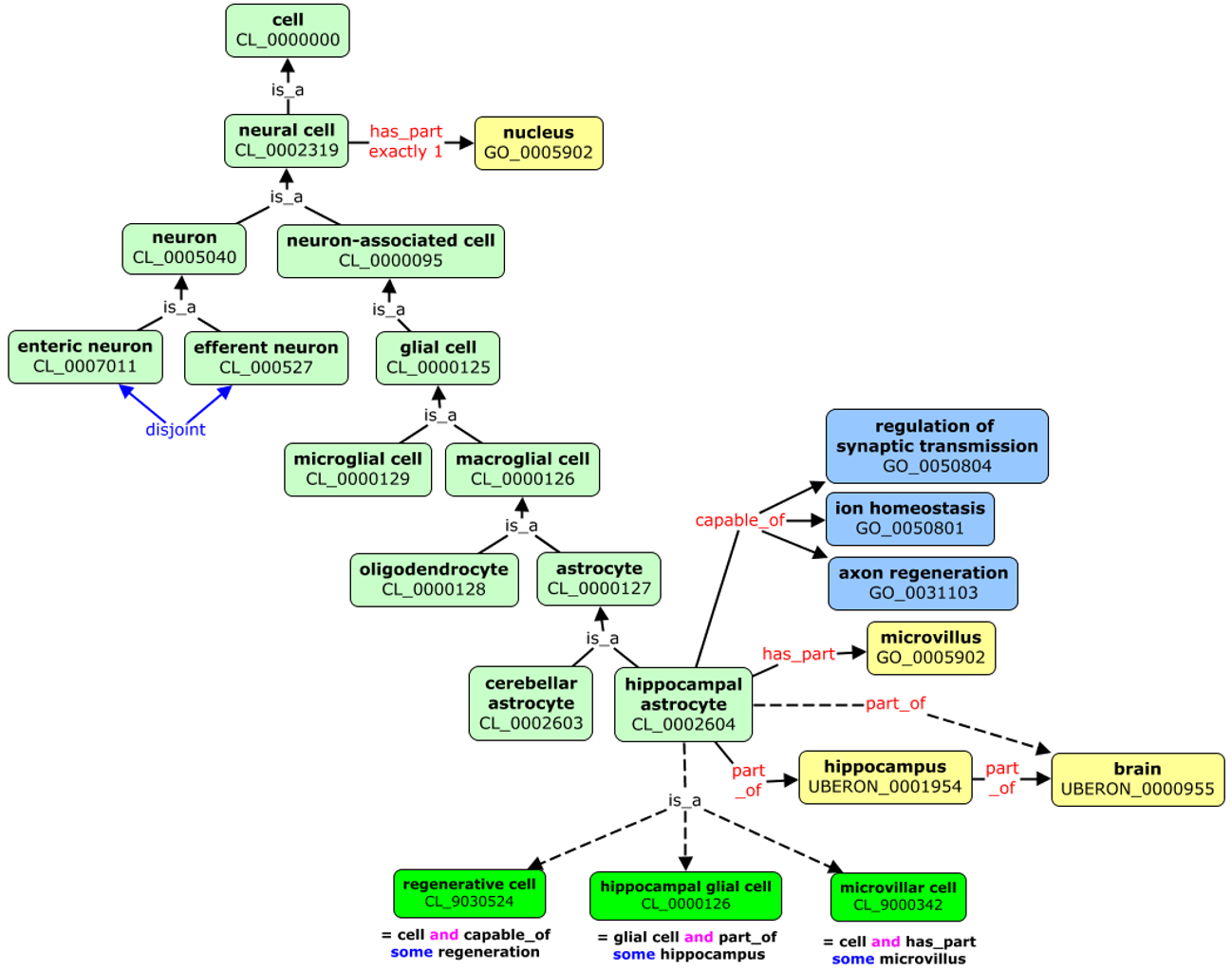

Ontologies allows automatic reasoning about the data, using the relations between the described terms. For example, in the picture above we can “deduce” that hippocampal astrocyte:

- it is type of cell as it one of its predecessor in the knowledge tree is cell (CL_0000000)

- it is located in the brain (UBERON_000955)

- it can perform axon regeneration (GO_0031103)

We could follow the term axon regeneration to find its definition and what it means, to have a better understanding of hippocampal astrocyte.

Ontologies are crucial for aggregation of information and finding suitable data. Imagine you are interested in gene expersions in glial cells. Using a free text search you would need to hope that data from astrocytes will be also tagged as glial cell, or you would need to specify all suptypes of glial cells like oligodendrocytes astrocytes. Ontologies permits to automatically expands such searches that they contain suitable domain.

The above figure presents also another aspect of using ontologies. The terms are often referred to as:

PREFIX_ID e.g. CL_0002604

Prefix (CL, UB, UBERON, GO) will identify the ontology which defined the term (Cell Ontology for CL).

ID refers to particular term in the ontology.

Ontologies are encoded in standard, interoperable formats, which permitted creation of re-usable interfaces to access the described terms or browsing different ontologies. For example: https://bioportal.bioontology.org/](https://bioportal.bioontology.org/) permits searching for suitable terms definitions in hundreds of ontologies.

Obo foundry is another useful tool that lists recommended ontologies.

Exercise 3: Exploring ontologies (with instructor)

Check the example of ontology records:

Check what type of information is available, explore the terms hierarchy, the visualization, the interlinked terms, mapping between different ontologies.

Exercise 4: Ontology tests

- The prefix CL stands for:

- a) Class ontology:

- b) Cell ontology:

- c) Cell line ontology

- The recommended ontology for chemical compounds is:

- a) cheminf

- b) chmo

- c) chebi

- Which terms captures both Alzheimer’s and Huntington’s diseases

- a) DOID_680

- b) DOID_1289

- c) DOID_0060090

Solution

3 b (hint try to see the tree in bioportal)

Attribution

Content of this episode was adapted from:

- BD2K Open Educational Resources: BDK14 Ontologies 101 Nicole Vasilevsky

Key Points

Public identifiers and ontologies are key to knowledge discovery

Automatic data aggregations needs standardised metadata formats and values

(Meta)data in Excel

Overview

Teaching: 40 min

Exercises: 15 minQuestions

How to represent data in tables

Objectives

Do’s and don’ts in Excel

How good table organization can helps to re-use data

(2 min teaching)

Tables are one of the best forms of storing and representing information. That is why we find them almost everywhere, from a menu in a restaurant, a bank statement, to professional databases. No surprise then that we biologists love tables and we tend to predominantly use Excel.

Excel is easy to use, flexible and powerful, however, it often gives us too much freedom which leads to bad practices and difficult to re-use data and metadata.

Exercise 1: What can go wrong with data in Excel (4 min)

Have a look at the example data-file in Excel.

It contains data similar to the presented data before from experiments on plants in different light conditions. Imagine you need to work with this file.

- What do you find confusing?

- What would you try to clarify with the author before doing anything with the file?

- What will be the issues with calculation of: average biomas, biomas per genotype?

- Typically, more advanced data analysis is done programmatically, which requires either conversion to text format as csv, tsv format or using a library that reads Excel files and “kind of makes this conversion on the fly”. Save this file in a text format, close Excel and reopen the saved files. What has changed?

Have you seen similar tables? Do you believe this example is realistic and replicates real-life issues?

Solution

This file hopefully unrealistically exacerbates typical bad practices in Excel. 1+2. Some things that may be confusing:

- Why are there two tables, are the period measurement related to the metabolics i.e. same samples?

- Do colors in the period table have the same meaning? Seems no.

- Why can row 22 be read, whilst row 13 says error?

- What is the meaning of values in the media column?

- Are the genotypes the same in different blocks or not?

- What is the meaning behind bold text in the table?

- What is the definition of the terms/why are units missing/inconsistent? 3.

- Before averaging the biomas weight, they need to get converted the same unit and the text needs to get replaced by the unit.

- Averaging per genotype needs manual selection of suitable entries 4.

- Information about light conditions is completely lost.

- Header columns are scrambled.

- The update date may change its meaning depending on the location (switch year with day).

Common Spreadsheet Errors (25 min teaching)

1. Using multiple tables

Multiple data tables within one spreadsheet confuse both human and computers.

- For humans they prompt finding associations where they are not present.

- For computers each spreadsheet row typically is treated as one observation. And you are potentially using the same field name in multiple places.

In our example both column A and K named sample represent different information,

and values in row 6 from both parts are not related.

2. Using multiple tabs

That seems like an easy way to organize data, right? Well, yes and no.

- tabs make it more difficult to do programmatic analysis

- tabs can be ignored (under the radar) when opening files, for example the recently used tab is shown first, other user may not notice that there are many other tabs before it (we have seen it a lot when importing data)

However,

- tabs seems like a perfect place to add administrative, descriptive metadata so they are next to data while not “polluting” the data table.

- having secondary data next to primary in subsequent tabs permits easy inspection of all without a need to always send a collection of files.

Our recommendation: use with caution; the more automatic analysis you do, the less frequently you should use tabs.

3. Not filling in zeros

It might be that when you’re measuring something, it’s usually a zero, say the number of times a rabbit is observed in the survey. Why bother writing in the number zero in that column, when it’s mostly zeros?

However, there’s a difference between a zero and a blank cell in a spreadsheet. To the computer, a zero is actually data. You measured or counted it. A blank cell means that it wasn’t measured and the computer will interpret it as an unknown value (otherwise known as a null value).

The spreadsheets or statistical programs will likely mis-interpret blank cells that you intend to be zeros.

Because of this, it’s very important to record zeros as zeros and truly missing data as nulls.

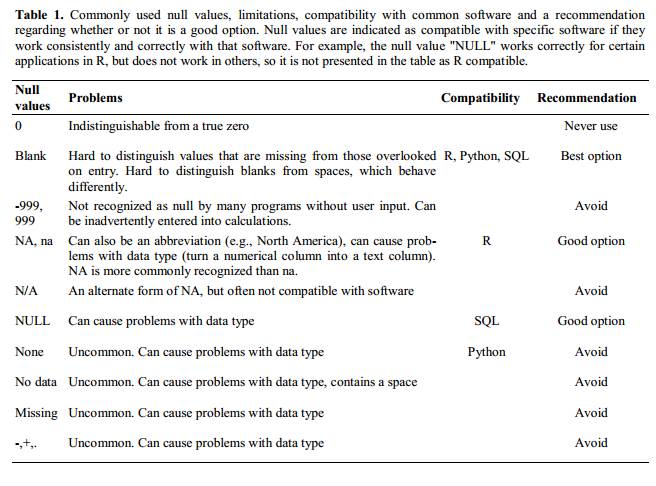

4. Using problematic null values

Example: using -999 or -1 or 0 (other numerical values) to represent missing data.

Other times different null values are used to convey different reasons why the data isn’t there. For example, -1 not recorded, -2 contamination etc.

This is important information to capture, but is in effect using one column to capture two pieces (real values and comment or status). It would be good here to create a new column like ‘data_missing’ and use that column to capture the different reasons.

Whatever the reason, it’s a problem if unknown or missing data is recorded as -999, 999, or 0. Many statistical programs will not recognize that these are intended to represent missing (null) values.

It is essential to use a clearly defined and consistent null indicator. Blanks (most applications) and NA (for R) are good choices.

White et al, 2013, explain good choices for indicating null values for different software applications in their article: Nine simple ways to make it easier to (re)use your data. Ideas in Ecology and Evolution.

5. Using formatting to convey information organizing data

Never highlight cells, rows or columns that should be excluded from an analysis, or to mark particular properties/conditions.

In our example file information about light conditions is only encoded as a color. Formatting information is not available to analysis software and almost certainly will be lost during processing.

All the information should be encoded as a field value, for example in columns like: condition, calibrated, validated, omitted etc.

You can still use colors/fonts to help with readability (just make sure they help and don’t distract) but no information should be lost if data is exported to plain text.

If you are not careful, formatting a worksheet to be more aesthetically pleasing can compromise your computer’s ability to see associations in the data.

Never merge cells, as it will make your data unreadable by statistics software. Don’t leave blank rows to indicate separations in data

6. Placing comments or units in cells

Most analysis software can’t see Excel or LibreOffice comments, and would be confused by comments placed within your data cells.

As described above for formatting, create another field if you need to add notes to cells.

Similarly, don’t include units in cells: ideally, all the measurements you place in one column

should have the same unit, but if for some reason they don’t, create another field and specify the unit.

A field value 0.123 g will need to be parsed by a script into 0.123 and g in order to be used

for calculations, don’t add extra work for others.

7. Entering more than one piece of information in a cell

Don’t include more than one piece of information in a cell (like M30, F25, for male age 30 and female 25,

or Red light + Ampicilin + High nitrogen).

This will limit the ways in which you can analyze your data. Design your data sheet to include this information. For example, include one column for age of individuals and a separate column for sex.

8. Inconsistency in used values

Using “synonyms” or alternatives to describe the same values.

For example: E. Coli, EColi, Escherichia coli in the same table

9. Using problematic field names

Choose descriptive field names, but be careful not to include spaces, numbers, or special characters of any kind (including national characters: óęłńöüä). Spaces can be misinterpreted by parsers that use whitespaces as delimiters and some programs don’t like field names that are text strings starting with numbers (e.g. Excel).

Underscores (_) are a good alternative to spaces.

Consider writing names in camel case (like this: ExampleFileName) to improve

readability.

Remember that abbreviations that make sense at the moment may not be so obvious in 6 months, but don’t overdo it with names that are excessively long.

Including the units in the field names avoids confusion and enables others to readily interpret your fields. Or add a separate row to help automatic unit conversions.

Examples

| Good Name | Good Alternative | Avoid |

| Max_temp_C | MaxTemp | Maximum Temp (°C) |

| Precipitation_mm | Precipitation | precmm |

| Mean_year_growth | MeanYearGrowth | Mean growth/year |

| sex | sex | M/F |

| weight | weight | w. |

| cell_type | CellType | Cell Type |

| Observation_01 | first_observation | 1st Obs |

10. Using special characters in data

For example, when writing longer text in a cell, people often include line breaks, em-dashes, etc in their spreadsheet. Also, when copying data from applications such as Word, formatting and ‘fancy’ non-standard characters (such as left- and right-aligned quotation marks) are included. When exporting this data into a coding/statistical environment or into a relational database, dangerous things may occur, such as lines being cut in half and encoding errors being thrown.

General best practice is to avoid adding characters such as new lines, tabs, vertical tabs and sadly, national characters. In other words, treat a text cell as if it were a simple web form that can only contain text and spaces.

11. Values without field labels

It may be obvious for us that a cell Ann Smart and Daniele Hardwork contains authors and

Starch content in Arabidopis T. under different light conditions is a title, but this is not obvious

for a computer program.

Always label values as the column header, or as a first field on the left:

Author | Ann Smart | Daniele Hardwork

Title | Starch content in Arabidopis T. under different light conditions

Exercise 2: Spotting problems (4 min)

Look at the following rows and columns in the problematic table:

- Row 5

- Row 2

- Column C

- Column E

- Column L

which of the problems discussed above can you spot in these rows and column? Here, we list them again:

- Using multiple tables

- Using multiple tabs

- Not filling in zeros

- Using problematic null values

- Using formatting to convey information and organizing data

- Placing comments or units in cells

- Entering more than one piece of information in a cell

- Inconsistency in used values

- Using problematic field names

- Using special characters in data

- Values without field labels

Type the problem number(s) next to the table elements

Solution

- Row 5: 5, 9

- Row 2: 11, 7

- Column C: 8

- Column E: 6, 5

- Column L: 3

Clean data tables make life easier (6 min teaching)

Let’s check the cleaned version of the previous file.

Look how easy it is to calculate the average biomas, and if you have Office365, see how easy it is to get average values per genotype.

Do you think it would take more effort to record data in a cleaned way to start with, in comparison to the ‘bad example’ presented before?

How long do you think it took to “clean” the original, problematic data?

Exercise 3: Outsmarted by Excel (3 min)

Excel has built in autoformatting functions which can make biologist’s life more difficult

Finding the right date

Open Excel and type following values into the cells:

A B C D E F Gene SEPT2 Sample 0013 Record 12/5/4 Mar1 1 March Mar-1 1-3 14/3/20 43904

- Is what you see what you typed?

- Can you force Excel to keep your formatting?

- Do you know which year the dates represent?

(9 min teaching)

A team of Australian researchers analyzed nearly 3,600 genetics papers Ziemann 2016. As is common practice in the field, these papers all came with supplementary files containing lists of genes used in research.

The Australian researchers found that roughly 1 in 5 of these papers included errors in their gene lists that were due to Excel automatically converting gene names to things like calendar dates or random numbers.

Those errors actually forced the renaming of 27 gene symbols including SEPT4 (now SEPTIN4) and MARCH1 (now MARCHF1).

Storing and handling dates is generally problematic even in programming languages, as we tend to use dates in various formats and those formats are region specific.

Have you ever got confused by a meeting date from an American collaborator?

If the dates are “real” data, for example sample collection from a patient or field measurement, preventing misinterpretations is crucial. There are two safe options:

- Store dates in 3 columns for year, month and day:

| Year | Month | Day |

|---|---|---|

| 2021 | 3 | 16 |

| 2021 | 10 | 1 |

- Store the date as an ISO string:

YYYYMMDDe.g.20210316

Even though, normally YYYY-MM-DD format is preferred, it will be sadly reformatted by Excel according

to your locale!

When using text files (.csv, .tsv), You should always document what format you are using to represent dates.

To use or not to use Excel

The Excel file format

.xlsxis now open, widely used and supported by external libraries and thus it could be considered interoperable. Nowadays it is admissible as being FAIR.However, plain text files like comma or tab separated values (.csv, .tsv) can be accessed without any special software. Data in a CSV file can also easily be imported into other formats and environments, such as SQLite and R. We are not tied to a certain version of a certain expensive program when we work with CSV files, so it is a good format to work with for maximum portability, interoperability and endurance. If such files are handled only with text editors or programmatically (R, Python) then they are a safer option as they prevent the autoformatting issues described before.

If you analyse your data with R or Python, or you know that your data are meant to be processed that way you should be using text formats whenever possible, and as soon as you capture your data.

However, if you only use Excel and so does your community, just keep using it. Just be aware of the possible pitfalls discussed, especially when working with gene or protein names and accession numbers.

Cleaning data with Open Refine

There are tools that help you to clean and reorganize existing data.

OpenRefine is a powerful tool for working with messy data: cleaning it; transforming it from one format into another; and extending it with web services and external data.

With OpenRefine you can find and merge synonyms like:

E. Coli,EColi,Escherichia coliinto one, or split values in theNamefield intoFirstNameandLastName.There is a carpentry course available: Data Cleaning with OpenRefine for Ecologists

Exercise 4: Data tables and FAIR (3 min)

Which of the following statements is true/false (T or F):

- Do’s and don’ts help in programmatic re-use:

- Avoiding multiple tabs improves interoperability:

- Having accompanying README file with a table description is not FAIR:

- No ‘spaces’ in columns headers improve readability:

- 2022-07-15 is ISO date format:

- 20220715 date format is better for excel than 2022-07-15:

- “No data” is better than leaving cell “blank” for missing data:

Solution

- Do’s and don’ts help in programmatic re-use: T

- Avoiding multiple tabs improves interoperability: T

- Having accompanying README file with a table description is not FAIR: F

- No ‘spaces’ in columns headers improve readability: F

- 2022-07-15 is ISO date format: T

- 20220715 date format is better for excel than 2022-07-15: T

- “No data” is better than leaving cell “blank” for missing data: F

Key Points

Never use formatting to encode information

Include only one piece of information in a cell

It is easier to store data in the correct form than to clean data for reuse

Laboratory records

Overview

Teaching: 39 min

Exercises: 31 minQuestions

How and why to keep good quality records for experiments?

How to streamline and simplify record keeping and its maintenance with electronic lab notebooks and online protocols?

How to keep records FAIR?

Objectives

Use Benchling to create an electronic lab notebook entry with electronic protocols

Reuse and modify an existing lab notebook entry, and export the modified version

Use an existing protocol on protocols.io and adapt it to your own needs

Find out where to track the history of a record in Benchling and protocols.io

Before we start…

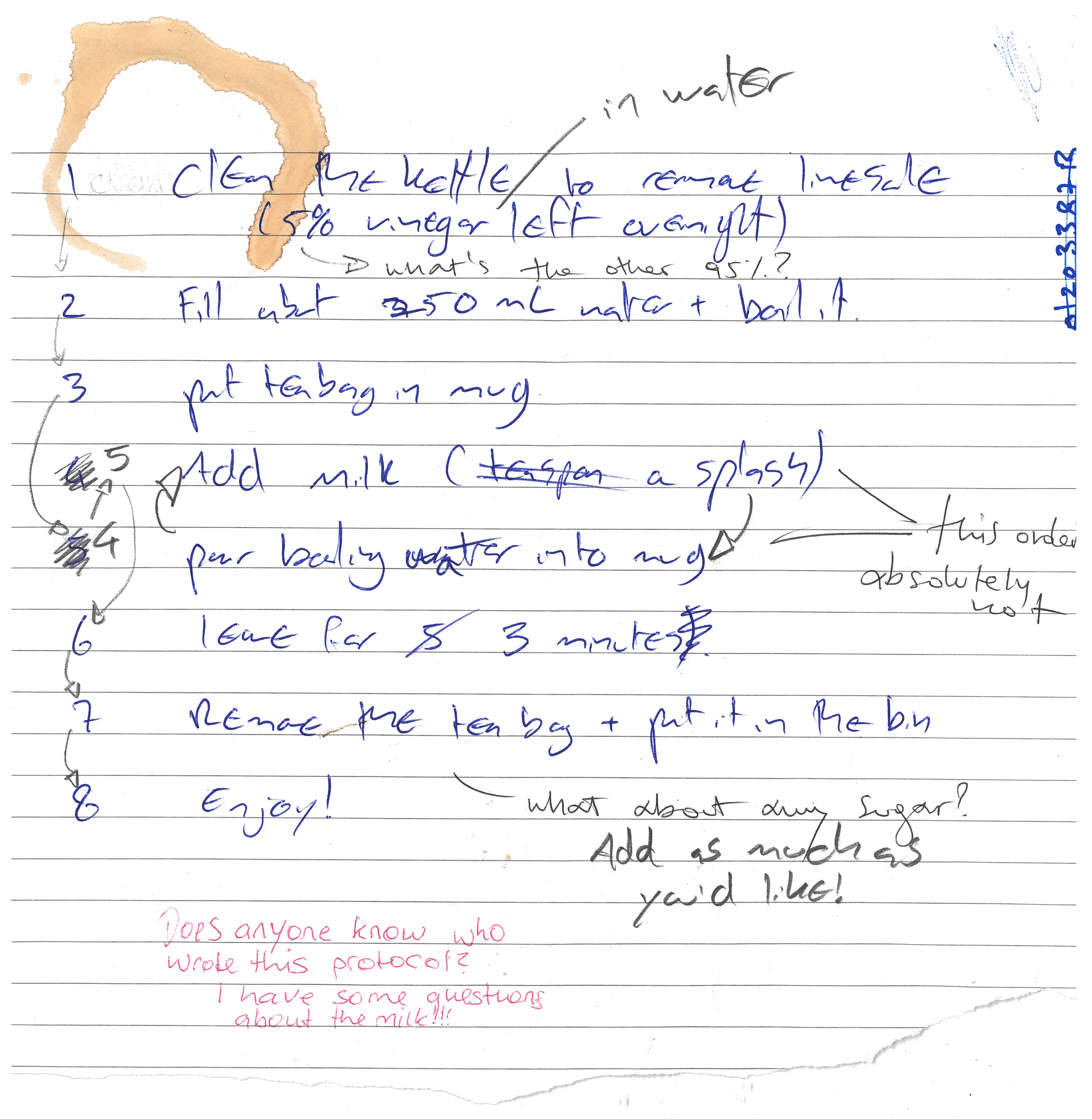

Before we start this session on good record keeping, it might be a good idea to make ourselves cup of tea. Here’s a peer-reviewed protocol for making tea:

Figure credits: Ines Boehm and Ben Thomas

Figure credits: Ines Boehm and Ben Thomas

Differences between analog and record keeping

How did you find making your cup of tea from the above protocol? As you could see, one scientist put milk in before boiling water, another one put boiling water in before milk. Another couldn’t find which type of milk was used. Whilst the steps can be followed to produce a cup of tea, the teas can vary wildly. Slight variations are acceptable, but such as in an experiment, it is more important that steps can be repeated correctly so that others can do the same.

Here is the same protocol typed out on protocol.io. Which do you find easier to follow?

Although digital protocols are the better of the two, analogue protocols certainly have their place. For example, it is often easier to make notes on analogue protocols which you can return to later. Additionally, your working environment may make it too risky to bring expensive technology (just in case), whereas pen and paper can be fine. In cases like these, a hybrid system is often best - where analogue notes are digitized. This can be the best of both worlds.

Exercise 1: Differences between analog and digital record keeping (4 + 5 min)