Content from Introduction

Last updated on 2026-01-20 | Edit this page

Overview

Questions

- What is statistical inference?

- Why do biomedical researchers need to learn statistics now?

Objectives

- Explain how technology has changed biomedical measurements from past to present.

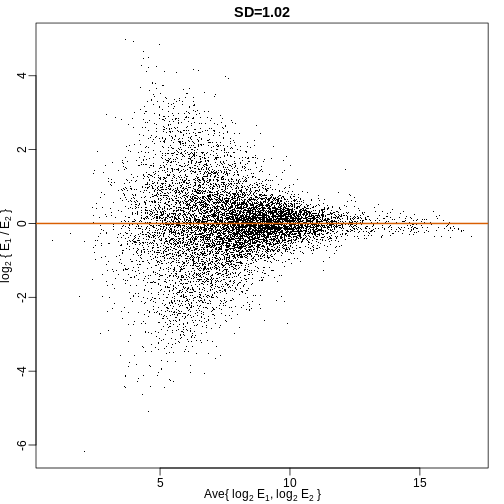

Technological changes in biomedical research drive data production in greater quantity and complexity. High-throughput technologies, such as sequencing technologies, produce data whose size and complexity require sophisticated statistical skills to avoid being fooled by patterns arising by chance. In the past researchers would measure, for example, the transcription levels of a single gene of interest. Now it is possible to measure all genes at once, often 20,000 or more depending on the organism. Technological advances like these have driven a change from hypothesis to discovery-driven research. This means that statistics and data analysis in the life sciences are more important than ever.

This lesson will introduce the statistical concepts and data analysis skills needed for success in data-driven life science research. We start with one of the most important topics in statistics and in the life sciences: statistical inference. Inference is the use of probability to learn population characteristics from data. A typical example is determining if two groups (for example, cases versus controls) are different on average. Specific topics include:

- p-values

- the t-test

- confidence intervals

- association tests

- permutation tests

- and statistical power

We make use of approximations made possible by mathematical theory, such as the Central Limit Theorem, as well as techniques made possible by modern computing.

- Novel technologies produce data in great complexity and scale, requiring more sophisticated understanding of statistics.

Content from Inference

Last updated on 2026-01-20 | Edit this page

Overview

Questions

- What does inference mean?

- Why do we need p-values and confidence intervals?

- What is a random variable?

- What exactly is a distribution?

Objectives

- Describe the statistical concepts underlying p-values and confidence intervals.

- Explain random variables and null distributions using R programming.

- Compute p-values and confidence intervals using R programming.

Introduction

This section introduces the statistical concepts necessary to understand p-values and confidence intervals. These terms are ubiquitous in the life science literature. Let’s use this paper as an example.

Note that the abstract has this statement:

“Body weight was higher in mice fed the high-fat diet already after the first week, due to higher dietary intake in combination with lower metabolic efficiency.”

To support this claim they provide the following in the results section:

“Already during the first week after introduction of high-fat diet, body weight increased significantly more in the high-fat diet-fed mice (+ 1.6 ± 0.1 g) than in the normal diet-fed mice (+ 0.2 ± 0.1 g; P < 0.001).”

What does P < 0.001 mean? Why are the ± included? We will learn what this means and learn to compute these values in R. The first step is to understand random variables. To do this, we will use data from a mouse database (provided by Karen Svenson via Gary Churchill and Dan Gatti and partially funded by P50 GM070683). We will import the data into R and explain random variables and null distributions using R programming. See the Setup instructions to import the data.

Our first look at data

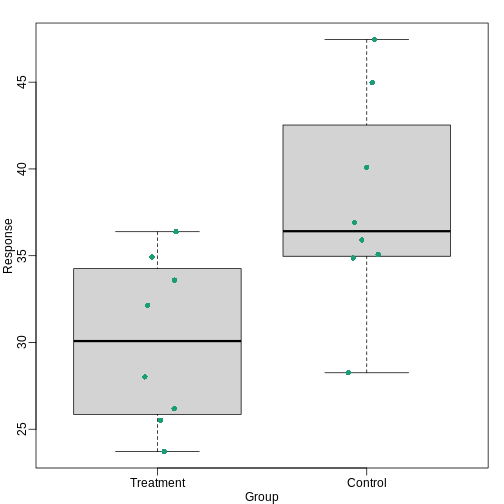

We are interested in determining if following a given diet makes mice

heavier after several weeks. This data was produced by ordering 24 mice

from The Jackson Lab and randomly assigning either chow or high fat (hf)

diet. After several weeks, the scientists weighed each mouse and

obtained this data (head just shows us the first 6

rows):

R

fWeights <- read.csv("./data/femaleMiceWeights.csv")

head(fWeights)

OUTPUT

Diet Bodyweight

1 chow 21.51

2 chow 28.14

3 chow 24.04

4 chow 23.45

5 chow 23.68

6 chow 19.79If you would like to view the entire data set with RStudio:

R

View(fWeights)

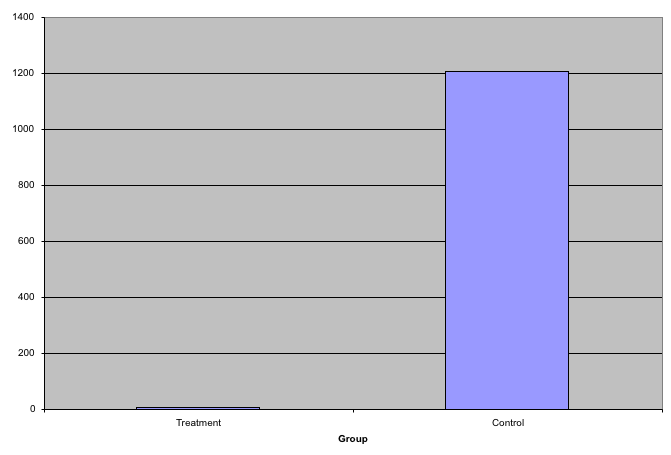

So are the hf mice heavier? Mouse 24 at 20.73 grams is one of the lightest mice, while Mouse 21 at 34.02 grams is one of the heaviest. Both are on the hf diet. Just from looking at the data, we see there is variability. Claims such as the previous (that body weight increased significantly in high-fat diet-fed mice) usually refer to the averages. So let’s look at the average of each group:

R

control <- filter(fWeights, Diet=="chow") %>%

select(Bodyweight) %>%

unlist

treatment <- filter(fWeights, Diet=="hf") %>%

select(Bodyweight) %>%

unlist

print( mean(treatment) )

OUTPUT

[1] 26.83417R

print( mean(control) )

OUTPUT

[1] 23.81333R

obsdiff <- mean(treatment) - mean(control)

print(obsdiff)

OUTPUT

[1] 3.020833So the hf diet mice are about 10% heavier. Are we done? Why do we need p-values and confidence intervals? The reason is that these averages are random variables. They can take many values.

If we repeat the experiment, we obtain 24 new mice from The Jackson Laboratory and, after randomly assigning them to each diet, we get a different mean. Every time we repeat this experiment, we get a different value. We call this type of quantity a random variable.

Random Variables

Let’s explore random variables further. Imagine that we actually have the weight of all control female mice and can upload them to R. In Statistics, we refer to this as the population. These are all the control mice available from which we sampled 24. Note that in practice we do not have access to the population. We have a special dataset that we are using here to illustrate concepts.

Now let’s sample 12 mice three times and see how the average changes.

R

population <- read.csv(file = "./data/femaleControlsPopulation.csv")

control <- sample(population$Bodyweight, 12)

mean(control)

OUTPUT

[1] 23.47667R

control <- sample(population$Bodyweight, 12)

mean(control)

OUTPUT

[1] 25.12583R

control <- sample(population$Bodyweight, 12)

mean(control)

OUTPUT

[1] 23.72333Note how the average varies. We can continue to do this repeatedly and start learning something about the distribution of this random variable.

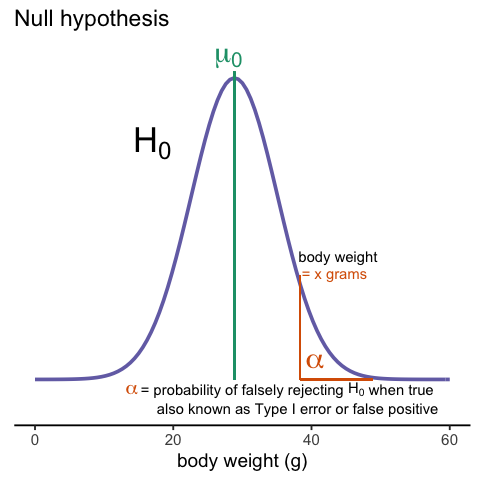

The Null Hypothesis

Now let’s go back to our average difference of obsdiff.

As scientists we need to be skeptics. How do we know that this

obsdiff is due to the diet? What happens if we give all 24

mice the same diet? Will we see a difference this big? Statisticians

refer to this scenario as the null hypothesis. The name “null”

is used to remind us that we are acting as skeptics: we give credence to

the possibility that there is no difference.

Because we have access to the population, we can actually observe as many values as we want of the difference of the averages when the diet has no effect. We can do this by randomly sampling 24 control mice, giving them the same diet, and then recording the difference in mean between two randomly split groups of 12 and 12. Here is this process written in R code:

R

## 12 control mice

control <- sample(population$Bodyweight, 12)

## another 12 control mice that we act as if they were not

treatment <- sample(population$Bodyweight, 12)

print(mean(treatment) - mean(control))

OUTPUT

[1] -0.2391667Now let’s do it 10,000 times. We will use a “for-loop”, an operation

that lets us automate this (a simpler approach that, we will learn

later, is to use replicate).

R

n <- 10000

null <- vector("numeric", n)

for (i in 1:n) {

control <- sample(population$Bodyweight, 12)

treatment <- sample(population$Bodyweight, 12)

null[i] <- mean(treatment) - mean(control)

}

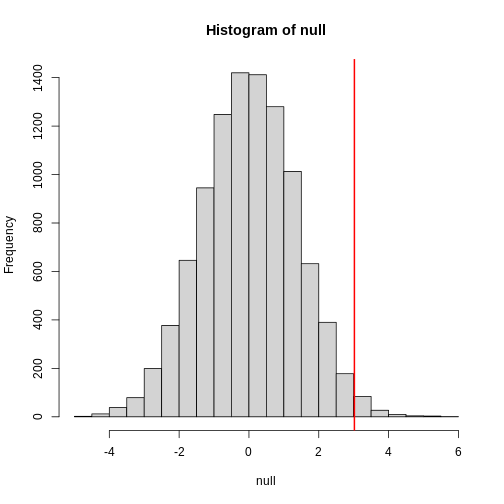

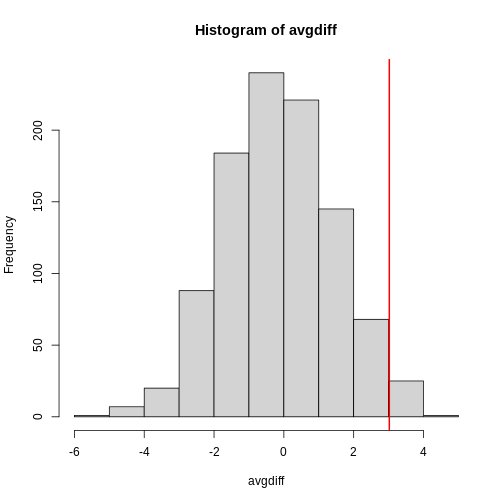

The values in null form what we call the null

distribution. We will define this more formally below. By the way,

the loop above is a Monte Carlo simulation to obtain 10,000

outcomes of the random variable under the null hypothesis. Simulations

can be used to check theoretical or analytical results. For more

information about Monte Carlo simulations, visit Data Analysis

for the Life Sciences.

So what percent of the 10,000 are bigger than

obsdiff?

R

mean(null >= obsdiff)

OUTPUT

[1] 0.0123Only a small percent of the 10,000 simulations. As skeptics what do we conclude? When there is no diet effect, we see a difference as big as the one we observed only 1.5% of the time. This is what is known as a p-value, which we will define more formally later in the book.

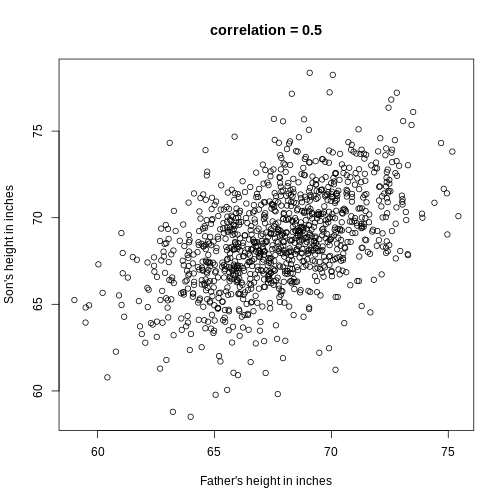

Distributions

We have explained what we mean by null in the context of null hypothesis, but what exactly is a distribution?

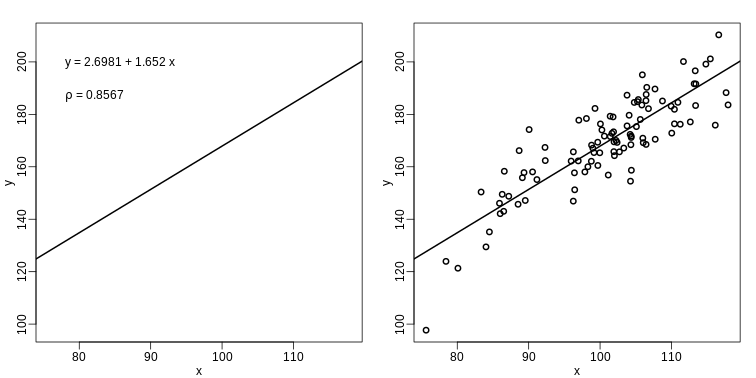

The simplest way to think of a distribution is as a compact description of many numbers. For example, suppose you have measured the heights of all men in a population. Imagine you need to describe these numbers to someone that has no idea what these heights are, such as an alien that has never visited Earth. Suppose all these heights are contained in the following dataset:

R

father.son <- UsingR::father.son

x <- father.son$fheight

One approach to summarizing these numbers is to simply list them all out for the alien to see. Here are 10 randomly selected heights of 1,078:

R

round(sample(x, 10), 1)

OUTPUT

[1] 67.7 72.5 64.7 62.7 66.1 67.5 68.2 61.8 69.7 71.2Cumulative Distribution Function

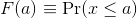

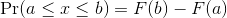

Scanning through these numbers, we start to get a rough idea of what the entire list looks like, but it is certainly inefficient. We can quickly improve on this approach by defining and visualizing a distribution. To define a distribution we compute, for all possible values of a, the proportion of numbers in our list that are below a. We use the following notation:

This is called the cumulative distribution function (CDF). When the CDF is derived from data, as opposed to theoretically, we also call it the empirical CDF (ECDF). The ECDF for the height data looks like this:

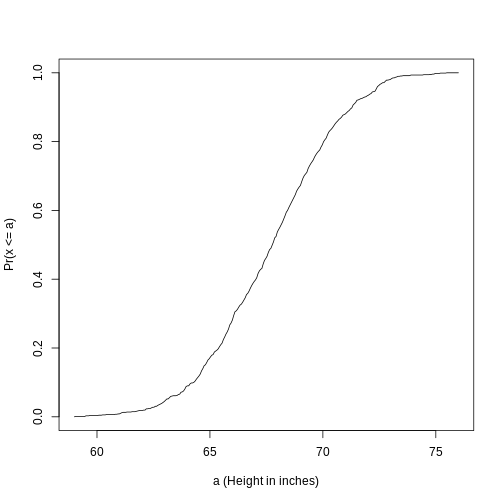

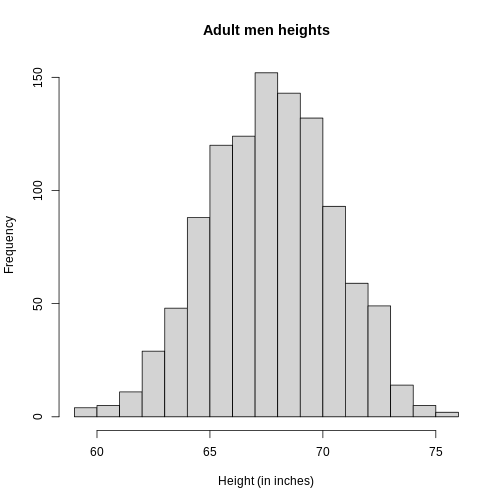

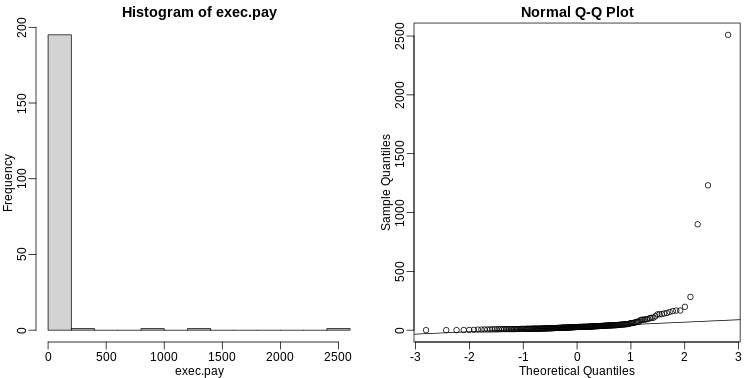

Histograms

Although the empirical CDF concept is widely discussed in statistics textbooks, the plot is actually not very popular in practice. The reason is that histograms give us the same information and are easier to interpret. Histograms show us the proportion of values in intervals:

Plotting these heights as

bars is what we call a histogram. It is a more useful plot

because we are usually more interested in intervals, such and such

percent are between 70 inches and 71 inches, etc., rather than the

percent less than a particular height. Here is a histogram of

heights:

Plotting these heights as

bars is what we call a histogram. It is a more useful plot

because we are usually more interested in intervals, such and such

percent are between 70 inches and 71 inches, etc., rather than the

percent less than a particular height. Here is a histogram of

heights:

R

hist(x, xlab="Height (in inches)", main="Adult men heights")

Showing this plot to the alien is much more informative than showing numbers. With this simple plot, we can approximate the number of individuals in any given interval. For example, there are about 70 individuals over six feet (72 inches) tall.

Probability Distribution

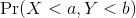

Summarizing lists of numbers is one powerful use of a distribution. An even more important use is describing the possible outcomes of a random variable. Unlike a fixed list of numbers, we don’t actually observe all possible outcomes of random variables, so instead of describing proportions, we describe probabilities. For instance, if we pick a random height from our list, then the probability of it falling between a and b is denoted with:

Note that the X is

now capitalized to distinguish it as a random variable and that the

equation above defines the probability distribution of the random

variable. Knowing this distribution is incredibly useful in science. For

example, in the case above, if we know the distribution of the

difference in mean of mouse weights when the null hypothesis is true,

referred to as the null distribution, we can compute the

probability of observing a value as large as we did, referred to as a

p-value. In a previous section we ran what is called a

Monte Carlo simulation (we will provide more details on Monte

Carlo simulation in a later section) and we obtained 10,000 outcomes of

the random variable under the null hypothesis.

Note that the X is

now capitalized to distinguish it as a random variable and that the

equation above defines the probability distribution of the random

variable. Knowing this distribution is incredibly useful in science. For

example, in the case above, if we know the distribution of the

difference in mean of mouse weights when the null hypothesis is true,

referred to as the null distribution, we can compute the

probability of observing a value as large as we did, referred to as a

p-value. In a previous section we ran what is called a

Monte Carlo simulation (we will provide more details on Monte

Carlo simulation in a later section) and we obtained 10,000 outcomes of

the random variable under the null hypothesis.

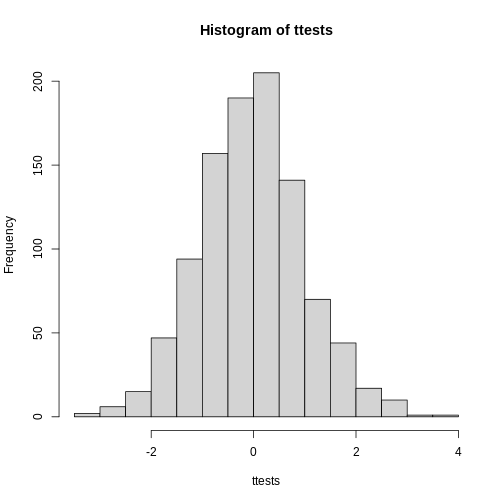

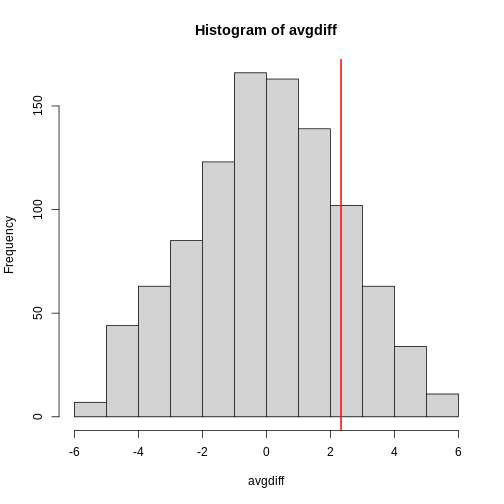

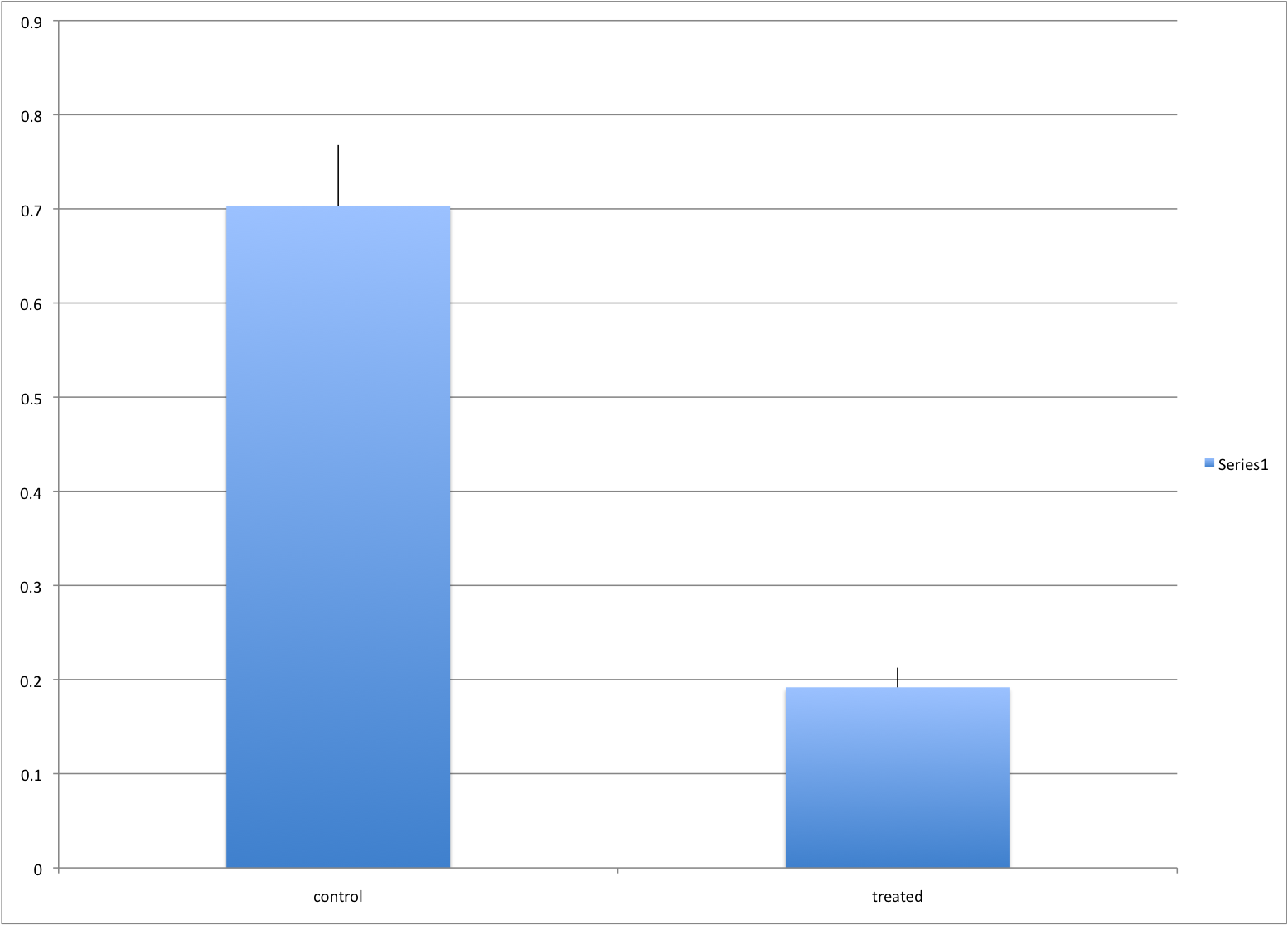

The observed values will amount to a histogram. From a histogram of

the null vector we calculated earlier, we can see that

values as large as obsdiff are relatively rare:

R

hist(null, freq=TRUE)

abline(v=obsdiff, col="red", lwd=2)

An important point to keep in mind here is that while we defined Pr(a) by counting cases, we will learn that, in some circumstances, mathematics gives us formulas for Pr(a) that save us the trouble of computing them as we did here. One example of this powerful approach uses the normal distribution approximation.

Normal Distribution

The probability distribution we see above approximates one that is very common in nature: the bell curve, also known as the normal distribution or Gaussian distribution. When the histogram of a list of numbers approximates the normal distribution, we can use a convenient mathematical formula to approximate the proportion of values or outcomes in any given interval:

While the formula may look intimidating, don’t worry, you will never

actually have to type it out, as it is stored in a more convenient form

(as pnorm in R which sets a to -∞, and takes

b as an argument).

Here μ and σ are referred to as the mean and the standard deviation

of the population (we explain these in more detail in another section).

If this normal approximation holds for our list, then the

population mean and variance of our list can be used in the formula

above. An example of this would be when we noted above that only 1.5% of

values on the null distribution were above obsdiff. We can

compute the proportion of values below a value x with

pnorm(x, mu, sigma) without knowing all the values. The

normal approximation works very well here:

R

1 - pnorm(obsdiff, mean(null), sd(null))

OUTPUT

[1] 0.01311009Later, we will learn that there is a mathematical explanation for this. A very useful characteristic of this approximation is that one only needs to know μ and σ to describe the entire distribution. From this, we can compute the proportion of values in any interval.

Refer to the histogram of null values above. The code we just ran

represents everything to the right of the vertical red line, or 1 minus

everything to the left. Try running this code without the

1 - to understand this better.

R

pnorm(obsdiff, mean(null), sd(null))

OUTPUT

[1] 0.9868899This value represents everything to the left of the vertical red line in the null histogram above.

Summary

So computing a p-value for the difference in diet for the mice was pretty easy, right? But why are we not done? To make the calculation, we did the equivalent of buying all the mice available from The Jackson Laboratory and performing our experiment repeatedly to define the null distribution. Yet this is not something we can do in practice. Statistical Inference is the mathematical theory that permits you to approximate this with only the data from your sample, i.e. the original 24 mice. We will focus on this in the following sections.

Setting the random seed

Before we continue, we briefly explain the following important line of code:

R

set.seed(1)

Throughout this lesson, we use random number generators. This implies that many of the results presented can actually change by chance, including the correct answer to problems. One way to ensure that results do not change is by setting R’s random number generation seed. For more on the topic please read the help file:

R

?set.seed

Even better is to review an example. R has a built-in vector of

characters called LETTERS that contains upper case letters

of the Roman alphabet. We can take a sample of 5 letters with the

following code, which when repeated will give a different set of 5

letters each times.

R

LETTERS

OUTPUT

[1] "A" "B" "C" "D" "E" "F" "G" "H" "I" "J" "K" "L" "M" "N" "O" "P" "Q" "R" "S"

[20] "T" "U" "V" "W" "X" "Y" "Z"R

sample(LETTERS, 5)

OUTPUT

[1] "Y" "D" "G" "A" "B"R

sample(LETTERS, 5)

OUTPUT

[1] "W" "K" "N" "R" "S"R

sample(LETTERS, 5)

OUTPUT

[1] "A" "U" "Y" "J" "V"If we set a seed, we will get the same sample of letters each time.

R

set.seed(1)

sample(LETTERS, 5)

OUTPUT

[1] "Y" "D" "G" "A" "B"R

set.seed(1)

sample(LETTERS, 5)

OUTPUT

[1] "Y" "D" "G" "A" "B"R

set.seed(1)

sample(LETTERS, 5)

OUTPUT

[1] "Y" "D" "G" "A" "B"When we set a seed we ensure that we get the same results from random

number generation, which is used in sampling with

sample.

For the following exercises, we will be using the female controls

population dataset that we read into a variable called

population. Here population represents the

weights for the entire population of female mice. To remind ourselves

about this data set, run the following:

R

str(population)

OUTPUT

'data.frame': 225 obs. of 1 variable:

$ Bodyweight: num 27 24.8 27 28.1 23.6 ...R

head(population)

OUTPUT

Bodyweight

1 27.03

2 24.80

3 27.02

4 28.07

5 23.55

6 22.72R

summary(population)

OUTPUT

Bodyweight

Min. :15.51

1st Qu.:21.51

Median :23.54

Mean :23.89

3rd Qu.:26.08

Max. :36.84 Exercise 1

- What is the average of these weights?

- After setting the seed at 1, (

set.seed(1)) take a random sample of size 5.

What is the absolute value (abs()) of the difference between the average of the sample and the average of all the values? - After setting the seed at 5,

set.seed(5)take a random sample of size 5. What is the absolute value of the difference between the average of the sample and the average of all the values? - Why are the answers from 2 and 3 different?

- Because we made a coding mistake.

- Because the average of the population weights is random.

- Because the average of the samples is a random variable.

- All of the above.

mean(population$Bodyweight)set.seed(1)meanOfSample1 <- mean(sample(population$Bodyweight, 5))abs(meanOfSample1 - mean(population$Bodyweight))set.seed(5)meanOfSample2 <- mean(sample(population$Bodyweight, 5))abs(meanOfSample2 - mean(population$Bodyweight))- Because the average of the samples is a random variable.

Exercise 2

- Set the seed at 1, then using a for-loop take a random sample of 5 mice 1,000 times. Save these averages. What percent of these 1,000 averages are more than 1 gram away from the average of the population?

- We are now going to increase the number of times we redo the sample from 1,000 to 10,000. Set the seed at 1, then using a for-loop take a random sample of 5 mice 10,000 times. Save these averages. What percent of these 10,000 averages are more than 1 gram away from the average of the population?

- Note that the answers to the previous two questions barely changed.

This is expected. The way we think about the random value distributions

is as the distribution of the list of values obtained if we repeated the

experiment an infinite number of times. On a computer, we can’t perform

an infinite number of iterations so instead, for our examples, we

consider 1,000 to be large enough, thus 10,000 is as well. Now if

instead we change the sample size, then we change the random variable

and thus its distribution.

Set the seed at 1, then using a for-loop take a random sample of 50 mice 1,000 times. Save these averages. What percent of these 1,000 averages are more than 1 gram away from the average of the population?

-

set.seed(1)n <- 1000meanSampleOf5 <- vector("numeric", n)for (i in 1:n) {meanSampleOf5[i] <- mean(sample(population$Bodyweight, 5)}meanSampleOf5mean(population$Bodyweight)`

mean population weight plus one gram and minus one gram

meanPopWeight <- mean(population$Bodyweight) # mean

sdPopWeight <- sd(population$Bodyweight) # standard

deviation abline(v = meanPopWeight, col = "blue", lwd = 2)

abline(v = meanPopWeight + 1, col = "red", lwd = 2)

abline(v = meanPopWeight - 1, col = "red", lwd = 2)

proportion below mean population weight minus 1 gram

pnorm(meanPopWeight - 1, mean = meanPopWeight, sd = sdPopWeight)

proportion greater than mean population weight plus 1 gram

1 - pnorm(meanPopWeight + 1, mean = meanPopWeight, sd = sdPopWeight)

add the two together

pnorm(meanPopWeight - 1, mean = meanPopWeight, sd = sdPopWeight) +

1 - pnorm(meanPopWeight + 1, mean = meanPopWeight, sd = sdPopWeight)

2. set.seed(1)n <- 10000meanSampleOf5 <- vector("numeric", n)for (i in 1:n) {meanSampleOf5[i] <- mean(sample(population$Bodyweight, 5))}meanSampleOf5mean(population$Bodyweight)`

mean population weight plus one gram and minus one gram

meanPopWeight <- mean(population$Bodyweight) # mean

sdPopWeight <- sd(population$Bodyweight) # standard

deviation abline(v = meanPopWeight, col = "blue", lwd = 2)

abline(v = meanPopWeight + 1, col = "red", lwd = 2)

abline(v = meanPopWeight - 1, col = "red", lwd = 2)

proportion below mean population weight minus 1 gram

pnorm(meanPopWeight - 1, mean = meanPopWeight, sd = sdPopWeight)

proportion greater than mean population weight plus 1 gram

1 - pnorm(meanPopWeight + 1, mean = meanPopWeight, sd = sdPopWeight)

add the two together

pnorm(meanPopWeight - 1, mean = meanPopWeight, sd = sdPopWeight) +

1 - pnorm(meanPopWeight + 1, mean = meanPopWeight, sd = sdPopWeight)

3. set.seed(1)n <- 1000meanSampleOf50 <- vector("numeric", n)for (i in 1:n) {meanSampleOf50[i] <- mean(sample(population$Bodyweight, 50))}meanSampleOf50mean(population$Bodyweight)`

mean population weight plus one gram and minus one gram

abline(v = meanPopWeight, col = "blue", lwd = 2)

abline(v = meanPopWeight + 1, col = "red", lwd = 2)

abline(v = meanPopWeight - 1, col = "red", lwd = 2)

proportion below mean population weight minus 1 gram

pnorm(meanPopWeight - 1, mean = meanPopWeight, sd = sdPopWeight)

Exercise 3

Use a histogram to “look” at the distribution of averages we get with

a sample size of 5 and a sample size of 50. How would you say they

differ?

A) They are actually the same.

B) They both look roughly normal, but with a sample size of 50 the

spread is smaller.

C) They both look roughly normal, but with a sample size of 50 the

spread is larger.

D) The second distribution does not look normal at all.

Exercise 4

For the last set of averages, the ones obtained from a sample size of 50, what percent are between 23 and 25? Now ask the same question of a normal distribution with average 23.9 and standard deviation 0.43. The answer to the previous two were very similar. This is because we can approximate the distribution of the sample average with a normal distribution. We will learn more about the reason for this next.

- Inference uses sampling to investigate population parameters such as mean and standard deviation.

- A p-value describes the probability of obtaining a specific value.

- A distribution summarizes a set of numbers.

Content from Populations, Samples and Estimates

Last updated on 2026-01-20 | Edit this page

Overview

Questions

- What is a parameter from a population?

- What are sample estimates?

- How can we use sample estimates to make inferences about population parameters?

Objectives

- Understand the difference between parameters and statistics.

- Calculate population means and sample means.

- Calculate the difference between the means of two subgroups.

Populations, Samples and Estimates

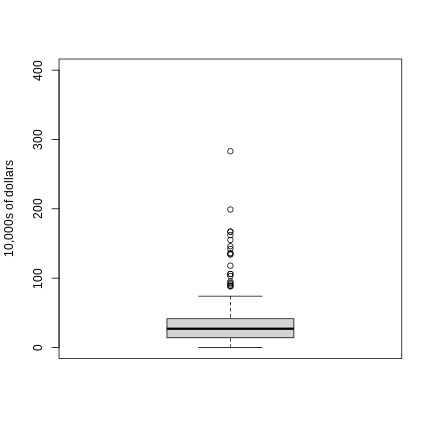

We can never know the true mean or variance of an entire population. Why not? Because we can’t feasibly measure every member of a population. We can never know the true mean blood pressure of all mice, for example, even if all are from one strain, because we can’t afford to buy them all or even find them all. We can never know the true mean blood pressure of all people on a Western diet, for example, because we can’t possibly measure the entire population that’s on a Western diet. If we could measure all people on a Western diet, we really are interested in the difference in means between people on a Western vs. non high fat high sugar diet because we want to know what effect the diet has on people. If there is no difference in means, we can say that there is no effect of diet. If there is a difference in means, we can say that the diet has an effect. The question we are asking can be expressed as:

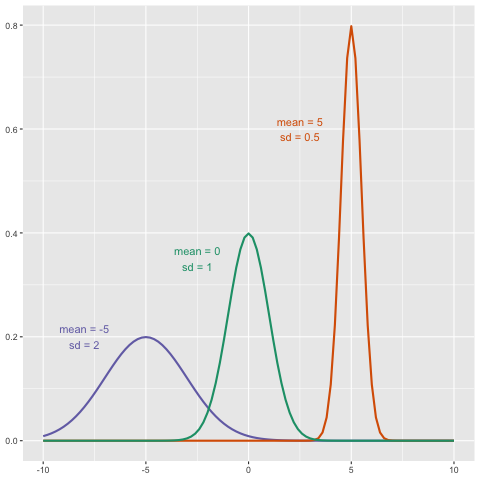

We can even compare

more than two means. The three normal curves below help to visualize a

question comparing the means of each curve to one of the others.

We can even compare

more than two means. The three normal curves below help to visualize a

question comparing the means of each curve to one of the others.

We also want to know the variance from the mean, so that we have a sense of the spread of measurement around the mean.

In reality we use sample estimates of population parameters. The true population parameters that we are interested in are mean and standard deviation. Here we learn how taking a sample permits us to answer our questions about differences between groups. This is the essence of statistical inference.

Now that we have introduced the idea of a random variable, a null distribution, and a p-value, we are ready to describe the mathematical theory that permits us to compute p-values in practice. We will also learn about confidence intervals and power calculations.

Population parameters

A first step in statistical inference is to understand what population you are interested in. In the mouse weight example, we have two populations: female mice on control diets and female mice on high fat diets, with weight being the outcome of interest. We consider this population to be fixed, and the randomness comes from the sampling. One reason we have been using this dataset as an example is because we happen to have the weights of all the mice of this type. We download this file to our working directory and read in to R:

R

pheno <- read.csv(file = "./data/mice_pheno.csv")

We can then access the population values and determine, for example, how many we have. Here we compute the size of the control population:

R

controlPopulation <- filter(pheno, Sex == "F" & Diet == "chow") %>%

select(Bodyweight) %>% unlist

length(controlPopulation)

OUTPUT

[1] 225We usually denote these values as x 1,…,xm. In this case, m is the number computed above. We can do the same for the high fat diet population:

R

hfPopulation <- filter(pheno, Sex == "F" & Diet == "hf") %>%

select(Bodyweight) %>% unlist

length(hfPopulation)

OUTPUT

[1] 200and denote with y 1,…,yn.

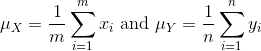

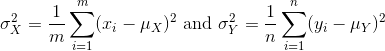

We can then define summaries of interest for these populations, such as the mean and variance.

The mean:

R

# X is the control population

sum(controlPopulation) # sum of the xsubi's

OUTPUT

[1] 5376.01R

length(controlPopulation) # this equals m

OUTPUT

[1] 225R

sum(controlPopulation)/length(controlPopulation) # this equals mu sub x

OUTPUT

[1] 23.89338R

# Y is the high fat diet population

sum(hfPopulation) # sum of the ysubi's

OUTPUT

[1] 5253.779R

sum(hfPopulation)/length(hfPopulation) # this equals mu sub y

OUTPUT

[1] 26.2689The variance:

with the standard deviation being the square root of the variance. We refer to such quantities that can be obtained from the population as population parameters. The question we started out asking can now be written mathematically: is μY - μX = 0 ?

Although in our illustration we have all the values and can check if this is true, in practice we do not. For example, in practice it would be prohibitively expensive to buy all the mice in a population. Here we learn how taking a sample permits us to answer our questions. This is the essence of statistical inference.

Sample estimates

In the previous chapter, we obtained samples of 12 mice from each population. We represent data from samples with capital letters to indicate that they are random. This is common practice in statistics, although it is not always followed. So the samples are X 1,…,XM and Y 1,…,YN and, in this case, Ν=Μ=12. In contrast and as we saw above, when we list out the values of the population, which are set and not random, we use lower-case letters.

Since we want to know if μY - μX = 0, we consider the sample version: Ȳ-X̄ with:

Note that this difference of averages is also a random variable. Previously, we learned about the behavior of random variables with an exercise that involved repeatedly sampling from the original distribution. Of course, this is not an exercise that we can execute in practice. In this particular case it would involve buying 24 mice over and over again. Here we described the mathematical theory that mathematically relates X̄ to μX and Ȳ to μY, that will in turn help us understand the relationship between Ȳ-X̄ and μY - μX. Specifically, we will describe how the Central Limit Theorem permits us to use an approximation to answer this question, as well as motivate the widely used t-distribution.

Exercise

We will use the mouse phenotype data. Remove the observations that contain missing values:

pheno <- na.omit(pheno)

Use dplyr to create a vector x with the

body weight of all males on the control (chow) diet.

What is this population’s average body weight?

R

# Omit observations with missing data

pheno <- na.omit(pheno)

# Create subset of males on chow diet

x <- pheno %>%

filter(Diet == "chow" & Sex == "M") %>%

select(Bodyweight) %>%

unlist

# Calculate mean weight

mean(x)

OUTPUT

[1] 30.96381Exercise (continued)

Set the seed at 1. Take a random sample X of size 25

from x. What is the sample average?

R

set.seed(1)

x_sample <- sample(x, 25)

mean(x_sample)

OUTPUT

[1] 30.5196Exercise (continued)

Use dplyr to create a vector y with the

body weight of all males on the high fat (hf) diet.

What is this population’s average?

R

# Create subset of males on high fat diet

y <- pheno %>%

filter(Diet == "hf" & Sex == "M") %>%

select(Bodyweight) %>%

unlist

# Calculate the mean weight

mean(y)

OUTPUT

[1] 34.84793Exercise (continued)

Set the seed at 1. Take a random sample Y of size 25

from y. What is the sample average?

R

set.seed(1)

y_sample <- sample(y, 25)

mean(y_sample)

OUTPUT

[1] 35.8036Exercise (continued)

What is the difference in absolute value between x̄-ȳ and X̄-Ȳ?

R

# mu_x - mu_y

pop_mean_diff <- abs(mean(x) - mean(y))

# x-bar - y-bar

sample_mean_diff <- abs(mean(x_sample) - mean(y_sample))

# difference in absolute value between (mu_x - mu_y) - (x-bar - y-bar)

pop_sample_mean_diff <- abs(pop_mean_diff - sample_mean_diff)

pop_sample_mean_diff

OUTPUT

[1] 1.399884Exercise (continued)

Repeat the above for females. Make sure to set the seed to 1 before each sample call. What is the difference in absolute value between x̄-ȳ and X̄-Ȳ?

R

x_f <- pheno %>%

filter(Diet == "chow" & Sex == "F") %>%

select(Bodyweight) %>%

unlist

mean(x_f)

OUTPUT

[1] 23.89338R

set.seed(1)

x_f_sample <- sample(x_f, 25)

mean(x_f_sample)

OUTPUT

[1] 24.2528R

y_f <- pheno %>%

filter(Diet == "hf" & Sex == "F") %>%

select(Bodyweight) %>%

unlist

mean(y_f)

OUTPUT

[1] 26.2689R

set.seed(1)

y_f_sample <- sample(y_f, 25)

mean(y_f_sample)

OUTPUT

[1] 28.3828R

pop_mean_diff_f <- abs(mean(x_f) - mean(y_f))

sample_mean_diff_f <- abs(mean(x_f_sample) - mean(y_f_sample))

pop_sample_mean_diff_f <- abs(pop_mean_diff_f - sample_mean_diff_f)

pop_sample_mean_diff_f

OUTPUT

[1] 1.754483- Parameters are measures of populations.

- Statistics are measures of samples drawn from populations.

- Statistical inference is the process of using sample statistics to answer questions about population parameters.

Content from Central Limit Theorem and the t-distribution

Last updated on 2026-01-20 | Edit this page

Overview

Questions

- What is a parameter from a population?

Objectives

Central Limit Theorem and t-distribution

Below we will discuss the Central Limit Theorem (CLT) and the t-distribution, both of which help us make important calculations related to probabilities. Both are frequently used in science to test statistical hypotheses. To use these, we have to make different assumptions from those for the CLT and the t-distribution. However, if the assumptions are true, then we are able to calculate the exact probabilities of events through the use of mathematical formula.

Central Limit Theorem

The CLT is one of the most frequently used mathematical results in science. It tells us that when the sample size is large, the average Ȳ of a random sample follows a normal distribution centered at the population average μY and with standard deviation equal to the population standard deviation σY, divided by the square root of the sample size N. We refer to the standard deviation of the distribution of a random variable as the random variable’s standard error.

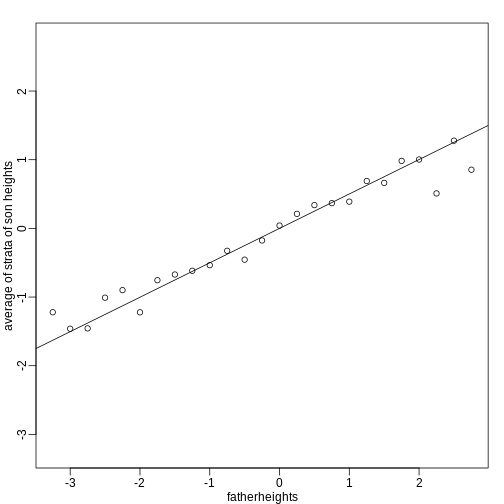

This implies that if we take many samples of size N, then the quantity:

is approximated with a normal distribution centered at 0 and with standard deviation 1.

We are interested in the difference between two sample averages. Again, applying certain mathematical principles, it can be implied that the below ratio:

is approximated by a normal distribution centered at 0 and standard deviation 1. Calculating p-values for the standard normal distribution is simple because we know the proportion of the distribution under any value. For example, only 5% of the values in the standard normal distribution are larger than 2 (in absolute value):

R

pnorm(-2) + (1 - pnorm(2))

OUTPUT

[1] 0.04550026We don’t need to buy more mice, 12 and 12 suffice.

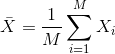

However, we can’t claim victory just yet because we don’t know the population standard deviations: σX and σY. These are unknown population parameters, but we can get around this by using the sample standard deviations, call them sX and sY. These are defined as:

Note that we are dividing by M-1 and N-1, instead of by M and N. There is a theoretical reason for doing this which we do not explain here.

So we can redefine our ratio as

if M = N or in general,

The CLT tells us that when M and N are large, this

random variable is normally distributed with mean 0 and SD 1. Thus we

can compute p-values using the function pnorm.

The t-distribution

The CLT relies on large samples, what we refer to as asymptotic results. When the CLT does not apply, there is another option that does not rely on asymptotic results. When the original population from which a random variable, say Y, is sampled is normally distributed with mean 0, then we can calculate the distribution of:

This is the ratio of two random variables so it is not necessarily normal. The fact that the denominator can be small by chance increases the probability of observing large values. William Sealy Gosset, an employee of the Guinness brewing company, deciphered the distribution of this random variable and published a paper under the pseudonym “Student”. The distribution is therefore called Student’s t-distribution. Later we will learn more about how this result is used.

Exercise 1

- If a list of numbers has a distribution that is well approximated by the normal distribution, what proportion of these numbers are within one standard deviation away from the list’s average?

- What proportion of these numbers are within two standard deviations away from the list’s average?

- What proportion of these numbers are within three standard deviations away from the list’s average?

- Define y to be the weights of males on the control diet. What

proportion of the mice are within one standard deviation away from the

average weight (remember to use

popsdfor the population sd)? - What proportion of these numbers are within two standard deviations away from the list’s average?

- What proportion of these numbers are within three standard deviations away from the list’s average?

- Note that the numbers for the normal distribution and our weights

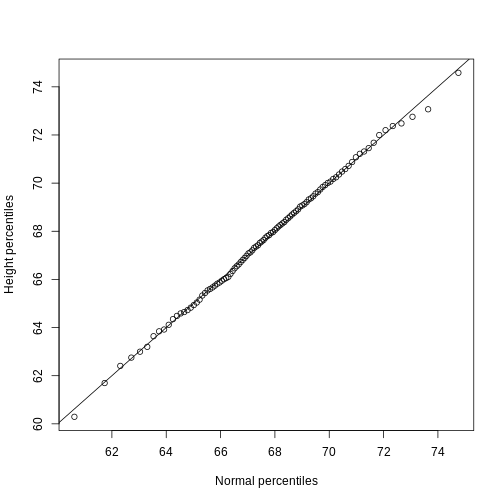

are relatively close. Also, notice that we are indirectly comparing

quantiles of the normal distribution to quantiles of the mouse weight

distribution. We can actually compare all quantiles using a qqplot.

Which of the following best describes the qq-plot comparing mouse

weights to the normal distribution?

- The points on the qq-plot fall exactly on the identity line.

- The average of the mouse weights is not 0 and thus it can’t follow a

normal distribution.

- The mouse weights are well approximated by the normal distribution,

although the larger values (right tail) are larger than predicted by the

normal. This is consistent with the differences seen between question 3

and 6.

- These are not random variables and thus they can’t follow a normal distribution.

- Create the above qq-plot for the four populations: male/females on

each of the two diets. What is the most likely explanation for the mouse

weights being well approximated?

What is the best explanation for all these being well approximated by the normal distribution?

- The CLT tells us that sample averages are approximately

normal.

- This just happens to be how nature behaves, perhaps the result of

many biological factors averaging out.

- Everything measured in nature follows a normal distribution.

- Measurement error is normally distributed.

-

pnorm(1)-pnorm(-1)= 0.6826895 (68%) -

pnorm(2)-pnorm(-2)= 0.9544997 (95%) -

pnorm(3)-pnorm(-3)= 0.9973002 (99%) -

install.packages("rafalib")library(rafalib)pheno <- read.csv(file = "../data/mice_pheno.csv")y <- pheno %>%filter(Sex == "M" & Diet == "chow") %>%select(Bodyweight) %>%unlist()sigma <- popsd(y, na.rm = TRUE)# 4.420545mu <- mean(y, na.rm = TRUE)# 30.96381y %>%filter(Bodyweight > mu - sigma & Bodyweight < mu + sigma) %>%count()/nrow(y)# 0.6919643 (~68%)y %>%filter(Bodyweight > mu - 2*sigma & Bodyweight < mu + 2*sigma) %>%count()/nrow(y)# 0.9419643 (~95%)filter(Bodyweight > mu - 3*sigma & Bodyweight < mu + 3*sigma) %>%count()/nrow(y)# 0.9866071 (~99%)

Exercise 2

Here we are going to use the function replicate to learn

about the distribution of random variables. All the above exercises

relate to the normal distribution as an approximation of the

distribution of a fixed list of numbers or a population. We have not yet

discussed probability in these exercises. If the distribution of a list

of numbers is approximately normal, then if we pick a number at random

from this distribution, it will follow a normal distribution. However,

it is important to remember that stating that some quantity has a

distribution does not necessarily imply this quantity is random. Also,

keep in mind that this is not related to the central limit theorem. The

central limit applies to averages of random variables. Let’s explore

this concept.

We will now take a sample of size 25 from the population of males on the

chow diet. The average of this sample is our random variable. We will

use the replicate to observe 10,000 realizations of this random

variable.

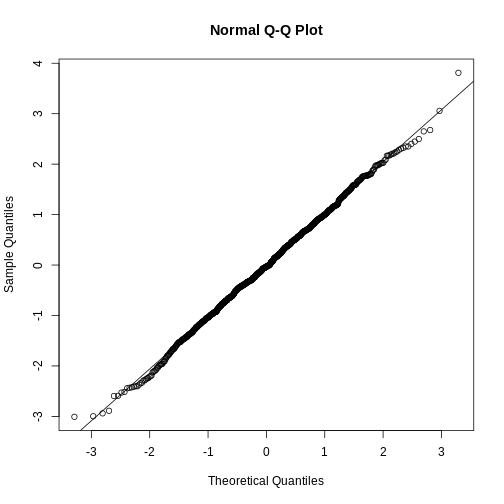

- Set the seed at 1, generate these 10,000 averages.

set.seed(1)y <- filter(pheno, Sex=="M" & Diet=="chow") %>%select(Bodyweight) %>%unlistavgs <- replicate(10000, mean(sample(y,25))) - Make a histogram and qq-plot these 10,000 numbers against the normal

distribution.

mypar(1,2)hist(avgs)qqnorm(avgs)qqline(avgs)

We can see that, as predicted by the CLT, the distribution of the random variable is very well approximated by the normal distribution. - What is the average of the distribution of the sample average?

- What is the standard deviation of the distribution of sample

averages?

According to the CLT, the answer to the exercise above should be the same asmean(y). You should be able to confirm that these two numbers are very close. - Which of the following does the CLT tell us should be close to your

answer to this exercise?

-

popsd(y)

-

popsd(avgs)/sqrt(25)

-

sqrt(25) / popsd(y)

popsd(y)/sqrt(25)

Here we will use the mice phenotype data as an example. We start by creating two vectors, one for the control population and one for the high-fat diet population:

R

# pheno <- read.csv("mice_pheno.csv") #We downloaded this file in a previous section

controlPopulation <- filter(pheno, Sex == "F" & Diet == "chow") %>%

select(Bodyweight) %>% unlist

hfPopulation <- filter(pheno, Sex == "F" & Diet == "hf") %>%

select(Bodyweight) %>% unlist

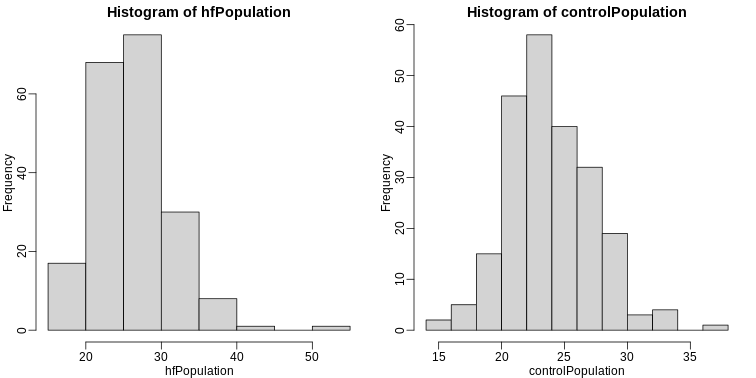

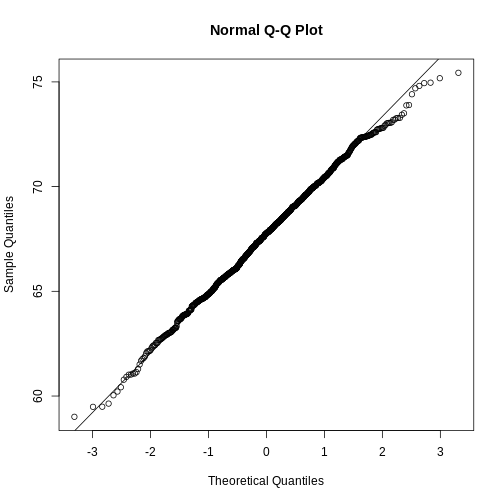

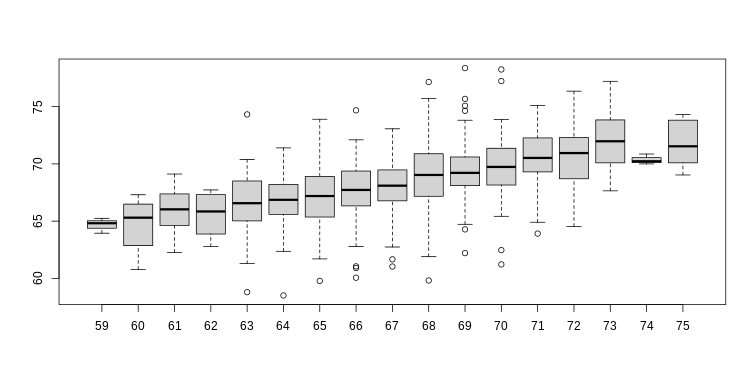

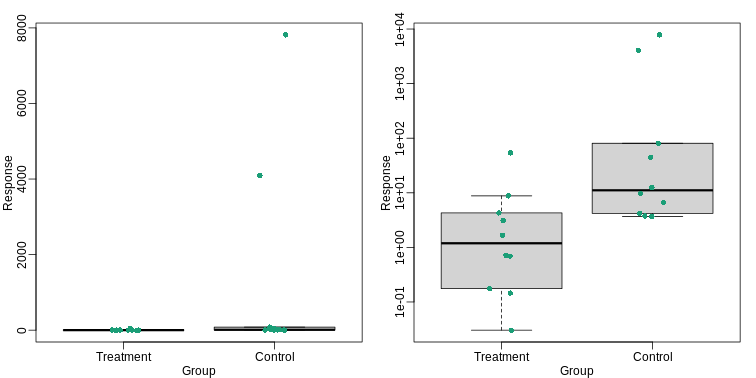

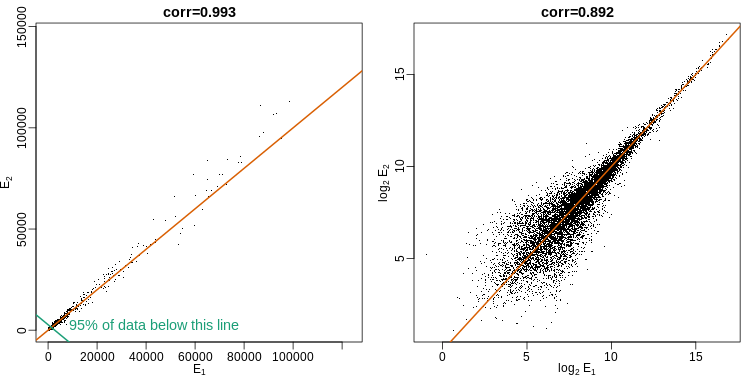

It is important to keep in mind that what we are assuming to be normal here is the distribution of y1,y2,…,yn, not the random variable Ȳ. Although we can’t do this in practice, in this illustrative example, we get to see this distribution for both controls and high fat diet mice:

R

library(rafalib)

mypar(1,2)

hist(hfPopulation)

hist(controlPopulation)

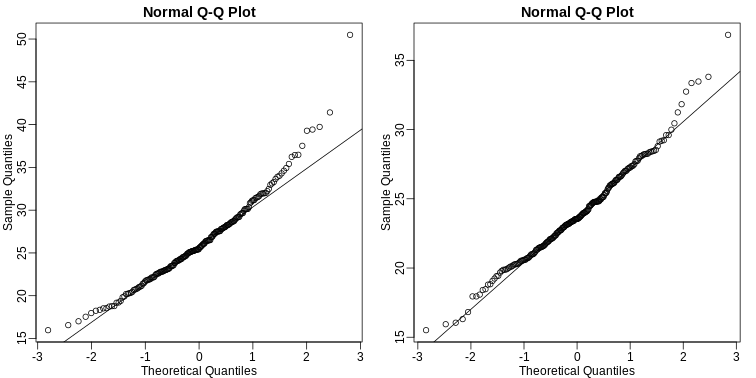

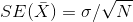

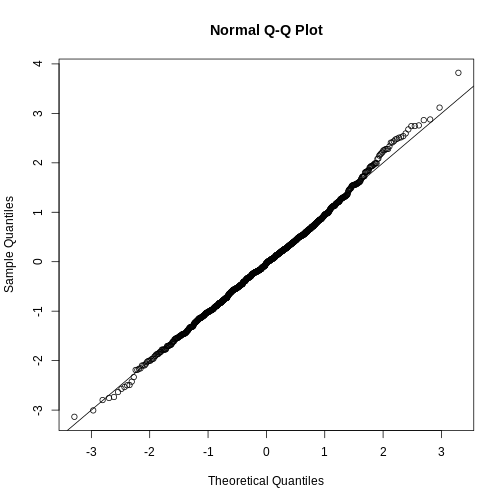

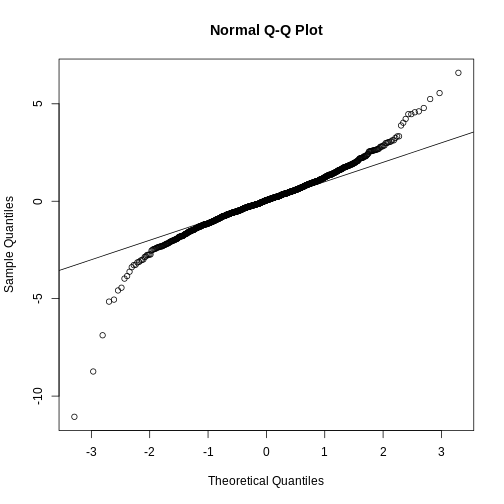

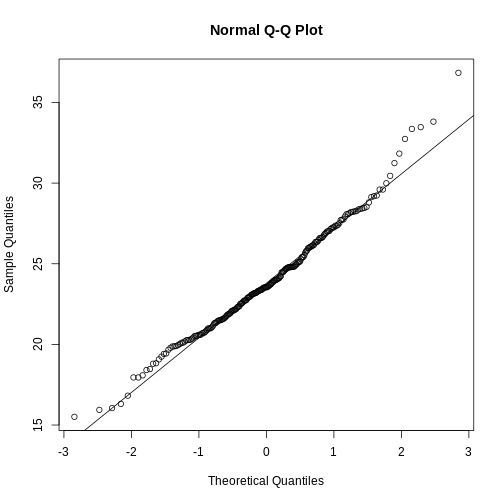

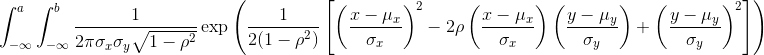

We can use qq-plots to confirm that the distributions are relatively close to being normally distributed. We will explore these plots in more depth in a later section, but the important thing to know is that it compares data (on the y-axis) against a theoretical distribution (on the x-axis). If the points fall on the identity line, then the data is close to the theoretical distribution.

R

mypar(1,2)

qqnorm(hfPopulation)

qqline(hfPopulation)

qqnorm(controlPopulation)

qqline(controlPopulation)

The larger the sample, the more forgiving the result is to the weakness of this approximation. In the next section, we will see that for this particular dataset the t-distribution works well even for sample sizes as small as 3.

All the above exercises relate to the normal distribution as an approximation of the distribution of a fixed list of numbers or a population. We have not yet discussed probability in these exercises. If the distribution of a list of numbers is approximately normal, then if we pick a number at random from this distribution, it will follow a normal distribution. However, it is important to remember that stating that some quantity has a distribution does not necessarily imply this quantity is random. Also, keep in mind that this is not related to the central limit theorem. The central limit applies to averages of random variables. Let’s explore this concept.

Exercise 3

We will now take a sample of size 25 from the population of males on

the chow diet. The average of this sample is our random variable. We

will use replicate to observe 10,000 realizations of this

random variable.

- Set the seed at 1.

- Generate these 10,000 averages.

- Make a histogram and qq-plot of these 10,000 numbers against the

normal distribution.

y <- filter(dat, Sex=="M" & Diet=="chow") %>%select(Bodyweight) %>%unlistavgs <- replicate(10000, mean( sample(y,25)))mypar(1,2)hist(avgs)qqnorm(avgs)qqline(avgs)

We can see that, as predicted by the CLT, the distribution of the random variable is very well approximated by the normal distribution. - What is the average of the distribution of the sample average?

- What is the standard deviation of the distribution of sample averages?

- According to the CLT, the answer to exercise 9 should be the same as

mean(y). You should be able to confirm that these two numbers are very close. Which of the following does the CLT tell us should be close to your answer to exercise 5?

-

popsd(y)

-

popsd(avgs) / sqrt(25)

-

sqrt(25) / popsd(y)

popsd(y) / sqrt(25)

- In practice we do not know

(popsd(y))which is why we can’t use the CLT directly. This is because we see a sample and not the entire distribution. We also can’t usepopsd(avgs)because to construct averages, we have to take 10,000 samples and this is never practical. We usually just get one sample. Instead we have to estimatepopsd(y). As described, what we use is the sample standard deviation.

Set the seed at 1. Using thereplicatefunction, create 10,000 samples of 25 and now, instead of the sample average, keep the standard deviation. Look at the distribution of the sample standard deviations. It is a random variable. The real population SD is about 4.5. What proportion of the sample SDs are below 3.5? - What the answer to question 7 reveals is that the denominator of the

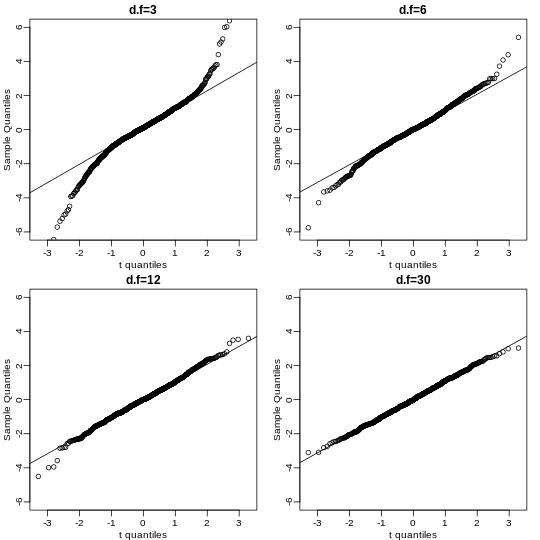

t-test is a random variable. By decreasing the sample size, you can see

how this variability can increase. It therefore adds variability. The

smaller the sample size, the more variability is added. The normal

distribution stops providing a useful approximation. When the

distribution of the population values is approximately normal, as it is

for the weights, the t-distribution provides a better approximation. We

will see this later on. Here we will look at the difference between the

t-distribution and normal. Use the function

qtandqnormto get the quantiles ofx=seq(0.0001, 0.9999, len=300). Do this for degrees of freedom 3, 10, 30, and 100. Which of the following is true?

- The t-distribution and normal distribution are always the

same.

- The t-distribution has a higher average than the normal

distribution.

- The t-distribution has larger tails up until 30 degrees of freedom,

at which point itis practically the same as the normal

distribution.

- The variance of the t-distribution grows as the degrees of freedom grow.

- We can calculate the exact probabilities of events through using mathematical formulas.

- The Central Limit Theorem and t-distribution have different assumptions.

- Both can be used to calculate probabilities.

Content from Central Limit Theorem in practice

Last updated on 2026-01-20 | Edit this page

Overview

Questions

- How is the CLT used in practice?

Objectives

Central Limit Theorem in Practice

Let’s use our data to see how well the central limit theorem approximates sample averages from our data. We will leverage our entire population dataset to compare the results we obtain by actually sampling from the distribution to what the CLT predicts.

R

# pheno <- read.csv("mice_pheno.csv") #file was previously downloaded

head(pheno)

OUTPUT

Sex Diet Bodyweight

1 F hf 31.94

2 F hf 32.48

3 F hf 22.82

4 F hf 19.92

5 F hf 32.22

6 F hf 27.50Start by selecting only female mice since males and females have different weights. We will select three mice from each population.

R

library(dplyr)

controlPopulation <- filter(pheno, Sex == "F" & Diet == "chow") %>%

select(Bodyweight) %>% unlist

hfPopulation <- filter(pheno, Sex == "F" & Diet == "hf") %>%

select(Bodyweight) %>% unlist

We can compute the population parameters of interest using the mean function.

R

mu_hf <- mean(hfPopulation)

mu_control <- mean(controlPopulation)

print(mu_hf - mu_control)

OUTPUT

[1] 2.375517We can compute the population standard deviations of, say, a vector

x as well. However, we do not use the R function sd

because this function actually does not compute the population standard

deviation σx. Instead, sd assumes the

main argument is a random sample, say X, and provides an estimate

of σx, defined by sX above. As shown

in the equations above the actual final answer differs because one

divides by the sample size and the other by the sample size minus one.

We can see that with R code:

R

x <- controlPopulation

N <- length(x)

populationvar <- mean((x-mean(x))^2)

identical(var(x), populationvar)

OUTPUT

[1] FALSER

identical(var(x)*(N-1)/N, populationvar)

OUTPUT

[1] TRUESo to be mathematically correct, we do not use sd or

var. Instead, we use the popvar and

popsd function in rafalib:

R

library(rafalib)

sd_hf <- popsd(hfPopulation)

sd_control <- popsd(controlPopulation)

Remember that in practice we do not get to compute these population parameters. These are values we never see. In general, we want to estimate them from samples.

R

N <- 12

hf <- sample(hfPopulation, 12)

control <- sample(controlPopulation, 12)

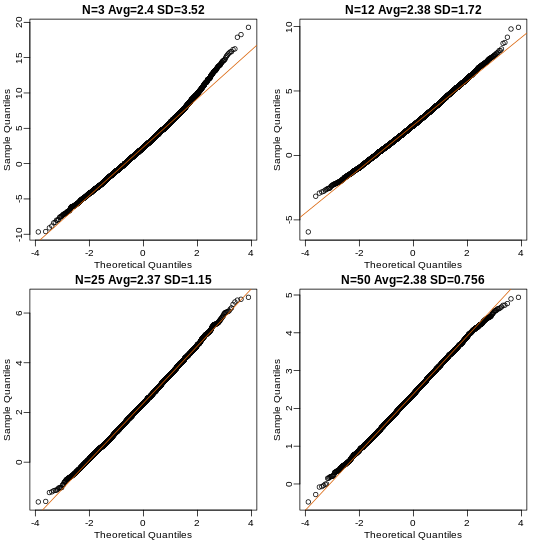

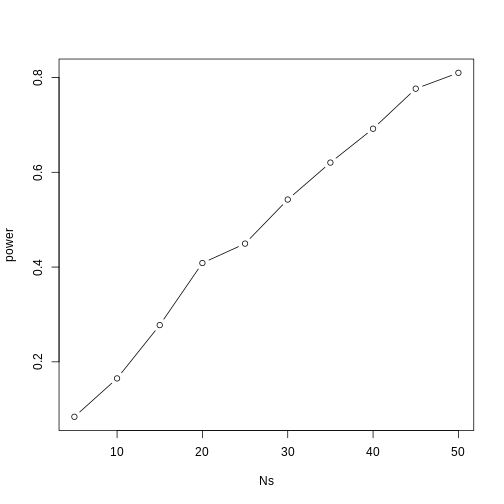

As we described, the CLT tells us that for large N, each of these is approximately normal with average population mean and standard error population variance divided by N. We mentioned that a rule of thumb is that N should be 30 or more. However, that is just a rule of thumb since the preciseness of the approximation depends on the population distribution. Here we can actually check the approximation and we do that for various values of N.

Now we use sapply and replicate instead of

for loops, which makes for cleaner code (we do not have to

pre-allocate a vector, R takes care of this for us):

R

Ns <- c(3,12,25,50)

B <- 10000 #number of simulations

res <- sapply(Ns,function(n) {

replicate(B,mean(sample(hfPopulation,n))-mean(sample(controlPopulation,n)))

})

Now we can use qq-plots to see how well CLT approximations works for these. If in fact the normal distribution is a good approximation, the points should fall on a straight line when compared to normal quantiles. The more it deviates, the worse the approximation. In the title, we also show the average and SD of the observed distribution, which demonstrates how the SD decreases with √N as predicted.

R

mypar(2,2)

for (i in seq(along=Ns)) {

titleavg <- signif(mean(res[,i]), 3)

titlesd <- signif(popsd(res[,i]), 3)

title <- paste0("N=", Ns[i]," Avg=", titleavg," SD=", titlesd)

qqnorm(res[,i], main=title)

qqline(res[,i], col=2)

}

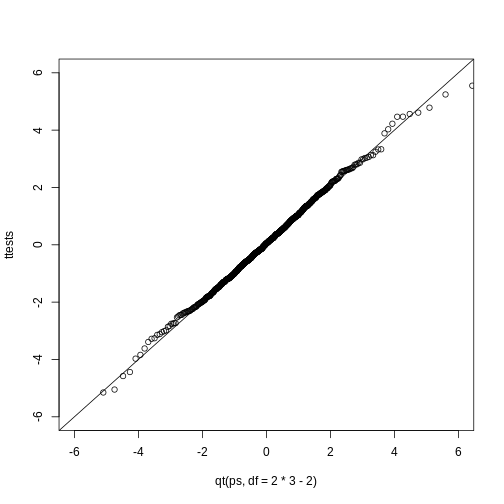

Here we see a pretty good fit even for 3. Why is this? Because the population itself is relatively close to normally distributed, the averages are close to normal as well (the sum of normals is also a normal). In practice, we actually calculate a ratio: we divide by the estimated standard deviation. Here is where the sample size starts to matter more.

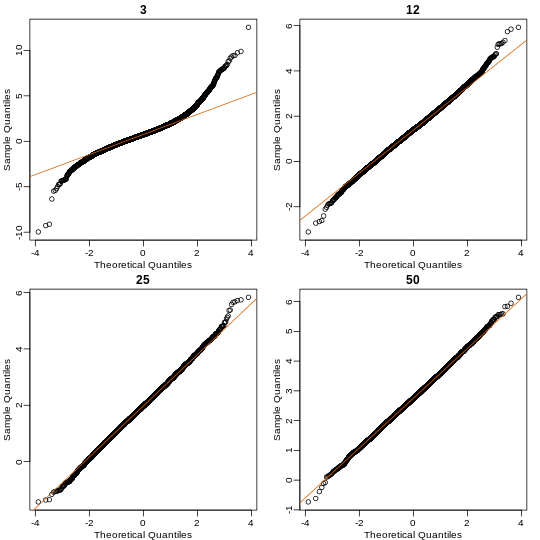

R

Ns <- c(3, 12, 25, 50)

B <- 10000 #number of simulations

##function to compute a t-stat

computetstat <- function(n) {

y <- sample(hfPopulation, n)

x <- sample(controlPopulation, n)

(mean(y) - mean(x))/sqrt(var(y)/n + var(x)/n)

}

res <- sapply(Ns, function(n) {

replicate(B, computetstat(n))

})

mypar(2,2)

for (i in seq(along=Ns)) {

qqnorm(res[,i], main=Ns[i])

qqline(res[,i], col=2)

}

So we see that for N = 3, the CLT does not provide a usable approximation. For N = 12, there is a slight deviation at the higher values, although the approximation appears useful. For 25 and 50, the approximation is spot on.

This simulation only proves that N = 12 is large enough in this case, not in general. As mentioned above, we will not be able to perform this simulation in most situations. We only use the simulation to illustrate the concepts behind the CLT and its limitations. In future sections, we will describe the approaches we actually use in practice.

These exercises use the female mouse weights data set we have previously downloaded.

Exercise 1

The CLT is a result from probability theory. Much of probability

theory was originally inspired by gambling. This theory is still used in

practice by casinos. For example, they can estimate how many people need

to play slots for there to be a 99.9999% probability of earning enough

money to cover expenses. Let’s try a simple example related to gambling.

Suppose we are interested in the proportion of times we see a 6 when

rolling n=100 die. This is a random variable which we can simulate with

x=sample(1:6, n, replace=TRUE) and the proportion we are

interested in can be expressed as an average: mean(x==6).

Because the die rolls are independent, the CLT applies. We want to roll

n dice 10,000 times and keep these proportions. This random

variable (proportion of 6s) has mean p=1/6 and variance p ×

(1-p)/n. So according to CLT z =

(mean(x==6) - p) / sqrt(p * (1-p)/n) should be normal with

mean 0 and SD 1.

Set the seed to 1, then use replicate to perform the

simulation, and report what proportion of times z was larger than 2 in

absolute value (CLT says it should be about 0.05).

Exercise 2

For the last simulation you can make a qqplot to confirm

the normal approximation. Now, the CLT is an asympototic result, meaning

it is closer and closer to being a perfect approximation as the sample

size increases. In practice, however, we need to decide if it is

appropriate for actual sample sizes. Is 10 enough? 15? 30? In the

example used in exercise 1, the original data is binary (either 6 or

not). In this case, the success probability also affects the

appropriateness of the CLT. With very low probabilities, we need larger

sample sizes for the CLT to “kick in”. Run the simulation from exercise

1, but for different values of p and n. For which of the

following is the normal approximation best?

A) p=0.5 and n=5

B) p=0.5 and n=30

C) p=0.01 and n=30

D) p=0.01 and n=100

Exercise 3

As we have already seen, the CLT also applies to averages of

quantitative data. A major difference with binary data, for which we

know the variance is p(1 - p), is that with quantitative data we

need to estimate the population standard deviation. In several previous

exercises we have illustrated statistical concepts with the unrealistic

situation of having access to the entire population. In practice, we do

not have access to entire populations. Instead, we obtain one random

sample and need to reach conclusions analyzing that data.

pheno is an example of a typical simple dataset

representing just one sample. We have 12 measurements for each of two

populations:

X <- filter(dat, Diet=="chow") %>%select(Bodyweight) %>%unlistY <- filter(dat, Diet=="hf") %>%select(Bodyweight) %>%unlist

We think of X as a random sample from the population of all mice

in the control diet and Y as a random sample from the population

of all mice in the high fat diet.

- Define the parameter μx as the average of the control population. We estimate this parameter with the sample average X̄. What is the sample average?

- We don’t know μX, but want to use X̄ to understand

μX. Which of the following uses CLT to understand how

well X̄ approximates μX?

- X̄ follows a normal distribution with mean 0 and standard deviation

1.

-

μX follows a normal distribution with mean X̄ and

standard deviation σX/√12 where σX

is the population standard deviation.

- X̄ follows a normal distribution with mean μX and

standard deviation σX where σX is

the population standard deviation.

- X̄ follows a normal distribution with mean μX and standard deviation σX/√12 where σX is the population standard deviation.

- The result above tells us the distribution of the following random

variable:

What does the CLT tell us is the mean

of Z (you don’t need code)?

What does the CLT tell us is the mean

of Z (you don’t need code)? - The result of 2 and 3 tell us that we know the distribution of the difference between our estimate and what we want to estimate, but don’t know. However, the equation involves the population standard deviation σX, which we don’t know. Given what we discussed, what is your estimate of σX?

- Use the CLT to approximate the probability that our estimate X̄ is off by more than 5.21 ounces from μX.

- Now we introduce the concept of a null hypothesis. We don’t know

μx nor μy. We want to quantify what

the data say about the possibility that the diet has no effect:

μx = μy. If we use CLT, then we approximate

the distribution of X̄ as normal with mean μX and

standard deviation σX and the distribution of Ȳ as

normal with mean μY and standard deviation

σY. This implies that the difference Ȳ - X̄ has mean 0.

We described that the standard deviation of this statistic (the standard

error) is

and that we estimate the

population standard deviations σX and

σY with the sample estimates. What is the estimate of

and that we estimate the

population standard deviations σX and

σY with the sample estimates. What is the estimate of

- So now we can compute Ȳ - X̄ as well as an estimate of this standard error and construct a t-statistic. What is this t-statistic?

- If we apply the CLT, what is the distribution of this

t-statistic?

- Normal with mean 0 and standard deviation 1.

- t-distributed with 22 degrees of freedom.

- Normal with mean 0 and standard deviation

- t-distributed with 12 degrees of freedom.

- Now we are ready to compute a p-value using the CLT. What is the probability of observing a quantity as large as what we computed in 8, when the null distribution is true?

- CLT provides an approximation for cases in which the sample size is

large. In practice, we can’t check the assumption because we only get to

see 1 outcome (which you computed above). As a result, if this

approximation is off, so is our p-value. As described earlier, there is

another approach that does not require a large sample size, but rather

that the distribution of the population is approximately normal. We

don’t get to see this distribution so it is again an assumption,

although we can look at the distribution of the sample with

qqnorm(X)andqqnorm(Y). If we are willing to assume this, then it follows that the t-statistic follows t-distribution. What is the p-value under the t-distribution approximation? Hint: use thet.testfunction. - With the CLT distribution, we obtained a p-value smaller than 0.05

and with the t-distribution, one that is larger. They can’t both be

right. What best describes the difference?

- A sample size of 12 is not large enough, so we have to use the

t-distribution approximation.

- These are two different assumptions. The t-distribution accounts for

the variability introduced by the estimation of the standard error and

thus, under the null, large values are more probable under the null

distribution.

- The population data is probably not normally distributed so the

t-distribution approximation is wrong.

- Neither assumption is useful. Both are wrong.

- .

- .

- .

- .

Content from t-tests in practice

Last updated on 2026-01-20 | Edit this page

Overview

Questions

- How are t-tests used in practice?

Objectives

t-tests in Practice

Introduction

We will now demonstrate how to obtain a p-value in practice. We begin by loading experimental data and walking you through the steps used to form a t-statistic and compute a p-value. We can perform this task with just a few lines of code (go to the end of this section to see them). However, to understand the concepts, we will construct a t-statistic from “scratch”.

Read in and prepare data

We start by reading in the data. A first important step is to identify which rows are associated with treatment and control, and to compute the difference in mean.

R

fWeights <- read.csv(file = "./data/femaleMiceWeights.csv") # we read this data in earlier

control <- filter(fWeights, Diet=="chow") %>%

select(Bodyweight) %>%

unlist

treatment <- filter(fWeights, Diet=="hf") %>%

select(Bodyweight) %>%

unlist

diff <- mean(treatment) - mean(control)

print(diff)

OUTPUT

[1] 3.020833We are asked to report a p-value. What do we do? We learned that

diff, referred to as the observed effect size, is

a random variable. We also learned that under the null hypothesis, the

mean of the distribution of diff is 0. What about the

standard error? We also learned that the standard error of this random

variable is the population standard deviation divided by the square root

of the sample size:

We use the sample standard deviation as an estimate of the population

standard deviation. In R, we simply use the sd function and

the SE is:

R

sd(control)/sqrt(length(control))

OUTPUT

[1] 0.8725323This is the SE of the sample average, but we actually want the SE of

diff. We saw how statistical theory tells us that the

variance of the difference of two random variables is the sum of its

variances, so we compute the variance and take the square root:

R

se <- sqrt(

var(treatment)/length(treatment) +

var(control)/length(control)

)

Statistical theory tells us that if we divide a random variable by its SE, we get a new random variable with an SE of 1.

R

tstat <- diff/se

This ratio is what we call the t-statistic. It’s the ratio of two random variables and thus a random variable. Once we know the distribution of this random variable, we can then easily compute a p-value.

As explained in the previous section, the CLT tells us that for large

sample sizes, both sample averages mean(treatment) and

mean(control) are normal. Statistical theory tells us that

the difference of two normally distributed random variables is again

normal, so CLT tells us that tstat is approximately normal

with mean 0 (the null hypothesis) and SD 1 (we divided by its SE).

So now to calculate a p-value all we need to do is ask: how often

does a normally distributed random variable exceed diff? R

has a built-in function, pnorm, to answer this specific

question. pnorm(a) returns the probability that a random

variable following the standard normal distribution falls below

a. To obtain the probability that it is larger than

a, we simply use 1-pnorm(a). We want to know

the probability of seeing something as extreme as diff:

either smaller (more negative) than -abs(diff) or larger

than abs(diff). We call these two regions “tails” and

calculate their size:

R

righttail <- 1 - pnorm(abs(tstat))

lefttail <- pnorm(-abs(tstat))

pval <- lefttail + righttail

print(pval)

OUTPUT

[1] 0.0398622In this case, the p-value is smaller than 0.05 and using the conventional cutoff of 0.05, we would call the difference statistically significant.

Now there is a problem. CLT works for large samples, but is 12 large enough? A rule of thumb for CLT is that 30 is a large enough sample size (but this is just a rule of thumb). The p-value we computed is only a valid approximation if the assumptions hold, which do not seem to be the case here. However, there is another option other than using CLT.

The t-distribution in Practice

As described earlier, statistical theory offers another useful result. If the distribution of the population is normal, then we can work out the exact distribution of the t-statistic without the need for the CLT. This is a big “if” given that, with small samples, it is hard to check if the population is normal. But for something like weight, we suspect that the population distribution is likely well approximated by normal and that we can use this approximation. Furthermore, we can look at a qq-plot for the samples. This shows that the approximation is at least close:

R

library(rafalib)

mypar(1,2)

qqnorm(treatment)

qqline(treatment, col=2)

qqnorm(control)

qqline(control, col=2)

If we use this approximation, then statistical theory tells us that

the distribution of the random variable tstat follows a

t-distribution. This is a much more complicated distribution than the

normal. The t-distribution has a location parameter like the normal and

another parameter called degrees of freedom. R has a nice

function that actually computes everything for us.

R

t.test(treatment, control)

OUTPUT

Welch Two Sample t-test

data: treatment and control

t = 2.0552, df = 20.236, p-value = 0.053

alternative hypothesis: true difference in means is not equal to 0

95 percent confidence interval:

-0.04296563 6.08463229

sample estimates:

mean of x mean of y

26.83417 23.81333 To see just the p-value, we can use the $ extractor:

R

result <- t.test(treatment,control)

result$p.value

OUTPUT

[1] 0.05299888The p-value is slightly bigger now. This is to be expected because

our CLT approximation considered the denominator of tstat

practically fixed (with large samples it practically is), while the

t-distribution approximation takes into account that the denominator

(the standard error of the difference) is a random variable. The smaller

the sample size, the more the denominator varies.

It may be confusing that one approximation gave us one p-value and another gave us another, because we expect there to be just one answer. However, this is not uncommon in data analysis. We used different assumptions, different approximations, and therefore we obtained different results.

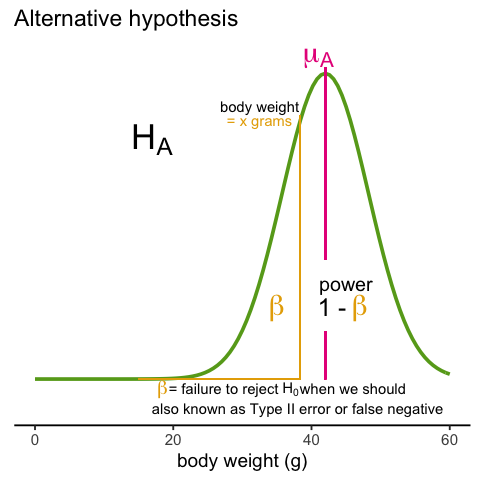

Later, in the power calculation section, we will describe type I and type II errors. As a preview, we will point out that the test based on the CLT approximation is more likely to incorrectly reject the null hypothesis (a false positive), while the t-distribution is more likely to incorrectly accept the null hypothesis (false negative).

Running the t-test in practice

Now that we have gone over the concepts, we can show the relatively simple code that one would use to actually compute a t-test:

R

control <- filter(fWeights, Diet=="chow") %>%

select(Bodyweight) %>%

unlist

treatment <- filter(fWeights, Diet=="hf") %>%

select(Bodyweight) %>%

unlist

t.test(treatment, control)

OUTPUT

Welch Two Sample t-test

data: treatment and control

t = 2.0552, df = 20.236, p-value = 0.053

alternative hypothesis: true difference in means is not equal to 0

95 percent confidence interval:

-0.04296563 6.08463229

sample estimates:

mean of x mean of y

26.83417 23.81333 The arguments to t.test can be of type

data.frame and thus we do not need to unlist them into numeric

objects.

- .

- .

- .

- .

Content from Confidence Intervals

Last updated on 2026-01-20 | Edit this page

Overview

Questions

- What is a confidence interval?

- When is it best to use a confidence interval?

Objectives

- Define a confidence interval.

- Explain why confidence intervals offer more information than p-values alone.

- Calculate a confidence interval.

Confidence Intervals

We have described how to compute p-values which are ubiquitous in the life sciences. However, we do not recommend reporting p-values as the only statistical summary of your results. The reason is simple: statistical significance does not guarantee scientific significance. With large enough sample sizes, one might detect a statistically significance difference in weight of, say, 1 microgram. But is this an important finding? Would we say a diet results in higher weight if the increase is less than a fraction of a percent? The problem with reporting only p-values is that you will not provide a very important piece of information: the effect size. Recall that the effect size is the observed difference. Sometimes the effect size is divided by the mean of the control group and so expressed as a percent increase.

A much more attractive alternative is to report confidence intervals. A confidence interval includes information about your estimated effect size and the uncertainty associated with this estimate. Here we use the mice data to illustrate the concept behind confidence intervals.

Confidence Interval for Population Mean

Before we show how to construct a confidence interval for the difference between the two groups, we will show how to construct a confidence interval for the population mean of control female mice. Then we will return to the group difference after we’ve learned how to build confidence intervals in the simple case. We start by reading in the data and selecting the appropriate rows:

R

pheno <- read.csv("./data/mice_pheno.csv") # we read this in earlier

chowPopulation <- pheno %>%

filter(Sex=="F" & Diet=="chow") %>%

select(Bodyweight) %>%

unlist

The population average μX is our parameter of interest here:

R

mu_chow <- mean(chowPopulation)

print(mu_chow)

OUTPUT

[1] 23.89338We are interested in estimating this parameter. In practice, we do not get to see the entire population so, as we did for p-values, we demonstrate how we can use samples to do this. Let’s start with a sample of size 30:

R

N <- 30

chow <- sample(chowPopulation, N)

print(mean(chow))

OUTPUT

[1] 24.03267We know this is a random variable, so the sample average will not be a perfect estimate. In fact, because in this illustrative example we know the value of the parameter, we can see that they are not exactly the same. A confidence interval is a statistical way of reporting our finding, the sample average, in a way that explicitly summarizes the variability of our random variable.

With a sample size of 30, we will use the CLT. The CLT tells us that

X̄ or mean(chow) follows a normal distribution with mean

μX or mean(chowPopulation) and standard

error approximately sX / √N or:

R

se <- sd(chow)/sqrt(N)

print(se)

OUTPUT

[1] 0.6875646Defining the Interval

A 95% confidence interval (we can use percentages other than 95%) is a random interval with a 95% probability of falling on the parameter we are estimating. Keep in mind that saying 95% of random intervals will fall on the true value (our definition above) is not the same as saying there is a 95% chance that the true value falls in our interval. To construct it, we note that the CLT tells us that √N (X̄ - μX) / sX follows a normal distribution with mean 0 and SD 1. This implies that the probability of this event:

which written in R code is:

R

pnorm(2) - pnorm(-2)

OUTPUT

[1] 0.9544997…is about 95% (to get closer use qnorm(1 - 0.05/2)

instead of 2). Now do some basic algebra to clear out everything and

leave μX alone in the middle and you get that the

following event:

has a probability of 95%.

Be aware that it is the edges of the interval X̄ ± 2sX / √N, not μX, that are random. Again, the definition of the confidence interval is that 95% of random intervals will contain the true, fixed value μX. For a specific interval that has been calculated, the probability is either 0 or 1 that it contains the fixed population mean μX.

Let’s demonstrate this logic through simulation. We can construct this interval with R relatively easily:

R

Q <- qnorm(1 - 0.05/2)

interval <- c(mean(chow) - Q * se, mean(chow) + Q * se )

interval

OUTPUT

[1] 22.68506 25.38027R

interval[1] < mu_chow & interval[2] > mu_chow

OUTPUT

[1] TRUEwhich happens to cover μX or

mean(chowPopulation). However, we can take another sample

and we might not be as lucky. In fact, the theory tells us that we will

cover μX 95% of the time. Because we have access to

the population data, we can confirm this by taking several new

samples:

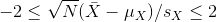

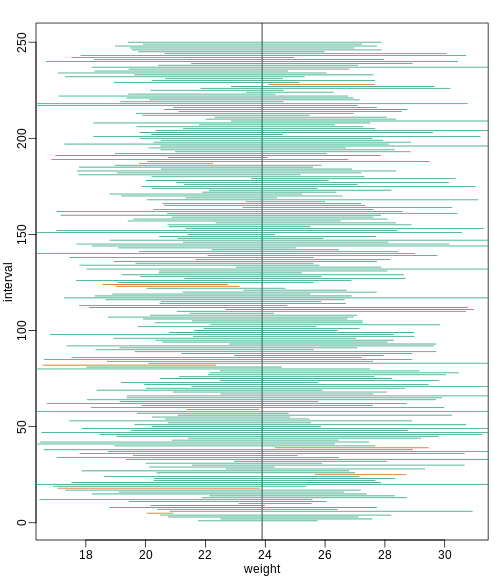

R

library(rafalib)

B <- 250

mypar()

plot(mean(chowPopulation) + c(-7,7), c(1,1), type="n",

xlab="weight", ylab="interval", ylim=c(1,B))

abline(v=mean(chowPopulation))

for (i in 1:B) {

chow <- sample(chowPopulation,N)

se <- sd(chow)/sqrt(N)

interval <- c(mean(chow) - Q * se, mean(chow) + Q * se)

covered <-

mean(chowPopulation) <= interval[2] & mean(chowPopulation) >= interval[1]

color <- ifelse(covered,1,2)

lines(interval, c(i,i),col=color)

}

You can run this repeatedly to see what happens. You will see that in about 5% of the cases, we fail to cover μX.

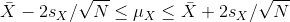

Small Sample Size and the CLT

For N = 30, the CLT works very well. However, if N = 5, do these confidence intervals work as well? We used the CLT to create our intervals, and with N = 5 it may not be as useful an approximation. We can confirm this with a simulation:

R

mypar()

plot(mean(chowPopulation) + c(-7,7), c(1,1), type="n",

xlab="weight", ylab="interval", ylim=c(1,B))

abline(v=mean(chowPopulation))

Q <- qnorm(1- 0.05/2)

N <- 5

for (i in 1:B) {

chow <- sample(chowPopulation, N)

se <- sd(chow)/sqrt(N)

interval <- c(mean(chow) - Q * se, mean(chow) + Q * se)

covered <- mean(chowPopulation) <= interval[2] & mean(chowPopulation) >= interval[1]

color <- ifelse(covered,1,2)

lines(interval, c(i,i),col=color)

}

Despite the intervals being larger (we are dividing by √5

instead of √30 ), we see many more intervals not covering

μX. This is because the CLT is incorrectly telling us

that the distribution of the mean(chow) is approximately

normal with standard deviation 1 when, in fact, it has a larger standard

deviation and a fatter tail (the parts of the distribution going to ±

∞). This mistake affects us in the calculation of Q,

which assumes a normal distribution and uses qnorm. The

t-distribution might be more appropriate. All we have to do is re-run

the above, but change how we calculate Q to use

qt instead of qnorm.

R

mypar()

plot(mean(chowPopulation) + c(-7,7), c(1,1), type="n",

xlab="weight", ylab="interval", ylim=c(1,B))

abline(v=mean(chowPopulation))

##Q <- qnorm(1- 0.05/2) ##no longer normal so use:

Q <- qt(1 - 0.05/2, df=4)

N <- 5

for (i in 1:B) {

chow <- sample(chowPopulation, N)

se <- sd(chow)/sqrt(N)

interval <- c(mean(chow) - Q * se, mean(chow) + Q * se )

covered <- mean(chowPopulation) <= interval[2] & mean(chowPopulation) >= interval[1]

color <- ifelse(covered,1,2)

lines(interval, c(i,i),col=color)

}

Now the intervals are made bigger. This is because the t-distribution has fatter tails and therefore:

R

qt(1 - 0.05/2, df=4)

OUTPUT

[1] 2.776445is bigger than…

R

qnorm(1 - 0.05/2)

OUTPUT

[1] 1.959964…which makes the intervals larger and hence cover μX more frequently; in fact, about 95% of the time.

Connection Between Confidence Intervals and p-values

We recommend that in practice confidence intervals be reported instead of p-values. If for some reason you are required to provide p-values, or required that your results are significant at the 0.05 of 0.01 levels, confidence intervals do provide this information.

If we are talking about a t-test p-value, we are asking if differences as extreme as the one we observe, Ȳ - X̄, are likely when the difference between the population averages is actually equal to zero. So we can form a confidence interval with the observed difference. Instead of writing Ȳ - X̄ repeatedly, let’s define this difference as a new variable d ≡ Ȳ - X̄.

Suppose you use CLT and report

with

with