Content from Singularity: Getting started

Last updated on 2024-09-17

Estimated time: 50 minutes

Overview

Questions

- What is Singularity and why might I want to use it?

Objectives

- Understand what Singularity is and when you might want to use it.

- Undertake your first run of a simple Singularity container.

The episodes in this lesson will introduce you to the Singularity container platform and demonstrate how to set up and use Singularity.

This material is split into 2 parts:

Part I: Basic usage, working with images

- Singularity: Getting started: This introductory episode

Working with Singularity containers:

- The singularity cache: Why, where and how does Singularity cache images locally?

- Running commands within a Singularity container: How to run commands within a Singularity container.

- Working with files and Singularity containers: Moving files into a Singularity container; accessing files on the host from within a container.

- Using Docker images with Singularity: How to run Singularity containers from Docker images.

Part II: Creating your own images, running MPI parallel applications

- Preparing to build Singularity images: Getting started with the Docker Singularity container.

- Building Singularity images: Explaining how to build and share your own Singularity images.

- Running MPI parallel jobs using Singularity containers: Explaining how to run MPI parallel codes from within Singularity containers.

Work in progress…

This lesson is new material that is under ongoing development. We will introduce Singularity and demonstrate how to work with it. As the tools and best practices continue to develop, elements of this material are likely to evolve. We welcome any comments or suggestions on how the material can be improved or extended.

Singularity - Part I

What is Singularity?

Singularity is a container platform that allows software engineers and researchers to easily share their work with others by packaging and deploying their software applications in a portable and reproducible manner. When you download a Singularity container image, you essentially receive a virtual computer disk that contains all of the necessary software, libraries and configuration to run one or more applications or undertake a particular task, e.g. to support a specific research project. This saves you the time and effort of installing and configuring software on your own system or setting up a new computer from scratch, as you can simply run a Singularity container from the image and have a virtual environment that is identical to the one used by the person who created the image. Container platforms like Singularity provide a convenient and consistent way to access and run software and tools. Singularity is increasingly widely used in the research community for supporting research projects as it allows users to isolate their software environments from the host operating system and can simplify tasks such as running multiple experiments simultaneously.

You may be familiar with Docker, another container platform that is now used widely. If you are, you will see that in some ways, Singularity is similar to Docker. However, in others, particularly in the system’s architecture, it is fundamentally different. These differences mean that Singularity is particularly well-suited to running on distributed, High Performance Computing (HPC) infrastructure, as well as a Linux laptop or desktop!

Later in this material, when we come to look at building Singularity images ourselves, we will make use of Docker to provide an environment in which we can run Singularity with administrative privileges. In this context, some basic knowledge of Docker is strongly recommended. If you are covering this module independently, or as part of a course that hasn’t covered Docker, you can find an introduction to Docker in the “Reproducible Computational Environments Using Containers: Introduction to Docker” lesson.

System administrators will not, generally, install Docker on shared computing platforms such as lab desktops, research clusters or HPC platforms because the design of Docker presents potential security issues for shared platforms with multiple users. Singularity, on the other hand, can be run by end-users entirely within “user space”, that is, no special administrative privileges need to be assigned to a user in order for them to run and interact with containers on a platform where Singularity has been installed.

What is the relationship between Singularity, SingularityCE and Apptainer?

Singularity is open source and was initially developed within the research community. The company Sylabs was founded in 2018 to provide commercial support for Singularity. In May 2021, Sylabs “forked” the codebase to create a new project called SingularityCE (where CE means “Community Edition”). This in effect marks a common point from which two projects—SingularityCE and Singularity—developed. Sylabs continue to develop both the free, open source SingularityCE and a Pro/Enterprise edition of the software. In November 2021, the original open source Singularity project renamed itself to Apptainer and joined the Linux Foundation.

At the time of writing, in the context of the material covered in this lesson, Apptainer and Singularity are effectively interchangeable. If you are working on a platform that now has Apptainer installed, you might find that the only change you need to make when working through this material is to use the command apptainer instead of singularity. This course will continue to refer to Singularity until differences between the projects warrant choosing one project or the other for the course material.

Getting started with Singularity

Initially developed within the research community, Singularity is open source and the repository is currently available in the “The Next Generation of High Performance Computing” GitHub organisation. Part I of this Singularity material is intended to be undertaken on a remote platform where Singularity has been pre-installed.

If you’re attending a taught version of this course, you will be provided with access details for a remote platform made available to you for use for Part I of the Singularity material. This platform will have the Singularity software pre-installed.

Installing Singularity on your own laptop/desktop

If you have a Linux system on which you have administrator access and you would like to install Singularity on this system, some information is provided at the start of Part II of the Singularity material.

Sign in to the remote platform, with Singularity installed, that you’ve been provided with access to. Check that the singularity command is available in your terminal:
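
A minimal sketch of this check is given here. The guard around the call is an addition for illustration, so that a missing installation is reported with a clear message; on a platform with Singularity installed, only the singularity --version line matters.

```bash
# Report the installed Singularity version, or say clearly that it is missing.
if command -v singularity >/dev/null 2>&1; then
    singularity_found="yes"
    singularity --version
else
    singularity_found="no"
    echo "singularity was not found on your PATH" >&2
fi
```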

OUTPUT

singularity version 3.5.3

Depending on the version of Singularity installed on your system, you may see a different version. At the time of writing, v3.5.3 is the latest release of Singularity.

Images and containers

We’ll start with a brief note on the terminology used in this section of the course. We refer to both images and containers. What is the distinction between these two terms?

Images are bundles of files including an operating system, software and potentially data and other application-related files. They may sometimes be referred to as a disk image or container image and they may be stored in different ways, perhaps as a single file, or as a group of files. Either way, we refer to this file, or collection of files, as an image.

A container is a virtual environment that is based on an image. That is, the files, applications, tools, etc that are available within a running container are determined by the image that the container is started from. It may be possible to start multiple container instances from an image. You could, perhaps, consider an image to be a form of template from which running container instances can be started.

Getting an image and running a Singularity container

If you recall from learning about Docker, Docker images are formed of a set of layers that make up the complete image. When you pull a Docker image from Docker Hub, you see the different layers being downloaded to your system. They are stored in your local Docker repository on your system and you can see details of the available images using the docker command.

Singularity images are a little different. Singularity uses the Singularity Image Format (SIF) and images are provided as single SIF files (with a .sif filename extension). Singularity images can be pulled from Singularity Hub, a registry for container images. Singularity is also capable of running containers based on images pulled from Docker Hub and some other sources. We’ll look at accessing containers from Docker Hub later in the Singularity material.

Singularity Hub

Note that in addition to providing a repository that you can pull images from, Singularity Hub can also build Singularity images for you from a recipe - a configuration file defining the steps to build an image. We’ll look at recipes and building images later.

Let’s begin by creating a test directory, changing into it and pulling a test Hello World image from Singularity Hub:
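
The commands for this step might look like the sketch below. The image address shub://vsoch/hello-world is an assumption, since it is not stated in the text; substitute the address given for your course if it differs. The guard is only there so that the snippet reports a missing installation cleanly.

```bash
# Assumed image address on Singularity Hub; replace it if your course uses another.
image_uri="shub://vsoch/hello-world"

# Create the test directory and change into it.
mkdir -p test
cd test

# Pull the image and store it as hello-world.sif in the current directory.
if command -v singularity >/dev/null 2>&1; then
    singularity pull hello-world.sif "$image_uri" || echo "pull failed" >&2
else
    echo "singularity was not found on your PATH" >&2
fi
```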

OUTPUT

INFO: Downloading shub image

59.75 MiB / 59.75 MiB [===============================================================================================================] 100.00% 52.03 MiB/s 1s

What just happened?! We pulled a SIF image from Singularity Hub using the singularity pull command and directed it to store the image file using the name hello-world.sif in the current directory. If you run the ls command, you should see that the hello-world.sif file is now present in the current directory. This is our image and we can now run a container based on this image:
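
A sketch of the run step, assuming the image file is named hello-world.sif and sits in the current directory. The checks before the call are illustrative additions, not part of the lesson.

```bash
image="hello-world.sif"   # the image file pulled in the previous step

# Run the container's default run script, if the tool and the image are present.
if command -v singularity >/dev/null 2>&1 && [ -f "$image" ]; then
    singularity run "$image"
else
    echo "Skipping: singularity or $image is not available here" >&2
fi
```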

OUTPUT

RaawwWWWWWRRRR!! Avocado!

The above command ran the hello-world container from the image we downloaded from Singularity Hub and the resulting output was shown.

How did the container determine what to do when we ran it?! What did running the container actually do to result in the displayed output?

When you run a container from a Singularity image without using any additional command line arguments, the container runs the default run script that is embedded within the image. This is a shell script that can be used to run commands, tools or applications stored within the image on container startup. We can inspect the image’s run script using the singularity inspect command:
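
A sketch of the inspection, using the --runscript option of singularity inspect to print only the run script. The image name and the surrounding checks are assumptions for illustration.

```bash
image="hello-world.sif"   # the image file pulled earlier

# Print the run script embedded in the image.
if command -v singularity >/dev/null 2>&1 && [ -f "$image" ]; then
    singularity inspect --runscript "$image"
else
    echo "Skipping: singularity or $image is not available here" >&2
fi
```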

OUTPUT

#!/bin/sh

exec /bin/bash /rawr.sh

This shows us the script within the hello-world.sif image configured to run by default when we use the singularity run command.

That concludes this introductory Singularity episode. The next episode looks in more detail at running containers.

Key Points

- Singularity is another container platform and it is often used in cluster/HPC/research environments.

- Singularity has a different security model to other container platforms, one of the key reasons that it is well suited to HPC and cluster environments.

- Singularity has its own container image format (SIF).

- The singularity command can be used to pull images from Singularity Hub and run a container from an image file.

Content from The Singularity cache

Last updated on 2024-09-17

Estimated time: 10 minutes

Overview

Questions

- Why does Singularity use a local cache?

- Where does Singularity store images?

Objectives

- Learn about Singularity’s image cache.

- Learn how to manage Singularity images stored locally.

Singularity’s image cache

While Singularity doesn’t have a local image repository in the same way as Docker, it does cache downloaded image files. As we saw in the previous episode, images are simply .sif files stored on your local disk.

If you delete a local .sif image that you have pulled from a remote image repository and then pull it again, if the image is unchanged from the version you previously pulled, you will be given a copy of the image file from your local cache rather than the image being downloaded again from the remote source. This removes unnecessary network transfers and is particularly useful for large images which may take some time to transfer over the network. To demonstrate this, remove the hello-world.sif file stored in your test directory and then issue the pull command again:
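
These two steps might be sketched as follows, run from within the test directory. The image address is the same assumed one as for the first pull and is not stated in the text.

```bash
image_uri="shub://vsoch/hello-world"   # assumed address, as for the first pull

# Remove the local copy of the image file.
rm -f hello-world.sif

# Request the same image again; an unchanged image should come from the cache.
if command -v singularity >/dev/null 2>&1; then
    singularity pull hello-world.sif "$image_uri" || echo "pull failed" >&2
else
    echo "singularity was not found on your PATH" >&2
fi
```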

OUTPUT

INFO: Use image from cache

As we can see in the above output, the image has been returned from the cache and we don’t see the output that we saw previously showing the image being downloaded from Singularity Hub.

How do we know what is stored in the local cache? We can find out using the singularity cache command:
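
A sketch of the listing, using the list subcommand of singularity cache. Capturing the output in a variable is an illustrative choice so that the fallback message and the real summary are printed the same way.

```bash
# Summarise what is held in the local cache.
if command -v singularity >/dev/null 2>&1; then
    cache_summary="$(singularity cache list)"
else
    cache_summary="singularity was not found on your PATH"
fi
echo "$cache_summary"
```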

OUTPUT

There are 1 container file(s) using 62.65 MB and 0 oci blob file(s) using 0.00 kB of space

Total space used: 62.65 MB

This tells us how many container files are stored in the cache and how much disk space the cache is using but it doesn’t tell us what is actually being stored. To find out more information we can add the -v verbose flag to the list command:
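
The verbose form of the listing could be run as sketched here; the guard is again an illustrative addition.

```bash
# List the cache contents entry by entry using the verbose flag.
if command -v singularity >/dev/null 2>&1; then
    cache_details="$(singularity cache list -v)"
else
    cache_details="singularity was not found on your PATH"
fi
echo "$cache_details"
```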

OUTPUT

NAME DATE CREATED SIZE TYPE

hello-world_latest.sif 2020-04-03 13:20:44 62.65 MB shub

There are 1 container file(s) using 62.65 MB and 0 oci blob file(s) using 0.00 kB of space

Total space used: 62.65 MB

This provides us with some more useful information about the actual images stored in the cache. In the TYPE column we can see that our image type is shub because it’s a SIF image that has been pulled from Singularity Hub.

Cleaning the Singularity image cache

We can remove images from the cache using the singularity cache clean command. Running the command without any options will display a warning and ask you to confirm that you want to remove everything from your cache.

You can also remove specific images or all images of a particular type. Look at the output of singularity cache clean --help for more information.

Cache location

By default, Singularity uses $HOME/.singularity/cache as the location for the cache. You can change the location of the cache by setting the SINGULARITY_CACHEDIR environment variable to the cache location you want to use.
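
As a sketch, the variable can be set for the current shell session as shown here. The directory name is hypothetical; any location you can write to with enough free space would do.

```bash
# Hypothetical cache location under a temporary directory; pick any directory
# you can write to that has enough free space for your images.
export SINGULARITY_CACHEDIR="${TMPDIR:-/tmp}/singularity-cache-$(id -un)"

# Make sure the directory exists before Singularity tries to use it.
mkdir -p "$SINGULARITY_CACHEDIR"
echo "Singularity will cache images in: $SINGULARITY_CACHEDIR"
```

The setting only lasts for the current shell session. Adding the export line to a shell start-up file is the usual way to make such a variable persistent, which is a general shell convention rather than something specific to Singularity.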

Key Points

- Singularity caches downloaded images so that an unchanged image isn’t downloaded again when it is requested using the singularity pull command.

- You can free up space in the cache by removing all locally cached images or by specifying individual images to remove.

Content from Using Singularity containers to run commands

Last updated on 2024-09-17

Estimated time: 15 minutes

Overview

Questions

- How do I run different commands within a container?

- How do I access an interactive shell within a container?

Objectives

- Learn how to run different commands when starting a container.

- Learn how to open an interactive shell within a container environment.

Running specific commands within a container

We saw earlier that we can use the singularity inspect command to see the run script that a container is configured to run by default. What if we want to run a different command within a container?

If we know the path of an executable that we want to run within a container, we can use the singularity exec command. For example, using the hello-world.sif container that we’ve already pulled from Singularity Hub, we can run the following within the test directory where the hello-world.sif file is located:
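
A sketch of such a call, passing /bin/echo and its input after the image name. The checks before the call are illustrative additions.

```bash
image="hello-world.sif"   # the image file in the test directory

if command -v singularity >/dev/null 2>&1 && [ -f "$image" ]; then
    # Start a container and run /bin/echo inside it instead of the run script.
    singularity exec "$image" /bin/echo 'Hello World!'
else
    echo "Skipping: singularity or $image is not available here" >&2
fi
```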

OUTPUT

Hello World!

Here we see that a container has been started from the hello-world.sif image and the /bin/echo command has been run within the container, passing the input Hello World!. The command has echoed the provided input to the console and the container has terminated.

Note that the use of singularity exec has overridden any run script set within the image metadata and the command that we specified as an argument to singularity exec has been run instead.

Basic exercise: Running a different command within the “hello-world” container

Can you run a container based on the hello-world.sif image that prints the current date and time?

The difference between singularity run and singularity exec

Above we used the singularity exec command. In earlier episodes of this course we used singularity run. To clarify, the difference between these two commands is:

- singularity run: This will run the default command set for containers based on the specified image. This default command is set within the image metadata when the image is built (we’ll see more about this in later episodes). You do not specify a command to run when using singularity run, you simply specify the image file name. As we saw earlier, you can use the singularity inspect command to see what command is run by default when starting a new container based on an image.

- singularity exec: This will start a container based on the specified image and run the command provided on the command line following singularity exec <image file name>. This will override any default command specified within the image metadata that would otherwise be run if you used singularity run.

Opening an interactive shell within a container

If you want to open an interactive shell within a container, Singularity provides the singularity shell command. Again, using the hello-world.sif image, and within our test directory, we can run a shell within a container from the hello-world image:
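
A sketch of opening the shell. Because the command is interactive, this version only starts it when standard input is a terminal; that check, like the others, is an illustrative addition.

```bash
image="hello-world.sif"   # the image file in the test directory

if command -v singularity >/dev/null 2>&1 && [ -f "$image" ] && [ -t 0 ]; then
    # Opens an interactive shell in a new container; type exit to leave it.
    singularity shell "$image"
else
    echo "Skipping: needs singularity, $image and an interactive terminal" >&2
fi
```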

OUTPUT

Singularity> whoami

[<your username>]

Singularity> ls

hello-world.sif

Singularity>

As shown above, we have opened a shell in a new container started from the hello-world.sif image. Note that the shell prompt has changed to show we are now within the Singularity container.

Discussion: Running a shell inside a Singularity container

Q: What do you notice about the output of the above commands entered within the Singularity container shell?

Q: Does this differ from what you might see within a Docker container?

Use the exit command to exit from the container shell.

Key Points

- The singularity exec command is an alternative to singularity run that allows you to start a container running a specific command.

- The singularity shell command can be used to start a container and run an interactive shell within it.

Content from Files in Singularity containers

Last updated on 2024-09-17

Estimated time: 20 minutes

Overview

Questions

- How do I make data available in a Singularity container?

- What data is made available by default in a Singularity container?

Objectives

- Understand that some data from the host system is usually made available by default within a container

- Learn more about how Singularity handles users and binds directories from the host filesystem.

The way in which user accounts and access permissions are handled in Singularity containers is very different from that in Docker (where you effectively always have superuser/root access). When running a Singularity container, you only have the same permissions to access files as the user you are running as on the host system.

In this episode we’ll look at working with files in the context of Singularity containers and how this links with Singularity’s approach to users and permissions within containers.

Users within a Singularity container

The first thing to note is that when you ran whoami within the container shell you started at the end of the previous episode, you should have seen the username that you were signed in as on the host system when you ran the container.

For example, if my username were jc1000, I’d expect to see the following:

OUTPUT

Singularity> whoami

jc1000

But hang on! I downloaded the standard, public version of the hello-world.sif image from Singularity Hub. I haven’t customised it in any way. How is it configured with my own user details?!

If you have any familiarity with Linux system administration, you may be aware that in Linux, users and their Unix groups are configured in the /etc/passwd and /etc/group files respectively. In order for the shell within the container to know of my user, the relevant user information needs to be available within these files within the container.

Assuming this feature is enabled within the installation of Singularity on your system, when the container is started, Singularity appends the relevant user and group lines from the host system to the /etc/passwd and /etc/group files within the container [1].

This means that the host system can effectively ensure that you cannot access/modify/delete any data you should not be able to on the host system and you cannot run anything that you would not have permission to run on the host system since you are restricted to the same user permissions within the container as you are on the host system.

Files and directories within a Singularity container

Singularity also binds some directories from the host system where you are running the singularity command into the container that you’re starting. Note that this bind process is not copying files into the running container, it is making an existing directory on the host system visible and accessible within the container environment. If you write files to this directory within the running container, when the container shuts down, those changes will persist in the relevant location on the host system.

There is a default configuration of which files and directories are bound into the container but ultimate control of how things are set up on the system where you are running Singularity is determined by the system administrator. As a result, this section provides an overview but you may find that things are a little different on the system that you’re running on.

One directory that is likely to be accessible within a container that you start is your home directory. You may also find that the directory from which you issued the singularity command (the current working directory) is also mapped.

The mapping of file content and directories from a host system into a Singularity container is illustrated in the example below showing a subset of the directories on the host Linux system and in a Singularity container:

OUTPUT

Host system:                                                      Singularity container:
-------------                                                     ----------------------
/                                                                 /
├── bin                                                           ├── bin
├── etc                                                           ├── etc
│   ├── ...                                                       │   ├── ...
│   ├── group  ─> user's group added to group file in container ─>│   ├── group
│   └── passwd ──> user info added to passwd file in container ──>│   └── passwd
├── home                                                          ├── usr
│   └── jc1000 ───> user home directory made available ──> ─┐     ├── sbin
├── usr                 in container via bind mount         │     ├── home
├── sbin                                                    └────────>└── jc1000
└── ...                                                           └── ...

Questions and exercises: Files in Singularity containers

Q1: What do you notice about the ownership of files in a container started from the hello-world image? (e.g. take a look at the ownership of files in the root directory (/))

Exercise 1: In this container, try editing (for example using the editor vi which should be available in the container) the /rawr.sh file. What do you notice?

If you’re not familiar with vi there are many quick reference pages online showing the main commands for using the editor.

Exercise 2: In your home directory within the container shell, try and create a simple text file. Is it possible to do this? If so, why? If not, why not?! If you can successfully create a file, what happens to it when you exit the shell and the container shuts down?

A1: Use the ls -l command to see a detailed file listing including file ownership and permission details. You should see that most of the files in the / directory are owned by root, as you’d probably expect on any Linux system. If you look at the files in your home directory, they should be owned by you.

A Ex1: We’ve already seen from the previous answer that the files in / are owned by root so we wouldn’t expect to be able to edit them if we’re not the root user. However, if you tried to edit /rawr.sh you probably saw that the file was read only and, if you tried for example to delete the file you would have seen an error similar to the following: cannot remove '/rawr.sh': Read-only file system. This tells us something else about the filesystem. It’s not just that we don’t have permission to delete the file, the filesystem itself is read-only so even the root user wouldn’t be able to edit/delete this file. We’ll look at this in more detail shortly.

A Ex2: Within your home directory, you should be able to successfully create a file. Since you’re seeing your home directory on the host system which has been bound into the container, when you exit and the container shuts down, the file that you created within the container should still be present when you look at your home directory on the host system.

Binding additional host system directories to the container

You will sometimes need to bind additional host system directories into a container you are using over and above those bound by default. For example:

- There may be a shared dataset in a shared location that you need access to in the container

- You may require executables and software libraries in the container

The -B option to the singularity command is used to specify additional binds. For example, to bind the /work/z19/shared directory into a container you could use (note this directory is unlikely to exist on the host system you are using so you’ll need to test this using a different directory):
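
A sketch of such a bind. It uses singularity exec with ls rather than an interactive shell so that it can run unattended; that choice, the image name and the checks are assumptions for illustration. Change host_dir to a directory that exists on your system.

```bash
image="hello-world.sif"
host_dir="/work/z19/shared"   # example path from the text; use one that exists for you

if command -v singularity >/dev/null 2>&1 && [ -f "$image" ] && [ -d "$host_dir" ]; then
    # Bind host_dir at the same path inside the container and list its contents.
    singularity exec -B "$host_dir" "$image" ls "$host_dir"
else
    echo "Skipping: singularity, $image or $host_dir is not available here" >&2
fi
```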

OUTPUT

CP2K-regtest cube eleanor image256x192.pgm kevin pblas q-e-qe-6.7

ebe evince.simg image512x384.pgm low_priority.slurm pblas.tar.gz q-qe

Q1529568 edge192x128.pgm extrae image768x1152.pgm mkdir petsc regtest-ls-rtp_forCray

adrianj edge256x192.pgm gnuplot-5.4.1.tar.gz image768x768.pgm moose.job petsc-hypre udunits-2.2.28.tar.gz

antlr-2.7.7.tar.gz edge512x384.pgm hj job-defmpi-cpe-21.03-robust mrb4cab petsc-hypre-cpe21.03 xios-2.5

cdo-archer2.sif edge768x768.pgm image192x128.pgm jsindt paraver petsc-hypre-cpe21.03-gcc10.2.0

Note that, by default, a bind is mounted at the same path in the container as on the host system. You can also specify where a host directory is mounted in the container by separating the host path from the container path by a colon (:) in the option:

BASH

$ singularity shell -B /work/z19/shared:/shared-data hello-world.sif

Singularity> ls /shared-data

OUTPUT

CP2K-regtest cube eleanor image256x192.pgm kevin pblas q-e-qe-6.7

ebe evince.simg image512x384.pgm low_priority.slurm pblas.tar.gz q-qe

Q1529568 edge192x128.pgm extrae image768x1152.pgm mkdir petsc regtest-ls-rtp_forCray

adrianj edge256x192.pgm gnuplot-5.4.1.tar.gz image768x768.pgm moose.job petsc-hypre udunits-2.2.28.tar.gz

antlr-2.7.7.tar.gz edge512x384.pgm hj job-defmpi-cpe-21.03-robust mrb4cab petsc-hypre-cpe21.03 xios-2.5

cdo-archer2.sif edge768x768.pgm image192x128.pgm jsindt paraver petsc-hypre-cpe21.03-gcc10.2.0

You can also specify multiple binds to -B by separating them by commas (,).
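
As a sketch, two binds can be combined in one -B option as shown here. Both host paths and both container paths are placeholders chosen for illustration, and whether a container path that does not already exist in the image can be used as a mount point depends on how Singularity is configured on your system.

```bash
image="hello-world.sif"

# Two host:container pairs joined by a comma. All four paths are placeholders.
binds="/work/z19/shared:/shared-data,/tmp:/scratch"

if command -v singularity >/dev/null 2>&1 && [ -f "$image" ] && [ -d /work/z19/shared ]; then
    # List both bind targets from inside the container.
    singularity exec -B "$binds" "$image" ls /shared-data /scratch
else
    echo "Skipping: singularity, $image or the host directories are not available" >&2
fi
```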

You can also copy data into a container image at build time if there is some static data required in the image. We cover this later in the section on building Singularity containers.

References

[1] Gregory M. Kurtzer, Containers for Science, Reproducibility and Mobility: Singularity P2. Intel HPC Developer Conference, 2017. Available at: https://www.intel.com/content/dam/www/public/us/en/documents/presentation/hpc-containers-singularity-advanced.pdf

Key Points

- Your current directory and home directory are usually available by default in a container.

- You have the same username and permissions in a container as on the host system.

- You can specify additional host system directories to be available in the container.

Content from Using Docker images with Singularity

Last updated on 2024-09-17

Estimated time: 15 minutes

Overview

Questions

- How do I use Docker images with Singularity?

Objectives

- Learn how to run Singularity containers based on Docker images.

Using Docker images with Singularity

Singularity can also start containers directly from Docker images, opening up access to a huge number of existing container images available on Docker Hub and other registries.

While Singularity doesn’t actually run a container using the Docker image (it first converts it to a format suitable for use by Singularity), the approach used provides a seamless experience for the end user. When you direct Singularity to run a container based on a Docker image, Singularity pulls the slices or layers that make up the Docker image and converts them into a single-file Singularity SIF image.

For example, moving on from the simple Hello World examples that we’ve looked at so far, let’s pull one of the official Docker Python images. We’ll use the image with the tag 3.9.6-slim-buster which has Python 3.9.6 installed on Debian’s Buster (v10) Linux distribution:
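
A sketch of the pull, assuming the image is the official python repository on Docker Hub addressed through a docker:// URI and stored locally as python-3.9.6.sif. The guard is an illustrative addition.

```bash
# Official Python image on Docker Hub with the tag named in the text.
docker_uri="docker://python:3.9.6-slim-buster"

# Pull the Docker image and convert it into a single SIF file.
if command -v singularity >/dev/null 2>&1; then
    singularity pull python-3.9.6.sif "$docker_uri" || echo "pull failed" >&2
else
    echo "singularity was not found on your PATH" >&2
fi
```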

OUTPUT

INFO: Converting OCI blobs to SIF format

INFO: Starting build...

Getting image source signatures

Copying blob 33847f680f63 done

Copying blob b693dfa28d38 done

Copying blob ef8f1a8cefd1 done

Copying blob 248d7d56b4a7 done

Copying blob 478d2dfa1a8d done

Copying config c7d70af7c3 done

Writing manifest to image destination

Storing signatures

2021/07/27 17:23:38 info unpack layer: sha256:33847f680f63fb1b343a9fc782e267b5abdbdb50d65d4b9bd2a136291d67cf75

2021/07/27 17:23:40 info unpack layer: sha256:b693dfa28d38fd92288f84a9e7ffeba93eba5caff2c1b7d9fe3385b6dd972b5d

2021/07/27 17:23:40 info unpack layer: sha256:ef8f1a8cefd144b4ee4871a7d0d9e34f67c8c266f516c221e6d20bca001ce2a5

2021/07/27 17:23:40 info unpack layer: sha256:248d7d56b4a792ca7bdfe866fde773a9cf2028f973216160323684ceabb36451

2021/07/27 17:23:40 info unpack layer: sha256:478d2dfa1a8d7fc4d9957aca29ae4f4187bc2e5365400a842aaefce8b01c2658

INFO: Creating SIF file...

Note how we see Singularity saying that it’s “Converting OCI blobs to SIF format”. We then see the layers of the Docker image being downloaded and unpacked and written into a single SIF file. Once the process is complete, we should see the python-3.9.6.sif image file in the current directory.

We can now run a container from this image as we would with any other Singularity image.

Running the Python 3.9.6 image that we just pulled from Docker Hub

Try running the Python 3.9.6 image. What happens?

Try running some simple Python statements…

This should put you straight into a Python interactive shell within the running container:

Python 3.9.6 (default, Jul 22 2021, 15:24:21)

[GCC 8.3.0] on linux

Type "help", "copyright", "credits" or "license" for more information.

>>>

Now try running some simple Python statements:

In addition to running a container and having it run the default run script, you could also start a container running a shell in case you want to undertake any configuration prior to running Python. This is covered in the following exercise:

Open a shell within a Python container

Try to run a shell within a Singularity container based on the python-3.9.6.sif image. That is, run a container that opens a shell rather than the default Python interactive console as we saw above. See if you can find more than one way to achieve this.

Within the shell, try starting the Python interactive console and running some Python commands.

Recall from the earlier material that we can use the singularity shell command to open a shell within a container. To open a regular shell within a container based on the python-3.9.6.sif image, we can therefore simply run:
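
A sketch of this step. The shell is only started when standard input is a terminal, since the command is interactive; that check and the others are illustrative additions.

```bash
image="python-3.9.6.sif"   # the image converted from Docker Hub

if command -v singularity >/dev/null 2>&1 && [ -f "$image" ] && [ -t 0 ]; then
    # Opens an interactive shell instead of the default Python console.
    singularity shell "$image"
else
    echo "Skipping: needs singularity, $image and an interactive terminal" >&2
fi
```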

OUTPUT

Singularity> echo $SHELL

/bin/bash

Singularity> cat /etc/issue

Debian GNU/Linux 10 \n \l

Singularity> python

Python 3.9.6 (default, Jul 22 2021, 15:24:21)

[GCC 8.3.0] on linux

Type "help", "copyright", "credits" or "license" for more information.

>>> print('Hello World!')

Hello World!

>>> exit()

Singularity> exit

$

It is also possible to use the singularity exec command to run an executable within a container. We could, therefore, use the exec command to run /bin/bash:
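
A sketch of the exec route to a shell, under the same assumptions as before about the image name and with the same illustrative checks.

```bash
image="python-3.9.6.sif"   # the image converted from Docker Hub

if command -v singularity >/dev/null 2>&1 && [ -f "$image" ] && [ -t 0 ]; then
    # Run bash as the command inside the container; type exit to leave it.
    singularity exec "$image" /bin/bash
else
    echo "Skipping: needs singularity, $image and an interactive terminal" >&2
fi
```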

OUTPUT

Singularity> echo $SHELL

/bin/bash

You can run the Python console from your container shell simply by running the python command.

This concludes the fifth episode and Part I of the Singularity material. Part II contains a further three episodes where we’ll look at creating your own images and then more advanced use of containers for running MPI parallel applications.

References

\[1\] Gregory M. Kurtzer, Containers for Science, Reproducibility and Mobility: Singularity P2. Intel HPC Developer Conference, 2017. Available at: https://www.intel.com/content/dam/www/public/us/en/documents/presentation/hpc-containers-singularity-advanced.pdf

Key Points

- Singularity can start a container from a Docker image which can be pulled directly from Docker Hub.

Content from Preparing to build Singularity images

Last updated on 2024-09-17 | Edit this page

Estimated time: 35 minutes

Overview

Questions

- What environment do I need to build a Singularity image and how do I set it up?

Objectives

- Understand how the Docker Singularity image provides an environment for building Singularity images.

- Understand different ways to run containers based on the Docker Singularity image.

Singularity - Part II

Brief recap

In the five episodes covering Part I of this Singularity material we’ve seen how Singularity can be used on a computing platform where you don’t have any administrative privileges. The software was pre-installed and it was possible to work with existing images such as Singularity image files already stored on the platform or images obtained from a remote image repository such as Singularity Hub or Docker Hub.

It is clear that between Singularity Hub and Docker Hub there is a huge array of images available, pre-configured with a wide range of software applications, tools and services. But what if you want to create your own images or customise existing images?

In this first of three episodes in Part II of the Singularity material, we’ll look at preparing to build Singularity images.

Preparing to use Singularity for building images

So far you’ve been able to work with Singularity from your own user account as a non-privileged user. This part of the Singularity material requires that you use Singularity in an environment where you have administrative (root) access. While it is possible to build Singularity containers without root access, it is highly recommended that you do this as the root user, as highlighted in this section of the Singularity documentation. Bear in mind that the system that you use to build containers doesn’t have to be the system where you intend to run the containers. If, for example, you are intending to build a container that you can subsequently run on a Linux-based cluster, you could build the container on your own Linux-based desktop or laptop computer. You could then transfer the built image directly to the target platform or upload it to an image repository and pull it onto the target platform from this repository.

There are three different options for accessing a suitable environment to undertake the material in this part of the course:

- Run Singularity from within a Docker container - this will enable you to have the required privileges to build images

- Install Singularity locally on a system where you have administrative access

- Use Singularity on a system where it is already pre-installed and you have administrative (root) access

We’ll focus on the first option in this part of the course - running singularity from within a Docker container. If you would like to install Singularity directly on your system, see the box below for some further pointers. However, please note that the installation process is an advanced task that is beyond the scope of this course so we won’t be covering this.

Installing Singularity on your local system (optional) \[Advanced task\]

If you are running Linux and would like to install Singularity locally on your system, the source code is provided via the Next Generation of High Performance Computing (HPCng) community’s Singularity repository. See the releases here. You will need to install various dependencies on your system and then build Singularity from source code.

If you are not familiar with building applications from source code, it is strongly recommended that you use the Docker Singularity image, as described below in the “Getting started with the Docker Singularity image” section rather than attempting to build and install Singularity yourself. The installation process is an advanced task that is beyond the scope of this session.

However, if you have Linux systems knowledge and would like to attempt a local install of Singularity, you can find details in the INSTALL.md file within the Singularity repository that explains how to install the prerequisites and build and install the software. Singularity is written in the Go programming language and Go is the main dependency that you’ll need to install on your system. The process of installing Go and any other requirements is detailed in the INSTALL.md file.

Note

If you do not have access to a system with Docker installed, or to a Linux system where you can build and install Singularity, but you do have administrative privileges on another system, you could look at installing a virtualisation tool such as VirtualBox on which you could run a Linux Virtual Machine (VM) image. Within the Linux VM image, you will be able to install Singularity. Again, this is beyond the scope of the course.

If you are not able to access/run Singularity yourself on a system where you have administrative privileges, you can still follow through this material as it is being taught (or read through it in your own time if you’re not participating in a taught version of the course) since it will be helpful to have an understanding of how Singularity images can be built.

You could also attempt to follow this section of the lesson without using root and instead using the singularity command’s --fakeroot option. However, you may encounter issues with permissions when trying to build images and run your containers and this is why running the commands as root is strongly recommended and is the approach described in this lesson.

Getting started with the Docker Singularity image

The Singularity Docker image is available from Quay.io.

Familiarise yourself with the Docker Singularity image

Using your previously acquired Docker knowledge, get the Singularity image for v3.5.3 and ensure that you can run a Docker container using this image. For this exercise, we recommend using the image with the v3.5.3-slim tag since it’s a much smaller image.

- Create a directory (e.g. $HOME/singularity_data) on your host machine that you can use for storage of definition files (we’ll introduce these shortly) and generated image files.

This directory should be bind mounted into the Docker container at the location /home/singularity every time you run it - this will give you a location in which to store built images so that they are available on the host system once the container exits. (Take a look at the -v switch to the docker run command.)

Hint: To be able to build an image using the Docker Singularity container, you’ll need to add the --privileged switch to your docker command line.

Hint: If you want to run a shell within the Docker Singularity container, you’ll need to override the entrypoint to tell the container to run /bin/sh - take a look at Docker’s --entrypoint switch.

Questions / Exercises:

- Can you run a container from the Docker Singularity image? What is happening when you run the container?

- Can you run an interactive /bin/sh shell in the Docker Singularity container?

- Can you run an interactive Singularity shell in a Singularity container, within the Docker Singularity container?!

Answers:

- Can you run a container from the Docker Singularity image? What is happening when you run the container?

The name/tag of the Docker Singularity image we’ll be using is:

quay.io/singularity/singularity:v3.5.3-slim

Having a bound directory from the host system accessible within your running Docker Singularity container will give you somewhere to place created Singularity images so that they are accessible on the host system after the container exits. Begin by changing into the directory that you created above for storing your definition files and built images (e.g. $HOME/singularity_data).
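A minimal sketch of this setup step, assuming the example directory name $HOME/singularity_data used above (any writable directory on the host would do):

```shell
# Directory on the host for definition files and built images.
# The name is the example used in this lesson; choose your own if you prefer.
DATA_DIR="${HOME}/singularity_data"

# -p creates the directory if needed and does nothing if it already exists
mkdir -p "${DATA_DIR}"

# Work from inside it so that ${PWD} in the docker commands points here
cd "${DATA_DIR}"
pwd
```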

Running a Docker container from the image and binding the current directory to /home/singularity within the container can be achieved as follows:

docker run --privileged --rm -v ${PWD}:/home/singularity quay.io/singularity/singularity:v3.5.3-slim

Note that the image is configured to run the singularity command by default. So, when you run a container from it with no arguments, you see the singularity help output as if you had Singularity installed locally and had typed singularity on the command line.

To run a Singularity command, such as

singularity cache list, within the docker container

directly from the host system’s terminal you’d enter:

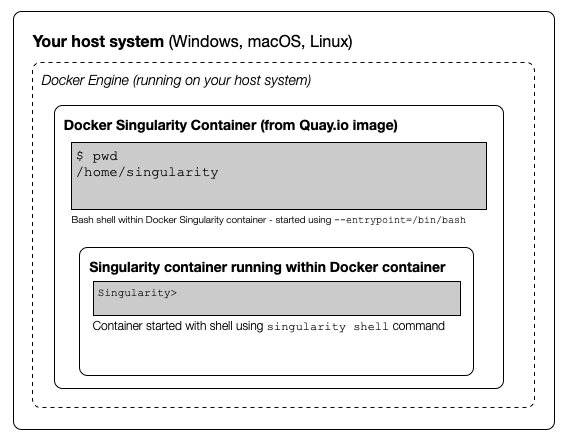

docker run --privileged --rm -v ${PWD}:/home/singularity quay.io/singularity/singularity:v3.5.3-slim cache listThe following diagram shows how the Docker Singularity image is being used to run a container on your host system and how a Singularity container can, in turn, be started within the Docker container:

- Can you run an interactive shell in the Docker Singularity container?

To start a shell within the Singularity Docker container where you can then run the singularity command directly:

docker run -it --entrypoint=/bin/sh --privileged --rm -v ${PWD}:/home/singularity quay.io/singularity/singularity:v3.5.3-slim

Here we use the --entrypoint switch to the docker command to override the default behaviour when starting the container: instead of running the singularity command directly, we run a ‘sh’ shell. We also add the -it switch to provide an interactive terminal connection.

- Can you run an interactive Singularity shell in a Singularity container, within the Docker Singularity container?!

As shown in the diagram above, you can do this. It is necessary to run singularity shell <image file name> within the Docker Singularity container. You would use a command similar to the following (assuming that my_test_image.sif is in the current directory where you run this command):

docker run --rm -it --privileged -v ${PWD}:/home/singularity quay.io/singularity/singularity:v3.5.3-slim shell --contain /home/singularity/my_test_image.sif

You may notice there’s a flag being passed to singularity shell (--contain; -c is the short form and also works). What is this doing? When running a singularity container, you may remember that we highlighted that some key files/directories from the host system are mapped into containers by default when you start them. The configuration in the Docker Singularity container attempts to mount the file /etc/localtime into the Singularity container. However, no timezone configuration is present in the Docker Singularity container, so this file doesn’t exist and the mount fails with an error. --contain prevents the default mounting of some key files/directories into the container and so prevents this error from occurring. Later in this material, there’s an example of how to rectify the issue by creating a timezone configuration in the Docker Singularity container so that the --contain switch is no longer needed.

Summary / Comments:

You may choose to:

- open a shell within the Docker image so you can work at a command prompt and run the singularity command directly

- use the docker run command to run a new container instance every time you want to run the singularity command (the Docker Singularity image is configured with the singularity command as its entrypoint).

Either option is fine for this section of the material.

To make things easier to read in the remainder of the material, command examples will use the singularity command directly, e.g. singularity cache list. If you’re running a shell in the Docker Singularity container, you can enter the commands as they appear. If you’re using the container’s default run behaviour and running a container instance for each run of the command, you’ll need to replace singularity with

docker run --privileged -v ${PWD}:/home/singularity quay.io/singularity/singularity:v3.5.3-slim

or similar.

This can be a little cumbersome to work with. However, if you’re using Linux or macOS on your host system, you can add a command alias to alias the command singularity on your host system to run the Docker Singularity container, e.g. (for bash shells - syntax for other shells varies):

alias singularity='docker run --privileged -v ${PWD}:/home/singularity quay.io/singularity/singularity:v3.5.3-slim'

This means you’ll only have to type singularity at the command line as shown in the examples throughout this section of the material.
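In bash, aliases are not expanded in non-interactive shells by default, so an alias will not help inside a script. As an alternative, here is a sketch of a shell function that does the same job. The function body is an assumption based on the docker run commands shown earlier in this episode (with --rm added so that stopped containers are cleaned up); it is not something provided by the Docker Singularity image itself.

```shell
# Wrapper function: forwards all of its arguments to the singularity
# command inside the Docker Singularity container.
singularity() {
    docker run --privileged --rm \
        -v "${PWD}:/home/singularity" \
        quay.io/singularity/singularity:v3.5.3-slim "$@"
}

# Confirm that the shell now resolves `singularity` to the function
type singularity
```

With this in place, singularity cache list runs the docker command with cache list appended. File paths passed to the command are resolved inside the container, so files in the current host directory appear under /home/singularity.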

Key Points

- A Docker image is provided to run Singularity - this avoids the need to have a local Singularity installation on your system.

- The Docker Singularity image can be used to build containers on Linux, macOS and Windows.

- You can also run Singularity containers within the Docker Singularity image.

Content from Building Singularity images

Last updated on 2024-09-17 | Edit this page

Estimated time: 60 minutes

Overview

Questions

- How do I create my own Singularity images?

Objectives

- Understand the different Singularity container file formats.

- Understand how to build and share your own Singularity containers.

Building Singularity images

Introduction

As a platform that is widely used in the scientific/research software and HPC communities, Singularity provides great support for reproducibility. If you build a Singularity image for some scientific software, it’s likely that you and/or others will want to be able to reproduce exactly the same environment again. Maybe you want to verify the results of the code or provide a means that others can use to verify the results to support a paper or report. Maybe you’re making a tool available to others and want to ensure that they have exactly the right version/configuration of the code.

Similarly to Docker and many other modern software tools, Singularity follows the “Configuration as code” approach and a container configuration can be stored in a file which can then be committed to your version control system alongside other code. Assuming it is suitably configured, this file can then be used by you or other individuals (or by automated build tools) to reproduce a container with the same configuration at some point in the future.

Different approaches to building images

There are various approaches to building Singularity images. We highlight two different approaches here and focus on one of them:

- Building within a sandbox: You can build a container interactively within a sandbox environment. This means you get a shell within the container environment and install and configure packages and code as you wish before exiting the sandbox and converting it into a container image.

- Building from a Singularity Definition File: This is Singularity’s equivalent to building a Docker container from a Dockerfile and we’ll discuss this approach in this section.
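For comparison, the sandbox workflow described in the first bullet might look like the sketch below. The directory name, image name and function name are hypothetical, and the commands assume that you have root access (or are working in a shell inside the Docker Singularity container).

```shell
SANDBOX_DIR=my_sandbox        # hypothetical directory for the writable container tree
IMAGE=my_sandbox_image.sif    # hypothetical name for the final image

build_from_sandbox() {
    # 1. Unpack a base image into a writable directory tree
    singularity build --sandbox "${SANDBOX_DIR}" docker://ubuntu:20.04 || return 1
    # 2. Open a shell in the sandbox; changes made here persist in the directory
    singularity shell --writable "${SANDBOX_DIR}"
    # 3. Convert the configured sandbox into a SIF image file
    singularity build "${IMAGE}" "${SANDBOX_DIR}"
}
```

Calling build_from_sandbox runs the three steps in order. Nothing typed in the interactive shell in step 2 is recorded anywhere, which is the reproducibility problem that definition files address.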

You can take a look at Singularity’s “Build a Container” documentation for more details on different approaches to building containers.

Why look at Singularity Definition Files?

Why do you think we might be looking at the definition file approach here rather than the sandbox approach?

The sandbox approach is great for prototyping and testing out an image configuration but it doesn’t provide the best support for our ultimate goal of reproducibility. If you spend time sitting at your terminal in front of a shell typing different commands to add configuration, maybe you realise you made a mistake so you undo one piece of configuration and change it. This goes on until you have your completed, working configuration but there’s no explicit record of exactly what you did to create that configuration.

Say your container image file gets deleted by accident, or someone else wants to create an equivalent image to test something. How will they do this and know for sure that they have the same configuration that you had? With a definition file, the configuration steps are explicitly defined and can be easily stored (and re-run).

Definition files are small text files while container files may be very large, multi-gigabyte files that are difficult and time consuming to move around. This makes definition files ideal for storing in a version control system along with their revisions.

Creating a Singularity Definition File

A Singularity Definition File is a text file that contains a series of statements that are used to create a container image. In line with the configuration as code approach mentioned above, the definition file can be stored in your code repository alongside your application code and used to create a reproducible image. This means that for a given commit in your repository, the version of the definition file present at that commit can be used to reproduce a container with a known state. It was pointed out earlier in the course, when covering Docker, that this property also applies for Dockerfiles.

We’ll now look at a very simple example of a definition file:

BASH

Bootstrap: docker

From: ubuntu:20.04

%post

apt-get -y update && apt-get install -y python3

%runscript

python3 -c 'print("Hello World! Hello from our custom Singularity image!")'

A definition file has a number of optional sections, specified using the % prefix, that are used to define or undertake different configuration during different stages of the image build process. You can find full details in Singularity’s Definition Files documentation. In our very simple example here, we only use the %post and %runscript sections.

Let’s step through this definition file and look at the lines in more detail:

These first two lines define where to bootstrap our image from. Why can’t we just put some application binaries into a blank image? Any applications or tools that we want to run will need to interact with standard system libraries and potentially a wide range of other libraries and tools. These need to be available within the image and we therefore need some sort of operating system as the basis for our image. The most straightforward way to achieve this is to start from an existing base image containing an operating system. In this case, we’re going to start from a minimal Ubuntu 20.04 Linux Docker image. Note that we’re using a Docker image as the basis for creating a Singularity image. This demonstrates the flexibility in being able to start from different types of images when creating a new Singularity image.

The Bootstrap: docker line is similar to prefixing an image path with docker:// when using, for example, the singularity pull command. A range of different bootstrap options are supported. From: ubuntu:20.04 says that we want to use the ubuntu image with the tag 20.04 from Docker Hub.

Next we have the %post section of the definition file:

In this section of the file we can do tasks such as package installation, pulling data files from remote locations and undertaking local configuration within the image. The commands that appear in this section are standard shell commands and they are run within the context of our new container image. So, in the case of this example, these commands are being run within the context of a minimal Ubuntu 20.04 image that initially has only a very small set of core packages installed.

Here we use Ubuntu’s package manager to update our package indexes and then install the python3 package along with any required dependencies. The -y switches are used to accept, by default, interactive prompts that might appear asking you to confirm package updates or installation. This is required because our definition file should be able to run in an unattended, non-interactive environment.

Finally we have the %runscript section:

This section is used to define a script that should be run when a container is started based on this image using the singularity run command. In this simple example we use python3 to print out some text to the console.

We can now save the contents of the simple definition file shown above to a file and build an image based on it using the singularity build command. In the case of this example, the definition file has been named my_test_image.def. (Note that the instructions here assume you’ve bound the image output directory you created to the /home/singularity directory in your Docker Singularity container, as explained in the “Getting started with the Docker Singularity image” section above.)
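One way to carry out this step is sketched below. It writes the definition file with a shell here-document and then builds the image. It assumes that you are working in a shell inside the Docker Singularity container (or on a system where you can run singularity as root), in the directory where you want the files to be created. The definition uses python3 throughout, matching the build output shown later in this section.

```shell
# Write the definition file. Quoting 'EOF' stops the shell from
# expanding anything inside the here-document.
cat > my_test_image.def <<'EOF'
Bootstrap: docker
From: ubuntu:20.04

%post
    apt-get -y update && apt-get install -y python3

%runscript
    python3 -c 'print("Hello World! Hello from our custom Singularity image!")'
EOF

# Build the image only if the singularity command is available here
if command -v singularity >/dev/null 2>&1; then
    singularity build my_test_image.sif my_test_image.def
else
    echo "singularity not found: run the build inside the Docker Singularity container"
fi
```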

Recall from the details at the start of this section that if you are running your command from the host system command line, running an instance of a Docker container for each run of the command, your command will look something like this:

BASH

$ docker run --privileged --rm -v ${PWD}:/home/singularity quay.io/singularity/singularity:v3.5.3-slim build /home/singularity/my_test_image.sif /home/singularity/my_test_image.def

The above command requests the building of an image based on the my_test_image.def file with the resulting image saved to the my_test_image.sif file. Note that you will need to prefix the command with sudo if you’re running a locally installed version of Singularity and not running via Docker because it is necessary to have administrative privileges to build the image. You should see output similar to the following:

OUTPUT

INFO: Starting build...

Getting image source signatures

Copying blob d51af753c3d3 skipped: already exists

Copying blob fc878cd0a91c skipped: already exists

Copying blob 6154df8ff988 skipped: already exists

Copying blob fee5db0ff82f skipped: already exists

Copying config 95c3f3755f done

Writing manifest to image destination

Storing signatures

2020/04/29 13:36:35 info unpack layer: sha256:d51af753c3d3a984351448ec0f85ddafc580680fd6dfce9f4b09fdb367ee1e3e

2020/04/29 13:36:36 info unpack layer: sha256:fc878cd0a91c7bece56f668b2c79a19d94dd5471dae41fe5a7e14b4ae65251f6

2020/04/29 13:36:36 info unpack layer: sha256:6154df8ff9882934dc5bf265b8b85a3aeadba06387447ffa440f7af7f32b0e1d

2020/04/29 13:36:36 info unpack layer: sha256:fee5db0ff82f7aa5ace63497df4802bbadf8f2779ed3e1858605b791dc449425

INFO: Running post scriptlet

+ apt-get -y update

Get:1 http://archive.ubuntu.com/ubuntu focal InRelease [265 kB]

...

[Package update output truncated]

...

Fetched 13.4 MB in 2s (5575 kB/s)

Reading package lists... Done

+ apt-get install -y python3

Reading package lists... Done

...

[Package install output truncated]

...

Processing triggers for libc-bin (2.31-0ubuntu9) ...

INFO: Adding runscript

INFO: Creating SIF file...

INFO: Build complete: my_test_image.sif

$

You should now have a my_test_image.sif file in the current directory. Note that in the above output, after the line INFO: Starting build..., there is a series of skipped: already exists messages for the Copying blob lines. This is because the Docker image layers for the Ubuntu 20.04 image have previously been downloaded and are cached on the system where this example is being run. On your system, if the image is not already cached, you will see the layers being downloaded from Docker Hub when these lines of output appear.

Permissions of the created image file

You may find that the created Singularity image file on your host filesystem is owned by the root user and not your user. In this case, you won’t be able to change the ownership/permissions of the file directly if you don’t have root access.

However, the image file will be readable by you and you should be able to take a copy of the file under a new name which you will then own. You will then be able to modify the permissions of this copy of the image and delete the original root-owned file since the default permissions should allow this.
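As a sketch of this workaround, assuming the root-owned image is my_test_image.sif in the current directory (the name of the copy is made up for illustration):

```shell
SRC=my_test_image.sif          # root-owned image created by the build
COPY=my_test_image_copy.sif    # hypothetical name for the copy that you will own

if [ -f "${SRC}" ]; then
    ls -l "${SRC}"             # check the current owner and permissions
    cp "${SRC}" "${COPY}"      # the copy belongs to the user running cp
    chmod u+rw "${COPY}"       # you can now change permissions on the copy
    rm -f "${SRC}"             # succeeds if you can write to this directory
else
    echo "${SRC} not found in $(pwd)"
fi
```

Removing a file depends on write permission for the directory that contains it rather than on who owns the file, which is why deleting the root-owned original normally works in a directory that you own.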

Cluster platform configuration for running Singularity containers

Note to instructors: Add details into this box of any custom configuration that needs to be done on the cluster platform or other remote system that you’re providing access to for the purpose of undertaking this course. If singularity does not require any custom configuration by the user on the host platform, you can remove this box.

It is recommended that you move the created .sif file to a platform with an installation of Singularity, rather than attempting to run the image using the Docker container. However, if you do wish to try using the Docker container, see the notes below on “Using singularity run from within the Docker container” for further information.

If you have access to a remote platform with Singularity installed on it, you should now move your created .sif image file to this platform. You could, for example, do this using the command line secure copy command scp.

Using scp (secure copy) to copy files between systems

scp is a widely used tool that uses the SSH protocol to securely copy files between systems. As such, the syntax is similar to that of SSH.

For example, if you want to copy the my_image.sif file from the current directory on your local system to your home directory (e.g. /home/myuser/) on a remote system (e.g. hpc.myinstitution.ac.uk) where an SSH private key is required for login, you would use a command similar to the following:

scp -i /path/to/keyfile/id_mykey ./my_image.sif myuser@hpc.myinstitution.ac.uk:/home/myuser/

Note that if you leave off the /home/myuser/ and just end the command with the :, the file will, by default, be copied to your home directory.

We can now attempt to run a container from the image that we built, using singularity run my_test_image.sif:

If everything worked successfully, you should see the message printed by Python:

OUTPUT

Hello World! Hello from our custom Singularity image!

Using singularity run from within the Docker container

It is strongly recommended that you don’t use the Docker container for running Singularity images, only for creating them, since the Singularity command runs within the container as the root user.

However, for the purposes of this simple example, and potentially for testing/debugging purposes, it is useful to know how to run a Singularity container within the Docker Singularity container. You may recall from the Running a container from the image section in the previous episode that we used the --contain switch with the singularity command. If you don’t use this switch, it is likely that you will get an error relating to /etc/localtime similar to the following:

OUTPUT

WARNING: skipping mount of /etc/localtime: no such file or directory

FATAL: container creation failed: mount /etc/localtime->/etc/localtime error: while mounting /etc/localtime: mount source /etc/localtime doesn't exist

This occurs because the /etc/localtime file that provides timezone configuration is not present within the Docker container. If you want to use the Docker container to test that your newly created image runs, you can use the --contain switch, or you can open a shell in the Docker container and add a timezone configuration as described in the Alpine Linux documentation:
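A sketch of those steps, assuming you are at a root shell inside the Docker Singularity container and that the container is based on Alpine Linux (hence the apk package manager). The function name set_timezone is hypothetical and Europe/London is only an example zone.

```shell
# Install the timezone database and make one zone the system default.
# Call as: set_timezone Europe/London
set_timezone() {
    zone="${1:-Europe/London}"    # example zone; pick the one you need
    apk add tzdata || return 1    # provides /usr/share/zoneinfo
    cp "/usr/share/zoneinfo/${zone}" /etc/localtime
}
```

Defining the function changes nothing on its own; run set_timezone Europe/London (or another zone) in the container shell before calling singularity run.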

The singularity run command should now work successfully without needing to use --contain. Bear in mind that once you exit the Docker Singularity container shell and shut down the container, this configuration will not persist.

More advanced definition files

Here we’ve looked at a very simple example of how to create an image. At this stage, you might want to have a go at creating your own definition file for some code of your own or an application that you work with regularly. There are several definition file sections that were not used in the above example. These are:

- %setup

- %files

- %environment

- %startscript

- %test

- %labels

- %help

The Sections part of the definition file documentation details all the sections and provides an example definition file that makes use of all the sections.
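To give a feel for some of these sections, here is a sketch that extends the earlier example with %environment, %test, %labels and %help. The file name, label values, help text and the MY_GREETING variable are invented for illustration; check the definition file documentation for the exact behaviour of each section in your Singularity version.

```shell
cat > my_extended_image.def <<'EOF'
Bootstrap: docker
From: ubuntu:20.04

%environment
    # Variables exported here are set when a container runs
    export MY_GREETING="Hello from our custom Singularity image!"

%post
    apt-get -y update && apt-get install -y python3

%runscript
    python3 -c 'import os; print(os.environ["MY_GREETING"])'

%test
    # Run at the end of the build to check that the image works
    python3 --version

%labels
    Author myuser
    Version v0.0.1

%help
    Prints a greeting using Python 3.
EOF

# List the section headers that the file defines
grep '^%' my_extended_image.def
```

Keeping the metadata (%labels, %help) in the same file as the build steps means it is versioned along with them.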

Additional Singularity features

Singularity has a wide range of features. You can find full details in the Singularity User Guide and we highlight a couple of key features here that may be of use/interest:

Remote Builder Capabilities: If you have access to a platform with Singularity installed but you don’t have root access to create containers, you may be able to use the Remote Builder functionality to offload the process of building an image to remote cloud resources. You’ll need to register for a cloud token via the link on the Remote Builder page.

Signing containers: If you do want to share container image (.sif) files directly with colleagues or collaborators, how can the people you send an image to be sure that they have received the file without it being tampered with or suffering from corruption during transfer/storage? And how can you be sure that the same goes for any container image file you receive from others? Singularity supports signing containers. This allows a digital signature to be linked to an image file. This signature can be used to verify that an image file has been signed by the holder of a specific key and that the file is unchanged from when it was signed. You can find full details of how to use this functionality in the Singularity documentation on Signing and Verifying Containers.
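As a rough sketch of what signing and verification look like on the command line, assuming a Singularity 3.x installation and an image named my_test_image.sif (the function names are hypothetical; see the documentation above for key management details):

```shell
# Sign an image. Call as: sign_image my_test_image.sif
sign_image() {
    # Create a key pair; this prompts for a name, email and passphrase
    # and is only needed the first time you sign anything
    singularity key newpair || return 1
    # Attach a signature made with that key to the image file
    singularity sign "$1"
}

# Check the signature on an image. Call as: verify_image my_test_image.sif
verify_image() {
    singularity verify "$1"
}
```

The person receiving the image would run the verify step, which needs access to the signer's public key.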

Key Points

- Singularity definition files are used to define the build process and configuration for an image.

- Singularity’s Docker container provides a way to build images on a platform where Singularity is not installed but Docker is available.

- Existing images from remote registries such as Docker Hub and Singularity Hub can be used as a base for creating new Singularity images.

Content from Running MPI parallel jobs using Singularity containers

Last updated on 2024-09-17 | Edit this page

Estimated time: 70 minutes

Overview

Questions

- How do I set up and run an MPI job from a Singularity container?

Objectives

- Learn how MPI applications within Singularity containers can be run on HPC platforms

- Understand the challenges and related performance implications when running MPI jobs via Singularity

Running MPI parallel codes with Singularity containers

MPI overview

MPI - Message Passing Interface - is a widely used standard for parallel programming. It is used for exchanging messages/data between processes in a parallel application. If you’ve been involved in developing or working with computational science software, you may already be familiar with MPI and running MPI applications.

When working with an MPI code on a large-scale cluster, a common approach is to compile the code yourself, within your own user directory on the cluster platform, building against the supported MPI implementation on the cluster. Alternatively, if the code is widely used on the cluster, the platform administrators may build and package the application as a module so that it is easily accessible by all users of the cluster.

MPI codes with Singularity containers

We’ve already seen that building Singularity containers can be impractical without root access. Since we’re highly unlikely to have root access on a large institutional, regional or national cluster, building a container directly on the target platform is not normally an option.

If our target platform uses OpenMPI, one of the two widely used open source MPI implementations, we can build/install a compatible OpenMPI version on our local build platform, or directly within the image as part of the image build process. We can then build our code that requires MPI, either interactively in an image sandbox or via a definition file.

If the target platform uses a version of MPI based on MPICH, the other widely used open source MPI implementation, there is ABI compatibility between MPICH and several other MPI implementations. In this case, you can build MPICH and your code on a local platform, within an image sandbox or as part of the image build process via a definition file, and you should be able to successfully run containers based on this image on your target cluster platform.

As described in Singularity’s MPI documentation, support for both OpenMPI and MPICH is provided. Instructions are given for building the relevant MPI version from source via a definition file and we’ll see this used in an example below.

Container portability and performance on HPC platforms

While building a container on a local system that is intended for use on a remote HPC platform does provide some level of portability, if you’re after the best possible performance, it can present some issues. The version of MPI in the container will need to be built and configured to support the hardware on your target platform if the best possible performance is to be achieved. Where a platform has specialist hardware with proprietary drivers, building on a different platform with different hardware present means that building with the right driver support for optimal performance is not likely to be possible. This is especially true if the version of MPI available is different (but compatible).

Singularity’s MPI documentation highlights two different models for working with MPI codes. The hybrid model that we’ll be looking at here involves using the MPI executable from the MPI installation on the host system to launch Singularity and run the application within the container. The application in the container is linked against and uses the MPI installation within the container which, in turn, communicates with the MPI daemon process running on the host system. In the following section we’ll look at building a Singularity image containing a small MPI application that can then be run using the hybrid model.

Building and running a Singularity image for an MPI code

Building and testing an image

This example makes the assumption that you’ll be building a container image on a local platform and then deploying it to a cluster with a different but compatible MPI implementation. See Singularity and MPI applications in the Singularity documentation for further information on how this works.

We’ll build an image from a definition file. Containers based on this image will be able to run MPI benchmarks using the OSU Micro-Benchmarks software.

In this example, the target platform is a remote HPC cluster that uses Intel MPI. The container can be built via the Singularity Docker image that we used in the previous episode of the Singularity material.

Begin by creating a directory and, within that directory, downloading and saving the “tarballs” for version 5.7.1 of the OSU Micro-Benchmarks from the OSU Micro-Benchmarks page and for MPICH version 3.4.2 from the MPICH downloads page.
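Before building, it can help to confirm that both tarballs are in place. The following is a minimal sketch, assuming it is run from the directory you just created; check_tarballs is a hypothetical helper name used only for illustration.

```shell
#!/bin/sh
# check_tarballs is a hypothetical helper: it reports whether each source
# archive named in the text exists in the given directory (default: ".").
check_tarballs() {
    dir="${1:-.}"
    missing=0
    for f in osu-micro-benchmarks-5.7.1.tgz mpich-3.4.2.tar.gz; do
        if [ -f "${dir}/${f}" ]; then
            echo "found:   ${f}"
        else
            echo "missing: ${f}"
            missing=$((missing + 1))
        fi
    done
    # Succeed only when nothing is missing.
    [ "${missing}" -eq 0 ]
}

check_tarballs . || echo "Download the missing files before building."
```

The function returns a non-zero status when a file is absent, so it could also be used as a guard at the top of a build script.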

In the same directory, save the following definition file content to a .def file, e.g. osu_benchmarks.def:

OUTPUT

Bootstrap: docker

From: ubuntu:20.04

%files

/home/singularity/osu-micro-benchmarks-5.7.1.tgz /root/

/home/singularity/mpich-3.4.2.tar.gz /root/

%environment

export SINGULARITY_MPICH_DIR=/usr

export OSU_DIR=/usr/local/osu/libexec/osu-micro-benchmarks/mpi

%post

apt-get -y update && DEBIAN_FRONTEND=noninteractive apt-get -y install build-essential libfabric-dev libibverbs-dev gfortran

cd /root

tar zxvf mpich-3.4.2.tar.gz && cd mpich-3.4.2

echo "Configuring and building MPICH..."

./configure --prefix=/usr --with-device=ch3:nemesis:ofi && make -j2 && make install

cd /root

tar zxvf osu-micro-benchmarks-5.7.1.tgz

cd osu-micro-benchmarks-5.7.1/

echo "Configuring and building OSU Micro-Benchmarks..."

./configure --prefix=/usr/local/osu CC=/usr/bin/mpicc CXX=/usr/bin/mpicxx

make -j2 && make install

%runscript

echo "Rank ${PMI_RANK} - About to run: ${OSU_DIR}/$*"

exec ${OSU_DIR}/$*

A quick overview of what the above definition file is doing:

- The image is being bootstrapped from the ubuntu:20.04 Docker image.

- In the %files section: The OSU Micro-Benchmarks and MPICH tar files are copied from the current directory into the /root directory within the image.

- In the %environment section: Set a couple of environment variables that will be available within all containers run from the generated image.

- In the %post section:

  - Ubuntu’s apt-get package manager is used to update the package directory and then install the compilers and other libraries required for the MPICH build.

  - The MPICH .tar.gz file is extracted and the configure, build and install steps are run. Note the use of the --with-device option to configure MPICH to use the correct driver to support improved communication performance on a high performance cluster.

  - The OSU Micro-Benchmarks .tar.gz file is extracted and the configure, build and install steps are run to build the benchmark code from source.

- In the %runscript section: A runscript is set up that will echo the rank number of the current process and then run the command provided as a command line argument.

Note that the base path of the executable to run ($OSU_DIR) is hardcoded in the run script. The command line parameter that you provide when running a container instance based on the image is then appended to this base path. Example command line parameters include: startup/osu_hello, collective/osu_allgather, pt2pt/osu_latency, one-sided/osu_put_latency.
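The path composition described above can be sketched outside the container. This is an illustration only: resolve_benchmark is a hypothetical helper that mirrors the runscript’s ${OSU_DIR}/$* expansion, and OSU_DIR is set here to the value from the %environment section.

```shell
#!/bin/sh
# Value set in the %environment section of the definition file.
OSU_DIR=/usr/local/osu/libexec/osu-micro-benchmarks/mpi

# Hypothetical helper mirroring the runscript's "${OSU_DIR}/$*" expansion:
# the arguments are appended to the hardcoded base path.
resolve_benchmark() {
    printf '%s\n' "${OSU_DIR}/$*"
}

resolve_benchmark startup/osu_hello
resolve_benchmark pt2pt/osu_latency
```

Because the runscript uses $*, any additional arguments given after the benchmark name are passed along on the same command line.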

Build and test the OSU Micro-Benchmarks image

Using the above definition file, build a Singularity image named osu_benchmarks.sif.

Once the image has finished building, test it by running the osu_hello benchmark that is found in the startup benchmark folder.

NOTE: If you’re not using the Singularity Docker image to build your Singularity image, you will need to edit the paths to the two .tar.gz/.tgz files in the %files section of the definition file.

You should be able to build an image from the definition file as follows:
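A minimal sketch of the build step, assuming the file names used in this exercise and that the command is run as root (for example inside the Singularity Docker container). The script only runs the build when Singularity and the definition file are present; otherwise it prints the command it would run.

```shell
#!/bin/sh
# File names taken from the exercise text; adjust paths for your setup.
DEF_FILE=osu_benchmarks.def
SIF_FILE=osu_benchmarks.sif

# 'singularity build <image> <definition file>' creates the image.
BUILD_CMD="singularity build ${SIF_FILE} ${DEF_FILE}"

if command -v singularity >/dev/null 2>&1 && [ -f "${DEF_FILE}" ]; then
    # Building from a definition file normally needs root privileges.
    ${BUILD_CMD}
else
    echo "Would run: ${BUILD_CMD}"
fi
```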

Note that if you’re running the Singularity Docker container directly from the command line to undertake your build, you’ll need to provide the full path to the .def file within the container. It is likely that this will be different to the file path on your host system. For example, if you’ve bind mounted the directory on your local system containing the file to /home/singularity within the container, the full path to the .def file will be /home/singularity/osu_benchmarks.def.

Assuming the image builds successfully, you can then try running the container locally and also transfer the SIF file to a cluster platform that you have access to (that has Singularity installed) and run it there.

Let’s begin with a single-process run of startup/osu_hello on your local system (where you built the container) to ensure that we can run the container as expected. We’ll use the MPI installation within the container for this test. Note that when we run a parallel job on an HPC cluster platform, we use the MPI installation on the cluster to coordinate the run so things are a little different…

Start a shell in the Singularity container based on your image and then run a single process job via mpirun:

BASH

$ singularity shell --contain /home/singularity/osu_benchmarks.sif

Singularity> mpirun -np 1 $OSU_DIR/startup/osu_hello

You should see output similar to the following:

OUTPUT

# OSU MPI Hello World Test v5.7.1

This is a test with 1 processes

Running Singularity containers via MPI

Assuming the above tests worked, we can now try undertaking a parallel run of one of the OSU benchmarking tools within our container image.

This is where things get interesting and we’ll begin by looking at how Singularity containers are run within an MPI environment.

If you’re familiar with running MPI codes, you’ll know that you use mpirun (as we did in the previous example), mpiexec or a similar MPI executable to start your application. This executable may be run directly on the local system or cluster platform that you’re using, or you may need to run it through a job script submitted to a job scheduler. Your MPI-based application code, which will be linked against the MPI libraries, will make MPI API calls into these MPI libraries which in turn talk to the MPI daemon process running on the host system. This daemon process handles the communication between MPI processes, including talking to the daemons on other nodes to exchange information between processes running on different machines, as necessary.

When running code within a Singularity container, we don’t use the MPI executables stored within the container (i.e. we DO NOT run singularity exec mpirun -np <numprocs> /path/to/my/executable). Instead we use the MPI installation on the host system to run Singularity and start an instance of our executable from within a container for each MPI process. Without Singularity support in an MPI implementation, this results in starting a separate Singularity container instance within each process. This can present some overhead if a large number of processes are being run on a host. Where Singularity support is built into an MPI implementation, it can reduce the overhead of running code from within a container as part of an MPI job.

Ultimately, this means that our running MPI code is linking to the MPI libraries from the MPI install within our container and these are, in turn, communicating with the MPI daemon on the host system which is part of the host system’s MPI installation. In the case of MPICH, these two installations of MPI may be different but as long as there is ABI compatibility between the version of MPI installed in your container image and the version on the host system, your job should run successfully.

We can now try a 2-process MPI run of the point-to-point benchmark osu_latency. If your local system has both MPI and Singularity installed and has multiple cores, you can run this test on that system. Alternatively you can run on a cluster. Note that you may need to submit this command via a job submission script submitted to a job scheduler if you’re running on a cluster. If you’re attending a taught version of this course, some information will be provided below in relation to the cluster that you’ve been provided with access to.
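On a cluster with a scheduler, the run would typically be wrapped in a job script. The following is a minimal sketch that assumes the Slurm scheduler, which this lesson does not specify. The job name, resource values and the file name osu_latency_job.sh are illustrative placeholders, and your cluster may also need module load lines or a different launcher.

```shell
#!/bin/sh
# Write an illustrative Slurm job script (the scheduler is an assumption).
JOB_SCRIPT=osu_latency_job.sh

cat > "${JOB_SCRIPT}" <<'EOF'
#!/bin/bash
#SBATCH --job-name=osu_latency
#SBATCH --nodes=1
#SBATCH --ntasks=2
#SBATCH --time=00:05:00

# The host's mpirun starts one Singularity container per MPI process.
mpirun -np 2 singularity run osu_benchmarks.sif pt2pt/osu_latency
EOF

echo "Wrote ${JOB_SCRIPT}; submit it with: sbatch ${JOB_SCRIPT}"
```

Check your cluster’s documentation for the required directives before submitting, since account, partition and module settings vary between sites.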

Undertake a parallel run of the osu_latency benchmark (general example)

Move the osu_benchmarks.sif Singularity image onto the cluster (or other suitable) platform where you’re going to undertake your benchmark run.

You should be able to run the benchmark using a command similar to the one shown below. However, if you are running on a cluster, you may need to write and submit a job submission script at this point to initiate running of the benchmark.
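A minimal sketch of such a command, assuming the image is in the current directory and that mpirun on the host is the launcher (some clusters use mpiexec or a scheduler-specific launcher instead). The script only runs the benchmark when both tools and the image are available; otherwise it prints the command.

```shell
#!/bin/sh
# Values taken from the exercise text; adjust for your platform.
NPROCS=2
IMAGE=osu_benchmarks.sif
BENCHMARK=pt2pt/osu_latency

# Host mpirun launches Singularity; the image's runscript receives the
# benchmark path as its argument.
RUN_CMD="mpirun -np ${NPROCS} singularity run ${IMAGE} ${BENCHMARK}"

if command -v mpirun >/dev/null 2>&1 \
    && command -v singularity >/dev/null 2>&1 \
    && [ -f "${IMAGE}" ]; then
    ${RUN_CMD}
else
    echo "Would run: ${RUN_CMD}"
fi
```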

As you can see in the mpirun command shown above, we have called mpirun on the host system and are passing to MPI the singularity executable for which the parameters are the image file and any parameters we want to pass to the image’s run script, in this case the path/name of the benchmark executable to run.

The following shows an example of the output you should expect to see. You should have latency values shown for message sizes up to 4MB.

OUTPUT

Rank 1 - About to run: /.../mpi/pt2pt/osu_latency

Rank 0 - About to run: /.../mpi/pt2pt/osu_latency