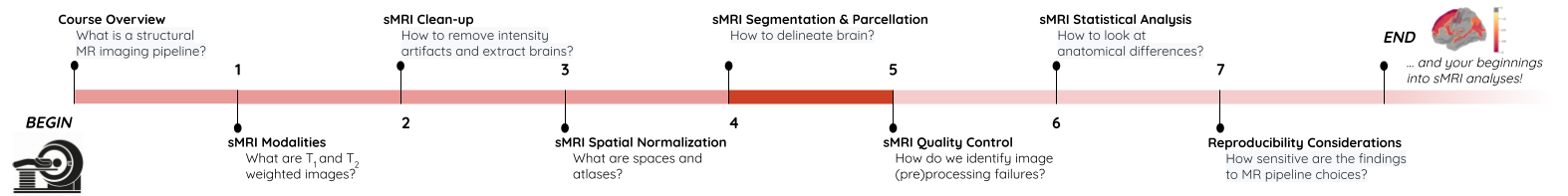

sMRI Segmentation and Parcellation

Last updated on 2024-02-19 | Edit this page

Estimated time: 35 minutes

Overview

Questions

- How do we segment the brain into tissue classes ?

- How do we further divide a tissue class into sub-components ?

- How are volumetric and surface data defined ?

Objectives

- Understand and visualize tissue segmentation

- Manipulate atlases to extract regions of interests

- Visualize and interact with both volumetric and surface data

You Are Here!

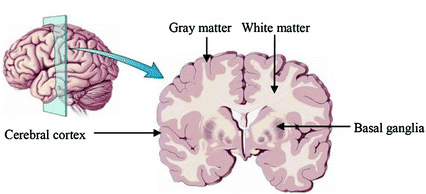

Segmentation of brain tissues

Brain anatomy is different for every individual. Brain tissues are typically divided into:

- grey matter (GM), containing neuron cell bodies

- white matter (WM), including neuron connection fibers wrapped in a special signal-accelerating substance called myelin

- cerebro-spinal fluid (CSF), a protecting fluid present mostly around the brain but also within brain cavities called ventricles

Each class can inform on different aspects of the brain. Therefore it is often useful to segment the brain in these tissue components for further processing.

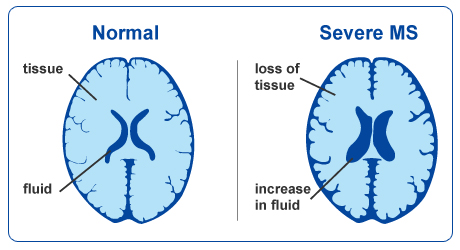

An important aspect to keep in mind is that aging and disease can cause tissue modifications. Common changes include a reduction in GM, as in the case of ageing and neurodegenerative diseases such as Alzheimer’s. A tumor can cause an important localized change of signal in the area most impacted by the tumor. Another example is visibly higher WM signal intensities on T2 MRI images, so called WM hyper-intensities, which can be related to vascular pathology (common in aging), or to myelin lesions characteristic of the multiple sclerosis disease.

This image is Copyright © My-MS.org and falls under Image License D

defined under the Image License section of the My-MS.org Disclaimer

page.

This image is Copyright © My-MS.org and falls under Image License D

defined under the Image License section of the My-MS.org Disclaimer

page.

The analysis of preprocessed structural images then often consists in:

- Identifying tissue classes – including pathological tissue when appropriate – and their sub-components: this is done by segmenting the MRI images, and the topic of the current episode

- Quantifying morphological differences: this is typically done by measuring morphological properties such as GM volume or thickness in the whole brain or within specific regions, and this is the topic of episode 6

Can we measure brain changes in healthy young adults as well ? This is what we will ultimately try to find out at the end of our lesson !

In this episode we will more precisely look at:

- how to segment images into tissue classes, and also also sub-regions for the case of GM

- how to visualize segmentation results both for volumetric and surface data

Which software to use for segmentation and parcellation ?

Common software to segment volumetric data include FSL, SPM and ANTS. One of the most used software to segment surface data (in addition of volumetric data) is Freesurfer. In this episode, we will use the outputs generated by smriprep/fmriprep, which are workflows relying on all these software to generate a large variety of segmentation outputs.

Tissue segmentation and visualization in practice

The tissues can be differentiated according to the MRI signal intensity, however as seen in episode 2, the main magnetic field can introduce bias and create signal inhomogeneities. It is therefore important to implement bias field correction before carrying out segmentation according to tissue intensities.

Usually the T1 MRI modality is used for segmentation as it offers the best contrast between GM, WM and CSF. However combining multiple modalities (such as T2, PD and FLAIR) can help improving the segmentation, especially in the presence of lesions.

Tissue segmentation is presented first for normal controls. Then examples of changes that can happen in disease are shown for patients having Alzheimer’s or the multiple sclerosis disease.

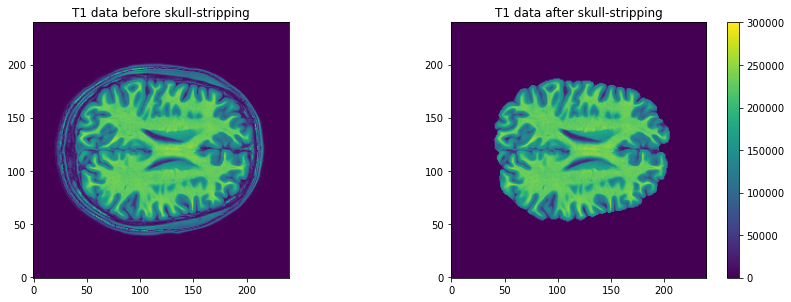

In normal controls

In normal controls, the number of tissue classes is usually fixed

(there are not additional classes of pathological tissue). In practice,

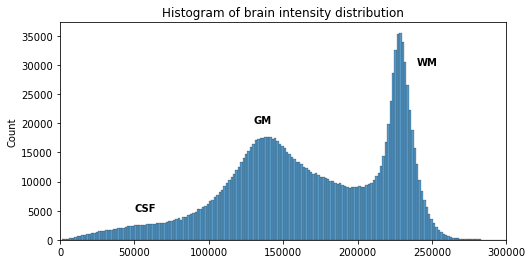

a distribution of the intensity of the different tissue types can be

obtained by loading T1 volumes with nibabel then plotting a

slice with matplotlib and displaying an histogram with

seaborn (a plotting library built on top of

matplotlib). Assuming we already loaded a T1 brain volume

t1_brain_data, visualizing the T1 data and the associated

intensity histogram is obtained with:

PYTHON

# Plot first figure

plt.imshow(t1_data[:, :, 110], vmax=300000, origin="lower")

plt.title('T1 data before skull-stripping')

# Plot second figure

plt.imshow(t1_brain_data[:, :, 110], vmax=300000, origin="lower")

plt.title('T1 data after skull-stripping')

PYTHON

# Compute the optimal bin size according to the data

bins = np.histogram_bin_edges(t1_brain_data[t1_brain_data != 0], bins='auto')

# Plot the histogram

sns.histplot(t1_brain_data[t1_brain_data != 0], bins=bins)

plt.xlim([0, 300000])

We can see here three main components corresponding to GM, WM and CSF.

Jupyter notebook challenge

Can you modify the code in the Jupyter notebook to plot the histogram of the brain before skull-stripping ?

Try to switch the variable of one T1 image for another.

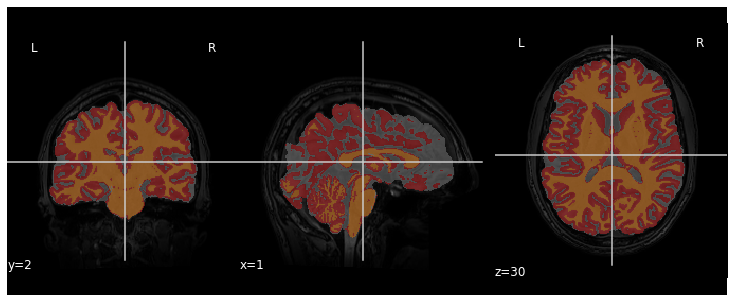

We can visualize the segmentation by loading adding an overlay

representing the different tissue classes after segmentation. For this,

we use the nilearn library to plot the t1_seg

segmentation data from smriprep/fmriprep.

PYTHON

from nilearn import plotting

plotting.plot_roi(roi_img=t1_seg, bg_img=t1, alpha=0.3, cmap="Set1");

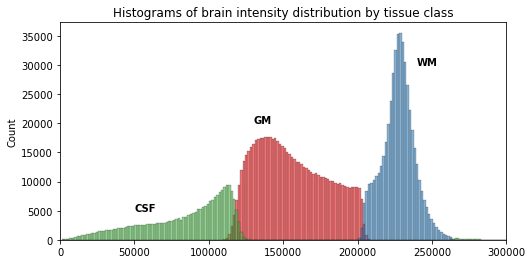

Now that we have access to each tissue class, we can check the contribution of each in the intensity histogram.

PYTHON

import itertools

labels = np.unique(t1_seg_data[t1_seg_data != 0]).astype('int')

palette = itertools.cycle(sns.color_palette("Set3"))

for label in labels:

sns.histplot(t1_brain_data[t1_seg_data == label], bins=bins, color=next(palette), alpha=0.6)

A simple matter of thresholding ?

From the previous histogram, does the intensity itself seem enough to differentiate between different tissue classes ?

From the shape of the histogram we can see there is some overlap between the tissue distributions. Segmentation on intensity alone is most often not enough, and additional information is required, such as the use of a template with a-priori tissue probability maps (probability of presence of a given tissue at a given location).

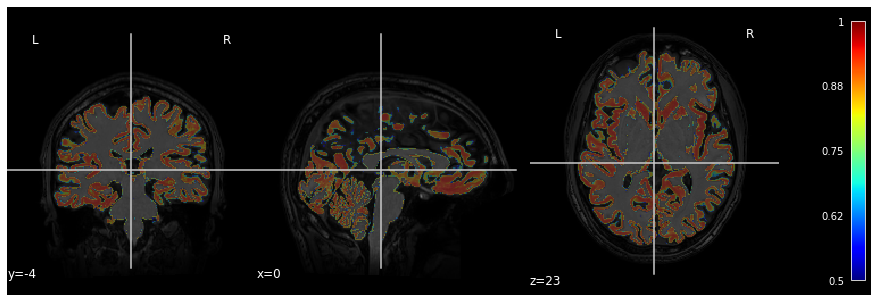

A question of probability

In the previous segmentation volume, each voxel has a single integer value (1, 2 or 3) according to which tissue class it belongs. In practice however the primary output of segmentation tools are probability maps: one probability map per tissue class. In this probability map, each voxel has a value between 0 and 1 to indicate the probability to belong to that tissue class.

Below is an example of visualization of a GM probability map

GM_probmap.

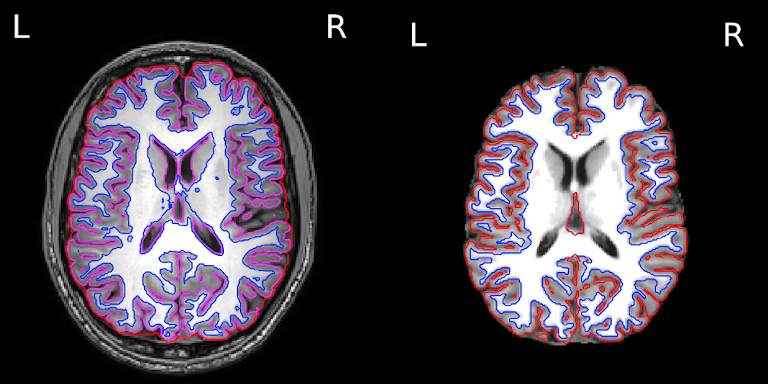

The segmentation into GM and WM voxels allows to identify surfaces surrounding these tissues. The images below show:

- on the left: the delineation of GM (in pink) and WM (in blue) before discarding deep GM structures such as the basal ganglia

- on the right: the delineation of the outer GM layer called the pial surface (in red), and the underlying white matter surface (in blue) after discarding deep GM

We can now have a closer look at surface data !

Surface data

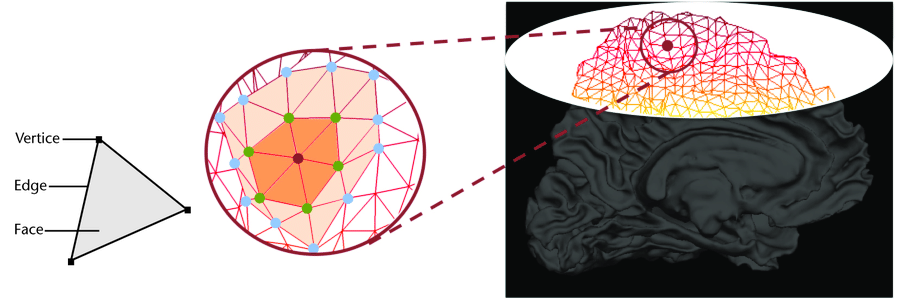

Some software such as Freesurfer specialize in surface data. Contrarily to volumetric data which are collections of voxels, surface data are collections of vertices and faces of a mesh.

The most commonly use surfaces are the pial GM and the underlying WM.

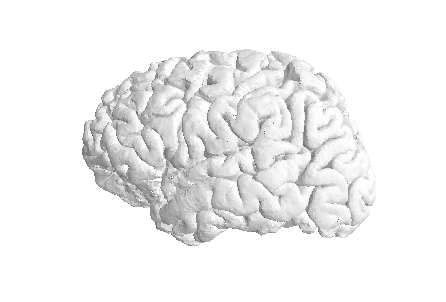

Let’s visualize the pial GM using nilearn on the Freesurfer

pial_surf_file output by smriprep/fmriprep and

loaded in the notebook.

PYTHON

# The pial surface is the most external surface of the GM

pial_surf = nib.load(pial_surf_file)

pial_verts, pial_faces = pial_surf.agg_data(('pointset', 'triangle'))

plotting.plot_surf((pial_verts, pial_faces))

Jupyter notebook challenge

Can you modify the code in the Jupyter notebook to plot the white matter surface ?

Try to explore the BIDSLayout output to find the right surface file.

In disease such as Alzheimer’s and multiple sclerosis

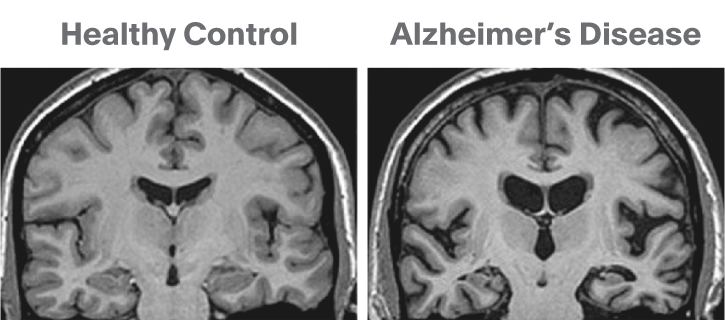

The brain GM volume tends to shrink with age, with the GM loss typically replaced with CSF. This process is called atrophy. In Alzheimer’s disease and other neuro-degenerative disease, atrophy is amplified (the “degeneration” is the loss of GM). Therefore special care is required when processing data of patients whose disease can cause changes in the brain morphology.

Below is a T1 image of an Alzheimer’s disease patient. An enlargement of the CSF-containing cavities, the ventricles, can be seen.

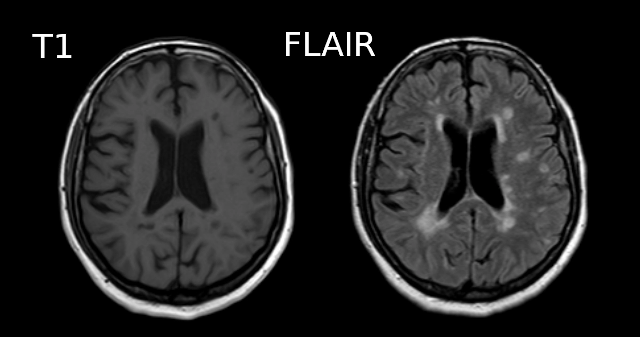

Below is a T1 and FLAIR image of an MS patient. The lesion-enhancing FLAIR modality clearly show lesions. These lesions can sometimes be seen on the T1 image. In this case they appear as dark spots called “black holes”.

Segmentation problems in disease

What problems do you expect in the tissue segmentation of multiple sclerosis images ?

An algorithm expecting three tissues types may misclassify lesions as GM on T1 images. Filling lesions with intensity corresponding to WM is a solution sometimes used to avoid these issues (e.g. with Freesurfer). Some software such as ANTs directly accepts a binary lesion mask as an input to deal with this kind of data.

Segmenting tissue classes into sub-components: atlasing, parcellation

A natural step after identifying tissue classes, is sub-dividing the tissue(s) of interest into sub-components. GM is commonly split into non-overlapping regions of interests (ROIs). This splitting process is called “parcellation” and allows to be more specific about the brain areas studied. Parcellation can be purely data-driven, anatomically-driven, or both.

In this lesson we focus on GM. WM parcellation is also common but it requires dedicated MRI sequences and analysis techniques, e.g. by identifying group of WM tracts called bundles with tractography (please refer to the lesson on diffusion MRI if you would like to know more about WM parcellation).

The usefulness of an atlas comes from the fact that each subject brain can be registered to the template on which that atlas is defined. A correct registration to the template is therefore essential for a correct labelling of the brain voxels / vertices. The template the images are registered to should also be the template on which the atlas has been defined (or highly similar).

Atlas vs Parcellation

A parcellation can be defined as the splitting of an object into non-overlapping components. Since an atlas divide the brain into regions, atlasing can be considered a kind of parcellation. Reciprocally, any kind of brain parcellation can be considered an “atlas”.

A difference is that an atlas is often expected to be defined on an entire brain, while a parcellation can be limited to a specific region (e.g. thalamus parcellation). A parcellation can also be applied for example to the cortical GM (the outer layer of the brain) or the subcortical GM for deep GM (GM structures below the cortex).

Note that Freesurfer call cortical parcellation “parcellation”

(denoting parc the associated files) and subcortical

parcellation “segmentation” (denoting seg the associated

files).

The picture can be muddled when considering probabilistic atlases. In this case each atlas region is associated to a probabilistic map. Just like tissue probability maps, each voxel (or vertex) has a given probability to belong to the region considered.

Probability atlas threshold

Given a probabilistic atlas, is there a single probability threshold which would guaranteed each brain volume voxel (or surface vertices) to have a unique label ? If yes, which threshold would you choose, and if not how would you proceed ?

In virtually all cases no single probability threshold will result in each voxel (or vertex) belonging to a unique ROI. A high threshold will result in voxels not having a label, while a low threshold will cause voxels to belong to overlapping probability maps. A “true” parcellation could be obtained by associating to each voxel (or vertex) the label with the maximum probability.

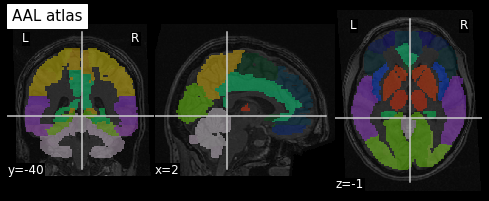

Visualizing and extracting ROIs of a non probabilistic volumetric atlas

An example of a volumetric atlas motivated by neuroanatomy is the

Automated Anatomical Labeling (AAL) atlas. Each ROI has an anatomically

meaningful text label. The atlas is aligned with the MNI MRI

single-subject brain. The atlas is represented as a 3D volume in which

each voxel has an integer index which corresponds to an ROI label,

e.g. 2401 for the

left hemisphere supplementary motor area.

Nilearn offers a collection of atlases in its

datasets module. The AAL atlas, text labels and integer

indices can all be obtained through this module.

PYTHON

from nilearn.datasets import fetch_atlas_aal

AAL_dataset = fetch_atlas_aal()

AAL_maps = AAL_dataset.maps

AAL_labels = AAL_dataset['labels']

AAL_labels_ix = AAL_dataset['indices']We can check the atlas dimension by loading it with

nibabel

OUTPUT

(91, 109, 91)Plotting the atlas

To plot the atlas we can use as background either the template on

which it was defined (or one highly similar), or a subject volume

aligned with that template. In our case we use the t1_mni

volume of our subject and use the plot_roi function of the

nilearn plotting module to add the atlas as an

overlay:

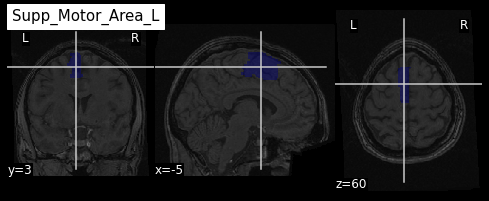

We can extract a specific ROI in two steps:

- Identify the integer index corresponding to the ROI

- Get all the voxels corresponding to that index

Identifying an ROI integer index

Sometimes the integer index directly correspond to the label list

index, e.g. if “visual cortex” is in position 3 in AAL_dataset[‘labels’]

then the corresponding integer index in the image is 3. For the AAL

atlas, the ROI indices are not in the order of the text labels so

nilearn provide a list of ROI indices. Since the list of

labels match the list of indices we proceed in two steps:

- Find the position of the ROI in the list of AAL_labels

- Use the same position in the list of indices

PYTHON

roi_label = "Supp_Motor_Area_L"

roi_label_pos = AAL_labels.index(roi_label)

roi_ix = int(AAL_labels_ix[roi_label_pos])

print(roi_ix)OUTPUT

2401Getting all voxels corresponding to that index

To create a binary ROI image from the ROI index, we:

- Create a boolean array with

Trueif the voxel label is equal to our ROI index, andFalseif not - Convert the boolean array to integer

PYTHON

roi_mask_arr_bool = (AAL_img.get_fdata() == roi_ix)

roi_mask_arr = roi_mask_arr_bool.astype(int)To create a nibabel image object, we then need to attach

the original image affine transform

we can now plot the ROI using the plot_roi function of

nilearn

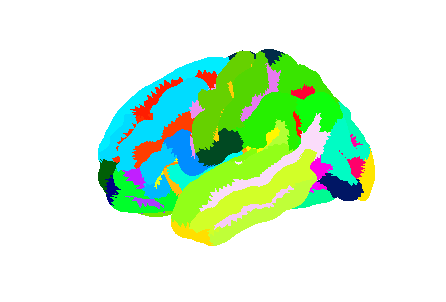

Visualizing and extracting ROIs of a surface atlas

For the previous atlas, the unit elements to receive a label were voxels. When considering the surface of the cortex, unit elements are the vertices of the surface mesh. Each such vertex can receive a label, and the result is a surface parcellation.

Freesurfer relies on two surface atlases: the Desikan-Killiany Atlas with 68 ROIs and the Destrieux Atlas with 148 ROIs. The Destrieux atlas is part of the nilearn datasets and we will focus on this atlas in the lesson.

Surface datasets are split in left and right hemisphere. Let’s aim at plotting the left hemisphere. As we will see also how to plot a specific ROI, we will also extract the atlas labels.

PYTHON

from nilearn.datasets import fetch_atlas_surf_destrieux

parcellation_L = destrieux['map_left']

destrieux_labels = destrieux['labels']Plotting the atlas

For plotting a 3D volume we needed a 3D image template. For a surface, we need a 3D surface (also called mesh) template. The Destrieux atlas we collected is defined on the fsaverage5 Freesurfer template, so we will need it to plot our atlas.

PYTHON

fsaverage = fetch_surf_fsaverage()

mesh = fsaverage['pial_left']

plotting.plot_surf_roi(mesh, roi_map=parcellation_L,

hemi='left', view='lateral');

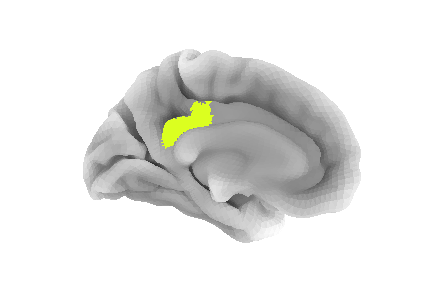

Extracting a specific ROI

To extract a specific ROI, we can proceed as we did for the AAL atlas but using the Destrieux labels.

PYTHON

pcc_region = b'G_cingul-Post-dorsal'

roi_ix = destrieux_labels.index(pcc_region)

roi_mask_arr = (parcellation_L == roi_ix).astype(int)To plot that ROI, we can use the plot_surf_roi function

of the nilearn plotting module. We will plot

the medial view since this ROI is located towards the central part of

the brain. We will also use the template as background image since most

vertices do not have a label to be plotted.

PYTHON

plotting.plot_surf_roi(mesh, roi_map=roi_mask_arr, hemi='left', view='medial',

bg_map=fsaverage['sulc_left']);

Jupyter notebook challenge

Can you modify the code in the Jupyter notebook to extract and display the right precuneus ?

This exercise involves a change of both ROI and hemisphere.

Because atlases can be overlaid on a subject brain registered to the atlas template, one can extract measurements specific to that subject within each atlas ROI. We will see common metrics and how to extract them in episode 6, and the important role of the WM and GM pial surfaces for this purpose.

- Brain segmentation and parcellation is a key step towards further analysis

- The brain can be represented via different data format (volume, surface)

- Multiple python libraries are particularly useful to manipulate brain data