Introduction

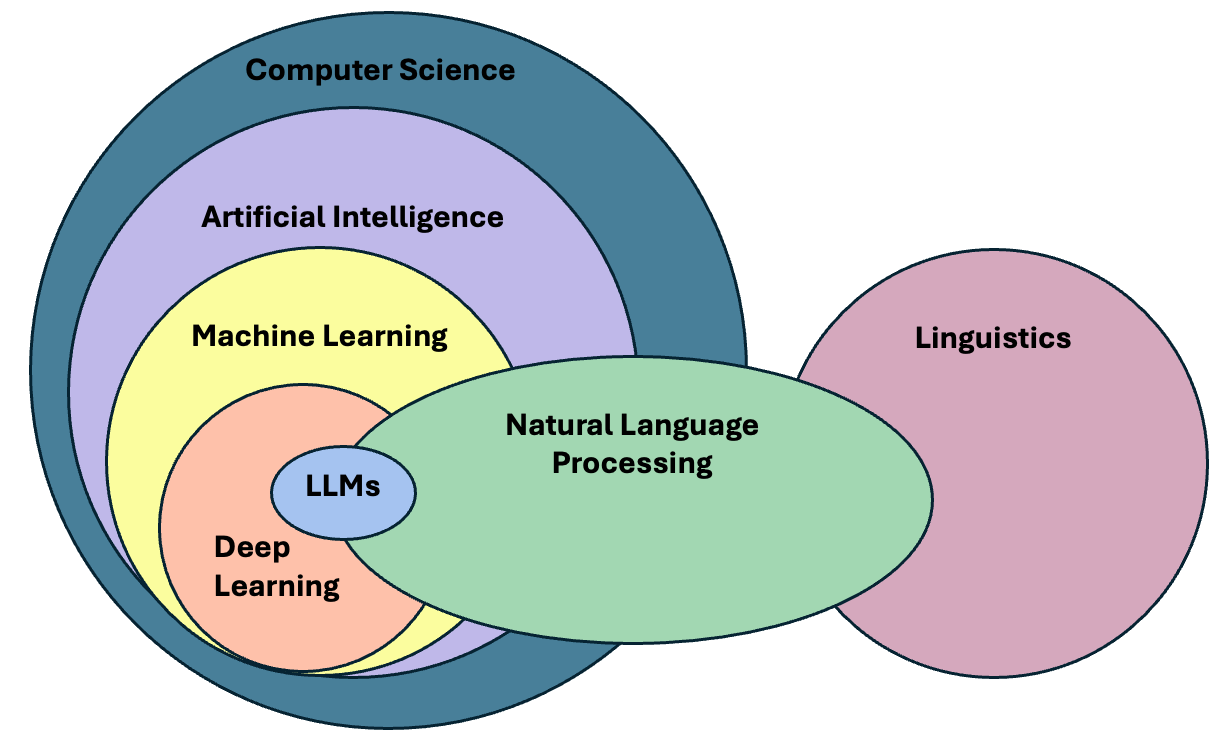

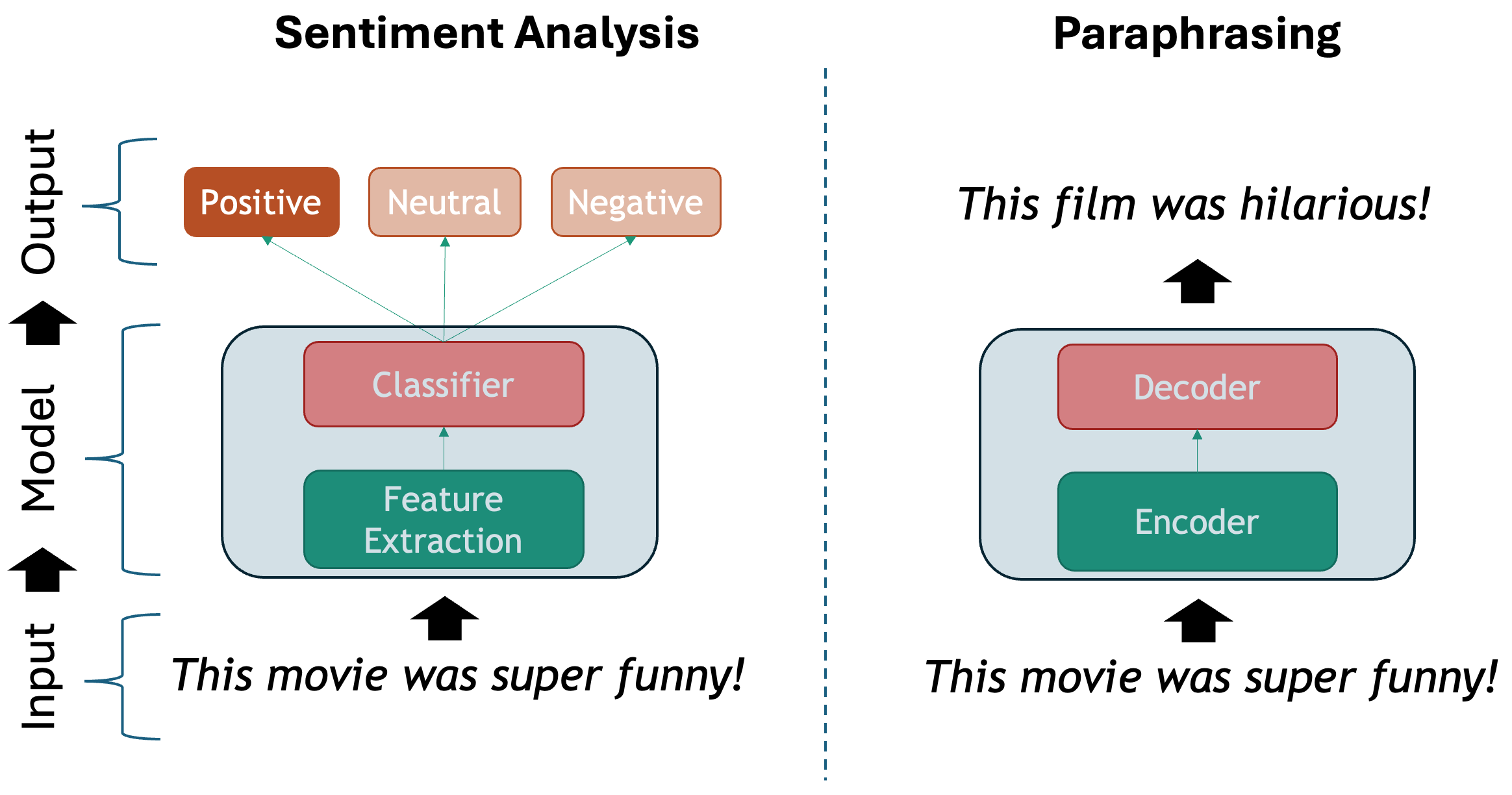

Figure 1

NLP is an interdisciplinary field, and LLMs are just a subset of it

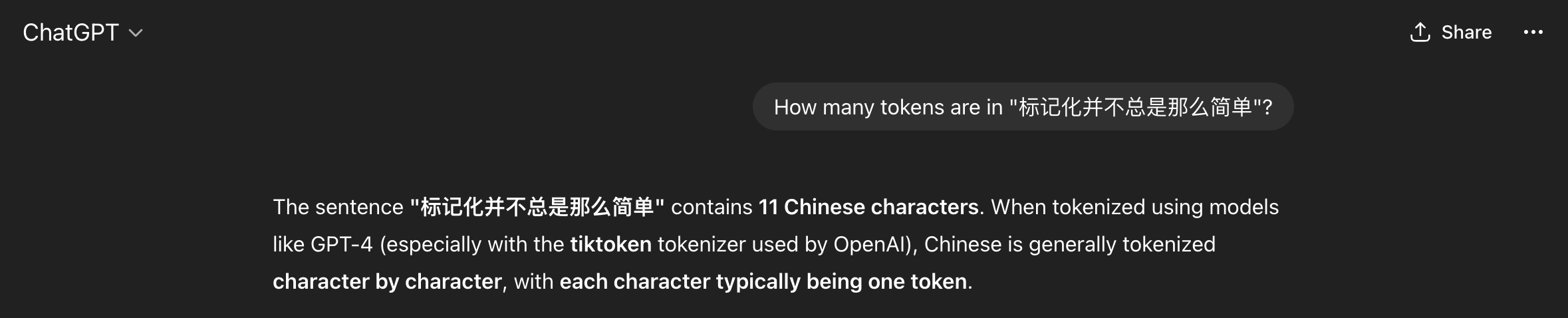

Figure 2

ChatGPT Just Works! Does it…?

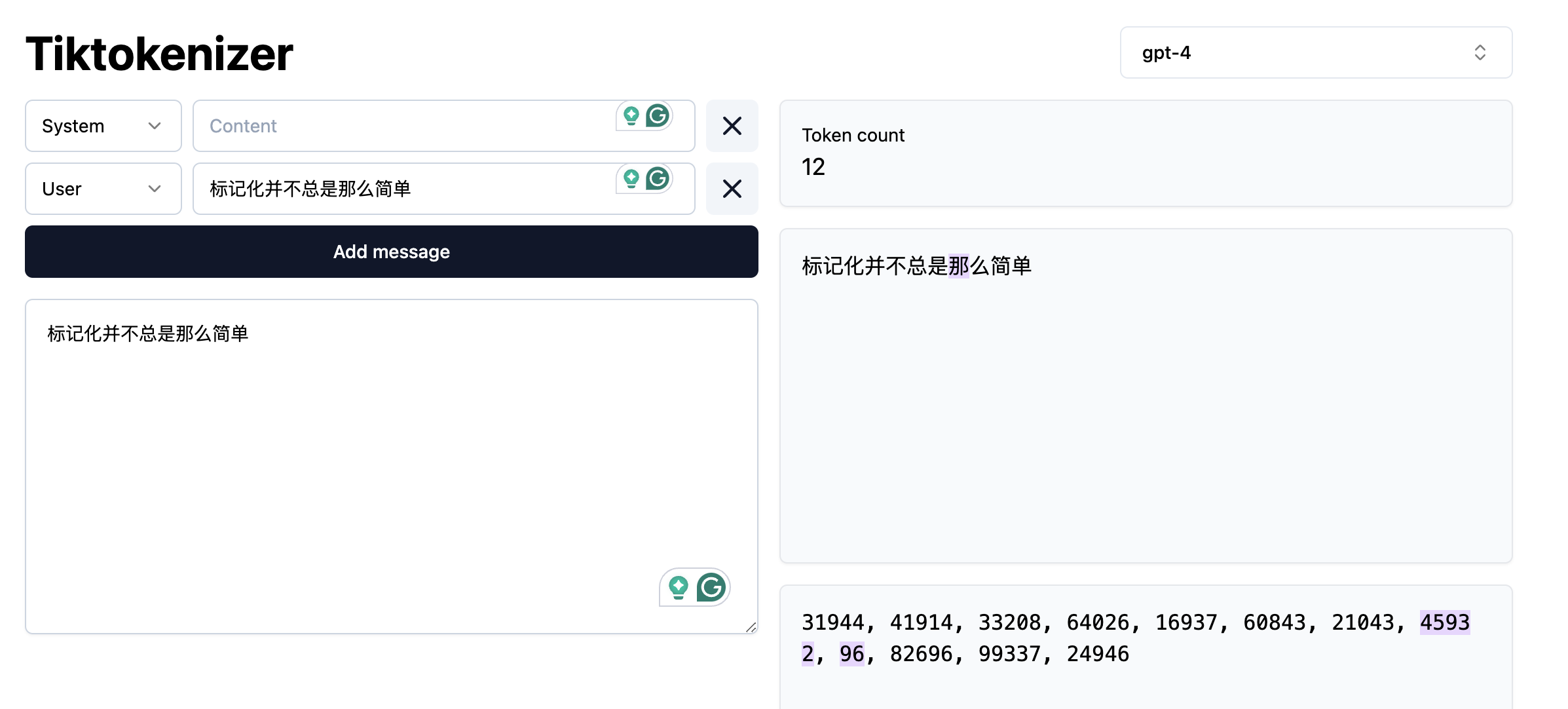

Figure 3

GPT-4 Tokenization Example

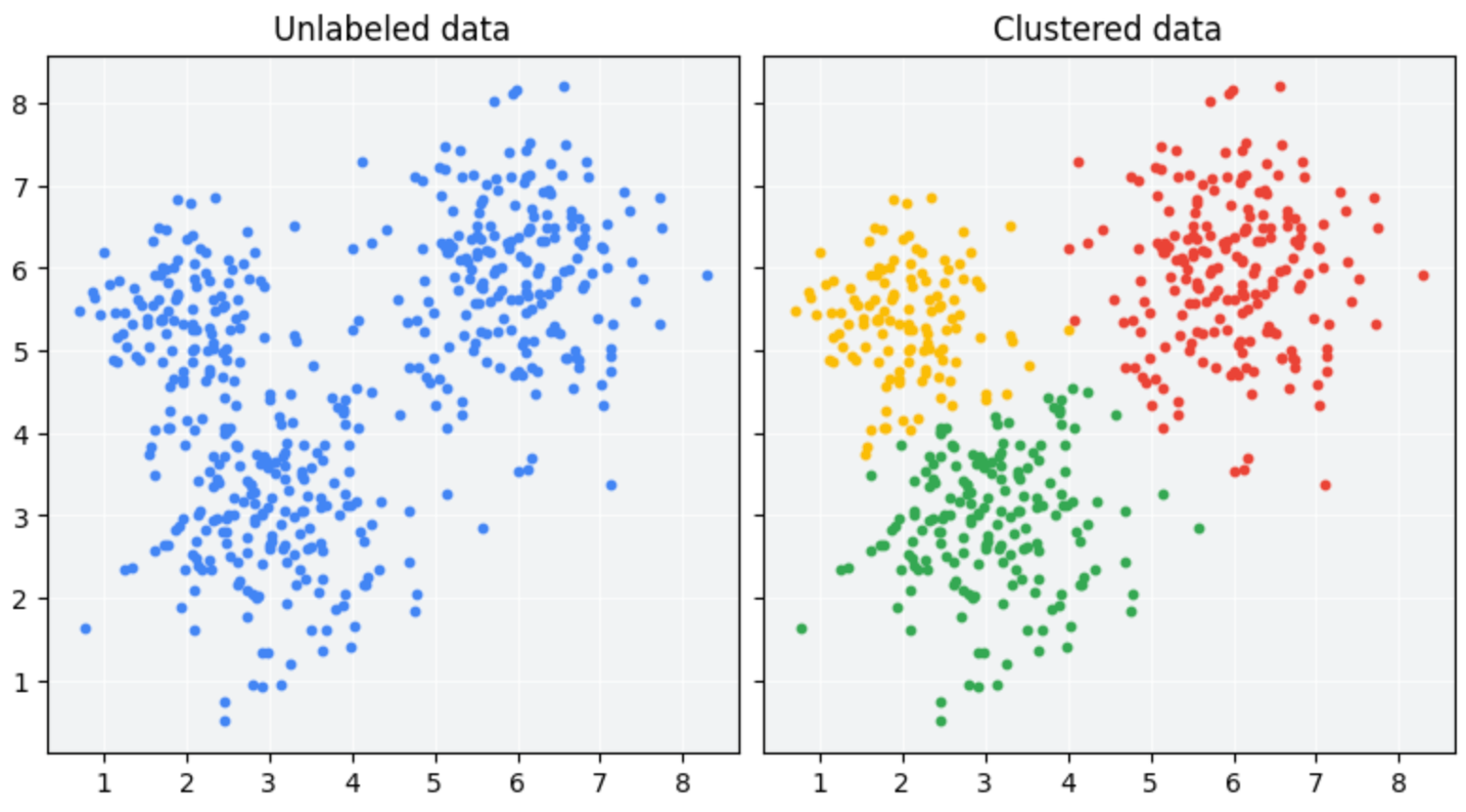

Figure 4

Unsupervised Learning

Figure 5

Supervised Learning

Figure 6

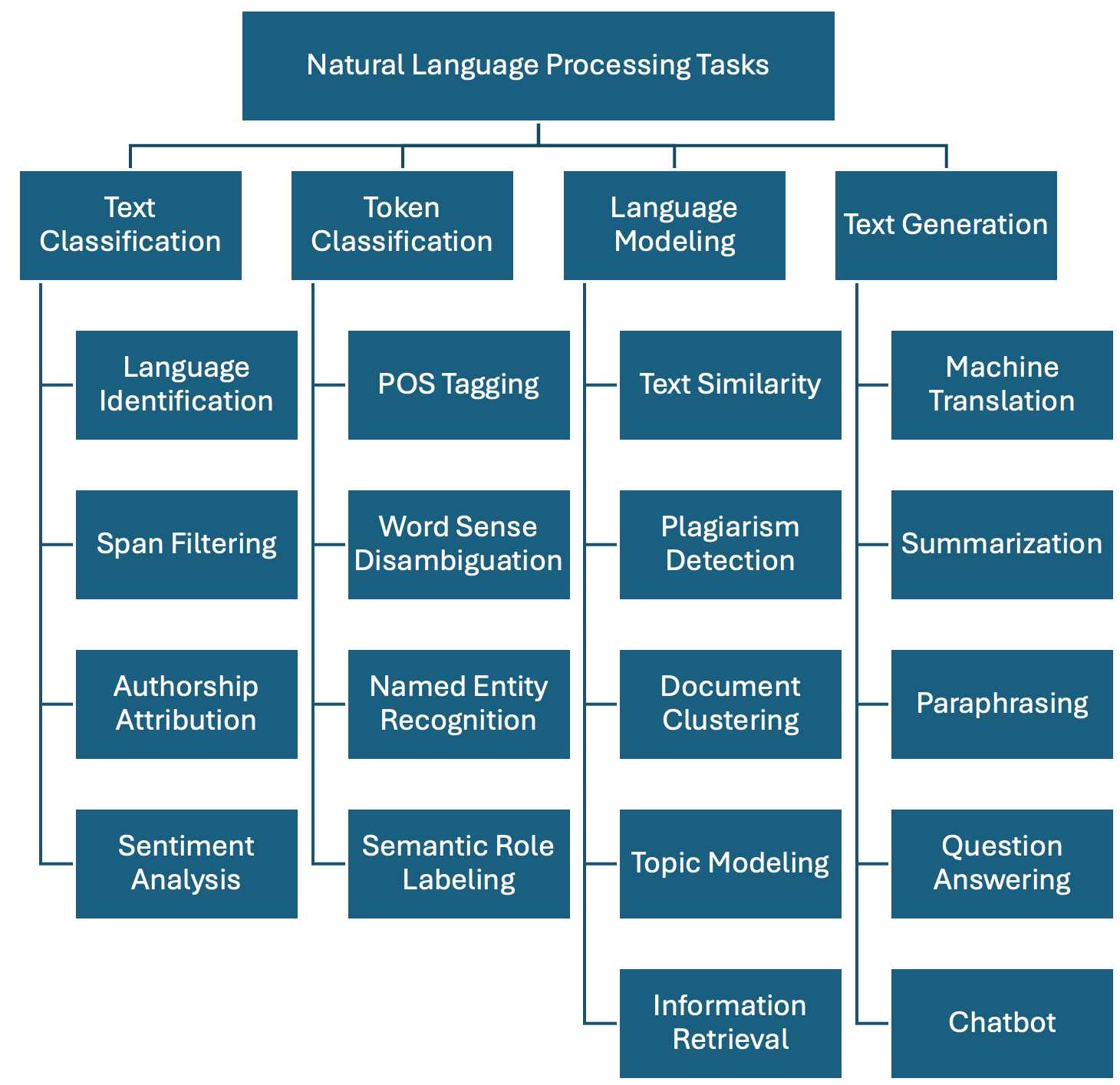

A taxonomy of NLP Tasks

Figure 7

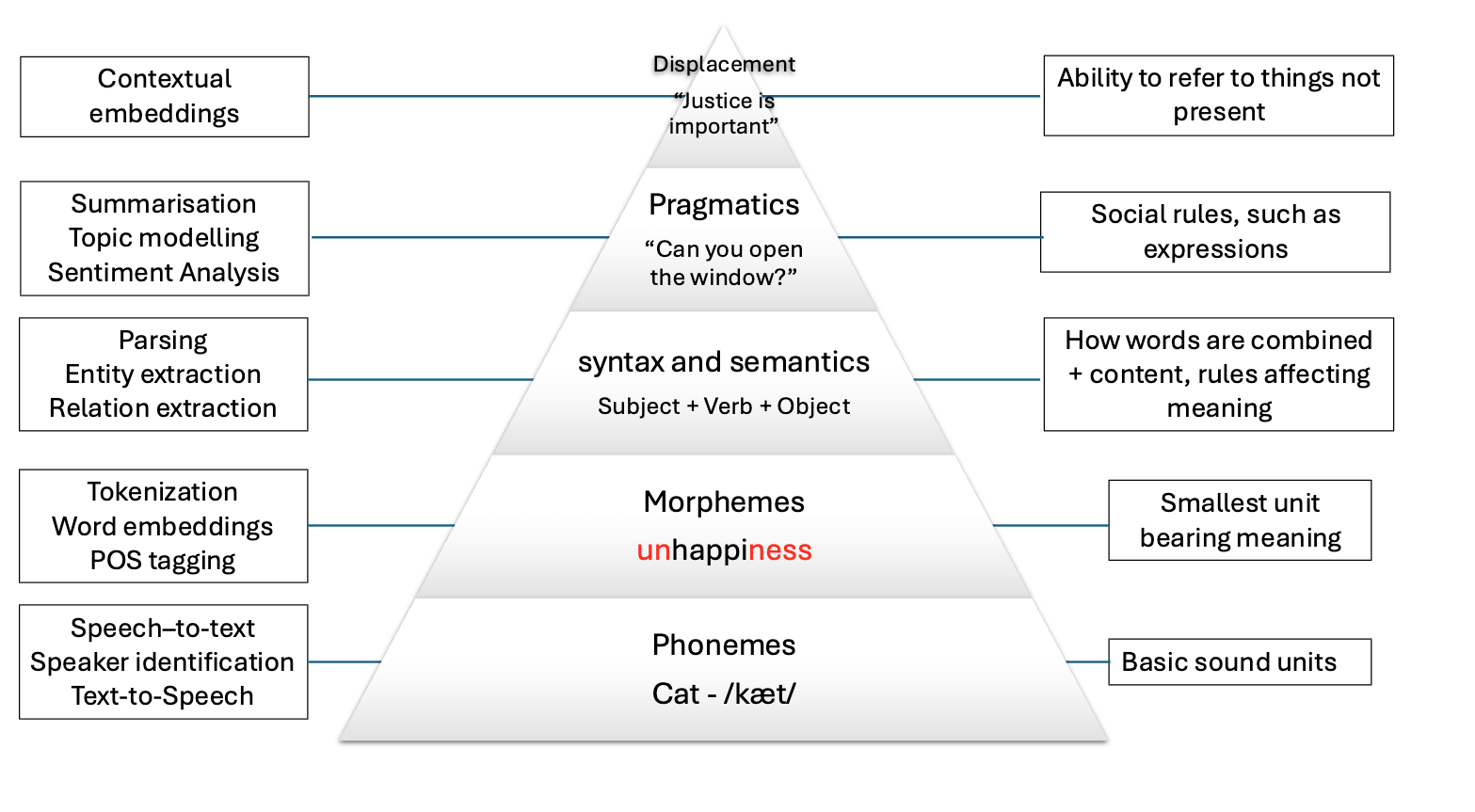

Levels of Language

Figure 8

Diagram showing building blocks of language

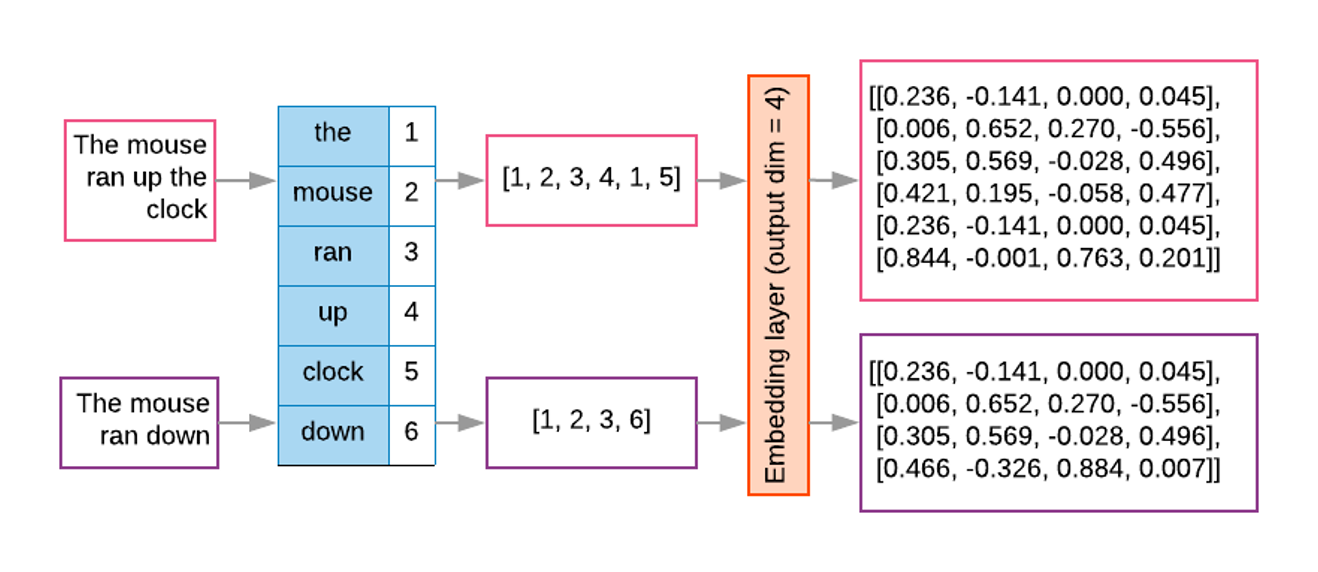

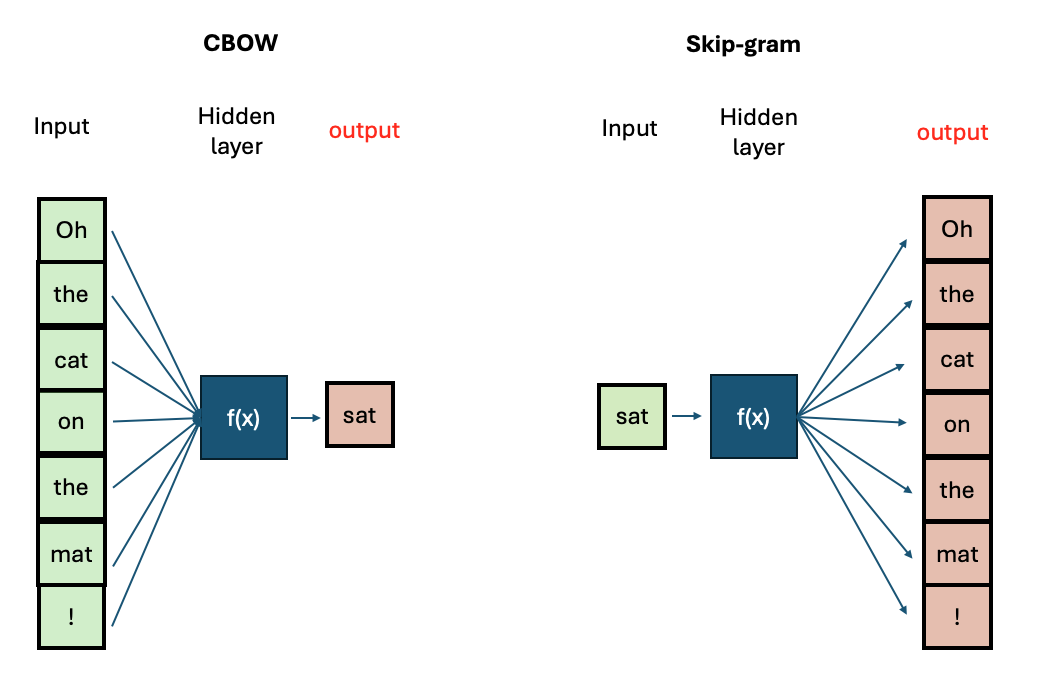

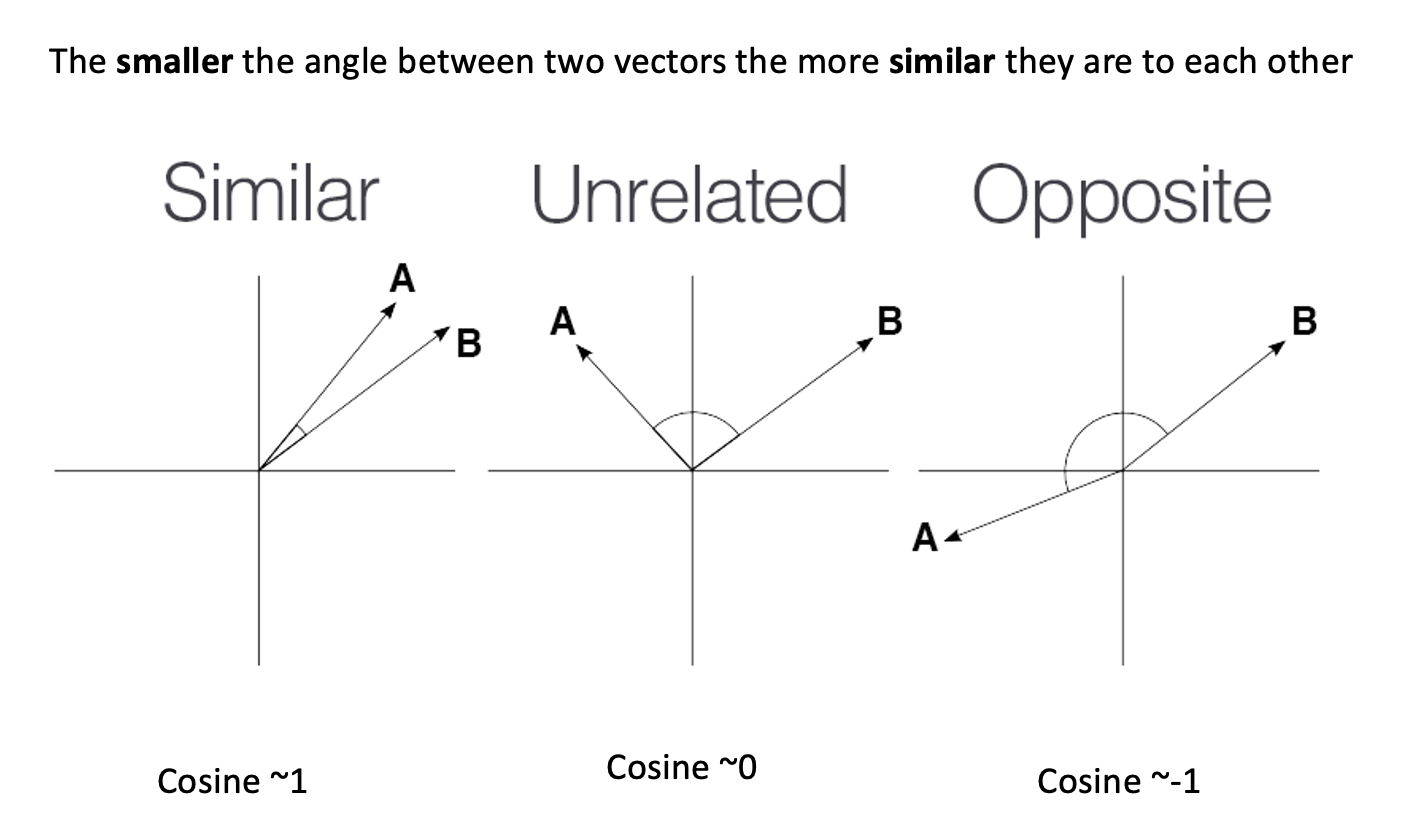

From words to vectors

Figure 1

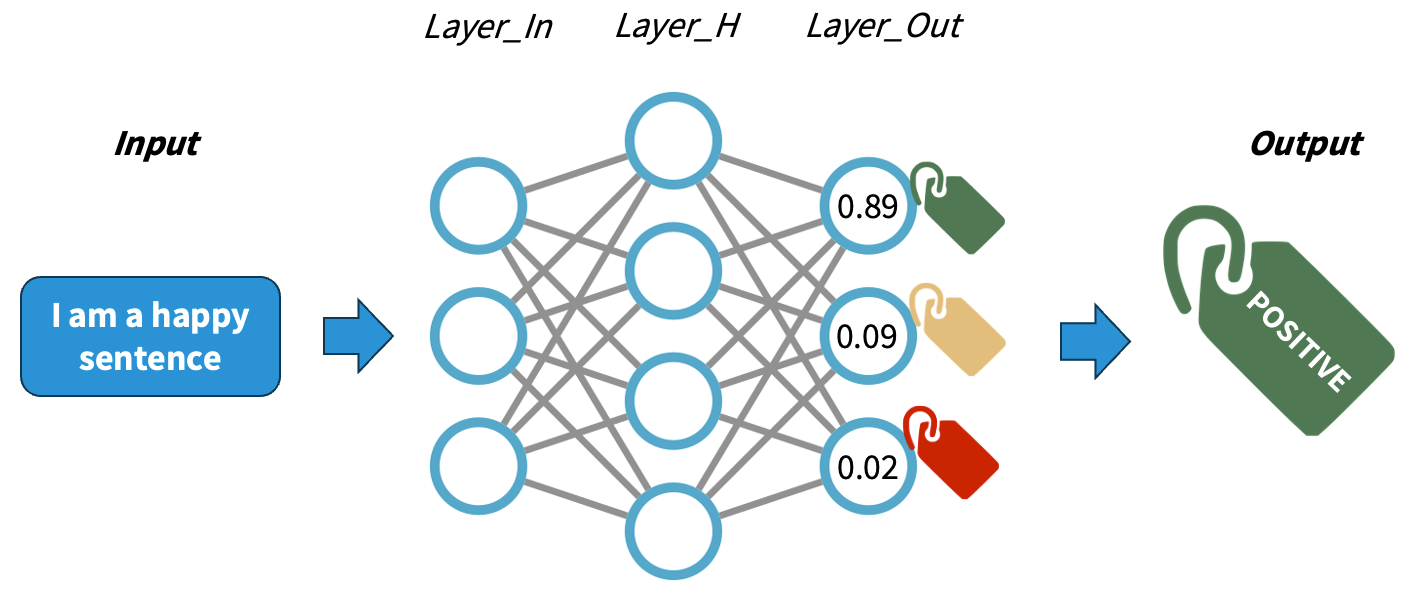

After seeing thousands of examples, each layer represents different “features” that maximize the success of the task, but they are not human-readable. The last layer acts as a classifier and outputs the most likely label given the input.
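The role of the last layer can be sketched in a few lines. This is a minimal illustration, not the network shown in the figure: it assumes the final layer produces one raw score (logit) per label and that a softmax turns those scores into probabilities. The label names and score values are hypothetical.

```python
import math

def softmax(logits):
    """Convert raw scores into probabilities that sum to 1."""
    m = max(logits)  # subtract the max for numerical stability
    exps = [math.exp(x - m) for x in logits]
    total = sum(exps)
    return [e / total for e in exps]

def classify(logits, labels):
    """Return the most likely label and the full probability list."""
    probs = softmax(logits)
    best = max(range(len(labels)), key=lambda i: probs[i])
    return labels[best], probs

# Hypothetical labels and final-layer scores, for illustration only.
labels = ["positive", "negative"]
logits = [2.0, 0.5]

label, probs = classify(logits, labels)
print(label, [round(p, 3) for p in probs])
```

The earlier layers would be responsible for producing the scores; only the final step of choosing a label is shown here.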

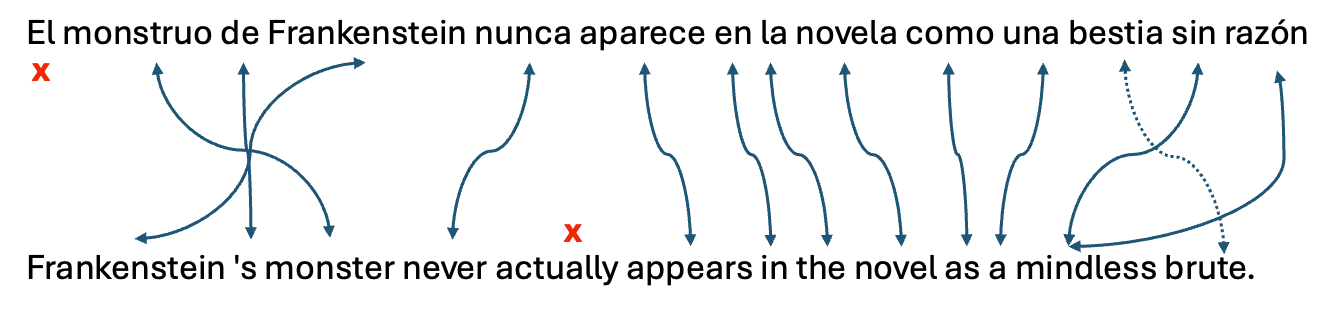

Figure 2

Figure 3

Figure 4

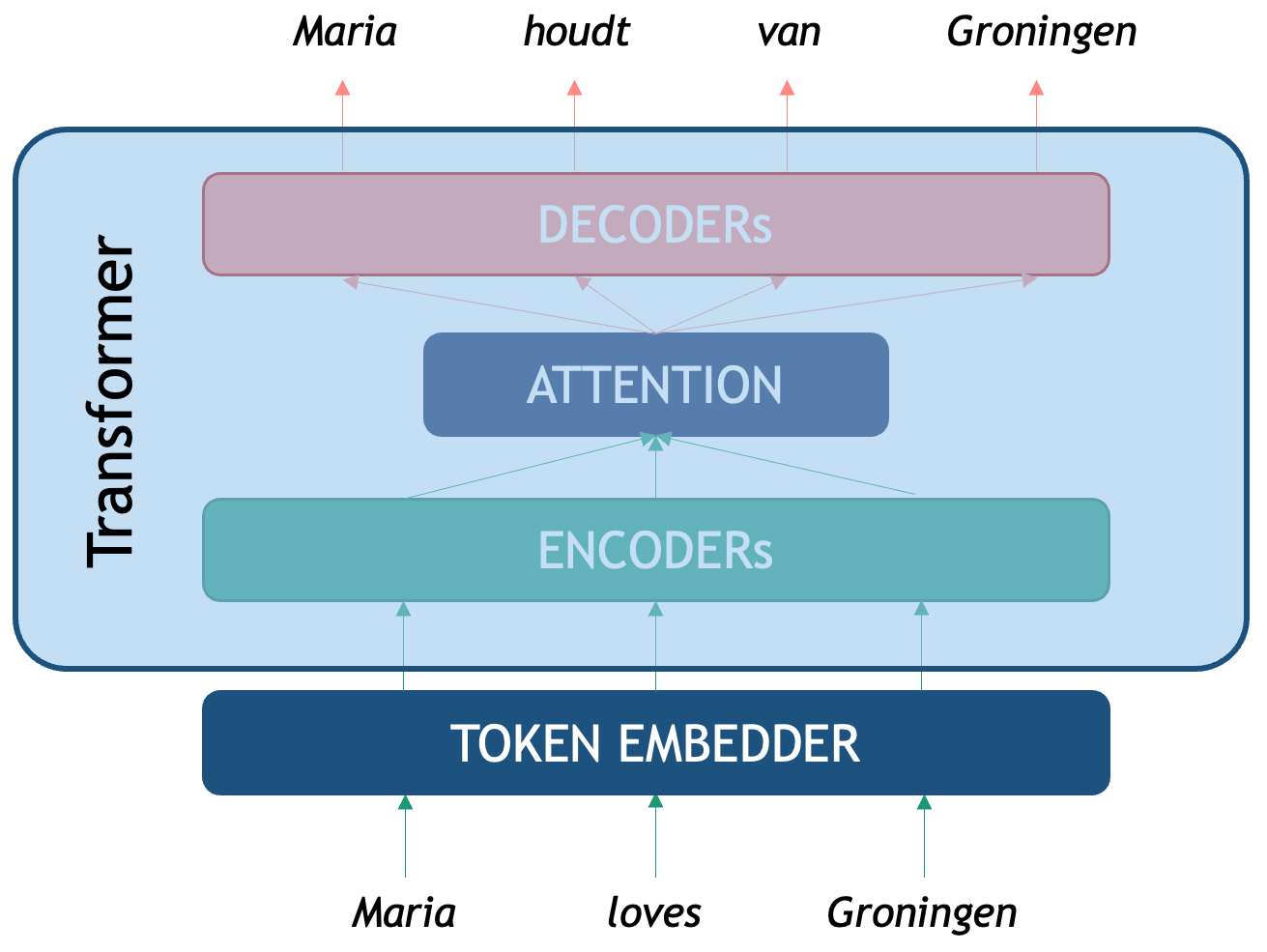

Transformers: BERT and Beyond

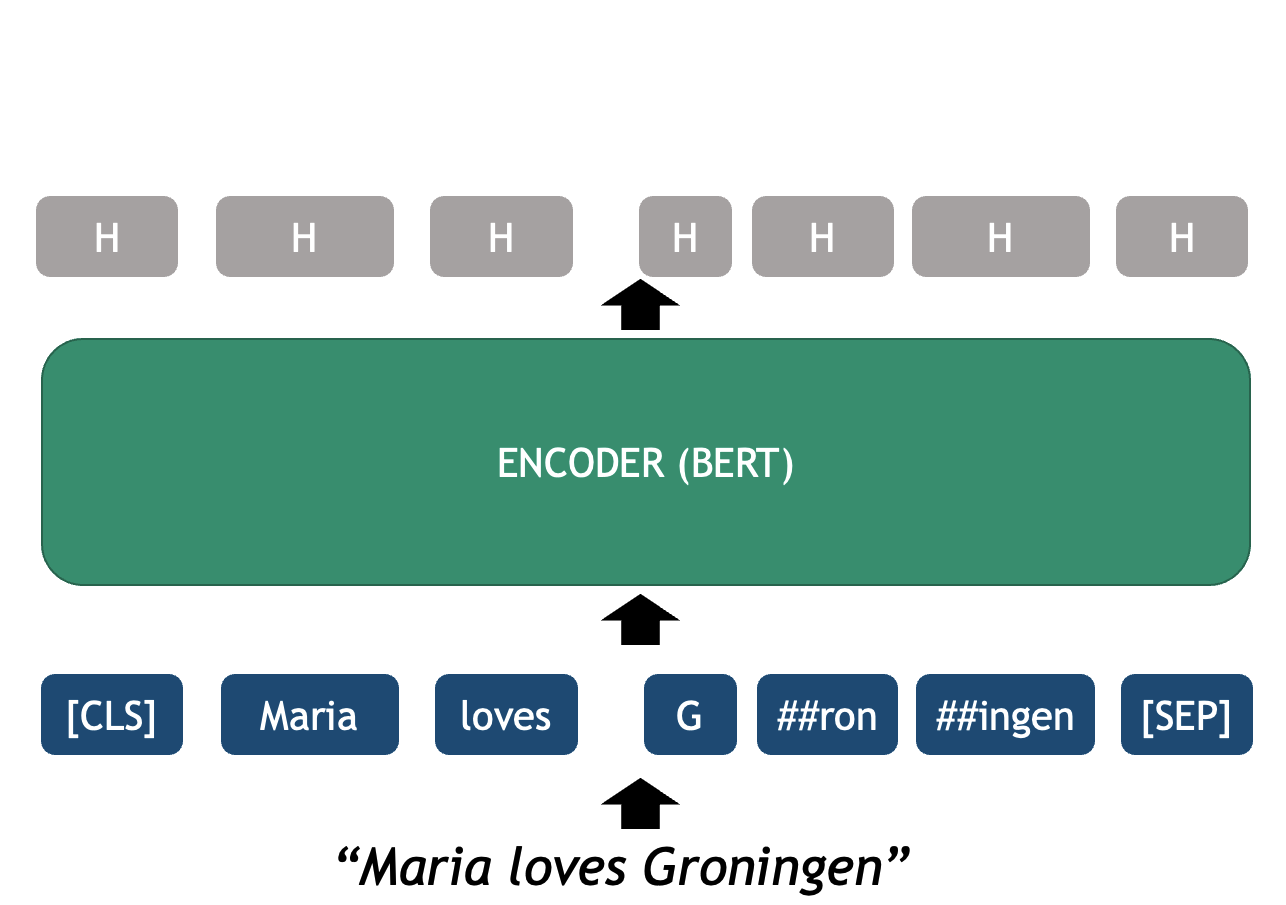

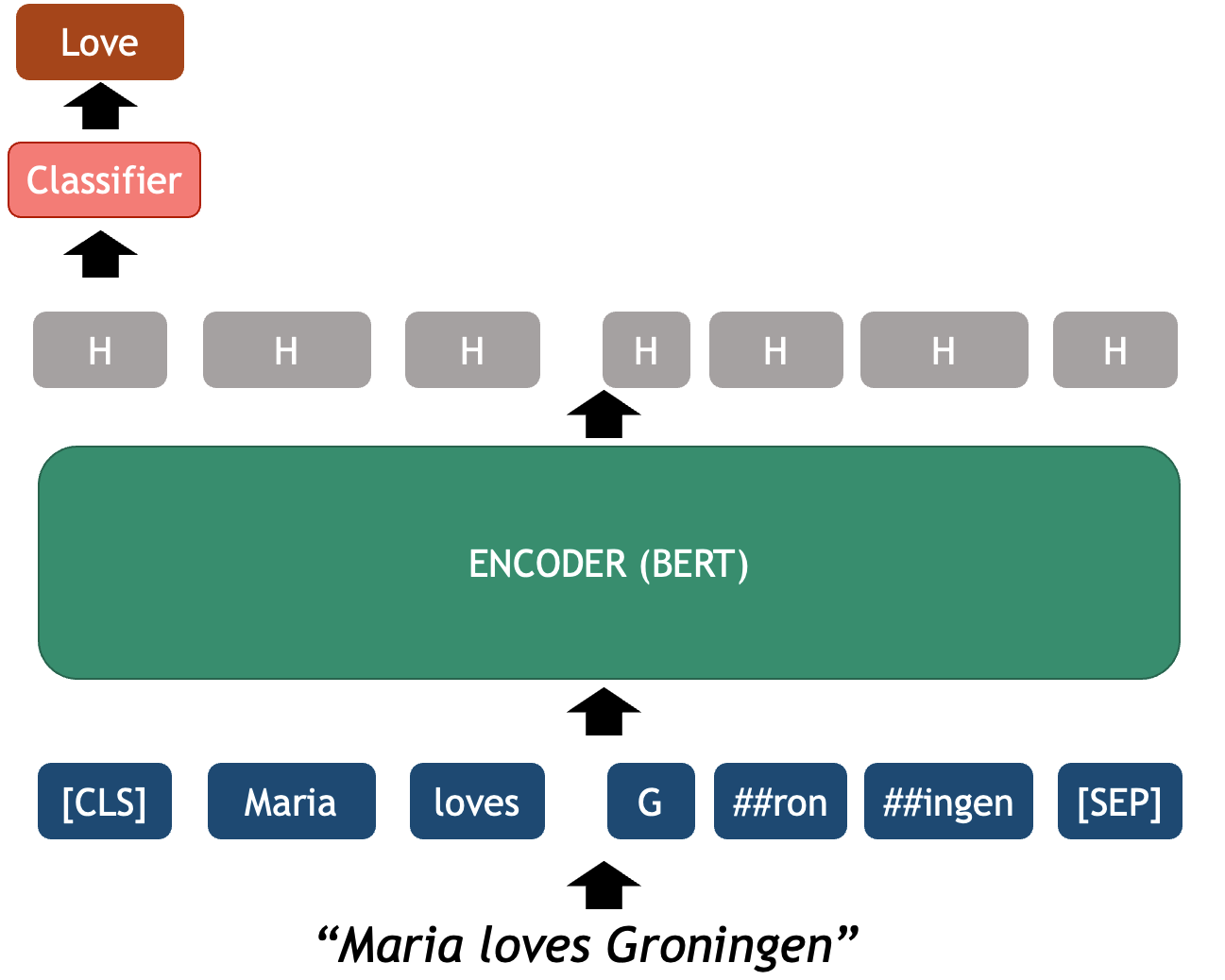

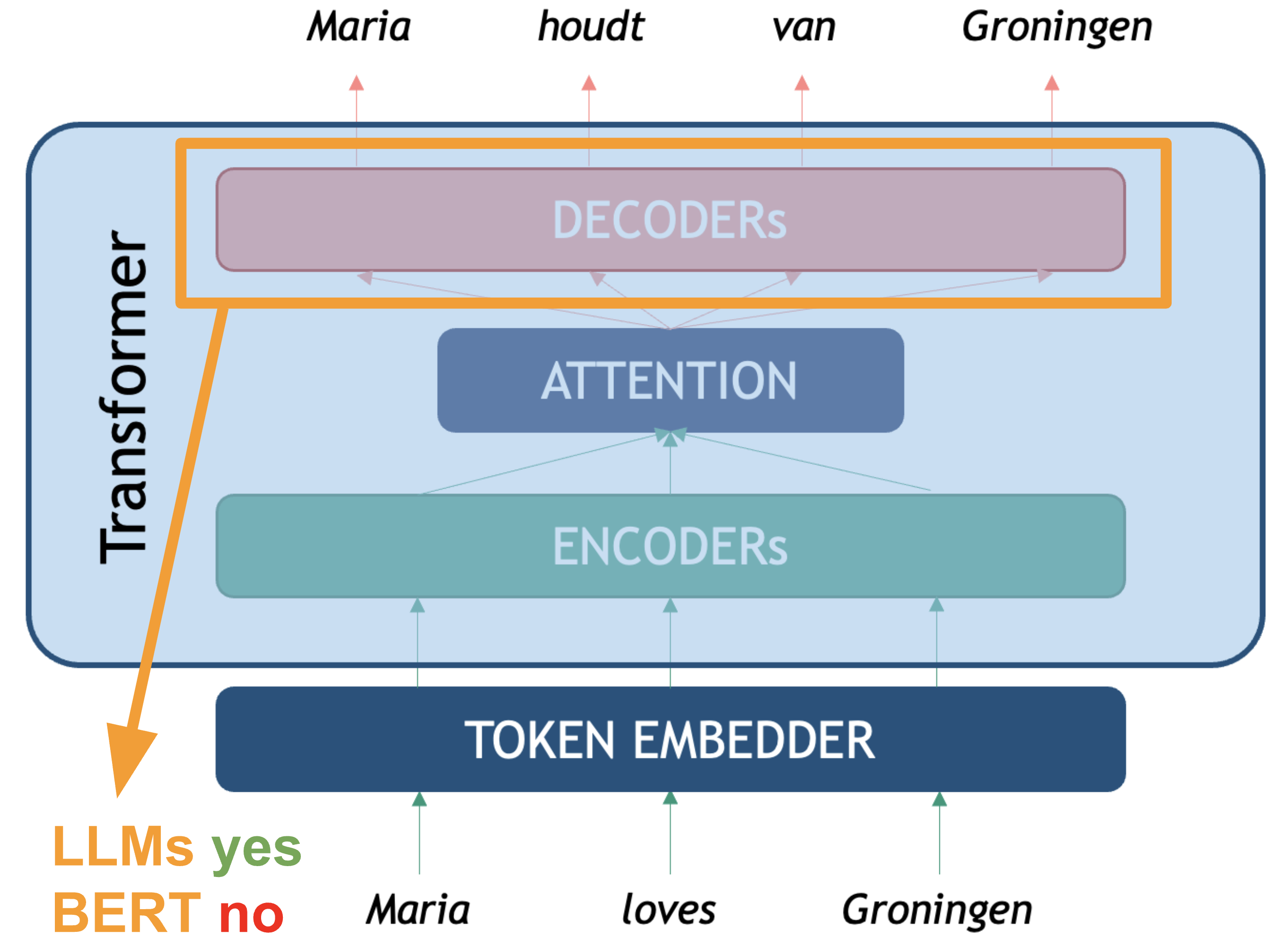

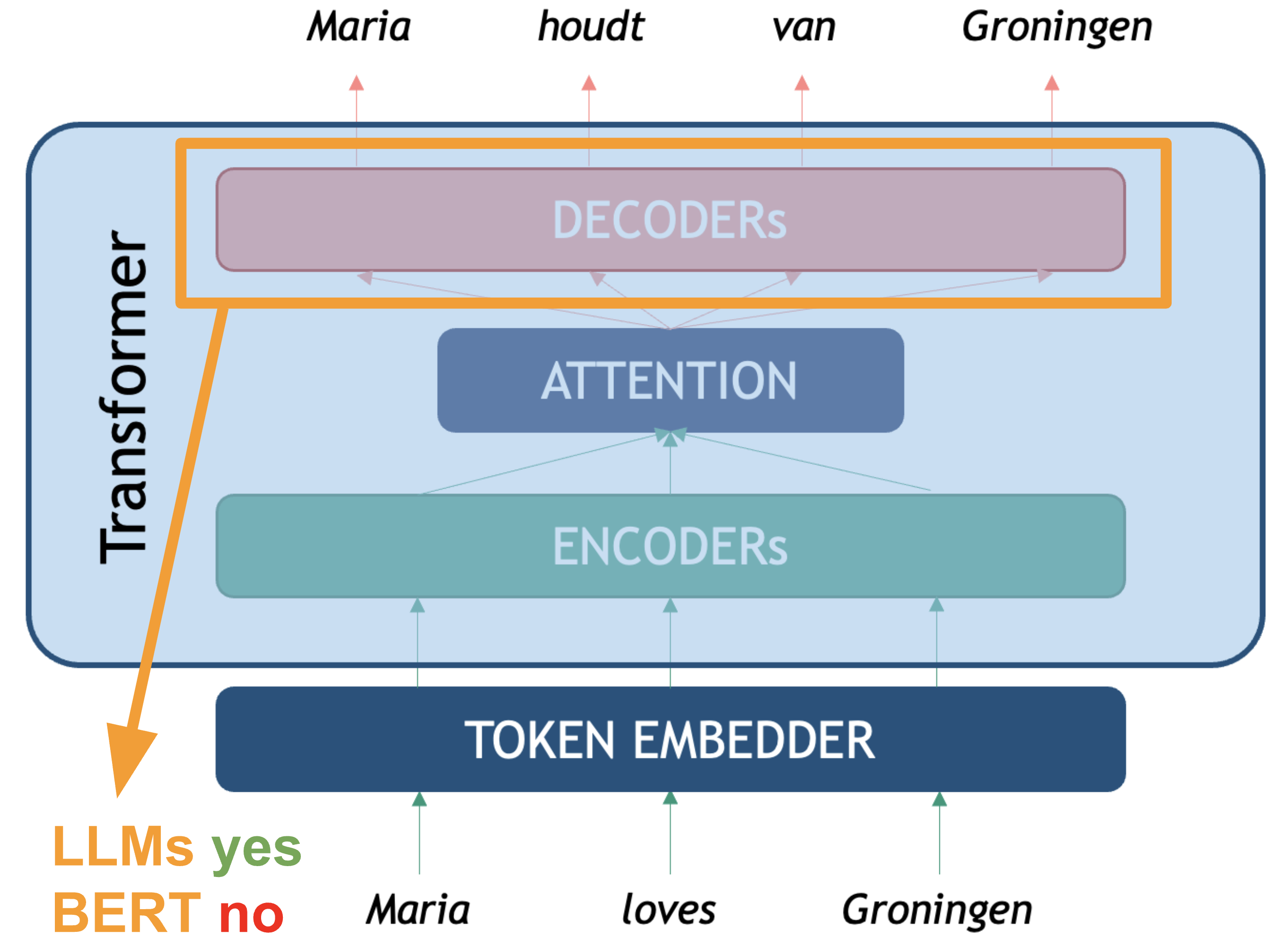

Figure 1

Transformer Architecture

Figure 2

Figure 3

BERT Architecture

Figure 4

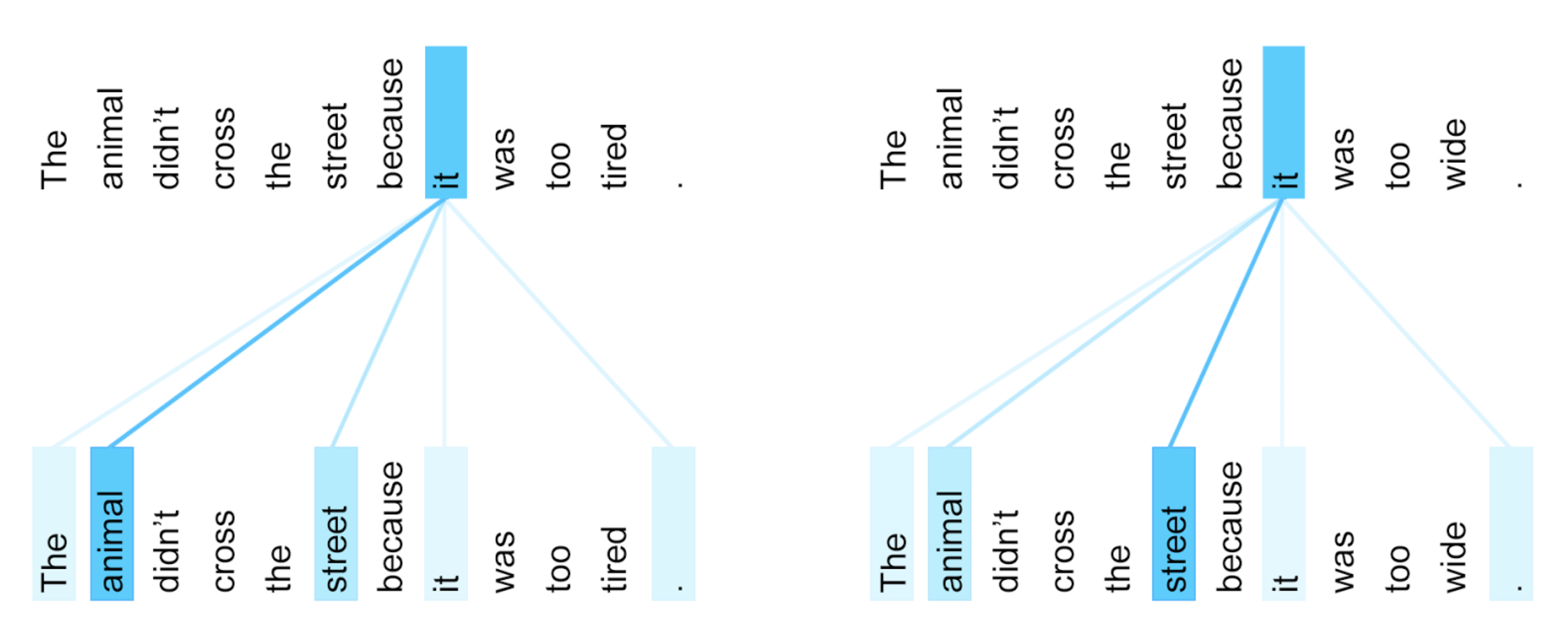

The Encoder Self-Attention Mechanism

Figure 5

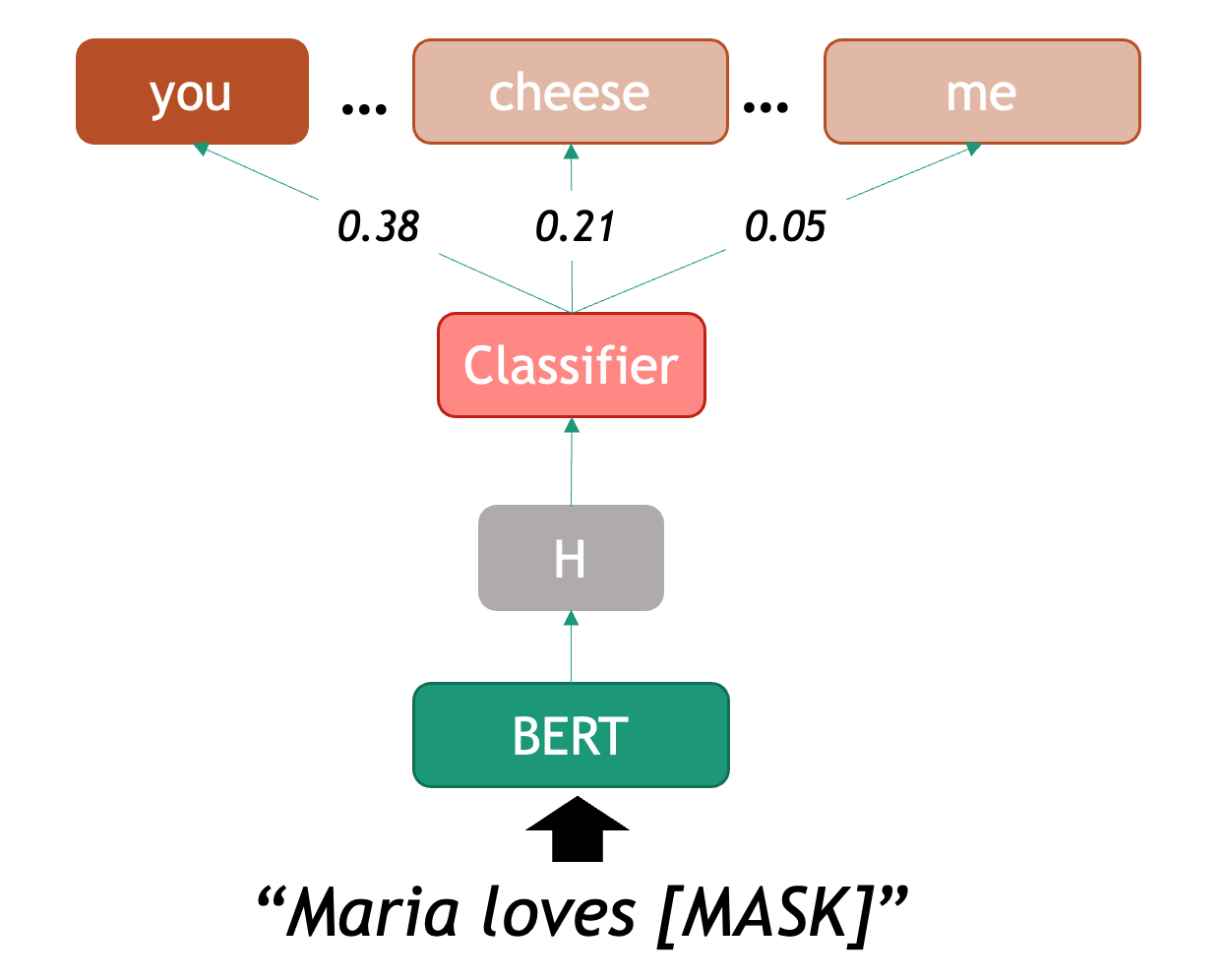

BERT Language Modeling

Figure 6

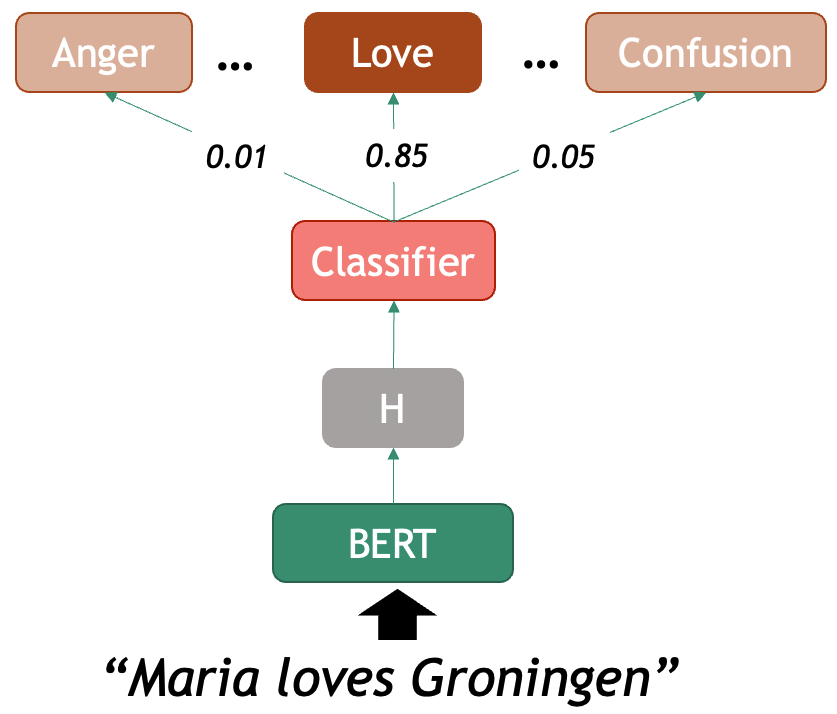

BERT as an Emotion Classifier

Figure 7

BERT as an Emotion Classifier

Figure 8

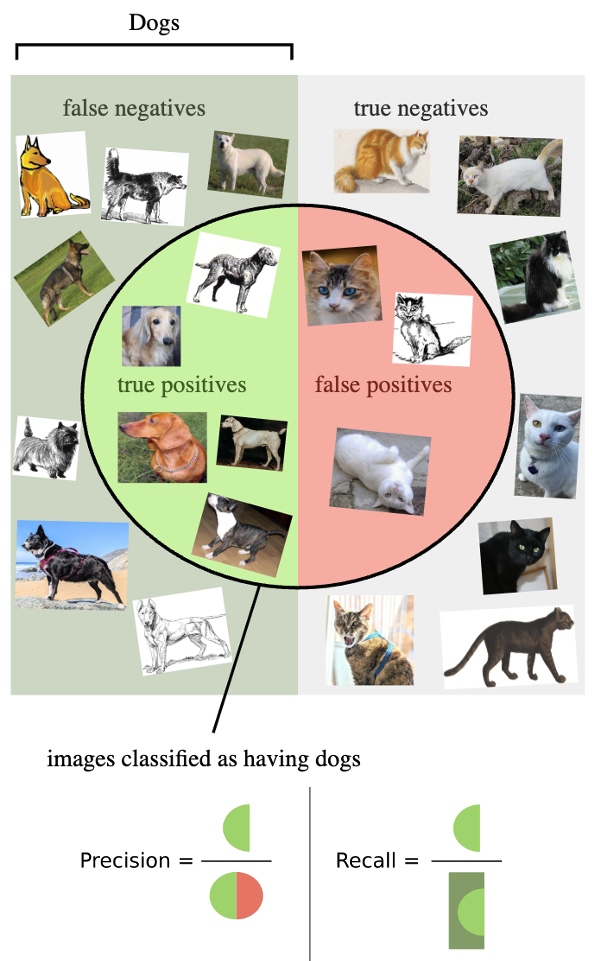

An example for a classifier of Cats and Dogs.

Source: Wikipedia

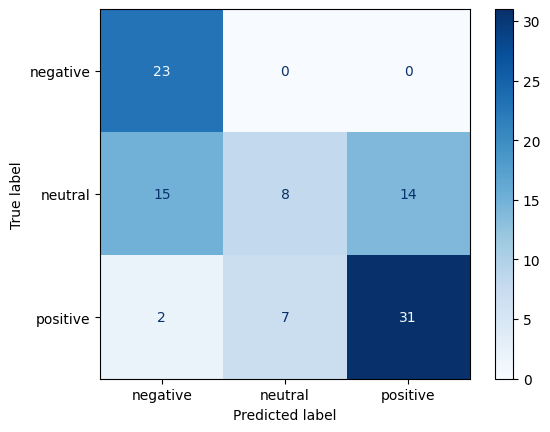

Figure 9

Figure 10

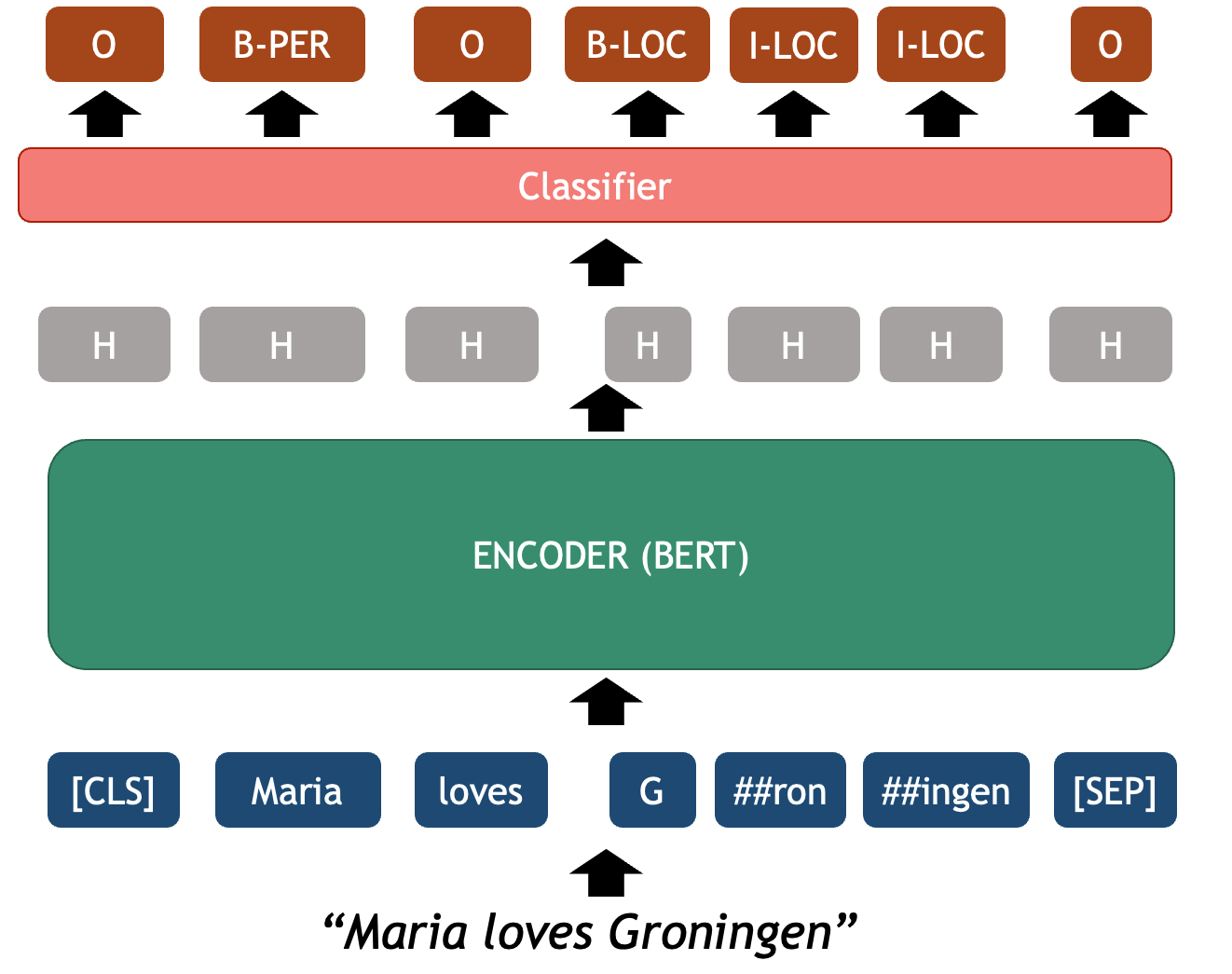

BERT as an NER Classifier

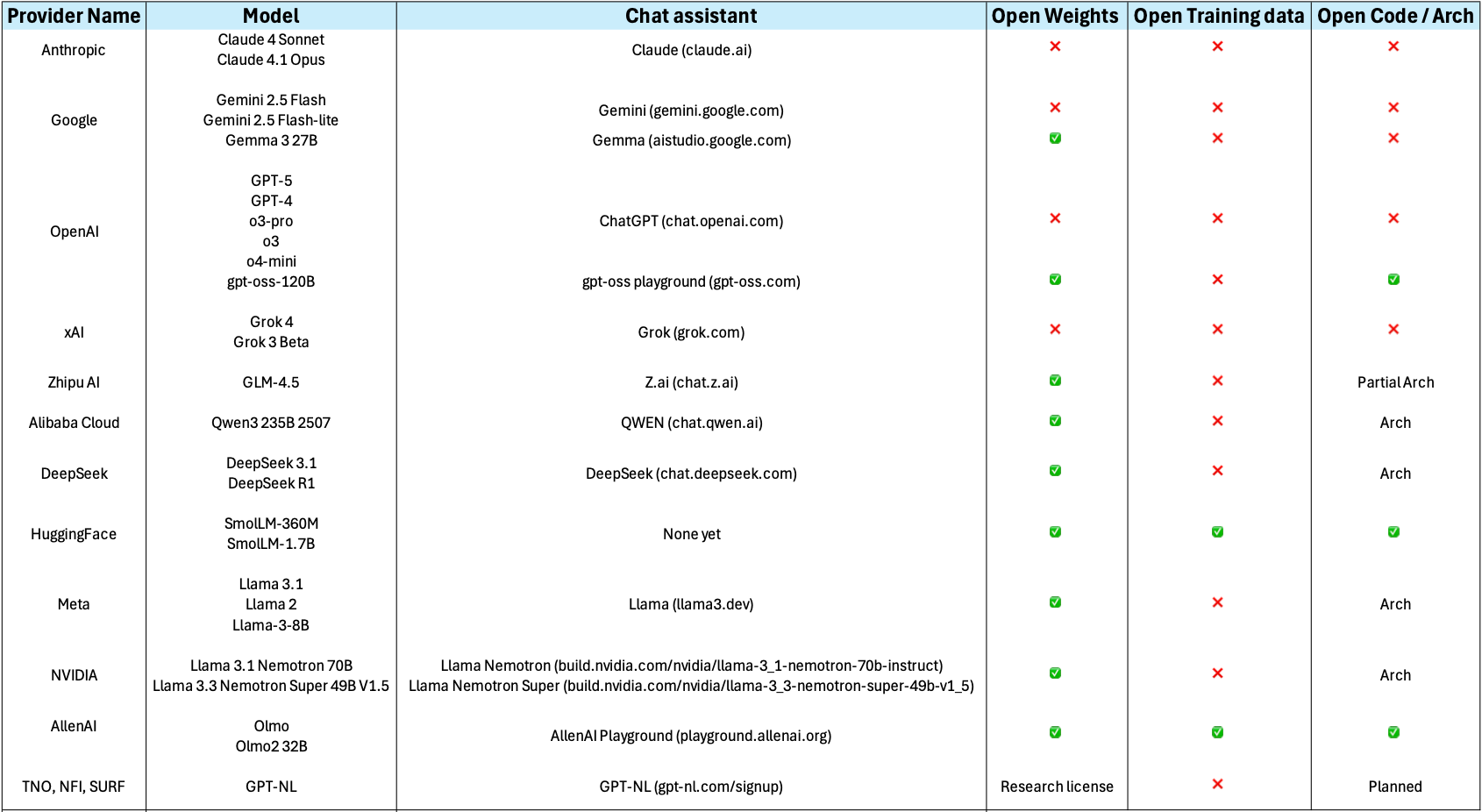

Using large language models

Figure 1

Figure 2

Figure 3

Figure 4

Figure 5

Figure 6

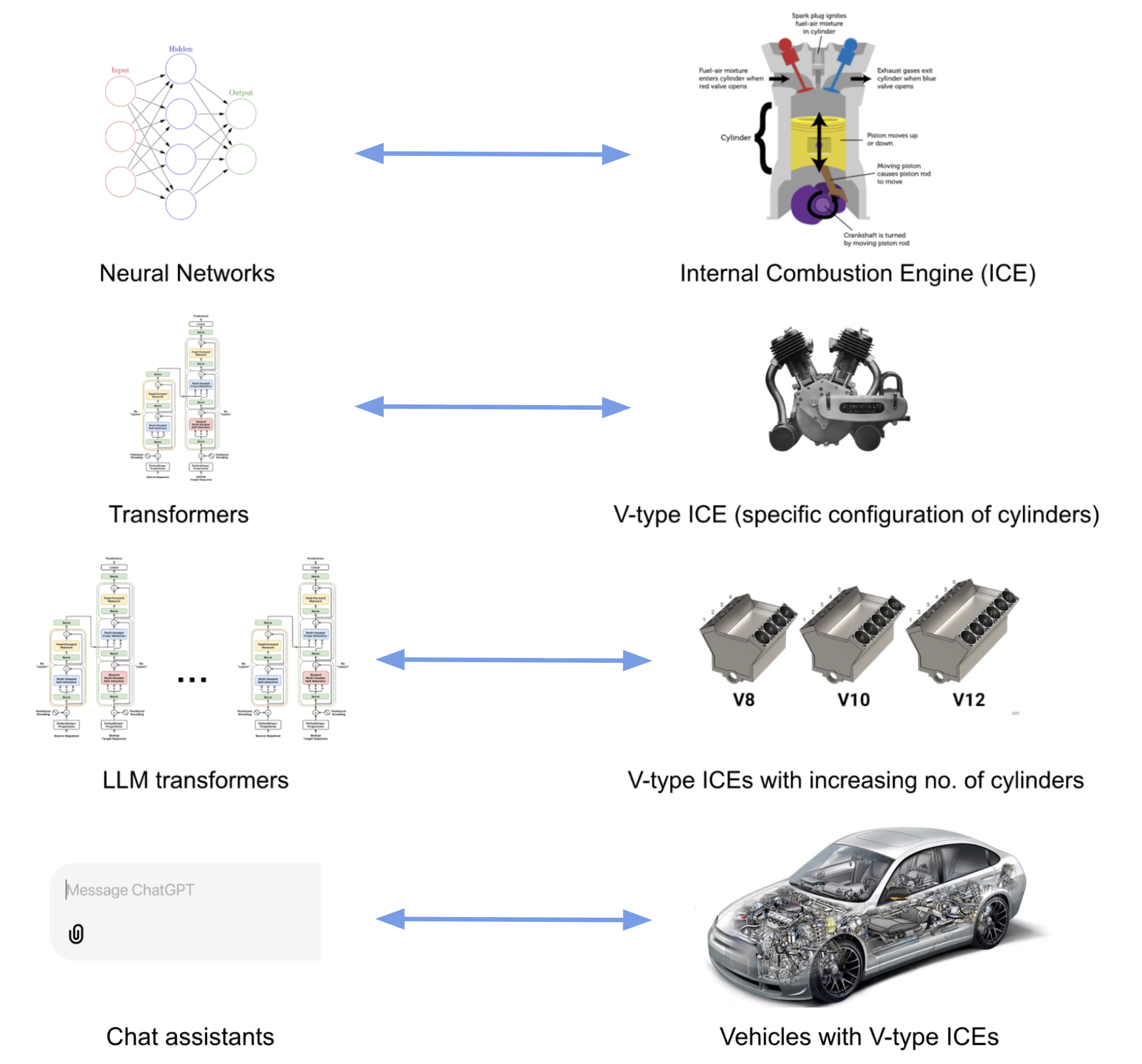

Generative LLMs correspond to the Decoder component of the Transformer architecture
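One property that distinguishes the decoder is causal (masked) self-attention: each position may attend only to itself and to earlier positions, which is what lets the model generate text one token at a time. The sketch below shows just that masking rule, with hypothetical helper names and a toy token list. It is not a full attention implementation.

```python
def causal_mask(n):
    """n x n boolean mask: position i may attend to position j only if j <= i."""
    return [[j <= i for j in range(n)] for i in range(n)]

def visible_tokens(tokens):
    """For each position, list the tokens the decoder is allowed to see."""
    mask = causal_mask(len(tokens))
    return [[t for t, ok in zip(tokens, row) if ok] for row in mask]

# Toy input, for illustration only.
tokens = ["The", "cat", "sat"]
context = visible_tokens(tokens)

for tok, ctx in zip(tokens, context):
    print(f"{tok!r} can attend to {ctx}")
```

An encoder such as BERT would use no such mask, so every token could attend to every other token in the input.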