Basics of Testing

Overview

Teaching: 5 min

Exercises: 0 minQuestions

Why test?

Objectives

Understand the place of testing in a scientific workflow.

Understand that testing has many forms.

The first step toward getting the right answers from our programs is to assume that mistakes will happen and to guard against them. This is called defensive programming and the most common way to do it is to add alarms and tests into our code so that it checks itself.

Testing should be a seamless part of scientific software development process. This is analogous to experiment design in the experimental science world:

- At the beginning of a new project, tests can be used to help guide the overall architecture of the project.

- The act of writing tests can help clarify how the software should be perform when you are done.

- In fact, starting to write the tests before you even write the software might be advisable. (Such a practice is called test-driven development, which we will discuss in greater detail later in the lesson.)

There are many ways to test software, such as:

- Assertions

- Exceptions

- Unit Tests

- Regresson Tests

- Integration Tests

Exceptions and Assertions: While writing code, exceptions and assertions

can be added to sound an alarm as runtime problems come up. These kinds of

tests, are embedded in the software iteself and handle, as their name implies,

exceptional cases rather than the norm.

Unit Tests: Unit tests investigate the behavior of units of code (such as functions, classes, or data structures). By validating each software unit across the valid range of its input and output parameters, tracking down unexpected behavior that may appear when the units are combined is made vastly simpler.

Regression Tests: Regression tests defend against new bugs, or regressions, which might appear due to new software and updates.

Integration Tests: Integration tests check that various pieces of the software work together as expected.

Key Points

Tests check whether the observed result, from running the code, is what was expected ahead of time.

Tests should ideally be written before the code they are testing is written, however some tests must be written after the code is written.

Assertions and exceptions are like alarm systems embedded in the software, guarding against exceptional bahavior.

Unit tests try to test the smallest pieces of code possible, usually functions and methods.

Integration tests make sure that code units work together properly.

Regression tests ensure that everything works the same today as it did yesterday.

Assertions

Overview

Teaching: 10 min

Exercises: 0 minQuestions

How can we compare observed and expected values?

Objectives

Assertions are one line tests embedded in code.

Assertions can halt execution if something unexpected happens.

Assertions are the building blocks of tests.

Assertions are the simplest type of test. They are used as a tool for bounding acceptable behavior during runtime. The assert keyword in python has the following behavior:

>>> assert True == False

Traceback (most recent call last):

File "<stdin>", line 1, in <module>

AssertionError

>>> assert True == True

That is, assertions halt code execution instantly if the comparison is false. It does nothing at all if the comparison is true. These are therefore a very good tool for guarding the function against foolish (e.g. human) input:

def mean(num_list):

assert len(num_list) != 0

return sum(num_list)/len(num_list)

The advantage of assertions is their ease of use. They are rarely more than one line of code. The disadvantage is that assertions halt execution indiscriminately and the helpfulness of the resulting error message is usually quite limited.

Also, input checking may require decending a rabbit hole of exceptional cases. What happens when the input provided to the mean function is a string, rather than a list of numbers?

- Open a Jupyter Notebook

- Create the following function:

def mean(num_list):

return sum(num_list)/len(num_list)

- In the function, insert an assertion that checks whether the input is actually a list.

Hint

Hint: Use the isinstance function.

Testing Near Equality

Assertions are also helpful for catching abnormal behaviors, such as those that arise with floating point arithmetic. Using the assert keyword, how could you test whether some value is almost the same as another value?

- My package, mynum, provides the number a.

- Use the

assertkeyword to check whether the number a is greater than 2.- Use the

assertkeyword to check that a is equal to 2 within an error of 0.003.

from mynum import a

# greater than 2 assertion here

# 0.003 assertion here

NumPy

The NumPy numerical computing library has a built-in function assert_allclose

for comparing numbers within a tolerance:

from numpy.testing import assert_allclose

from mynum import a

assert_allclose(a, 2, atol=0.003, rtol=0)

Key Points

Assertions are one line tests embedded in code.

The

assertkeyword is used to set an assertion.Assertions halt execution if the argument is false.

Assertions do nothing if the argument is true.

The

numpy.testingmodule provides tools numeric testing.Assertions are the building blocks of tests.

Exceptions

Overview

Teaching: 10 min

Exercises: 0 minQuestions

How do I handle unusual behavior while the code runs?

Objectives

Understand that exceptions are effectively specialized runtime tests

Learn when to use exceptions and what exceptions are available

Exceptions are more sophisticated than assertions. They are the standard error messaging system in most modern programming languages. Fundamentally, when an error is encountered, an informative exception is ‘thrown’ or ‘raised’.

For example, instead of the assertion in the case before, an exception can be used.

def mean(num_list):

if len(num_list) == 0:

raise Exception("The algebraic mean of an empty list is undefined. "

"Please provide a list of numbers")

else:

return sum(num_list)/len(num_list)

Once an exception is raised, it will be passed upward in the program scope. An exception be used to trigger additional error messages or an alternative behavior. rather than immediately halting code execution, the exception can be ‘caught’ upstream with a try-except block. When wrapped in a try-except block, the exception can be intercepted before it reaches global scope and halts execution.

To add information or replace the message before it is passed upstream, the try-catch block can be used to catch-and-reraise the exception:

def mean(num_list):

try:

return sum(num_list)/len(num_list)

except ZeroDivisionError as detail :

msg = "The algebraic mean of an empty list is undefined. Please provide a list of numbers."

raise ZeroDivisionError(detail.__str__() + "\n" + msg)

Alternatively, the exception can simply be handled intelligently. If an alternative behavior is preferred, the exception can be disregarded and a responsive behavior can be implemented like so:

def mean(num_list):

try:

return sum(num_list)/len(num_list)

except ZeroDivisionError :

return 0

If a single function might raise more than one type of exception, each can be caught and handled separately.

def mean(num_list):

try:

return sum(num_list)/len(num_list)

except ZeroDivisionError :

return 0

except TypeError as detail :

msg = "The algebraic mean of an non-numerical list is undefined.\

Please provide a list of numbers."

raise TypeError(detail.__str__() + "\n" + msg)

What else could go wrong?

- Think of some other type of exception that could be raised by the try block.

- Guard against it by adding an except clause.

- Use the mean function in three different ways, so that you cause each exceptional case.

Exceptions have the advantage of being simple to include and powerfully helpful to the user. However, not all behaviors can or should be found with runtime exceptions. Most behaviors should be validated with unit tests.

Key Points

Exceptions are effectively specialized runtime tests

Exceptions can be caught and handled with a try-except block

Many built-in Exception types are available

Design by Contract

Overview

Teaching: 5 min

Exercises: 10 minQuestions

What is Design by Contract?

Objectives

Learn to use Python contracts, PyContracts.

Learn to define simple and complicated contracts.

Learn about pre-, post- and invariant- conditions of a contract.

In Design by Contract, the interaction between an application and functions in a library is managed, metaphorically, by a contract. A contract for a function typically involves three different types of requirements.

- Pre-conditions: Things that must be true before a function begins its work.

- Post-conditions: Things that are guaranteed to be true after a function finishes its work.

- Invariant-conditions: Things that are guaranteed not to change as a function does its work.

In the examples here, we use PyContracts which uses Python decorator notation. Note: In the current implementation of PyContracts, only pre- and post-conditions are implemented. Invariants, if needed, may be handled using ordinary assertions. Finally, to simplify the examples here, the following imports are assumed…

from math import sqrt, log

from contracts import contract, new_contract

To demonstrate the use of contracts, in the example here, we implement our own version of an integer square root

function for perfect squares, called perfect_sqrt. We define a contract that indicates the caller is required

to pass an integer value greater than or equal to zero. This is an example of a pre-condition. Next, the function

is required to return an integer greater than or equal to zero. This is an example of a post-condition.

@contract(x='int,>=0',returns='int,>=0')

def perfect_sqrt(x):

retval = sqrt(x)

iretval = int(retval)

return iretval if iretval == retval else retval

Now, lets see what happens when we use this function to compute square roots.

>>> perfect_sqrt(4)

2

>>> perfect_sqrt(81)

9

Values of 4 and 81 are both integers. So, in these cases the caller has obeyed the pre-conditions of the contract. In addition, because both 4 and 81 are perfect squares, the function correctly returns their integer square root. So, the funtion has obeyed the post-conditions of the contract.

Now, lets see what happens when the caller fails to obey the pre-conditions of the contract by passing a negative number.

>>> perfect_sqrt(-4)

Traceback (most recent call last):

File "<stdin>", line 1, in <module>

File "<decorator-gen-2>", line 2, in perfect_sqrt

File "/Library/Python/2.7/site-packages/PyContracts/contracts/main.py", line 253, in contracts_checker

raise e

contracts.interface.ContractNotRespected: Breach for argument 'x' to perfect_sqrt().

Condition -4 >= 0 not respected

checking: >=0 for value: Instance of <type 'int'>: -4

checking: int,>=0 for value: Instance of <type 'int'>: -4

Variables bound in inner context:

An exception is raised indicating a failure to obey the pre-condition for passing a value greather than or equal to zero. Next, lets see what happens when the function cannot obey the post-condition of the contract.

>>> perfect_sqrt(83)

Traceback (most recent call last):

File "<stdin>", line 1, in <module>

File "<decorator-gen-2>", line 2, in perfect_sqrt

File "/Library/Python/2.7/site-packages/PyContracts/contracts/main.py", line 264, in contracts_checker

raise e

contracts.interface.ContractNotRespected: Breach for return value of perfect_sqrt().

.

.

.

checking: Int|np_scalar_int|np_scalar,array(int) for value: Instance of <type 'float'>: 9.1104335791443

checking: $(Int|np_scalar_int|np_scalar,array(int)) for value: Instance of <type 'float'>: 9.1104335791443

checking: int for value: Instance of <type 'float'>: 9.1104335791443

checking: int,>=0 for value: Instance of <type 'float'>: 9.1104335791443

Variables bound in inner context:

For the value of 83, although the caller obeyed the pre-conditions of the contract, the function does not return an integer value. It fails the post-condition and an exception is raised.

Extending Contracts

Sometimes, the simple built-in syntax for defining contracts is not sufficient. In this case, contracts can be extended by defining a function that implements a new contract. For example, number theory tells us that all perfect squares end in a digit of 1,4,5,6, or 9 or end in an even number of zero digits. We can define a new contract that checks these conditions.

@new_contract

def ends_ok(x):

ends14569 = x%10 in (1,4,5,6,9)

ends00 = int(round((log(x,10)))) % 2 == 0

if ends14569 or ends00:

return True

raise ValueError("%s doesn't end in 1,4,5,6 or 9 or even number of zeros"%x)

We can then use this function, ends_ok, in a contract specification

@contract(x='int,ends_ok,>=0',returns='int,>=0')

def perfect_sqrt2(x):

return int(sqrt(x))

Let’s see what happens when we try to use this perfect_sqrt2 function on a number that ends in an

odd number of zeros.

>>> perfect_sqrt2(49)

7

>>> perfect_sqrt2(1000)

Traceback (most recent call last):

File "../foo.py", line 24, in <module>

print "Perfect square root of 1000 = %d"%perfect_sqrt2(1000)

File "<decorator-gen-3>", line 2, in perfect_sqrt2

File "/Library/Python/2.7/site-packages/PyContracts/contracts/main.py", line 253, in contracts_checker

raise e

contracts.interface.ContractNotRespected: Breach for argument 'x' to perfect_sqrt2().

1000 doesn't end in 1,4,5,6 or 9 or even number of zeros

checking: callable() for value: Instance of <type 'int'>: 1000

checking: ends_ok for value: Instance of <type 'int'>: 1000

checking: int,ends_ok,>=0 for value: Instance of <type 'int'>: 1000

Variables bound in inner context:

Performance Considerations

Depending on the situation, checking validity of a contract can be expensive relative to the real work the function is supposed to perform. For example, suppose a function is designed to perform a binary search on a sorted list of numbers. A reasonable pre-condition for the operation is that the list it is given to search is indeed sorted. If the list is large, checking that it is properly sorted is even more expensive than performing a binary search.

In other words, contracts can negatively impact performance. For this reason, it is desirable for callers to

have a way to disable contract checks to avoid always paying whatever performance costs they incur. In PyContracts,

this can be accomplished either by setting an environment variable, DISABLE_CONTRACTS or by a call to

contracts.disable_all() before any @contracts statements are processed by the Python interpreter.

This allows developers to keep the checks in place while they are developing code and then disable them once

they are sure their code is working as expected.

Contracts are most helpful in the process of developing code. So, it is often good practice to write contracts for functions before the function implementations. Later, when development is complete and performance becomes important, contracts can be disabled. In this way, contracts are handled much like assertions. They are useful in developing code and then disabled once development is complete.

Learn more about Design by Contract in Python

Key Points

Design by Contract is a way of using Assertions for interface specification.

Pre-conditions are promises you agree to obey when calling a function.

Post-conditions are promises a function agrees to obey returning to you.

Unit Tests

Overview

Teaching: 10 min

Exercises: 0 minQuestions

What is a unit of code?

Objectives

Understand that functions are the atomistic unit of software.

Understand that simpler units are easier to test than complex ones.

Understand how to write a single unit test.

Understand how to run a single unit test.

Understand how test fixtures can help write tests.

Unit tests are so called because they exercise the functionality of the code by interrogating individual functions and methods. Functions and methods can often be considered the atomic units of software because they are indivisible. However, what is considered to be the smallest code unit is subjective. The body of a function can be long or short, and shorter functions are arguably more unit-like than long ones.

Thus what reasonably constitutes a code unit typically varies from project to project and language to language. A good guideline is that if the code cannot be made any simpler logically (you cannot split apart the addition operator) or practically (a function is self-contained and well defined), then it is a unit.

Functions are Like Paragraphs

Recall that humans can only hold a few ideas in our heads at once. Paragraphs in books, for example, become unwieldy after a few lines. Functions, generally, shouldn’t be longer than paragraphs. Robert C. Martin, the author of “Clean Code” said : “The first rule of functions is that they should be small. The second rule of functions is that they should be smaller than that.”

The desire to unit test code often has the effect of encouraging both the

code and the tests to be as small, well-defined, and modular as possible.

In Python, unit tests typically take the form of test functions that call and make

assertions about methods and functions in the code base. To run these test

functions, a test framework is often required to collect them together. For

now, we’ll write some tests for the mean function and simply run them

individually to see whether they fail. In the next session, we’ll use a test

framework to collect and run them.

Unit Tests Are Just Functions

Unit tests are typically made of three pieces, some set-up, a number of assertions, and some tear-down. Set-up can be as simple as initializing the input values or as complex as creating and initializing concrete instances of a class. Ultimately, the test occurs when an assertion is made, comparing the observed and expected values. For example, let us test that our mean function successfully calculates the known value for a simple list.

Before running the next code, save your mean function to a file called mean.py in the working directory.

You can use this code to save to file:

def mean(num_list):

try:

return sum(num_list)/len(num_list)

except ZeroDivisionError :

return 0

except TypeError as detail :

msg = "The algebraic mean of an non-numerical list is undefined.\

Please provide a list of numbers."

raise TypeError(detail.__str__() + "\n" + msg)

Now, back in your Jupyter Notebook run the following code:

from mean import *

def test_ints():

num_list = [1, 2, 3, 4, 5]

obs = mean(num_list)

exp = 3

assert obs == exp

The test above:

- sets up the input parameters (the simple list [1, 2, 3, 4, 5]);

- collects the observed result;

- declares the expected result (calculated with our human brain);

- and compares the two with an assertion.

A unit test suite is made up of many tests just like this one. A single implemented function may be tested in numerous ways.

In a file called test_mean.py, implement the following code:

from mean import *

def test_ints():

num_list = [1, 2, 3, 4, 5]

obs = mean(num_list)

exp = 3

assert obs == exp

def test_zero():

num_list=[0,2,4,6]

obs = mean(num_list)

exp = 3

assert obs == exp

def test_double():

# This one will fail in Python 2

num_list=[1,2,3,4]

obs = mean(num_list)

exp = 2.5

assert obs == exp

def test_long():

big = 100000000

obs = mean(range(1,big))

exp = big/2.0

assert obs == exp

def test_complex():

# given that complex numbers are an unordered field

# the arithmetic mean of complex numbers is meaningless

num_list = [2 + 3j, 3 + 4j, -32 - 2j]

obs = mean(num_list)

exp = NotImplemented

assert obs == exp

Use Jupyter Notebook to import the test_mean package and run each test like this:

from test_mean import *

test_ints()

test_zero()

test_double()

test_long()

test_complex() ## Please note that this one might fail. You'll get an error message showing which tests failed

Well, that was tedious.

Key Points

Functions are the atomistic unit of software.

Simpler units are easier to test than complex ones.

A single unit test is a function containing assertions.

Such a unit test is run just like any other function.

Running tests one at a time is pretty tedious, so we will use a framework instead.

Running Tests with pytest

Overview

Teaching: 10 min

Exercises: 0 minQuestions

How do I automate my tests?

Objectives

Understand how to run a test suite using the pytest framework

Understand how to read the output of a pytest test suite

We created a suite of tests for our mean function, but it was annoying to run them one at a time. It would be a lot better if there were some way to run them all at once, just reporting which tests fail and which succeed.

Thankfully, that exists. Recall our tests:

from mean import *

def test_ints():

num_list = [1,2,3,4,5]

obs = mean(num_list)

exp = 3

assert obs == exp

def test_zero():

num_list=[0,2,4,6]

obs = mean(num_list)

exp = 3

assert obs == exp

def test_double():

# This one will fail in Python 2

num_list=[1,2,3,4]

obs = mean(num_list)

exp = 2.5

assert obs == exp

def test_long():

big = 100000000

obs = mean(range(1,big))

exp = big/2.0

assert obs == exp

def test_complex():

# given that complex numbers are an unordered field

# the arithmetic mean of complex numbers is meaningless

num_list = [2 + 3j, 3 + 4j, -32 - 2j]

obs = mean(num_list)

exp = NotImplemented

assert obs == exp

Once these tests are written in a file called test_mean.py, the command

pytest can be run on the terminal or command line from the directory containing the tests (note that you’ll have to use py.test for older versions of the pytest package):

$ pytest

collected 5 items

test_mean.py ....F

================================== FAILURES ===================================

________________________________ test_complex _________________________________

def test_complex():

# given that complex numbers are an unordered field

# the arithmetic mean of complex numbers is meaningless

num_list = [2 + 3j, 3 + 4j, -32 - 2j]

obs = mean(num_list)

exp = NotImplemented

> assert obs == exp

E assert (-9+1.6666666666666667j) == NotImplemented

test_mean.py:34: AssertionError

===================== 1 failed, 4 passed in 2.71 seconds ======================

In the above case, the pytest package ‘sniffed-out’ the tests in the

directory and ran them together to produce a report of the sum of the files and

functions matching the regular expression [Tt]est[-_]*.

The major benefit a testing framework provides is exactly that, a utility to find and run the

tests automatically. With pytest, this is the command-line tool called

pytest. When pytest is run, it will search all directories below where it was called,

find all of the Python files in these directories whose names

start or end with test, import them, and run all of the functions and classes

whose names start with test or Test.

This automatic registration of test code saves tons of human time and allows us to

focus on what is important: writing more tests.

When you run pytest, it will print a dot (.) on the screen for every test

that passes,

an F for every test that fails or where there was an unexpected error.

In rarer situations you may also see an s indicating a

skipped tests (because the test is not applicable on your system) or a x for a known

failure (because the developers could not fix it promptly). After the dots, pytest

will print summary information.

Without changing the tests, alter the mean.py file from the previous section until it passes.

When it passes, pytest will produce results like the following:

$ pytest

collected 5 items

test_mean.py .....

========================== 5 passed in 2.68 seconds ===========================

Show what tests are executed

Using

pytest -vwill result inpytestlisting which tests are executed and whether they pass or not:$ py.testcollected 5 items test_mean.py ..... test_mean.py::test_ints PASSED test_mean.py::test_zero PASSED test_mean.py::test_double PASSED test_mean.py::test_long PASSED test_mean.py::test_complex PASSED ========================== 5 passed in 2.57 seconds ===========================

As we write more code, we would write more tests, and pytest would produce more dots. Each passing test is a small, satisfying reward for having written quality scientific software. Now that you know how to write tests, let’s go into what can go wrong.

Key Points

The

pytestcommand collects and runs tests starting withTestortest_.

.means the test passed

Fmeans the test failed or erred

xis a known failure

sis a purposefully skipped test

Edge and Corner Cases

Overview

Teaching: 10 min

Exercises: 0 minQuestions

How do I catch all the possible errors?

Objectives

Understand that edge cases are at the limit of the function’s behavior

Write a test for an edge case

Understand that corner cases are where two edge cases meet

Write a test for a corner case

What we saw in the tests for the mean function are called interior tests. The precise points that we tested did not matter. The mean function should have behaved as expected when it is within the valid range.

Edge Cases

The situation where the test examines either the beginning or the end of a range, but not the middle, is called an edge case. In a simple, one-dimensional problem, the two edge cases should always be tested along with at least one internal point. This ensures that you have good coverage over the range of values.

Anecdotally, it is important to test edges cases because this is where errors tend to arise. Qualitatively different behavior happens at boundaries. As such, they tend to have special code dedicated to them in the implementation.

Consider the Fibonacci sequence

Take a moment to recall everything you know about the Fibonacci sequence.

The fibonacci sequence is valid for all positive integers. To believe that a fibonacci sequence function is accurate throughout that space, is it necessary to check every expected output value of the fibonacci sequence? Given that the sequence is infinite, let’s hope not.

Indeed, what we should probably do is test a few values within the typical scope of the function, and then test values at the limit of the function’s behavior.

Consider the following simple Fibonacci function:

def fib(n):

if n == 0 or n == 1:

return n

else:

return fib(n - 1) + fib(n - 2)

This function has two edge cases: zero and one. For these values of n, the

fib() function does something special that does not apply to any other values.

Such cases should be tested explicitly. A minimally sufficient test suite

for this function

(assuming the fib function is in a file called mod.py)

would be:

from mod import fib

def test_fib0():

# test edge 0

obs = fib(0)

assert obs == 0

def test_fib1():

# test edge 1

obs = fib(1)

assert obs == 1

def test_fib6():

# test internal point

obs = fib(6)

assert obs == 8

Different functions will have different edge cases.

Often, you need not test for cases that are outside the valid range, unless you

want to test that the function fails. In the fib() function negative and

noninteger values are not valid inputs. Tests for these classes of numbers serve

you well if you want to make sure that the function fails as expected. Indeed, we

learned in the assertions section that this is actually quite a good idea.

Test for Graceful Failure

The

fib()function should probably return the Python built-inNotImplementedvalue for negative and noninteger values.

- Create a file called

test_fib.py- Copy the three tests above into that file.

- Write two new tests that check for the expected return value

(NotImplemented) in each case (for negative input and noninteger input respectively).

Edge cases are not where the story ends, though, as we will see next.

Corner Cases

When two or more edge cases are combined, it is called a corner case.

If a function is parametrized by two linear and independent variables, a test

that is at the extreme of both variables is in a corner. As a demonstration,

consider the case of the function (sin(x) / x) * (sin(y) / y), presented here:

import numpy as np

def sinc2d(x, y):

if x == 0.0 and y == 0.0:

return 1.0

elif x == 0.0:

return np.sin(y) / y

elif y == 0.0:

return np.sin(x) / x

else:

return (np.sin(x) / x) * (np.sin(y) / y)

The function sin(x)/x is called the sinc() function. We know that at

the point where x = 0, then

sinc(x) == 1.0. In the code just shown, sinc2d() is a two-dimensional version

of this function. When both x and y

are zero, it is a corner case because it requires a special value for both

variables. If either x or y but not both are zero, these are edge

cases. If neither is zero, this is a regular internal point.

A minimal test suite for this function would include a separate test for the each of the edge cases, and an internal point. For example:

import numpy as np

from mod import sinc2d

def test_internal():

exp = (2.0 / np.pi) * (-2.0 / (3.0 * np.pi))

obs = sinc2d(np.pi / 2.0, 3.0 * np.pi / 2.0)

assert obs == exp

def test_edge_x():

exp = (-2.0 / (3.0 * np.pi))

obs = sinc2d(0.0, 3.0 * np.pi / 2.0)

assert obs == exp

def test_edge_y():

exp = (2.0 / np.pi)

obs = sinc2d(np.pi / 2.0, 0.0)

assert obs == exp

Write a Corner Case

The sinc2d example will also need a test for the corner case, where both x and y are 0.0.

- Insert the sinc2d function code (above) into a file called mod.py.

- Add the edge and internal case tests (above) to a test_sinc2d.py file.

- Invent and implement a corner case test in that file.

- Run all of the tests using

pyteston the command line.

Corner cases can be even trickier to find and debug than edge cases because of their increased complexity. This complexity, however, makes them even more important to explicitly test.

Whether internal, edge, or corner cases, we have started to build up a classification system for the tests themselves. In the following sections, we will build this system up even more based on the role that the tests have in the software architecture.

Key Points

Functions often fail at the edge of their range of validity

Edge case tests query the limits of a function’s behavior

Corner cases are where two edge cases meet

Integration and Regression Tests

Overview

Teaching: 10 min

Exercises: 0 minQuestions

How do we test more than a single unit of software?

Objectives

Understand the purpose of integration and regression tests

Understand how to implement an integration test

Integration Tests

You can think of a software project like a clock. Functions and classes are the gears and cogs that make up the system. On their own, they can be of the highest quality. Unit tests verify that each gear is well made. However, the clock still needs to be put together. The gears need to fit with one another.

Telling The Time

Integration tests are the class of tests that verify that multiple moving pieces and gears inside the clock work well together. Where unit tests investigate the gears, integration tests look at the position of the hands to determine if the clock can tell time correctly. They look at the system as a whole or at its subsystems. Integration tests typically function at a higher level conceptually than unit tests. Thus, writing integration tests also happens at a higher level.

Because they deal with gluing code together, there are typically fewer integration tests in a test suite than there are unit tests. However, integration tests are no less important. Integration tests are essential for having adequate testing. They encompass all of the cases that you cannot hit through plain unit testing.

Sometimes, especially in probabilistic or stochastic codes, the precise behavior of an integration test cannot be determined beforehand. That is OK. In these situations it is acceptable for integration tests to verify average or aggregate behavior rather than exact values. Sometimes you can mitigate nondeterminism by saving seed values to a random number generator, but this is not always going to be possible. It is better to have an imperfect integration test than no integration test at all.

As a simple example, consider the three functions a(), b(),

and c(). The a() function adds one to a number, b() multiplies a number

by two, and c() composes them. These functions are defined as follows:

def a(x):

return x + 1

def b(x):

return 2 * x

def c(x):

return b(a(x))

The a() and b() functions can each be unit tested because they each do one thing.

However, c() cannot be truly unit tested because all of the real work is farmed

out to a() and b(). Testing c() will be a test of whether a() and

b() can be integrated together.

Integration tests still follow the pattern of comparing expected

results to observed results. A sample test_c() is implemented here:

from mod import c

def test_c():

exp = 6

obs = c(2)

assert obs == exp

Given the lack of clarity in what is defined as a code unit, what is considered an integration test is also a little fuzzy. Integration tests can range from the extremely simple (like the one just shown) to the very complex. A good delimiter, though, is in opposition to the unit tests. If a function or class only combines two or more unit-tested pieces of code, then you need an integration test. If a function implements new behavior that is not otherwise tested, you need a unit test.

The structure of integration tests is very similar to that of unit tests. There is an expected result, which is compared against the observed value. However, what goes in to creating the expected result or setting up the code to run can be considerably more complicated and more involved. Integration tests can also take much longer to run because of how much more work they do. This is a useful classification to keep in mind while writing tests. It helps separate out which test should be easy to write (unit) and which ones may require more careful consideration (integration).

Integration tests, however, are not the end of the story.

Regression Tests

Regression tests are qualitatively different from both unit and integration tests. Rather than assuming that the test author knows what the expected result should be, regression tests look to the past for the expected behavior. The expected result is taken as what was previously computed for the same inputs.

The Past as Truth

Regression tests assume that the past is “correct.” They are great for letting developers know when and how a code base has changed. They are not great for letting anyone know why the change occurred. The change between what a code produces now and what it computed before is called a regression.

Like integration tests, regression tests tend to be high level. They often operate on an entire code base. They are particularly common and useful for physics simulators.

A common regression test strategy spans multiple code versions. Suppose there is an input file for version X of a simulator. We can run the simulation and then store the output file for later use, typically somewhere accessible online. While version Y is being developed, the test suite will automatically download the output for version X, run the same input file for version Y, and then compare the two output files. If anything is significantly different between them, the test fails.

In the event of a regression test failure, the onus is on the current developers to explain why. Sometimes there are backward-incompatible changes that had to be made. The regression test failure is thus justified, and a new version of the output file should be uploaded as the version to test against. However, if the test fails because the physics is wrong, then the developer should fix the latest version of the code as soon as possible.

Regression tests can and do catch failures that integration and unit tests miss. Regression tests act as an automated short-term memory for a project. Unfortunately, each project will have a slightly different approach to regression testing based on the needs of the software. Testing frameworks provide tools to help with building regression tests but do not offer any sophistication beyond what has already been seen in this chapter.

Depending on the kind of project, regression tests may or may not be needed. They are only truly needed if the project is a simulator. Having a suite of regression tests that cover the range of physical possibilities is vital to ensuring that the simulator still works. In most other cases, you can get away with only having unit and integration tests.

While more test classifications exist for more specialized situations, we have covered what you will need to know for almost every situation in computational physics.

Key Points

Integration tests interrogate the coopration of pieces of the software

Regression tests use past behavior as the expected result

Continuous Integration

Overview

Teaching: 10 min

Exercises: 0 minQuestions

How can I automate running the tests on more platforms than my own?

Objectives

Understand how continuous integration speeds software development

Understand the benefits of continuous integration

Implement a continuous integration server

Identify a few options for hosting a continuous integration server

To make running the tests as easy as possible, many software development teams implement a strategy called continuous integration. As its name implies, continuous integration integrates the test suite into the development process. Every time a change is made to the repository, the continuous integration system builds and checks that code.

Thought Experiment: Does Your Software Work on Your Colleague’s Computer?

Imagine you developed software on a MacOSX computer. Last week, you helped your office mate build and run it on their Linux computer. You’ve made some changes since then.

- How can you be sure it will still work if they update their repository when they come back from vacation?

- How long will that process take?

The typical story in a research lab is that, well, you don’t know whether it will work on your colleagues’ machine until you try rebuilding it on their machine. If you have a build system, it might take a few minutes to update the repository, rebuild the code, and run the tests. If you don’t have a build system, it could take all afternoon just to see if your new changes are compatible.

Let The Computers Do The Work

Scientists are good at creative insights, conceptual understanding, critical analysis, and consuming espresso. Computers are good at following instructions. Science would be more fun if the scientists could just give the computers the instructions and go grab an espresso.

Continuous integration servers allow just that. Based on your instructions, a continuous integration server can:

- check out new code from a repository

- spin up instances of supported operating systems (i.e. various versions of OSX, Linux, Windows, etc.).

- spin up those instances with different software versions (i.e. python 2.7 and python 3.0)

- run the build and test scripts

- check for errors

- and report the results.

Since the first step the server conducts is to check out the code from a repository, we’ll need to put our code online to make use of this kind of server (unless we are able/willing to set up our own CI server).

Set Up a Mean Git Repository on GitHub

Your

mean.pytest_mean.pyfiles can be the contents of a repository on GitHub.

- Go to GitHub and create a repository called mean.

- Clone that repository (git clone https://github.com:yourusername/mean)

- Copy the

mean.pyandtest_mean.pyfiles into the repository directory.- Use git to

add,commit, andpushthe two files to GitHub.

Giving Instructions

Your work on the mean function has both code and tests. Let’s copy that code into its own repository and add continuous integration to that repository.

What is required

It doesn’t need a build system, because Python does not need to be compiled.

- What does it need?

- Write the names of the software dependencies in a file called requirements.txt and save the file.

- In fact, why don’t you go ahead and version control it?

Travis-CI

Travis is a continous integration server hosting platform. It’s commonly used in Ruby development circles as well as in the scientific python community.

To use Travis, all you need is an account. It’s free so someone in your group should sign up for a Travis account. Then follow the instructions on the Travis website to connect your Travis account with GitHub.

A file called .travis.yml in your repository will signal to Travis that you want to

build and test this repository on Travis-CI. Such a file, for our purposes, is very simple:

language: python

python:

- "2.6"

- "2.7"

- "3.2"

- "3.3"

- "3.4"

- "nightly"

# command to install dependencies

install:

- "pip install -r requirements.txt"

# command to run tests

script: pytest

yml file syntax

The exact syntax of the

.travis.ymlfile is very important. Make sure to use spaces (not tabs). https://lint.travis-ci.org/ can be used to check for typographic errors.

You can see how the python package manager, pip, will use your requirements.txt file from the previous exercise. That requirements.txt file is a conventional way to list all of the python packages that we need. If we needed pytest, numpy, and pymol, the requirements.txt file would look like this:

numpy

pymol

pytest

Last steps

- Add .travis.yml to your repository

- Commit and push it.

- Check the situation at your server

Some guidance on debugging problems if the tests fail to run can be found in the lesson discussion document.

Continuous Integration Hosting

We gave the example of Travis because it’s very very simple to spin up. While it is able to run many flavors of Linux, it currently doesn’t support other platforms as well. Depending on your needs, you may consider other services such as:

Key Points

Servers exist for automatically running your tests

Running the tests can be triggered by a GitHub pull request

CI allows cross-platform build testing

A

.travis.ymlfile configures a build on the travis-ci serversMany free CI servers are available

Test Driven Development

Overview

Teaching: 10 min

Exercises: 0 minQuestions

How do you make testing part of the code writing process?

Objectives

Learn about the benefits and drawbacks of Test Driven Development.

Write a test before writing the code.

Test-driven Development (TDD) takes the workflow of writing code and writing tests and turns it on its head. TDD is a software development process where you write the tests first. Before you write a single line of a function, you first write the test for that function.

After you write a test, you are then allowed to proceed to write the function that you are testing. However, you are only supposed to implement enough of the function so that the test passes. If the function does not do what is needed, you write another test and then go back and modify the function. You repeat this process of test-then-implement until the function is completely implemented for your current needs.

The Big Idea

This design philosophy was most strongly put forth by Kent Beck in his book Test-Driven Development: By Example.

The central claim to TDD is that at the end of the process you have an implementation that is well tested for your use case, and the process itself is more efficient. You stop when your tests pass and you do not need any more features. You do not spend any time implementing options and features on the off chance that they will prove helpful later. You get what you need when you need it, and no more. TDD is a very powerful idea, though it can be hard to follow religiously.

The most important takeaway from test-driven development is that the moment you start writing code, you should be considering how to test that code. The tests should be written and presented in tandem with the implementation. Testing is too important to be an afterthought.

You Do You

Developers who practice strict TDD will tell you that it is the best thing since sliced arrays. However, do what works for you. The choice whether to pursue classic TDD is a personal decision.

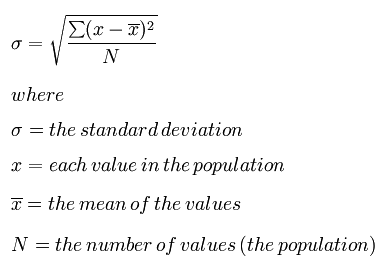

The following example illustrates classic TDD for a standard deviation

function, std().

To start, we write a test for computing the standard deviation from a list of numbers as follows:

from mod import std

def test_std1():

obs = std([0.0, 2.0])

exp = 1.0

assert obs == exp

Next, we write the minimal version of std() that will cause test_std1() to

pass:

def std(vals):

# surely this is cheating...

return 1.0

As you can see, the minimal version simply returns the expected result for the sole case that we are testing. If we only ever want to take the standard deviation of the numbers 0.0 and 2.0, or 1.0 and 3.0, and so on, then this implementation will work perfectly. If we want to branch out, then we probably need to write more robust code. However, before we can write more code, we first need to add another test or two:

def test_std1():

obs = std([0.0, 2.0])

exp = 1.0

assert_equal(obs, exp)

def test_std2():

# Test the fiducial case when we pass in an empty list.

obs = std([])

exp = 0.0

assert_equal(obs, exp)

def test_std3():

# Test a real case where the answer is not one.

obs = std([0.0, 4.0])

exp = 2.0

assert_equal(obs, exp)

A simple function implementation that would make these tests pass could be as follows:

def std(vals):

# a little better

if len(vals) == 0: # Special case the empty list.

return 0.0

return vals[-1] / 2.0 # By being clever, we can get away without doing real work.

Are we done? No. Of course not. Even though the tests all pass, this is clearly still not a generic standard deviation function. To create a better implementation, TDD states that we again need to expand the test suite:

def test_std1():

obs = std([0.0, 2.0])

exp = 1.0

assert_equal(obs, exp)

def test_std2():

obs = std([])

exp = 0.0

assert_equal(obs, exp)

def test_std3():

obs = std([0.0, 4.0])

exp = 2.0

assert_equal(obs, exp)

def test_std4():

# The first value is not zero.

obs = std([1.0, 3.0])

exp = 1.0

assert_equal(obs, exp)

def test_std5():

# Here, we have more than two values, but all of the values are the same.

obs = std([1.0, 1.0, 1.0])

exp = 0.0

assert_equal(obs, exp)

At this point, we may as well try to implement a generic standard deviation function. Recall:

We would spend more time trying to come up with clever approximations to the standard deviation than we would spend actually coding it.

- Copy the five tests above into a file called test_std.py

- Open mod.py

- Add an implementation that actually calculates a standard deviation.

- Run the tests above. Did they pass?

It is important to note that we could improve this function by

writing further tests. For example, this std() ignores the situation where infinity

is an element of the values list. There is always more that can be tested. TDD

prevents you from going overboard by telling you to stop testing when you

have achieved all of your use cases.

Key Points

Test driven development is a common software development technique

By writing the tests first, the function requirements are very explicit

TDD is not for everyone

TDD requires vigilance for success

Fixtures

Overview

Teaching: 10 min

Exercises: 0 minQuestions

How do I create and cleanup the data I need to test the code?

Objectives

Understand how test fixtures can help write tests.

The above example didn’t require much setup or teardown. Consider, however, the

following example that could arise when comunicating with third-party programs.

You have a function f() which will write a file named yes.txt to disk with

the value 42 but only if a file no.txt does not exist. To truly test that the

function works, you would want to ensure that neither yes.txt nor no.txt

existed before you ran your test. After the test, you want to clean up after

yourself before the next test comes along. You could write the test, setup,

and teardown functions as follows:

import os

from mod import f

def f_setup():

# The f_setup() function tests ensure that neither the yes.txt nor the

# no.txt files exist.

files = os.listdir('.')

if 'no.txt' in files:

os.remove('no.txt')

if 'yes.txt' in files:

os.remove('yes.txt')

def f_teardown():

# The f_teardown() function removes the yes.txt file, if it was created.

files = os.listdir('.')

if 'yes.txt' in files:

os.remove('yes.txt')

def test_f():

# The first action of test_f() is to make sure the file system is clean.

f_setup()

exp = 42

f()

with open('yes.txt', 'r') as fhandle:

obs = int(fhandle.read())

assert obs == exp

# The last action of test_f() is to clean up after itself.

f_teardown()

The above implementation of setup and teardown is usually fine.

However, it does not guarantee that the f_setup() and the f_teardown()

functions will be called. This is because an unexpected error anywhere in

the body of f() or test_f() will cause the test to abort before the

teardown function is reached.

These setup and teardown behaviors are needed when test fixtures must be created. A fixture is any environmental state or object that is required for the test to successfully run.

As above, a function that is executed before the test to prepare the fixture

is called a setup function. One that is executed to mop-up side effects

after a test is run is called a teardown function.

By giving our setup and teardown functions special names pytest will

ensure that they are run before and after our test function regardless of

what happens in the test function.

Those special names are setup_function and teardown_function,

and each needs to take a single argument: the test function being run

(in this case we will not use the argument).

import os

from mod import f

def setup_function(func):

# The setup_function() function tests ensure that neither the yes.txt nor the

# no.txt files exist.

files = os.listdir('.')

if 'no.txt' in files:

os.remove('no.txt')

if 'yes.txt' in files:

os.remove('yes.txt')

def teardown_function(func):

# The f_teardown() function removes the yes.txt file, if it was created.

files = os.listdir('.')

if 'yes.txt' in files:

os.remove('yes.txt')

def test_f():

exp = 42

f()

with open('yes.txt', 'r') as fhandle:

obs = int(fhandle.read())

assert obs == exp

The setup and teardown functions make our test simpler and the teardown function is guaranteed to be run even if an exception happens in our test. In addition, the setup and teardown functions will be automatically called for every test in a given file so that each begins and ends with clean state. (Pytest has its own neat fixture system that we won’t cover here.)

Key Points

It may be necessary to set up “fixtures” composing the test environment.

Key Points and Glossary

Overview

Teaching: min

Exercises: minQuestions

Objectives

Glossary

- assert

- A keyword that halts code execution when its argument is false.

- continuous integration

- Automatically checking the building and testing process accross platforms.

- exceptions

- Customizeable cousin of assertions.

- except

- A keyword used to catch and carefully handle that exception.

- integration test

- Tests that check that various pieces of the software work together as expected.

pytest- A Python package with testing utilities.

pytest- A command-line program that collects and runs unit tests.

- regression test

- Tests that defend against new bugs, or regressions, which might appear due to new software and updates.

- test-driven development

- A software development strategy in which the tests are written before the code.

- try

- A keyword that guards a piece of code which may throw an exception.

- unit test

- Tests that investigate the behavior of units of code (such as functions, classes, or data structures).

Key Points